Abstract

Computer-aided instruction (CAI) software is becoming commonplace in medical education. Our experience with CAI programs in our pediatric emergency department (ED) raised concerns about the time commitment some of these programs require. We developed a just-in-time learning program, the Virtual Preceptor (VP), and evaluated its use in a busy clinical environment.

Forty-three of 47 pediatric residents used the VP at least once. Interns used the program 2 ½ times as often as upper level residents. Of 321 topics available in 18 subject categories, 153 (48%) were selected at least once. The amount of content was rated as appropriate by 72% of users, and 95% of residents would use the program again. Although no resident felt the program itself took too long to use, 51% said they were too busy to use the VP. Time of use and level of training may be important factors in CAI use in the pediatric ED environment.

INTRODUCTION

Resident education is a primary responsibility of academic faculty practicing in busy clinical settings. While clinical demands have increased in recent years, these instructional responsibilities remain.1 Complementary educational resources may help extend the learning opportunities for residents in these settings. Computer-aided instructional (CAI) software is one possible resource.2

CAI tools are becoming commonplace in medicine, as indicated by the expanding medical literature on this topic.3 At least one study suggests that pediatric residents are interested in using CAI as a supplement to direct one-on-one instruction.4 Educational World Wide Web sites5,6, electronic textbooks7, tutorials8, and simulations9 have been produced for both general audiences and specific subspecialties. In our practice area, the pediatric emergency department (PED), relatively little setting-specific CAI has been produced; Yamamoto’s Radiology Cases in Pediatric Emergency Medicine appears to be the best known.10

Acute care settings (emergency departments, busy clinics, etc.) are difficult educational environments. Some specific learning challenges include:

- High patient volumes
- Infrequent serious, acute illnesses
- Seasonality of disease, which may prevent a resident from encountering some common illnesses

Pusic and colleagues developed three CAI tutorials focused on infrequent, high-risk illnesses and evaluated residents’ use of these programs.11 Only 34% of residents completed a tutorial, and 37% interacted with the program for less than five minutes. These findings may reflect one limitation of CAI in the PED: the time needed to complete a program. Our research question stems from this point: does integrating CAI into the workflow of a busy clinical setting offer any advantages?

To adapt CAI to our acute care environment, we used as a guide the just-in-time (JIT) learning model described by Chueh and Barnett.12 JIT instruction provides information in a concise, learner-specific form “just as the learner needs it.” The concept is borrowed from the JIT inventory model used in business: just as a business is more efficient if a needed part arrives the day it is used, avoiding the cost of storage, learning is more efficient if the appropriate information is provided just as it is needed. The JIT model is less a novel concept than a formalization of existing practice; it is precisely the method used by academic medical faculty in settings like the PED. We simply call it precepting. (Of course, precepting encompasses other functions, such as role modeling.) In a survey of PED faculty, precepting was the most commonly employed educational strategy.13

To evaluate this approach to CAI design, we developed a CAI program called the Virtual Preceptor that:

- Requires less than five minutes to use.
- Provides a brief amount of information based on a user’s request.
- Provides information in a timely fashion.

In this paper, we describe the development of the Virtual Preceptor program and evaluate residents’ use of and satisfaction with the program.

METHODS

Study Setting and Population

This study was conducted at the Johns Hopkins Hospital Pediatric Emergency Department (Baltimore, MD), which sees 26,000 children annually. Residents provide the first line of care and are precepted by faculty or fellows in the Division of Pediatric Emergency Medicine. The number of residents working at any one time varies from one to seven. Pediatric residents of all years spend one to two month-long rotations in the PED each year. During any month, fourteen to seventeen residents are assigned to the PED for at least two weeks.

Development of the Virtual Preceptor Software

Our goal in developing the Virtual Preceptor was to provide brief, focused educational content for use in the pediatric emergency department. To accomplish this goal, we designed the software with specific constraints.

To address the time pressures of the PED, the program was designed to take less than five minutes per encounter. The content was broken into paragraph-long segments for quick reading, and the interface was streamlined to require only three Web pages in total.

To increase initial acceptance, we avoided introducing new software. This was a second motivation for choosing a Web interface, as previous work at our institution demonstrated that a majority of pediatric residents had used the Web.4 Our hospital information service deploys personal computers (PCs) that run Microsoft software (Microsoft, Redmond, WA), including the Internet Explorer™ browser. The Web site was designed using Drumbeat 2000™ (Macromedia Inc., San Francisco, CA) and was hosted on a server running Microsoft NT4 Server™.

To reduce development time, the content of the database was limited to eighteen subject categories and a total of 321 topics. The content of the database was written by one of the authors (MA) with citations to source material as necessary. Total development time for this database was three months. Faculty members reviewed all topics for accuracy. The content was stored in a relational database created using Microsoft Access 97™.

In this version of the Virtual Preceptor, the user was restricted in how the content could be queried. Restricting search options reduced the need for sophisticated searching software and a complex database structure.

A user logged into the site from any Web-connected computer using his/her hospital-assigned doctor ID number; in our PED, residents mainly used two computers. A second page then displayed a list of subjects (e.g., asthma). Choosing a subject returned a list of 12–20 topics pertaining to that subject (e.g., asthma medications). The user could choose up to three subject/topic pairs, which were displayed on the results page (Figure 1).

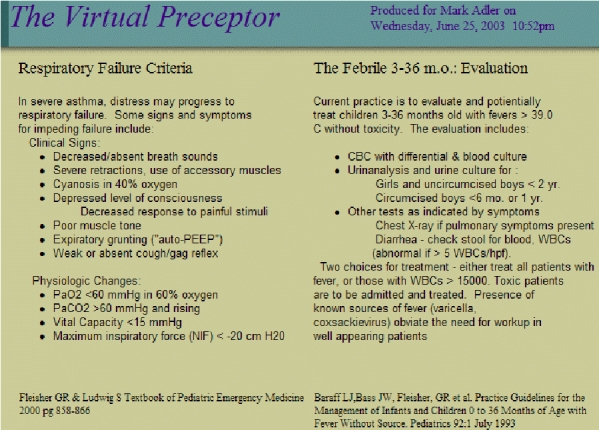

Figure 1. Example of the output of the Virtual Preceptor (cropped for space).

The user was then prompted to print the page for later use. The search technique was restrictive in that it limited a user to the choices in the drop-down menus on the Web page. The software tracked each user interaction, including the user ID, the time of the interaction, and the subjects/topics chosen. Any use that proceeded to the results page was logged; printing was not necessary for the use to be tracked.
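The paper describes the content store (a relational database of subjects and topics), the restricted drop-down query, and the interaction log only in prose. The following is a minimal Python/SQLite sketch of that design under stated assumptions: the table and column names (`subject`, `topic`, `usage_log`) and the helper functions are hypothetical, not the original Microsoft Access 97 schema, and logging one row per chosen topic is an illustrative choice.

```python
import sqlite3
from datetime import datetime

# Hypothetical schema for illustration; the original Access 97 design is not published.
SCHEMA = """
CREATE TABLE subject (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE          -- e.g. 'Asthma'
);
CREATE TABLE topic (
    id         INTEGER PRIMARY KEY,
    subject_id INTEGER NOT NULL REFERENCES subject(id),
    name       TEXT NOT NULL,          -- e.g. 'Asthma medications'
    content    TEXT NOT NULL           -- paragraph-long teaching segment
);
CREATE TABLE usage_log (
    id          INTEGER PRIMARY KEY,
    user_id     TEXT NOT NULL,         -- hospital-assigned doctor ID
    accessed_at TEXT NOT NULL,         -- ISO 8601 timestamp
    topic_id    INTEGER NOT NULL REFERENCES topic(id)
);
"""

MAX_PAIRS = 3  # the user may request up to three subject/topic pairs


def topics_for_subject(conn, subject_id):
    """Second page: the topics offered in the drop-down menu for one subject."""
    return conn.execute(
        "SELECT id, name FROM topic WHERE subject_id = ? ORDER BY name",
        (subject_id,),
    ).fetchall()


def results_page(conn, user_id, topic_ids, now=None):
    """Results page: return content for the chosen topics and log the request."""
    if not 1 <= len(topic_ids) <= MAX_PAIRS:
        raise ValueError("choose between one and three subject/topic pairs")
    rows = []
    for tid in topic_ids:
        row = conn.execute(
            "SELECT s.name, t.name, t.content FROM topic AS t "
            "JOIN subject AS s ON s.id = t.subject_id WHERE t.id = ?",
            (tid,),
        ).fetchone()
        if row is None:  # only drop-down choices are valid queries
            raise KeyError(f"unknown topic id {tid}")
        rows.append(row)
    stamp = (now if now is not None else datetime.now()).isoformat(timespec="seconds")
    # Reaching the results page is what gets logged; printing is not required.
    with conn:  # commits the log rows on success
        conn.executemany(
            "INSERT INTO usage_log (user_id, accessed_at, topic_id) VALUES (?, ?, ?)",
            [(user_id, stamp, tid) for tid in topic_ids],
        )
    return rows
```

Because queries are restricted to drop-down choices, every request maps to a known topic ID, which is what keeps per-user, per-topic logging simple without any free-text search machinery.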

Study Design

This was a single group descriptive study. The study cohort comprised all residents rotating in the pediatric emergency department from July through September 2000.

The program was available on all PED computers; use was voluntary. Each resident received a demonstration of the program at the beginning of the rotation and subsequently had unlimited access to the program. Additional prompting was limited to a posted reminder in the PED work area. The faculty were free to suggest use of the program; we did not track how often this occurred. Online help in the form of both a text help page and a multimedia tutorial was available.

Residents completed a 40-question survey at the end of their rotation. The survey covered demographic information, computer familiarity, and previous use of CAI. Residents were asked how often they used the program. Satisfaction questions asked for resident agreement or disagreement with 19 statements regarding their use of the Virtual Preceptor. We used a four-point Likert-type scale (Disagree Strongly, Disagree, Agree, Strongly Agree). This paper survey was distributed by one of the authors (MA).

Residents participated in a focus group at the end of the study period to develop a more in-depth understanding of barriers to use of the program specifically and of CAI in general. The focus group was led by one of the authors (KJ), who is not an ED faculty member and had not demonstrated or recommended the program. Two of the investigators (KJ and MA) reached consensus on important themes after reviewing audiotapes of the focus group.

Our Institutional Review Board approved this study. Informed consent was obtained from each participant. The survey was not anonymous, as the detail of information collected would have made it easy to guess the resident’s identity. The lack of anonymity also allowed the investigators to contact non-respondents to encourage completion.

Data Analysis

We conducted a univariate analysis of descriptive measures of usability and satisfaction to obtain percent agreement values with 95% confidence intervals. Bivariate analyses of the impact of gender, age, level of training, length of PED rotation, month in study, and comfort with computers on satisfaction were conducted using Fisher’s exact test for categorical variables and the Kruskal-Wallis test for equality of populations for ordinal variables. Resident age was evaluated as a continuous variable using Student’s t-test. For usage data, we compared groups using the Wilcoxon rank-sum test. All ordinal scales were evaluated both as originally collected and after dichotomization into agree/disagree categories.
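Fisher’s exact test, used here for the categorical comparisons, can be computed directly from the hypergeometric distribution. The following is a minimal standard-library sketch for a 2x2 table, such as a hypothetical cross-tabulation of gender against a dichotomized agree/disagree item; the function name is hypothetical and nothing in it reproduces the study data. SciPy’s `scipy.stats.fisher_exact` implements the same two-sided test.

```python
from math import comb


def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    With all margins fixed, the upper-left cell follows a hypergeometric
    distribution; the two-sided p-value sums the probabilities of every
    table that is no more likely than the observed one.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # Probability that the upper-left cell equals x, given the margins
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_obs = prob(a)
    support = range(max(0, col1 - row2), min(row1, col1) + 1)
    # Small relative tolerance so tables tied with the observed one are counted
    p = sum(prob(x) for x in support if prob(x) <= p_obs * (1 + 1e-7))
    return min(p, 1.0)
```

For example, the textbook table [[3, 1], [1, 3]] gives a two-sided p of 17/35, about 0.486.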

RESULTS

Forty-seven residents were asked to participate, and all agreed. Computer use and familiarity were high among residents: 89% had access to a computer at home, 85% rated themselves as at least comfortable using a computer, and all used a computer daily to obtain clinical information. Eighty-one percent had used CAI programs in the past, and all of those previous users felt that CAI was a useful educational tool. Forty-eight percent rated CAI as very useful. Female residents were more likely to report a lack of comfort with computers (p=.03).

According to resident survey responses, 43/47 (91%) used the program at least once during their rotation. The four non-users were all upper level residents who were in the PED for two-week rotations (the typical rotation is four weeks); their demographic and previous computer use data were included in the survey results.

Overall, the program was accessed an average of 1.2 times per day (range 0–7). We divided the day into three shifts: day (8a–6p), evening (6p–12a), and overnight (12a–8a). After adjusting for the varying number of residents on duty across shifts, 48% of use occurred during the day shift. Seventy-three percent of use occurred on weekdays. The average user logged into the Virtual Preceptor three times during the study (range 0–10). Interns used the program an average of 4.6 times per rotation, 2 ½ times as often as upper level residents (p=0.001). Use was not related to gender, previous computer experience, or length of PED rotation.

Of 321 topics available in 18 subject categories, 153 (48%) were selected at least once. The most popular subjects were seizures, abdominal pain, child abuse, fever, and asthma. Ninety-five percent of residents would use the program again. Gender, age, training year, past computer use, and comfort with computers were not associated with any satisfaction measure. User satisfaction scores are summarized in Table 1.
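The shift boundaries define how each logged access time is classified. A minimal sketch, assuming log timestamps are available as Python `datetime` values; it reads the overnight shift as midnight to 8 a.m., the only reading consistent with the day shift starting at 8 a.m. It reports raw counts only: the paper does not describe how use was adjusted for the number of residents on each shift, so that step is deliberately left out. The function names are hypothetical.

```python
from collections import Counter
from datetime import datetime
from typing import Sequence


def shift_of(ts: datetime) -> str:
    """Map an access time to a shift: day 8a-6p, evening 6p-12a, overnight 12a-8a."""
    if 8 <= ts.hour < 18:
        return "day"
    if ts.hour >= 18:
        return "evening"
    return "overnight"


def summarize_use(timestamps: Sequence[datetime]):
    """Raw access counts per shift and the fraction of accesses on weekdays (Mon-Fri).

    No adjustment for the number of residents on each shift is applied here.
    """
    by_shift = Counter(shift_of(t) for t in timestamps)
    weekday_share = sum(t.weekday() < 5 for t in timestamps) / len(timestamps)
    return by_shift, weekday_share
```

For example, an access logged at 7:59 a.m. falls in the overnight shift and one at 8:00 a.m. in the day shift.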

Table 1.

(Italics indicate responses where low scores are better).

| Question | % Agree | 95% CI (binomial), lower | 95% CI (binomial), upper |
|---|---|---|---|
| Overall, VP was easy to use | 100% | 92% | 100% |
| I would use VP again | 95% | 84% | 99% |
| Printer worked | 93% | 78% | 97% |
| Handout was useful | 93% | 78% | 97% |
| I got the answer I expected | 88% | 75% | 96% |
| Computer was available | 86% | 69% | 93% |
| Provided the right amount of information | 72% | 56% | 85% |
| ED was too busy to use VP | 51% | 35% | 67% |
| Hard to get specific information | 47% | 31% | 62% |
| More useful than reading | 38% | 23% | 53% |
| VP was too basic | 35% | 21% | 51% |
| ED was too hectic to use CAI in general | 30% | 17% | 46% |
| More convenient to read on the screen | 26% | 14% | 41% |
| I would have preferred to enter my query as text | 19% | 8% | 33% |
| Less convenient than reading | 19% | 8% | 33% |
| Used VP but never read printout | 9% | 3% | 22% |
| Similar to being taught by faculty | 5% | 1% | 16% |
| Did not teach me anything | 2% | 0% | 12% |
| I needed more instructions | 0% | 0% | 8% |
| VP took too long to use | 0% | 0% | 8% |
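Table 1 reports each percentage with a binomial 95% confidence interval after dichotomizing the four-point scale, but the interval method is not named. The sketch below assumes the exact (Clopper-Pearson) interval, found by bisection on the binomial tail probabilities using only the standard library; `percent_agree` and the other function names are hypothetical. If the denominator is the 43 residents who used the program, 43 of 43 gives a lower bound of about 0.918 (92%) and 0 of 43 an upper bound of about 0.082 (8%), matching the first and last rows of the table; other interval methods can round to the same values at these extremes.

```python
from math import comb
from typing import Iterable, Tuple

# Dichotomization: the two agree levels of the four-point scale count as "agree".
AGREE = {"Agree", "Strongly Agree"}


def percent_agree(responses: Iterable[str]) -> Tuple[int, int]:
    """Return (number agreeing, number of responses) for one survey item."""
    responses = list(responses)
    k = sum(r in AGREE for r in responses)
    return k, len(responses)


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _bisect(f, target: float, increasing: bool, iters: int = 200) -> float:
    """Solve f(p) = target on [0, 1] by bisection, for f monotone in p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if (f(mid) < target) == increasing:
            lo = mid  # root lies to the right of mid
        else:
            hi = mid  # root lies at or to the left of mid
    return (lo + hi) / 2


def clopper_pearson(k: int, n: int, alpha: float = 0.05) -> Tuple[float, float]:
    """Exact (Clopper-Pearson) two-sided 1 - alpha interval for the proportion k/n."""
    # Lower bound: P(X >= k | p) = alpha/2, which increases with p.
    lower = 0.0 if k == 0 else _bisect(lambda p: 1 - binom_cdf(k - 1, n, p), alpha / 2, True)
    # Upper bound: P(X <= k | p) = alpha/2, which decreases with p.
    upper = 1.0 if k == n else _bisect(lambda p: binom_cdf(k, n, p), alpha / 2, False)
    return lower, upper
```

In use, each survey item would be reduced with `percent_agree` and the resulting count and denominator passed to `clopper_pearson`.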

Time was a perceived constraint: 51% of residents said they were too busy to use the Virtual Preceptor specifically, and 30% felt the PED was too hectic to use any CAI program. Interestingly, no resident felt the program itself took too long to use. Content was thought to be appropriate for the level of training by 65% of users, and length was felt to be appropriate by 72%. Impediments to use, namely an unavailable computer and a malfunctioning printer, were noted by 14% and 7% of residents, respectively.

Focus group participants gave the Virtual Preceptor generally positive reviews. Five themes emerged from analysis of the focus group:

- Although the survey results suggested that computers were available, focus group participants disagreed: even when a computer was free at the moment, they feared it would be needed for a clinical function.
- Participants reinforced the time pressures experienced by residents. They reported that most use occurred at slower times and that at busy times they felt too pressured to use the program.
- Participants noted that the available content was limited. One focus group member, an upper level resident, indicated that she would have used the program more if additional content had been available; once she discovered that the content was limited, she did not return to the Virtual Preceptor.
- Residents’ need for different types of content varied with the clinical situation. Some wanted brief content to review before seeing a patient, others wanted direct links to diagnosis or management by disease process, and still others liked the current Virtual Preceptor design based on a mix of symptoms and diagnoses. One participant suggested that the program should offer different kinds of information (diagnostic vs. educational) depending on the user’s need at that moment.
- Some participants expressed frustration that the program design limited its ability to answer very specific queries.

DISCUSSION

In this study, we applied just-in-time learning concepts to CAI design for use in the pediatric ED. Overall, use of the program was modest. Users reported generally positive experiences with the program, and most expressed a willingness to use it again.

How time affects CAI use in the pediatric ED was one of the main questions driving this study. Unfortunately, our results on this issue are conflicting. No resident felt that the program (VP) took too long to use. Nevertheless, 51% felt too busy to use this program, and almost one-third felt the ED was too hectic to use any CAI program. One way to interpret these findings is to reconsider the role of CAI in the ED. Instruction might be moved to another time (e.g., before a shift, to supplement the lecture schedule), or CAI might be reimagined as decision support. In the decision support model, prompts built into clinical information tools provide instantaneous warnings, suggestions, calculator tools, or links to reference material. Because clinicians must use these clinical tools anyway, learning in this fashion requires little additional effort.

Alternatively, the relatively limited database of content used in this study may have produced the conflicting opinions about time. If residents did not feel that the time spent was sufficiently rewarded (i.e., the content was not rich enough), they might report feeling “too busy” to use the program.

Time of day was also related to use, with more use occurring on weekday daytime shifts after accounting for the varying number of residents on each shift. Peak patient volumes in our ED generally run from late afternoon through midnight, so this relationship may simply reflect that daytime shifts were less busy and offered more time to go to the computer.

In this small-scale study, it is difficult to extrapolate our results further. Some interesting findings deserve further investigation:

- We did not directly evaluate the use of other educational resources during the study. It would be of interest to know how use of this program compared with use of these other resources.
- The difference in use between interns and upper level residents was substantial. Because the program’s content was “slanted” toward introductory material, and because residents in our ED fill different roles in different years, we cannot easily interpret this difference. More information about how the level of the resident learner affects CAI use would be of value in future software design.

This study has some limitations. A study of a new intervention is potentially subject to the Hawthorne effect. One way to assess this effect is to use a much longer evaluation period. While this was beyond the scope of this pilot study, we plan to include a late evaluation component in the next phase of our research.

Faculty were free to encourage use of the program, and variation in prompting among faculty may have affected our outcomes. In addition, because one of the authors, a supervisor of the residents, distributed the survey, residents may have been less willing to respond negatively.

Because this was a pilot study at one institution, we were limited by the size of the resident population. In addition, the four-point Likert scale limits the discriminative power of our survey results; a larger sample and either a 0–100 scale or a visual analog scale might have provided more discriminative power.

Lastly, we evaluated a specific software application in a single PED, limiting the generalizability of our conclusions to other emergency departments. Further study at more than one site would be necessary to evaluate use in a wider variety of settings.

CONCLUSION

Residents gave generally positive ratings to our just-in-time learning based CAI program, the Virtual Preceptor. Time of use and level of training may be important factors in CAI use in the pediatric ED environment.

Footnotes

Previously presented in part at the Ambulatory Pediatric Association Annual Meeting, May 28, 2001 and at the Society for Academic Emergency Medicine Annual Meeting, June 6–7, 2001.

References

- 1. Syverud S. Academic juggling. Acad Emerg Med. 1999 Apr;6(4):254–5. doi: 10.1111/j.1553-2712.1999.tb00383.x.

- 2. Dev P, Hoffer EP, Barnett GO. Computers in Medical Education. In: Shortliffe EH, Perrault LE, et al, eds. Medical Informatics: Computer Applications in Health Care and Biomedicine. 2nd ed. New York: Springer; 2001. p 610–37.

- 3. Adler MD, Johnson KB. Quantifying the literature of computer-aided instruction in medical education. Acad Med. 2000 Oct;75(10):1025–8. doi: 10.1097/00001888-200010000-00021.

- 4. Pusic MV. Pediatric residents: are they ready to use computer-aided instruction? Arch Pediatr Adolesc Med. 1998 May;152(5):494–8. doi: 10.1001/archpedi.152.5.494.

- 5. eMedicine. www.emedicine.com 1999.

- 6. Spooner SA, Anderson KR. The internet for pediatricians. Pediatr Rev. 1999 Dec;20(12):399–409.

- 7. Santer DM, D’Alessandro MP, Huntley JS, Erkonen WE, Galvin JR. The multimedia textbook. A revolutionary tool for pediatric education. Arch Pediatr Adolesc Med. 1994 Jul;148(7):711–5. doi: 10.1001/archpedi.1994.02170070049008.

- 8. Overton DT. A microcomputer application curriculum for emergency medicine residents using computer-assisted instruction. Ann Emerg Med. 1990 May;19(5):584–6. doi: 10.1016/s0196-0644(05)82195-x.

- 9. Tanner TB, Gitlow S. A computer simulation of cardiac emergencies. Proc Annu Symp Comput Appl Med Care. 1991:894–6.

- 10. Radiology Cases in Pediatric Emergency Medicine. Dept. of Pediatrics, John A. Burns School of Medicine, University of Hawaii; 1996.

- 11. Pusic MV, Johnson KB, Duggan AK. Utilization of a Pediatric Emergency Department Education Computer. Arch Pediatr Adolesc Med. 2001 Feb;155(2):129–34. doi: 10.1001/archpedi.155.2.129.

- 12. Chueh H, Barnett GO. “Just-in-time” clinical information. Acad Med. 1997 Jun;72(6):512–7. doi: 10.1097/00001888-199706000-00016.

- 13. Fein JA, Lavelle J, Giardino AP. Teaching Emergency Medicine to Pediatric Residents: A National Survey and Proposed Model. Pediatr Emerg Care. 1995 Aug;11(4):208–11. doi: 10.1097/00006565-199508000-00003.