Abstract

Background

Computer-based clinical decision support systems (CDSSs) vary greatly in design and function. A taxonomy for classifying CDSS structure and function would help efforts to describe and understand the variety of CDSSs in the literature, and to explore predictors of CDSS effectiveness and generalizability.

Objective

To define and test a taxonomy for characterizing the contextual, technical, and workflow features of CDSSs.

Methods

We retrieved and analyzed 150 English language articles published between 1975 and 2002 that described computer systems designed to assist physicians and/or patients with clinical decision making. We identified aspects of CDSS structure or function and iterated our taxonomy until additional article reviews did not result in any new descriptors or taxonomic modifications.

Results

Our taxonomy comprises 95 descriptors along 24 descriptive axes. These axes are in 5 categories: Context, Knowledge and Data Source, Decision Support, Information Delivery, and Workflow. The axes had an average of 3.96 coded choices each. 75% of the descriptors had an inter-rater agreement kappa of greater than 0.6.

Conclusions

We have defined and tested a comprehensive, multi-faceted taxonomy of CDSSs that shows promising reliability for classifying CDSSs reported in the literature.

1. INTRODUCTION

There is increasing interest in the use of computer-based clinical decision support systems (CDSSs) to reduce medical errors (1) and to increase health care quality and efficiency (2). However, CDSSs vary greatly in their design, function, and use, and little is known about how various CDSS characteristics are related to clinical effectiveness, generalizability of evaluation results, and system portability. A system for characterizing CDSSs is needed to better understand the diversity of CDSSs.

In this paper, we present a taxonomy of CDSSs highlighting aspects of CDSS design and function that are likely to be important for understanding CDSS effectiveness, generalizability, and workflow impact. While there have been other CDSS taxonomies (3–6), ours is the first designed specifically for furthering the science of CDSS evaluation rather than being part of broad reviews for technical (4) or information technology management (3) audiences.

2. METHODS

2.1 Data Source

Using the keywords clinical decision support, decision support, computers, and software, we searched Medline for English-language articles published between 1975 and 2002 describing or reviewing computer systems that assist physicians and/or patients with clinical decision making. We excluded systems that were strictly educational, that only displayed results, or that were directly therapeutic (e.g., x-ray therapy dosing or computer-assisted surgery). Meta-analysis and review articles were used to identify additional reports. Studies of all types, from descriptive reports to randomized controlled trials, were included.

2.2 Taxonomy Development

The taxonomy was developed iteratively. We identified CDSS descriptors (e.g., outpatient target setting) by independently reviewing 10 to 20 articles at a time. We then grouped these descriptors into axes representing higher-order aspects of CDSS structure or function. This taxonomy was extended and refined as needed through additional rounds of independent article review and joint discussion, until additional article reviews did not result in any new descriptors or taxonomic modifications. Our guiding principle was to identify descriptors that may be associated with CDSS effectiveness (7–9) and generalizability, or that would clarify how the CDSS was implemented within the users’ workflow. After the taxonomy was finalized, inter-rater agreement for each descriptor was calculated for a subset of 9 RCT articles from 1995 to 2002 using Cohen’s kappa (10).

3. RESULTS

155 articles were retrieved, yielding 150 articles that satisfied our inclusion criteria. The taxonomy was developed using a purposeful sample of 150 articles selected to reflect a wide range of CDSS characteristics. These 150 articles included 27 descriptive reports, 27 non-randomized evaluation studies, and 96 randomized trials. A preliminary calculation of inter-rater agreement was performed on the coding of 9 RCT reports that had not been used for taxonomy development.

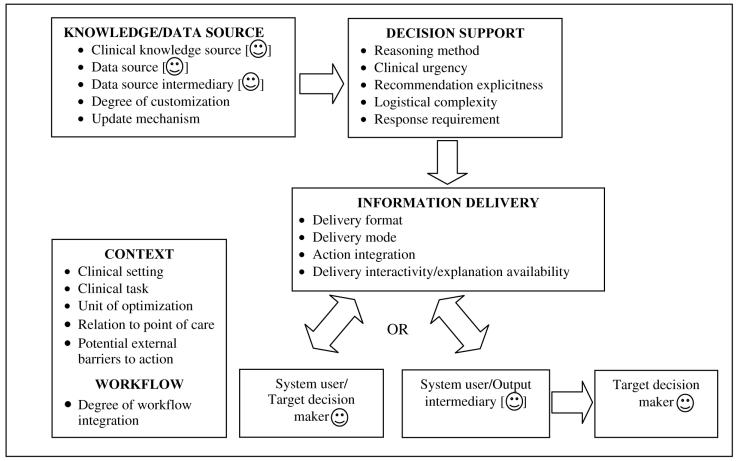

The final taxonomy (Figure 1) consists of 24 axes grouped into 5 broad categories: Context (n = 5 axes), Knowledge and Data Source (n = 6), Decision Support (n = 5), Information Delivery (n = 4), and Workflow (n = 4). Each axis had an average of 3.96 descriptors (total = 95 descriptors). Twenty-one axes could be coded with more than one descriptor; the remaining 3 axes (Update Mechanism, Action Integration, and Degree of Workflow Integration) had mutually exclusive descriptors. The taxonomy and detailed usage instructions are available online at http://rctbank.ucsf.edu/CDSStaxonomy/.

Figure 1. Overview of Taxonomy Axes.

This figure illustrates how the 24 taxonomy axes are related to the CDSS workflow and to each other. The ☺ and [☺] symbols denote human and possible human roles, respectively. Both CDSS features (e.g., Clinical Knowledge Source) and workflow features (e.g., whether a data input intermediary is needed) are important for describing the CDSS.
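The grouping of axes into categories can be written down as a small data structure, which makes the axis and descriptor counts easy to check. The sketch below is illustrative only: the axis names are those described in Sections 3.1 to 3.5, while the dictionary layout and the function names are assumptions introduced here, not part of the published taxonomy.

```python
# Axis names follow Sections 3.1-3.5 of this paper.
# The dictionary layout and helper names are illustrative assumptions.
TAXONOMY_AXES = {
    "Context": [
        "Clinical Setting",
        "Clinical Task",
        "Unit of Optimization",
        "Relation to Point of Care",
        "Potential External Barriers to Action",
    ],
    "Knowledge and Data Source": [
        "Clinical Knowledge Source",
        "Data Source",
        "Data Source Intermediary",
        "Data Coding",
        "Degree of Customization",
        "Update Mechanism",
    ],
    "Decision Support": [
        "Reasoning Method",
        "Clinical Urgency",
        "Recommendation Explicitness",
        "Logistical Complexity of Recommended Action",
        "Response Requirement",
    ],
    "Information Delivery": [
        "Delivery Format",
        "Delivery Mode",
        "Action Integration",
        "Delivery Interactivity/Explanation Availability",
    ],
    "Workflow": [
        "System User",
        "Target Decision Maker",
        "Output Intermediary",
        "Degree of Workflow Integration",
    ],
}

# Axes whose descriptors are mutually exclusive, as stated in the Results.
MUTUALLY_EXCLUSIVE_AXES = {
    "Update Mechanism",
    "Action Integration",
    "Degree of Workflow Integration",
}

TOTAL_DESCRIPTORS = 95  # reported total number of descriptors


def count_axes(taxonomy):
    """Return the total number of axes across all categories."""
    return sum(len(axes) for axes in taxonomy.values())


def mean_descriptors_per_axis(taxonomy, total_descriptors):
    """Return the average number of descriptors per axis."""
    return total_descriptors / count_axes(taxonomy)


print(count_axes(TAXONOMY_AXES))  # 24
print(round(mean_descriptors_per_axis(TAXONOMY_AXES, TOTAL_DESCRIPTORS), 2))  # 3.96
```

Keeping the mutually exclusive axes in a separate set would let a coding tool reject records that assign two descriptors to one of those axes, while allowing multiple descriptors everywhere else.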

3.1 Context Axes

The context of a CDSS’s use and evaluation affects the system’s generalizability and relevance.

Clinical Setting: CDSSs can be used in an inpatient or outpatient setting, or without any relationship to a healthcare entity (e.g., a web site for smoking cessation). Medical trainees may be involved in teaching institution settings.

Clinical Task: Traditionally, CDSSs have been evaluated on the basis of their target task, such as prevention or screening, diagnosis, treatment, drug dosing or prescribing, and test ordering. To these tasks, we add health-related behaviors (e.g., exercise), as well as chronic disease management because CDSSs for chronic diseases may be more likely to fail (8).

Unit of Optimization: CDSSs may be designed to optimize patient outcomes (e.g., blood pressure) or physician/organizational outcomes (e.g., clinic costs). This characteristic affects relevance and the ability to secure buy-in from future clinical users.

Relation to Point of Care: Some CDSSs aim to support decisions that should be made during a shared clinician-patient encounter (e.g., whether to undergo surgery). For these CDSSs, the recommendation could be delivered to the target decision maker before, during, or after that shared encounter (synchronous with the “point of care”). Other decisions could be (e.g., flu vaccination) or always are (e.g., preparation of a low-fat meal) made independently of any shared clinician-patient encounter (asynchronous), and are coded as such.

Potential External Barriers to Action: All CDSSs by definition support decision making: the commitment to an action that allocates resources. If the patient is the CDSS’s target decision maker, the recommended actions may involve substantial socioeconomic, psychological, or other barriers (e.g., a recommendation for a follow-up visit may require arranging transportation and child care). CDSSs recommending actions with greater potential external barriers may be more prone to failure.

3.2 Knowledge and Data Source Axes

Axes in this category classify the source, quality, and customization of the CDSS’s knowledge and data. Both system users and researchers need this information to judge the credibility and scientific strength of the CDSS’s recommendations.

Clinical Knowledge Source: The clinical knowledge underlying a CDSS can be coded as being derived from high-quality sources, clinician-developers, and/or from participation of the system’s eventual users (buy-in processes). We designated as high-quality sources national or professional society guidelines, well-accepted standards (e.g., the Beck Depression Inventory for depression diagnosis), and published randomized trials or analytic reviews (systematic reviews, decision and cost-effectiveness analyses). Most CDSS reports provide sufficient data to allow the coding of knowledge source to this approximation of quality.

Data Source: The patient and other data upon which the recommendations are generated may be acquired directly from computerized order entry, an electronic medical record (EMR) or data repository, or a medical instrument (e.g., EKG). Alternatively, the data may come from a paper chart, in which case a data input intermediary (see axis below) must exist. If the data comes from a person (the patient, a clinician, or an ancillary staff member), that person may directly enter the data or a data input intermediary may be required. These characteristics affect the likelihood that a CDSS can be successfully fielded in various settings.

Data Source Intermediary: A data input intermediary is a person who inputs data from the Patient Data Source (see above) into the CDSS. Intermediaries can be patients, physicians, other clinicians (e.g., a nurse), or non-clinicians. Clinician intermediaries can be active — one who uses clinical knowledge (e.g., taking a history) — or passive. Systems may or may not require a data source intermediary.

Data Coding: Input data could be free text, coded according to a local coding scheme, or coded according to a widely used schema (e.g., ICD-9, SNOMED). This characteristic was rarely described in our reviewed articles.

Degree of Customization: CDSS recommendations vary in their customization to the patient. Generic recommendations are undifferentiated (e.g., exercise more). Targeted ones are customized only to the patient’s age, gender, and/or diagnosis (e.g., mammography for older women). Personalized recommendations incorporate individual patient clinical or lifestyle data (e.g., mammography overdue given date of last mammogram). Greater customization may be associated with greater clinical effectiveness.

Update Mechanism: A CDSS’s knowledge base should ideally be evidence-adaptive: kept up-to-date with the best available evidence (11). The update mechanism can be automated, manual, or non-existent. In the reviewed articles, this feature was almost never described.

3.3 Decision Support Axes

These axes characterize the nature of the decision(s) supported and the type of support given.

Reasoning Method: Types of CDSS reasoning engines include rule-based systems, neural networks, belief networks, and many others. The reasoning method was described in very few of the CDSS reports we reviewed.

Clinical Urgency: CDSSs may provide decision support for decisions that need to be made imminently. Such CDSSs may receive more user attention and may therefore have greater effectiveness. A related notion, clinical priority, is also likely to be related to effectiveness, but cannot reliably be coded and is therefore not part of the taxonomy.

Recommendation Explicitness: Users may be more likely to follow explicit recommendations (e.g., “screen for depression”) than implicit ones (e.g., “daily CBC tests are not ordinarily indicated”).

Logistical Complexity of Recommended Action: Users may be more likely to perform simple, one-step recommended actions than complex actions with multiple steps over time or space or requiring coordination with other people. All behavior changes (e.g., exercise more) are considered complex.

Response Requirement: CDSSs may require the target decision maker to respond to the recommendation with a non-committal acknowledgement, a statement of what action was taken, and/or an explanation for non-compliance. As the response requirement increases, compliance may improve but CDSS usage may decrease.

3.4 Information Delivery Axes

CDSS recommendations can be delivered in many paper- and computer-based ways.

Delivery Format: Recommendations can be delivered in paper-based format (e.g., alone or accompanied by paper chart, fax, mail), via online access (stand-alone, integrated with an EMR, or over the WWW), or via other technology (e.g., phone, pager, e-mail).

Delivery Mode: “Pull” systems deliver their recommendations only after the target decision maker explicitly requests a recommendation (e.g., by entering data into a particular field). “Push” systems deliver recommendations without the target decision maker’s request or consent. In a busy practice, push systems may be more effective but may also be more resented.

Action Integration: CDSSs may offer target decision makers the ability to complete the recommended action with minimal effort (e.g., a click, a check mark). Action integration does not have to be computer-based.

Delivery Interactivity/Explanation Availability: Target decision makers who can request additional information or an explanation of the recommended action may be more likely to comply with the CDSS.

3.5 Workflow Axes

CDSSs are as much a process as a technological intervention. Thus, a CDSS’s workflow requirements and impact are important for understanding its effects and its generalizability.

System User: System users are those individuals who directly interact with the CDSS output. System users may include output intermediaries and target decision makers.

Target Decision Maker: The targeted decision maker may be the physician, patient, ancillary staff member, or the patient together with a physician or staff member.

Output Intermediary: An output intermediary is a person who handles or manipulates the information generated by the CDSS before it is viewed by the Target Decision Maker. Systems may or may not have an output intermediary. The output intermediary can be active (e.g., paging correct care provider depending on type of recommended action) or passive (e.g., paper-clipping encounter form to chart). To isolate the workflow requirements of a CDSS, an output intermediary is coded only if that person’s function is entirely a result of that CDSS (e.g., only if no encounter form would otherwise have to be paper-clipped to the chart).

Degree of Workflow Integration: CDSSs that are compatible with the organization’s workflow are likely to have higher usage and effectiveness. For “push” CDSSs, we additionally code whether the target decision maker must halt his/her workflow to process the CDSS recommendation.

3.6 Inter-Rater Agreement

Reporting completeness varied greatly and was often ambiguous, especially regarding knowledge source and workflow. For 65 (74%) of the 88 descriptors that we tested inter-rater agreement on, there was complete agreement between the two raters. For 48 (55%) of the 71 descriptors for which a kappa could be calculated, the kappa was >= 0.6. For 10 (11%) descriptors in the following axes, the kappa was < 0.5: Unit of Optimization, Relation to Point of Care, Data Source, Delivery Interactivity/Explanation Availability, System User, Output Intermediary, Potential External Barriers to Action, and Degree of Workflow Integration. All disagreements resulted from ambiguous reporting or coding errors, and were in each case resolved by subsequent discussion.
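For readers unfamiliar with the statistic, the following is a minimal sketch of Cohen's kappa (10) for two raters coding a single descriptor as present (1) or absent (0) across a set of articles. The ratings shown are hypothetical and are not data from this study; the function name and the choice to return `None` for the undefined case are assumptions made for illustration. The undefined case arises when both raters assign the same single code to every article, which is one plausible reason a kappa cannot be calculated for a descriptor even though the raters agree completely.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' nominal codes on the same items.

    Returns None when chance agreement equals 1 (both raters used the
    same single code for every item), because kappa is then undefined.
    """
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("Both raters must code the same non-empty set of items.")

    n = len(rater_a)
    # Observed agreement: proportion of items coded identically.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: sum over codes of the product of marginal proportions.
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)

    if expected == 1.0:
        return None
    return (observed - expected) / (1 - expected)


# Hypothetical codes for one descriptor across 9 articles (not study data).
rater_1 = [1, 1, 1, 0, 0, 0, 1, 0, 1]
rater_2 = [1, 1, 0, 0, 0, 0, 1, 0, 1]
print(round(cohens_kappa(rater_1, rater_2), 2))  # 0.78

# Both raters code the descriptor as absent everywhere: kappa is undefined.
print(cohens_kappa([0] * 9, [0] * 9))  # None
```

In the first example the raters agree on 8 of 9 articles (observed agreement 0.89), chance agreement is 40/81, and kappa is 32/41, or about 0.78.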

4. DISCUSSION

Computer-based CDSSs vary greatly in their context of use, knowledge and data sources, nature of decision support offered, information delivery, and workflow demands and integration. To characterize this variety, we have defined a CDSS taxonomy based on a wide range of CDSS reports in the literature. Our taxonomy consists of 95 descriptors along 24 axes that capture key elements of CDSS design and function. Compared to other CDSS taxonomies (3–6), ours is at least as comprehensive and is the only one specifically designed for classifying CDSSs reported in the literature.

Our taxonomy has three primary uses. First, it can be used to describe individual CDSSs in a standard form, for a quick overview of the CDSS’s characteristics and workflow impact. Table 1 shows an example of this descriptive use. We expect the taxonomy to be continually refined from use and from empiric studies of CDSS effectiveness or generalizability, which may suggest new taxonomic descriptors. As it evolves, the taxonomy should serve as a guide to CDSS investigators on how to improve the completeness with which they describe their CDSSs in the literature.

Table 1. CDSS coded using the taxonomy. Example coding of Burack, et al. (12), a CDSS that generated mammography reminders to both physicians and patients.

| Axis | Coding |
| --- | --- |
| Context | |
| Clinical setting | Outpatient |
| Clinical Task | Prevention |
| Unit of Optimization | Patient |
| Relation to Point of Care | May or may not occur during shared clinician-patient encounter |
| Potential Completion Barriers | Contextual or socioeconomic barriers |
| Knowledge and Data Source | |
| Clinical Knowledge Source | National or professional society guidelines |
| Data Source | Paper chart |
| Data Source Intermediary | Non-physician staff active intermediary |
| Data Coding | Not described |
| Degree of Customization | Personalized |
| Update Mechanism | Not described |
| Decision Support | |
| Reasoning Method | Not described |
| Clinical Urgency | Non-urgent |
| Recommendation Explicitness | Explicit recommendation |
| Logistical Complexity | Complex for patient decision maker, simple for physician decision maker |
| Information Delivery | |
| Delivery Format | By mail for patient, printed with chart for physician |
| Delivery Mode | Push |
| Action Integration | Integrated for physician, not for patient |
| Delivery Interactivity/Explanation Availability | Non-interactive system for patient and physician, with explanation available for physician only |
| Workflow | |
| System User | Non-physician staff |
| Target Decision Maker | Patient, physician |
| Output Intermediary | Non-clinician staff passive processor |
| Workflow Integration | Moderately to well integrated, allows for workflow flexibility |

A second use of our taxonomy, which we are currently undertaking, is for classifying reported CDSSs to characterize the types of CDSSs that are being developed and evaluated. Finally, in other ongoing work, we are using the taxonomy’s descriptors as potential explanatory variables in a meta-regression on CDSS success.

Limitations of our current taxonomy include the need to determine inter-rater coding agreement among other coders and on a larger number of articles. Also, our taxonomy is not intended for supporting CDSS purchasing decisions: there are no descriptors for commercial characteristics such as manufacturer or cost, for example. The taxonomy is primarily intended for characterizing CDSSs to achieve greater understanding of potential predictors of CDSS effectiveness and generalizability. As this understanding develops, the taxonomy can be pruned to include only the most important descriptors and axes.
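Using descriptors as explanatory variables in a meta-regression, as mentioned above, requires turning each coded CDSS into a row of indicator (0/1) variables. The sketch below shows one way this might be done; it is not the procedure used in our ongoing work. The vocabulary is deliberately partial and lists only descriptors named in the text for three axes, the descriptor labels are shortened (e.g., "Explicit" for "Explicit recommendation"), and all identifiers are hypothetical. The example codes are those given for Burack, et al. (12) in Table 1.

```python
# Partial, illustrative vocabulary: only three axes, with descriptor
# labels shortened from the text. Not the full 95-descriptor taxonomy.
AXIS_VOCABULARY = {
    "Delivery Mode": ["Push", "Pull"],
    "Degree of Customization": ["Generic", "Targeted", "Personalized"],
    "Recommendation Explicitness": ["Explicit", "Implicit"],
}

# Codes for these three axes as listed in Table 1 (Burack, et al.).
# Sets are used because most axes allow more than one descriptor.
burack_coding = {
    "Delivery Mode": {"Push"},
    "Degree of Customization": {"Personalized"},
    "Recommendation Explicitness": {"Explicit"},
}


def to_indicator_row(coding, vocabulary):
    """Convert one coded CDSS into a dict of 0/1 indicator variables."""
    row = {}
    for axis, descriptors in vocabulary.items():
        chosen = coding.get(axis, set())
        unknown = chosen - set(descriptors)
        if unknown:
            raise ValueError(f"Unknown descriptors for {axis}: {sorted(unknown)}")
        for descriptor in descriptors:
            row[f"{axis}: {descriptor}"] = 1 if descriptor in chosen else 0
    return row


row = to_indicator_row(burack_coding, AXIS_VOCABULARY)
for name, value in row.items():
    print(name, value)
```

An axis that is not described in a report simply yields all zeros here; a real analysis would need to decide whether "not described" should instead be treated as missing data.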

In conclusion, we have defined and tested a multi-faceted taxonomy for characterizing the contextual, technical, and workflow features of CDSSs. This taxonomy should help efforts to describe and understand the variety of CDSSs in the literature, and to explore predictors of CDSS effectiveness and generalizability.

REFERENCES

1. Kohn LT, Corrigan JM, Donaldson MS, editors. To Err is Human: Building a Safer Health System. Washington, DC: National Academy Press; 1999.

2. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academy Press; 2001.

3. Perreault L. A Pragmatic Framework for Understanding Decision Support. Journal of Healthcare Information Management. 1999;13(2):5–21.

4. Kuperman G, Sittig D, Shabot M. Clinical Decision Support for Hospital and Critical Care. Journal of Healthcare Information Management. 1999;13(2):81–96.

5. Teich JM, Wrinn MM. Clinical decision support systems come of age. MD Comput. 2000;17(1):43–6.

6. Broverman CA. Standards for Clinical Decision Support Systems. Journal of Healthcare Information Management. 1999;13(2):23–31.

7. Trivedi MH, Kern JK, Marcee A, Grannemann B, Kleiber B, Bettinger T, et al. Development and implementation of computerized clinical guidelines: barriers and solutions. Methods Inf Med. 2002;41(5):435–42.

8. Rousseau N, McColl E, Newton J, Grimshaw J, Eccles M. Practice based, longitudinal, qualitative interview study of computerised evidence based guidelines in primary care. BMJ. 2003;326(7384):314. doi: 10.1136/bmj.326.7384.314.

9. Wendt T, Knaup-Gregori P, Winter A. Decision support in medicine: a survey of problems of user acceptance. Stud Health Technol Inform. 2000;77:852–6.

10. Cohen J. A coefficient of agreement for nominal scales. Educ Psych Meas. 1960;20:37–46.

11. Sim I, Gorman P, Greenes R, Haynes R, Kaplan B, Lehmann H, et al. Clinical Decision Support Systems for the Practice of Evidence-Based Medicine. J Am Med Inform Assoc. 2001;8(6):527–534. doi: 10.1136/jamia.2001.0080527.

12. Burack RC, Gimotty PA. Promoting Screening Mammography in Inner-City Settings. Med Care. 1997;35(9):921–31. doi: 10.1097/00005650-199709000-00005.