Abstract

Policymakers concerned about maintaining the integrity of science have recently broadened their attention from misbehaving individuals to the characteristics of the environments in which scientists work. Little empirical evidence exists, however, about the role of organizational justice in promoting or hindering scientific integrity. Drawing on a survey of NIH-funded early- and mid-career scientists, we find that when scientists believe they are being treated unfairly, they are more likely to behave in ways that compromise the integrity of science. Perceived violations of distributive and procedural justice were positively associated with self-reports of misbehavior among scientists.

Keywords: scientific misbehavior, integrity, organizational justice, procedural justice, distributive justice

Procedural and distributive justice are central concepts in the organizational justice literature (Bies & Tripp, 1998; Folger & Cropanzano, 1998; Greenberg, 1993; Greenberg, 1997; Masterson, Lewis, Goldman & Taylor, 2000; Moorman, 1991; Organ, 1988; Podsakoff, MacKenzie, Paine, & Bacharach, 2000; Skarlicki & Folger, 1997). When people regard the distribution of resources within an organization, and the decision process underlying that distribution, as fair, their confidence in the organization is likely to be bolstered. When they believe either the distribution or the procedures for distribution to be unfair, however, they may take actions to compensate for the perceived unfairness. Furthermore, recent work in the justice literature suggests that social identity plays a crucial role in how people respond behaviorally to perceptions of justice or fairness (Clay-Warner, 2001; Skitka, 2003; Tyler & Blader, 2003). Perceptions of injustice may threaten one’s feelings of identification or standing within a group, a threat that may prompt compensatory behavior to protect or enhance one’s group membership or reputation.

Policymakers intent on maintaining the integrity of science have turned their attention to the way characteristics of the environments in which scientists work promote or inhibit scientific integrity (Institute of Medicine and National Research Council Committee on Assessing Integrity in Research Environments, 2002), but at present there is little empirical evidence about the way perceptions of organizational justice influence the behavior of scientists. Our research offers the first systematic analysis of the relationship between perceptions of justice and scientists’ behaviors. In particular, we focus on the interactions among perceptions of organizational justice, the social identity of scientists, and behaviors that threaten the integrity of science.

Theoretical Background and Hypotheses

In previous work (Martinson, Anderson, & De Vries, 2005) we documented substantial levels of behaviors that may compromise the integrity of science in two samples of National Institutes of Health (NIH)-funded scientists: these behaviors ranged from carelessness, to misbehavior, to serious misconduct. Our goal here is to examine whether these self-reported misbehaviors are associated with these scientists’ perceptions of organizational justice (both distributive and procedural), and whether perceived threats to one’s identity as a scientist affect the strength of the relationship between perceptions of procedural justice and behavioral responses. Using data from our survey, we test the following hypotheses:

Hypothesis 1: The greater the perceived distributive injustice in science, the greater the likelihood of a scientist engaging in misbehavior.

Hypothesis 2: The greater the perceived procedural injustice in science, the greater the likelihood of a scientist engaging in misbehavior.

Hypothesis 3: Perceptions of injustice are more strongly associated with misbehavior among those for whom the injustice represents a more serious threat to social identity (e.g., early-career scientists, female scientists in traditionally male fields).

These hypotheses are based on concepts drawn from the field of organizational justice and social psychology, areas of research that are quite useful for understanding the behavior of scientists but that are seldom employed by those who study research integrity. Empirical tests of our first and second hypotheses can be made by assessing the “main effects” of procedural and distributive injustice measures on the outcome measure of misbehavior. Testing our third hypothesis, however, requires assessment of interactions between measures of injustice and characteristics of individuals such as their field of study, sex, and career stage. Before presenting our data and findings, we offer a brief history of research and theories of organizational justice.

Organizational Justice Principles

Organizational justice is an umbrella term used to refer to individuals’ perceptions about the fairness of decisions and decision-making processes within organizations and the influences of those perceptions on behavior (Adams, 1965; Clayton & Opotow, 2003; Colquitt, Conlon, Wesson, Porter, & Ng, 2001; Folger & Cropanzano, 1998; Greenberg, 1988; Pfeffer & Langton, 1993; Tyler & Blader, 2003). Subsumed in this term are multiple types of justice, of which two subtypes have been studied most extensively: distributive justice, that is, fairness in the distribution of resources as an organizational outcome (Adams, 1965; Leventhal, 1976, 1980), and procedural justice, that is, fairness in the processes and procedures used to determine the distribution of resources (Leventhal, 1980; Thibaut & Walker, 1975).

Work in organizational justice theory has largely moved from a focus on distributive justice to consideration of the justice of organizational procedures. Research on distributive justice, typified by the “equity equation” positing a balance between one’s own ratio of inputs to outcomes and another’s ratio of inputs to outcomes, examined the balance between inputs to, and rewards from, exchange relationships with others (Adams, 1965; Deutsch, 1975; Leventhal, 1976). Researchers soon recognized that mathematical formulations of equity could not fully explain observed behaviors. For example, individuals often apply principles other than equity, such as equality or need, in evaluating social exchanges (Deutsch, 1975), and fairness in organizations is often assessed using factors other than distributional outcomes, such as welfare, deservingness, and liberty (Lamont, 2003). In the past few years, as a result of research that “found a predominant influence of procedural justice on people’s reactions in groups” (Tyler & Blader, 2000), the field has shifted its focus to perceptions of the fairness of the procedures used to arrive at distribution decisions (Clayton et al., 2003; Masterson et al., 2000; Skitka & Crosby, 2003).
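For readers unfamiliar with it, Adams’s (1965) equity comparison is commonly written as an equality of outcome-to-input ratios; the symbols below are our own notation, not the authors’. The text phrases the ratios as inputs to outcomes, but taking reciprocals of both sides leaves the equality unchanged.

```latex
\frac{O_p}{I_p} = \frac{O_o}{I_o}
```

Here $O_p$ and $I_p$ are a person’s outcomes and inputs, and $O_o$ and $I_o$ are those of the comparison other. In this formulation, perceived inequity corresponds to the equality failing in either direction.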

Efforts to integrate the findings of research in organizational justice and social identity represent a new and promising trend in justice studies (Clayton, et al., 2003; Skitka, et al., 2003; Tyler, et al., 2000, 2003). In this line of theory development, social identity mediates the relationship between perceived justice and behavior. Perceptions of justice provide a sense of security in one’s membership or standing in a group. Violations of justice principles introduce vulnerability to one’s social identity, which may in turn increase the likelihood of engaging in harmful or unsanctioned behavior (Lind & Tyler, 1988; Tyler, 1989; Tyler, et al., 2000, 2003). This process may be especially pronounced for those whose social identity is already more fragile or uncertain. We expect that scientists with less well-established reputations in their field will have more fragile identities than their more established counterparts.

Organizational Justice and the Social Identity of Scientists

As academic science has become increasingly dependent on external resources, whether from government or private industry, the culture of science has changed, and with it, what it means to be an academic scientist (Hackett, 1990). The rise of entrepreneurial universities and scientists has increased the overall emphasis on careerism and may have somewhat lessened the view of science as a calling (Etzkowitz, 1983; Ziman, 1990), but scientists continue to identify strongly with their role (Hackett, 1999; Weber, 1946). Not only is science still widely regarded as a vocation, but academic scientists invest substantial resources (e.g., time, money, labor) and incur substantial opportunity costs in preparing themselves for their careers. These factors create a strong identification as a scientist, make it difficult for scientists to create and inhabit alternative identities, and increase the distress associated with threats to that identity.

The practice of academic science in the United States all but requires that its practitioners be members in good standing of networks (often of other scientists) that guide the direction of scientific inquiry, the governance of science, the training of new scientists, and the distribution of scarce resources. Interdependent networks of peers, departments, and institutions of employment, along with agents in the external task environment (peer reviewers, journal editors, grant reviewers, funding agencies, project officers, competitors, and the like), are responsible for implementing agreed-upon procedures for distributing the highly valued and scarce resources of science. Because scientists hold membership at multiple levels, both in local settings (e.g., department, university) and in dispersed groups (e.g., disciplines, peer-review networks), group membership and social identity are particularly important issues for them, not least because one’s success or failure as a scientist is strongly influenced by how one is evaluated by the many members of these multiple groups.

Scientists who are relatively peripheral in the scientific community are especially attuned to perceptions of injustice (Noel, Wann & Branscombe, 1995), and their social identities are more strongly affected by it. They may also be more likely to be targets of injustice. Those on the periphery include scientists who lack tenure or who hold positions that are not eligible for tenure, as well as female scientists working in predominantly male fields. For these scientists, perceptions of being treated unjustly pose both a psychological threat to their social identity and a subjective threat to their livelihood and continued membership in the scientific community. As the popular phrase “publish or perish” makes clear, failure to obtain grant funding and to publish one’s research successfully typically signals the end of a scientist’s research career. Thus, compounding the threat to scientists’ identity, organizational injustice can contribute to employment instability, inadequate fulfillment of career potential, role ambiguity, lack of control over the working environment, lack of feedback from superiors, and uncertainty about expected pay-offs from invested efforts (Matschinger, Siegrist, Siegrist & Dittmann, 1986; Siegrist, 1996).

Anomie, Strain, and Misbehavior

The predicament of researchers who are peripheral to the scientific community is anticipated by an important strand of deviance theory, as is the pressure to deviate from accepted norms as a response to perceived injustice. In 1938, drawing on Durkheim’s concept of anomie, or normlessness, Robert Merton proposed that deviance was the result of structural “strain” produced when valued cultural ends could not be achieved by legitimate societal means (Merton, 1938). Those caught in these stressful situations, where legitimate means to valued ends are blocked, are likely to find deviant (Merton called them “innovative”) pathways to success.

Building on Merton’s ideas, Agnew developed “general strain theory,” a more nuanced examination of both individual responses to strain and the contexts that produce strain. Agnew emphasizes the motivation for deviance: he posits that strain resulting from negative social relationships produces negative affect in the individual (e.g., fear, anger, frustration, alienation), which in turn pressures the individual to “correct” the situation and reduce that affect, with deviant behavior being one possible coping response (Agnew, 1985, 1992, 1995a, 1995b). General strain theory recognizes that deviant behavior is not an inevitable outcome of such pressures and that the likelihood of deviance is a function of individual traits and dispositions as well as contextual factors. A distinctive feature of general strain theory is its focus on situational provocations to deviance, together with its argument that some individuals are more easily provoked than others.

Agnew further asserts that strong social support networks should decrease the likelihood of deviant responses to strain, while having many deviant peers should increase it. According to Agnew, strategies for coping with strain are also important components of the link between strain and deviance. He delineates possible legitimate coping responses, including cognitive, emotional, and behavioral strategies, and notes that these strategies are not equally available to all individuals. The unavailability of legitimate coping responses increases the potential for deviant behavior (Agnew, 1992). Many of the coping strategies Agnew identifies hinge on individuals minimizing, de-emphasizing, or detaching from the situation causing them strain. We argue that these legitimate coping responses are less readily available to academic scientists because of the centrality of their work roles to their individual identities; thus, for scientists, misbehavior is a more likely response to strain.

Behavioral Responses to Perceptions of Organizational Justice and Injustice

Prior research on behavioral responses to perceived injustice has focused on acts against local targets (e.g., sabotage, theft, interpersonal violence; see Colquitt et al., 2002). This is not surprising, because most studies of organizational justice have been conducted in settings in which social exchanges take place within a local organization. The organizational structure of science, however, spans both local settings (departments or centers housed within universities) and external organizational structures (grant-funding systems, national and international peer-review systems). Threats to social identity may come from procedures enacted by either local or distant entities: local procedures typically concern salary, promotions, office space, or other resources, whereas distant ones typically concern grant-funding decisions and editorial peer review.

Based on the theoretical suppositions discussed above, we believe that perceptions of organizational injustice may be a key motivation for deviant behavior among scientists (Agnew, 1992). If scientists perceive that unjust procedures result in an unfair distribution of resources, they may resort to illegitimate or unsanctioned responses to compensate for these injustices (Folger, 1977; Leventhal, 1980; Thibaut & Walker, 1975). Moreover, we believe that behavioral responses to organizational injustice are moderated by the degree to which the injustice threatens one’s identity as a scientist (Clay-Warner, 2001; Clayton et al., 2003; Tyler et al., 2003), with deviant responses being more likely among scientists whose identities are less established and secure.1

Method

In order to explore the relationship between perceived injustice and scientific misbehavior, we developed a survey instrument that measured respondents’ attitudes toward, and behaviors in, the research workplace. Because we wished to contrast the working conditions of newly minted and more established scientists, we designed our sampling frame to include researchers at both early and middle stages of their careers. Our study was reviewed and approved prior to implementation by the Institutional Review Boards of both HealthPartners Research Foundation and the University of Minnesota.

Using databases maintained by the NIH Office of Extramural Research, we created two samples: a sample of mid-career researchers, consisting of 3,600 scientists who had received their first NIH research grant (R01) award sometime during the period 1999–2001, and a sample of recently graduated scientists, consisting of 4,160 NIH-supported postdoctoral trainees who had received either institutional (T32) or individual (F32) postdoctoral training support during 2000 or 2001. In the fall of 2002, we mailed our survey to these two random samples.2

To increase participation in the survey, we used Dillman’s (2000) tailored design method as follows. We sent each scientist in the sample a cover letter, a survey, a postage-paid envelope, and a “response notification” postage-paid postcard. Individuals were asked to return the postcard separately from the survey, to ensure that respondents could remain anonymous and still notify us that they had completed the survey. Non-respondents received another complete survey packet approximately three weeks after the original mailing, and a third mailing several weeks later.

From the sample of 3,600 mid-career scientists, 191 surveys were returned as undeliverable. For purposes of calculating response rates, we removed these individuals from the denominator. We received 1,768 completed surveys from this sample (response rate = 52%). From the sample of 4,160 early-career scientists, 685 surveys were returned as undeliverable and 1,479 completed surveys were received (response rate = 43%). It is possible that many of the non-responses were due to incorrect or incomplete addresses in the NIH database, although the extent of this problem is unknown. For the vast majority of scientists, the addressing information available from NIH was not that of their home department, but that of their institution’s grants office. To the extent that grants offices did not forward our surveys to the appropriate departments, surveys never reached their intended recipients.
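The response-rate arithmetic described above (undeliverable surveys removed from the denominator) can be checked with a few lines of Python; the function name is our own, and the figures are those reported in this section.

```python
def response_rate(completed: int, sampled: int, undeliverable: int) -> float:
    """Completed surveys divided by the number of surveys presumed delivered."""
    eligible = sampled - undeliverable  # undeliverable surveys leave the denominator
    return completed / eligible

# Figures reported in the text for the two samples.
mid_career = response_rate(completed=1768, sampled=3600, undeliverable=191)
early_career = response_rate(completed=1479, sampled=4160, undeliverable=685)

print(f"mid-career: {mid_career:.1%}")      # 1,768 / 3,409
print(f"early-career: {early_career:.1%}")  # 1,479 / 3,475
```

Rounded to whole percentages, these give the 52% and 43% reported above.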

Independent Variables

Our survey included self-report measures of theoretically important dimensions of perceived work environments and personal characteristics. We enumerate these measures here and present descriptive information about them in Table 1.

TABLE 1.

Means (Standard Deviations) or Percentage Distributions of Independent Variables.

| Variable | Mid-Career (N = 1,768) M/% | Mid-Career SD | Early-Career (N = 1,479) M/% | Early-Career SD |
|---|---|---|---|---|
| Age | 44 | (6.8) | 35 | (5.4) |
| Female | 34% | | 53% | |
| Never Partnered | 10% | | 21% | |
| Academic Rank | | | | |
| Postdoctoral Fellows | 0% | | 58% | |
| Instructor/Unranked | 9 | | 31 | |
| Assistant Professor | 41 | | 11 | |
| Associate Professor and above | 51 | | 0 | |
| Field of Study | | | | |
| Biology | 16% | | 20% | |
| Chemistry | 16 | | 16 | |
| Medicine | 38 | | 42 | |
| Social Science | 19 | | 17 | |
| Physics/Math/Engineering | 7 | | 3 | |
| Other or unknown | 5 | | 3 | |
| Distributive Injustice (range 0.17–5) | 0.7 | (0.4) | 0.6 | (0.4) |
| Extrinsic Effort (range 5–30) | 15.5 | (4.9) | 12.7 | (4.9) |
| Extrinsic Reward (range 11–55) | 46.9 | (8.6) | 43.7 | (9.3) |
| Procedural Injustice (range 0–100) | 63.3 | (18.0) | 66.5 | (18.2) |
| Intrinsic Drive (range 6–24) | 15.6 | (3.1) | 15.2 | (3.3) |

DISTRIBUTIVE INJUSTICE

One of our primary predictive measures is the 23-item short form of the Effort-Reward Imbalance (ERI) questionnaire (Peter et al., 1998; Siegrist, 1996; Siegrist, 2001). This measure corresponds directly to the concept of distributive justice originally proposed by Leventhal (1976), a concept that has been used extensively in the fields of management, human resources, and applied social psychology (Colquitt et al., 2001; Gilliland & Beckstein, 1996; Greenberg & Cropanzano, 2001). Respondents rated extrinsic effort items (e.g., “Over the past years, my job has become more and more demanding,” “I am often pressured to work more hours than reasonable”) and extrinsic reward items (e.g., “I receive the respect I deserve from my colleagues,” “Considering all my efforts and achievements, I receive the respect and prestige I deserve at work”) on a 1-to-5 scale, with higher scores indicating greater effort and greater reward, respectively. The resulting subscales were used to compute distributive injustice as the ratio of perceived extrinsic effort (E) to perceived extrinsic reward (R), E/R, with a correction factor to compensate for the different number of items in the two subscales, so that higher values represent greater perceived distributive injustice.
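A minimal sketch of the effort/reward ratio follows. It assumes the correction factor takes the form used in Siegrist’s ERI scoring, c = (number of effort items) / (number of reward items), so that the ratio is E / (R × c); the text does not state c explicitly. With 6 effort and 11 reward items (our assumption, consistent with the 11–55 reward range in Table 1), a ratio above 1 means effort outweighs reward. The function name is hypothetical.

```python
from typing import Sequence

def effort_reward_ratio(effort_items: Sequence[int], reward_items: Sequence[int]) -> float:
    """Distributive injustice as E / (R * c), with c = n_effort / n_reward (assumed).

    Higher values indicate greater perceived distributive injustice.
    """
    if not effort_items or not reward_items:
        raise ValueError("both subscales need at least one item")
    e = sum(effort_items)  # extrinsic effort subscale score
    r = sum(reward_items)  # extrinsic reward subscale score
    # E / (R * n_e / n_r), rearranged so the arithmetic stays in integers until the division
    return (e * len(reward_items)) / (r * len(effort_items))

# Maximum effort with minimum reward, and the reverse (6 effort and 11 reward items assumed).
print(effort_reward_ratio([5] * 6, [1] * 11))
print(effort_reward_ratio([1] * 6, [5] * 11))
```

Under these assumptions, the ratio equals 1 when the average effort item and the average reward item receive the same rating, which is why a group mean below 1 (as reported in the Results) indicates that rewards tend to exceed efforts.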

PROCEDURAL INJUSTICE

We chose the Ladd and Lipset six-item alienation scale (Ladd & Lipset, 1978) over more standard measures of procedural justice (Colquitt, 2001; Moorman, 1991). The Ladd and Lipset measure is appropriate in this context because it is directed specifically to academic scientists and the organizational structures within which they work. Moreover, it covers five of the six core components of procedural justice originally proposed by Leventhal (Leventhal, 1980), addressing bias suppression, correctability, information accuracy, representation, and ethicality, primarily within the context of the peer-review systems of science (e.g., “The top people in my field are successful because they are more effective at ‘working the system’ than others,” “The ‘peer review’ system of evaluating proposals for research grants is, by and large, unfair; it greatly favors members of the ‘old boy network.’”). The scale has been used elsewhere to examine associations among alienation, deviance, and scientists’ perceptions of rewards and career success (Braxton, 1993). Respondents rated their agreement with each item on a 1 to 5 scale.

INTRINSIC DRIVE

Siegrist’s model of effort/reward imbalance posits the potential importance of “intrinsic drive” (also referred to as “over-commitment”) as a factor that may moderate the relationships between effort/reward imbalance and outcomes (Siegrist, 2001). This possibility arises because the effort one exerts at work may reflect intrinsic drive as well as extrinsic motivators. To the extent that one’s work effort is driven by intrinsic as well as extrinsic factors, we anticipate that high intrinsic drive may not be problematic by itself but, when paired with perceptions of effort/reward imbalance or procedural injustice, might well motivate misbehavior. Within the framework of general strain theory, high intrinsic drive can be viewed as a characteristic that could predispose an individual to be more easily provoked to misbehavior in the face of unfair treatment. Intrinsic drive is measured as the sum of respondents’ agreement with six items (e.g., “Work rarely lets me go; it is still on my mind when I go to bed,” “People close to me say I sacrifice too much for my job.”), rated on a 1-to-4 scale and coded so that higher values indicate more intrinsic drive.

SCIENTIST IDENTITY

Central to Hypothesis 3 is the notion that respondents’ self-identification as scientists may moderate the relationship between justice perceptions and the likelihood of misbehavior. Scientists may perceive themselves as relatively peripheral to their scientific community by virtue of their career stage, field of study, sex (Hosek et al., 2005), or a combination of these factors. (See the discussion of organizational justice and identity above regarding the relationship between identity and peripheral group membership.) The sampling frames used provide a marker of career stage (early-career versus mid-career). Data on field of study were collected through an open-ended question (i.e., “In what specific disciplinary field did you earn your highest degree?”) and coded into the broad categories of biology, chemistry, medicine, social science, physics/math/engineering, and miscellaneous or unknown.

PERSONAL CHARACTERISTICS

Given a relative lack of theoretical guidance about which personal characteristics might operate as potential confounders or modifiers of the associations of interest, it seemed reasonable to adjust our predictive models for variables such as sex and marital status. Recently proposed changes to create more “family-friendly” tenure clocks at institutions such as Princeton and the University of California system point to an increasing recognition of the conflicting demands of academic science careers and family life, particularly for women (Bhattacharjee, 2005). In our own samples we find notable differences in family status by sex, with similar patterns in both the early- and mid-career samples. Overall, women are more likely than men to be never married (17% vs. 10%) or previously married (8% vs. 4%) and less likely to be currently married or cohabiting (75% vs. 86%) (χ²(2) = 59.8, p < .001). Likewise, both early- and mid-career women are less likely than their male counterparts to currently be the parent of any young children (aged 18 or under): only 44% of women, compared with 60% of men, in our sample are currently parents of young children (χ²(1) = 80.0, p < .001). These sex differences in family formation can be described as representing opportunity costs that are differentially higher for female than for male scientists. Alternatively, these patterns might indicate a stronger identification with the scientist role among female than among male scientists. Either interpretation would lead us to expect female scientists to be more acutely attuned than male scientists to violations of justice in their work roles. The lack of theoretical expectations with respect to age effects on misbehavior, combined with a correlation of 0.56 in our sample between age and career stage (a theoretically relevant factor), led us to omit age from our multivariate models.

For each of the scales discussed above (distributive and procedural injustice, intrinsic drive), if an individual had missing data for two or fewer items in a given scale, we imputed the mean of that individual’s non-missing scale items for the missing item values before summing to create the scale. After this imputation, some respondents still had more than two items missing on a given scale, and their scale scores could not be computed: 167 were missing distributive injustice, 95 were missing procedural injustice, and 102 were missing intrinsic drive. Including those missing data on other personal characteristics or on the dependent variable, N = 271 individuals were missing data on one or more variables and were omitted from the analyses.
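The person-mean imputation rule described above can be sketched as follows. This is an illustration under our own assumptions: items are stored as a list with `None` for a skipped item, and the helper name `scale_score` is hypothetical.

```python
from statistics import mean
from typing import Optional, Sequence

def scale_score(items: Sequence[Optional[float]], max_missing: int = 2) -> Optional[float]:
    """Sum a scale after person-mean imputation of up to `max_missing` skipped items.

    Skipped items are None. If more than `max_missing` items are skipped,
    the scale score is treated as missing (returned as None).
    """
    observed = [x for x in items if x is not None]
    n_missing = len(items) - len(observed)
    if n_missing > max_missing or not observed:
        return None
    fill = mean(observed)  # this respondent's own mean of non-missing items
    return sum(observed) + n_missing * fill

# Six intrinsic-drive items rated 1-4: one skipped item is imputed, four skipped items are not.
print(scale_score([3, 4, None, 2, 3, 4]))
print(scale_score([3, None, None, None, None, 4]))
```

Imputing the respondent’s own item mean, rather than a sample mean, keeps the imputed scale score on that respondent’s response level while still summing over the full set of items.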

Dependent Variable

Based on previous research (Anderson, Louis, & Earle, 1994; Swazey, Anderson & Louis, 1993) and results from six focus-group discussions with a total of 51 scientists at several top-tier research universities (De Vries, Anderson, & Martinson, 2006), we developed a list of 33 problematic behaviors that were included in the survey. These range from the relatively innocuous (e.g., signing a form, letter, or report without reading it completely) to questionable research practices, outright misbehaviors, and misconduct as formally defined by the U.S. federal government (OSTP, 2000), including data falsification and plagiarism. Survey respondents were asked to report whether they themselves had engaged in any of the specified behaviors during the past three years. We did not attempt to assess frequency, as we doubted that most people could report it accurately. Even though the survey was designed and administered to guarantee respondent anonymity in a way that was both absolute and transparent to respondents, we suspect that residual fear of discovery led to some under-reporting, particularly for the most serious misbehaviors. Psychological denial about misbehavior is another reason to suspect some under-reporting. We have previously published descriptive information on a subset of these behaviors (Martinson et al., 2005).

TOP-TEN MISBEHAVIORS

Given the spectrum of behaviors in our list, it was necessary to identify which would be most likely to be sanctionable. Recognizing that this would be a controversial, perhaps contentious, issue, we consulted six compliance officers at five major research universities and one independent research organization. Four of the universities represented are among the top 20 recipients of NIH funding, and the fifth is an Ivy League institution; furthermore, four of the five universities are among the top 20 producers of doctorates. We asked the compliance officers to rate the likelihood that each behavior, if discovered, would get a scientist in trouble at the university or federal level, on a scale from 0 (unlikely) to 2 (very likely). Each of the 10 items that received the top scores in this assessment was rated 2 by at least four of the six compliance officers and received no rating below 1. Having identified the top-ten misbehaviors as judged by these independent observers, we constructed a binary dependent variable, coded 1 if an individual responded “yes” to one or more of these top-ten misbehavior items and 0 otherwise. If no response was received for any of the ten items (N = 120), this indicator was coded as missing and the observation was excluded from the analysis.
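The two rules in the paragraph above, the rating pattern shared by the top-ten items and the coding of the binary outcome, can be expressed as a short Python sketch. The function names are hypothetical, and we assume responses are stored as `True`/`False` with `None` for an unanswered item. Treating a respondent who answered some items “no” and skipped the rest as 0 is our reading of “0 otherwise.”

```python
from typing import Optional, Sequence

def meets_reported_rating_pattern(ratings: Sequence[int]) -> bool:
    """True if at least four officers rated the item 2 ('very likely') and none rated it below 1.

    Ratings use the 0 (unlikely) to 2 (very likely) scale described in the text.
    """
    return sum(1 for r in ratings if r == 2) >= 4 and min(ratings) >= 1

def any_top_ten(responses: Sequence[Optional[bool]]) -> Optional[int]:
    """Binary outcome: 1 if any 'yes', 0 otherwise, None if all ten items are unanswered."""
    answered = [r for r in responses if r is not None]
    if not answered:
        return None  # no response to any of the ten items: excluded from the analysis
    return 1 if any(answered) else 0

# Six officers' ratings for one hypothetical item, then one respondent's ten answers.
print(meets_reported_rating_pattern([2, 2, 2, 2, 1, 1]))
print(any_top_ten([False] * 9 + [True]))
```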

Analytical Approach

The continuous and categorical measures used in the analyses are described using means (M) and standard deviations (SD) or percentages (%), respectively. Associations between these measures are quantified by Pearson product-moment, point-biserial, or rank-order correlation coefficients, as appropriate. Scale reliabilities are quantified using Cronbach’s alpha (α).
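For reference, Cronbach’s alpha for a k-item scale is α = k/(k − 1) × (1 − Σ item variances / variance of the total score). A minimal standard-library sketch follows, assuming each item is supplied as a column of complete (already imputed) responses; the function name is ours.

```python
from statistics import variance
from typing import Sequence

def cronbach_alpha(item_columns: Sequence[Sequence[float]]) -> float:
    """alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)."""
    k = len(item_columns)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(item_columns[0])
    # Each respondent's total score across the k items.
    totals = [sum(col[i] for col in item_columns) for i in range(n)]
    item_var_sum = sum(variance(col) for col in item_columns)  # sample variances
    return k / (k - 1) * (1 - item_var_sum / variance(totals))

# Three identical items (perfect consistency) versus two unrelated items.
print(cronbach_alpha([[1, 2, 3, 4]] * 3))
print(cronbach_alpha([[1, 2, 1, 2], [1, 1, 2, 2]]))
```

Alpha approaches 1 when items move together, because the total-score variance then greatly exceeds the sum of the individual item variances.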

Multivariate logistic regression was used to predict the likelihood of a “yes” response to one or more of the top-ten misbehaviors. Binary predictors were coded so that 1 corresponded to the presence of the characteristic described by the variable name (e.g., 0 = male, 1 = female for the ‘female’ variable). Prior to analysis, continuous measures were standardized to M = 0 and SD = 1 for ease in interpreting the regression parameters. The main effects for covariates (i.e., sex, marital status) and independent variables (distributive injustice, procedural injustice, intrinsic drive, career stage, field of study) were included in the model. A systematic search for two- and three-way interactions within and between the covariates and independent variables was conducted so that complex relationships between these variables could be identified and the model properly specified. Higher-order effects were retained in the model, along with their constituent effects, if the Type III test for the effect was significant at p < .05. We explain key results from this model by presenting graphs of predicted probabilities derived from significant interactions.
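The sketch below illustrates two steps from the paragraph above: standardizing a continuous measure to mean 0 and SD 1, and turning logistic-regression coefficients that include an interaction into predicted probabilities of the kind plotted later. The coefficients are hypothetical placeholders chosen for illustration, not estimates from this study, and the sketch does not fit a model; fitting would be done with a statistics package.

```python
import math
from statistics import mean, stdev
from typing import List, Sequence

def standardize(values: Sequence[float]) -> List[float]:
    """Rescale a continuous measure to mean 0 and (sample) SD 1."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]

def predicted_probability(intercept: float, b_injustice: float, b_group: float,
                          b_interaction: float, injustice_z: float, group: int) -> float:
    """Inverse logit of a model with one standardized predictor, one binary
    moderator coded 0/1, and their product (interaction) term."""
    eta = (intercept + b_injustice * injustice_z + b_group * group
           + b_interaction * injustice_z * group)
    return 1.0 / (1.0 + math.exp(-eta))

# HYPOTHETICAL coefficients for illustration only; not estimates from this study.
coefs = dict(intercept=-1.0, b_injustice=0.2, b_group=0.1, b_interaction=0.3)
for group in (0, 1):
    curve = [predicted_probability(**coefs, injustice_z=z, group=group) for z in (-1, 0, 1)]
    print(group, [round(p, 3) for p in curve])
```

With a positive interaction coefficient, the predicted probability rises more steeply with injustice in the group coded 1, which is the pattern an interaction graph makes visible.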

An alternative way to code the outcome variable would be as a count of affirmative responses within the set of ten items. In analyses not reported here, we estimated both Poisson and negative binomial regression models predicting such a count variable as an outcome. The results were essentially the same as those obtained with logistic regression, so we present the logistic regression results for their greater ease of interpretation.

Similarly, we have tested the sensitivity of our model results to the omission of one behavior item that some have criticized as open to multiple interpretations, namely, the item asking about changing the design, methodology, or results of a study in response to pressure from a funding source. Omitting this item from our dependent variable does not alter the results we present in support of our hypotheses. Based on this observation, we retained this item in our dependent variable for these analyses.

Results

We first present descriptive statistics on the independent and dependent variables, as well as correlations among them. We then present the results of a multivariate logistic regression analysis, with discussion of interaction effects found in follow-up analyses.

Table 1 presents a description of our study participants in the early and mid-career samples. The two groups are roughly one decade apart in mean age. Women make up only about a third of the mid-career sample, but over half of the early-career group. Ten percent of the mid-career sample has never been married or cohabited with a partner, compared with twice that proportion of early-career scientists. There is little overlap between the two samples in terms of academic rank: the mid-career sample is concentrated at the assistant- and associate-professor levels, while the early-career sample is concentrated at entry-level positions, with more than half in postdoctoral positions. The respondents are spread across NIH-funded fields of study, though approximately 40% of each group are in medical fields.

Early- and mid-career scientists reported similar levels of injustice of both kinds. Distributive injustice (the ratio of extrinsic effort to extrinsic reward, with a correction factor for unequal numbers of component items) has a mean of less than 1 for each group, indicating that reward scores exceed effort scores on average. Procedural injustice, ranging from 0 to 100, has a mean near 65 for each group, while intrinsic drive averaged about 15 for both groups, on a scale from 6 to 24.
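The text does not give the item counts or the exact correction factor. A minimal sketch, assuming a Siegrist-style effort-reward ratio E / (R × c) in which c is the number of effort items divided by the number of reward items, shows what the correction does: it makes the ratio equal to mean effort divided by mean reward, so a value below 1 means reward scores exceed effort scores.

```python
def distributive_injustice(effort_items, reward_items):
    """Effort-reward ratio E / (R * c), with c = n_effort / n_reward (assumed correction)."""
    effort_total = sum(effort_items)
    reward_total = sum(reward_items)
    c = len(effort_items) / len(reward_items)
    return effort_total / (reward_total * c)

# Hypothetical respondent: 3 effort items, 6 reward items.
ratio = distributive_injustice([3, 3, 3], [4, 4, 4, 4, 4, 4])
```

Here the raw sums (9 versus 24) would suggest a large imbalance only because the reward scale has twice as many items; after correction the ratio is 3/4, the ratio of the item means.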

The dependent variable in this study is self-reported misbehavior by scientists. Table 2 displays the top-ten misbehavior items that the compliance officers we consulted viewed as most likely to be sanctionable, together with the percentages of respondents who indicated that they had engaged in each behavior at least once in the previous three years (as previously published and discussed elsewhere; Martinson et al., 2005).

TABLE 2.

Percentage indicating having engaged in behavior in the past three years, by sample (Sorted by prevalence in mid-career sample).

| Behavior | Mid-Career % Yes | Early-Career % Yes |
|---|---|---|
| 1. Falsifying or “cooking” research data | 0.2 | 0.5 |
| 2. Ignoring major aspects of human-subjects requirements | 0.3 | 0.4 |
| 3. Not properly disclosing involvement in firms whose products are based on one’s own research | 0.4 | 0.3 |
| 4. Relationships with students, research subjects or clients that may be interpreted as questionable | 1.3 | 1.4 |
| 5. Using another’s ideas without obtaining permission or giving due credit | 1.7 | 1.0 |
| 6. Unauthorized use of confidential information in connection with one’s own research | 2.4 | 0.8 |
| 7. Failing to present data that contradict one’s own previous research | 6.5 | 5.3 |
| 8. Circumventing certain minor aspects of human-subjects requirements (e.g. related to informed consent, confidentiality, etc.) | 9.0 | 6.0 |
| 9. Overlooking others’ use of flawed data or questionable interpretation of data | 12.2 | 12.8 |
| 10. Changing the design, methodology or results of a study in response to pressure from a funding source | 20.6 | 9.5 |

Table 3 presents bivariate correlations between variables that are included in the multivariate analyses. Here, misbehavior is a dichotomous variable that indicates whether a scientist did (1) or did not (0) engage in any of the top-ten behaviors in the previous three years. The measures of injustice and intrinsic drive are standardized. Distributive injustice, procedural injustice and intrinsic drive are modestly correlated with each other and have adequate internal consistencies. Although the ratio nature of the distributive injustice measure precludes calculation of an alpha statistic, the two component scales also have adequate internal consistencies (.83 for extrinsic effort and .86 for extrinsic reward). The modest positive correlations among these scales indicate that: (1) those perceiving procedural injustice are somewhat more likely than others to also report perceiving distributive injustice, and (2) higher reports of intrinsic drive are positively correlated with perceptions of both procedural and distributive injustice.
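Table 3 reports Cronbach's alpha for the procedural-injustice and intrinsic-drive scales. As a reference point (the standard textbook formula, not code from the study), alpha for k items is k/(k − 1) × (1 − sum of item variances / variance of the summed score). Because it is defined for a summed scale, it can be computed for the effort and reward components but not for their ratio. The data in the sketch are hypothetical.

```python
from statistics import pvariance

def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents-by-items matrix (list of equal-length rows).

    Population variances are used throughout; using sample variances for both the
    items and the total gives the same ratio, so alpha is unchanged.
    """
    k = len(rows[0])
    item_vars = [pvariance([row[j] for row in rows]) for j in range(k)]
    total_var = pvariance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)

perfectly_consistent = [[1, 1], [2, 2], [3, 3]]
partly_consistent = [[1, 2], [2, 1], [3, 3]]
```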

TABLE 3.

Correlations and Descriptive Statistics of Analytic Variables.

| | Top Ten | Distrib. Injust. | Proced. Injust. | Intrin. Drive | Female | Early Career | Never Partnered |
|---|---|---|---|---|---|---|---|
| Top Ten Misbehaviors | | 0.03 | 0.10** | 0.05* | −0.08** | −0.11** | −0.03 |
| Distributive Injustice (z) | | | 0.25** | 0.42** | 0.01 | −0.07** | 0.02 |
| Procedural Injustice (z) | | | | 0.18** | 0.06** | 0.09** | −0.02 |
| Intrinsic Drive (z) | | | | | −0.005 | −0.06** | 0.01 |
| Female | | | | | | 0.21** | 0.11** |
| Early Career | | | | | | | 0.19** |
| N | 3127 | 3080 | 3247 | 3145 | 3247 | 3247 | 3186 |
| M/% | 33% | 0 | 0 | 0 | 42% | 46% | 13% |
| SD | | 1 | 1 | 1 | | | |
| α | | NA | 0.75 | 0.78 | | | |

Note: * p < .05; ** p < .01.

Engagement in one or more of the top-ten misbehaviors is positively correlated with procedural injustice and intrinsic drive, but its correlation with distributive injustice is not statistically significant. The misbehavior measure is negatively correlated with being an early-career scientist and with being female.

Scientists in the mid-career group tend to report higher levels of distributive injustice. Procedural injustice shows the opposite pattern, in that early-career scientists report higher levels of this form of injustice (as do women). Intrinsic drive, like distributive injustice, is higher among mid-career scientists.

Because the sample is large, even small bivariate associations reach statistical significance. We note, however, that we anticipated interaction terms in our models that would reveal the circumstances under which stronger associations occur.
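To make the point about sample size concrete, the sketch below inverts the usual t test for a correlation, t = r√(n − 2)/√(1 − r²), to find the smallest |r| that reaches two-tailed significance. It substitutes the normal quantile for the t quantile, an approximation that is close at these sample sizes; the function name is illustrative.

```python
from math import sqrt
from statistics import NormalDist

def critical_r(n, alpha=0.05):
    """Smallest |r| reaching two-tailed significance at `alpha` with n cases.

    Solving t = r * sqrt(df) / sqrt(1 - r**2) for r gives r = t / sqrt(df + t**2),
    with df = n - 2; the normal quantile stands in for the t quantile.
    """
    z = NormalDist().inv_cdf(1 - alpha / 2)
    df = n - 2
    return z / sqrt(df + z * z)

for n in (100, 1000, 3000):
    print(n, round(critical_r(n), 3))
```

With roughly 3,000 respondents per pairwise correlation, |r| of about .036 is enough for p < .05 and about .047 for p < .01, which is why coefficients well below .10 in Table 3 are flagged as significant.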

Table 4 presents the parameter estimates of a multivariate, main-effects only, logistic regression model in which the dependent variable is the likelihood of a “yes” response to one or more of the top-ten misbehaviors. Procedural injustice is significantly and positively associated with engagement in misbehavior, but the main effect of distributive injustice is not significant. Consistent with the bivariate correlations, the multivariate estimates show that early-career and female scientists are less likely to engage in misbehavior. The field-of-study indicators are significant as a group, with social sciences showing a strong, positive association with misbehavior relative to biology, the reference category.

TABLE 4.

Type III effects and regression coefficients for main-effects only, logistic regression model predicting yes response to one or more of the top-ten misbehaviors (N = 2,976).

| Effect | DF | Coeff | Wald χ² | p |
|---|---|---|---|---|
| Intercept | 1 | −.595 | 29.58 | <.001 |
| Distributive Injustice (z) | 1 | −.035 | .60 | .44 |
| Procedural Injustice (z) | 1 | .309 | 35.25 | <.001 |
| Intrinsic Drive (z) | 1 | .072 | 2.63 | .11 |
| Early-Career | 1 | −.451 | 28.18 | <.001 |
| Female | 1 | −.382 | 19.52 | <.001 |
| Never Partnered | 1 | .044 | .12 | .72 |
| Field of study | 5 | | 36.01 | <.001 |
| medicine | | .128 | 1.19 | .28 |
| social science | | .693 | 25.87 | <.001 |
| chemistry | | .061 | .19 | .66 |
| other/unknown | | .222 | .94 | .33 |
| physics/math/engineering | | −.002 | .00 | .99 |
| biology (reference) | | | | |
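The coefficients in Table 4 are on the log-odds scale, so exponentiating them gives odds ratios. For example, exp(.309) ≈ 1.36: a one-SD increase in perceived procedural injustice is associated with roughly 36% higher odds of reporting any top-ten misbehavior, holding the other predictors constant, while exp(−.382) ≈ 0.68 for women. The sketch below also recovers an approximate standard error from each 1-df Wald statistic; because the table's values are rounded, the intervals are approximations, not the authors' reported figures.

```python
from math import exp, sqrt

# Log-odds coefficients and 1-df Wald chi-square values copied from Table 4.
TABLE4 = {
    "Procedural Injustice (z)": (0.309, 35.25),
    "Early-Career": (-0.451, 28.18),
    "Female": (-0.382, 19.52),
    "social science": (0.693, 25.87),
}

def odds_ratio_ci(coef, wald_chi2, z=1.96):
    """Odds ratio and approximate 95% CI from a coefficient and its 1-df Wald chi-square.

    For one degree of freedom, Wald chi-square = (coef / SE) ** 2,
    so SE = |coef| / sqrt(chi-square).
    """
    se = abs(coef) / sqrt(wald_chi2)
    return exp(coef), exp(coef - z * se), exp(coef + z * se)

for name, (coef, chi2) in TABLE4.items():
    odds, low, high = odds_ratio_ci(coef, chi2)
    print(f"{name}: OR = {odds:.2f} (approx. 95% CI {low:.2f} to {high:.2f})")
```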

These results do not provide support for our first hypothesis (concerning distributive injustice’s direct effect on misbehavior), but they do support our second hypothesis, in that procedural injustice shows a positive effect on misbehavior. Our third hypothesis requires examination of interaction effects, which also shed further light on the relationship between distributive injustice and misbehavior. The full model with interactions is not presented here for the sake of brevity; the complete model results are available from the first author upon request. Instead, the salient interactions are presented in graphs of predicted probabilities derived from the full model.

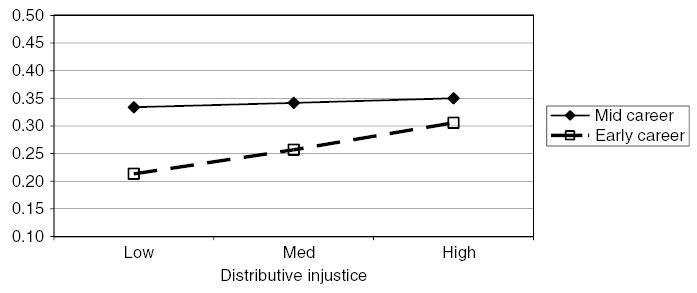

Our third hypothesis proposes that the relationship between distributive or procedural injustice and engagement in misbehavior is stronger among those, such as early-career scientists and female scientists in male-dominated fields, for whom injustice represents a greater threat to their social identity as scientists. In fact, a significant interaction between distributive injustice and career stage demonstrated such an effect.

Figure 1 shows the probability of early- and mid-career scientists engaging in misbehavior that would be predicted at three levels of distributive injustice: at the mean value (Med) and at one standard deviation above (High) and below (Low) the mean. These predicted probabilities are derived from the full model with interaction effects, with all other variables assigned their mean values (0 for indicator variables). The mid-career scientists are clearly more likely to engage in misbehavior, regardless of their perceptions of distributive injustice, but the association between distributive injustice and misbehavior is more strongly positive among early-career (.243) than among mid-career (.036) scientists, a finding that lends support to our third hypothesis. We did not observe interactions to support our expectation of differential associations between injustice perceptions and misbehavior among women in traditionally male-dominated fields.

FIG. 1.

Predicted probability of top 10 misbehavior by career stage and distributive injustice.
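The mechanics behind such predicted probabilities can be sketched with the main-effects estimates from Table 4, since the full interaction model used for Figure 1 is not reported; the numbers below therefore will not reproduce the figure. All other predictors are set to 0 (z-scores at their mean; male, partnered, biology), so a mid-career scientist at the mean has a logit of −.595 (probability ≈ .36) and an early-career scientist −1.046 (≈ .26). The b_interaction parameter is a hypothetical placeholder for a distributive × career-stage coefficient.

```python
from math import exp

# Main-effects estimates from Table 4 (not the unreported full interaction model).
INTERCEPT = -0.595
B_DISTRIB = -0.035   # distributive injustice (z)
B_EARLY = -0.451     # early-career indicator

def inv_logit(eta):
    """Convert a log-odds value to a probability."""
    return 1.0 / (1.0 + exp(-eta))

def predicted_probability(distrib_z, early_career, b_interaction=0.0):
    """Predicted P(any top-ten misbehavior) with every other predictor at 0.

    b_interaction is a hypothetical distributive x career-stage coefficient;
    leaving it at 0 gives the main-effects model.
    """
    eta = (INTERCEPT
           + B_DISTRIB * distrib_z
           + B_EARLY * early_career
           + b_interaction * distrib_z * early_career)
    return inv_logit(eta)

for label, z in (("Low", -1.0), ("Med", 0.0), ("High", 1.0)):
    mid = predicted_probability(z, 0)
    early = predicted_probability(z, 1)
    print(f"{label}: mid-career {mid:.3f}, early-career {early:.3f}")
```

In the main-effects model the Low/Med/High curves are nearly flat and parallel on the logit scale; only a nonzero interaction term lets the early-career slope differ from the mid-career slope, which is the pattern Figure 1 displays.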

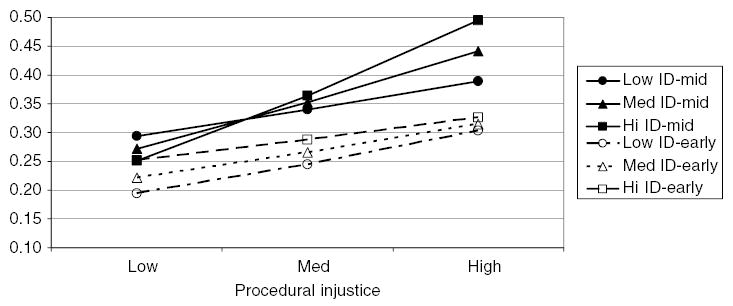

Four other significant interaction effects in our full model further illuminate our findings. A significant three-way interaction between procedural injustice, intrinsic drive and career stage provided insight into how contextual factors (procedural injustice) and individual dispositions (intrinsic drive) predict the likelihood of misbehavior in combination with career stage. The main effects of career stage and procedural injustice can clearly be seen in Figure 2. Mid-career scientists are more likely to report misbehavior, and there is a generally positive relationship between perceptions of procedural injustice and misbehavior. Among mid-career scientists, however, procedural injustice becomes an increasingly important predictor of misbehavior as intrinsic drive increases. This culminates in the highest likelihood of misbehavior among mid-career scientists with high intrinsic drive who perceive a relatively high level of procedural injustice.

FIG. 2.

Predicted probability of top 10 misbehavior by procedural injustice, intrinsic drive and career stage.
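To make the three-way interaction concrete, the generic form of such a model is written below under the assumption, stated in the Methods, that all constituent lower-order terms are retained; the paper does not report these coefficients. Let P be standardized procedural injustice, D standardized intrinsic drive, and E the early-career indicator, with the remaining covariates in the ellipsis. The slope is taken with distributive injustice at its mean (z = 0), as in the predicted-probability plots, so the procedural × distributive product drops out.

$$
\operatorname{logit}\Pr(Y = 1) = \beta_0 + \beta_P P + \beta_D D + \beta_E E + \beta_{PD}\,PD + \beta_{PE}\,PE + \beta_{DE}\,DE + \beta_{PDE}\,PDE + \cdots
$$

$$
\frac{\partial\,\operatorname{logit}\Pr(Y = 1)}{\partial P} = \beta_P + \beta_{PD}\,D + \beta_{PE}\,E + \beta_{PDE}\,DE
$$

For mid-career scientists (E = 0) the slope reduces to β_P + β_PD D, so a procedural-injustice slope that steepens as intrinsic drive rises among mid-career scientists would correspond to β_PD > 0 under this coding.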

Perceptions of injustice played a role in two additional higher-order effects. First, an interaction between procedural and distributive injustice showed that the positive relationship between procedural injustice and misbehavior was strongest among scientists who perceived the least distributive injustice and weakest among those perceiving the most distributive injustice. Though statistically significant, this effect is not large. Second, while distributive injustice was not related to misbehavior among scientists in most fields, those in the social science and other/unknown groups who reported more distributive injustice were less likely to report misbehavior. Finally, a significant interaction between gender and “never partnered” shows that the negative association of female status with misbehavior is not simply a gender effect: women who have never been partnered are even less likely than other women to report misbehavior, while men who have never been partnered are more likely than other men to do so.

Discussion

Until recently, much of the attention to ethical issues in science arose in response to specific instances of egregious behavior, typically those falling in the category of misconduct as formally defined by the U.S. federal Office of Science and Technology Policy: “fabrication, falsification, or plagiarism [FFP] in proposing, performing, or reviewing research, or in reporting research results” (OSTP, 2000). As a result, corrective mechanisms focused largely on local institutional responses, and on person-specific factors, such as training in ethics, personal responsibility, and moral orientation. While this perspective on ethical issues in science is still evident in current literature and discussions (Bebeau, 2000; Fischer & Zigmond, 2001), a broader conceptualization of both problems and solutions is emerging.

The recent Institute of Medicine report, Integrity in Scientific Research: Creating an Environment That Promotes Responsible Conduct, and the studies on which it is based, exemplify a broader approach that incorporates the social and organizational contexts of science (Institute of Medicine and National Research Council Committee on Assessing Integrity in Research Environments, 2002). Indeed, the charge to the committee that produced the report was (1) to define “integrity in research” and the “research environment,” (2) to identify environmental effects on integrity, and (3) to propose ways to study these effects (Institute of Medicine and National Research Council Committee on Assessing Integrity in Research Environments, 2002). This relatively recent change in the way the causes of misbehavior in research are understood is only now beginning to generate empirical evidence about the relationship between scientific integrity and organizational factors.

Our research supports concerns about the influence of the contexts of science on misbehavior. We found support for our second hypothesis, that perceptions of procedural injustice are significantly associated with self-reported misbehavior. We did not find a significant, direct association between perceptions of distributive injustice and misbehavior in the total sample, but we did find such an association among early-career scientists. This result supports our first hypothesis among early-career scientists, thereby also supporting our third hypothesis that stronger associations between perceived organizational injustice and reported misbehavior are to be found among scientists who are more likely to face threats to their identity. These observations are consistent with the expectations of strain theory (Agnew, 1985, 1992; Merton, 1938), as well as expectations derived from organizational justice research (Adams, 1965; Colquitt et al., 2001; Greenberg, 1988; Greenberg, 1993; Pfeffer & Langton, 1993).

The significant interaction between intrinsic drive, perceptions of procedural injustice and career stage, though not explicitly anticipated in our hypotheses, is intriguing. The results in Table 3 indicate significant correlations between intrinsic drive and both kinds of injustice as well as misbehavior. Moreover, the mid-career scientists reported higher intrinsic drive. In Figure 2 we see the combined effect of intrinsic drive, perceptions of procedural injustice and career stage: mid-career faculty with high intrinsic drive who perceive relatively high levels of procedural injustice are most likely to report misbehavior.

The three-way interaction involving intrinsic drive, procedural injustice, and career stage illustrates strain theory’s expectation that misbehavior arises from situational provocation combined with individual disposition. The critical factor appears to be intrinsic drive. Scientists who are personally driven to achieve may be particularly sensitive to violations of procedural justice, especially if these violations are seen as hindering or thwarting their career success. Furthermore, selection processes in science may favor those exhibiting a high degree of personal drive, leading to a higher overall level of intrinsic drive among those who reach mid-career status. This selection may thus increase sensitivity to perceptions of procedural injustice within the mid-career ranks, and thereby the likelihood of misbehavior.

While there is a great deal of overlap between organizational justice theory and general strain theory, this interaction highlights a difference in their foci. Organizational justice theory, from which we derived our main hypotheses, recognizes social identity threat as a potential factor in explaining misbehavior, but unlike general strain theory it does not consider the potential effects of individual dispositional factors, such as personal drive for success. As an aside, the observed interaction of marital status and sex was not anticipated by either theory, but it is consistent with the expectation in family sociology that marriage may act as a form of social control, operating on men in particular.

The contribution of our research must be considered in light of some limitations of the study. First, the cross-sectional nature of our data may understate the strength of associations if there are time lags in how the studied variables operate with respect to one another. Second, the collection of both predictor and outcome data using self-report requires caution. There is the potential for bias as a result of common variance attributable to the reporter on both sides of the model; that is, our measures of organizational injustice rely on reports from the same people reporting on their behaviors in that environment. Furthermore, these data allow us to determine only that there are significant associations between the behaviors of interest and perceived working conditions, without establishing causality. Thus, it would be possible to observe the patterns we have found if, for instance, those who have misbehaved were not truly the victims of injustice but merely rationalized or excused their misbehavior by reference to unfair treatment. Additionally, as in any study relying on self-report of misbehavior, there is likely to be an under-reporting bias in our data. This bias may be particularly pronounced for the more serious or legally sanctionable behaviors. We note, however, that any under-reporting would make our estimates of associations conservative. Related to this issue, interpretation of the differences in levels of reported behaviors between the early- and mid-career samples must be undertaken cautiously. Some of these differences may reflect the fact that not all individuals had equal opportunity or exposure to engage in all of the behaviors about which we asked (e.g., only scientists conducting research with human subjects would have had the opportunity to ignore major or minor aspects of human-subjects requirements). Finally, some of these differences may be attributable to differential under-reporting on the part of early-career scientists who, because of their more tenuous career standing, may be more hesitant than more well-established scientists to admit wrongdoing.

These limitations notwithstanding, the strengths of this study include the use of large, nationally representative samples of a broad spectrum of NIH-funded scientists, increasing the generalizability of our findings. Ours is also one of the first studies (along with Keith-Spiegel, Koocher and Tabachnick, in this issue) to address misbehaviors among scientists as they are related to perceptions of organizational justice. In addition, the behaviors about which we asked scientists to report were not limited to those falling into the formal definition of misconduct (i.e., FFP) but extended to include a range of behaviors that scientists themselves identify as representing day-to-day threats to the integrity of science (see De Vries et al., 2006, in this volume). Moreover, these include behaviors identified as problematic by compliance officers in some of the top research universities in the United States.

Our findings suggest that a variety of misbehaviors are relatively common among scientists, and that, as theory would lead us to expect, these misbehaviors are associated with perceptions of procedural injustice and, among early-career scientists, of distributive injustice in resource distribution. Recent work in organizational justice theory indicates that perceptions of fair processes can increase tolerance for unfair distributional outcomes of resources (rewards) (Lind et al., 1988; Skitka, 2003; Tyler & Blader, 2003). To the extent this relationship holds for scientists, our findings suggest that ensuring that scientists perceive distributional processes as being fair may be a fruitful way to reduce unwanted and unproductive behaviors in science.

Our study of the relationships between organizational justice principles and misbehavior among academic scientists demonstrates the utility of extending the study of organizational justice to the unique organizational structures of the scientific enterprise. Because our measure of procedural injustice primarily tapped aspects of the peer-review systems in science, we have also shown that perceptions of organizational injustice extend beyond the local institutional setting to include the peer-review system, and that such perceptions may affect behaviors with implications well beyond the local setting.

Best Practices

Our findings suggest that early- and mid-career scientists’ perceptions of organizational injustice are associated with behaviors that may compromise the integrity of science and may lead to ethical, legal, or regulatory problems for scientists and their institutions. This connection highlights the need for organizations that employ scientists to ensure fairness in decision-making processes and in the distribution of valued resources. At the institutional level, perceived injustice in the distribution of responsibilities, or unfairness in the decision processes that generate these distributions, may contribute to an environment in which scientific misbehavior increases. In the distribution of institutional rewards, greater attention to the quality of research may foster better scientific conduct than rewards that appear to be based on the number and size of research grants, the “glamour” of one’s topics and findings, or the sheer number of publications. But judging research quality in this way requires better indices, to counteract the increasing tendency to judge the quality of a researcher’s curriculum vitae by the impact factor of the journals in which s/he publishes (Monastersky, 2005). Of particular interest, and generally beyond the purview of local institutions, is perceived unfairness in peer-review systems for grants and publications. Best practices to address these issues must therefore extend beyond the local institution, and involve journal editors and reviewers, leaders of professional societies, and the peer reviewers and funding decision makers working for and within federal grant-making agencies such as the NIH.

We cannot judge, of course, the extent to which our respondents’ perceptions of injustice reflect reality. It is clear, however, that their perceptions, accurate or not, correlate with their behavior. When the means or results of decision processes are unknown or misunderstood, they are more likely to be subject to speculation, rumor and individuals’ own value calculations. It is important, therefore, for research institutions, journals, and federal agencies to ensure that their decisions and decision processes related to rewards and responsibilities are as transparent and fair as possible, and that they are widely disseminated to researchers. Admittedly, this might well require reassessment of some of the long-held precepts of peer review and other oversight systems in science (e.g., blinded review as an unmitigated good; primacy of local IRB review in the context of multi-site studies) and a willingness to restructure these systems to more fittingly reflect the realities of the current scientific work environment.

Research Agenda

Further research along the lines of this study should examine the forces that contribute to procedural or distributive injustice in science. A system dependent on the expertise and labor of cadres of postdoctoral fellows and graduate students, for whom there are simply not enough positions in their scientific research area, creates perceptions of organizational injustice, if not injustice itself. It has recently been argued that biomedical research in the U.S. operates as a pyramid scheme, whose ever-expanding base of junior investigators is required to keep the system from collapsing (Rajan, 2005). Such perceptions seem affirmed by objective evidence of a drastic decline over the past twenty-five years in the proportion of NIH grant awards going to “new” investigators, and by the recurring cycle of dire warnings of shortages of scientists followed by gluts of new investigators (Kennedy, Austin, Urquhart & Taylor, 2004), making it easy to see how junior scientists might feel unfairly treated. Many commentators have argued that the distribution of rewards in science and the processes used to arrive at distributional decisions have characteristics that are perceived as unfair by many scientists, particularly younger, less well-established scientists (Babco & Jesse, 2003; Butz, Bloom, Gross, Kelly, Kofner, & Rippen, 2003; Freeman et al., 2001; Goodstein, 1999; Juliano, 2003; Juliano & Oxford, 2001; National Research Council, 1994; Teitelbaum, 2003). And while some clearly benefit from such an arrangement, as Donald Kennedy and his co-editors have recently noted:

“The present situation provides real advantages for the science and technology sector and the academic and corporate institutions that depend on it. We’ve arranged to produce more knowledge workers than we can employ, creating a labor-excess economy that keeps labor costs down and productivity high …”

these benefits are not without a larger, societal downside:

“The consequences of this are troubling. To be sure, the best graduates of the most prestigious programs may eventually find good jobs, but only after they are well past the age at which their predecessors were productively established. The rest—scientists of considerable potential who didn’t quite make it in a tough market—form an international legion of the discontented.” (Kennedy et al., 2004)

Some have recently noted that current arrangements closely resemble a “tournament model” (Freeman et al., 2001), with small numbers of winners and large numbers of losers, a pattern exacerbated by the well-established “Matthew effect” in science (Merton, 1968, 1988), whereby credit and reward tend to accrue to those already established at the expense of those less well established. It is costly to science and society to train all these early-career scientists, only to have them engage in compromising behavior or abandon research altogether after substantial investments have been made in their training. Our work suggests a need for analyses of the broader environment of U.S. science, and for attention to how both competitive and anti-competitive elements of that environment may motivate misbehavior, damaging the integrity of scientists’ work and, by extension, the scientific record.

Educational Implications

The scientific community must be prepared to address and correct instances or patterns of organizational injustice through constructive, not destructive, means. Early introductions to the expectations, work norms and rewards associated with academic careers, as well as a solid understanding of peer-review processes, will help scientists, especially those early in their careers, to recognize and deal openly with injustices. Training in constructive confrontation, conflict management, and grievance processes is valuable in dealing not only with injustice but also with misbehavior in science. Scientists who have skills in these areas have options beyond nursing a simmering frustration, complaining, engaging in questionable behaviors, or (when they observe colleagues misbehaving) whistle-blowing. Such training must include, however, a full and honest acknowledgement of the potential consequences of confronting either injustice or misbehavior. Organizations must prepare their administrators to respond in a fair, effective and even-handed way both to complaints of organizational injustice and to allegations of misbehavior. Their goal should be to educate and rehabilitate, rather than to punish and destroy (Gunsalus, 1998).

Finally, scientists, especially early-career ones who may be tempted to misbehave in response to perceptions of injustice in their work environments, must understand the risks of such behavior. As disheartening as it may be to work under conditions of unfairness, it is a potentially career-ending event to be found guilty of violating professional rules, regulations or laws. Misbehavior is itself unjust to those who conduct their research in accordance with appropriate standards and norms.

Acknowledgments

This research was supported by the Research on Research Integrity Program, an ORI/NIH collaboration, grant # R01-NR08090. Raymond De Vries’ work on this project was also supported by grant # K01-AT000054-01 (NIH, National Center For Complementary & Alternative Medicine).

Footnotes

A brief discussion of organizational justice theory and reference to the findings presented in this manuscript were published in a Nature news item written by Jim Giles: “Researchers break the rules in frustration at review boards,” Nature, 2005, 438(7065): 136–137.

Senior scientists were intentionally excluded because we were less sanguine about the likely relationship between perceptions of organizational injustice and misbehavior among well-established researchers.

Contributor Information

Brian C. Martinson, HealthPartners Research Foundation

Melissa S. Anderson, University of Minnesota Department of Educational Policy and Administration

A. Lauren Crain, HealthPartners Research Foundation.

Raymond De Vries, University of Michigan Bioethics Program.

References

- A dams, J. S. (1965). Inequity in social exchange. In L. Berkowitz (Ed.), Advances in experimental social psychology (Vol. 2, pp. 267–299). New York: Academic Press.

- Agnew R. A revised strain theory of delinquency. Social Forces. 1985;64:151–167. [Google Scholar]

- Agnew R. Foundation for a general strain theory of crime and delinquency. Criminology. 1992;30(1):47–87. [Google Scholar]

- A gnew, R. (1995a). The contribution of social-psychological strain theory to the explanation of crime and delinquency. In F. Adler & W. Laufer (Eds.), The legacy of anomie theory (pp. 113–137). New Brunswick, NJ: Transaction Publishers.

- Agnew R. Testing the leading crime theories: An alternative strategy focusing on motivational processes. Journal of Research in Crime and Delinquency. 1995b;32(4):363–398. [Google Scholar]

- Anderson M, Louis K, Earle J. Disciplinary and departmental effects on observations of faculty and graduate student misconduct. Journal of Higher Education. 1994;65:331–350. [Google Scholar]

- B abco, E. L., & Jesse, J. K. (2003). What does the future of the scientific labor market look like? Looking back and looking forward— http://www.Cpst.Org/future.Pdf (Symposium presentation—2003 AAAS Annual Meetings). Washington, D.C.: Commission on Professionals in Science and Technology.

- B ebeau, M. J. (2000). Influencing the moral dimensions of professional practice: Implications for teaching and assessing for research integrity. Bethesda, MD: Paper presented at the 2000 ORI Research Conference on Research Integrity.

- Bhattacharjee Y. Higher education: Princeton resets family-friendly tenure clock. Science. 2005;309(5739):1308b. doi: 10.1126/science.309.5739.1308b. [DOI] [PubMed] [Google Scholar]

- B ies, R. J., & Tripp, T. M. (1998). Revenge in organizations: The good, the bad, and the ugly. In R. W. Griffin, A. O’Leary-Kelly & J. M. Collins (Eds.), Dysfunctional behavior in organizations: Non-violent dysfunctional behavior (pp. 49–67). Stamford, CN: JAI Press Inc.

- Braxton J. Deviancy from the norms of science: The effects of anomie and alienation in the academic profession. Research in Higher Education. 1993;34(2):213–228. [Google Scholar]

- Butz, W. P., Bloom, G. A., Gross, M. E., Kelly, T. K., Kofner, A., & Rippen, H. E. (2003). Is there a shortage of scientists and engineers? How would we know? (No. IP-241-OSTP). Santa Monica, CA: RAND.

- Clay-Warner, J. (2001). Perceiving procedural injustice: The effects of group membership and status. Social Psychology Quarterly, 64(3), 224–238.

- Clayton, S., & Opotow, S. (2003). Justice and identity: Changing perspectives on what is fair. Personality and Social Psychology Review, 7(4), 298–310. doi:10.1207/S15327957PSPR0704_03

- Colquitt, J. A. (2001). On the dimensionality of organizational justice: A construct validation of a measure. Journal of Applied Psychology, 86(3), 386–400. doi:10.1037/0021-9010.86.3.386

- Colquitt, J. A., Conlon, D. E., Wesson, M. J., Porter, C. O., & Ng, K. Y. (2001). Justice at the millennium: A meta-analytic review of 25 years of organizational justice research. Journal of Applied Psychology, 86(3), 425–445. doi:10.1037/0021-9010.86.3.425

- Colquitt, J. A., Noe, R. A., & Jackson, C. L. (2002). Justice in teams: Antecedents and consequences of procedural justice climate. Personnel Psychology, 55(1).

- De Vries, R., Anderson, M. S., & Martinson, B. C. (2006). Normal misbehavior: Scientists talk about the ethics of research. Journal of Empirical Research on Human Research Ethics, 1(1), 43–50. doi:10.1525/jer.2006.1.1.43

- Deutsch, M. (1975). Equity, equality, and need: What determines which value will be used as the basis for distributive justice? Journal of Social Issues, 31, 137–149.

- Dillman, D. A. (2000). Mail and internet surveys: The tailored design method (2nd ed.). New York: John Wiley & Sons.

- Etzkowitz, H. (1983). Entrepreneurial scientists and entrepreneurial universities in American academic science. Minerva, 21, 198–233. doi:10.1007/BF01097964

- Fischer, B. A., & Zigmond, M. J. (2001). Promoting responsible conduct in research through ‘survival skills’ workshops: Some mentoring is best done in a crowd. Science and Engineering Ethics, 7, 563–587. doi:10.1007/s11948-001-0014-x

- Folger, R. (1977). Distributive and procedural justice: Combined impact of “voice” and improvement on experienced inequity. Journal of Personality and Social Psychology, 35, 108–119.

- Folger, R., & Cropanzano, R. (1998). Organizational justice and human resource management. Thousand Oaks, CA: Sage.

- Freeman, R., Weinstein, E., Marincola, E., Rosenbaum, J., & Solomon, F. (2001). Competition and careers in biosciences. Science, 294(5550), 2293–2294. doi:10.1126/science.1067477

- Gilliland, S. W., & Beckstein, B. A. (1996). Procedural and distributive justice in the editorial review process. Personnel Psychology, 49(3), 669–691.

- Goodstein, D. (1999). Conduct and misconduct in science [Website]. Retrieved from http://www.its.caltech.edu/~dg/conduct_art.html

- Greenberg, J. (1988). Equity and workplace status: A field experiment. Journal of Applied Psychology, 73, 606–613.

- Greenberg, J. (1993). Stealing in the name of justice: Informational and interpersonal moderators of theft reactions to underpayment inequity. Organizational Behavior and Human Decision Processes, 54, 81–103.

- Greenberg, J. (1997). A social influence model of employee theft: Beyond the fraud triangle. In R. J. Lewicki, R. J. Bies, & B. H. Sheppard (Eds.), Research on negotiation in organizations (pp. 29–51). Greenwich, CT: JAI Press.

- Greenberg, J., & Cropanzano, R. (Eds.). (2001). Advances in organizational justice. Stanford, CA: Stanford University Press.

- Gunsalus, C. K. (1998). Preventing the need for whistleblowing: Practical advice for university administrators. Science and Engineering Ethics, 4(1), 75–94.

- Hackett, E. (1990). Science as a vocation in the 1990s. Journal of Higher Education, 61(3), 241–279.

- Hackett, E. (1999). A social control perspective on scientific misconduct. In J. Braxton (Ed.), Perspectives on scholarly misconduct in the sciences (pp. 99–115). Columbus, OH: Ohio State University Press.

- Hosek, S. D., Cox, A. G., Ghosh-Dastidar, B., Kofner, A., Ramphal, N., Scott, J., et al. (2005). Gender differences in major federal external grant programs (No. TR-307-NSF). Santa Monica, CA: RAND Corporation.

- Institute of Medicine and National Research Council Committee on Assessing Integrity in Research Environments. (2002). Integrity in scientific research: Creating an environment that promotes responsible conduct. Washington, DC: The National Academies Press.

- Juliano, R. L. (2003). A shortage of Ph.Ds? Science, 301(5634), 763b. doi:10.1126/science.301.5634.763b

- Juliano, R. L., & Oxford, G. S. (2001). Critical issues in PhD training for biomedical scientists. Academic Medicine, 76(10), 1005–1012. doi:10.1097/00001888-200110000-00007

- Keith-Spiegel, P., Koocher, G. P., & Tabachnick, B. (2006). What scientists want from their research ethics committee. Journal of Empirical Research on Human Research Ethics, 1(1), 67–82. doi:10.1525/jer.2006.1.1.67

- Kennedy, D., Austin, J., Urquhart, K., & Taylor, C. (2004). Supply without demand. Science, 303(5661), 1105. doi:10.1126/science.303.5661.1105

- Ladd, E. J., & Lipset, S. (1978). Technical report 1977 survey of the American professoriate. Storrs, CT: Social Data Center, University of Connecticut.

- Lamont, J. (2003, September 8). Distributive justice. In Stanford encyclopedia of philosophy (Fall 2003 ed.). Retrieved November 1, 2004, from http://plato.stanford.edu/archives/fall2003/entries/justice-distributive/

- Leventhal, G. S. (1976). The distribution of rewards and resources in groups and organizations. In L. Berkowitz & E. Walster (Eds.), Advances in experimental social psychology (Vol. 9, pp. 91–131). New York: Academic Press.

- Leventhal, G. S. (1980). What should be done with equity theory? In K. J. Gergen, M. S. Greenberg, & R. H. Willis (Eds.), Social exchanges: Advances in theory and research (pp. 27–55). New York: Plenum.

- Lind, E. A., & Tyler, T. R. (1988). The social psychology of procedural justice. New York: Plenum.

- Martinson, B. C., Anderson, M. S., & De Vries, R. (2005). Scientists behaving badly. Nature, 435(7043), 737–738. doi:10.1038/435737a

- Masterson, S. S., Lewis, K., Goldman, B. M., & Taylor, M. S. (2000). Integrating justice and social exchange: The differing effects of fair procedures and treatment on work relationships. Academy of Management Journal, 43(4), 738–748.

- Matschinger, H., Siegrist, J., Siegrist, K., & Dittmann, K. (1986). Type A as a coping career: Toward a conceptual and methodological redefinition. In T. Schmidt, T. Dembroski, & G. Blumchen (Eds.), Biological and psychological factors in cardiovascular disease. Berlin: Springer-Verlag.

- Merton, R. K. (1938). Social structure and anomie. American Sociological Review, 3, 672–682.

- Merton, R. K. (1968). The Matthew effect in science. Science, 159(3810), 56–63.

- Merton, R. K. (1988). The Matthew effect in science, II: Cumulative advantage and the symbolism of intellectual property. Isis, 79, 607–623.

- Monastersky, R. (2005). The number that’s devouring science. Chronicle of Higher Education, 52(8), A12.

- Moorman, R. H. (1991). Relationship between organizational justice and organizational citizenship behaviors: Do fairness perceptions influence employee citizenship? Journal of Applied Psychology, 76(6), 845–855.

- National Research Council. (1994). The funding of young investigators in the biological and biomedical sciences. Washington, DC: National Academy Press.

- Noel, J. G., Wann, D. L., & Branscombe, N. R. (1995). Peripheral ingroup membership, status and public negativity towards outgroups. Journal of Personality and Social Psychology, 68, 127–137. doi:10.1037//0022-3514.68.1.127

- Organ, D. (1988). Organizational citizenship behavior: The good soldier syndrome. Lexington, MA: Lexington Books.

- OSTP. (n.d.). Federal policy on research misconduct. Washington, DC: U.S. Office of Science and Technology Policy, Executive Office of the President. Retrieved from http://www.ostp.gov/html/001207_3.html

- Peter, R., Alfredsson, L., Hammar, N., Siegrist, J., Theorell, T., & Westerholm, P. (1998). High effort, low reward, and cardiovascular risk factors in employed Swedish men and women: Baseline results from the WOLF study. Journal of Epidemiology and Community Health, 52, 540–547. doi:10.1136/jech.52.9.540

- Pfeffer, J., & Langton, N. (1993). The effects of wage dispersion on satisfaction, productivity, and working collaboratively: Evidence from college and university faculty. Administrative Science Quarterly, 38, 382–407.

- Podsakoff, P., MacKenzie, S., Paine, J., & Bachrach, D. (2000). Organizational citizenship behaviors: A critical review of the theoretical and empirical literature and suggestions for future research. Journal of Management, 26(3), 513–563.

- Rajan, T. V. (2005). Biomedical scientists are engaged in a pyramid scheme. Chronicle of Higher Education, 51(39), B16.

- Siegrist, J. (1996). Adverse health effects of high-effort/low-reward conditions. Journal of Occupational Health Psychology, 1(1), 27–41. doi:10.1037//1076-8998.1.1.27

- Siegrist, J. (2001). A theory of occupational stress. In J. Dunham (Ed.), Stress in the workplace (pp. 52–66). London and Philadelphia: Whurr Publishers.

- Skarlicki, D. P., & Folger, R. (1997). Retaliation in the workplace: The roles of distributive, procedural, and interactional justice. Journal of Applied Psychology, 82, 434–443.

- Skitka, L. J. (2003). Of different minds: An accessible identity model of justice reasoning. Personality and Social Psychology Review, 7, 286–297. doi:10.1207/S15327957PSPR0704_02

- Skitka, L. J., & Crosby, F. J. (2003). Trends in the social psychological study of justice. Personality and Social Psychology Review, 7(4), 282–285. doi:10.1207/S15327957PSPR0704_01

- Swazey, J. M., Anderson, M. S., & Louis, K. S. (1993). Ethical problems in academic research. American Scientist, 81, 542–553.

- Teitelbaum, M. S. (2003). Do we need more scientists? The Public Interest, 153, 40–53.

- Thibaut, J. W., & Walker, L. (1975). Procedural justice: A psychological analysis. Hillsdale, NJ: Lawrence Erlbaum.

- Tyler, T. R. (1989). The psychology of procedural justice: A test of the group value model. Journal of Personality and Social Psychology, 57, 830–838.

- Tyler, T. R., & Blader, S. L. (2000). Cooperation in groups: Procedural justice, social identity, and behavioral engagement. Philadelphia: Psychology Press.

- Tyler, T. R., & Blader, S. L. (2003). The group engagement model: Procedural justice, social identity, and cooperative behavior. Personality and Social Psychology Review, 7(4), 349–361. doi:10.1207/S15327957PSPR0704_07

- Weber, M. (1946). Science as a vocation. In H. Gerth & C. Mills (Eds.), From Max Weber: Essays in sociology (pp. 129–156). New York: Oxford University Press.

- Ziman, J. (1990). Research as a career. In S. Cozzens et al. (Eds.), The research system in transition (pp. 345–359). Dordrecht, the Netherlands: Kluwer.