Abstract

OBJECTIVE

To comply with pain management standards, Bellevue Hospital in New York City implemented a mandatory computerized pain assessment screen (PAS) in its electronic medical record (EMR) system for every outpatient encounter. We assessed provider acceptance of the instrument and examined whether the intervention led to increased documentation of pain-related diagnoses or inquiries.

DESIGN

Cross-sectional survey and a pre- and postintervention, historically controlled observational study.

SUBJECTS AND MEASUREMENTS

The utility of the computerized tool to medicine house staff and attendings was assessed with an anonymous survey. We conducted an electronic chart review comparing all adult primary care patient encounters on 2 days 6 months before implementation of the PAS with those on 2 days 6 months after its implementation.

RESULTS

Forty-seven percent of survey respondents felt that the computerized assessment tool was “somewhat difficult” or “very difficult” to use. The majority of respondents (79%) felt the tool did not change their pain assessment practice. Of 265 preintervention patients and 364 postintervention patients seen in the clinic, 42% and 37% had pain-related diagnoses, respectively (P=.29). Pain inquiry by the physician was noted for 49% of preintervention patients and 44% of the postintervention patients (P=.26). In 55% of postintervention encounters, there was discordance between the pain documentation using the PAS tool and the free text section of the medical note.

CONCLUSION

A mandatory computerized pain assessment tool did not lead to an increase in pain-related diagnoses and may have hindered the documentation of pain assessment because of the perceived burden of using the application.

Keywords: electronic medical record, pain assessment, physician compliance, computerized assessment

Pain is one of the most common reasons for medical appointments in the United States, accounting each year for more than 70 million ambulatory visits and $79 billion in health care costs and lost productivity.1, 2 Pain is notoriously underdiagnosed and undertreated because of a variety of patient, physician, and system factors.3–6

In January 2001, the Joint Commission on Accreditation of Healthcare Organizations (JCAHO) implemented new pain management standards for health care organizations.7 Documentation of pain for all patients is now required.8 Using the electronic medical record (EMR) for pain assessment should allow for more efficient surveillance of compliance with regulatory mandates, leading to improvements in care. However, such tools are only effective if they are correctly utilized.9–11 Barriers to successful use of EMR-based interventions include usability, workflow factors, and computer literacy.12

At Bellevue Hospital in New York City, the nation's oldest public hospital, electronic clinic notes were first introduced in December 2001. During a transition period lasting until July 2003, when electronic documentation became mandatory, providers could choose to write either electronic or paper notes. On August 19, 2002, Bellevue added a menu-driven pain assessment screen (PAS) to its Patient 1 (Misys Healthcare Systems, Raleigh, NC) EMR system.

We sought to assess provider acceptance of the instrument and to examine the hypothesis that requiring all health care providers to address pain using the EMR would lead to increased documentation of pain-related diagnoses and inquiries.

METHODS

Site and Subjects

This study was conducted in the Bellevue Hospital Adult Primary Care Clinics using a protocol approved by our Institutional Review Board. The provider population included all medicine house staff and faculty seeing patients in the clinic. The clinical notes were selected by evaluating all clinic visits on 2 consecutive days 6 months prior to (preintervention group, n=392) and 6 months after (postintervention group, n=395) the implementation of the PAS.

Intervention

The mandatory PAS debuted in August 2002 without prior announcement or training. It was added to the outpatient encounter note in the ambulatory EMR menu and appeared immediately after the chief complaint entry. The PAS screens were menu driven and prompted providers to document whether the patient had any complaint of pain. If there were no pain issues, the provider would click the “no pain issues at this time” box, exit the PAS, and move on to the rest of the note. If there was pain, the provider was expected to “document pain” using screens identifying the location, severity, frequency, quality, precipitating and alleviating factors, and effects on activities of daily living. The initial 2 screens (location and severity) were mandatory; the remainder were optional.
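The branching just described can be modeled as a small validation rule. The sketch below is hypothetical: the item names, the `PainAssessmentEntry` class, and the `can_exit_screen` function are illustrative only and are not taken from the Misys Patient 1 system, and grouping the optional items as separate fields is an assumption. It shows the asymmetry built into the design: one checkbox ends the assessment, whereas any report of pain requires at least the mandatory items.

```python
from dataclasses import dataclass, field

# Hypothetical item names based on the paper's description of the PAS;
# how the real screens grouped or stored these items is an assumption.
MANDATORY_ITEMS = ("location", "severity")
OPTIONAL_ITEMS = (
    "frequency",
    "quality",
    "precipitating factors",
    "alleviating factors",
    "effects on activities of daily living",
)


@dataclass
class PainAssessmentEntry:
    no_pain_issues: bool  # the "no pain issues at this time" box
    answers: dict = field(default_factory=dict)  # item name -> provider's entry


def can_exit_screen(entry: PainAssessmentEntry) -> bool:
    """Return True if the provider may leave the PAS and continue the note."""
    unknown = set(entry.answers) - set(MANDATORY_ITEMS) - set(OPTIONAL_ITEMS)
    if unknown:
        raise ValueError(f"unrecognized items: {sorted(unknown)}")
    if entry.no_pain_issues:
        # A single checkbox ends the assessment; no other items are required.
        return True
    # Pain reported: every mandatory item needs a value; optional items may stay blank.
    return all(entry.answers.get(item) is not None for item in MANDATORY_ITEMS)


print(can_exit_screen(PainAssessmentEntry(no_pain_issues=True)))  # True
print(can_exit_screen(PainAssessmentEntry(False, {"location": "low back"})))  # False
print(can_exit_screen(PainAssessmentEntry(False, {"location": "low back", "severity": 6})))  # True
```

In this model the "no pain" path costs one click while the pain path costs at least two further entries, which is the kind of shortcut incentive a mandatory screen can create.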

Measurements

After visits by nonphysician providers were excluded, 2 reviewers conducted a chart review of all patients seen on the selected days before and after the intervention. Electronic notes consisted of a free-text history of present illness (HPI) and menu-driven entry screens for pain assessment, review of systems (ROS), physical examination (PE), and active problems addressed during the visit. Charts were evaluated for pain-related diagnoses in the visit's problem list or visit-specific International Classification of Diseases, Ninth Revision (ICD-9) codes, and for documentation about pain in the free-text narrative portion of the note. If a visit record was judged to contain documentation of pain in any form, reviewers were asked to classify the pain as acute/active or not, based on the available information. For charts from the postintervention group, the physician's selection on the PAS was also recorded. Because the EMR transition was still under way during the preintervention observation period, some clinic notes in this group were paper based; 60 of 128 paper notes were located and included in the analysis. Of 392 preintervention and 395 postintervention encounters, 265 (67%) and 364 (92%), respectively, were eligible for analysis (Table 1, online).

Table 1.

Providers' Attitudes Regarding the Pain Assessment Screen

| Theme | % of Survey Respondents | Selected Comments |
|---|---|---|
| Usability | 32 (n=30) | “It's cumbersome and adds to an already complicated computer system and doesn't encourage more questioning about pain.” “I don't like having to click on the qualifiers.” “The extra screens are exactly that … extra.” “Would prefer a type in system that's free-text.” |
| Time | 27 (n=24) | “It takes too long to go through the whole pain assessment. It is easier to just write it in the note.” “The pain assessment is too long and there are too many pages to go through.” |
| Workflow | 18 (n=18) | “Pain issue should be included in HPI section of a note, not a separate computer section.” “Because this is a repetitive extra step I skip the tedious clicking and document ‘no pain issues at this time’ because it is already in my HPI!” “It is so annoying, takes so many steps and makes me repeat what's in the HPI.” |
| Terminology, descriptors | 9 (n=9) | “Many patients have difficulty describing their pain precisely. It is hard to translate their descriptions onto the computer.” “… nothing comes close to documenting pain in the patients words with their own descriptions/nuances.” |

To assess self-reported use of and attitudes toward the computerized PAS, we mailed an anonymous survey to all house staff (n=170) and faculty (n=30) of the adult primary care clinics. The brief questionnaire had 8 items and 1 free-text comment box.

RESULTS

Of 200 surveys, 94 (47%) were completed after the first and only mailing (Table 2, online). Forty-seven percent of respondents felt the PAS was “somewhat difficult” or “very difficult” to use, 31% reported never using the assessment tool, and the majority (79%) thought that the tool did not change their pain assessment practice. Fifty-one percent of respondents said they would be more inclined to use a computerized pain assessment tool if it had a different interface. The majority (73%) said that they also documented pain in their note when they used the PAS. The most common complaints regarding the pain assessment tool were that it was lengthy and cumbersome and that pain assessment belonged in the HPI, as part of the note (Table 1).

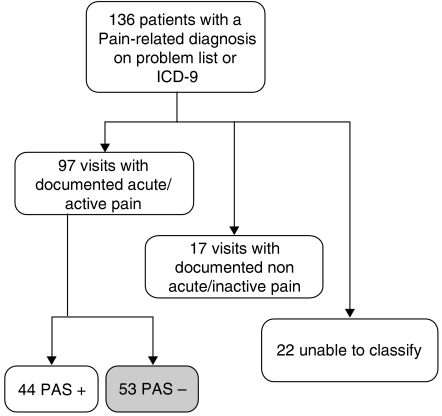

The complaints registered in the surveys were consistent with the findings of our chart review of pain documentation before and after introduction of the PAS. Of the 136 patients with pain-related diagnoses in the postintervention group, 97 had documentation of acute/active pain in the free-text portion or problem list, 17 did not, and 22 had insufficient documentation for classification. Notably, of the 97 records with documented acute/active pain, 53 (55%) had a contradictory “no pain” entry in the PAS (Fig. 1).

FIGURE 1.

Pain documentation for patients in the postintervention group with pain-related diagnoses. *PAS+, pain assessment screen indicated presence of pain; †PAS−, pain assessment screen indicated no pain.

We found no significant increase in pain reporting after introduction of the PAS. Specifically, pain-related diagnoses were present in 42% (110/265) of records before introduction of the PAS and in 37% (136/364) after (95% confidence interval [CI] for the difference, −4% to 12%; P=.29). Documentation of pain inquiry in the free-text portion of the note did not increase and may have decreased after introduction of the PAS, from 49% (130/265) of records preintervention to 44% (162/364) postintervention (95% CI for the difference, −3% to 12%; P=.26). Agreement between the 2 reviewers on pain diagnoses and pain inquiry during the chart review was high (κ=0.98 and 0.96, respectively).
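The confidence limits and P values above can be checked from the reported counts. The paper does not state which tests were used, so this sketch assumes a two-sided z-test with a pooled standard error for the P value and an unpooled (Wald) standard error for the 95% CI of the preintervention-minus-postintervention difference; the function name `compare_proportions` is hypothetical. Under these assumptions the sketch reproduces the published limits and P values to two decimal places.

```python
import math
from statistics import NormalDist


def compare_proportions(x1, n1, x2, n2, level=0.95):
    """Compare p1 = x1/n1 (preintervention) with p2 = x2/n2 (postintervention).

    Returns (p1 - p2, CI lower, CI upper, two-sided P). Assumed methods:
    unpooled Wald SE for the CI, pooled SE for the z-test P value.
    """
    p1, p2 = x1 / n1, x2 / n2
    diff = p1 - p2
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    se_unpooled = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    lower = diff - z_crit * se_unpooled
    upper = diff + z_crit * se_unpooled
    p_pool = (x1 + x2) / (n1 + n2)  # common proportion under the null
    se_pooled = math.sqrt(p_pool * (1 - p_pool) * (1 / n1 + 1 / n2))
    z = diff / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return diff, lower, upper, p_value


# Pain-related diagnoses: 110/265 before vs 136/364 after the PAS.
print([round(v, 2) for v in compare_proportions(110, 265, 136, 364)])
# → [0.04, -0.04, 0.12, 0.29]
# Pain inquiry in the free text: 130/265 before vs 162/364 after.
print([round(v, 2) for v in compare_proportions(130, 265, 162, 364)])
# → [0.05, -0.03, 0.12, 0.26]
```

Both intervals include zero, consistent with the nonsignificant P values; a chi-square test without continuity correction would give the same P values as the pooled z-test.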

The pre- and postintervention groups were similar in patients' gender and age and in the type of provider seen, i.e., attending versus house staff (Table 1, online).

DISCUSSION

The growing number of mandates for documenting and reporting pain poses significant challenges for health care delivery systems. The time constraints of the medical encounter and the ease of data management make the EMR an attractive and potentially efficient solution to these problems. However, this approach may have unintended consequences and, as our evaluation suggests, may lead to misuse and worsen compliance and data quality in certain situations.

Our survey showed that, for the majority of providers, the PAS did not change their practice of inquiring about pain. We anticipated that the mandated PAS would encourage providers to ask more about pain and thus increase the number of patients whose pain was diagnosed. However, our data showed no significant difference after PAS introduction.

The lack of increase in pain documentation after PAS implementation could reflect many factors, such as the use of mandatory screens that add time to the documentation process.13 Our survey made it apparent that providers did not like to change their workflow, regardless of regulatory mandates. Most practitioners nonetheless appeared to regard pain assessment as important: most patients with pain-related diagnoses had documentation about their pain somewhere in the EMR.

Pain is a complex process that is subjectively described by patients. Some providers found that the structured PAS did not allow for proper pain descriptions. Thus, providers did not use the PAS as a replacement for free-text descriptions. Difficulties with the PAS may have led to misuse of the system: in 55% of encounters with acute/active pain documented elsewhere in the record, the PAS entry was “no pain,” presumably so that the provider could avoid the subsequent screens. The tool also caused undue anxiety; as one attending said, “(the PAS) frustrated me because I often bypassed the screen even if the patient had pain and then felt like I was falsifying the patient medical record.” The lack of training and announcement about the pain assessment tool may have been an important factor in diminishing its acceptability. Regrettably, many similar interventions are introduced without much publicity. It is, thus, important to collect data about the consequences of such ad hoc implementations.

Some limitations of this study may have led to apparent underdocumentation of pain in our results. For example, for some charts it was not possible to differentiate between acute/active and chronic pain diagnoses. Also, this study was limited to what was documented in the electronic chart; pain may have been assessed verbally and not entered into the EMR. These limitations, however, do not change the main outcomes of our study, because satisfying regulatory mandates requires proof by documentation.

To our knowledge, this is the first study to measure and verify the effects of incorporating pain assessment into the EMR. A study from the UCLA Emergency Department embedded nonmandatory practice guidelines for a few chief complaints, such as low back pain, for which electronic charting was available.9 The UCLA study reported that 78% of eligible charts had information entered electronically. However, only 45% of the house staff physicians believed that the EMR was better than handwriting, and 28% thought it was worse. In our study, mandating the PAS was expected to lead to 100% reporting compliance. However, as we have shown, what was entered on the PAS may not truly reflect the patient encounter, primarily because the software was not adequately tailored to the needs of a real-time medical encounter.

As a result of this evaluation and feedback from providers, the PAS has been revised to include new options for documentation: “no change in pain” and “see clinical note.”

CONCLUSION

The mandatory computerized pain assessment tool did not lead to increased documentation of pain-related diagnoses and may actually have hindered the documentation of pain assessment because of the perceived burden of using the application. Documentation solutions should be carefully designed with the needs and preferences of their end users in mind and thoroughly evaluated before they are integrated into an EMR.

Acknowledgments

We thank Mark D. Schwartz, M.D. and Sundar Natarajan, M.D., M.Sc. for illuminating discussions about the project, and Michael N. Cantor, M.D. for a helpful review of the manuscript. O.S. was supported by a Health Resources and Services Administration, Bureau of Health Professions, Training in Primary Care Medicine and Dentistry grant, HRSA-05-088.

Supplementary Material

The following supplementary material is available for this article online:

Table 1. Patient and Provider Characteristics.

Table 2. Results of Provider Survey.

REFERENCES

1. Kirschstein R. Disease-specific estimates of direct and indirect costs of illness and NIH support—Fiscal year 2000 update. [August 2, 2005]; Available at http://ospp.od.nih.gov/ecostudies/COIreportweb.htm.

2. Cherry DK, Woodwell DA. National ambulatory medical care survey: 2000 summary, CDC advance data from vital and health statistics, No. 328, June 5, 2002. [August 2, 2005]; Available at http://www.cdc.gov.nchs/data/ad/ad328.pdf.

3. Pargeon KL, Hailey BJ. Barriers to effective cancer pain management: a review of the literature. J Pain Symptom Manage. 1999;18:358–68. doi: 10.1016/s0885-3924(99)00097-4.

4. Glajchen M. Chronic pain: treatment barriers and strategies for clinical practice. J Am Board Fam Pract. 2001;14:211–8.

5. Miaskowski C, Dodd MJ, West C, et al. Lack of adherence with the analgesic regimen: a significant barrier to the effective cancer pain management. J Clin Oncol. 2001;19:4275–9. doi: 10.1200/JCO.2001.19.23.4275.

6. Potter M, Schafer S, Gonzalez-Mendez E, et al. Opioids for chronic nonmalignant pain. Attitudes and practices of primary care physicians in the UCSF/Stanford Collaborative Research Network. University of California, San Francisco. J Fam Pract. 2001;50:145–51.

7. Background on the Development of the Joint Commission Standards on Pain Management. [February 23, 2003]; Available at http://www.jcaho.org/news+room/health+care+issues/pain.htm.

8. Phillips DM. JCAHO pain management standards are unveiled. Joint commission on accreditation of healthcare organizations. JAMA. 2000;284:428–9. doi: 10.1001/jama.284.4.423b.

9. Mikulich VJ, Liu YC, Steinfeld J, Schriger DL. Implementation of clinical guidelines through an electronic medical record: physician usage, satisfaction and assessment. Int J Med Inform. 2001;63:169–78. doi: 10.1016/s1386-5056(01)00177-0.

10. Cook AJ, Roberts DA, Henderson MD, van Winkle LC, Hamill-Ruth RJ. Electronic pain questionnaires: a randomized, crossover comparison with paper questionnaires for chronic pain assessment. Pain. 2004;110:310–7. doi: 10.1016/j.pain.2004.04.012.

11. Van Den Kerkhof EG, Goldstein DH, Lane J, Rimmer MJ, Van Dijk JP. Using a personal digital assistant enhances gathering of patient data on an acute pain management service: a pilot study. Can J Anaesth. 2003;50:368–75. doi: 10.1007/BF03021034.

12. Cork RD, Detmer WM, Friedman CP. Development and initial validation of an instrument to measure physicians' use of, knowledge about, and attitudes toward computers. J Am Med Inform Assoc. 1998;5:164–76. doi: 10.1136/jamia.1998.0050164.

13. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004;11:104–12. doi: 10.1197/jamia.M1471.