Abstract

OBJECTIVE

To describe the rate and types of events reported in acute care hospitals using an electronic error reporting system (e-ERS).

DESIGN

Descriptive study of reported events using the same e-ERS between January 1, 2001 and September 30, 2003.

SETTING

Twenty-six acute care nonfederal hospitals throughout the U.S. that voluntarily implemented a web-based e-ERS for at least 3 months.

PARTICIPANTS

Hospital employees and staff.

INTERVENTION

A secure, standardized, commercially available web-based reporting system.

RESULTS

Median duration of e-ERS use was 21 months (range 3 to 33 months). A total of 92,547 reports were obtained during 2,547,154 patient-days. Reporting rates varied widely across hospitals (9 to 95 reports per 1,000 inpatient-days; median=35). Registered nurses provided nearly half of the reports; physicians contributed less than 2%. Thirty-four percent of reports were classified as nonmedication-related clinical events, 33% as medication/infusion related, 13% as falls, 13% as administrative, and 6% as other. Level of impact was identified in 80% of reports: 53% of all reports were events that reached a patient (“patient events”), 13% were near misses that did not reach the patient, and 14% were hospital environment problems. Among 49,341 patient events, 67% caused no harm, 32% temporary harm, 0.8% life-threatening or permanent harm, and 0.4% contributed to patient deaths.

CONCLUSIONS

An e-ERS provides an accessible venue for reporting medical errors, adverse events, and near misses. The wide variation in reporting rates among hospitals and the very low reporting rates by physicians require investigation.

Keywords: medical errors, adverse events, error reporting systems, electronic reporting

“Health care organizations should be encouraged to participate in voluntary reporting systems as an important component of their patient safety programs.” In To Err is Human: Building a Safer Health System. Institute of Medicine, 2000.1

The reporting of medical errors (an incorrect action or plan that may or may not cause harm to a patient), adverse events (injury to a patient because of medical management, not necessarily because of error), and near misses (an error that does not reach the patient) has been a focus of efforts to reduce their incidence. Evaluation of the types and frequency of errors and adverse events, and of their effects on patients and their care, is critical for understanding defects in processes of care, identifying “root causes,” and developing interventions aimed at their reduction and prevention.1–3 Two commonly used methods for error detection, direct observation and chart review, are personnel- and time-intensive, and thus impractical for routine implementation across medical care settings.4–6 Malpractice claims data are subject to reporting bias; administrative data may not include clinical context and/or information on near misses (errors that did not reach the patient) or latent errors (defects in the hospital environment and operations that can lead to medical errors and adverse events).6–8

Voluntary error reporting systems (ERS) were strongly endorsed in the Institute of Medicine's report on errors in medical care,1 and last year the U.S. Senate passed an amendment to the Public Health Service Act to establish a framework for health care providers to voluntarily report medical errors to patient safety organizations with confidentiality protections.9

Existing reporting systems, such as the Sentinel Event system of the Joint Commission on Accreditation of Healthcare Organizations (JCAHO) and the MedMARx system of the United States Pharmacopeia and the Institute for Safe Medication Practices, are limited to certain types of errors and adverse events, may not collect reports on near misses, and may not be familiar or accessible to all hospital employees.10, 11 Hospital-based electronic ERS (e-ERS) may facilitate voluntary reporting of all types of medical errors and adverse events through ease of use and accessibility, and may allow real-time review, oversight, and intervention. Additionally, an e-ERS that captures near misses and latent errors may provide further insights into system processes that need to be modified to help reduce the likelihood of error. To illustrate the feasibility of reporting and types of events reported using a hospital-based e-ERS, we describe reports obtained from 26 U.S. acute care hospitals that implemented the same commercially available e-ERS.

METHODS

Institutions

We evaluated all reported events from 26 acute care nonprofit, nonfederal hospitals throughout the U.S. that voluntarily implemented a web-based e-ERS for at least 3 months. Each hospital implemented and used the same commercial product (DrQuality) as a component of quality improvement efforts. Twenty-four hospitals were adult or adult/pediatric tertiary care centers and 2 were exclusively pediatric; 9 were academic medical centers. Eleven hospitals were in urban, 13 in suburban, and 2 in rural settings. The hospitals were located in 12 geographically dispersed states. Eighteen hospitals were part of hospital groups or health care systems, each comprising several facilities. The first facility in the cohort implemented the e-ERS in November 2000, and the last facility in June 2003.

Reporting System

The reporting system consisted of a secure, web-based portal available on all hospital PCs. Any hospital employee could submit a report after a secure login. The reporting system led the reporter through a series of standardized screens with pull-down response choices designed to collect information on event demographics (including time, location, service, and personnel involved), as well as type of event, contributing factors, impact on patient care, and subsequent patient outcome. The reporting process took an average of 10 minutes to complete. Although reporting was not anonymous, reports were peer-review protected at each hospital site and accessible only to prespecified hospital personnel. In most cases, the chief medical officer and quality improvement executives had access to all reports; ward leaders (nurse managers and attending physicians) had access to and responsibility for all events that occurred on their ward; pharmacy leaders had access to all medication-related events; and so on. Reports could be accessed immediately after entry, and could be amended to reflect information obtained from subsequent investigation, verification, and patient follow-up. Managers and executive leadership could also edit reports for accuracy during final review. Figure 1 in the on-line Appendix shows examples of the e-ERS input screens.

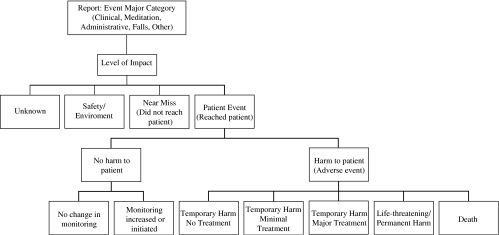

FIGURE 1.

Diagram of impact level categories and study definitions.

Report Definitions

In each reporting session, reporters specified a major category for each event: (a) Nonmedication-related clinical (events related to medical management, excluding administration, delivery, or reaction to medications), (b) Medication/infusion (events related to the administration, delivery, dosing, or reaction to medications), (c) Administrative (including events related to system processes and infrastructure issues), (d) Falls, or (e) Other. Examples of the types of events in each major category are listed in Table 1 in the on-line appendix.

Table 1.

Most Common Reported Events Within Each Major Category of Event

| Nonmedication Clinical Events, n=31,900 | % | Medication/Infusion Events, n=30,988 | % | Administrative Events, n=11,857 | % |
|---|---|---|---|---|---|
| Laboratory | 34 | Wrong dose | 16 | Discharge process | 25 |
| Transfusion related | 10 | Omitted drug | 16 | Documentation | 14 |
| Operative/invasive procedures | 9 | Wrong drug | 12 | Property loss | 7 |
| Skin integrity | 8 | Drug reaction/allergy | 10 | Communication | 7 |
| Nonoperative test/treatment | 7 | Wrong route | 8 | Patient/family dissatisfaction | 7 |
| Blood/body fluid exposure | 3 | Wrong time/frequency | 7 | Medical device/equipment | 6 |
| Respiratory management | 2 | Wrong form/infusion rate | 4 | Patient identification | 6 |
| | | Wrong patient | 3 | Consent process | 4 |
| | | Infiltration/extravasation | 2 | Admission process | 3 |
| | | Controlled substance procedure | 2 | Appointments/scheduling | 2 |
| Other* | 25 | Other | 20 | Other | 19 |

*Events reported in <2% of total are not shown.

Reporters were also asked to specify “Impact Level” on patients and their care: (a) unknown; (b) safety/environment (unsafe practices and/or conditions in the institution such as a liquid spill, broken patient bed, etc.); (c) near miss (error/adverse event corrected or averted before it reached the patient, e.g., a dosing error noted prior to administering medication); (d) no harm and no change in monitoring; (e) no harm but monitoring initiated or increased; (f) temporary harm not requiring additional treatment; (g) temporary harm, minimal treatment required; (h) temporary harm, major treatment/prolonged hospitalization required; (i) permanent harm; (j) life threatening (e.g., cardiac arrest, anaphylaxis); or (k) death. For the purposes of this study, we differentiated between events that did not reach a patient (b and c, above) and those that did (d to k, above), which we designated “patient events.” We further divided patient events into those that did not cause harm (d and e) and those that did (f to k), and defined the latter as adverse events. We grouped the 2 most severe injury categories, i and j, together because of small numbers. Figure 1 illustrates the classification system.
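The grouping of impact levels described above can be written out as a small lookup. The following Python sketch is only an illustration of the study definitions; the names `Group`, `classify`, `is_patient_event`, and `severity_bucket` are hypothetical and are not part of the e-ERS product.

```python
from enum import Enum
from typing import Optional


class Group(Enum):
    UNKNOWN = "unknown impact"
    NOT_REACHED = "did not reach a patient"
    NO_HARM = "patient event, no harm"
    ADVERSE = "patient event, harm (adverse event)"


# Impact levels (a) to (k) as listed in the text.
IMPACT_LEVELS = {
    "a": "unknown",
    "b": "safety/environment",
    "c": "near miss",
    "d": "no harm and no change in monitoring",
    "e": "no harm but monitoring initiated or increased",
    "f": "temporary harm not requiring additional treatment",
    "g": "temporary harm, minimal treatment required",
    "h": "temporary harm, major treatment/prolonged hospitalization",
    "i": "permanent harm",
    "j": "life threatening",
    "k": "death",
}


def classify(level: str) -> Group:
    """Map an impact level letter to the study's grouping."""
    if level not in IMPACT_LEVELS:
        raise ValueError(f"unknown impact level: {level!r}")
    if level == "a":
        return Group.UNKNOWN
    if level in {"b", "c"}:
        return Group.NOT_REACHED
    if level in {"d", "e"}:
        return Group.NO_HARM
    return Group.ADVERSE  # levels f to k


def is_patient_event(level: str) -> bool:
    """Patient events are levels d to k (events that reached a patient)."""
    return classify(level) in (Group.NO_HARM, Group.ADVERSE)


def severity_bucket(level: str) -> Optional[str]:
    """Severity grouping for adverse events; levels i and j are pooled."""
    if classify(level) is not Group.ADVERSE:
        return None
    if level in {"f", "g", "h"}:
        return "temporary harm"
    if level in {"i", "j"}:
        return "permanent or life-threatening harm"
    return "death"
```

For example, `classify("c")` falls in the group that did not reach a patient, while `severity_bucket("j")` returns the pooled permanent or life-threatening category.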

Data Analysis

All reports of events that occurred from January 1, 2001 through September 30, 2003 were analyzed. Multiple reports of the same event were combined manually at each hospital site. All completed reports were placed in a single database for this analysis. Hospitals were deidentified to study investigators. Only aggregate analyses were performed and all reported events were analyzed, regardless of whether an error and/or adverse outcome occurred. Correlations between hospital characteristics (e.g., size, volume) and reporting rates were assessed using the Spearman rank correlation coefficient. The data were analyzed and results interpreted by 3 authors (C. M., D. S., S. P.), none of whom had or have ties to commercial companies associated with medical events reporting systems. The commercial entity from which the data were obtained was not involved at any level in data analysis or interpretation of results, and did not provide financial support for the study.
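As an illustration of the correlation method named above, the following Python sketch computes a Spearman rank correlation as the Pearson correlation of average ranks. It is not the authors' analysis code, and the bed counts and reporting rates in the example are hypothetical values, not study data.

```python
def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical example: hospital size (beds) vs. reports per 1,000 inpatient days.
beds = [120, 250, 310, 450, 582]
rates = [40.0, 12.5, 95.0, 9.0, 35.0]
rho = spearman(beds, rates)
print(f"Spearman rho = {rho:.2f}")
```

Because the coefficient depends only on ranks, it is insensitive to the skewed distribution of reporting rates across hospitals, which is one common reason to prefer it over a Pearson correlation on raw values.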

RESULTS

Reporting Rates

A total of 92,547 reports from 26 hospitals were evaluated over a total of 2,547,154 inpatient days. The hospitals ranged in size from 120 to 582 beds, had used the e-ERS from 3 to 33 months (median 21), and contributed 674 to 9,617 reports (median 4,237). The range of reports per eligible 1,000 inpatient days (when the e-ERS was in use) was wide (9 to 95, median 35). There were no statistically significant correlations between size of hospital or number of months of use of the e-ERS and reports per 1,000 inpatient days. Most of the variability among the institutions occurred among institutions in which the e-ERS had been in use for less than 24 months. Table 2 in the on-line Appendix shows reporting rates and hospital characteristics for each of the hospital sites.

Of all reports, registered nurses reported 47%, pharmacists and pharmacy technicians 16%, laboratory technicians 10%, unit clerks/secretarial staff 10%, licensed practical nurses and nursing assistants 3%, and physicians (including house staff) 1.4%. The remainder of reports was entered by a variety of employees including medical assistants, physician assistants, physical therapists, security personnel, social workers, and risk and case managers.

Report Classification

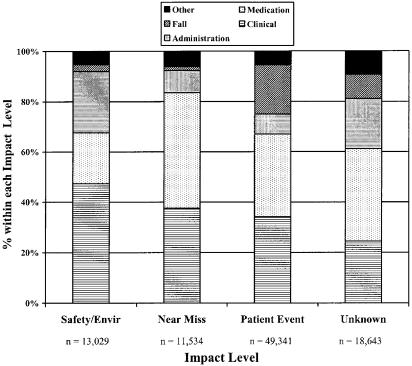

Of the total 92,547 reports, 34% were nonmedication-related clinical events, 33% medication/infusion events, 13% falls, 13% administrative events, and 6% other. Table 1 shows rates for the most commonly reported events within each major category. Regarding impact level, the majority of reports, 53%, were events that reached a patient (patient events), 14% were related to environmental safety, and 13% were near misses. In 20% of reports, the impact level on patients and patient care was unknown or not specified. In each of the impact levels, clinical and medication/infusion-related events together made up more than 60%, although their relative contributions varied (Fig. 2). For example, among all safety/environment events, one fifth were medication related, compared with nearly half among near miss events, and one third among patient events.

FIGURE 2.

Major categories of events within each impact level.

Impact on Patients and Patient Care

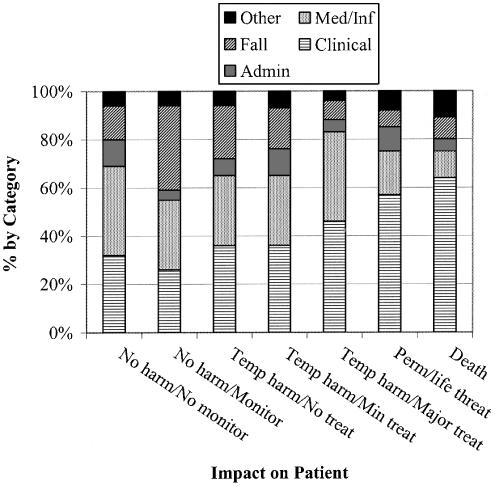

Among the 49,341 patient events, 67% caused no harm to patients. The remaining third caused injury: 32% temporary harm (of which 4% resulted in major treatment), 0.8% permanent or life-threatening harm, and 0.4% contributed to death of a patient.

Across the levels of patient impact, the types of events varied, as shown in Fig. 3. For example, the relative proportion of nonmedication-related clinical events increased as severity of patient impact increased, whereas the relative proportions of medication-related events decreased. The relative proportions of administrative-related events remained fairly constant across impact levels, contributing approximately 10% in all categories, including 2 patient deaths.

FIGURE 3.

Proportions of events by major category within Patient Event impact levels. This figure represents impact levels within Patient Events only. Classification as in text.

Overall, on average, 1 electronic report was generated every 28 patient-days; a patient event occurred every 52 patient-days, an adverse patient event (harm to the patient) every 173 patient-days, and a life-threatening or permanent injury or death every 4,303 patient-days. Estimated over all admissions, a patient event occurred in approximately 10% of admissions, an adverse event in 3%, and life-threatening or permanent injury or death in 0.1%.
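The first two intervals above follow directly from the totals given in the Results. The Python sketch below reproduces that arithmetic; the function names are hypothetical, and only the report, patient event, and patient-day totals stated in the text are used. The counts behind the adverse event and severe injury intervals are not given exactly in the text, so they are not recomputed here.

```python
# Totals reported in the Results section.
TOTAL_REPORTS = 92_547
PATIENT_EVENTS = 49_341
PATIENT_DAYS = 2_547_154


def days_per_event(patient_days: int, events: int) -> float:
    """Average number of patient-days between events."""
    return patient_days / events


def rate_per_1000(events: int, patient_days: int) -> float:
    """Events per 1,000 patient-days."""
    return 1000 * events / patient_days


days_per_report = days_per_event(PATIENT_DAYS, TOTAL_REPORTS)
days_per_patient_event = days_per_event(PATIENT_DAYS, PATIENT_EVENTS)
pooled_report_rate = rate_per_1000(TOTAL_REPORTS, PATIENT_DAYS)

print(f"One report every {days_per_report:.1f} patient-days")
print(f"One patient event every {days_per_patient_event:.1f} patient-days")
print(f"Pooled rate: {pooled_report_rate:.1f} reports per 1,000 patient-days")
```

Note that the pooled rate computed this way weights each hospital by its patient-days, so it need not equal the median of the per-hospital rates (35 per 1,000 inpatient days).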

DISCUSSION

As health care organizations increasingly focus on the monitoring of medical errors and adverse events, the use of voluntary reporting systems to detect, evaluate, and track such events has increased. This study describes types and rates of voluntarily reported events in 26 acute care hospitals using an electronic reporting system. Nonmedication-related clinical and medication-related events each represented about a third of all reports. Events that reached a patient made up the majority of reports, of which two thirds caused no harm to the patient and slightly over 1% resulted in permanent or life-threatening harm or death. Thirteen percent of reports were near misses that did not reach a patient and a similar percentage were environmental safety events. In this sample, a patient event occurred in approximately 10% of admissions, an adverse event in 3%, and life-threatening or permanent injury or death in 0.1%.

Our study represents “real-life” reporting by medical personnel of medical errors, adverse events, and near misses. No study personnel were employed to prompt reporting or observe actions by hospital staff, thus minimizing a “Hawthorne” effect because of study participation. We are not aware of a study to date that has described the types and frequency of adverse events and errors voluntarily reported as part of routine hospital operations.

Our study presents several important aspects of using an e-ERS in acute care hospitals. First, the rate of reports per 1,000 inpatient days varied substantially among institutions and did not correlate with hospital size or duration of e-ERS use, although there was a trend toward less variation among hospitals that had used the e-ERS for 2 or more years. Thus, a steady state may be reached once acceptance and adoption of the e-ERS spreads throughout an institution. Importantly, high reporting rates in an institution may not necessarily represent poor patient care, but rather an institutional culture that encourages reporting of errors and adverse events, integrates reporting into quality improvement processes, and focuses on system-level changes instead of individual blame and punitive actions.1

Second, the proportion of very serious adverse events, although small, was not negligible: slightly more than 1 per 1,000 admissions. If this rate is applied to the entire population of 33.7 million inpatients in nonfederal acute care hospitals in the U.S.,12 an estimated 34,000 patients per year could be seriously or permanently injured or die during hospitalization because of an adverse event.
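The extrapolation above is a simple scaling of the observed rate to national admissions. A minimal Python sketch follows; it assumes a rate of exactly 1 per 1,000 admissions (the text says "slightly more than" this), and the variable names are hypothetical.

```python
# National inpatient figure cited in the text (reference 12).
US_INPATIENTS_PER_YEAR = 33_700_000

# Assumption for illustration: exactly 1 very serious event per 1,000 admissions.
SERIOUS_EVENTS_PER_1000_ADMISSIONS = 1.0

estimated_serious_events = (
    US_INPATIENTS_PER_YEAR * SERIOUS_EVENTS_PER_1000_ADMISSIONS / 1000
)

# Round to the nearest thousand, as in the text.
print(f"{round(estimated_serious_events, -3):,.0f} patients per year")
```

The estimate inherits every limitation of the underlying rate, including voluntary reporting and a nonrandom sample of 26 hospitals, so it is best read as an order-of-magnitude figure.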

Third, the e-ERS allowed for the reporting of a wide variety of different types and severities of adverse events and errors, and did not merely capture the most serious events. Nearly 70% of events that reached the patient produced no harm, and one quarter of all reports were either environmental safety issues or near misses. Thus, an e-ERS may be particularly helpful in capturing system defects (latent errors) and near misses that may not be detected by reviews of patient charts or medication records. Importantly, analyses of such near misses may help identify “root causes” of errors and adverse events.1, 3, 6

Finally, reporting rates reflect the reporters. Although the e-ERS was available and accessible to any hospital employee and staff member, physicians contributed less than 2% of all reports. The variation in reporting rates between nurses and physicians may be attributed to different definitions or perceptions of what constitutes an error or adverse event, and, importantly, different training about and attitudes toward reporting them. Nurses, but not physicians, receive training in and are encouraged to report adverse events and complications arising from medical treatment.13 Physicians do not receive education in the systematic evaluation of errors and adverse events, and thus operate within a belief system of self-blame and personal responsibility, rather than viewing such events as the end process of a series of systematic deficiencies. Additionally, physicians may not report because of “professional courtesy,” concern for implicating colleagues, or fear of repercussions.1, 14

It is difficult to compare the rates of events in our study to previously published ones primarily because of differences in data collection methods. Most published studies have relied on retrospective chart reviews, or have been research-based observational studies.4–6, 15–17 Interestingly, the adverse event rate of 3% of admissions in our study is similar to the 3% to 4% rates reported in 2 large medical record reviews of hospital discharges, the Harvard Medical Practice Study18 and a similar study in Colorado and Utah.19 Additionally, most studies have not relied on event reporting presumably because of low reporting rates. Studies of prompted reports of adverse events by house staff have shown rates of 0.5% to 4% of admissions,10, 20, 21, 22 and overall “quality problems,” including near misses, in 10% of admissions.10, 22 In 1 study, 2 hospitalists observed medical errors during routine patient care, finding an adverse event rate of 4% of admissions,22 again similar to the 3% rate in our study. In comparison, 1 study found a reported adverse event rate of 0.04% using a traditional paper-based method.23

There are several limitations of our study. Despite the widespread availability of the e-ERS in each institution and accessibility to all hospital employees, it is likely that not all errors, adverse events, or near misses were reported, and we did not rely on alternative methods to identify such events. Additionally, reporting bias is likely, exemplified by the exceedingly low rate of reporting by physicians; bias in the types of events reported may also exist.

Medical error and adverse event reporting rates are additionally influenced by institutional factors. The hospitals varied in size, geographic location, setting, academic affiliation, and number of months the e-ERS was in use, and these factors may have contributed to the large differences in reporting rates across hospitals. Additionally, as the e-ERS was implemented in each institution at different times, secular trends may also have affected reporting of events. Furthermore, institutions likely differed regarding implementation and adoption of an e-ERS, and overall culture regarding the reporting and management of adverse events and errors. Additionally, the understanding of processes that lead to medical errors and their systematic evaluation likely vary across hospital administrators and executive personnel. Thus, implementing any ERS requires training beyond the operational aspects to include education in the processes that lead to errors and adverse events. We did not have data on each institution's efforts in adopting and training in the e-ERS, acceptance of the e-ERS among hospital personnel, or hospital culture toward the reporting of errors and adverse events.

Despite these limitations, to our knowledge, this is the largest multihospital review of types of medical errors and adverse events reported using a commercial e-ERS as part of routine hospital operations. More research is needed to determine whether an e-ERS increases the reporting of adverse events and errors and reduces their occurrence. In one large hospital in the current study, use of the e-ERS increased the overall reporting rate of adverse events and errors by nearly fourfold. Additionally, occurrences of repeated events were easily and expediently detected and a common cause then identified (e.g., extravasation of parenteral nutrition associated with a procedure). Subsequent occurrences were tracked after changes in policy were instituted to determine their effects.

Thus, an e-ERS may help overcome 2 of the roadblocks to improving safety of medical care identified by Berwick.24 First, by making the reporting of errors and adverse events accessible to all hospital employees, as well as easy to review and track, an e-ERS makes such events more visible to clinicians, hospital administrators, government officials, and the public. Second, a reporting system that allows for reporting of near misses and problems in the safety of the hospital environment may help uncover “root causes,” such as some system errors, that may not be identified by retrospective review. Additionally, a web-based e-ERS allows for real-time event notification and oversight, and for concurrent tracking of rates over time, tasks not easily performed with a paper-based system. Over the past 2 years, the National Patient Safety Agency in England has introduced a national system for identifying and reporting adverse events in health care; in the absence of such a national system in the U.S., hospital-wide e-ERS may be important in the reporting, measuring, and tracking of adverse events and medical errors.

Physicians should take a leading role in quality efforts to reduce medical errors and adverse events. The factors associated with the low reporting rates by physicians and in some hospitals require further evaluation.

Acknowledgments

Disclosures: Dr. Lundquist was formerly Chief Medical Officer of DrQuality Inc., an electronic medical error and adverse event reporting system. Dr. Kumar is the Chief Medical Officer at Quantros Inc., and Mr. Chen is a statistician at Quantros Inc., which also produces an electronic event reporting system.

Neither DrQuality nor Quantros provided financial support for this study or was involved in the analysis or interpretation of results.

Supplementary Material

The following supplementary material is available for this article online:

Examples of the reporting system input screens and reports, and institutional reporting rates.

REFERENCES

- 1. Kohn LT, Corrigan JM, Donaldson MS, editors. To Err Is Human. Washington, DC: National Academy Press; 1999.

- 2. Leape LL, Berwick DM, Bates DW. What practices will most improve safety: evidence-based medicine meets patient safety. JAMA. 2002;288:501–7. doi: 10.1001/jama.288.4.501.

- 3. Ioannidis JP, Lau J. Evidence on interventions to reduce medical errors: an overview and recommendations for future research. J Gen Intern Med. 2001;16:325–34. doi: 10.1046/j.1525-1497.2001.00714.x.

- 4. Bates DW, Cullen DJ, Laird N, et al. Incidence of adverse drug events and potential adverse drug events: implications for prevention. JAMA. 1995;274:29–34.

- 5. Andrews L, Stocking C, Krizek T, et al. An alternative strategy for studying adverse events in medical care. Lancet. 1997;349:309. doi: 10.1016/S0140-6736(96)08268-2.

- 6. Thomas EJ, Petersen LA. Measuring errors and adverse events in health care. J Gen Intern Med. 2003;18:61–7. doi: 10.1046/j.1525-1497.2003.20147.x.

- 7. Iezzoni LI. Identifying complications of care using administrative data. Med Care. 1994;32:700–15. doi: 10.1097/00005650-199407000-00004.

- 8. Iezzoni LI. Assessing quality using administrative data. Ann Intern Med. 1997;127:666–74. doi: 10.7326/0003-4819-127-8_part_2-199710151-00048.

- 9. Health Policy News. Society of General Internal Medicine; August 18, 2004. Available at: http://www.sgim.org/HPNews0804.cfm. Accessed August 23, 2004.

- 10. Suresh G, Horbar JD, Plsek P, et al. Voluntary anonymous reporting of medical errors for neonatal intensive care. Pediatrics. 2004;113:1609–18. doi: 10.1542/peds.113.6.1609.

- 11. National Coordinating Council for Medication Error Reporting and Prevention. Available at: http://www.usp.org/pdf/patientSafety/medform. Accessed July 12, 2004.

- 12. Defrances CJ, Hall MJ. 2002 National Hospital Discharge Survey. Advance Data from Vital and Health Statistics; No. 342. Hyattsville, Md: National Center for Health Statistics; 2004.

- 13. Weingart SN, Davis RB, Palmer RH, et al. Discrepancies between explicit and implicit review: physician and nurse assessments of complications and quality. Health Serv Res. 2002;37:483–98. doi: 10.1111/1475-6773.033.

- 14. Leape LL. Reporting of adverse events. N Engl J Med. 2002;347:1633–8. doi: 10.1056/NEJMhpr011493.

- 15. Weingart SN, Wilson RM, Gibberd RW, Harrison B. Epidemiology of medical error. BMJ. 2000;320:774–7. doi: 10.1136/bmj.320.7237.774.

- 16. Bates DW, Teich JM, Lee J, et al. The impact of computerized physician order entry on medication error prevention. J Am Med Inform Assoc. 1999;6:313–21. doi: 10.1136/jamia.1999.00660313.

- 17. Barker KN, Flynn EA, Pepper GA, Bates DW, Mikeal RL. Medication errors observed in 36 health care facilities. Arch Intern Med. 2002;162:1897–903. doi: 10.1001/archinte.162.16.1897.

- 18. Brennan TA, Leape LL, Laird NM, et al. Incidence of adverse events and negligence in hospitalized patients. Results of the Harvard Medical Practice Study 1. N Engl J Med. 1991;324:370–6. doi: 10.1056/NEJM199102073240604.

- 19. Thomas EJ, Studdert DM, Burstin HR. Incidence and types of adverse events and negligent care in Utah and Colorado. Med Care. 2000;38:261–71. doi: 10.1097/00005650-200003000-00003.

- 20. O'Neil AC, Peterson LA, Cook EF, Bates DW, Lee TH, Brennan TA. A comparison of physicians self-reporting to medical record review to identify medical adverse events. Ann Intern Med. 1993;119:370–6. doi: 10.7326/0003-4819-119-5-199309010-00004.

- 21. Weingart SN, Callanan LD, Ship AN, Aronson MD. A physician-based voluntary reporting system for adverse events and medical errors. J Gen Intern Med. 2001;16:809–14. doi: 10.1111/j.1525-1497.2001.10231.x.

- 22. Chaudhry SI, Olofinboba KA, Krumholz HM. Detection of errors by attending physicians on a general medicine service. J Gen Intern Med. 2003;18:595. doi: 10.1046/j.1525-1497.2003.20919.x.

- 23. Flynn EA, Barker KN, Pepper GA, Bates DW, Mikeal RL. Comparison of methods for detecting medication errors in 36 hospitals and skilled-nursing facilities. Am J Health-System Pharmacy. 2002;59:436–46. doi: 10.1093/ajhp/59.5.436.

- 24. Berwick DM. Errors today and errors tomorrow. N Engl J Med. 2003;348:2570–2. doi: 10.1056/NEJMe030044.
