Abstract

BACKGROUND

We developed computer-based virtual patient (VP) cases to complement an interactive continuing medical education (CME) course that emphasizes skills practice using standardized patients (SP). Virtual patient simulations have the significant advantages of requiring fewer personnel and resources, being accessible at any time, and being highly standardized. Little is known about the educational effectiveness of these new resources. We conducted a randomized trial to assess the educational effectiveness of VPs and SPs in teaching clinical skills.

OBJECTIVE

To determine the effectiveness of VP cases when compared with live SP cases in improving clinical skills and knowledge.

DESIGN

Randomized trial.

PARTICIPANTS

Fifty-five health care providers (registered nurses 45%, physicians 15%, other provider types 40%) who attended a CME program.

INTERVENTIONS

Participants were randomized to receive either 4 live cases (n = 32) or 2 live and 2 virtual cases (n = 23). Other aspects of the course were identical for both groups.

RESULTS

Participants in both groups were equivalent with respect to pre-post workshop improvement in comfort level (P = .66) and preparedness to respond (P = .61), to screen (P = .79), and to care (P = .055) for patients using the skills taught. There was no difference in subjective ratings of effectiveness of the VPs and SPs by participants who experienced both (P = .79). Improvements in diagnostic abilities were equivalent in groups who experienced cases either live or virtually.

CONCLUSIONS

Improvements in performance and diagnostic ability were equivalent between the groups, and participants rated VP and SP cases equally. Well-designed VPs have a potentially powerful and efficient place in clinical skills training for practicing health care workers.

Keywords: randomized trial, virtual patient, standardized patient, OSCE, computer

Using standardized patients (SPs) to provide learners with high fidelity simulations of patient interactions has become a staple of medical education and assessment. Standardized patients have been employed to both teach and assess clinical skills for over 2 decades.1, 2 Standardized patients are a valid and reliable method to teach sophisticated topics to a variety of learners; several studies have demonstrated improved scores in interpersonal skills and knowledge when compared with traditional teaching strategies using didactic methods.3–5 Many institutions have combined SP cases for assessment into an objective structured clinical examination (OSCE).2

Using OSCEs and SPs requires significant personnel, financial, and logistical resources. These types of simulations are synchronous, requiring observing faculty, students, SPs, and coordinating staff to be in 1 location at 1 time. The SP and OSCE interactions are also often conducted with a single learner, SP, and faculty observer, limiting the number of learners that can be accommodated at any given time. Computer-based virtual patients (VPs) are now being explored as a new method for creating high-fidelity simulated patient interactions that can overcome many of the challenges associated with using live SPs. As computer-based VPs can be used at any time, they can be integrated into curricula in a much more flexible manner. Many learners can use a single VP case simultaneously. Virtual patients offer true standardization across interactions creating a more consistent but less flexible experience for learners. The VP has the advantage of being easily modified to demonstrate a variety of clinical or interview scenarios, for example changing the gender or race of the patient.6

Virtual patients have worked well in simulating concrete and predictable topics, such as physiological processes and performing procedures. More recently they have been used to model the patient interview and are being used to teach clinical interviewing skills. Previous work has included the development of VP cases designed to teach bedside competencies of bioethics, basic patient communication and history taking, and clinical decision making.7–9 Combining live SPs and VPs to create more realistic simulations of procedures or the effects of medications has also been explored.10

We have designed and administered a continuing education program, Psychosocial Aspects of Bioterrorism and Disasters: Education for Readiness and Response, to prepare primary care health care providers at all levels in the key clinical skills of screening, diagnosing, and treating individuals experiencing psychosocial sequelae of disasters.11 The program focuses on the 4 most common stress and anxiety disorders seen in the settings of disasters: posttraumatic stress disorder (PTSD), acute stress disorder (ASD), sub-diagnostic distress (“Worried Well”), and bereavement.12

One of the key elements of our workshop is faculty-facilitated SP interaction. During the workshop, participants experience simulated patient interactions with 4 SP cases that represent each of the 4 stress disorders we address. Integrating SPs into our program lets learners practice skills, review relevant information gathering and screening strategies, and receive formative feedback from faculty preceptors.13

We have been actively developing an interactive online version of our course. In 2004, the authors (H.F. and M.T.) created a web-based application using freely available, open-source tools to replicate the process and experience of the SP interaction, using VPs. To evaluate the impact of this new modality, we conducted a randomized trial to determine the efficacy of VP case simulations when compared with live SP case simulations in improving the knowledge, skills, and attitudes of primary care providers in the screening, diagnosing, and treatment of individuals experiencing psychosocial sequelae of disasters.

METHODS

Design of the VP

The web-based VP cases were modeled directly on our observations and analysis of live SP interactions from several of our previous educational programs. The module begins with introductory instructions and provides the context of the case: the user's role, relationship to the patient, and a brief description of the disaster scenario. The user is then presented with the main interview screen that consists of a video window to see patient responses and a list of potential questions to ask the patient. The question list was compiled from reviewing prior live SP interactions, psychosocial screening questions, focus group feedback, and evaluating usage data and feedback from users who beta-tested the application. Each of the 4 VP cases has approximately 75 potential questions available to ask of the patient with an accompanying video clip response for all questions.

Although users are limited to asking questions for which we have a filmed video response, they have complete freedom to determine the sequence and quantity of their interview. As users ask questions, the application unlocks additional relevant follow-up questions. For example, the CAGE14 questions are not available until the user asks preliminary questions about alcohol use. When the users are satisfied with their interview, they proceed to a differential diagnosis module where they are asked to create a prioritized differential list based on the content of the interview. We also ask the user to enter a rating for the confidence they have in their differential list.
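The unlocking behavior described above can be sketched as a prerequisite check over the question list. This is a minimal illustration, not the application's actual code: the `Question` and `Interview` classes, the question identifiers, and the sample wording are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    qid: str
    text: str
    # Hypothetical field: ids of questions that must be asked before this one unlocks.
    requires: frozenset = frozenset()


class Interview:
    def __init__(self, questions):
        self.questions = {q.qid: q for q in questions}
        self.asked = []  # question ids in the order the user asked them

    def available(self):
        """Return ids of questions not yet asked whose prerequisites are all met."""
        asked = set(self.asked)
        return [q.qid for q in self.questions.values()
                if q.qid not in asked and q.requires <= asked]

    def ask(self, qid):
        if qid not in self.available():
            raise ValueError(f"question {qid!r} is locked or already asked")
        self.asked.append(qid)


# Illustrative data only: one CAGE item gated behind a preliminary alcohol question.
QUESTIONS = [
    Question("alcohol_use", "Do you drink alcohol?"),
    Question("cage_cut_down",
             "Have you ever felt you should cut down on your drinking?",
             frozenset({"alcohol_use"})),
    Question("sleep", "How have you been sleeping?"),
]

interview = Interview(QUESTIONS)
print(interview.available())   # the CAGE item is not offered yet
interview.ask("alcohol_use")
print(interview.available())   # the CAGE item is now unlocked
```

Recording the asked questions as an ordered list also preserves the interview sequence, which the feedback sections rely on.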

After completing the interview and differential sections, the user enters the feedback section of the VP application. We have emphasized formative feedback as a key component of our VP interactions.15 The feedback section is divided into 4 parts: didactic, interview analysis, differential analysis, and diagnostic criteria. The didactic section includes a short video by an expert faculty member (M.L.) who discusses the communication challenges present in the case and reinforces the necessary skills and screening strategies.

The interview analysis section was designed around existing techniques of assessing provider-patient interactions. Using elements of the Macy Model,16 the Calgary-Cambridge Observation Guide,17 the Davis Observation Code,18 and the Cox evaluation tool for videotape review,19 we created a coding schema to classify the characteristics of patient interview questions into 1 of the following categories: open-ended, empathic statements, information gathering, psychosocial or contextual variable, patient perspective, patient involvement, patient understanding, and shared decision making. In this feedback section the system analyzes which questions the user asked and in what sequence. The users are then presented with a graphical breakdown of how they used their interview time by question type and characteristics.
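As a rough sketch of how such a breakdown might be computed, the example below tallies the share of asked questions in each category of the coding schema. The category names come from the text; the `coding` lookup, the question identifiers, and the function name are assumptions made for illustration.

```python
from collections import Counter

# Categories of the coding schema, as listed in the text.
CATEGORIES = (
    "open-ended",
    "empathic statements",
    "information gathering",
    "psychosocial or contextual variable",
    "patient perspective",
    "patient involvement",
    "patient understanding",
    "shared decision making",
)


def category_breakdown(asked, coding):
    """Return the share of asked questions per category (categories with no questions omitted).

    `asked` is the ordered list of question ids; `coding` maps each id to one category.
    """
    counts = Counter(coding[qid] for qid in asked)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {cat: counts[cat] / total for cat in CATEGORIES if counts[cat]}


# Hypothetical coding of four questions.
coding = {
    "q1": "open-ended",
    "q2": "empathic statements",
    "q3": "information gathering",
    "q4": "open-ended",
}
breakdown = category_breakdown(["q1", "q2", "q3", "q4"], coding)
print(breakdown)
```

The resulting proportions are the kind of data a chart of interview time by question type could be drawn from.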

The system also evaluates and provides feedback on basic communication skills. For example, this feedback section presents the user with a graph of the proportion of open-ended questions asked in the beginning, middle, and end of the interview. The system then provides customized feedback to the users based on their pattern of asking these types of questions. If the user did not ask the majority of open-ended questions in the beginning of the interview, this strategy is reinforced in the text of the feedback and a link to appropriate literature is displayed.
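One way to express this rule is sketched below. It assumes that "proportion" means the share of all open-ended questions that fell in each third of the interview and that "majority" means more than half; both readings, and the function names, are assumptions rather than details from the application.

```python
def open_ended_by_third(is_open_ended):
    """Share of all open-ended questions asked in the beginning, middle, and end.

    `is_open_ended` is a list of booleans, one per question, in the order asked.
    """
    n = len(is_open_ended)
    counts = [0, 0, 0]
    for i, flag in enumerate(is_open_ended):
        if flag:
            counts[i * 3 // n] += 1  # integer position 0, 1, or 2 within the interview
    total = sum(counts)
    if total == 0:
        return [0.0, 0.0, 0.0]
    return [c / total for c in counts]


def needs_reinforcement(shares):
    """True when the beginning third does not hold the majority of open-ended questions."""
    return shares[0] <= 0.5


# Illustrative interviews of six questions each.
late_opener = open_ended_by_third([True, False, False, True, True, True])
early_opener = open_ended_by_third([True, True, False, True, False, False])
print(late_opener, needs_reinforcement(late_opener))
print(early_opener, needs_reinforcement(early_opener))
```

A learner flagged by `needs_reinforcement` would be the one shown the reinforcing text and literature link.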

Customized feedback is also generated for the differential diagnosis that reinforces what the correct diagnosis is and where it appeared on the user's list, if at all. The final feedback section consists of a review of the diagnostic criteria for the case and a review of the appropriate screening questions.
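The differential feedback can be illustrated by locating the correct diagnosis in the learner's prioritized list. The function names and message wording below are hypothetical, and the exact string matching is a simplification.

```python
from typing import Optional, Sequence


def rank_of_correct(differential: Sequence[str], correct: str) -> Optional[int]:
    """Return the 1-based position of the correct diagnosis, or None if it is absent."""
    normalized = [d.strip().lower() for d in differential]
    target = correct.strip().lower()
    return normalized.index(target) + 1 if target in normalized else None


def differential_feedback(differential: Sequence[str], correct: str) -> str:
    """Build a short feedback message (wording is illustrative only)."""
    rank = rank_of_correct(differential, correct)
    if rank is None:
        return f"The correct diagnosis, {correct}, did not appear on your list."
    if rank == 1:
        return f"You ranked the correct diagnosis, {correct}, first."
    return f"The correct diagnosis, {correct}, was number {rank} on your list."


user_list = ["Major depression", "Acute stress disorder", "Bereavement"]
print(differential_feedback(user_list, "Acute stress disorder"))
print(differential_feedback(user_list, "Posttraumatic stress disorder"))
```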

Study Population

Anyone who attended a 1-day continuing medical education (CME) workshop, sponsored by NYU School of Medicine and the University of South Florida Center for Biodefense, was eligible to participate in the study. The workshop was advertised via print and electronic methods and was offered for CME credit with no associated fee. Attendees represented a mix of practitioner types including registered nurses, physicians, psychologists, and public health workers (Table 1).

Table 1.

Characteristics of Workshop Participants

| | Control Group, N = 32 | Intervention Group, N = 23 | P-Value |
|---|---|---|---|
| Female, no. (%) | 19 (59) | 16 (70) | .44 |
| Age, no. (%) | | | |
| 30 to 45 | 7 (22) | 5 (22) | .99 |
| 46 to 60 | 16 (50) | 14 (61) | .42 |
| 60+ | 9 (28) | 2 (9) | .10* |
| Professional role, no. (%) | | | |
| Registered nurse | 14 (44) | 11 (48) | .76 |
| Physician | 5 (16) | 3 (13) | 1.00* |
| Other nurse | 4 (13) | 5 (22) | .47* |
| Psychologist | 8 (25) | 2 (9) | .17* |
| Other | 1 (3) | 2 (9) | .57* |
| Practice setting, no. (%) | | | |
| Ambulatory care | 2 (6) | 4 (17) | .38* |
| Hospital-based | 15 (47) | 7 (30) | .22 |
| Academic | 3 (9) | 6 (26) | .14* |
| Public health | 4 (13) | 4 (17) | .71* |
| Other | 8 (25) | 2 (9) | .17* |
| Distribution of professional efforts, mean (± SD) | | | |
| Percent time providing clinical services | 39.38 (± 37.46) | 45.25 (± 40.08) | .58 |
| Percent time teaching | 18.00 (± 30.41) | 10.94 (± 13.11) | .25 |
| Percent time performing research | 3.96 (± 16.35) | 4.72 (± 12.42) | .85 |
| Computer use and experience, no. (%) | | | |
| Use computers several times a day | 25 (78) | 18 (78) | .99 |
| Use email | 29 (91) | 21 (91) | 1.00* |
| Use internet | 27 (84) | 20 (87) | 1.00* |
| Have used a computer for >2 years | 28 (88) | 20 (87) | 1.00* |
| Self-described computer knowledge, no. (%) | | | |
| None | 1 (3) | 0 (0) | 1.00* |
| Beginner | 5 (16) | 3 (13) | 1.00* |
| Intermediate | 22 (69) | 18 (78) | .44 |
| Expert | 2 (6) | 1 (4) | 1.00* |

*Calculated using Fisher's exact test.

Study Design

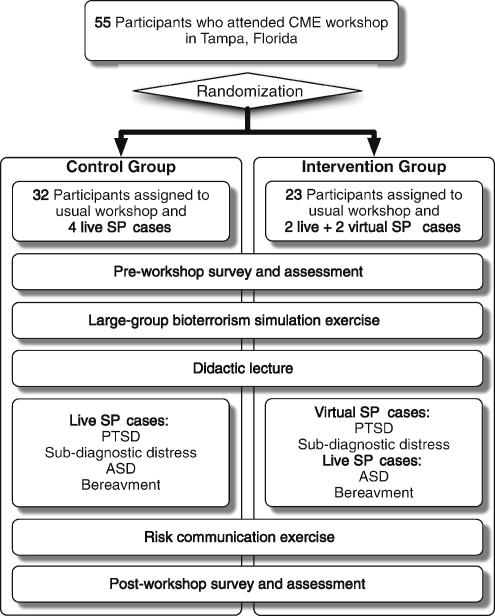

This study utilized pre- and posttests in the context of a within-subject design. Participants were randomly assigned, using a computer-generated random number table, to a control group that received the traditional workshop including all 4 live SP cases or an intervention group that received the same course with 2 of the 4 patient cases being virtual and 2 live (Fig. 1). The number of computer workstations available limited the size of the intervention group. After randomization, the baseline characteristics of the subjects were similar in the 2 groups (Table 1). Of the 55 participants, 32 (58%) were randomized to receive the usual workshop with 4 live SP cases, and 23 (42%) were randomized to receive 2 live and 2 virtual SP cases. Neither participants nor workshop faculty were blinded to group assignments.

Figure 1.

Study design and workshop structure.

Following a large group disaster simulation and a didactic lecture, clinical skills were practiced in the control group using two 1-hour faculty-facilitated mixed-discipline small group sessions with SPs presenting with the 4 prevalent disorders or, in the intervention group, one 1-hour session with VPs, in which participants interacted with 2 cases while sitting at a computer, followed by a 1-hour SP session. All participants in the intervention group experienced the PTSD and sub-diagnostic distress cases virtually and the ASD and bereavement cases using live SPs (Fig. 1). Except for the modality of patient simulation, all aspects of the course were identical across groups.

A pre- and postworkshop questionnaire primarily used 4-point Likert strength of agreement scale questions to assess perceptions of attitudes toward the subject matter and comfort or reluctance in caring for these types of patients. Knowledge and diagnostic skills were assessed through the use of clinical vignettes that asked participants to correctly identify appropriate screening strategies and to make a diagnosis. The postworkshop survey was identical to the preworkshop survey and also included Likert-scale questions addressing workshop effectiveness. These data and data from participant interaction with the computer-based VP application were captured in a de-identified database. The Institutional Review Board at our medical center reviewed and approved the research protocol, and all participants consented to study participation.

Statistical Analysis

Continuous variables were compared using Student's t test. Dichotomous data were compared with Fisher's exact test and contingency tables as appropriate. Normally distributed data are expressed as mean ± standard deviation (SD), and binomial data as proportions. Statistical analysis was performed using SPSS software version 11.0.3 for Macintosh OSX (SPSS, Chicago, IL), and a 2-tailed P value of .05 was considered statistically significant.
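For readers who want to check the dichotomous comparisons, Fisher's exact test on a 2 × 2 table can be computed with the Python standard library alone. The sketch below uses one common two-sided definition (summing the probabilities of all tables no more likely than the observed one); whether SPSS applied exactly this definition is an assumption, and the function name is ours.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact P value for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same margins
    that is no more likely than the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    lo, hi = max(0, col1 - row2), min(row1, col1)

    def weight(x):
        # Unnormalized hypergeometric probability, kept as an exact integer.
        return comb(row1, x) * comb(row2, col1 - x)

    observed = weight(a)
    extreme = sum(weight(x) for x in range(lo, hi + 1) if weight(x) <= observed)
    return extreme / comb(row1 + row2, col1)


# Physician row of Table 1: 5 of 32 control vs 3 of 23 intervention participants.
p_physician = fisher_exact_two_sided(5, 32 - 5, 3, 23 - 3)
print(f"P = {p_physician:.2f}")
```

For the physician row this reproduces the P value of 1.00 reported in Table 1, because the observed table is the single most likely table given its margins.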

RESULTS

The groups had similar preworkshop comfort and reluctance regarding assessment of the psychosocial needs of patients after disasters as well as their sense of being prepared to respond to, screen, and care for affected patients (Table 2). The pre-post workshop change in comfort, reluctance, and preparedness to respond and screen was similar in both groups. The difference in pre-post workshop change in preparedness to care for and treat stress disorders between the control and intervention groups approached significance (P = .055; 95% confidence interval [CI] for the difference, 0.00 to 0.79).
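As an approximate check on the confidence interval for preparedness to care, the interval can be recomputed from the summary statistics in Table 2 using a pooled-variance t interval. This is a sketch under stated assumptions: it takes the full group sizes (32 and 23) as the number of responders, uses a hard-coded critical value of about 2.006 for 53 degrees of freedom, and works from rounded published means and SDs, so small departures from the reported figures are expected.

```python
from math import sqrt


def pooled_t_interval(mean1, sd1, n1, mean2, sd2, n2, t_crit):
    """Difference in means (group 2 minus group 1), t statistic, and pooled-variance CI."""
    diff = mean2 - mean1
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return diff, diff / se, (diff - t_crit * se, diff + t_crit * se)


# Pre-post change in preparedness to care for and treat (Table 2).
# Assumption: t_crit is the two-tailed .05 critical value for 53 degrees of freedom.
diff, t_stat, (ci_low, ci_high) = pooled_t_interval(
    1.06, 0.67, 32,   # control group
    1.45, 0.76, 23,   # intervention group
    t_crit=2.006,
)
print(f"difference = {diff:.2f}, t = {t_stat:.2f}, 95% CI {ci_low:.2f} to {ci_high:.2f}")
```

Under these assumptions the interval comes out at roughly 0.00 to 0.78, close to the reported 0.00 to 0.79; the remaining gap is plausibly due to rounding or to participants with missing responses.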

Table 2.

Participant Perceptions of Their Clinical Skills and the Workshop

| | Control Group, N = 32, mean (± SD) | Intervention Group, N = 23, mean (± SD) | P-Value |
|---|---|---|---|
| Comfort assessing the psychosocial needs of patients after a disaster | | | |
| Pretest | 2.43 (± 0.77) | 2.14 (± 0.64) | |
| Posttest | 3.19 (± 0.65) | 2.97 (± 0.60) | |
| Pre-post change | 0.77 (± 0.24) | 0.74 (± 0.25) | .66 |
| 95% CI of the difference* | −0.17 to 0.11 | | |
| Overall reluctance to address psychosocial needs of patients during a crisis | | | |
| Pretest | 2.43 (± 0.62) | 2.42 (± 0.70) | |
| Posttest | 1.93 (± 0.55) | 1.97 (± 0.49) | |
| Pre-post change | −0.50 (± 0.66) | −0.45 (± 0.57) | .77 |
| 95% CI of the difference* | −0.28 to 0.38 | | |
| How prepared are you to respond to psychosocial needs of patients during a crisis | | | |
| Pretest | 1.94 (± 0.84) | 1.75 (± 0.72) | |
| Posttest | 3.25 (± 0.51) | 3.15 (± 0.67) | |
| Pre-post change | 1.31 (± 0.59) | 1.40 (± 0.68) | .61 |
| 95% CI of the difference* | −0.26 to 0.45 | | |
| How prepared are you to screen patients for stress disorders in the setting of a crisis | | | |
| Pretest | 1.94 (± 0.80) | 1.95 (± 0.95) | |
| Posttest | 3.28 (± 0.58) | 3.35 (± 0.75) | |
| Pre-post change | 1.34 (± 0.55) | 1.40 (± 0.99) | .79 |
| 95% CI of the difference* | −0.40 to 0.52 | | |
| How prepared are you to care for and treat stress and anxiety during a crisis | | | |
| Pretest | 2.06 (± 1.22) | 1.65 (± 0.88) | |
| Posttest | 3.12 (± 0.94) | 3.10 (± 0.72) | |
| Pre-post change | 1.06 (± 0.67) | 1.45 (± 0.76) | .055 |
| 95% CI of the difference* | 0.00 to 0.79 | | |
| Overall workshop effectiveness | 3.66 (± 0.60) | 3.45 (± 0.60) | .26 |
| Effectiveness of small groups with live SPs | 3.71 (± 0.59) | 3.55 (± 0.67) | .36 |
| Effectiveness of virtual SPs | | 3.50 (± 0.67) | |

*This number represents the 95% confidence interval (CI) of the difference between the pre-post change in the intervention group and the pre-post change in the control group.

CI, confidence interval; SP, standardized patient.

In the intervention group both the live and virtual SPs were rated highly (3.55 and 3.50, respectively) with no significant difference between the 2 modalities (P = .79, Table 2). Pre-post workshop diagnostic abilities were equivalent for the 2 live cases, acute stress disorder and bereavement (Table 3). There was no significant change in pre-post workshop diagnostic abilities for the PTSD case in either the control or intervention group. Participants in the intervention group experienced a greater increase in their ability to correctly diagnose the sub-diagnostic distress case when compared with the control group; this difference approached significance (P = .054, Table 3).

Table 3.

Participant Ability to Correctly Diagnose Stress Disorders

| | Control Group, N = 31* | Intervention Group, N = 21* | |
|---|---|---|---|
| Posttraumatic stress disorder case† | | | |
| Pretest (%) | 23 (74) | 17 (81) | |
| Posttest (%) | 23 (74) | 18 (86) | |
| Pre-post change % (95% CI) | 0 (−22, 22) | 2 (−30, 19) | χ² = 0.12, P = .73 |
| Sub-diagnostic distress case† | | | |
| Pretest (%) | 11 (35) | 6 (29) | |
| Posttest (%) | 20 (65) | 17 (81) | |
| Pre-post change % (95% CI) | 30 (4, 49) | 52 (22, 71) | χ² = 3.72, P = .054 |
| Acute stress disorder case | | | |
| Pretest (%) | 9 (29) | 9 (43) | |
| Posttest (%) | 19 (61) | 18 (86) | |
| Pre-post change % (95% CI) | 32 (9, 56) | 43 (14, 63) | χ² = 0.61, P = .44 |
| Bereavement case | | | |
| Pretest (%) | 25 (81) | 19 (90) | |
| Posttest (%) | 26 (84) | 19 (90) | |
| Pre-post change % (95% CI) | 3 (−16, 22) | 0 (−21, 21) | χ² = 0.03, P = .86 |

*One participant in the SP group and 2 participants in the VP group did not complete the posttest clinical vignettes.

†The posttraumatic stress disorder and sub-diagnostic distress cases utilized VP in the intervention group.

SP, standardized patient; VP, virtual patient; CI, confidence interval.

DISCUSSION

Participants who experienced both the live and virtual cases rated them highly, with no difference in rated effectiveness. In previous evaluations of such interventions participants have expressed similar enthusiasm for using these novel applications.7,20,21 That the VP cases are less realistic than the SP cases did not appear to negatively affect participants' appreciation of their value. Subjective ratings by workshop participants were equivalent in both groups when assessing their level of comfort and preparedness addressing psychosocial issues in actual patients. Participants who used the VPs showed a larger pre-post gain in feeling prepared to care for and treat these disorders, a difference that approached but did not reach significance (P = .055). This may reflect the true intent of simulations, that participants can progress from the least intimidating virtual environments where mistakes have no consequence, to very realistic live simulated patients where the stakes are higher, and finally to real clinical situations. Learners who experience all 3 modalities may have better insight into the progression of and improvement in their clinical skills as they practice and reinforce them. In a prior comparison of VPs and small-group learning, medical students who used VPs in addition to live SPs felt more prepared and were more satisfied with the learning intervention overall.7

Case-based learning and simulations have traditionally employed 2 pedagogical approaches: narrative and problem solving. The narrative design guides users through cases and focuses on the impact of decisions or treatments as the simulation unfolds. Problem-solving cases lack much of this guidance and allow users more freedom in the tasks of information gathering. Our VP application employs a hybrid approach that includes elements of both narrative (progression through the case and feedback) and problem-solving (unstructured and unconstrained patient interview) designs. Previous work has shown that these 2 design philosophies are not equivalent, with preliminary studies suggesting that the narrative approach may be superior in teaching communication skills.21 Using the hybrid approach in this evaluation resulted in equivalent improvements of performance, measured by self-assessment of attitudes and a knowledge questionnaire, when compared with the traditional live SPs in this setting. This approach also ensured a more standardized exposure to case content and patient interview experience across individual learners.

The feedback section of the VP cases provided a significant amount of didactic information and a more consistent and rigorous evaluation of the screening questions and criteria. These aspects are much less consistently integrated into the live SP cases and rely heavily on the skills and experience of the faculty facilitator. The clinical vignettes, designed to test diagnostic knowledge, showed that at baseline our workshop participants were sophisticated in their recognition of PTSD and bereavement. There was no significant increase in either group in these conditions, a fact that we have seen consistently among primary care providers.12 Pre-post intervention improvements were greater in the sub-diagnostic distress and the ASD cases with the VP group experiencing a larger benefit, though not significantly so. This may also reflect the structured customized feedback in the VP application that included significant didactic information and reinforced the key diagnostic and screening criteria. The VP application provided every learner with the same high-quality feedback from our clinical experts.

One additional benefit that the VP application has over traditional SP cases is the ease with which we can integrate changing medical evidence into customized feedback for the learner. We embed PubMed22 links to current literature on screening and diagnostic techniques as well as literature addressing general communication and interviewing skills. Using our application we can easily update which literature is included and the changes are instantaneously reflected in the patient simulations without having to reprint curricular material or re-train faculty preceptors.

Our evaluation was limited by the amount of quantitative and qualitative data we could collect from the live SP sessions. This limitation highlights an additional benefit of the VP, in that data collection for evaluation, feedback, and assessment is easier and more efficient but not as qualitatively rich. Our VP system can perform a much more accurate and objective evaluation of the content of the interview; however, there are many aspects such as nonverbal communication, eye contact, and the use of proper language and jargon that are routinely captured during live SP interactions but missed by this approach. The ultimate goal is to assess the impact on clinical skills with actual patients, which we could not perform in the setting of this workshop.

Our study was also limited by relatively small numbers of participants, reducing our ability to detect changes across the 2 groups and to formally evaluate changes in performance as learners progressed from VP to SP cases. Two of the cases, PTSD and bereavement, had very high baseline performance with little change postintervention, demonstrating significant “case-effect” and reducing the sensitivity of our assessments of changes in knowledge and diagnostic ability. The majority of participants were MDs and RNs; however, others had little experience with building differential diagnoses and evaluating clinical vignettes, potentially limiting their performance, though these participants were equally represented in both study groups.

Future directions for this project include the integration of natural language processing to allow users to perform interviews of VPs by typing free-text questions rather than selecting them from a list. We anticipate that this type of interactivity will create a much more natural and realistic patient interview. This enhancement will introduce more elements of narrative design and would theoretically improve the effectiveness of the application to teach communication skills.

Even as rapidly improving technology allows for more realistic VPs, and evidence suggests their educational effectiveness, the role of these applications in medical curricula remains unclear. Whether these applications should be used in place of live SP interactions or serve as a complement to them remains to be seen. Current evidence suggests that the use of VPs as a component of escalating simulations from computer-based to SP to actual clinical encounters may be the most appropriate and effective strategy.7,21

Conclusion

With respect to subjective experience of the workshop, encounters with VPs had an impact on learners equivalent to that of live SP cases. Objective measures of performance, knowledge, and diagnostic abilities were equivalent between live and virtual standardized patients, and the VP may be superior in certain specific applications.

Acknowledgments

This work is supported under a National Health Care Workforce Development Initiative of the Centers for Disease Control and Prevention through the Association of American Medical Colleges, grant number U36/CCU319276-02-3, AAMC ID number MM-0344-03/03.

REFERENCES

- 1. Anderson MB, Kassebaum DG. Proceedings of the AAMC's consensus conference on the use of standardized patients in the teaching and evaluation of clinical skills. Acad Med. 1993;68:437–483.

- 2. Harden RM, Gleeson FA. Assessment of clinical competence using an objective structured clinical examination (OSCE). Med Educ. 1979;13:41–54.

- 3. Sachdeva AK, Wolfson PJ, Blair PG, et al. Impact of a standardized patient intervention to teach breast and abdominal examination skills to third-year medical students at two institutions. Am J Surg. 1997;173:320–325. doi: 10.1016/S0002-9610(96)00391-1.

- 4. Roche AM, Stubbs JM, Sanson-Fisher RW, et al. A controlled trial of educational strategies to teach medical students brief intervention skills for alcohol problems. Prev Med. 1997;26:78–85. doi: 10.1006/pmed.1996.9990.

- 5. McGraw RC, O'Connor HM. Standardized patients in the early acquisition of clinical skills. Med Educ. 1999;33:572–578. doi: 10.1046/j.1365-2923.1999.00381.x.

- 6. Hubal RC, Kizakevich PN, Guinn CI, Merino KD, West SL. The virtual standardized patient. Simulated patient-practitioner dialog for patient interview training. Stud Health Technol Inform. 2000;70:133–138.

- 7. Fleetwood J, Vaught W, Feldman D, Gracely E, Kassutto Z, Novack D. MedEthEx online: a computer-based learning program in medical ethics and communication skills. Teach Learn Med. 2000;12:96–104. doi: 10.1207/S15328015TLM1202_7.

- 8. Stevens A, Hernandez J, Johnsen K, et al. The Use of Virtual Patients to Teach Medical Students Communication Skills. The 2005 Association for Surgical Education Annual Meeting; 2005 April 7–10; New York, NY.

- 9. McGee JB, Neill J, Goldman L, Casey E. Using multimedia virtual patients to enhance the clinical curriculum for medical students. Medinfo. 1998;9(Part 2):732–735.

- 10. Greenberg R, Loyd G, Wesley G. Integrated simulation experiences to enhance clinical education. Med Educ. 2002;36:1109–1110. doi: 10.1046/j.1365-2923.2002.136131.x.

- 11. Lipkin M, Kalet A, Zabar S, Triola M, Freeman R. Psychosocial Aspects of Bio-terrorism: Preparing Physicians Utilizing an Evidence-Based, Experiential Model and Multi-Modal Evaluation. The 114th Association of American Medical Colleges Annual Meeting; 2003 November 7–12; Washington, DC.

- 12. Lipkin M, Cope D, Eisenman D, et al. Psychosocial aspects of bioterrorism: preparing Primary Care. The 26th Annual Meeting of the Society of General Internal Medicine; April 30–May 3; Vancouver, Canada.

- 13. Zabar S, Kachur E, Kalet A, et al. Practicing Bioterrorism-Related Communication Skills with Standardized Patients (SPs). American Academy on Physician and Patient 2003 Teaching & Research Forum: Emerging Trends in Health Communication; 2003 October 9–12; Baltimore, Maryland.

- 14. Ewing JA. Detecting alcoholism. The CAGE questionnaire. J Am Med Assoc. 1984;252:1905–1907. doi: 10.1001/jama.252.14.1905.

- 15. Triola M, Feldman H, Kachur E, Holloway W, Friedman B. A novel feedback system for virtual patient interactions. In: Shortliffe E, editor. MEDINFO 2004. Proceedings of the 11th World Congress on Medical Informatics; 2004; San Francisco, CA. Amsterdam: IOS Press; 2004:1886.

- 16. Yedidia MJ, Gillespie CC, Kachur E, et al. Effects of communications training on medical student performance. JAMA. 2003;290:1157–1165. doi: 10.1001/jama.290.9.1157.

- 17. Kurtz SM, Silverman JD. The Calgary-Cambridge referenced observation guides: an aid to defining the curriculum and organizing the teaching in communication training programmes. Med Educ. 1996;30:83–89. doi: 10.1111/j.1365-2923.1996.tb00724.x.

- 18. Callahan EJ, Bertakis KD. Development and validation of the Davis observation code. Fam Med. 1991;23:19–24.

- 19. Cox J, Mulholland H. An instrument for assessment of videotapes of general practitioners' performance. BMJ. 1993;306:1043–1046. doi: 10.1136/bmj.306.6884.1043.

- 20. Bearman M. Is virtual the same as real? Medical students' experiences of a virtual patient. Acad Med. 2003;78:538–545. doi: 10.1097/00001888-200305000-00021.

- 21. Bearman M, Cesnik B, Liddell M. Random comparison of ‘virtual patient’ models in the context of teaching clinical communication skills. Med Educ. 2001;35:824–832. doi: 10.1046/j.1365-2923.2001.00999.x.

- 22. PubMed. National Library of Medicine. [April 20, 2005]. Available at http://www.pubmed.org.