Abstract

Background

The Cochrane Highly Sensitive Search Strategy (HSSS), which contains three phases, is widely used to identify Randomized Controlled Trials (RCTs) in MEDLINE. Lefebvre and Clarke suggest that reviewers might consider using four revisions of the HSSS. The objective of this study is to validate these four revisions: combining the free text terms volunteer, crossover, versus, and the Medical Subject Heading CROSS-OVER STUDIES with the top two phases of the HSSS, respectively.

Methods

We replicated the subject search for 61 Cochrane reviews. The included studies of each review that were indexed in MEDLINE were pooled together by review and then combined with the subject search and each of the four proposed search strategies, the top two phases of the HSSS, and all three phases of the HSSS. These retrievals were used to calculate the sensitivity and precision of each of the six search strategies for each review.

Results

Across the 61 reviews, the search term versus combined with the top two phases of the HSSS was able to find 3 more included studies than the top two phases of the HSSS alone, or in combination with any of the other proposed search terms, but at the expense of missing 56 relevant articles that would be found if all three phases of the HSSS were used. The estimated time needed to finish a review is 1086 hours for all three phases of the HSSS, 823 hours for the strategy versus, 818 hours for the first two phases of the HSSS or any of the other three proposed strategies.

Conclusion

This study shows that compared to the first two phases of the HSSS, adding the term versus to the top two phases of the HSSS balances the sensitivity and precision in the reviews studied here to some extent but the differences are very small. It is well known that missing relevant studies may result in bias in systematic reviews. Reviewers need to weigh the trade-offs when selecting the search strategies for identifying RCTs in MEDLINE.

Background

The new century has seen a significant proliferation of systematic reviews, and they have become one of the key tools for the evidence-based medicine movement. A quality systematic review involves a comprehensive search for relevant studies on a specific topic, and those identified are then assessed and synthesized according to a predetermined and explicit method [1]. Although the studies evaluated in systematic reviews can be any kind of research [2], reviewers of the effects of health care interventions tend to base their reviews on Randomized Controlled Trials (RCTs), when possible, as they are one of the most rigorous study designs [3]. Comprehensive searching, when conducting systematic reviews of RCTs, is considered a standard practice [4]. It has long been assumed that information specialists should use highly sensitive search strategies to identify potentially relevant primary studies for systematic reviews. Sensitivity and precision are two parameters used to evaluate the performance of a search strategy. Sensitivity is defined as the proportion of relevant studies retrieved, while precision is the proportion of retrieved studies that are relevant. An ideal search strategy would have both high sensitivity and high precision, which means most of the available relevant items in a database are retrieved by the search strategy and most of the items retrieved by the search strategy are relevant. However, sensitivity and precision are inversely related: the higher the sensitivity, the lower the precision [5]. This means that when a highly sensitive search strategy is used, many irrelevant studies are retrieved, thus increasing the workload for the researchers conducting the systematic review. In practice, reviewers, restricted by time and cost, must strive to identify the maximum number of eligible trials, hoping that the studies included in the review will be a representative sample of all eligible trials [6]. The overall time and cost of doing a systematic review depend on the size of the initial bibliographic retrieval [7]. Thus, fine-tuning this initial step in the review process can yield great efficiencies.

The MEDLINE database, created and maintained by the United States National Library of Medicine, is the most widely used database in medicine and other health science fields. It includes 15 million citations dating back to the mid-1960s [8]. The Highly Sensitive Search Strategy (HSSS) [9] is a standard search strategy recommended by the Cochrane Collaboration to identify RCTs in the MEDLINE database. It was developed in the early 1990s and contains three phases (See Additional file 1). While agreeing that the top two phases of the HSSS should always be used to identify RCTs in MEDLINE, a pilot study by the U.K. Cochrane Centre in 1994 concluded that the terms of the third phase were too broad to warrant their inclusion in the MEDLINE Retagging Project [10,11]. Another study found that the search for RCTs on hypertension was sufficiently sensitive only when all three phases of the search strategy were used [12]. As Lefebvre and Clarke [11] suggest, it would not be worth applying all three phases, but individual reviewers might consider it worth combining the top two phases with individual terms, such as the free-text terms volunteer, crossover and versus, and the Medical Subject Heading (MeSH) CROSS-OVER STUDIES, which was introduced after the search strategy was devised.

A few recent studies [13,14] have explored different search strategies to identify RCTs in MEDLINE. Haynes and colleagues [13] developed separate strategies for different purposes: strategies with high sensitivity for comprehensive searching and strategies with high precision for more focused searching. Robinson and Dickersin [14] tested a revised search strategy of all three phases of the HSSS for OVID MEDLINE and PubMed. This strategy has a better performance than the original HSSS. Because an increasing number of systematic reviews have to be completed within tight budgets and timelines, it is sometimes necessary to strike a balance between comprehensiveness and precision. Several proposals to refine that balance by making minor modifications to the first two phases of the HSSS were put forth by Lefebvre and Clarke in 2001 [11]. A comprehensive literature search reveals that there are no published data that evaluate the performances of the four search strategies proposed. Because balancing the initial retrieval size greatly improves the efficiency of a systematic review, we tested the performances of these four proposed revisions of the HSSS: combining the top two phases of HSSS with the free-text terms volunteer, crossover, versus, and the MeSH term CROSS-OVER STUDIES, respectively [11].

Methods

Selection of systematic reviews

Systematic reviews, which might have used the HSSS to identify RCTs, were selected from the Cochrane Database of Systematic Reviews (CDSR), OVID interface (1st Quarter 2003) using the following search strategy:

(hsss.tw.) or (highly sensitive search.tw.)

These systematic reviews were then screened using three eligibility criteria. To be selected, each systematic review had to use at least one phase of the HSSS, report the citations for included and excluded studies, and indicate if primary studies were either RCTs or quasi RCTs.

Finding the index status of each included study

We did a known-item search for the included studies of each systematic review that met the three inclusion criteria in OVID MEDLINE (1966 – 2003) to determine whether they were indexed in MEDLINE or not. The bibliographic records of the included studies that were indexed in MEDLINE were aggregated together by review using the Boolean operator "or". Each known-item search strategy was saved in OVID MEDLINE. We recorded the number of included studies that were indexed in MEDLINE for each systematic review. This was used to calculate sensitivity for each review. One sample of the known-item search strategy is listed in Additional file 2.

Test search strategies

Each of the four candidate terms (the free-text terms volunteer, crossover, and versus, and the MeSH term CROSS-OVER STUDIES) was combined with the first two phases of the HSSS using the Boolean operator "or" to create four test strategies (See Additional file 1). These search strategies are hereafter abbreviated as SSvolunteer, SScrossover, SSversus, and SSCROSS-OVERSTUDIES, respectively.

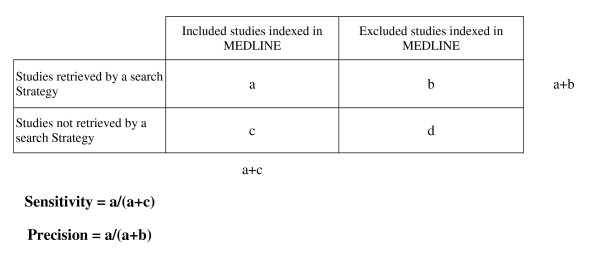

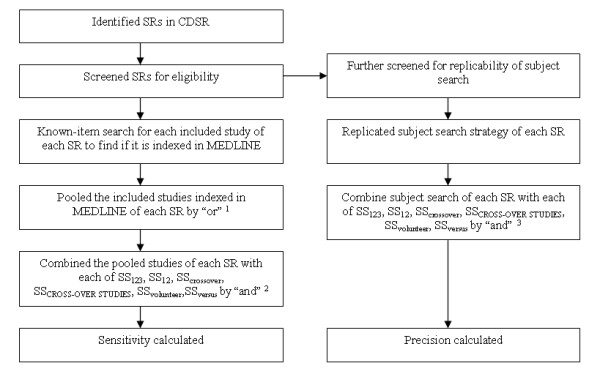

Sensitivity

For each of the systematic reviews that met the three inclusion criteria, we combined the pooled included studies indexed in MEDLINE and each of the four test search strategies, all three phases of the HSSS (hereafter abbreviated as SS123), and the top two phases of the HSSS (hereafter abbreviated as SS12) by using Boolean operator "and" in OVID MEDLINE (1966-February 2004) to find out how many included studies indexed in MEDLINE were retrieved by each search strategy (See Figure 1). We recorded the numbers of included trials indexed in MEDLINE that could be retrieved by each of SS123, SS12, SScrossover, SSCROSS-OVERSTUDIES, SSvolunteer, and SSversus. Based on these data, the sensitivity of each strategy for each review was calculated (See Figure 2). More specifically, the sensitivity for each review is defined as:

Figure 1.

Steps of how sensitivity and precision were calculated.

Note 1. The number of the pooled studies of each SR is the denominator of the sensitivity for each SR.

Note 2. The number of items retrieved in this step is the numerator of the sensitivity and the precision for each SR.

Note 3. The number of items retrieved in this step is the initial search output (articles needed to screen) of a SR, which is also the denominator of the precision.

SR = systematic review

Figure 2.

Formula for calculating sensitivity and precision.

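$$\text{Sensitivity} = \frac{\text{number of included studies indexed in MEDLINE that were retrieved by the search strategy}}{\text{number of included studies indexed in MEDLINE}} \times 100\%$$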

Precision

Each systematic review that met the three eligibility criteria was further screened to determine if the subject search was presented in enough detail to permit replication. For the reviews with a detailed description of the subject search, we replicated the search strategy of the specific topic (e.g., search strategy to find "hormone"-related studies). We assumed that the subject search presented in each review was a comprehensive search for identifying the subject-related studies. The subject search strategy was combined with each of SS123, SS12, SScrossover, SSCROSS-OVERSTUDIES, SSvolunteer, and SSversus using the Boolean operator "and" (See Additional file 1). If the subject search presented in a Cochrane review was conducted in a MEDLINE interface other than OVID (e.g., SilverPlatter), the search was converted into OVID syntax. This gave us the size of the initial search retrieval. We recorded the size of the initial retrieval for each review, which was used to calculate the precision (See Figure 1). Based on these data, the precision of each search strategy was calculated for each review (See Figure 2). The precision of each search strategy for each review is defined as:

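$$\text{Precision} = \frac{\text{number of included studies indexed in MEDLINE that were retrieved by the search strategy}}{\text{number of items retrieved by the subject search combined with the search strategy}} \times 100\%$$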
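As a minimal sketch of the two definitions, the set operations below mirror the Boolean combinations described in Notes 1 to 3 of Figure 1. The record identifiers, the three sets, and the function names are invented for illustration and do not come from any review in this study.

```python
# Hypothetical illustration of the sensitivity and precision definitions.
# All record IDs below are made up; they are not data from the study.


def sensitivity(included_indexed, filter_hits):
    """Percentage of MEDLINE-indexed included studies retrieved by the filter."""
    retrieved_included = included_indexed & filter_hits  # pooled studies AND filter
    return 100.0 * len(retrieved_included) / len(included_indexed)


def precision(included_indexed, filter_hits, subject_hits):
    """Percentage of the initial retrieval made up of retrieved included studies."""
    retrieved_included = included_indexed & filter_hits  # same numerator as sensitivity
    initial_retrieval = subject_hits & filter_hits       # subject search AND filter
    return 100.0 * len(retrieved_included) / len(initial_retrieval)


# Toy sets standing in for OVID result sets (assumed values).
included_indexed = {101, 102, 103, 104}
filter_hits = {101, 102, 103} | set(range(200, 290))
subject_hits = {101, 102, 103, 104} | set(range(200, 247))

print(sensitivity(included_indexed, filter_hits))
print(precision(included_indexed, filter_hits, subject_hits))
```

With these made-up sets, three of the four included studies are retrieved (sensitivity 75%), and the initial retrieval holds 50 records, of which 3 are included studies (precision 6%).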

Results

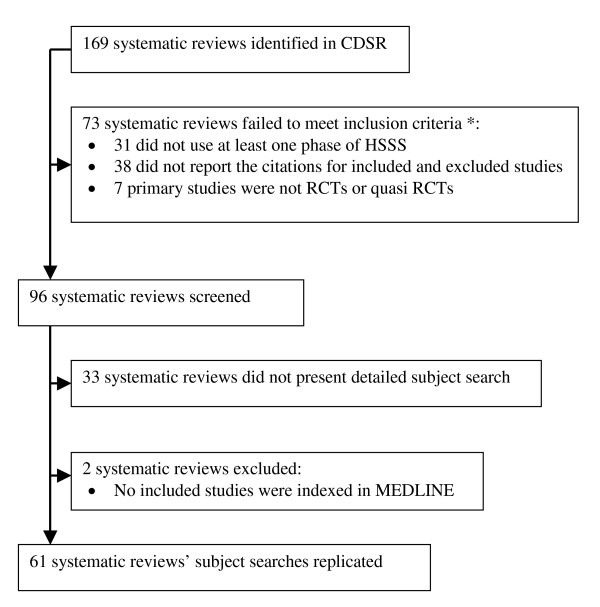

We identified 169 systematic reviews from CDSR, which represented about 10% of the reviews published in the database in 2003. Of the 169 systematic reviews, 96 met the three inclusion criteria. Of these 96 reviews, 61 (63.54%) presented a subject search detailed enough for us to replicate it (See Additional file 3); 33 (34.38%) did not list detailed subject search strategies; and in 2 (2.08%), none of the included studies was indexed in MEDLINE (See Figure 3). The median number of included studies per review is 12. The 61 reviews were done by different Review Groups, mainly Musculoskeletal Injuries, Eyes and Vision, Renal, Prostatic Diseases and Urologic Cancers, Back, Upper Gastrointestinal and Pancreatic Diseases, and Skin. The review group that listed the largest number of detailed search strategies is the Musculoskeletal Injuries Review Group (13 reviews, 21.3%). Characteristics of included studies are presented in Table 1.

Figure 3.

Flow of systematic reviews through the phases of screening and eligibility evaluation. * For some reviews, more than one exclusion criterion was noted; therefore, numbers do not add up to 73.

Table 1.

Characteristics of included studies.

| Characteristic | n (total = 61) | % |
|---|---|---|
| Year of publication or substantive update | | |
| Median | 2001 | |
| Interquartile range | 1999–2001 | |
| Focus of the review | | |
| Treatment | 52 | 85.2 |
| Prevention | 7 | 11.5 |
| Diagnosis | 1 | 1.6 |
| Other | 1 | 1.6 |
| Study designs included | | |
| RCT only | 33 | 54.1 |
| RCT and quasiRCT | 24 | 39.3 |
| RCT and other controlled trials | 4 | 6.6 |
| Number of included studies per review | | |
| Median | 12 | |
| Interquartile range | 5.5–19.5 | |
| Review Group | | |
| Musculoskeletal Injuries | 13 | 21.3 |
| Eyes and Vision | 11 | 18.0 |
| Renal | 6 | 9.8 |
| Prostatic Diseases and Urologic Cancers | 5 | 8.2 |
| Back | 4 | 6.6 |
| Upper Gastrointestinal and Pancreatic Diseases | 3 | 4.9 |
| Skin | 3 | 4.9 |
| Other | 16 | 26.2 |

We were able to calculate the sensitivity for 94 reviews. The overall sensitivity of the four proposed search strategies, SS12, and SS123 is very high, with the same median of 100%. For SScrossover, SSCROSS-OVERSTUDIES, SSvolunteer, SSversus, and SS12, 52% of the reviews achieved a perfect sensitivity (100%). For SS123, 70% of the reviews achieved a perfect sensitivity. A closer examination of the data found that, across the 94 reviews, SSversus was able to find 3 more relevant articles than SScrossover, SSCROSS-OVERSTUDIES, SSvolunteer, or SS12, but SS123 found 56 more relevant articles than SSversus. There is no obvious difference between the sensitivities of the 61 reviews that listed their detailed subject-specific search strategies and those of the 33 reviews that did not (the medians of the sensitivities for each search strategy for both categories are all 100%). The sensitivities of the four test search strategies, SS12, and SS123 are presented in Table 2.

Table 2.

Sensitivity of each search strategy.

| Search strategy | Median (%) | Interquartile range (%) | Mean rank (Friedman test) | Reviews with perfect sensitivity (%) | Relevant items missed (across 94 reviews) |
|---|---|---|---|---|---|
| SS123 | 100.00 | 4.17 | 4.24 | 70.21 | 49 |
| SS12 | 100.00 | 12.15 | 3.34 | 52 | 108 |
| SScrossover | 100.00 | 12.15 | 3.34 | 52 | 108 |
| SSCROSS-OVERSTUDIES | 100.00 | 12.15 | 3.34 | 52 | 108 |
| SSvolunteer | 100.00 | 12.15 | 3.34 | 52 | 108 |
| SSversus | 100.00 | 12.15 | 3.41 | 52 | 105 |

χ² = 131.667, p < .01

We were able to calculate the precision for the 61 reviews that presented a detailed subject search. The precision of the six search strategies can be found in Table 3. The precision of the four proposed search strategies and the top two phases of the HSSS (medians ranging from 1.48% to 1.68%) was much higher than that of all three phases of the HSSS (median 0.54%). The size of the initial retrieval of each search strategy is shown in Table 4. The median initial retrieval per review ranges from 408 to 430 studies for the four proposed search strategies and SS12, and is 1636 studies for SS123. The median initial retrieval of SSversus is about 1/4 of that of SS123, which means the number of articles that reviewers need to read would be reduced considerably if SSversus instead of SS123 were used to identify RCTs. When SS123 was used, 36 reviews (59.02%) had a very low precision (less than 1%); when SS12, SScrossover, SSCROSS-OVERSTUDIES, or SSvolunteer was used, 21 reviews (34.43%) had a precision less than 1%; when SSversus was used, 22 reviews (36.07%) had a precision less than 1%. Table 4 also shows the Article Read Ratio (ARR), which is defined as the median of articles initially retrieved divided by the median of included studies retrieved. The ARR of SS123 (182) is much higher than that of SSversus (54), and the ARRs of SS12, SScrossover, SSCROSS-OVERSTUDIES, and SSvolunteer are the same (51). We calculated the estimated time to finish a review for each search strategy based on the regression equation developed by Allen and Olkin [7]: time = 721 + 0.243x − 0.0000123x², where x denotes the number of articles initially retrieved, as shown in Table 4. The estimated time needed to finish a review is 1086 hours for SS123, 823 hours for SSversus, and 818 hours for SS12, SScrossover, SSCROSS-OVERSTUDIES, or SSvolunteer.

Table 3.

Precision of each search strategy.

| Search strategy | Median (%) | Interquartile range (%) | Mean rank (Friedman test) | Reviews with precision < 1% (%) | Total retrieval size (across 61 reviews) |
|---|---|---|---|---|---|
| SS123 | 0.54 | 1.87 | 1.02 | 59.02 | 508625 |
| SS12 | 1.68 | 5.55 | 5.17 | 34.43 | 151691 |
| SScrossover | 1.68 | 5.54 | 4.24 | 34.43 | 152662 |
| SSCROSS-OVERSTUDIES | 1.68 | 5.55 | 4.77 | 34.43 | 151844 |
| SSvolunteer | 1.68 | 5.51 | 3.52 | 34.43 | 152880 |
| SSversus | 1.48 | 5.04 | 2.29 | 36.07 | 171032 |

χ² = 258.634, p < .01
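Tables 2 and 3 report mean ranks and a χ² statistic from the Friedman test. The sketch below shows how such mean ranks arise, using the textbook Friedman statistic without a correction for ties. The paper does not state which software or tie handling was used, so the reported values may have been computed differently. The three strategies and their precision values are hypothetical.

```python
# Sketch of Friedman mean ranks and the uncorrected Friedman statistic.
# The input rows are hypothetical precision values (%), one row per review
# and one column per search strategy; they are not data from the study.


def average_ranks(values):
    """Rank values ascending (1 = smallest); tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the value at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def friedman(rows):
    """Return (mean rank per column, Friedman statistic without tie correction)."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    for row in rows:
        for col, rank in enumerate(average_ranks(row)):
            rank_sums[col] += rank
    mean_ranks = [s / n for s in rank_sums]
    statistic = 12.0 / (n * k * (k + 1)) * sum(s * s for s in rank_sums) - 3.0 * n * (k + 1)
    return mean_ranks, statistic


toy_rows = [
    [0.5, 1.7, 1.5],  # review 1: strategies A, B, C
    [0.4, 1.2, 1.0],  # review 2
    [0.9, 2.5, 2.0],  # review 3
]
mean_ranks, statistic = friedman(toy_rows)
print(mean_ranks, statistic)
```

In this toy input, strategy A always has the lowest precision and strategy B the highest, so their mean ranks are 1 and 3. A strategy whose precision is consistently lowest therefore ends up with a mean rank close to 1, which is the pattern SS123 shows in Table 3.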

Table 4.

Size of initial retrieval per review.

| Search strategy | Initially retrieved articles, median (interquartile range) | Included studies retrieved, median (interquartile range) | Article Read Ratio (Note 1) | Estimated time to finish a review, hours (Note 2) |
|---|---|---|---|---|
| SS123 | 1636 (4042) | 9 (14) | 182 | 1086 |
| SS12 | 408 (1210) | 8 (12) | 51 | 818 |
| SScrossover | 408 (1216) | 8 (12) | 51 | 818 |
| SSCROSS-OVERSTUDIES | 408 (1211) | 8 (12) | 51 | 818 |
| SSvolunteer | 408 (1235) | 8 (12) | 51 | 818 |
| SSversus | 430 (1361) | 8 (12) | 54 | 823 |

Note 1. Article Read Ratio = Median of Initial Retrieved Articles/Median of Included Studies Retrieved

Note 2. Estimated time to finish a review = 721 + 0.243x − 0.0000123x², where x denotes the median size of the initial retrieval shown in Column 2 of this table.
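The two derived columns of Table 4 can be reproduced from the medians in the table. The following is a small sketch under the definitions in Notes 1 and 2; the function names are illustrative and not taken from the paper.

```python
# Reproduce the Article Read Ratio and estimated-time columns of Table 4
# from the reported medians. Function names are illustrative only.


def article_read_ratio(median_initial, median_included):
    """Median articles initially retrieved per median included study retrieved."""
    return median_initial / median_included


def estimated_hours(x):
    """Regression of Allen and Olkin [7]; x = number of articles initially retrieved."""
    return 721 + 0.243 * x - 0.0000123 * x ** 2


# (median initial retrieval, median included studies retrieved) from Table 4
table4_medians = {
    "SS123": (1636, 9),
    "SS12": (408, 8),
    "SSversus": (430, 8),
}

for name, (initial, included) in table4_medians.items():
    arr = round(article_read_ratio(initial, included))
    hours = round(estimated_hours(initial))
    print(f"{name}: ARR = {arr}, estimated time = {hours} hours")
```

Rounded to whole numbers, the results agree with the table: 182 and 1086 hours for SS123, 51 and 818 hours for SS12, and 54 and 823 hours for SSversus.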

Discussion

Searching bibliographic databases to identify relevant studies is one of the most important steps of a systematic review [6]. All the systematic reviews identified in this research searched MEDLINE; therefore, developing an effective MEDLINE search strategy is an integral component of a comprehensive search plan [14]. We validated four previously proposed variants of the HSSS by testing their retrieval characteristics. Across the 61 reviews, the performance of SScrossover, SSCROSS-OVERSTUDIES, and SSvolunteer is the same as that of SS12. SS123 found 56 more relevant articles than SSversus, and SSversus found 3 more relevant articles than SS12, SScrossover, SSCROSS-OVERSTUDIES, or SSvolunteer. The number of articles to be read per review when SSversus is used is about 1/4 of that when SS123 is used, and the estimated time to finish a review with SS123 is 32% higher than with SSversus. On the other hand, the number of articles to be read when SSversus is used is only about 5% (22 additional articles) more than when SS12 is used, and the estimated time to finish a review with SSversus is 0.6% (5 hours) more than with SS12. These results show that, compared to SS123, SSversus substantially reduces the number of articles to be read, and thus the reviewers' work in assessing citations for eligibility and the total time to complete a review, while keeping the workload comparable to that of SS12 and offering slightly better sensitivity. Although the other three proposed search strategies also have a smaller initial retrieval than SS123, their sensitivity is the same as that of SS12.
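The relative differences quoted in this paragraph can be checked against the medians and estimated times in Table 4. The arithmetic is sketched below, with all inputs taken from that table:

$$
\begin{aligned}
\frac{430}{1636} &\approx 0.26 && \text{(SSversus initial retrieval relative to SS123, about 1/4)} \\
\frac{1086 - 823}{823} &\approx 0.32 && \text{(extra time for SS123 over SSversus)} \\
\frac{430 - 408}{408} &\approx 0.054 && \text{(extra articles for SSversus over SS12)} \\
\frac{823 - 818}{818} &\approx 0.006 && \text{(extra time for SSversus over SS12)}
\end{aligned}
$$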

A comprehensive search is considered one of the key factors that distinguish a systematic review from a narrative review, and it is well known that missing relevant studies may result in bias in systematic reviews. This study confirms that SS123 will miss fewer relevant studies than SS12 and the four variants recommended by Lefebvre and Clarke, including SSversus. Because timelines and financial costs are a concern for most reviewers, they must decide whether these benefits justify the extra cost of much broader screening of the initial retrieval and the longer time needed to complete a review.

The comprehensiveness of systematic review searches depends not only on search filters but also on the variety of databases searched. The Cochrane Central Register of Controlled Trials (CENTRAL) is the most comprehensive database of controlled trials. It is hoped that CENTRAL can serve as an all-inclusive source of controlled trials. When searching this database, reviewers need only to develop subject search strategies, thus avoiding the problem of selecting search filters. Each Collaborative Review Group (CRG) also develops a subject-specialized register of trials to ensure that reviewers within the CRG have access to the maximum number of studies relevant to their topic. All the reviews screened in this study indicated that they searched CENTRAL and/or the specialized CRG registers. The Cochrane Collaboration has gone to great effort to enhance the comprehensiveness of CENTRAL. Therefore, if reviewers do decide to use the first two phases of the HSSS and plan to search CENTRAL and/or the specialized CRG registers as well, they may consider using SSversus because it keeps the workload comparable to that of SS12 with slightly better sensitivity. If reviewers do not have access to CENTRAL or the specialized registers, we suggest that they still use all three phases of the HSSS to maintain the quality of systematic reviews.

Since the first publication of the Highly Sensitive Search Strategy [9], few studies have examined the performance of the HSSS and its variations in general. Robinson and Dickersin [14], in their study testing a revision of the HSSS, concluded that adding the search terms "(latin adj square).tw." and "CROSS-OVER STUDIES" in all three phases of the HSSS would retrieve more controlled trials. Haynes and colleagues [13] recently developed a search strategy to identify RCTs in MEDLINE that has a sensitivity of 99.3%. Across the medical information science field, although an increasing number of studies [15-22] have tested various search strategies to find RCTs in subject-specific areas, all of them tested the results at the RCT level. That is, their focus was determining how many studies identified by a search strategy were actually RCTs. Our study applied a different approach to test the performance of the search strategies. Because we tested how many studies included in a systematic review could be found through the four test strategies and the HSSS, our study is the first to calculate the sensitivity and the precision of the search strategies at the systematic review level. Jenkins [23] states that the ultimate test of a search filter is to find out how well it performs in a real situation. We replicated the search strategies used in real systematic reviews; therefore, our method and results may have more practical significance for those conducting systematic reviews because they provide quantified data, including initial retrieval size and time needed to finish a review, to describe the cost-effectiveness of each search strategy. Systematic reviews should use evidence-based methods, and the validation of search filters is important in that context. Our contribution is to provide methodological rigor to previously non-validated recommendations. Thus, reviewers can make informed decisions based on this evidence.

The strength of a systematic review over any other kind of review is that it provides readers with an approach to replicate it [1]. The Quality of Reporting of Meta-analyses (QUOROM) statement suggests that a high-quality systematic review should explicitly describe all search strategies used to identify relevant studies [24]. The Cochrane Collaboration first recommended that reviewers report the full search strategies in the additional tables section of the Cochrane systematic review reports in 2002 [25], and the recent Cochrane Handbook for Systematic Reviews of Interventions clearly indicates that reviewers should describe their search strategies in sufficient detail so that the process could be replicated [3]. When screening the 96 Cochrane reviews, we found that 33 (34.38%) reviews did not describe their detailed search strategies, thus we could not replicate their search strategies. Of the 33 reviews, although a few listed their search strategies, they were not accurate. Common problems were that non-MeSH terms were listed as MeSH terms, and truncation was not used correctly. The 33 reviews that did not list the full search strategies all referred to the specific CRG search strategies with a hyperlink to individual CRG websites. But when browsing these websites, we did not find the search strategies. In order to improve the quality of reporting of systematic reviews, investigators should report their exact search strategies to allow readers to judge the breadth and depth of the search. If journals publishing systematic reviews do not have space for authors to list their full search strategies, we suggest that authors indicate that the search strategy is available upon request. Further, we suggest that the group search strategies for each CRG be documented on their websites. 
Our results mirror the findings of a recent study that assessed the quality of reporting of systematic reviews published in both CDSR and journals in pediatric complementary and alternative medicine (CAM) [26]. The study found that half of the CAM reviews reported the search terms used, but that very few (8.5%) actually listed the search strings. Our research indicates that the reporting quality of literature searches for systematic reviews has been improving but is still less than optimal.

Our study has some limitations. First, we tested only how many included studies could be retrieved by each of the six search strategies, not whether the 56 relevant studies missed by SSversus would change the outcomes of the 61 reviews. Therefore, we cannot judge whether skipping Phase Three of the HSSS would result in bias in systematic reviews. Second, an effective search strategy for systematic reviews usually includes two parts: a subject search and a search filter, both of which are integral to the search strategy. In our study, we replicated the subject search for each systematic review, but the quality of these subject searches is unknown. It is possible that additional relevant studies would have been retrieved if the subject search had been more comprehensive. Therefore, the sensitivity of the test search strategies could be lower, and the precision higher, than those we presented if the subject search were truly comprehensive. More studies should be done on developing high-quality subject searches, which calls for cooperation between medical subject experts and information specialists. In addition, there are limitations in using sensitivity and precision to evaluate the performance of information retrieval, which were discussed by Kagolovsky and Moehr [27].

Conclusion

Since MEDLINE is the most widely used database in searching for evidence for systematic reviews, formulating an optimal search strategy to find RCTs in MEDLINE will greatly increase the efficiency of systematic reviews. Our study demonstrates that, of the variants of the top two phases of the HSSS proposed by Lefebvre and Clarke, adding the free-text word versus provides a modest balance of precision and sensitivity in the reviews studied here. We hope that this finding will become part of the evidence used by systematic reviewers and information specialists in making decisions on developing their search strategies for systematic reviews.

Competing interests

The author(s) declare that they have no competing interests.

Authors' contributions

LZ initially conceived of, designed, and executed the study, and wrote and revised the entire manuscript draft. IA reviewed the study design and results, and participated in the revision of the manuscript. MS participated in the study design, data extraction, drafting and revision of the manuscript. All authors read and approved the final manuscript.

| Appendix 1: Search strategies used | |

| Phase 1 | |

| RANDOMIZED CONTROLLED TRIAL.pt. | |

| CONTROLLED CLINICAL TRIAL.pt. | |

| RANDOMIZED CONTROLLED TRIALS.sh. | |

| RANDOM ALLOCATION.sh. | |

| DOUBLE BLIND METHOD.sh. | |

| SINGLE-BLIND METHOD.sh. | |

| or/1–6 | |

| (ANIMAL not HUMAN).sh. | |

| 7 not 8 | |

| Phase 2 | |

| RANDOMIZED CONTROLLED TRIAL.pt. | |

| CONTROLLED CLINICAL TRIAL.pt. | |

| RANDOMIZED CONTROLLED TRIALS.sh. | |

| RANDOM ALLOCATION.sh. | |

| DOUBLE BLIND METHOD.sh. | |

| SINGLE-BLIND METHOD.sh. | |

| or/1–6 | |

| (ANIMAL not HUMAN).sh. | |

| 18 or/10–17 | |

| 19 18 not 8 | |

| 20 19 not 9 | |

| Phase 3 | |

| 21 COMPARATIVE STUDY.sh. | |

| 22 exp EVALUATION STUDIES/ | |

| 23 FOLLOW UP STUDIES.sh. | |

| 24 PROSPECTIVE STUDIES.sh. | |

| 25 (control$ or prospectiv$ or volunteer$).ti, ab. | |

| 26 or/21–25 | |

| 27 26 not 8 | |

| 28 27 not (9 or 20) | |

| 29 9 or 20 or 28 | |

| Test Strategies | |

| All 3 Phases | Subject SS and (9 or 20 or 28) |

| Top 2 Phases | Subject SS and (9 or 20) |

| Crossover | Subject SS and (9 or 20 or (crossover.ti, ab. not (ANIMAL not HUMAN).sh.)) |

| CROSS-OVER STUDIES | Subject SS and (9 or 20 or (CROSS-OVER-STUDIES.sh. not (ANIMAL not HUMAN).sh.)) |

| Volunteer | Subject SS and (9 or 20 or (volunteer.ti, ab. not (ANIMAL not HUMAN).sh.)) |

| Versus | Subject SS and (9 or 20 or (versus.ti, ab. not (ANIMAL not HUMAN).sh.)) |

Subject SS is Subject search strategy, as presented in the review
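The "Test Strategies" rows above all follow one pattern: the subject search is combined by "and" with the union of the final sets of the HSSS phases (lines 9, 20, and 28) and, for the four proposed variants, one extra term restricted to exclude animal-only records. The sketch below assembles those rows as strings; the function name and parameters are hypothetical, and the field suffixes are copied as they appear in the table.

```python
# Sketch of how the "Test Strategies" rows are composed. Set numbers 9, 20
# and 28 refer to the final lines of Phases 1, 2 and 3 of the HSSS above.
# The function name and parameters are illustrative, not part of OVID.

ANIMAL_ONLY = "(ANIMAL not HUMAN).sh."


def build_strategy(extra_term=None, all_three_phases=False, subject="Subject SS"):
    """Return one OVID-style line combining the subject search with an RCT filter."""
    parts = ["9", "20"]
    if all_three_phases:
        parts.append("28")
    if extra_term is not None:
        # Each added term is restricted so that animal-only records are excluded.
        parts.append(f"({extra_term} not {ANIMAL_ONLY})")
    return f"{subject} and ({' or '.join(parts)})"


rows = [
    ("All 3 Phases", build_strategy(all_three_phases=True)),
    ("Top 2 Phases", build_strategy()),
    ("Crossover", build_strategy("crossover.ti, ab.")),
    ("CROSS-OVER STUDIES", build_strategy("CROSS-OVER-STUDIES.sh.")),
    ("Volunteer", build_strategy("volunteer.ti, ab.")),
    ("Versus", build_strategy("versus.ti, ab.")),
]
for label, line in rows:
    print(f"{label}: {line}")
```

Writing the rows this way makes it explicit that the four variants differ from the top two phases only by a single term joined with "or", which is why their retrieval can never be smaller than that of the top two phases alone.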

| This known item search strategy was to identify included studies in Review 24, as listed in Appendix 3. There were two included studies in this review. Numbers in brackets were the number of records found in OVID MEDLINE. | |

| 1 (Ekberg$ and Bjorkqvist$).au. and "1994" .yr. (3) | |

| 2 from 1 keep 1 (1) | Line 1 and Line 2 identified 1st included study |

| 3 (Jensen$ and Nygren$).au. and "1995" .yr. (1) | Line 3 identified 2nd included study |

| 4 or/2–3 (2) | Line 4 pooled the two included studies by "or" |

Supplementary Material

Highly Sensitive Search Strategy (HSSS)

This known item search strategy was to identify included studies in Review 24, as listed in Additional file 3. There were two included studies in this review. Numbers in brackets were the number of records found in OVID MEDLINE.

Acknowledgements

The data used in this study are partly based on a project, Finding Evidence to Inform the Conduct, Reporting, and Dissemination of Systematic Reviews, which was funded by Canadian Institutes of Health Research (CIHR grant MOP57880).

We thank Erin Watson and Raymond Daniel for proofreading the manuscript.

These data were presented as a poster at the 2004 Cochrane Colloquium.

Contributor Information

Li Zhang, Email: li.zhang@usask.ca.

Isola Ajiferuke, Email: iajiferu@uwo.ca.

Margaret Sampson, Email: msampson@cheo.on.ca.

References

1. Klassen TP, Jadad AR, Moher D. Guides for reading and interpreting systematic reviews: I. Getting started. Arch Pediatr Adolesc Med. 1998;152:700–704.

2. Rychetnik L, Hawe P, Waters E, Barratt A, Frommer M. A glossary for evidence based public health. J Epidemiol Community Health. 2004;58:538–545. doi: 10.1136/jech.2003.011585.

3. Higgins JPT, Green S, editors. Cochrane handbook for systematic reviews of interventions 4.2.5 [updated May 2005]. http://www.cochrane.org/resources/handbook/hbook.htm Accessed 31st August 2005.

4. Sampson M, Barrowman NJ, Moher D, Klassen TP, Pham B, Platt R, St John PD, Viola R, Raina P. Should meta-analysts search EMBASE in addition to MEDLINE? J Clin Epidemiol. 2003;56:943–955. doi: 10.1016/S0895-4356(03)00110-0.

5. Salton G, McGill MJ. Introduction to modern information retrieval. New York: McGraw-Hill Book Company; 1983.

6. Jadad AR, Moher D, Klassen TP. Guides for reading and interpreting systematic reviews: II. How did the authors find the studies and assess their quality? Arch Pediatr Adolesc Med. 1998;152:812–817. doi: 10.1001/archpedi.152.8.812.

7. Allen IE, Olkin I. Estimating time to conduct a meta-analysis from number of citations retrieved. JAMA. 1999;282:634–635. doi: 10.1001/jama.282.7.634.

8. National Library of Medicine. PubMed. 2004. http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?db=PubMed Accessed 14th December 2004.

9. Dickersin K, Scherer R, Lefebvre C. Identifying relevant studies for systematic reviews. BMJ. 1994;309:1286–1291. doi: 10.1136/bmj.309.6964.1286.

10. Boynton J, Glanville J, McDaid D, Lefebvre C. Identifying systematic reviews in MEDLINE: developing an objective approach to search strategy design. J Info Sci. 1998;24:137–154. doi: 10.1177/016555159802400301.

11. Lefebvre C, Clarke MJ. Identifying randomised trials. In: Egger M, Smith GD, Altman DG, editors. Systematic reviews in health care: meta-analysis in context. London: BMJ; 2001. pp. 69–86.

12. Brand M, Gonzalez J, Aguilar C. Identifying RCTs in MEDLINE by publication type and through the Cochrane Strategy: the case in hypertension [abstract]. Proceedings of the 6th International Cochrane Colloquium: Baltimore. 22–26 October 1998; poster B22.

13. Haynes RB, McKibbon KA, Wilczynski NL, Walter SD, Werre SR, Hedges Team. Optimal search strategies for retrieving scientifically strong studies of treatment from MEDLINE: analytical survey. BMJ. 2005;330:1179. doi: 10.1136/bmj.38446.498542.8F.

14. Robinson KA, Dickersin K. Development of a highly sensitive search strategy for the retrieval of reports of controlled trials using PubMed. Int J Epidemiol. 2002;31:150–153. doi: 10.1093/ije/31.1.150.

15. Adams CE, Power A, Frederick K, Lefebvre C. An investigation of the adequacy of MEDLINE searches for randomized controlled trials (RCTs) of the effects of mental health care. Psychol Med. 1994;24:741–748. doi: 10.1017/s0033291700027896.

16. Dumbrigue HB, Esquivel JF, Jones JS. Assessment of MEDLINE search strategies for randomized controlled trials in prosthodontics. J Prosthodont. 2000;9:8–13. doi: 10.1111/j.1532-849X.2000.00008.x.

17. Langham J, Thompson E, Rowan K. Identification of randomized controlled trials from the emergency medicine literature: comparison of hand searching versus MEDLINE searching. Ann Emerg Med. 1999;34:25–34. doi: 10.1016/S0196-0644(99)70268-4.

18. Marson AG, Chadwick DW. How easy are randomized controlled trials in epilepsy to find on MEDLINE? The sensitivity and precision of two MEDLINE searches. Epilepsia. 1996;37:377–380. doi: 10.1111/j.1528-1157.1996.tb00575.x.

19. Nwosu CR, Khan KS, Chien PF. A two-term MEDLINE search strategy for identifying randomized trials in obstetrics and gynecology. Obstet Gynecol. 1998;91:618–622. doi: 10.1016/S0029-7844(97)00703-5.

20. Park J, Niederman R. Estimating MEDLINE's identification of randomized control trials in pediatric dentistry. J Clin Pediatr Dent. 2002;26:395–399. doi: 10.17796/jcpd.26.4.h85147v25974j354.

21. Sjogren P, Halling A. MEDLINE search validity for randomised controlled trials in different areas of dental research. Br Dent J. 2002;192:97–99. doi: 10.1038/sj.bdj.4801303a.

22. Solomon MJ, Laxamana A, Devore L, McLeod RS. Randomized controlled trials in surgery. Surgery. 1994;115:707–712.

23. Jenkins M. Evaluation of methodological search filters – a review. Health Info Libr J. 2004;21:148–163. doi: 10.1111/j.1471-1842.2004.00511.x.

24. Moher D, Cook DJ, Eastwood S, Olkin I, Rennie D, Stroup DF. Improving the quality of reports of meta-analyses of randomised controlled trials: the QUOROM statement. Quality of Reporting of Meta-analyses. Lancet. 1999;354:1896–1900. doi: 10.1016/S0140-6736(99)04149-5.

25. RevMan Advisory Group, Cochrane Collaboration's Information Management System. Minutes of the RevMan Advisory Group meeting held at the UK Cochrane Centre on 14 November 2002. http://www.cc-ims.net/IMSG/RAG/Minutes/rag14-10-2002.htm Accessed 5th September 2005.

26. Moher D, Soeken K, Sampson M, Ben Porat L, Berman B. Assessing the quality of reports of systematic reviews in pediatric complementary and alternative medicine. BMC Pediatr. 2002;2:3. doi: 10.1186/1471-2431-2-3.

27. Kagolovsky Y, Moehr JR. Current status of the evaluation of information retrieval. J Med Syst. 2003;27:409–424. doi: 10.1023/A:1025603704680.
