Abstract

We designed, implemented, and evaluated a 4-week practice-based learning and improvement (PBLI) elective. Eleven internal medicine residents from 2 separate residency programs participated in the PBLI elective and 22 other residents comprised a comparison group. Residents in each group had similar pretest Quality Improvement Knowledge Application Tool scores, but after the PBLI elective, participant scores were significantly higher. Also, participants’ self-assessed ratings of PBLI skills increased after the rotation and remained elevated 6 months afterward. In this curriculum, residents completed a project to improve patient care and demonstrated greater knowledge on an evaluation tool than nonparticipants.

Keywords: practice-based learning and improvement, resident teaching, competencies, quality improvement

Residents work and learn in complex organizations but are not routinely taught how to evaluate, analyze, or improve the systems in which they play a vital role. Improving health care is a skill-based professional activity that requires a combination of theory and practice.1–5 Our experience has shown that limiting residents to an observational role in improvement activities produces the same frustrations that learners express when they observe a clinician-patient interaction but are not allowed to interact with patients themselves. Now that the Accreditation Council for Graduate Medical Education (ACGME) has added practice-based learning and improvement (PBLI) as one of its core competencies,6 it is imperative for residency programs to teach this material.

There are several models for learning PBLI in professional training.1,7–12 Most involve a combination of knowledge and experience-based strategies. Examples of successful resident involvement in PBLI include lowering the use and adverse effects of intravenous catheters,13 improving diabetes care,14 and improving a residency program itself.15 In these models the project work must be done over time,9,14 which can present a challenge for residents, given their work lives and schedules. Well-intentioned attempts to proceed without addressing this barrier can result in a sense of frustration over unmet commitments.

Case Western Reserve University (CWRU) and Dartmouth Medical School's Center for Evaluative Clinical Sciences each provide time-limited, hands-on PBLI experiences for graduate students, which allow students to contribute to an ongoing improvement effort.16 We believed a similar approach in residency training would provide a consistent, reproducible learning opportunity for residents about PBLI.

Curriculum Description

Residents in internal medicine and internal medicine combined programs (e.g., medicine-pediatrics or medicine-psychiatry) at MetroHealth Medical Center in Cleveland, OH (a major teaching affiliate of CWRU), and Dartmouth-Hitchcock Medical Center in Lebanon, NH were invited to participate in a 1-month PBLI elective during the 2001–2002 academic year. Nine individuals from MetroHealth and two from Dartmouth completed the PBLI elective. The elective lasted at least 4 weeks, and most participants devoted between 4 and 8 half-days per week to it; one resident instead dedicated 1 to 2 half-days per week over a 6-month period. All the residents maintained their continuity clinic and night call duties, and several also participated in ambulatory subspecialty clinics. No participants were on inpatient rotations.

The primary goal for each participant was to gain hands-on experience in PBLI. Although some residents used the rotation to fulfill departmental research requirements, the core experience differed from a research elective. PBLI focuses on examination and improvement of care delivered in a specific setting and context, and the goal of the residents’ work was to improve care and outcomes in that particular context, rather than develop new knowledge applicable in any context. Instead of focusing on traditional study design and research skills, residents learned how to understand their workplace, collect and present data, and propose interventions. They were expected to meet the following learning objectives:

- Describe the connection between professional knowledge and PBLI knowledge;
- Develop and focus an aim for a PBLI project;
- Understand the model for improvement and the components of the Plan-Do-Study-Act (PDSA) cycle;
- Describe why and how various disciplines must work together to achieve improvement;
- Demonstrate how data can be collected under time and resource limitations as well as appropriately display and analyze data;
- Use diagrams to understand the process under study;
- Identify areas to change within a process and recognize whether changes are successful.

The curriculum combined didactic and experiential learning. Didactic sessions were 1 to 2 hours each week and provided the foundations of PBLI. These sessions combined prereading with interactive discussions to advance the work on the resident's project. Specific details and a list of course materials are available from the authors.

Resident projects were either chosen from a menu of existing institution quality improvement efforts or crafted anew with assistance from a project sponsor. Project sponsors were faculty members (physician or nurse) who were distinct from the faculty who taught the didactic sessions. In some cases, team leaders of improvement initiatives identified time-limited projects that the residents could complete independently during the elective. For example, one resident studied the process and time to follow-up after discharge from a hospital admission for acute sickle cell pain crisis. Other residents, with a focused interest, worked with a project sponsor to design their own projects. An example of this was a resident who worked with occupational medicine to investigate needle stick injuries. Each resident presented his or her project to a group of residents and faculty at the end of the rotation. Project topics are listed in Table 1.

Table 1.

Resident Projects Completed During the Practice-based Learning and Improvement Elective

- Coordinating follow-up after admission for acute pain crisis from sickle cell disease
- Reducing needle stick injuries for medical students and residents
- Increasing screening for depression in a transgender clinic
- Increasing the use of maximum sterile barrier precautions in the Medical Intensive Care Unit to decrease catheter-related infections
- Examining the effectiveness of a computerized medical record alert in identifying beta blocker use candidates
- Determining the effectiveness of inpatient initiation of anticoagulation with warfarin
- Providing safe, cost-efficient, and effective outpatient anticoagulation with Lovenox
- Decreasing barriers to advance care planning discussion in the outpatient setting
- Assessing osteoporosis knowledge and risk factors of clinic patients
- Determining whether a heart failure database can be useful in improving quality of care

Although 11 residents completed the elective, two residents collaborated on one project, so there are a total of 10 projects.

Evaluation of the Curriculum

Seven of the eleven participants were third-year residents, one was a fourth-year resident in a combined program, and three were second-year residents. Two nonparticipant residents were matched on specialty (i.e., internal medicine) and postgraduate year (PGY) of training to each participant. Twenty-two internal medicine residents served in this comparison group. Both groups were volunteer, convenience samples. Most participant and nonparticipant residents were in the categorical internal medicine track, with the remaining residents split equally between the primary care and international health tracks. Only 36% of the participants were male, while 82% of the nonparticipants were male (P < .05). There was no difference in the percentage of participant and nonparticipant residents who had prior PBLI experience. Each of the evaluation instruments had a 100% response rate from the participants and the nonparticipants.

The curriculum was evaluated using 4 previously described domains17: 1) core learning, 2) resident self-assessed proficiency, 3) resident and project sponsor satisfaction, and 4) faculty and resident time investment.

Core Learning

Core learning was measured two ways. The first was with the Quality Improvement Knowledge Application Tool (QIKAT), in which the learner responds to each of 3 scenarios with an aim, measures, and possible changes one might propose for improvement. Each response is scored from 0 (low) to 5 (high), generating a cumulative score of 0 to 15 points. The 11 participants and 22 nonparticipants completed this instrument before and at the end of the rotation. This instrument has accurately discriminated between groups of individuals with PBLI training versus those without (mean score 10.7 vs 7.4; P < .01).18
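The scoring arithmetic described above can be sketched in a few lines. This is only an illustration of the 0-to-15 cumulative score; the function name `qikat_total` and the input checks are assumptions for the example and are not part of the published instrument.

```python
def qikat_total(scenario_scores):
    """Sum three scenario scores (each 0 to 5) into a cumulative 0-to-15 total.

    Hypothetical helper: the name and validation rules are illustrative only.
    Half-point values are accepted because averaged scorer ratings can be
    fractional.
    """
    if len(scenario_scores) != 3:
        raise ValueError("the QIKAT described here has exactly 3 scenarios")
    for score in scenario_scores:
        if not 0 <= score <= 5:
            raise ValueError("each scenario is scored from 0 (low) to 5 (high)")
    return sum(scenario_scores)


if __name__ == "__main__":
    # A learner scoring 3, 4, and 5 on the three scenarios.
    print(qikat_total([3, 4, 5]))
```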

Two scorers were blinded to the identity of the residents and to the pre and post status of each QIKAT. Weighted interrater agreement was calculated for each pair of scorers on the raw scores. One-point discrepancies were averaged and any 2-point discrepancies were reconciled between the scorers. Final QIKAT scores were compared using a paired t test for participants’ and nonparticipants’ pre- and posttest scores and an independent t test for comparing participants to nonparticipants.
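The discrepancy rule for combining two scorers' ratings could be expressed roughly as follows. This is a minimal sketch: the function name `reconcile` is hypothetical, and treating any gap of 2 points or more as needing discussion is an assumption, since the text only mentions 1-point and 2-point discrepancies.

```python
def reconcile(score_a, score_b):
    """Combine two scorers' ratings for one response.

    Returns the average when the ratings agree or differ by one point.
    Returns None when they differ by two or more points, signalling that
    the scorers must discuss the response and agree on a score
    (assumption: gaps larger than 2 are handled the same way as 2).
    """
    if abs(score_a - score_b) <= 1:
        return (score_a + score_b) / 2
    return None


if __name__ == "__main__":
    for a, b in [(3, 4), (2, 2), (1, 3)]:
        result = reconcile(a, b)
        label = "needs reconciliation" if result is None else result
        print(f"scorer A={a}, scorer B={b}: {label}")
```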

Pretest QIKAT scores were similar for participants and nonparticipants, but participants’ scores after the PBLI rotation were significantly higher (Table 2). Also, participants’ scores were significantly higher in the posttest compared to their own pretest scores, while nonparticipant scores did not change over time. Interrater agreement for each pair of scorers was acceptable (κ 0.20 to 0.42; P < .05 to P < .001).19
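For readers unfamiliar with weighted agreement statistics, the sketch below computes a weighted kappa for two raters in plain Python. It assumes linear disagreement weights; the article does not state which weighting scheme was used, so this is one plausible choice and not necessarily the authors' calculation. The ratings in the usage lines are invented for illustration.

```python
def weighted_kappa(rater_a, rater_b, categories):
    """Linear-weighted Cohen's kappa for two raters on ordered categories.

    Assumption: linear weights |i - j| / (k - 1); the source does not
    specify the weighting scheme.
    """
    k = len(categories)
    if k < 2:
        raise ValueError("need at least two categories")
    index = {c: i for i, c in enumerate(categories)}
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("raters must score the same non-empty set of items")

    # Observed joint proportions of (rater A category, rater B category).
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[index[a]][index[b]] += 1.0 / n

    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    # Weighted observed and chance-expected disagreement.
    d_obs = 0.0
    d_exp = 0.0
    for i in range(k):
        for j in range(k):
            weight = abs(i - j) / (k - 1)
            d_obs += weight * observed[i][j]
            d_exp += weight * row[i] * col[j]

    if d_exp == 0:
        raise ValueError("kappa is undefined when both raters use one category")
    return 1.0 - d_obs / d_exp


if __name__ == "__main__":
    # Invented example ratings on the 0-to-5 scale, not study data.
    scorer_1 = [3, 4, 2, 5, 1, 3]
    scorer_2 = [3, 3, 2, 4, 2, 5]
    print(round(weighted_kappa(scorer_1, scorer_2, list(range(6))), 2))
```

With only two categories, linear weighting reduces to the ordinary unweighted kappa, which gives a convenient sanity check.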

Table 2.

Comparison of the Pre- and Post-Quality Improvement Knowledge Application Tool (QIKAT) Scores for Participants and Nonparticipants*

| | Pretest Mean (SD) | Posttest Mean (SD) | P Value |
|---|---|---|---|
| Participants | 9.2 (2.6) | 11.4 (2.4) | <.05 |
| Nonparticipants | 8.2 (2.2) | 8.7 (2.5) | NS |
| P value | NS | <.01 | |

*Scores were compared using a paired t test for participants’ and nonparticipants’ pre- and posttest scores and an independent t test for comparing participants to nonparticipants.

SD, standard deviation; NS, not significant.
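As a rough consistency check on the between-group comparisons in Table 2, an independent-samples t statistic can be recomputed from the reported means and standard deviations. The sketch below assumes a pooled-variance (equal-variance) Student's t test and group sizes of 11 and 22; the article says only "independent t test", so the pooled form is an assumption, and the rounded table values limit precision. The paired comparisons cannot be reproduced this way because they require each resident's individual scores.

```python
import math


def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Student's independent-samples t statistic from summary statistics.

    Assumes equal variances (pooled estimate). Returns (t, degrees of freedom).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


if __name__ == "__main__":
    # Values taken from Table 2: participants (n = 11) vs nonparticipants (n = 22).
    pre_t, df = pooled_t_from_summary(9.2, 2.6, 11, 8.2, 2.2, 22)
    post_t, _ = pooled_t_from_summary(11.4, 2.4, 11, 8.7, 2.5, 22)
    print(f"pretest:  t = {pre_t:.2f} on {df} df")
    print(f"posttest: t = {post_t:.2f} on {df} df")
```

Under these assumptions the pretest statistic is a little over 1 and the posttest statistic is close to 3 on 31 degrees of freedom, which is in line with the reported NS and P < .01 results when compared against a standard two-sided t table.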

The second assessment of core learning was faculty evaluation of each resident's end-of-rotation presentation. Faculty rated items related to each of the learning objectives on a 4-point scale (1 = unsatisfactory to 4 = excellent). These results were used to provide feedback to the residents. Faculty rated the end-of-rotation presentations highly for items related to developing an aim (mean, 3.6; standard deviation [SD], 0.67); describing and diagramming the work process (mean, 3.8; SD, 0.40); and linking data to change (mean, 3.5; SD, 0.71). Scores were lower for describing the PDSA cycle (mean, 2.5; SD, 1.23); eliciting interdisciplinary input (mean, 2.7; SD, 1.12); and incorporating interdisciplinary perspective (mean, 2.9; SD, 1.25), indicating common weaknesses in the residents’ presentations.

Several of the projects were presented as senior resident lectures to the department of medicine during noon conference. One project presentation won an award at a regional subspecialty meeting20 and another was presented as part of a workshop at an international conference in Bergen, Norway.21

Self-assessed Proficiency in PBLI

Resident self-assessed proficiency in PBLI was measured before, immediately after, and 6 months after completing the elective. The instrument used a 4-point scale to gauge each participant's self-assessed confidence in his or her ability to improve care for patients. Self-assessment scores (pre-, post-, and 6-month follow-up for each item) were compared using a general linear model repeated-measures procedure.

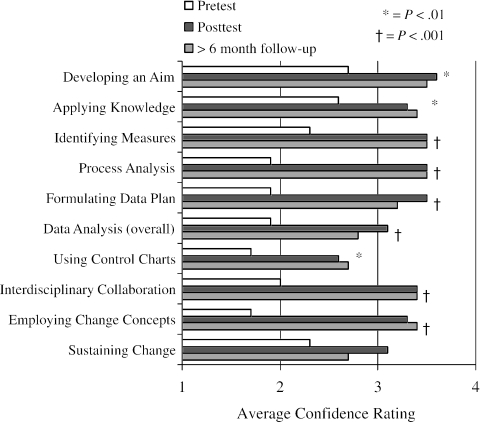

Participants’ ratings of 9 of the 10 items increased after the rotation and remained elevated at the 6-month follow-up (P < .001 to .01; Fig. 1). Several of the items that were rated low before the elective (process analysis, formulating data plan, data analysis [overall], using control charts, interdisciplinary collaboration, and employing change concepts) were rated high after the elective. No difference was found in the participants’ self-evaluation of “sustaining change” immediately after the elective or at the 6-month follow-up.

FIGURE 1.

Comparison of participants’ pre-, post-, and 6-month self-assessment scores. 1 = not confident to 4 = very confident.

Resident and Project Sponsor Satisfaction

A 1-page instrument was used to assess resident satisfaction. Participants were asked whether the elective met each of the learning objectives on a 5-point scale (1 = not at all to 5 = extremely well). Free text items assessed the most important learning objective, the skills that might be helpful in the future, and suggestions for improvements to each part of the curriculum (scheduling, readings, meetings with faculty, meeting with project sponsors, and completing the project). Project sponsors completed a free text evaluation of the elective experience that was separate from an assessment of the resident's work.

Participants reported high satisfaction with developing and focusing an aim (mean, 4.4; SD, 0.67); understanding the model for improvement (mean, 4.6; SD, 0.67); understanding interdisciplinary care (mean, 4.7; SD, 0.47); and analyzing the work process (mean, 4.6; SD, 0.67). All of the participants reported they would recommend the elective to colleagues. Free text comments also indicated a high degree of satisfaction with the experience. One resident stated that the PBLI skills felt like “putting on a new pair of glasses” to assess clinical care. Another wrote “this training should be mandatory for all residents.” One resident felt that the skills “will be useful when I enter private practice.” Several participants reported disappointment that few clinical faculty seemed prepared to practice and teach these skills.

Project sponsors expressed delight with the resident projects. One sponsor commented that the resident “helped [get] a project going within our [section]” and another stated, “the resident's analysis of our data and suggestions have given us some momentum.” Project sponsors suggested making the expectations of faculty more explicit at the beginning and ensuring a shared understanding of faculty roles; they also noted that the experience “[was] challenging and require[d] creativity.” Both participants and sponsors requested more time to devote to work on the projects.

Faculty and Resident Time

Time is a valuable commodity for both residents and faculty. Both groups maintained time logs as a proxy for costs. Residents averaged 119 hours (SD, 64; range, 41 to 232) and faculty 6 hours (SD, 1.4; range, 3.3 to 8.0) over the course of the rotation. Project sponsors reported spending 1 to 2 hours per week on elective-related activities. Direct costs such as photocopying and computer access were negligible and absorbed by the respective institutions.

CONCLUSIONS

Using a standardized curriculum, we delivered an educational experience for residents about practice-based learning and improvement (PBLI). We demonstrated that residents were able to undertake an improvement project, describe their results in a presentation to colleagues, and apply the knowledge in a standardized evaluation tool—all with a reasonable time commitment from residents, faculty, and sponsors.

This curriculum is important because of the recent changes in accreditation and certification. In addition to the ACGME competencies,6 the American Board of Internal Medicine22 and the American Board of Pediatrics23 now require practice improvement as part of the recertification process. These recommendations and changes in policy follow similar recommendations from the Pew Health Professions Commission,24 the Institute of Medicine,25,26 and the Council on Graduate Medical Education.27

As regulatory agencies redesign the focus of medical education, novel curricula must emerge to meet the challenge. While most are comfortable with the ACGME core competencies of medical knowledge, patient care, and interpersonal skills, many find the systems-based practice and practice-based learning and improvement competencies more difficult to grasp conceptually and more challenging to implement and assess. Our work offers a novel, standardized, and flexible curriculum to teach and assess PBLI.

One consistent theme from others who have pioneered PBLI curricula is that learning must be experience based.1–5,11 For this reason, the resident's project was a cornerstone of our curriculum. By having residents identify a problem, create an aim, study the work process, measure the processes and outcomes, and recommend improvements, they applied PBLI to real situations that were important to them. This approach also led to benefits for the institutions in which the projects took place. While it is widely recognized that residents provide a major contribution to the workforce in an institution, PBLI offers a chance for residents to contribute to the redesign of care for patients (Table 1).

While our findings are encouraging, this study has several limitations. The sample size was small and unbalanced between the two institutions. Participants and nonparticipants were volunteers. The use of the nonparticipant group allowed the assessment of the baseline knowledge and trends of PBLI learning in these residency programs. While the participants were motivated learners, their baseline knowledge as measured by the QIKAT was not different from that of nonparticipants (Table 2). Available resources limited the follow-up to 6 months; it would be interesting to assess the participants’ longer-term application of the content. Finally, the QIKAT requires further development. This tool is promising from pilot tests18 and in the evaluation of this elective, but we recognize that systematic psychometric development will make it more applicable to a range of environments and users.

MetroHealth and Dartmouth-Hitchcock Medical Centers have active cultures of quality improvement and qualified faculty available to teach PBLI concepts and skills to residents. Other institutions may not have the infrastructure in place to initiate such a curriculum. Providing this experience at two separate institutions increases the generalizability of our findings to the many institutions that now have faculty with PBLI experience. The PBLI elective continues at both sites and has been expanded to include family practice residents at MetroHealth and obstetrics and gynecology residents at Dartmouth-Hitchcock, in addition to internal medicine residents. The curriculum also has been piloted with a group of nonvolunteer internal medicine residents during a required ambulatory rotation.

While a 1-month experience fits into the traditional resident “block” scheduling, it may not be the ideal way to learn or practice PBLI. The time-limited nature of the elective restricted the residents’ ability to make and follow changes. The results from the resident presentations, self-assessed proficiency, and satisfaction show that participants lacked an understanding of making and sustaining changes in a system. This is, in fact, the most difficult aspect of improvement. Perhaps as PBLI becomes more common in clinical practice, and learning about PBLI becomes integrated into medical school curricula,28 teaching these skills to residents will become less challenging.

As residency programs continue to wrestle with the new ACGME core competencies, many new curricula will emerge. Our approach was to create a time-limited opportunity for residents to initiate an improvement project, describe the results, and apply the knowledge to a standardized assessment tool. The results suggest this approach can be effective for teaching PBLI to residents.

Acknowledgments

This material is based upon work supported by the Office of Academic Affiliations, Department of Veterans Affairs. Drs. Ogrinc and Foster were fellows in the VA National Quality Scholars Program.

The opinions and findings contained herein are those of the authors and do not necessarily represent the opinions or policies of the Department of Veterans Affairs or Dartmouth Medical School.

REFERENCES

- 1. Headrick LA, Knapp M, Neuhauser D, et al. Working from upstream to improve health care: the IHI Interdisciplinary Professional Education Collaborative. Jt Comm J Qual Improv. 1996;22:149–64. doi: 10.1016/s1070-3241(16)30217-6.

- 2. Baker G, Gelmon S, Headrick LA, et al. Collaborating for improvement in health professions education. Qual Manag Health Care. 1998;6:1–11. doi: 10.1097/00019514-199806020-00001.

- 3. Headrick LA. Guidelines to Accelerate Teaching and Learning About the Improvement of Health Care. Washington DC: Community-based Quality Improvement Education for Health Professions Health Resources and Services Administration/Bureau of Health Professions. 1999:1–23.

- 4. Cleghorn G, Baker G. What faculty need to learn about improvement and how to teach it to others. J Interprof Care. 2000;14:147–59.

- 5. Ogrinc G, Headrick LA, Mutha S, Coleman M, O'Donnell J, Miles P. A framework for teaching medical students and residents about practice-based learning and improvement, synthesized from the literature. Acad Med. 2003;78:748–56. doi: 10.1097/00001888-200307000-00019.

- 6. Leach D. Evaluation of competency: an ACGME perspective. Am J Phys Med Rehab. 2000;79:487–9. doi: 10.1097/00002060-200009000-00020.

- 7. Ashton C. “Invisible” doctors: making a case for involving medical residents in hospital quality improvement programs. Acad Med. 1993;68:823–4. doi: 10.1097/00001888-199311000-00003.

- 8. Kyrkjebo J. Beyond the classroom: integrating improvement learning into health professions education in Norway. Jt Comm J Qual Improv. 1999;25:588–97. doi: 10.1016/s1070-3241(16)30472-2.

- 9. Schillinger D, Wheeler M, Fernandez A. The populations and quality improvement seminar for medical residents. Acad Med. 2000;75:562–3. doi: 10.1097/00001888-200005000-00098.

- 10. Weeks W, Robinson J, Brooks W, Batalden P. Using early clinical experiences to integrate quality-improvement learning into medical education. Acad Med. 2000;75:81–4. doi: 10.1097/00001888-200001000-00020.

- 11. Gould B, Grey M, Huntington C, et al. Improving patient care outcomes by teaching quality improvement to medical students in community-based practices. Acad Med. 2002;77:1011–18. doi: 10.1097/00001888-200210000-00014.

- 12. Kyrkjebo J, Hanestad B. Personal improvement project in nursing education: learning methods and tools for continuous quality improvement in nursing practice. J Adv Nurs. 2003;41:88–98. doi: 10.1046/j.1365-2648.2003.02510.x.

- 13. Parenti C. Reduction of unnecessary intravenous catheter use. Internal medicine house staff participate in a successful quality improvement project. Arch Intern Med. 1994;154:1829–32. doi: 10.1001/archinte.154.16.1829.

- 14. Fox C, Mahoney M. Improving diabetes care in a family practice residency program: a case study in continuous quality improvement. Fam Med. 1998;30:441–5.

- 15. Ellrodt A. Introduction of total quality management (TQM) into an internal medicine training program. Acad Med. 1993;68:817–23. doi: 10.1097/00001888-199311000-00002.

- 16. Moore S, Alemi F, Headrick L, et al. Using learning cycles to build an interdisciplinary curriculum in CI for health professions students in Cleveland. Jt Comm J Qual Improv. 1996;22:165–71. doi: 10.1016/s1070-3241(16)30218-8.

- 17. Ogrinc G, Headrick LA, Boex JR. Understanding the value added to clinical care by educational activities. Acad Med. 1999;74:1080–6. doi: 10.1097/00001888-199910000-00009.

- 18. Morrison LJ, Headrick LA, Ogrinc G, Foster T. The quality improvement knowledge application tool: an instrument to assess knowledge application in practice-based learning and improvement. J Gen Intern Med. 2003;18:250. Abstract presented at Society of General Internal Medicine Meeting, Vancouver, British Columbia, May 2003.

- 19. Landis J, Koch G. The measurement of observer agreement for categorical data. Biometrics. 1977;33:671–79.

- 20. Reddy S, McKinney T, Harrington M, Morrison LJ. Utilizing education and prompted scripts in the electronic medical record to overcome barriers of advance care planning in the outpatient setting. J Palliat Care. 2002;5:589.

- 21. Morrison L, Fesko Y. A controlled trial of graduate physician training in the improvement of health care: providing safe and effective outpatient anticoagulation. Paper presented at the Eighth European Forum on Quality Improvement in Health Care, Bergen, Norway, May 14, 2003.

- 22. Continuous Profession Development—Board Recertification Program and Information Guide. 2003. American Board of Internal Medicine. Available at: http://www.abim.org/cpd/programinfo.htm#3b. Accessed November 24, 2003.

- 23. Stockman J, Miles P, Ham H. The Program for Maintenance of Certification in Pediatrics. American Board of Pediatrics. Available at: http://www.abp.org/frpmcp. Accessed November 25, 2003.

- 24. Healthy America: Practitioners for 2005. San Francisco: Pew Health Professions Commission: The Pew Charitable Trusts; 1991.

- 25. Briere R, editor. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academy Press; 2001.

- 26. Kohn L, Corrigan J, Donaldson M, editors. To Err Is Human: Building a Safer Health System. Washington, DC: National Academy Press; 2000.

- 27. Fourth Report: Recommendations to Improve Access to Care Through Physician Workforce Reform. Rockville, Md: Council on Graduate Medical Education; 1994.

- 28. Batalden P. Report V: Contemporary Issues in Medicine: Quality of Care. Washington, DC: Association of American Medical Colleges; 2001.