Abstract

BACKGROUND

Voluntary reporting of near misses and adverse events is an important but underused source of information on errors in medicine. To date, there is very little information on errors in the ambulatory setting, and physicians have not traditionally participated actively in reporting or analyzing them.

OBJECTIVES

To determine the feasibility and effectiveness of clinician-based near miss/adverse event voluntary reporting coupled with systems analysis and redesign as a model for continuous quality improvement in the ambulatory setting.

DESIGN

We report the initial 1-year experience of voluntary reporting by clinicians in the ambulatory setting, coupled with root cause analysis and system redesign by a patient safety committee made up of clinicians from the practice.

SETTING

The internal medicine practice of a large teaching hospital, with approximately 25,000 visits per year.

MEASUREMENTS AND MAIN RESULTS

There were 100 reports in the 1-year period, up from 5 in the previous year. Faculty physicians submitted 44% of the reports, residents 22%, nurses 31%, and managers 3%. Eighty-three percent were near misses and 17% were adverse events. Errors involved medication (47%), lab or x-ray (22%), office administration (21%), and communication (10%) processes. Seventy-two interventions were recommended, and 75% were implemented during the study period.

CONCLUSION

This model of clinician-based voluntary reporting, systems analysis, and redesign was effective in increasing error reporting, particularly among physicians, and in promoting system changes to improve care and prevent errors. This process can be a powerful tool for incorporating error reporting and analysis into the culture of medicine.

Keywords: medical error, patient safety, ambulatory care, voluntary reporting

The 2000 Institute of Medicine (IOM) report, “To Err Is Human,” raised awareness of medical error as an important cause of adverse patient outcomes and challenged the medical community to improve patient safety.1 Thus far, much of our information about medical error has come from retrospective chart review focusing on adverse events.2,3 Although this approach has provided useful information, finding “errors” in the care of a patient who has had a bad outcome is hindered by hindsight bias and by the limited scope of information contained in the medical record. The IOM report called for a reporting system that would focus on both adverse events and near misses in order to provide new information about medical error. Current incident reporting systems are administrator based, are perceived as punitive, are poorly used by physicians, and miss many clinically significant events.4 Recent studies have suggested that clinician-based voluntary reporting systems implemented on inpatient services are a valuable means of detecting errors in the process of care.5–7

The IOM report further calls for a systems approach to analyzing and responding to reported near misses and adverse events. Identifying faulty or inadequate systems that contribute to error allows those systems to be redesigned and recurrent events to be prevented. This is a major shift from the traditional “blame and shame” approach, which has focused on the performance of individuals. Physicians generally lack training in this area and have not been involved in analyzing errors or redesigning systems to prevent future errors. Recognizing this, the Accreditation Council for Graduate Medical Education (ACGME) has established new competency requirements in systems-based practice.8 Opportunities for physician involvement in these activities have nonetheless remained quite limited.

Finally, little is known about the nature of adverse events and near misses in ambulatory care.9–12 What we know suggests that adverse events and near misses occur frequently13,14 and that the types of errors may differ considerably from those occurring in hospitals. Because most medical care is provided in the ambulatory setting, more information about the types of errors, contributing factors, root causes, and effective interventions is urgently needed.

We have established a voluntary, clinician-based reporting system that is coupled with clinician-based systems analysis and redesign. This report describes the context and design of this system and provides a summary of results after 1 year, including examples of interventions and their impact.

METHODS

Setting

This project was implemented in an academic ambulatory internal medicine practice with 12 faculty physicians and 88 residents. Residents attended 1 or 2 half-day clinic sessions per week, and faculty physicians attended 5 to 8 clinical sessions per week. There are approximately 25,000 patient visits per year; 23% of patients are over age 65, and 60% are below the federal poverty level. The practice uses paper charts, but lab and radiology results are available by computer. The practice is the largest provider of ambulatory care to indigent patients in central Virginia.

Patient Safety Committee

A patient safety committee for the ambulatory practice was formed to review cases, conduct root cause analyses, and design interventions. Representatives from multiple disciplines and levels of training served on the committee, including a nurse, a pharmacist, four residents, one faculty physician, the nursing director, and the medical director. Consultants to the group included a social worker and the practice manager.

Voluntary Reporting Process

Residents, faculty physicians, nurses, medical assistants, and pharmacists in the ambulatory teaching practice were encouraged to report near misses or adverse events that came to their attention. The process was discussed in resident and staff meetings during a 3-month period around implementation. Reminder emails were sent at 1- to 2-month intervals initially, with reinforcement in existing conference forums. Near miss/adverse event was defined as “any event in a patient's medical care which did not go as intended and either harmed or could have harmed the patient.” The reports were confidential but not anonymous, submitted in paper format (dropped into a locked box in the conference room) and included a brief summary of the event, the date of occurrence, the medical record number of the patient, and the reporter's name and status (RN, postgraduate year [PGY], attending, pharmacist). A copy of each report was sent to the quality improvement office of the medical center to maintain central coordination of all reporting. The quality improvement office provided oversight and was available for assistance in the case of a sentinel event report. Participants were reminded that this was a nonpunitive process and that these and any quality reports were protected from legal discovery.15 This project was approved by the Human Investigation Committee of the University of Virginia and was an approved Quality Improvement project of the University of Virginia Medical Center.
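The report contents, and the removal of identifiers before committee review, can be expressed as two record types. The following is a minimal Python sketch, not a description of the practice's actual paper-based workflow; `EventReport`, `CommitteeCase`, and `deidentify` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class ReporterStatus(Enum):
    """Reporter categories listed on the paper form."""
    RN = "RN"
    PGY = "PGY"              # resident, by postgraduate year
    ATTENDING = "attending"
    PHARMACIST = "pharmacist"


@dataclass(frozen=True)
class EventReport:
    """A confidential but not anonymous near miss/adverse event report."""
    summary: str
    occurred_on: date
    medical_record_number: str
    reporter_name: str
    reporter_status: ReporterStatus


@dataclass(frozen=True)
class CommitteeCase:
    """The view passed to the committee: patient and reporter identifiers removed."""
    summary: str
    occurred_on: date


def deidentify(report: EventReport) -> CommitteeCase:
    """Keep the clinical content; drop the medical record number and reporter name.

    Free-text summaries can still contain identifiers. This sketch does not
    scrub them, so a manual check before committee review is still assumed.
    """
    return CommitteeCase(summary=report.summary, occurred_on=report.occurred_on)
```

Keeping the identified report and the committee view as separate types makes it harder to pass identifiers to the committee by accident, while the full report (including reporter status) remains available for tallies such as those in the Results.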

Committee Review Process

Each report was reviewed by the committee chair and assigned to a committee member, who collected any further information needed to conduct a root cause analysis. Information was gathered by chart review, interviews with the parties involved in the incident, and review of pharmacy data. Cases were then summarized for committee review. Patients were not contacted as part of the process.

The committee met every 2 weeks for 2 hours to review these summarized cases, with all physician and patient identifiers removed. The committee reviewed each event for contributing factors and identified the root causes underlying the problems. Root causes were classified using the system developed by Battles and Shea.16 In this system, root causes are broadly divided into “latent” (systems) and “active” (human) root causes. Latent root causes are subdivided into technical (design, construction, materials) and organizational (protocol/procedures, transfer of knowledge, management priorities, culture) causes; active root causes are subdivided into knowledge-based and rules-based (qualifications, coordination, verification, intervention, monitoring) causes. The system has been studied across a variety of medical settings and is a reliable means of causal classification. To categorize the errors by type, we used the taxonomy of ambulatory medical errors developed by Elder and Dovey,17 with some modifications. In this taxonomy, errors are categorized broadly as either process errors or knowledge/skills-based errors and then subcategorized to a quite specific level (e.g., process error subcategories included office administration, medication delivery, lab/x-ray, and communication processes). Further subcategorization (e.g., dividing medication process errors by stage of the delivery process) allows tracking of very specific types of errors.
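For tracking purposes, the two classification schemes described above can be encoded as nested lookup tables. The Python sketch below is our own illustration: the labels paraphrase this paragraph rather than any official code list, only the process-error subcategories named here are included, and `classify_root_cause` and `classify_error` are hypothetical helpers.

```python
# Root cause hierarchy (after Battles and Shea); labels paraphrased from the text.
ROOT_CAUSES: dict[str, dict[str, list[str]]] = {
    "latent": {
        "technical": ["design", "construction", "materials"],
        "organizational": [
            "protocol/procedures",
            "transfer of knowledge",
            "management priorities",
            "culture",
        ],
    },
    "active": {
        "knowledge-based": [],
        "rules-based": [
            "qualifications",
            "coordination",
            "verification",
            "intervention",
            "monitoring",
        ],
    },
}

# Error types (after Elder and Dovey, as modified); only subcategories named in the text.
ERROR_TYPES: dict[str, list[str]] = {
    "process": ["office administration", "medication delivery", "lab/x-ray", "communication"],
    "knowledge/skills": [],
}


def classify_root_cause(label: str) -> tuple[str, str]:
    """Return (latent|active, subtype) for a subtype or leaf label."""
    for branch, subtypes in ROOT_CAUSES.items():
        for subtype, leaves in subtypes.items():
            if label == subtype or label in leaves:
                return branch, subtype
    raise KeyError(f"unknown root cause: {label!r}")


def classify_error(label: str) -> str:
    """Return the broad error category for a category or subcategory label."""
    for category, subcategories in ERROR_TYPES.items():
        if label == category or label in subcategories:
            return category
    raise KeyError(f"unknown error type: {label!r}")
```

A single event can have several root causes, so a case record would in practice store a list of root cause labels alongside one error type.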

Utilizing a consensus process, the committee then generated a list of recommended interventions designed to address the proximal and root causes identified in the review. These recommendations fell into 3 general categories:

Level 1: quick and easy fix to solve a simple problem.

Level 2: more complex intervention for a more difficult problem, still within the scope of the ambulatory practice.

Level 3: beyond the scope of the ambulatory practice, involving other departments to collaborate on a solution.
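Read literally, the three levels reduce to two questions: is the fix within the practice's scope, and is it simple? A minimal Python sketch of that reading follows; `InterventionLevel` and `assign_level` are hypothetical names, and the committee itself assigned levels by consensus rather than by a fixed rule.

```python
from enum import IntEnum


class InterventionLevel(IntEnum):
    QUICK_FIX = 1         # quick and easy fix to a simple problem
    PRACTICE_COMPLEX = 2  # more complex, still within the ambulatory practice
    CROSS_DEPARTMENT = 3  # needs other departments to collaborate


def assign_level(within_practice: bool, simple: bool) -> InterventionLevel:
    """Map the two questions implied by the level definitions to a level.

    Scope is checked first: anything that needs other departments is level 3,
    however simple the fix itself might be.
    """
    if not within_practice:
        return InterventionLevel.CROSS_DEPARTMENT
    if simple:
        return InterventionLevel.QUICK_FIX
    return InterventionLevel.PRACTICE_COMPLEX
```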

Implementation and Dissemination Process

The committee prioritized interventions by consensus and various members were assigned tasks to proceed with implementation. Progress in implementation was tracked and reviewed periodically by the committee. The ability of the committee to implement changes was facilitated by having key change agents (medical and nursing directors) for the practice as members of the committee. Dissemination of information regarding interventions was accomplished via email, resident conferences and weekly meetings, and staff newsletters and meetings.

RESULTS

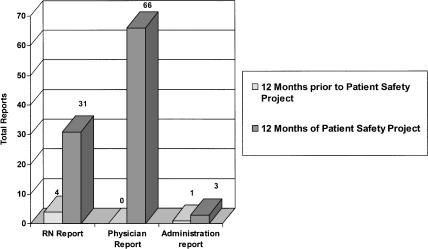

There was a 20-fold increase in reporting of adverse events and near misses in the 12 months following project inception compared with the preceding year, during which a traditional quality reporting system was used (Fig. 1). The number of physician reports rose from 0 to 66, and reports from nurses and administrators also increased substantially. Faculty physicians submitted 44% of the reports, residents 22%, nurses 31%, and managers 3%.
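These summary figures follow directly from the counts. Because there were exactly 100 reports, each percentage equals a number of reports; the Python arithmetic below (variable names are ours) reproduces the 20-fold increase and the 66 physician reports.

```python
PRIOR_YEAR_REPORTS = 5     # reports under the traditional system
STUDY_YEAR_REPORTS = 100   # reports in the first project year

# Share of study-year reports by reporter group, in percent (from the text).
SHARE_PCT = {"faculty": 44, "residents": 22, "nurses": 31, "managers": 3}

fold_increase = STUDY_YEAR_REPORTS / PRIOR_YEAR_REPORTS
reports_by_group = {
    group: round(STUDY_YEAR_REPORTS * pct / 100) for group, pct in SHARE_PCT.items()
}
physician_reports = reports_by_group["faculty"] + reports_by_group["residents"]

print(fold_increase)      # 20.0
print(physician_reports)  # 66
```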

FIGURE 1.

Near miss and adverse event reporting.

Events by Category

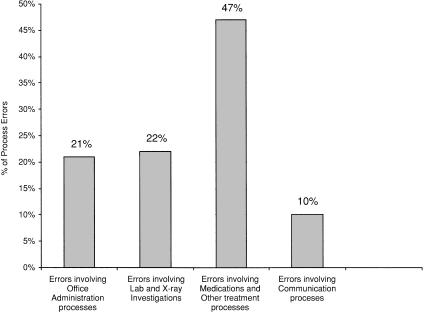

Of 100 total reports, 83 were near misses and only 17 were actual adverse events. Utilizing Elder and Dovey's taxonomy, 90% of the reported events were process errors and only 10% were knowledge/skills-based errors (such as setting up an oxygen tank incorrectly). Of the process errors, almost half were medication related (Fig. 2).

FIGURE 2.

Types of process errors.

The largest share of medication-related reports (41%) arose at the prescribing stage and involved contraindicated drugs (allergies), incorrect doses, and poor handwriting or documentation. A total of 26% of the medication errors involved lack of monitoring; the drugs most commonly implicated were statins (liver function test [LFT] monitoring), diuretics (potassium monitoring), thiazolidinediones (LFT monitoring), narcotic analgesics (failure to follow established guidelines for clinical follow-up of patients on chronic narcotics), and anticoagulants (international normalized ratio [INR] monitoring). Medication errors at the receiving stage (19%) included patients continuing to take medications after they were meant to be stopped, taking the wrong doses, or experiencing delays in obtaining medications.
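The monitoring lapses above share a simple pattern: a drug class implies a lab test that must be repeated within some interval. The Python sketch below is a hypothetical illustration (`REQUIRED_TEST` and `monitoring_overdue` are our names). It omits narcotic analgesics, whose monitoring is clinical follow-up rather than a lab test, and it takes the interval as a parameter because no monitoring intervals are specified here.

```python
from datetime import date, timedelta

# Drug class -> required monitoring test, as listed in the text.
REQUIRED_TEST = {
    "statin": "LFT",
    "diuretic": "potassium",
    "thiazolidinedione": "LFT",
    "anticoagulant": "INR",
}


def monitoring_overdue(
    drug_class: str,
    last_results: dict[str, date],
    today: date,
    max_interval: timedelta,
) -> bool:
    """True if the test required for drug_class is missing or older than max_interval.

    max_interval is supplied by the caller (e.g., from a local protocol);
    no clinical interval is assumed here.
    """
    test = REQUIRED_TEST.get(drug_class)
    if test is None:
        return False  # no lab monitoring rule encoded for this class
    last = last_results.get(test)
    return last is None or today - last > max_interval
```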

Of the lab-related errors, the majority involved either failure to report lab results to physicians in a timely manner or physician failure to recognize and respond appropriately to abnormal results. The office administration errors included failure to follow up missed appointments (e.g., assuring follow-up of an important clinical issue when a patient misses an appointment), failure to respond in a timely way to urgent issues (e.g., clinically urgent phone messages not reliably delivered to the physician), and problems in patient flow, such as long waits for hospital admission from the practice.

More than 70 interventions were recommended by the committee to address the problems identified in the event analyses, with 75% implemented in the 1-year period (Table 1). Overall, 41% were primarily educational, 41% involved systems redesign, and 18% involved equipment or supplies.

Table 1.

Interventions Implemented During One-year Period

| Intervention Level and Status | Intervention Type | Number | Examples |
|---|---|---|---|
| Level 1 (easy quick fixes) | | | |
| • Accomplished = 30 | Education | 25 | Workshop on medical errors for residents. Practice with IVs for nurses. In-service for nurses on oxygen use. Education of MDs to include “purpose of medication” on all prescriptions. |
| • In progress = 6 | Systems | 15 | Oxygen saturation added to vital signs for all COPD patients. Redesign of hemoccult notification processes and tracking system. |
| • Not yet attempted = 13 | Equipment | 9 | Glucagon added to standard supplies in clinic. |
| Level 2 (higher complexity) | | | |
| • Accomplished = 6 | Education | 3 | Guidelines for lab monitoring of “high-risk medications.” |
| • In progress = 8 | Systems | 12 | Protocol for reporting of lab results based on severity of abnormality. Admission checklist (to standardize holding orders and the admission process). Redesign of no-show policy to assure appropriate follow-up. |
| • Not yet attempted = 3 | Equipment | 2 | No-show follow-up form. |
| Level 3 (requires work with other clinics or departments) | | | |
| • Accomplished = 6 | Education | 2 | Education of radiology and medicine staff regarding policies and procedures for rapid read, film returns, and abnormal readings. |
| • In progress = 0 | Systems | 3 | Establish communications between internal medicine and anticoagulation clinic. Centralized process for film returns. |
| • Not yet attempted = 1 | Equipment | 2 | Radiology online images available in outpatient clinic. Electronic prescribing. |

IV, intravenous lines; COPD, chronic obstructive pulmonary disease.
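The counts in Table 1 can be cross-checked against the percentages quoted in the text. The short Python tally below (variable names are ours) sums the status and type breakdowns for each level; both come to 73 interventions, and the resulting shares match the two-thirds/23%/10% split by level and the 41%/41%/18% split by type.

```python
# Counts transcribed from Table 1.
STATUS = {  # level -> (accomplished, in progress, not yet attempted)
    1: (30, 6, 13),
    2: (6, 8, 3),
    3: (6, 0, 1),
}
TYPE = {  # level -> (education, systems, equipment)
    1: (25, 15, 9),
    2: (3, 12, 2),
    3: (2, 3, 2),
}

# Both breakdowns describe the same interventions, level by level.
for level in STATUS:
    assert sum(STATUS[level]) == sum(TYPE[level])

total = sum(sum(counts) for counts in STATUS.values())
level_share = {lvl: round(100 * sum(c) / total) for lvl, c in STATUS.items()}
type_share = {
    name: round(100 * sum(TYPE[lvl][i] for lvl in TYPE) / total)
    for i, name in enumerate(["education", "systems", "equipment"])
}

print(total)        # 73
print(level_share)  # {1: 67, 2: 23, 3: 10}
print(type_share)   # {'education': 41, 'systems': 41, 'equipment': 18}
```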

Two thirds of the recommended interventions were level 1, and 23% were level 2, involving more complex interventions that usually required significant groundwork. An example of a level 2 intervention was a protocol for notifying the physician of abnormal test results. This required a small group to develop standards and reach consensus on the relative urgency of physician notification for each type of test, as well as staff training to implement the changes. Ten percent of interventions were level 3 (involving other services); one example addressed lost or unread radiologic films. Solving this problem required collaborating with the radiology department on a standard film request and retrieval protocol, a more timely wet read (preliminary reading) procedure, and the installation of a terminal for viewing digital images in our clinic.
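The notification protocol pairs a default urgency for each severity grade with test-specific decisions reached by consensus. A minimal Python sketch of that structure follows; the three grades, the urgency tiers, and the names `Urgency`, `DEFAULT_BY_GRADE`, and `notification_urgency` are all hypothetical and do not reproduce the practice's actual protocol.

```python
from enum import Enum
from typing import Optional


class Urgency(Enum):
    IMMEDIATE = "notify the physician immediately"
    SAME_DAY = "notify the physician the same day"
    ROUTINE = "route for routine review"


# Hypothetical default tier for each severity grade.
DEFAULT_BY_GRADE = {
    "critical": Urgency.IMMEDIATE,
    "abnormal": Urgency.SAME_DAY,
    "normal": Urgency.ROUTINE,
}


def notification_urgency(
    test: str,
    grade: str,
    overrides: Optional[dict[tuple[str, str], Urgency]] = None,
) -> Urgency:
    """Return the agreed urgency for a result.

    A (test, grade) entry in overrides wins; otherwise the grade's default applies.
    An unrecognized grade raises KeyError instead of silently defaulting.
    """
    if overrides and (test, grade) in overrides:
        return overrides[(test, grade)]
    return DEFAULT_BY_GRADE[grade]
```

The per-test overrides are where a group's consensus on each type of test would be recorded; raising on an unknown grade keeps an unclassified result from being filed without review.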

We evaluated interventions using either direct or surrogate measures; interventions that were not formally monitored were evaluated through continued voluntary reporting. The fecal occult blood tracking system is an example of a direct measure. After a change in the notification process for positive hemoccults, the percentage of positive results with complete follow-up increased from 77% in the 1-year pre-intervention period to 100% in the year after the intervention was fully implemented. A surrogate measure was used to evaluate a protocol designed to facilitate the hospital admission process. In this case, the staff administering the protocol were surveyed 3 months after its implementation to assess whether the deficiencies in the prior process had been resolved and whether any new issues had arisen. All staff surveyed felt that the protocol had improved communication regarding orders for patients awaiting admission.
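The direct measure above amounts to a registry of positive tests and their follow-up status. The Python sketch below rests on our own assumptions, since the practice's tracking system is not described in detail; the `PositiveFobt` record and the `completion_rate` and `outstanding` functions are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class PositiveFobt:
    """One positive fecal occult blood test and its follow-up status."""
    patient_id: str
    resulted_on: date
    follow_up_completed_on: Optional[date] = None


def completion_rate(cases: list[PositiveFobt]) -> float:
    """Percentage of positive tests with completed follow-up (the direct measure)."""
    if not cases:
        raise ValueError("no positive tests in the period")
    done = sum(1 for c in cases if c.follow_up_completed_on is not None)
    return 100 * done / len(cases)


def outstanding(cases: list[PositiveFobt]) -> list[PositiveFobt]:
    """Open cases, oldest first, as a worklist for whoever tracks follow-up."""
    return sorted(
        (c for c in cases if c.follow_up_completed_on is None),
        key=lambda c: c.resulted_on,
    )
```

The same registry serves both purposes: `outstanding` drives day-to-day follow-up, and `completion_rate` over a year of cases gives the before-and-after comparison.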

DISCUSSION

Our initial experience with a continuous ambulatory error reporting, review, and response model has demonstrated its feasibility and effectiveness. It resulted in a substantial increase in the reporting of errors from all levels of clinicians (most prominently physician reporting) as well as the design and implementation of interventions to address underlying causes of these errors. The preexisting administrator-based quality reporting system had recently undergone a revision to improve the quality and quantity of reports, but had failed to increase reporting by physicians. It is noteworthy, then, that this clinician-based error-reporting process has been utilized most by physicians (predominantly faculty), and that it has mainly generated near-miss reports, almost all process based.

The 20-fold increase in volume and the predominance of physician reporting may be due to a number of factors. Unlike the preceding quality report system, the current process is directed by a physician, and the committee has significant faculty and resident physician representation. This active peer participation, and the declared goal of using the error-reporting process as an opportunity to achieve meaningful practice improvement, likely contributed to physician interest and participation. Our hands-on, practice-based approach let physicians see error analysis result in tangible improvements in practice on a continuous basis. Furthermore, educational forums and reminders were used to stimulate the submission of error reports, which helped maintain a high profile for the committee and its work. The fact that the committee was practice based, multidisciplinary, and included both peers and leaders added to the sense that it could effect change. That it actually both reviewed and redressed errors helped build and maintain a momentum that would not have been possible with an error-reporting process alone. Most reports were observations of clinically relevant systems errors; when individual errors were reported, they were generally self-reported. The system-based (rather than finger-pointing), confidential approach likely contributed to greater reporter comfort in disclosing errors. Given the nature of the process and the source of the reports, it is not surprising that the reports were much more clinically oriented than traditional quality reports. This has helped maintain the interest and enthusiasm of the practice physicians, staff, and committee members.

Although medical residents used the new error-reporting system, they did so less often than faculty physicians. This could reflect less time devoted to ambulatory care and/or less commitment to the practice or to the error-reporting process than their faculty preceptors. Residents and staff were assured that this process was nonpunitive and that all names and identifiers would be removed before committee review. Nevertheless, it is possible that resident physicians were more reluctant to report their errors for fear of adverse consequences. Their participation in reporting and on the committee was crucial in building trust and opening communication about errors, as well as in disseminating the changes implemented to improve care. These factors contributed significantly to the culture change that was apparent in the practice as a result of this project.

In addition to the direct clinical practice benefits derived from error reporting, analysis, and improvement, this process fulfills an important educational role for the residency program. Under the new ACGME practice-based and systems-based competencies, awareness and understanding of clinical quality improvement activities must assume a more prominent role in physician residency training.18 The participatory approach we implemented has clearly facilitated such systems-based learning for faculty and resident physicians as well as for involved staff members. Formal didactic instruction in the residency program is also being used to complement this experiential learning. Because these small group sessions have a “living” framework with recognizable and relevant examples (rather than simply abstract concepts), they add interest to the educational content and also stimulate participation in the error reduction process.

The reports generated were primarily near misses rather than adverse events, consistent with other studies of voluntary reporting.6,7,12 This suggests either that near misses are simply far more frequent or that reporting is biased toward near misses over bad outcomes. Such a reporting bias would, of course, be easy to understand given the current malpractice climate19 and could hinder the effectiveness of the reporting system if the unreported errors differed qualitatively from the reported near misses. However, there may also be an advantage to focusing on near misses rather than adverse events. When a reporting system focuses on adverse events, the process of deciding whether an error has occurred is often long and difficult,20 suffers from hindsight bias, and operates under the constraints of risk management. Because most reports to our committee were near misses, reported because of errors noted in the processes of care, it has been easy to focus on the related systems issues.

We used Elder and Dovey's taxonomy of ambulatory error, with modifications, for categorization.12 We believe it is important to continue developing a common error-reporting schema so that data can be both aggregated and compared across sites. The current, predominantly qualitative science of error analysis would be greatly advanced by the quantitative and comparative approaches that a common lexicon would permit. Our modifications to Elder and Dovey's taxonomy accommodate errors (such as failures of medication monitoring) that did not fit the existing schema.

The clearest example of this project's success in effecting change in our practice involves medications. Analysis of medication-related events indicated that over half were potentially preventable by an electronic prescribing system with decision support, a figure consistent with other studies of the effect of computerized physician order entry.21–25 Although earlier arguments and attempts to obtain a prescription expert system for the practice had failed, the committee succeeded in obtaining this very resource-intensive solution. We believe there are several compelling reasons for this that illustrate the power and importance of the process. First, the reporting process turned anecdotes into noticeable trends (or sometimes epidemics), lending a more quantitative argument to any justification. Second, the formal committee evaluation process established, and lent credibility to, the causal processes involved in an error. Third, the “institutionalization” of the error committee in both the practice culture and the organizational hierarchy has allowed even complex and expensive interventions to be “on the table” when a need was demonstrated.
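To make concrete what “decision support” can mean for the prescribing errors described in the Results (contraindicated drugs and incorrect doses), here is a minimal Python sketch of two such checks. It is our illustration under stated assumptions, not the system the practice obtained: `Prescription` and `check_prescription` are hypothetical, and the allergy list and dose limits would have to come from the patient record and a drug reference.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prescription:
    drug: str
    drug_class: str
    daily_dose_mg: float


def check_prescription(
    rx: Prescription,
    allergy_classes: set[str],
    max_daily_dose_mg: dict[str, float],
) -> list[str]:
    """Return decision-support warnings for one prescription (empty list = none).

    Covers only two of the prescribing problems described: a documented allergy
    to the drug's class, and a dose above a configured maximum. Drugs with no
    configured maximum are not dose-checked.
    """
    warnings = []
    if rx.drug_class in allergy_classes:
        warnings.append(f"allergy: patient has a documented {rx.drug_class} allergy")
    limit = max_daily_dose_mg.get(rx.drug)
    if limit is not None and rx.daily_dose_mg > limit:
        warnings.append(f"dose: {rx.daily_dose_mg} mg/day exceeds maximum of {limit} mg/day")
    return warnings
```

Legibility errors disappear simply because the prescription is typed, so checks like these target the remaining prescribing-stage problems.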

Most of the interventions implemented by the study committee were of low complexity, involving an educational tool or simple system redesign. Such changes required limited commitments of time and money and were easy to institute within the existing clinic systems. Although monitoring is important in evaluating the success of interventions, a monitoring strategy beyond continued occurrence tracking was designed only in certain instances because of resource constraints and logistical issues.

This report has several limitations. First, the error-reporting process itself was obviously limited by the interest of the reporters, their willingness to report errors, and their ability to discern them. Undoubtedly, many if not most errors will not be detected and/or reported in this fashion. Our philosophy has been that each error reported by this process is an opportunity for improvement that would otherwise have been missed, given the dearth of errors reported before this process began. However, further research using independent error ascertainment methods will be needed to determine the representativeness and completeness of such voluntary reporting processes. Ideally, voluntary reporting could be used in conjunction with other methods, such as computerized surveillance.26–28 Though not attempted in our project, interviews of affected patients would have provided valuable insights in many instances. We recognize that the principal limitations of this report relate to its uniqueness. This is in fact a single case study, and its success cannot be separated from the people involved in the process. Although a validated root cause framework and error taxonomy were used, other individuals may have focused on alternative causal mechanisms or solutions. Furthermore, the site was an academic training program, and the interventions were tailored to this setting.

In conclusion, we examined the feasibility and effectiveness of a model for voluntary clinician-based medical error reporting coupled with practice-based analysis and response. Our model was effective in increasing medical error reporting overall and especially effective in increasing physician reporting. Although begun as a pilot project, the model is now an integral part of our practice quality improvement structure and, importantly, has become part of our practice “culture.” This process has enabled us to remove many of the “roadblocks” to making health care safer identified by Berwick.29 It has made errors and injuries routinely “visible” in our health care setting and created changes in systems to improve care, even when they cost money. We believe that this or a similar process can be a powerful tool for incorporating error reporting and analysis into the culture of medicine, helping clinicians translate their desire to make health care safer into actions that can prevent harm.

Acknowledgments

Support was received from the Academic Administrative Units in Primary Care for Dr. Schorling; U.S. Health Resources and Services Administration Project Period: September 1, 2000, to August 31, 2005.

REFERENCES

1. Kohn LT, Corrigan JM, Donaldson MS, editors. To Err Is Human: Building a Safer Health System. Committee on Quality of Health Care in America, Institute of Medicine. Washington, DC: National Academy Press; 2000.

2. Brennan TA, Leape LL, Laird NM, et al. Incidence of adverse events and negligence in hospitalized patients. N Engl J Med. 1991;324:377–84. doi: 10.1056/NEJM199102073240604.

3. Thomas E, Studdert D, Burstin H, et al. Incidence and types of adverse events and negligent care in Utah and Colorado. Med Care. 2000;38:261–71. doi: 10.1097/00005650-200003000-00003.

4. Cullen DJ, Bates DW, Small SD, Cooper JB, Nemeskal AR, Leape LL. The incident reporting system does not detect adverse drug events: a problem for quality improvement. Jt Comm J Qual Improv. 1995;21:541–52. doi: 10.1016/s1070-3241(16)30180-8.

5. Weingart S, Callanan L, Ship A, Aronson M. A physician-based voluntary reporting system for adverse events and medical errors. J Gen Intern Med. 2001;16:809–14. doi: 10.1111/j.1525-1497.2001.10231.x.

6. Weingart SN, Ship AN, Aronson MD. Confidential clinician-reported surveillance of adverse events among medical inpatients. J Gen Intern Med. 2000;15:470–7. doi: 10.1046/j.1525-1497.2000.06269.x.

7. Chaudhry S, Olofinboba K, Krumholz H. Detection of errors by attending physicians on a general medicine service. J Gen Intern Med. 2003;18:595–600. doi: 10.1046/j.1525-1497.2003.20919.x.

8. ACGME Outcome Project. Chicago: Accreditation Council for Graduate Medical Education; 2001. Available at: http://www.acgme.org/outcome/comp/comphome.asp. Accessed March 30, 2004.

9. Hammons T, Piland NF, Small SD, Hatlie MJ, Burstin HR. Ambulatory patient safety. What we know and need to know. J Ambul Care Manage. 2003;26:63–82. doi: 10.1097/00004479-200301000-00007.

10. Gurwitz JH, Field TS, Harrold LR, et al. Incidence and preventability of adverse drug events among older persons in the ambulatory setting. JAMA. 2003;289:1107–16. doi: 10.1001/jama.289.9.1107.

11. Gandhi TK, Burstin HR, Cook EF, et al. Drug complications in outpatients. J Gen Intern Med. 2000;15:149–54. doi: 10.1046/j.1525-1497.2000.04199.x.

12. Dovey SM, Meyers DS, Phillips RL, et al. A preliminary taxonomy of medical errors in family practice. Qual Saf Health Care. 2002;11:233–8. doi: 10.1136/qhc.11.3.233.

13. Gandhi T, Weingart S, Borus J, et al. Adverse drug events in ambulatory care. N Engl J Med. 2003;348:1556–64. doi: 10.1056/NEJMsa020703.

14. Forster A, Murff H, Peterson J, Gandhi T, Bates D. The incidence and severity of adverse events affecting patients after discharge from the hospital. Ann Intern Med. 2003;138:161–7. doi: 10.7326/0003-4819-138-3-200302040-00007.

15. Virginia State Code §§ 8.01-581.16 and 8.01-581.17.

16. Battles JB, Shea CE. A system of analyzing medical errors to improve GME curricula and programs. Acad Med. 2001;76:125–33. doi: 10.1097/00001888-200102000-00008.

17. Elder NC, Dovey SM. Classification of medical errors and preventable adverse events in primary care: a synthesis of the literature. J Fam Pract. 2002;51:927–32.

18. Patient Safety and Graduate Medical Education: A Report and Annotated Bibliography by the Joint Committee of the Group on Resident Affairs and Organization of Resident Representatives of the AAMC. February 2003:1–22.

19. Studdert D, Mello M, Brennan T. Medical malpractice. N Engl J Med. 2004;350:283–91. doi: 10.1056/NEJMhpr035470.

20. Hayward RA, Hofer TP. Estimating hospital deaths due to medical errors: preventability is in the eyes of the reviewer. JAMA. 2001;286:415–20. doi: 10.1001/jama.286.4.415.

21. Leape LL, Bates DW, Cullen DJ. Systems analyses of adverse drug events in hospitalized patients. JAMA. 1995;274:35–43.

22. Bates DW, Teich JM, Lee J, et al. The impact of computerized physician order entry on medication error prevention. J Am Med Inform Assoc. 1999;6:313–21. doi: 10.1136/jamia.1999.00660313.

23. Bates DW, Leape LL, Cullen DJ. Effect of computerized physician order entry and a team intervention on prevention of serious medication errors. JAMA. 1998;280:1311–6. doi: 10.1001/jama.280.15.1311.

24. Teich JM, Merchia PR, Schmiz JL, Kuperman GJ, Spurr CD, Bates DW. Effects of computerized physician order entry on prescribing practices. Arch Intern Med. 2000;160:2741–7. doi: 10.1001/archinte.160.18.2741.

25. Chertow GM, Lee J, Kuperman GJ, et al. Guided medication dosing for inpatients with renal insufficiency. JAMA. 2001;286:2839–44. doi: 10.1001/jama.286.22.2839.

26. Honigman B, Lee J, Rothschild J, et al. Using computerized data to identify adverse drug events in outpatients. J Am Med Inform Assoc. 2001;8:254–66. doi: 10.1136/jamia.2001.0080254.

27. Classen DC, Pestotnik SL, Evans RS, Burke JP. Computerized surveillance of adverse drug events in hospitalized patients. JAMA. 1991;266:2842–51. (Erratum: JAMA. 1992;267:1922.)

28. Bates D, Gawande AA. Patient safety: improving safety with information technology. N Engl J Med. 2003;348:2526–34. doi: 10.1056/NEJMsa020847.

29. Berwick D. Errors today and errors tomorrow. N Engl J Med. 2003;348:2570–2. doi: 10.1056/NEJMe030044.