Abstract

BACKGROUND

It has been suggested that inexperience of new housestaff early in an academic year may worsen patient outcomes. Yet, few studies have evaluated the “July Phenomenon,” and no studies have investigated its effect in intensive care patients, a group that may be particularly susceptible to deficiencies in management stemming from housestaff inexperience.

OBJECTIVE

Compare hospital mortality and length of stay (LOS) in intensive care unit (ICU) admissions from July to September to admissions during other months, and compare that relationship in teaching and nonteaching hospitals, and in surgical and nonsurgical patients.

DESIGN, SETTING, AND PATIENTS

Retrospective cohort analysis of 156,136 consecutive eligible patients admitted to 38 ICUs in 28 hospitals in Northeast Ohio from 1991 to 1997.

RESULTS

Adjusting for admission severity of illness using the APACHE III methodology, the odds of death were similar for admissions from July through September, relative to the mean for all months, in major (odds ratio [OR], 0.96; 95% confidence interval [95% CI], 0.91 to 1.02; P = .18), minor (OR, 1.02; 95% CI, 0.93 to 1.10; P = .66), and nonteaching hospitals (OR, 0.96; 95% CI, 0.91 to 1.01; P = .09). The adjusted difference in ICU LOS was similar for admissions from July through September in major (0.3%; 95% CI, −0.7% to 1.2%; P = .61) and minor (0.2%; 95% CI, −0.9% to 1.4%; P = .69) teaching hospitals, but was somewhat shorter in nonteaching hospitals (−0.8%; 95% CI, −1.4% to −0.1%; P = .03). Results were similar when individual months and academic years were examined separately, and in stratified analyses of surgical and nonsurgical patients.

CONCLUSIONS

We found no evidence to support the existence of a July phenomenon in ICU patients. Future studies should examine organizational factors that allow hospitals and residency programs to compensate for inexperience of new housestaff early in the academic year.

Keywords: hospitals, teaching; outcome assessment (health care); intensive care units; quality of health care; severity of illness

A growing body of literature has demonstrated that hospitals or physicians with higher volumes achieve better outcomes for patients undergoing surgical procedures or hospitalized for some medical conditions.1–5 Further studies have demonstrated that physician experience with such procedures as laparoscopic cholecystectomy, total hip arthroplasty, colonoscopy, and cardiovascular interventions is an important determinant of outcomes.6–11 Given such findings, it is reasonable to be concerned about the potential impact of the relative inexperience of trainees in teaching hospitals early in the academic year.

Each July, teaching hospitals experience an influx of new physicians recently graduated from medical schools, and they assign new positions of responsibility to senior residents and fellows. Medical education is a fundamental component of the mission of teaching hospitals, and in these centers, interns, residents, and fellows directly provide much of the care delivered to patients. This dynamic of care delivery by less experienced physicians has led to concern about the quality of care delivered early in the academic year, known colloquially as the July Phenomenon.12

While some prior studies suggest that the costs of care in teaching hospitals are higher early in the academic year,13–15 a few others have found no differences in quality of care.14–17 However, these earlier studies were based on administrative data, which makes it difficult to adjust for potential differences in case-mix and severity of illness. Moreover, no prior studies have evaluated critical care patients. Because of their higher acuity, such patients may be particularly susceptible to initial errors in management that may stem from physician inexperience and may represent an ideal group to detect the presence of a July Phenomenon.

To address these issues further, we studied consecutive admissions to intensive care units of 5 major teaching hospitals in a large metropolitan region. We compared 2 widely used outcome measures, in-hospital mortality and length of stay (LOS), in patients admitted from July through September and during later months of the academic year. Data abstracted from medical records were included in risk adjustment models based on the APACHE III methodology,18 a validated model used to adjust for differences in severity of illness in critical care patients. We performed similar analyses in 23 minor teaching and nonteaching facilities to determine whether relationships between month of admission and outcomes were similar in hospitals that should not be as susceptible to a July Phenomenon.

METHODS

Study Design

The study was designed as a retrospective cohort analysis of data previously collected for the Cleveland Health Quality Choice Program, a regionally initiated effort to benchmark and profile hospital performance.19,20

Patients

The study sample included consecutive eligible patients admitted to 38 intensive care units (ICUs) in 28 hospitals in Northeast Ohio from March 1, 1991 to March 31, 1997. Of the participating ICUs, 19 were mixed medical and surgical units, 8 were medical only, 8 were surgical only, and 3 were neurological and/or neurosurgical. All patients admitted to the study ICUs were eligible for data collection with the exception of: 1) patients less than 16 years old; 2) patients with burn injuries; 3) patients admitted to an ICU solely for hemodialysis or peritoneal dialysis; 4) patients who died within 1 hour of admission to the ICU or within the first 4 hours after ICU admission in cardiopulmonary arrest; and 5) patients admitted to the ICU after undergoing coronary artery bypass, cardiac valvular, or heart transplant surgery. Eligibility criteria were developed prior to data collection by an advisory committee of ICU physicians from participating hospitals and included several criteria used in the development of the APACHE III methodology.20,21

A total of 205,835 admissions met eligibility criteria. Of this total, we excluded 11,824 (5.7%) admissions that represented readmissions to the ICU during a single hospitalization, 14,691 (7.3%) admissions that resulted in a discharge to another acute care hospital for further inpatient care, and 753 (0.4%) admissions with missing data (severity of illness, admission or discharge date, admission diagnosis, or location prior to admission). Also excluded were 22,431 (10.9%) admissions with diagnoses that would be typically managed in a coronary care unit (unstable angina, cardiac arrhythmia, acute myocardial infarction, and cardiac surgery), as such units were excluded from data collection per protocols defined by Cleveland Health Quality Choice.20,21 These exclusions left a final cohort of 156,136 admissions.
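The cohort arithmetic above can be checked with a short sketch. The counts are taken from the text; the dictionary labels are illustrative shorthand and not terms used by the study.

```python
# Counts come from the paragraph above; the labels are illustrative only.
eligible = 205_835
exclusions = {
    "ICU readmission during the same hospitalization": 11_824,
    "discharge to another acute care hospital": 14_691,
    "missing data": 753,
    "coronary care unit diagnoses": 22_431,
}

total_excluded = sum(exclusions.values())
final_cohort = eligible - total_excluded

print(total_excluded, final_cohort)  # 49699 156136
```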

Of the 28 hospitals, 5 were classified as major teaching hospitals on the basis of membership in the Council of Teaching Hospitals (COTH) of the Association of American Medical Colleges during the period of data collection. Six hospitals had 1 or more residency training programs, but were not members of COTH and were classified as minor teaching hospitals. The remaining 17 hospitals were classified as nonteaching.

Data

Data were abstracted from patients' medical records by trained personnel using standard forms and data collection software provided by APACHE Medical Systems, Inc. (McLean, Va). Data abstracted included age, gender, presence of 7 specific comorbid conditions (e.g., AIDS, hepatic failure, lymphoma), primary ICU admitting diagnosis, location prior to admission (i.e., admission source), dates of ICU and hospital admission and discharge, vital status at time of ICU and hospital discharge (i.e., alive or dead), destination after hospital discharge (e.g., home, nursing facility, other acute care hospital), hospital and ICU LOS, and variables necessary to determine an admission APACHE III acute physiology score.18

The APACHE III acute physiology score is based on the most abnormal value during the first 24 hours of ICU admission for 17 specific physiologic variables (e.g., mean arterial blood pressure, serum sodium and blood urea nitrogen, arterial pH, abbreviated Glasgow Coma Score). Physiologic variables with missing data were assumed to have normal values, consistent with earlier applications of APACHE III,18,21 although a minimum of 9 physiologic measurements were required for inclusion in the study. APACHE III acute physiology scores have a possible range of 0 to 252 and were determined using previously validated weights for each of the 17 variables.18 ICU admission diagnosis was determined using a previously developed taxonomy18,21 that included 47 nonoperative and 31 postoperative categories. Admission source was based on 8 mutually exclusive categories (e.g., emergency room, other acute care hospital, other hospital ward).20,21

Explicit steps were taken to ensure the reliability and validity of the study data. These included the use and development of a data dictionary, formal semiannual training sessions sponsored by Cleveland Health Quality Choice for data abstractors, manual and electronic audits to identify outlying or discrepant data, and independent re-abstraction of data for a randomly selected number of patients from each hospital to determine inter-rater reliability.19,20

Analysis

Demographic and clinical characteristics of patients admitted during different months and quarters of the academic year were compared using analysis of variance and the χ2 statistic. Independent relationships between month of admission and in-hospital mortality were determined using multiple logistic regression analysis. These analyses were adjusted for age, gender, APACHE III Acute Physiology Score, admission source and admission diagnosis, and day of the week of admission. In the logistic regression models, age was expressed as 13 indicator variables reflecting 5-year ranges (e.g., <35, 35–39, 40–44), while admission source, diagnosis, and individual ICUs were represented as n-1 indicator variables, where n is equal to the number of categories for these elements. In addition, because the relationship between APACHE III Acute Physiology Scores and in-hospital mortality was not linear, physiology scores were represented in the models as both a continuous variable and a series of 22 indicator variables for specific ranges of scores (e.g., 20–24, 25–29, and 30–34). The addition of the physiology score indicator variables better fit the curvilinear relationship and improved model calibration, relative to models that included only the continuous variable. Separate analyses were performed for patients admitted to major teaching hospitals, minor teaching hospitals, and nonteaching hospitals.
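As a rough illustration of how a score can enter a model both as a continuous term and as range indicators, the sketch below builds one patient's feature row. The function name and the cut points in `HYPOTHETICAL_BOUNDS` are assumptions made for illustration; the text gives only a few example ranges, not the full set of 22.

```python
def score_features(score, lower_bounds):
    """Return [continuous score] followed by (n - 1) range indicators.

    lower_bounds holds the ascending lower limits of n score ranges.
    The first range acts as the reference category and gets no indicator.
    """
    band = 0
    for i, bound in enumerate(lower_bounds):
        if score >= bound:
            band = i  # keep the highest range whose lower limit is met
    indicators = [1 if band == i else 0 for i in range(1, len(lower_bounds))]
    return [score] + indicators


# Hypothetical cut points: <20 (reference), 20-24, 25-29, 30-34, >=35.
HYPOTHETICAL_BOUNDS = [0, 20, 25, 30, 35]

print(score_features(27, HYPOTHETICAL_BOUNDS))  # [27, 0, 1, 0, 0]
```

Keeping the continuous term alongside the indicators lets the indicators absorb departures from linearity within each range, which is one plausible reading of why calibration improved.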

Discrimination of the multivariable model in the 3 groups of hospitals was determined using the c statistic, which reflects the proportion of time the risk of death was higher in patients who died than in patients who were discharged alive. The c statistic is numerically equivalent to the area under the receiver operating characteristic curve.22,23 Calibration of the multivariable model was assessed using the Hosmer-Lemeshow statistic, which compares observed and predicted mortality rates in deciles of increasing risk.22
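The two measures described above can be sketched in a few lines. This is a minimal illustration and not the SAS routines used in the study: the function names and toy data are assumptions, ties in predicted risk are counted as half-concordant (a common convention), and the calibration statistic uses the usual grouped form, the sum over groups of (observed − expected)² / (n × mean risk × (1 − mean risk)).

```python
def c_statistic(risks, died):
    """Proportion of (died, survived) pairs in which the patient who died
    had the higher predicted risk; ties count as one half."""
    dead = [r for r, d in zip(risks, died) if d]
    alive = [r for r, d in zip(risks, died) if not d]
    if not dead or not alive:
        raise ValueError("need at least one death and one survivor")
    concordant = 0.0
    for r_dead in dead:
        for r_alive in alive:
            if r_dead > r_alive:
                concordant += 1.0
            elif r_dead == r_alive:
                concordant += 0.5
    return concordant / (len(dead) * len(alive))


def hosmer_lemeshow(risks, died, groups=10):
    """Chi-square-type statistic comparing observed and expected deaths
    within groups of increasing predicted risk (deciles by default)."""
    pairs = sorted(zip(risks, died))
    n = len(pairs)
    stat = 0.0
    for g in range(groups):
        chunk = pairs[g * n // groups:(g + 1) * n // groups]
        if not chunk:
            continue
        size = len(chunk)
        expected = sum(r for r, _ in chunk)
        observed = sum(d for _, d in chunk)
        mean_risk = expected / size
        variance = size * mean_risk * (1.0 - mean_risk)
        if variance > 0:  # skip groups whose predicted risk is exactly 0 or 1
            stat += (observed - expected) ** 2 / variance
    return stat


# Toy data, purely hypothetical.
risks = [0.1, 0.2, 0.3, 0.8, 0.9]
died = [0, 0, 1, 0, 1]
print(round(c_statistic(risks, died), 3))  # 0.833
```

With deciles, the statistic is conventionally referred to a χ2 distribution with 8 degrees of freedom, which matches the degrees of freedom reported in the Results.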

The effect of month of admission on hospital mortality was examined by including variables for either the quarter of the academic year during which the admission occurred (e.g., Quarter 1 = admissions from July through September) or the individual month. We examined quarter of the academic year, in addition to month, because inexperience is not limited to the month of July. Analyses examining quarter of admission also provided more power to detect statistically significant differences in mortality. Additional stratified analyses examined each of the 5 academic years for which we had complete data separately, surgical and nonsurgical patients separately, and individual hospitals and ICUs separately. Further analyses were conducted that were limited to patients who died within 1 and 3 days of ICU admission, given that patients who die soon after ICU admission may be particularly susceptible to housestaff inexperience and delays in diagnosis and treatment. For the same reason, a separate analysis of patients transferred to the ICU from another hospital ward was conducted.

All analyses used the technique of effect coding, which provides parameter estimates for each quarter or month of the academic year, relative to the overall mean for the entire year, rather than to an arbitrary reference month or quarter, as is done with indicator variables.24
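A small sketch may make the contrast with indicator coding concrete. Under effect coding, each of the first k − 1 levels gets its own column and the last level is coded −1 in every column, so each coefficient is a deviation from the mean across levels. The function names and the quarterly values below are hypothetical, and the example assumes a model containing only the quarter term, in which the coefficients equal each level's mean minus the unweighted mean of the level means.

```python
def effect_code(level, levels):
    """Return the k - 1 effect-coded columns for one observation."""
    k = len(levels)
    idx = levels.index(level)
    if idx == k - 1:
        return [-1] * (k - 1)  # last level: -1 in every column
    return [1 if j == idx else 0 for j in range(k - 1)]


def effect_estimates(level_means):
    """Intercept and per-level deviations for a model with one factor."""
    grand = sum(level_means.values()) / len(level_means)
    return grand, {lvl: m - grand for lvl, m in level_means.items()}


quarters = ["Q1", "Q2", "Q3", "Q4"]
means = {"Q1": 1.0, "Q2": 2.0, "Q3": 3.0, "Q4": 6.0}  # hypothetical values

grand, effects = effect_estimates(means)
coefs = [effects[q] for q in quarters[:-1]]  # Q4 has no column of its own

for q in quarters:
    row = effect_code(q, quarters)
    fitted = grand + sum(x * b for x, b in zip(row, coefs))
    print(q, row, fitted)
```

The deviations sum to zero, so the estimate for the last level is the negative of the sum of the others, and no quarter has to serve as an arbitrary reference.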

Finally, to examine the association between month of admission and ICU and hospital LOS, we conducted linear regression analyses adjusting for the same variables as in the logistic regression analyses. Because of the skewed distribution, these analyses used the natural logarithm of the LOS as the dependent variable. Due to differences in the relationship between severity of illness and LOS in patients who died and patients discharged alive, analyses were conducted only in patients discharged alive. The anti-logs of the coefficients associated with the months of admission were used to estimate the percent differences in LOS, relative to the overall LOS for the entire year. All analyses were conducted using SAS for Windows, Version 8.0 (SAS Institute Inc., Cary, NC).
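The anti-log step can be illustrated briefly. When the dependent variable is ln(LOS), a coefficient β corresponds to a multiplicative change of exp(β), so the percent difference is (exp(β) − 1) × 100. The function name and the coefficient value below are assumptions chosen only to show the conversion.

```python
import math


def percent_difference(beta):
    """Convert a coefficient from a log-LOS model to a percent difference."""
    return (math.exp(beta) - 1.0) * 100.0


# Hypothetical coefficient, chosen so that the result is 0.3%.
beta_q1 = math.log(1.003)
print(round(percent_difference(beta_q1), 1))  # 0.3
```

The same transformation applied to the lower and upper confidence limits of β gives the confidence interval on the percent scale.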

RESULTS

Major Teaching Hospitals

Admissions from July through September comprised 25.3% (n = 12,365) of total admissions to major teaching hospitals during the study period. Although statistically significant differences in age, admission source, and in the prevalence of some comorbid conditions according to quarter of admission were observed (Table 1), the magnitude of the differences was generally small. Moreover, mean APACHE III Acute Physiology Scores were similar (P = .26).

Table 1.

Characteristics of Patients According to Months of Admission to Major Teaching Hospitals

| | Jul–Sep (N = 12,365) | Oct–Dec (N = 12,029) | Jan–Mar (N = 12,088) | Apr–Jun (N = 12,371) | P Value |
|---|---|---|---|---|---|
| Mean age, y ± SD | 57 ± 19 | 58 ± 18 | 58 ± 18 | 58 ± 18 | <.001 |
| Male, % | 54 | 54 | 52 | 53 | <.01 |
| Mean APACHE III score ± SD | 43 ± 27 | 43 ± 26 | 44 ± 27 | 43 ± 27 | .26 |
| Admission type, % | | | | | |
| Nonoperative | 58 | 58 | 58 | 57 | .55 |
| Postoperative | 42 | 42 | 42 | 43 | |
| Comorbid conditions, % | | | | | |
| AIDS | 1.0 | 1.0 | 1.3 | 0.9 | .17 |
| Cirrhosis | 11.7 | 10.4 | 13.5 | 11.7 | <.001 |
| Renal dialysis | 5.3 | 5.3 | 5.2 | 4.9 | .54 |
| Hepatic failure | 4.0 | 3.6 | 4.1 | 3.9 | .69 |
| Lymphoma | 3.1 | 2.9 | 3.1 | 2.6 | .55 |
| Leukemia | 3.4 | 3.0 | 3.4 | 3.7 | .37 |
| Solid tumor | 14.4 | 13.5 | 14.0 | 14.2 | .66 |
| Immunosuppression | 21.7 | 21.0 | 24.0 | 22.5 | .01 |
| 1 or more conditions | 18.3 | 19.4 | 19.2 | 18.4 | .05 |
| 2 or more conditions | 4.2 | 4.5 | 4.1 | 3.9 | .19 |
| Unadjusted mortality, % | 13.9 | 14.7 | 14.8 | 14.2 | .15 |
| Mean ICU length of stay, d ± SD | 4 ± 6 | 4 ± 6 | 4 ± 5 | 4 ± 5 | .68 |
| Mean hospital length of stay, d ± SD | 14 ± 16 | 14 ± 15 | 14 ± 15 | 14 ± 14 | .06 |
| Admission source, % | | | | | |
| Operating room | 26.0 | 26.3 | 26.2 | 27.1 | .20 |
| Recovery room | 17.5 | 17.0 | 16.8 | 16.8 | .40 |
| Emergency room | 29.7 | 29.2 | 29.3 | 27.7 | <.01 |
| Hospital floor | 5.3 | 5.3 | 5.2 | 4.9 | .53 |
| Other ICU in hospital | 2.9 | 2.6 | 3.0 | 3.1 | .06 |
| Other hospital | 5.3 | 5.5 | 5.2 | 5.5 | .54 |
| Other facility | 3.6 | 3.8 | 3.8 | 3.9 | .71 |
| Non-ICU holding area | 0.0 | 0.1 | 0.1 | 0.1 | <.01 |

ICU, intensive care unit.

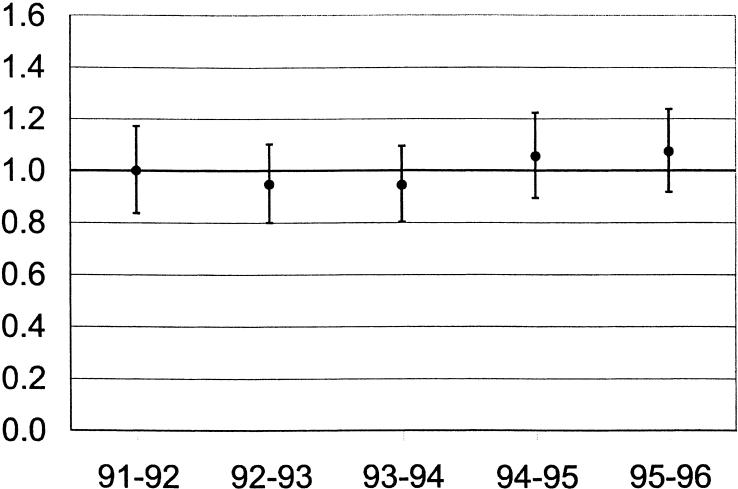

Unadjusted hospital mortality was similar in admissions during different quarters of the academic year (13.9%, 14.7%, 14.8%, and 14.2% in July–September, October–December, January–March, and April–June, respectively; P = .15). Using logistic regression to control for gender, age, comorbidity, ICU admission source and diagnosis, APACHE III Acute Physiology Scores, and day of the week of admission, the odds of death in patients admitted during Quarter 1 (July–September) of the academic year (odds ratio [OR], 0.96; 95% confidence interval [95% CI], 0.91 to 1.02; P = .21) were similar to the odds of death in Quarters 2, 3, and 4 (Table 2). These results were consistent in separate analyses of each of the 5 academic years for which we had complete data (Fig. 1). The adjusted odds of death for first-quarter admissions ranged from 0.94 (P = .43) in 1993–94 to 1.07 (P = .31) in 1995–96. Results also were consistent within individual hospitals and individual ICUs; for example, the odds of death in the 5 major teaching hospitals for patients admitted from July through September were 0.91, 0.92, 1.00, 1.04, and 1.21 (P = .15, .87, .74, .94, and .49, respectively).

Table 2.

Adjusted Odds of Death by Quarter of Academic Year in Major, Minor, and Nonteaching Hospitals: OR (95% CI, P Value)

| | Quarter 1 (Jul–Sep) | Quarter 2 (Oct–Dec) | Quarter 3 (Jan–Mar) | Quarter 4 (Apr–Jun) |
|---|---|---|---|---|
| Major teaching | 0.96 (0.91 to 1.02, .18) | 1.01 (0.95 to 1.06, .85) | 1.05 (0.99 to 1.11, .09) | 0.98 (0.93 to 1.04, .59) |
| Minor teaching | 1.07 (0.98 to 1.16, .11) | 0.90 (0.82 to 0.97, <.01) | 1.07 (0.98 to 1.15, .10) | 0.98 (0.90 to 1.06, .61) |
| Nonteaching | 0.96 (0.91 to 1.01, .11) | 1.06 (1.01 to 1.11, .02) | 1.00 (0.95 to 1.05, .96) | 0.98 (0.93 to 1.03, .47) |

FIGURE 1.

Odds of death and associated 95% confidence intervals in major teaching hospitals for first-quarter admissions by academic year.

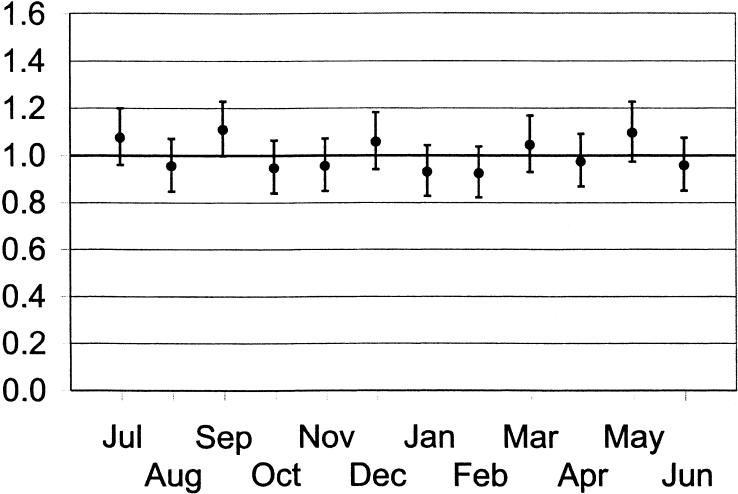

There was also no difference in the odds of death when months of admission to major teaching hospital ICUs were evaluated separately (Fig. 2). All odds ratios were relatively similar, ranging from 0.92 (P = .16) in February to 1.10 (P = .10) in September, and no consistent trends in the odds ratio were observed (e.g., declines over the course of the academic year).

FIGURE 2.

Odds of death and associated 95% confidence intervals in major teaching hospitals during individual months of the academic year.

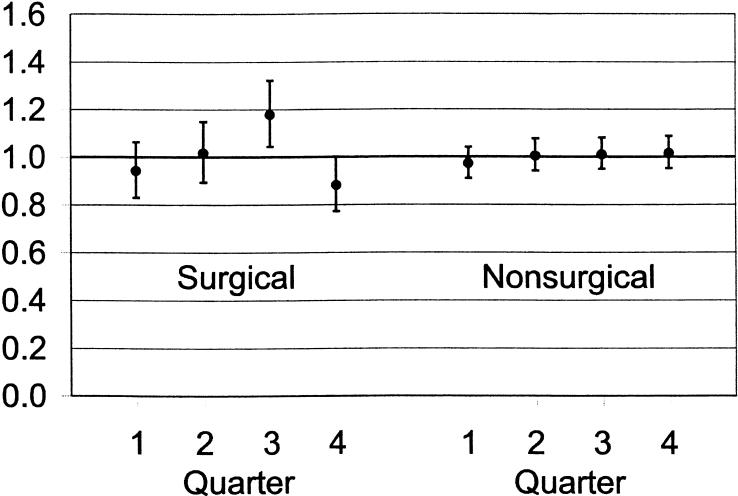

Findings were consistent in further analyses that examined surgical and nonsurgical patients separately (Fig. 3), although these analyses did find an unexpected, significantly higher odds of death in surgical patients during Quarter 3. Findings were also similar in an analysis limited to patients admitted to the ICU from hospital wards (OR for first-quarter admissions, 0.99; 95% CI, 0.88 to 1.10; P = .99) and in analyses limited to patients who died within 1 day of ICU admission (OR for first-quarter admissions, 1.06; 95% CI, 0.98 to 1.15; P = .29) or within 3 days of admission (OR for first-quarter admissions, 0.97; 95% CI, 0.86 to 1.02; P = .43).

FIGURE 3.

Odds of death and associated 95% confidence intervals in major teaching hospitals during Quarters 1 to 4 of the academic year for surgical and nonsurgical patients.

The c statistic for the mortality risk adjustment model (in all patients) was 0.901, indicating excellent model discrimination. Although the Hosmer-Lemeshow statistic was significant (χ2 [8 df], 22.65; P < .01), there was no systematic variation across severity deciles. Moreover, the magnitude of the statistic was relatively small for the large study sample.22,23

Unadjusted mean ICU and hospital LOS were 4.3 and 14.3 days, respectively, and were similar (P ≥ .05) across individual quarters and months. In linear regression analyses, adjusting for the same variables as in the logistic regression analyses, ICU length of stay was 0.3% higher (i.e., an additional 0.3 hours) in Quarter 1 (Table 3). This difference was not significant. Differences for Quarters 2, 3, and 4 were also not significant. Generally, similar results were obtained for hospital LOS.
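The conversion from a percent difference to hours can be reproduced as follows. This sketch assumes the 0.3 hours figure is based on the unadjusted mean ICU LOS of 4.3 days quoted above; the text does not state the exact baseline used.

```python
mean_icu_los_days = 4.3  # unadjusted mean ICU LOS from the text
pct_difference = 0.3     # adjusted percent difference for Quarter 1

# Percent of the mean stay, expressed in hours (assumed baseline, see above).
extra_hours = mean_icu_los_days * 24 * pct_difference / 100
print(round(extra_hours, 1))  # 0.3
```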

Table 3.

Adjusted Percent Difference in Length of Stay (LOS) by Quarter of Academic Year, Relative to the Overall Length of Stay for the Entire Year, in Major, Minor, and Nonteaching Hospitals: % Difference (95% CI)

| | Quarter 1 (Jul–Sep) | Quarter 2 (Oct–Dec) | Quarter 3 (Jan–Mar) | Quarter 4 (Apr–Jun) |
|---|---|---|---|---|
| ICU LOS | | | | |
| Major teaching | 0.3 (−0.7 to 1.2) | −0.3 (−1.3 to 0.7) | −0.4 (−1.4 to 0.6) | 0.5 (−0.5 to 1.5) |
| Minor teaching | 0.2 (−0.9 to 1.4) | 0.5 (−0.7 to 1.6) | −1.0 (−2.2 to 0.1) | 0.3 (−0.8 to 1.4) |
| Nonteaching | −0.8* (−1.4 to −0.1) | −0.5 (−1.2 to 0.1) | 0.5 (−0.1 to 1.2) | 0.7* (0.07 to 1.4) |
| Hospital LOS | | | | |
| Major teaching | −0.8 (−2.7 to 1.0) | −2.8† (−4.6 to −1.1) | −1.0 (−2.8 to 0.8) | 4.9‡ (3.0 to 6.8) |
| Minor teaching | 0.1 (−2.1 to 2.3) | 1.0 (−1.2 to 3.2) | 0.8 (−1.4 to 3.0) | −1.9 (−4.0 to 0.2) |
| Nonteaching | −1.1 (−2.3 to 0.2) | −0.9 (−2.2 to 0.4) | 1.3* (0.0 to 2.5) | 0.7 (−0.6 to 2.0) |

*P < .05.

†P < .01.

‡P < .001.

Minor Teaching and Nonteaching Hospitals

Unadjusted hospital mortality was similar in admissions during different quarters of the academic year in minor teaching hospitals (11.0%, 11.3%, 12.2%, and 11.5% in July–September, October–December, January–March, and April–June, respectively; P = .13). However, mortality was somewhat lower in first-quarter admissions in nonteaching hospitals (10.4%, 11.2%, 11.7%, and 10.9%, respectively; P = .001). Adjusting for gender, age, comorbidity, ICU admission source and diagnosis, APACHE III Acute Physiology Scores, and day of the week of admission, the odds of death in patients admitted during Quarter 1 of the academic year were similar in minor teaching (OR, 1.07; 95% CI, 0.98 to 1.16; P = .11) and nonteaching hospitals (OR, 0.96; 95% CI, 0.91 to 1.01; P = .11; Table 2).

Unadjusted mean ICU length of stay in minor teaching and nonteaching hospitals was 4.0 and 3.8 days, respectively, while mean hospital LOS was 11.5 and 10.7 days, respectively. Similar to results in major teaching hospitals, adjusted ICU length of stay (Table 3) and hospital LOS were similar in first-quarter admissions in minor teaching hospitals, although ICU LOS was 1% shorter in first-quarter admissions in nonteaching hospitals.

C statistics for the mortality risk adjustment models in minor and nonteaching hospitals were 0.912 and 0.905, respectively, while Hosmer-Lemeshow statistics were 20.72 and 20.92, respectively (P < .01 for each). These values were similar to values for major teaching hospitals.

DISCUSSION

In analyses of over 48,000 patients admitted to ICUs in 5 major teaching hospitals, using a validated method of adjusting for admission severity of illness, several important findings emerge. First, in-hospital mortality and LOS were similar in patients admitted to intensive care units from July through September and during later months of the academic year. Moreover, results were consistent when July, August, and September were analyzed separately, and there was no discernible pattern of variation when examining outcomes for individual months over the entire year. Furthermore, we were unable to detect differences when individual academic years, surgical and nonsurgical patients, and individual hospitals and ICUs were examined separately. These results were all similar in analyses of roughly 108,000 patients admitted to minor teaching and nonteaching hospitals.

While the existence of a so-called July Phenomenon in teaching centers persists in popular perception, few prior studies have examined differences in mortality or other indicators of quality. Buchwald et al. sampled 2,703 medical and surgical patients admitted from 1982 to 1984 to a single hospital in Boston, and found no significant difference for patients admitted in July or August compared to those admitted in April and May.13 Rich et al. also found no difference in mortality in 21,679 medical patients admitted from 1980 to 1986 to a single hospital in St. Paul,14 and in a follow-up examination of 240,467 medical and surgical patients admitted to 3 teaching hospitals in the Minneapolis–St. Paul region between 1983 and 1987.15

These same studies found conflicting results in LOS outcomes. In the study by Buchwald et al., there was no significant difference in LOS.13 In contrast, the first study by Rich et al. found decreased LOS and decreased hospital charges as housestaff experience increased.14 However, the multicenter follow-up found no difference in LOS on the medical services, and that LOS actually increased over the academic year on the surgical services.15

The current findings add to these earlier studies in important ways. First, the study involved a more contemporary cohort of patients and may be more reflective of recent patterns of hospital utilization. Second, the current study adjusted for admission severity of illness using more sophisticated risk-adjustment models that were developed from clinical data abstracted from patients' medical records. Moreover, the current study was the first to analyze a cohort of critical care patients who may be particularly susceptible to harm from errors in judgment. The current study also included a large cohort of patients in nonteaching hospitals for comparison. Finally, the study employed a large sample size and was powered to detect relatively small differences in severity-adjusted mortality. Examination of the confidence intervals around the odds ratios for major teaching hospitals (Table 2, Fig. 1) indicates that our analysis would have been able to detect a statistically significant difference if the odds of death were roughly 1.20 for July or roughly 1.10 for the first quarter of the academic year.

When interpreting our findings, it is important to consider several limitations. First, although the sample included several teaching and nonteaching hospitals, our study was limited to evaluation of patients admitted in a single geographic region. The generalizability of our findings to other regions should be established. Moreover, the generalizability of our findings to non-ICU settings of care is uncertain. Although we chose to study critical care patients because of their higher acuity and the likelihood that they would be susceptible to initial errors in judgment, it is possible that ICU residents may have a greater level of supervision than their non-ICU counterparts, thus masking any evidence of the July Phenomenon.

Second, our findings are based on data from the early to mid 1990s and may not reflect the impact of recent changes in billing regulations in teaching hospitals. However, one would expect that these recent changes would increase oversight of trainees by attending physicians and further diminish the July Phenomenon.

Third, while our risk-adjustment model exhibited excellent discrimination, it is possible that variables not assessed by APACHE III may have contributed to variations in mortality. Such factors as functional status, mental health, social support, and health insurance status, may confound the interpretation of our data with respect to the outcomes observed.25 Moreover, no information was collected regarding the goals of ICU treatment, patient and/or family preferences for specific ICU treatments, or resuscitation status.

In addition, our analysis was unable to exclude an effect of seasonal variation in hospital mortality that may mask or enhance variation due to housestaff inexperience. For example, an underlying increase in hospital mortality during winter months could mask the appearance of a July Phenomenon and give the impression of a constant risk across the calendar year. However, we did not see evidence of seasonal variation in mortality in nonteaching hospitals.

Fourth, because risk-adjustment models incorporated data from up to 24 hours after admission, it is possible that adverse effects during the first 24 hours of admission were included in the severity assessment. However, prior studies that compared severity-of-illness assessments at admission and at 24 hours have found little difference between the 2 timepoints.18,26 Furthermore, we could identify no significant variation in APACHE III Acute Physiology Scores over the academic year, indicating no evidence for a July Phenomenon in care delivered during the first 24 hours of ICU admission.

Fifth, protocols for collecting study data excluded patients who died within 1 hour of admission to the ICU. Such patients may have been particularly vulnerable to delays in diagnosis and therapy. However, analyses examining mortality occurring within 1 day and 3 days of ICU admission yielded findings nearly identical to those of analyses examining all deaths.

Finally, our analysis was limited to the outcomes of in-hospital mortality and LOS. Although these 2 outcome measures are widely used indicators, quality of care encompasses multiple dimensions. Thus, the implications of our study for other aspects of the quality of care, such as the processes of care, costs, functional outcomes, patient satisfaction, and long-term mortality, are uncertain.

The current findings are in contrast with a growing body of literature that suggests that physician experience contributes to patient outcomes.1–11 This could indicate that the overall impact of trainees on patient outcomes is small. Alternatively, these results could suggest that hospitals and residency training programs compensate for housestaff inexperience early in the academic year. Compensation might include selective scheduling of more senior residents and greater oversight by attending physicians, nurses, and critical care fellows. Our data did not allow for direct analyses of these organizational factors that might protect from a July Phenomenon.

In conclusion, we found no evidence to support the existence of a July Phenomenon in this cohort of patients admitted to teaching hospital ICUs. Further research should analyze other dimensions of quality of care and examine those organizational features that may help teaching hospitals compensate for the inexperience of housestaff early in the academic year.

Acknowledgments

Dr. Rosenthal is a Senior Quality Scholar, Office of Academic Affiliation, Department of Veterans Affairs. Dr. Barry was supported, in part, by a Quality Scholar's Fellowship, Department of Veterans Affairs.

REFERENCES

- 1.Luft HS, Bunker JP, Enthoven AC. Should operations be regionalized? The empirical relation between surgical volume and mortality. N Engl J Med. 1979;301:1364–9. doi: 10.1056/NEJM197912203012503. [DOI] [PubMed] [Google Scholar]

- 2.Begg CB, Cramer LD, Hoskins WJ, Brennan MF. Impact of hospital volume on operative mortality for major cancer surgery. JAMA. 1998;280:1747–51. doi: 10.1001/jama.280.20.1747. [DOI] [PubMed] [Google Scholar]

- 3.Birkmeyer JD, Warshaw AL, Finlayson SRG, Grove MR, Tosteson ANA. Relationship between hospital volume and late survival after pancreatoduodenectomy. Surgery. 1999;126:178–83. [PubMed] [Google Scholar]

- 4.Wennberg DE, Lucas FL, Birkmeyer JD, Bredenberg CE, Fisher ES. Variation in carotid endarterectomy mortality in the Medicare population: trial hospitals, volume, and patient characteristics. JAMA. 1998;279:1278–81. doi: 10.1001/jama.279.16.1278. [DOI] [PubMed] [Google Scholar]

- 5.Hannan EL, Siu AL, Kumar D, Kilburn H, Chassin MR. The decline in coronary artery bypass graft surgery mortality in New York state: the role of surgeon volume. JAMA. 1995;273:209–13. [PubMed] [Google Scholar]

- 6.Sanchez PL, Harrell LC, Salas RE, Palacios IF. Learning curve of the Inoue technique of percutaneous mitral balloon valvuloplasty. Am J Cardiol. 2001;88:662–7. doi: 10.1016/s0002-9149(01)01810-0. [DOI] [PubMed] [Google Scholar]

- 7.Liberman L, Benton CL, Dershaw DD, Abramson AF, Latrenta LR, Morris EA. Learning curve for stereotactic breast biopsy: how many cases are enough? Am J Roentgenol. 2001;176:721–7. doi: 10.2214/ajr.176.3.1760721. [DOI] [PubMed] [Google Scholar]

- 8. Laffel GL, Barnett AI, Finklestein S, Kaye MP. The relationship between experience and outcome in heart transplantation. N Engl J Med. 1992;327:1220–5. doi: 10.1056/NEJM199210223271707.

- 9. Kitahata MM, Koepsell TD, Deyo RA, Maxwell CL, Dodge WT, Wagner EH. Physicians' experience with the acquired immunodeficiency syndrome as a factor in patients' survival. N Engl J Med. 1996;334:701–6. doi: 10.1056/NEJM199603143341106.

- 10. The Southern Surgeons Club. Moore MJ, Bennett CL. The learning curve for laparoscopic cholecystectomy. Am J Surg. 1995;170:55–9. doi: 10.1016/s0002-9610(99)80252-9.

- 11. Tassios PS, Ladas SD, Grammenos I, Demertzis K, Raptis SA. Acquisition of competence in colonoscopy: the learning curve of trainees. Endoscopy. 1999;31:702–6. doi: 10.1055/s-1999-146.

- 12. Zuger A. Essay; it's July, the greenest month in hospitals. No need to panic. New York Times. Science Desk, Section F4, July 7, 1998. Available at: www.nytimes.com. Accessibility verified May 21, 2002.

- 13. Buchwald D, Komaroff AL, Cook EF, Epstein AM. Indirect costs for Medical Education: is there a July Phenomenon? Arch Intern Med. 1989;149:765–8.

- 14. Rich EC, Gifford G, Luxenberg M, Dowd B. The relationship of house staff experience to the cost and quality of inpatient care. JAMA. 1990;263:953–8.

- 15. Rich EC, Hillson SD, Dowd B, Morris N. Specialty differences in the July Phenomenon for Twin Cities teaching hospitals. Med Care. 1993;31:73–83. doi: 10.1097/00005650-199301000-00006.

- 16. Shulkin DJ. The July Phenomenon revisited: are hospital complications associated with new house staff? Am J Med Qual. 1995;10:14–7. doi: 10.1177/0885713X9501000104.

- 17. Claridge JA, Schulman AM, Sawyer RG, Ghezel-Ayagh A, Young JS. The “July Phenomenon” and the care of the severely injured patient: fact or fiction? Surgery. 2001;130:346–53. doi: 10.1067/msy.2001.116670.

- 18. Knaus WA, Wagner DP, Draper EA, et al. The APACHE III prognostic system. Risk prediction of hospital mortality for critically ill hospitalized adults. Chest. 1991;100:1619–36. doi: 10.1378/chest.100.6.1619.

- 19. Rosenthal GE, Harper DL. Cleveland Health Quality Choice: a model for collaborative community-based outcomes assessment. Jt Comm J Qual Improv. 1994;20:425–42. doi: 10.1016/s1070-3241(16)30088-8.

- 20. Sirio CA, Angus DC, Rosenthal GE. Cleveland Health Quality Choice (CHQC)—an ongoing collaborative, community-based outcomes assessment program. New Horiz. 1994;2:321–5.

- 21. Sirio CA, Shepardson LB, Rotondi AJ, et al. Community-wide assessment of intensive care outcomes using a physiologically based prognostic measure: implications for critical care delivery from Cleveland Health Quality Choice. Chest. 1999;115:793–801. doi: 10.1378/chest.115.3.793.

- 22. Ash AS, Shwartz M. Evaluating the performance of risk-adjustment methods: dichotomous outcomes. In: Iezzoni LI, editor. Risk Adjustment for Measuring Healthcare Outcomes. Chicago, Ill: Health Administration Press; 1997. pp. 427–70.

- 23. Daley J. Validity of risk-adjustment methods. In: Iezzoni LI, editor. Risk Adjustment for Measuring Healthcare Outcomes. Chicago, Ill: Health Administration Press; 1997. pp. 239–61.

- 24. SAS/STAT Users Guide, Version 8. Vol. 1. Cary, NC: SAS Institute, Inc.; 1999. The LOGISTIC Procedure; pp. 1914–5.

- 25. Vella K, Goldfrad C, Rowan K, Bion J, Black N. Use of consensus development to establish national research priorities in critical care. BMJ. 2000;320:976–80. doi: 10.1136/bmj.320.7240.976.

- 26. Knaus WA, Draper EA, Wagner DP, Zimmerman JE. APACHE II: a severity of disease classification system. Crit Care Med. 1985;13:818–29.