Abstract

The poor translation of evidence into practice is a well-known problem. Hopes are high that information technology can help make evidence-based practice feasible for mere mortal physicians. In this paper, we draw upon the methods and perspectives of clinical practice, medical informatics, and health services research to analyze the gap between evidence and action, and to argue that computing systems for bridging this gap should incorporate both informatics and health services research expertise. We discuss 2 illustrative systems, trial banks and a web-based system to develop and disseminate evidence-based guidelines (alchemist), and conclude with a research and training agenda.

Keywords: medical informatics, evidence-based medicine, decision making, knowledge bases, guidelines

Despite the broad acceptance of evidence-based medicine as the gold standard approach to clinical practice, patients often fail to receive the care that the scientific evidence dictates. For example, randomized clinical trials (RCTs) and other studies have consistently shown since the 1980s that β-blockers given at hospital discharge from an acute myocardial infarction (MI) reduce subsequent mortality,1–3 yet in 1998, only 50% of eligible patients nationwide were prescribed β-blockers at hospital discharge.2 What is required to ensure that patients receive evidence-based care during their encounters with the health care system?

In recent years, computer-based approaches to managing the clinical literature have become widespread. With over 10,000 RCTs indexed in MEDLINE in 1999 alone, computers are no doubt a necessary, but not sufficient, technology for evidence-based practice. Broadly speaking, information technologies will be needed to 1) help the research community manage what is and is not known, 2) assist clinicians with applying the totality of the research evidence to individual patients, and 3) encourage, facilitate, and monitor practice change. Informatics systems that meet these objectives will have to be not only technically sophisticated but also grounded in an understanding of real-world clinical reasoning and practice. Furthermore, to affect point-of-care decision making, these systems must work within the complex organizational and policy environment of today's fractured health care system. Therefore, we believe that efforts to help physicians achieve evidence-based practice through technology need to draw from both medical informatics and health services research.

In this paper, we first draw upon the methods and perspectives of clinical practice, medical informatics, and health services research to analyze the critical gaps between evidence and action. We then provide 2 illustrative examples of informatics solutions being developed for bridging this gap that are grounded in health services research: trial banks to capture the design and results of RCTs for systematic reviewing and computer-based reasoning,4 and the alchemist system for creating and customizing decision-model–based guidelines.5 These examples will emphasize the joint role of health services research and medical informatics as foundational disciplines for informatics systems that will help methodologists and practitioners translate evidence into practice more effectively and efficiently. We then suggest an agenda for future methodological work in informatics and health services research.

FROM EVIDENCE TO ACTION

Four major difficulties span the gap between evidence and action: 1) obtaining evidence that is relevant to the clinical action, 2) systematically reviewing this evidence, 3) applying this summary evidence within a specific decision context at the point of care, and 4) supporting and measuring the practice change that should ensue from applying this evidence. While informatics systems can ameliorate each of these difficulties, their success at doing so has been limited to date. We discuss how strategies that combine informatics, health services research, and clinical-practice expertise promise greater success than current strategies at resolving each of these 4 problem areas.

Obtaining Relevant Evidence

Estimates are that only about 20% of clinical care is addressed by research evidence, and controversy exists as to the merits of efficacy versus effectiveness trials for rectifying this problem.6 Notwithstanding this debate, an even more fundamental challenge faces the clinical research community: we do not know what we know,7 what has been or is currently being investigated,8 or which important research questions are not being investigated at all.9 If the clinical research community as a whole is without such knowledge, how can individual physicians be expected to know the status of relevant clinical research?

The policy response to these shortcomings of clinical research is beyond the scope of this paper, but a first step toward a better managed clinical research portfolio would be to construct comprehensive databases of past and ongoing research projects that facilitate systematic review and interpretation. The www.clinicaltrials.gov trial registry and others like it10–12 are a start, but because study registries are often geared only toward study enrollment, they do not typically contain sufficient details of study design, execution, and results for analysis. Furthermore, existing study registries do not use standardized medical vocabularies and are thus difficult to cross-search comprehensively. A high priority should therefore be placed on constructing databases of clinical research that meet the performance standards of health services research (sufficient detail for systematic reviews) and medical informatics (use of standardized vocabularies).

Systematic Review of Evidence

Over a decade of work has emphasized the importance of systematically reviewing clinical research results. The methodological and logistical challenges to performing systematic reviews have been discussed elsewhere.13 From the informatics perspective, systematic reviewing consists of 4 main tasks: 1) identifying and retrieving research studies, 2) judging the internal and external validity of the studies, 3) performing a qualitative and/or quantitative synthesis as appropriate based on the heterogeneity of the studies, and 4) interpreting the totality of the evidence in its proper socioeconomic and ethical context. Most computer programs for systematic reviewing support only the quantitative synthesis task, leaving users to struggle with retrieving poorly indexed study reports14,15 that are too often incomplete16–18 and nonstandardized.19

Information technologies can better support these 4 main tasks of systematic reviewing if clinical studies are available in computer-understandable databases as well as in prose articles. Thus, high-performance clinical research databases that are built for managing the clinical research portfolio, as discussed above, could double as completely reported, standardized databases for systematic reviewing. To build such multipurpose databases, careful attention must be paid to which study attributes are captured, so as to ensure that the necessary and sufficient data are available for all intended uses. In addition, these databases should have compatible data designs so that cross-searches among them would yield valid and reliable results. For example, the RCT Bank database20 stores study inclusion and exclusion criteria separately, whereas the ClinicalTrials.gov database21 stores all criteria together as generic entrance criteria. With such incompatibility, a search for inclusion criteria across these 2 databases may yield incorrect results. Informatics techniques of knowledge modeling and interoperation are thus critical for computer-based approaches to support systematic reviewing.
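The incompatibility described above can be illustrated with a minimal Python sketch. The two record layouts are hypothetical simplifications chosen for illustration; they are not the actual RCT Bank or ClinicalTrials.gov data designs, and the trial identifiers and criteria are invented.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SplitCriteriaTrial:
    """Hypothetical record: inclusion and exclusion criteria stored separately."""
    trial_id: str
    inclusion: List[str] = field(default_factory=list)
    exclusion: List[str] = field(default_factory=list)


@dataclass
class PooledCriteriaTrial:
    """Hypothetical record: all entrance criteria stored in one generic list."""
    trial_id: str
    entrance_criteria: List[str] = field(default_factory=list)


def naive_inclusion_search(term, split_trials, pooled_trials):
    """Return IDs of trials that appear to include patients matching `term`.

    Pooled records cannot distinguish inclusion from exclusion, so any
    mention of the term is counted as a hit.
    """
    hits = [t.trial_id for t in split_trials
            if any(term in criterion for criterion in t.inclusion)]
    hits += [t.trial_id for t in pooled_trials
             if any(term in criterion for criterion in t.entrance_criteria)]
    return hits


# Both invented trials enroll acute MI patients and exclude asthmatics.
split_trials = [SplitCriteriaTrial("A-1", inclusion=["acute MI"], exclusion=["asthma"])]
pooled_trials = [PooledCriteriaTrial("B-1", entrance_criteria=["acute MI", "no asthma"])]

asthma_hits = naive_inclusion_search("asthma", split_trials, pooled_trials)
print(asthma_hits)  # trial B-1 is returned even though it excludes asthmatics
```

In this sketch the pooled record produces a false positive, which is the kind of invalid cross-search result that a shared knowledge model is meant to prevent.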

Application of Evidence in the Decision-making Context

The point of care is where the logistical barriers to practicing evidence-based medicine become overwhelming. These difficulties are likely to increase as Internet-based consumer health programs shift the point of care beyond the clinic and the hospital.22 Computing systems for supporting evidence-based decision making must mesh with complex workflow patterns while encouraging and supporting methodologically sound applications of research evidence.

At present, the majority of computer-based approaches to facilitating evidence-based practice involve the electronic dissemination of vast quantities of static and generic information to practitioners. For example, the National Guideline Clearinghouse23 includes over 1,000 guidelines, but while the website provides some support for choosing among potentially relevant guidelines, it does not help users to adapt these guidelines to particular patients or populations. Furthermore, many computer-based approaches disseminate information that is not necessarily based on rigorous evidence appraisal, synthesis, and application methodologies. For example, Kuntz and colleagues compared expert panel and decision analytic recommendations for coronary angiography after myocardial infarction, and found that some interventions that the experts judged as inappropriate had cost-effectiveness ratios comparable with many generally recommended medical interventions.24 Decision models provide a formal analytic framework for representing the evidence, outcomes, and preferences involved in a clinical decision. Basing guideline creation on such a decision analytic framework allows the performance of sensitivity analyses to identify critical variables and thus to focus refinement of the evidence. This analytic process can enrich the resulting guideline.5,25–28 In the National Guideline Clearinghouse however, only a minority of the guidelines reference decision models for their development.
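As a rough illustration of how a sensitivity analysis can identify a critical variable, the Python sketch below varies one input of a toy two-strategy decision model. The model structure, the function names, and every number are hypothetical and chosen only for illustration; none is drawn from the cited studies or intended as clinical guidance.

```python
def net_benefit_of_treating(baseline_risk, rrr=0.25, harm_risk=0.01, harm_weight=0.5):
    """Expected events averted minus weighted expected treatment harm.

    All default values are hypothetical placeholders.
    """
    return baseline_risk * rrr - harm_risk * harm_weight


def one_way_sensitivity(baseline_risks):
    """Vary baseline risk alone and record which strategy is preferred."""
    rows = []
    for risk in baseline_risks:
        preferred = "treat" if net_benefit_of_treating(risk) > 0 else "do not treat"
        rows.append((risk, preferred))
    return rows


def threshold_baseline_risk(rrr=0.25, harm_risk=0.01, harm_weight=0.5):
    """Baseline risk at which the two strategies have equal expected value."""
    return harm_risk * harm_weight / rrr


table = one_way_sensitivity([0.005, 0.01, 0.03, 0.05, 0.10])
threshold = threshold_baseline_risk()

for risk, preferred in table:
    print(f"baseline risk {risk:.3f}: {preferred}")
print(f"threshold baseline risk: {threshold:.3f}")
```

If the preferred strategy flips within the plausible range of an input, as it does here, that input is a candidate for more careful evidence gathering.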

Three contingencies are crucial for enabling a new breed of decision support systems that are more workflow-sensitive and methodologically rigorous. These contingencies have profound informatics implications. First, the best current evidence must be available to decision support systems in computer-understandable form. Second, to increase physician acceptance of decision support systems, their recommendations should be patient specific. Third, decision support systems must be designed to complement the workflow and preferences of their intended users, be they physicians, nurses, or patients.

To meet these contingencies, the following technologies are needed:

- computer-understandable clinical research databases as discussed above;
- electronic medical records (EMRs) and other clinical systems that use a rich standardized clinical vocabulary, e.g., SNOMED,29 to ensure that different systems share a common understanding of the clinical situation;
- standardized interfaces among clinical and practice management systems to facilitate communication among multiple systems;
- new and higher-performance technologies (e.g., speech recognition and wireless computers) to make it easier for physicians to enter data and to enable better workflow compatibility.

With this suite of technologies, frontline decision support systems would be better able to dynamically generate patient-specific assessments and recommendations by matching studies, systematic reviews, and guidelines with individual patient characteristics as stored in the EMR. The recommended action could then be delivered to handheld computers, which can send wireless instructions to practice management and other systems to execute the recommended action.
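The matching step can be sketched in a few lines of Python, assuming that both the guideline and the EMR express patient characteristics with the same standardized vocabulary. The concept labels below are invented placeholders rather than real SNOMED codes, and the `Recommendation` structure is a hypothetical simplification.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Recommendation:
    """Hypothetical guideline recommendation keyed to coded patient characteristics."""
    text: str
    requires: FrozenSet[str]                 # concepts that must be present
    excludes: FrozenSet[str] = frozenset()   # concepts that must be absent


def applicable(recommendations: List[Recommendation],
               patient_concepts: FrozenSet[str]) -> List[str]:
    """Return the recommendations whose conditions match the patient record."""
    return [r.text for r in recommendations
            if r.requires <= patient_concepts
            and not (r.excludes & patient_concepts)]


recommendations = [
    Recommendation("Recommendation A (all post-MI patients)",
                   frozenset({"post-mi"})),
    Recommendation("Recommendation B (post-MI patients with diabetes)",
                   frozenset({"post-mi", "diabetes"})),
    Recommendation("Recommendation C (post-MI patients without asthma)",
                   frozenset({"post-mi"}), frozenset({"asthma"})),
]

# Coded characteristics as they might be read from an EMR (placeholder labels).
patient = frozenset({"post-mi", "asthma"})
matched = applicable(recommendations, patient)
print(matched)
```

The set comparisons only work because both sides use identical concept labels, which is why a shared clinical vocabulary is listed among the needed technologies.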

Unfortunately, the needed technologies are not yet widely available. At present, commercial EMR systems tend to use only ICD-9 and CPT-4 coding30—rather than SNOMED, the most expressive and robust clinical vocabulary available31,32—in part because the benefits of SNOMED over ICD-9 are not widely appreciated. More standard interfaces such as HL-7 are still needed to integrate the information systems of our highly fragmented health care industry.33 In summary, the development of practical decision support systems for routine clinical use still faces major informatics and health services research barriers.34 It remains to be determined how such more-rigorous and workflow-sensitive decision support systems will affect physician practice behavior.

Effect and Measure Practice Change

In addition to their role as a methodological tool, computers can be used to implement and measure practice change. Although evidence suggests that computerized reminder systems are effective at changing practice behavior, other types of computerized decision support systems have been less promising.35–37 Indeed, physicians are notorious for being resistant to computer-based initiatives,38,39 in part because physicians are reluctant to type, but also because system designs often fail to accommodate the time and resource constraints of daily practice. Information systems can thus both help and hinder attempts to overcome the multitude of organizational and cognitive psychological barriers that impede practice change.40 Findings from human factors41,42 and organizational change43 research are thus applicable to designing user-friendly and error-proof decision support systems.

Computers have been far more successful at measuring than at effecting practice change, especially in situations in which data warehouses and other large healthcare databases are available for outcomes analysis. In addition to the health services research methods required for these analyses, informatics expertise in database engineering, networking, and electronic security is also relevant for constructing and analyzing these databases. Furthermore, both health policy and informatics are relevant to the important task of protecting health information privacy in the electronic age.44

In all 4 problem areas of evidence-based practice—obtaining, reviewing, and applying evidence, as well as effecting practice change—the disciplines of both informatics and health services research are vital; both are often underused.

CLOSING THE GAP: TWO EXAMPLES

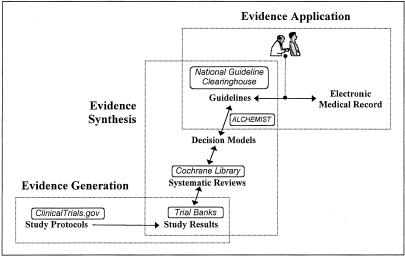

To be of greatest impact, computer-based approaches to these 4 problem areas should together provide integrated end-to-end support for evidence-based practice—from evidence generation to synthesis to application (Fig. 1). We now describe 2 informatics systems that provide complementary solutions to the incorporation of up-to-date randomized trial evidence into patient-specific guidelines.

FIGURE 1.

Life stages of evidence in evidence-based practice. To help translate evidence to practice, information systems are needed for supporting all the life stages of evidence, from evidence generation to synthesis to application. Solid lines denote connections that should, in the future, be implemented as open applications programming interfaces that all use the same standardized clinical vocabulary. Example systems as discussed in the text (boxed) are shown to illustrate their relationships to each other. For example, the ClinicalTrials.gov electronic trial registry expedites evidence generation by supporting patient recruitment. The study results are stored in trial banks and synthesized into systematic reviews. The systematic reviews are themselves stored electronically, in repositories such as the Cochrane Library. Systematic review findings can then be further synthesized into decision models, which alchemist can transform into guidelines. Finally, guidelines in electronic repositories such as the National Guideline Clearinghouse can be matched to electronic medical records to facilitate evidence application.

Trial Bank Project

Millions of dollars are spent every year on RCTs. Regrettably, information about these studies is available to the scientific community only as nonstandardized, often incomplete text articles of several thousand words in length. Because computers cannot yet read text well, computers can only keyword-search these articles and cannot perform any advanced computation on these articles' informational content.

To address these shortcomings, the trial-bank publishing project4 asks authors of RCT reports that have been accepted to either the Annals of Internal Medicine or JAMA to describe their trials directly into a structured and standardized electronic knowledge base called RCT Bank. A web site called Bank-a-Trial will guide authors to report their trials using controlled clinical terms from the Unified Medical Language System45 and in accordance with an extended version of the CONSORT RCT reporting statement.46 By capturing RCTs as both prose and electronic data, trial-bank publishing provides both humans and computers with access to details of the design, execution, and results of RCTs. A wide variety of computer systems, including the alchemist system described below, can then have access to up-to-date RCT information without having to build and maintain their own RCT knowledge bases. Therefore, widespread use of trial-bank publishing would facilitate the development of clinical decision support systems that incorporate current RCT evidence into their deliberations. A similar approach could also be used for publishing clinical research of other study designs into computer-understandable knowledge bases, to assist in those situations in which RCT evidence is not available or feasible.

RCT Bank, our initial trial bank for trial-bank publishing, promotes the rigorous use of RCT evidence by being able to store all the information that a systematic reviewer would need about a trial. The types of required trial information were derived from a hierarchical task analysis of systematic reviewing.47,48 Trial-bank publishing authors can thus be assured that they can fully describe their trials for subsequent detailed review.

If trial-bank publishing does become widespread, it is likely that there will be many trial banks, some affiliated with journals and others with governmental programs (e.g., PubMed Central) or for-profit companies. To facilitate the comprehensive identification of relevant RCTs for systematic reviews, all trial banks should be accessible through uniform data access protocols, so that, for example, a meta-analysis support system would need to issue only 1 command to search across multiple trial banks. Such uniform protocols—also called open applications programming interfaces (APIs)—will be critical for integrating all the systems that will together support evidence-based practice (Fig. 1).
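A minimal Python sketch of such a uniform protocol follows. The `TrialBank` interface, the `InMemoryTrialBank` class, and the `search_all` function are hypothetical names introduced for illustration; they do not describe the interface of any existing trial bank.

```python
from typing import Dict, Iterable, List, Protocol


class TrialBank(Protocol):
    """Hypothetical uniform interface that every trial bank would expose."""

    def search(self, term: str) -> List[str]:
        """Return the IDs of trials indexed under `term`."""
        ...


class InMemoryTrialBank:
    """Toy trial bank backed by a dictionary of trial ID -> indexed terms."""

    def __init__(self, name: str, trials: Dict[str, List[str]]):
        self.name = name
        self._trials = trials

    def search(self, term: str) -> List[str]:
        return [tid for tid, terms in self._trials.items() if term in terms]


def search_all(banks: Iterable[TrialBank], term: str) -> List[str]:
    """Issue one query against every bank and merge the results without duplicates."""
    found = set()
    for bank in banks:
        found.update(bank.search(term))
    return sorted(found)


# Invented contents; trial T1 is deliberately present in both banks.
journal_bank = InMemoryTrialBank(
    "journal", {"T1": ["beta-blocker", "myocardial infarction"], "T2": ["statin"]})
registry_bank = InMemoryTrialBank(
    "registry", {"T3": ["beta-blocker"], "T1": ["beta-blocker"]})

results = search_all([journal_bank, registry_bank], "beta-blocker")
print(results)
```

Because every bank answers the same `search` call, the caller needs no bank-specific code, and a trial registered in more than one bank is reported once.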

The trial-bank project exemplifies the importance and relevance of both informatics and health services research methods to the development of systems to support evidence-based practice. Trial banks, however, are designed primarily to meet the needs of researchers and policy makers and only indirectly to meet the needs of clinicians and patients. To bring RCT evidence to the point of care, other systems that process and present trial-bank data are required.

alchemist Project

Clinical-practice guidelines are one avenue by which RCT and other research evidence can be brought to practitioners in a synthesized, actionable format. In 1999 alone, 691 clinical-practice guidelines were indexed in MEDLINE, a remarkable increase from 65 guidelines in 1990 that reflects the current view that guidelines may be able to reduce unjustified practice variation, improve health outcomes, and contain costs.49–54

Optimally, guideline development involves the input of clinical experts in the target condition as well as experts in meta-analysis and decision and cost-effectiveness analysis.55,56 National organizations often convene varied numbers of such experts to develop “global” guidelines for patients with the “average” clinical characteristics of the target condition. Such traditional guidelines have several problems, however, including their static presentation on paper or the web and their propensity to become outdated as new evidence emerges. A more complex problem is that local use of global guidelines may require some guideline modification if the characteristics of the local patient population or practice differ from those of the “average” case. Because clinical and methodological experts are often locally unavailable or are prohibitively costly, local guideline developers face large barriers to developing, adapting, and maintaining high-quality evidence-based guidelines.

To address these problems, the alchemist web-based system5,57 creates clinical-practice guidelines automatically from decision models. alchemist contains a conceptual framework that represents the knowledge needed for a guideline, the knowledge inherent in a decision model, and a mapping algorithm between these 2 representations. Using this conceptual framework, alchemist analyzes a decision model and determines what it can extract automatically from the decision model and what information it must obtain from the decision analyst. alchemist then creates a web-based editor that uses free-text queries, radio buttons, and check boxes to solicit this additional knowledge from the decision analyst. Finally, alchemist combines the information extracted from the decision model and the decision analyst to create a web-accessible guideline applicable to the global patient population. This alchemist guideline includes a flowchart showing the optimal recommended strategy as well as supportive information such as the guideline's available options, outcomes, evidence, values, benefits, harms, costs, recommendations, methods of validation, sponsors, and sources. Through an open API to the trial bank system described above, alchemist would also include links to the RCT evidence base, links that can help either humans or semiautomated systems to keep guidelines up to date with the evidence.

Once a global guideline is available on the web, local guideline developers and users can adapt it to the local patient population by altering the inputs to the underlying decision model. If the underlying decision model changes as a result of new research findings, for example, these changes would be propagated rapidly and consistently to local sites. Thus, alchemist provides in these web-based guidelines the requisite tools and knowledge for customizing guidelines to specific patients or populations, and for modifying guidelines as the underlying decision model or evidence evolves over time.
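The idea of local adaptation can be sketched in Python as follows. This is a toy model under stated assumptions, not the alchemist implementation: the input names, the `localize` and `recommend` functions, and all numeric values are hypothetical.

```python
# Hypothetical inputs to a global decision model (illustrative values only).
GLOBAL_INPUTS = {
    "baseline_event_risk": 0.10,
    "relative_risk_reduction": 0.25,
    "treatment_harm_risk": 0.01,
    "event_disutility": 1.0,
    "harm_disutility": 0.5,
}


def expected_loss(strategy, inputs):
    """Expected disutility of a strategy under the given model inputs."""
    risk = inputs["baseline_event_risk"]
    harm = 0.0
    if strategy == "treat":
        risk *= 1 - inputs["relative_risk_reduction"]
        harm = inputs["treatment_harm_risk"] * inputs["harm_disutility"]
    return risk * inputs["event_disutility"] + harm


def recommend(inputs):
    """Return the strategy with the lowest expected disutility."""
    return min(("treat", "do not treat"), key=lambda s: expected_loss(s, inputs))


def localize(global_inputs, **local_overrides):
    """Copy the global inputs, replacing only the values a local site supplies."""
    unknown = set(local_overrides) - set(global_inputs)
    if unknown:
        raise KeyError(f"unknown model inputs: {sorted(unknown)}")
    return {**global_inputs, **local_overrides}


global_recommendation = recommend(GLOBAL_INPUTS)
# A local site whose patients have a much lower baseline risk.
local_recommendation = recommend(localize(GLOBAL_INPUTS, baseline_event_risk=0.01))
print(global_recommendation, "|", local_recommendation)
```

Because the local site overrides only selected inputs, a later revision of the remaining global inputs would carry through to the local recommendation the next time it is computed.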

The alchemist system depends on the availability of clinically valid decision models. Although decision models provide a powerful tool for analysis of clinical problems, their development requires substantial expertise, and may be expensive. In addition, some clinical problems, particularly those with many sequential decisions, are difficult to represent with decision models.

DISCUSSION

The trial bank system aims to capture RCTs in a computer-understandable form, to enable a new generation of systems for retrieving, analyzing, and presenting RCT evidence. The alchemist system aims to support a formalized method for creating guidelines, and to facilitate the dissemination and translation of these guidelines into clinical practice. Both informatics and health services research methods were central to the design of these projects.

How might the trial bank and alchemist systems help address the multifaceted problem of improving post-MI β-blocker prescription rates? First, if the β-blocker trials of the 1970s had been available in trial banks, this availability might have sped the recognition that β-blockers reduce post-MI mortality, a finding that was recognized only many years after sufficient evidence had accumulated.7 For the present day, Bradley et al identified several critical factors for improving post-MI β-blocker prescription rates, including the timely provision of benchmarking and performance feedback data and the availability to physicians of “credible empirical evidence to support recommended practice.”58 Certainly, the EMR is a critical technology for delivering timely practice and performance data to physicians and managers. The trial bank and alchemist systems can help deliver credible, patient-specific evidence to physicians if 1) the supporting RCTs provide evidence for relevant subgroups of patients (e.g., diabetics), 2) the RCTs are captured into trial banks, and 3) the guidelines are based on decision models parameterized by relevant patient subgroups. If these 3 conditions were true for post-MI β-blocker prescription, then alchemist could interface with the EMR to dynamically present physicians at the point of care with recommendations specific to patient groups such as asthmatics and the elderly for which physicians are reluctant to prescribe β-blockers.59 Such guideline customization would begin to counter the common complaint that guideline recommendations are not patient specific.40 Physicians who wish to see the “credible empirical evidence” behind a guideline could then access trial banks to dynamically retrieve and view the supporting RCT data by specific patient subgroups. The trial bank and alchemist systems thus offer a general infrastructure for bringing customized evidence to the point of care, but it remains to be seen how these technologies would affect practice behavior.

As has been characterized for the β-blocker prescription problem,58 information technologies are only part of the solution to achieving evidence-based practice. Organizational and other interventions are also important.34 Even so, technologies that are well crafted on the basis of both informatics and health services research promise to be more effective at promoting evidence-based practice than those that are not.

CONCLUSION

The scientific evidence from medical research is not clinician-friendly. Rather than the evidence being usable “off-the-shelf,” clinicians bear the full onus of identifying, retrieving, comparing, appraising, and synthesizing multiple studies. Even when relevant evidence is available, the microstructure and the macrostructure of health care delivery conspire to make it difficult for mere mortal physicians to change their practice behavior. Evidence-based practice is currently the hardest way to practice. It will not become the norm until it is the easiest way to practice.

What are the required next steps for the medical community? Attention and resources must be devoted to training, fostering, and developing a community of scholars who are cross-trained in the basic principles and methods of informatics and health services research. These scholars should include both generalist and specialist clinicians, who will play critical roles in helping to design and develop workable clinical information systems. Funders, professional organizations, and individuals from both fields must encourage and facilitate continued interactions among health services research methodologists, medical informatics researchers, and end users. Research into basic infrastructure technologies as well as end-user systems must be accompanied by rigorous evaluations of these projects, using both qualitative and quantitative methods where appropriate.

Now is a critical and exciting time for the health services research and medical informatics communities to jointly build an infrastructure based on sound health services research methods and informatics principles. By working together and involving the general medical community, we can make the most of a major opportunity for developing integrated computing systems that promise a much-needed avenue for promoting efficient and effective translation of evidence into practice. The ultimate goal of such a concerted and coordinated effort would be to make evidence-based medicine the easiest and thus the most common way to practice.

Acknowledgments

This work was supported in part by contract 297-90-0013 to the UCSF-Stanford Evidence-based Practice Center from the Agency for Healthcare Research and Quality, Rockville, Md (IS, GDS, KMM), and LM06780-01 from the National Library of Medicine (IS).

REFERENCES

- 1.Lau J, Antman EM, Jimenez-Silva J, Kupelnick B, Mosteller F, Chalmers TC. Cumulative meta-analysis of therapeutic trials for myocardial infarction. N Engl J Med. 1992;327:248–54. doi: 10.1056/NEJM199207233270406. [DOI] [PubMed] [Google Scholar]

- 2.Krumholz HM, Radford MJ, Wang Y, Chen J, Heiat A, Marciniak TA. National use and effectiveness of beta-blockers for the treatment of elderly patients after acute myocardial infarction. National Cooperative Cardiovascular Project. JAMA. 1998;280:623–9. doi: 10.1001/jama.280.7.623. [DOI] [PubMed] [Google Scholar]

- 3.Marciniak TA, Ellerbeck EF, Radford MJ, et al. Improving the quality of care for Medicare patients with acute myocardial infarction: results from the Cooperative Cardiovascular Project. JAMA. 1998;279:1351–7. doi: 10.1001/jama.279.17.1351. [see comments] [DOI] [PubMed] [Google Scholar]

- 4.Sim I, Owens DK, Lavori PW, Rennels GD. Electronic trial banks: a complementary method for reporting randomized trials. Med Decis Making. 2000;20:440–50. doi: 10.1177/0272989X0002000408. [DOI] [PubMed] [Google Scholar]

- 5.Sanders GD, Nease RF, Owens DK. Design and pilot evaluation of a system to develop computer-based site-specific practice guidelines from decision models. Med Decis Making. 2000;20:145–59. doi: 10.1177/0272989X0002000201. [DOI] [PubMed] [Google Scholar]

- 6.Carne X, Arnaiz JA. Methodological and political issues in clinical pharmacology research by the year 2000. Eur J Clin Pharmacol. 2000;55:781–5. doi: 10.1007/s002280050697. [DOI] [PubMed] [Google Scholar]

- 7.Antman E, Lau J, Kupelnick B, Mosteller F, Chalmers T. A comparison of results of meta-analyses of randomized control trials and recommendations of clinical experts. Treatments for myocardial infarction. JAMA. 1992;268:240–8. [PubMed] [Google Scholar]

- 8.Dickersin K. Why register clinical trials?–Revisited. Control Clin Trials. 1992;13:170–7. doi: 10.1016/0197-2456(92)90022-r. [DOI] [PubMed] [Google Scholar]

- 9.Lundberg GD, Wennberg JE. A JAMA theme issue on quality of care. A new proposal and a call to action. JAMA. 1998;278:1615–6. [PubMed] [Google Scholar]

- 10.TrialsCentral Clinical Trials Register. Available at: www.TrialsCentral.org. Accessed August 17, 2000.

- 11.National Cancer Institute. CancerNet: Clinical Trials Search. Available at: http://cancernet.nci.nih.gov/trialsrch.shtml. Accessed August 17, 2000.

- 12.AIDS Clinical Trial Information Service. Available at: http://www.actis.org/. Accessed March 29, 2000.

- 13.Chalmers I, Altman D. Systematic Reviews. London: BMJ Publishing Group; 1995. [Google Scholar]

- 14.Dickersin K, Scherer R, Lefebvre C. Identifying relevant studies for systematic reviews. BMJ. 1994;309:1286–91. doi: 10.1136/bmj.309.6964.1286. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Hersh WR, Hickam DH. How well do physicians use electronic information retrieval systems? A framework for investigation and systematic review. JAMA. 1998;280:1347–52. doi: 10.1001/jama.280.15.1347. [see comments] [DOI] [PubMed] [Google Scholar]

- 16.DerSimonian R, Charette LJ, McPeek B, Mosteller F. Reporting on methods in clinical trials. N Engl J Med. 1982;306:1332–7. doi: 10.1056/NEJM198206033062204. [DOI] [PubMed] [Google Scholar]

- 17.Meinert CL, Tonascia S, Higgins K. Content of reports on clinical trials: a critical review. Controlled Clin Trials. 1984;5:328–47. doi: 10.1016/s0197-2456(84)80013-6. [DOI] [PubMed] [Google Scholar]

- 18.Moher D, Dulberg CS, Wells GA. Statistical power, sample size, and their reporting in randomized controlled trials. JAMA. 1994;272:122–4. [PubMed] [Google Scholar]

- 19.The Standards of Reporting Trials Group. A proposal for structured reporting of randomized controlled trials. JAMA. 1994;272:1926–31. [PubMed] [Google Scholar]

- 20.Sim I RCT Presenter. Available at: rctbank.ucsf.edu/. Accessed November 16, 2001.

- 21.National Institutes of Health. ClinicalTrials.gov. Available at: www.clinicaltrials.gov. Accessed November 16, 2001.

- 22.Committee on Enhancing the Internet for Health Applications NRC. Networking Health. Prescriptions for the Internet. Washington, DC: National Academy Press; 2000.

- 23.National Guideline Clearinghouse. Available at: http://www.guideline.gov/. Accessed August 17, 2000.

- 24.Kuntz KM, Tsevat J, Weinstein MC, Goldman L. Expert panel vs decision-analysis recommendations for postdischarge coronary angiography after myocardial infarction. JAMA. 1999;282:2246–51. doi: 10.1001/jama.282.23.2246.

- 25.Oddone EZ, Samsa G, Matchar DB. Global judgments versus decision-model-facilitated judgments: are experts internally consistent? Med Decis Making. 1994;14:19–26. doi: 10.1177/0272989X9401400103. [published erratum appears in Med Decis Making. 1995, Jul-Sep;15(3):230]

- 26.Owens DK, Nease RF Jr. A normative analytic framework for development of practice guidelines for specific clinical populations. Med Decis Making. 1997;17:409–26. doi: 10.1177/0272989X9701700406.

- 27.Hayward RS, Wilson MC, Tunis SR, Bass EB, Guyatt G. Users' guides to the medical literature. VIII. How to use clinical practice guidelines. A. Are the recommendations valid? The Evidence-Based Medicine Working Group. JAMA. 1995;274:570–4. doi: 10.1001/jama.274.7.570.

- 28.Wilson MC, Hayward RS, Tunis SR, Bass EB, Guyatt G. Users' guides to the medical literature. VIII. How to use clinical practice guidelines. B. What are the recommendations and will they help you in caring for your patients? The Evidence-Based Medicine Working Group. JAMA. 1995;274:1630–2. doi: 10.1001/jama.274.20.1630.

- 29.SNOMED International: The Systematized Nomenclature of Medicine. Available at: http://snomed.org/. Accessed August 8, 2000.

- 30.Rehm S, Kraft S. Electronic medical records: the FPM vendor survey. Fam Pract Manag. 2001;8:45–54.

- 31.Chute CG, Cohn SP, Campbell KE, Oliver DE, Campbell JR. The content coverage of clinical classifications. For The Computer-Based Patient Record Institute's Work Group on Codes and Structures. J Am Med Inform Assoc. 1996;3:224–33. doi: 10.1136/jamia.1996.96310636.

- 32.Campbell JR, Carpenter P, Sneiderman C, Cohn S, Chute CG, Warren J. Phase II evaluation of clinical coding schemes: completeness, taxonomy, mapping, definitions, and clarity. CPRI Work Group on Codes and Structures. J Am Med Inform Assoc. 1997;4:238–51. doi: 10.1136/jamia.1997.0040238.

- 33.McDonald CJ. The barriers to electronic medical record systems and how to overcome them. J Am Med Inform Assoc. 1997;4:213–21. doi: 10.1136/jamia.1997.0040213.

- 34.Sim I, Gorman P, Greenes R, et al. Clinical decision support systems for the practice of evidence-based medicine. J Am Med Inform Assoc. 2001;8:527–34. doi: 10.1136/jamia.2001.0080527.

- 35.Hunt DL, Haynes RB, Hanna SE, Smith K. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA. 1998;280:1339–46. doi: 10.1001/jama.280.15.1339.

- 36.Bero LA, Grilli R, Grimshaw JM, Harvey E, Oxman AD, Thomson MA. Closing the gap between research and practice: an overview of systematic reviews of interventions to promote the implementation of research findings. The Cochrane Effective Practice and Organization of Care Review Group. BMJ. 1998;317:465–8. doi: 10.1136/bmj.317.7156.465.

- 37.Balas EA, Austin SM, Mitchell JA, Ewigman BG, Bopp KD, Brown GD. The clinical value of computerized information services. A review of 98 randomized clinical trials. Arch Fam Med. 1996;5:271–8. doi: 10.1001/archfami.5.5.271.

- 38.Massaro TA. Introducing physician order entry at a major academic medical center: I. Impact on organizational culture and behavior. Acad Med. 1993;68:20–5. doi: 10.1097/00001888-199301000-00003.

- 39.Friedman CP. Information technology leadership in academic medical centers: a tale of four cultures. Acad Med. 1999;74:795–9. doi: 10.1097/00001888-199907000-00013.

- 40.Cabana MD, Rand CS, Powe NR, et al. Why don't physicians follow clinical practice guidelines? A framework for improvement. JAMA. 1999;282:1458–65. doi: 10.1001/jama.282.15.1458.

- 41.Baecker RM, Buxton WAS, editors. Readings in Human-Computer Interaction: A Multidisciplinary Approach. Los Altos, Calif: Morgan-Kaufmann; 1987.

- 42.Shneiderman B. Designing the User Interface: Strategies for Effective Human-Computer Interaction. Reading, Mass: Addison-Wesley Publishing Co.; 1997.

- 43.Kaplan B. Addressing organizational issues into the evaluation of medical systems. J Am Med Inform Assoc. 1997;4:94–101. doi: 10.1136/jamia.1997.0040094.

- 44.Wynia MK, Coughlin SS, Alpert S, Cummins DS, Emanuel LL. Shared expectations for protection of identifiable health care information: report of a national consensus process. J Gen Intern Med. 2001;16:100–11. doi: 10.1111/j.1525-1497.2001.00515.x. [Comment in: J Gen Intern Med. 2001 Feb;16:132–4]

- 45.National Library of Medicine. Unified Medical Language System. Available at: http://www.nlm.nih.gov/pubs/factsheets/umls.html. Accessed August 9, 2000.

- 46.Begg C, Cho M, Eastwood S, et al. Improving the quality of reporting of randomized controlled trials. The CONSORT statement. JAMA. 1996;276:637–9. doi: 10.1001/jama.276.8.637.

- 47.Sim I. Trial Banks: An Informatics Foundation for Evidence-based Medicine [dissertation]. Stanford, Calif: Stanford University; 1997.

- 48.Sim I, Rennels G. Task analysis of systematic reviewing: implications for electronic RCT reporting. 6th Annual Cochrane Collaboration Meeting, Baltimore, Md, October 22–26, 1998. Baltimore, Md: Cochrane Collaboration; 1998.

- 49.Woolf SH. Practice guidelines: a new reality in medicine. I. Recent developments. Arch Intern Med. 1990;150:1811–8.

- 50.Chassin MR, Brook RH, Park RE, et al. Variations in the use of medical and surgical services by the Medicare population. N Engl J Med. 1986;314:285–90. doi: 10.1056/NEJM198601303140505.

- 51.Conway AC, Keller RB, Wennberg DE. Partnering with physicians to achieve quality improvement. Jt Comm J Qual Improv. 1995;21:619–26. doi: 10.1016/s1070-3241(16)30190-0.

- 52.Iscoe NA, Goel V, Wu K, Fehringer G, Holowaty EJ, Naylor CD. Variation in breast cancer surgery in Ontario. CMAJ. 1994;150:345–52.

- 53.Keller RB, Soule DN, Wennberg JE, Hanley DF. Dealing with geographic variations in the use of hospitals. The experience of the Maine Medical Assessment Foundation Orthopaedic Study Group. J Bone Joint Surg Am. 1990;72:1286–93.

- 54.Welch WP, Miller ME, Welch HG, Fisher ES, Wennberg JE. Geographic variation in expenditures for physicians' services in the United States. N Engl J Med. 1993;328:621–7. doi: 10.1056/NEJM199303043280906.

- 55.Field MJ, Lohr KN, editors. Guidelines for Clinical Practice: From Development to Use. Washington, DC: Institute of Medicine; 1992.

- 56.U.S. Preventive Services Task Force. Guide to Clinical Preventive Health Services. Baltimore, Md: International Publishing, Inc.; 1996.

- 57.Sanders GD, Nease RF Jr, Owens DK. Publishing web-based guidelines using interactive decision models. J Eval Clin Pract. 2001;7:175–89. doi: 10.1046/j.1365-2753.2001.00271.x.

- 58.Bradley EH, Holmboe ES, Mattera JA, Roumanis SA, Radford MJ, Krumholz HM. A qualitative study of increasing beta-blocker use after myocardial infarction: Why do some hospitals succeed? JAMA. 2001;285:2604–11. doi: 10.1001/jama.285.20.2604.

- 59.Gottlieb SS, McCarter RJ, Vogel RA. Effect of beta-blockade on mortality among high-risk and low-risk patients after myocardial infarction. N Engl J Med. 1998;339:489–97. doi: 10.1056/NEJM199808203390801.