Abstract

OBJECTIVE

To determine if patient satisfaction with ambulatory care visits differs when medical students participate in the visit.

DESIGN

Randomized controlled trial.

SETTING

Academic general internal medicine practice.

PARTICIPANTS

Outpatients randomly assigned to see an attending physician only (N = 66) or an attending physician plus medical student (N = 68).

MEASUREMENTS AND MAIN RESULTS

Patient perceptions of the office visit were determined by telephone survey. Overall office visit satisfaction was higher for the “attending physician only” group (61% vs 48% excellent), although this was not statistically significant (P = .16). There was no difference between the study groups for patient ratings of their physician overall (80% vs 85% excellent; P = .44). In subsidiary analyses, patients who rated their attending physician as “excellent” rated the overall office visit significantly higher in the “attending physician only” group (74% vs 55%; P = .04). Among patients in the “attending physician plus medical student” group, 40% indicated that medical student involvement “probably” or “definitely” did not improve their care, and 30% responded that they “probably” or “definitely” did not want to see a student at subsequent office visits.

CONCLUSIONS

Although our sample size was small, we found no significant decrement in patient ratings of office visit satisfaction from medical student involvement in a global satisfaction survey. However, a significant number of patients expressed discontent with student involvement in the visit when asked directly. Global assessment of patient satisfaction may lack sensitivity for detection of dissatisfaction. Future research in this area should employ more sensitive measures of patient satisfaction.

Keywords: medical education, medical student, patient satisfaction, randomized controlled trial

The past two decades have seen a major shift of patient care from the hospital to the ambulatory setting, and with it, a recognition of the need for change in clinical education.1–3 Many medical schools have responded by expanding ambulatory education.4

However, the increasing appearance of students in the office has provoked concern about patient dissatisfaction, particularly in managed care plans.5–9 Medical education may reduce physician time with patients, compromise patients' perceptions of privacy and confidentiality, and reduce physician productivity.5,10–12 What makes these concerns particularly urgent in the ambulatory setting is the prospect of losing patients. In contrast to their inpatient counterparts, who are a relatively captive audience for medical education, outpatients have greater latitude in choosing providers and services and so may be particularly sensitive to education-related dissatisfaction.

Most previous studies have found that trainee involvement has little or no influence on patient satisfaction, suggesting that such fears are unjustified.13–19 However, these observational studies shared one key methodological limitation: student involvement was not randomly assigned. Thus, attending physicians might have guided trainees toward patients more accepting of the medical education process. For example, in the most widely quoted study,15 patients selected to be seen by students were more likely to be non-white and to have Medicaid as compared to their counterparts who were not selected to see students. The bias produced by such selection poses a major threat to validity.20 In order to circumvent this bias, we conducted a randomized controlled trial to assess patient satisfaction with medical student involvement in ambulatory care visits.

METHODS

Setting and Participants

The study setting was the ambulatory General Internal Medicine Clinic of the Johns Hopkins Outpatient Center. This university practice provides primary and consultative internal medicine care to a diverse population, including low-socioeconomic-status patients from inner-city Baltimore and high-socioeconomic-status patients from the Mid-Atlantic region and beyond. Enrollment of patients into the study took place from February 1998 to April 1999 following approval by the Joint Committee on Clinical Investigation.

Four board-certified academic general internists who were full-time faculty members in General Internal Medicine participated in the study. All were trained in a university-based internal medicine residency, all had served as chief residents, and all devoted at least 60% effort to the practice of ambulatory medicine. There were 2 female and 2 male attending physicians with an age range of 35 to 38 years.

Four third-year students (2 male, 2 female) from the Johns Hopkins School of Medicine MD/PhD program participated in the study. Each student was paired with an attending physician in 1/2 day of continuity clinic per week as a part of his or her training program. Clinic schedules typically consisted of 1 to 2 new patients (1-hour time slot per patient) and 6 to 9 returning patients (20-minute time slot per patient). Each attending physician was allotted 2 examination rooms, allowing both the attending physician and medical student to evaluate different patients simultaneously.

Written notice of the study was provided to each patient when they registered for the office visit that day. This letter described the study, including the possibility of being selected by a “coin toss” to see a student and the possibility of a telephone survey following the visit. Patients were asked to let their physician know prior to entering the examination room if they did not want to participate in the study.

Study Groups

Patients were randomly assigned to one of two study groups: 1) attending physician only or 2) attending physician plus medical student. In the “attending physician only” group, the attending physician completed the patient history and physical exam, assessment, and plan for management without medical student involvement. In the “attending physician plus medical student” group, the attending physician introduced the patient to the medical student, and then left the examination room while the medical student obtained the patient's history. When the attending physician returned to the examination room, the medical student presented the history to the attending physician in the patient's presence (with key components of the history repeated by the attending physician), and then the attending physician and medical student examined the patient simultaneously. Finally, the attending physician formulated and discussed the assessment and plan with the patient in the presence of the medical student. The attending physician performed all medical record documentation in both study groups.

Randomization

Study group assignment was made in pairs using random numbers generated with Stata 5.0 (Stata Corp., College Station, Tex) and sealed in an envelope marked by study identification number. Attending physicians were asked to open the envelope after they had identified an eligible pair of patients from their schedule. For a pair to be eligible, both patients had to meet the following three conditions: 1) agreed to participate in the study; 2) agreed to be seen by a medical student; and 3) concordant in regard to visit status (i.e., either both new or both return patients). This pair-wise assignment produced matching of the groups on attending physician and date and type of visit. In general, randomization proceeded only when all inclusion criteria for a pair of patients were met, i.e., all eligibility criteria were met, both patients were available to be seen at roughly the same time, and there was no overriding pedagogical issue (such as an interesting physical examination finding or an unusual diagnosis). Occasionally, attending physicians proceeded with randomization when only 1 patient of the pair was present, anticipating that the second patient would appear shortly. When the second patient missed this appointment (N = 12), pair-wise matching failed. In addition, because of the strict eligibility criteria and mostly the requirement that both patients be available at the same time for randomization, we enrolled only 134 of the approximately 400 patients who attended clinic sessions to which medical students were assigned.
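The pair-wise scheme described above can be illustrated with a minimal sketch. It is not the authors' procedure (they used random numbers generated in Stata 5.0 and sealed envelopes); the function name `assign_pairs`, the patient identifiers, and the seed are hypothetical and serve only to show how one member of each eligible pair ends up in each study group.

```python
import random

GROUP_A = "attending physician only"
GROUP_AS = "attending physician plus medical student"

def assign_pairs(pairs, seed=None):
    """Randomly assign one patient of each eligible pair to each study group."""
    rng = random.Random(seed)  # seeded generator so the allocation is reproducible
    assignments = {}
    for first, second in pairs:
        # A fair "coin toss" decides which member of the pair sees the student.
        if rng.random() < 0.5:
            first, second = second, first
        assignments[first] = GROUP_A
        assignments[second] = GROUP_AS
    return assignments

# Hypothetical identifiers; each tuple is a pair matched on physician, date, and visit type.
pairs = [("P001", "P002"), ("P003", "P004"), ("P005", "P006")]
assignments = assign_pairs(pairs, seed=1998)
for patient, group in sorted(assignments.items()):
    print(patient, "->", group)
```

Because assignment happens within pairs, the two groups stay balanced on attending physician, date, and visit type as long as both members of a pair attend.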

Measurements

Data were collected from three sources: 1) the medical record, 2) the randomization form, and 3) an interviewer-administered telephone survey. Eighty percent of patients were contacted for the telephone survey within 72 hours of the visit. Consent to administer the survey was obtained at the beginning of the telephone interview.

The survey included items from 6 of 9 office visit domains identified by Laine et al.21 (physician clinical skill, physician interpersonal skill, support staff, office environment, provision of information, patient involvement), as well as specific items from the Medical Outcomes Study.22 The survey was arranged in 2 phases to blind the interviewer to study group assignment. In the first phase (see Appendix A), participants in both study groups were asked general questions about the office visit and their physician but not questions that would reveal study group assignment to the interviewer. Patients in this phase were asked to rate each item on a 5-point ordinal rating scale (1 = excellent to 5 = poor).

The second phase of the survey (see Appendix B) was administered only to the participants who responded “yes” when asked if a medical student was involved in their care, at which point the interviewer was unblinded to group assignment. Patients were asked to rate the medical student overall, the clinical skills of the student, their comfort with the medical student, and whether they would want to have medical students involved in future visits or in visits with a family member.

After completion of the 2 phases of the survey, patients were asked to rate their health status and provide information on their race and level of education. Education was categorized as less than high school, high school graduate, or some college or greater. Additional data were obtained from 2 other sources. First, an electronic patient record was used to retrieve information on age, sex, insurance status, and zip code. Insurance status was categorized into three categories: 1) Medicaid and self-pay; 2) Medicare only; 3) private (including those with Medicare and supplemental private insurance). Zip code was linked to median household income using 1990 U.S. Census Bureau data. Second, the following office visit times were recorded by the attending physician on the randomization form: 1) start of the patient history by the medical student, 2) start of the patient history by the attending physician, and 3) conclusion of the office visit (i.e., when the patient left the examining room).

Data Analysis

Baseline characteristics of the two study groups were compared using χ2 and Fisher's exact tests for categorical variables, the Student's t test for normally distributed continuous variables, and the Wilcoxon rank-sum for continuous variables that were not normally distributed.

Satisfaction variables were dichotomized into a rating of “excellent” versus all others since responses were skewed to the left toward a rating of “excellent.” Satisfaction variables were compared using χ2 analysis. To verify our results and minimize the effect of unmatched participants (N = 12), conditional logistic regression was used to adjust for patient factors, including age, sex, race, education level, self-reported health rating, and insurance status. These models were also used to assess for potential pair-wise interactions among baseline variables or office visit domains and study group assignment.
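The dichotomized comparison can be sketched as follows. The counts are back-calculated from the percentages of “excellent” ratings and the group sizes reported in the Results, and the uncorrected Pearson χ2 statistic is an assumption (the text does not say whether a continuity correction was applied). Under that assumption the P values round to the reported .16 and .44. The helper names are illustrative.

```python
import math

def excellent_counts(pct_excellent, n):
    """Back-calculate (excellent, all other ratings) from a reported percentage."""
    excellent = round(pct_excellent / 100 * n)
    return excellent, n - excellent

def chi_square_2x2(a, b, c, d):
    """Pearson chi-square without continuity correction for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value

# Overall office visit: 60.6% of 66 vs 48.5% of 68 rated "excellent".
visit_chi2, visit_p = chi_square_2x2(*excellent_counts(60.6, 66), *excellent_counts(48.5, 68))

# Physician overall: 80.3% of 66 vs 85.3% of 68 rated "excellent".
doctor_chi2, doctor_p = chi_square_2x2(*excellent_counts(80.3, 66), *excellent_counts(85.3, 68))

print(f"Overall office visit: chi2 = {visit_chi2:.2f}, P = {visit_p:.2f}")
print(f"Physician overall:    chi2 = {doctor_chi2:.2f}, P = {doctor_p:.2f}")
```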

Student-specific items on the survey were analyzed using descriptive statistics. Multiple logistic regression was used to assess the effect of patient, physician, and office visit characteristics and ratings on participants' responses to student-specific survey items. All analyses were performed using Stata 5.0 (Stata Corp., College Station, Tex).

RESULTS

Composition of Cohort

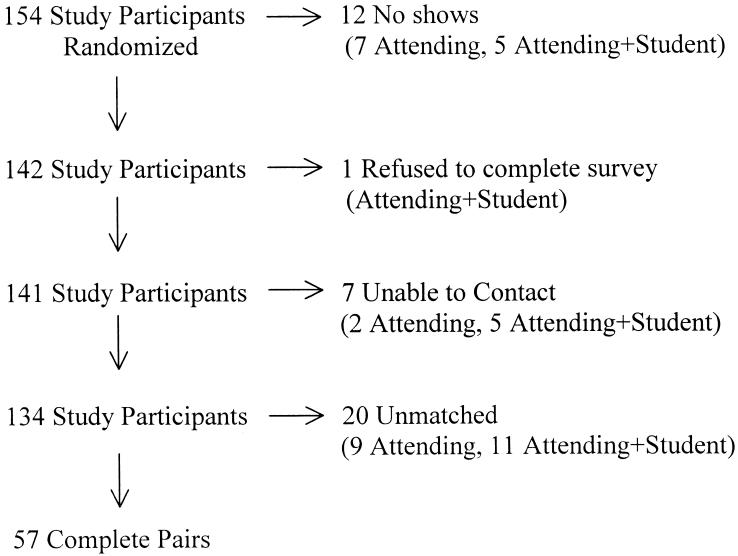

Attending physicians randomized 154 patients (i.e., 77 pairs) over the 16-month study period. Of these, 134 (87%) patients (including 57 complete pairs) completed the survey (Fig. 1). One patient refused to complete a survey, and 7 could not be contacted. Each attending physician enrolled an average of 38 patients (range 16 to 58).

FIGURE 1.

Randomization and participation. This figure displays study participation following randomization. “No shows” indicates those who missed their appointments after being randomized. “Unable to contact” means telephone communication could not be established with the participant after the visit.
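The participant flow can be checked with simple arithmetic. This sketch assumes that the 12 patients who missed their appointment after randomization (see Randomization) are the “no shows” in Figure 1; the variable names are illustrative.

```python
randomized = 154         # 77 pairs randomized by the attending physicians
no_shows = 12            # assumption: the missed appointments reported under Randomization
refused = 1              # declined to complete the survey
unable_to_contact = 7    # could not be reached by telephone

completed = randomized - no_shows - refused - unable_to_contact
completion_rate = completed / randomized

print(f"Completed surveys: {completed} of {randomized} ({completion_rate:.0%})")
```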

Baseline Characteristics

Baseline characteristics were similar between the two study groups (see Table 1). There appeared to be some difference in insurance status, with more Medicaid and self-pay patients in the “attending physician only” group, but this difference was not statistically significant (P = .08).

Table 1.

Characteristics of 134 Adult Medicine Outpatients by Study Group

| Characteristic | Attending Physician Only (N = 66) | Attending Physician + Medical Student (N = 68) | P Value |
|---|---|---|---|
| Mean age, y | 60.4 | 57.5 | .26 |
| Female, % | 48.8 | 51.2 | .89 |
| White, % | 47.0 | 48.5 | .86 |
| Education, % | | | |
| Less than high school | 53.0 | 42.7 | .37 |
| High school graduate | 19.7 | 19.1 | |
| College graduate or greater | 27.3 | 38.2 | |
| Health insurance, % | | | |
| Medicaid or self-pay | 16.7 | 5.9 | .08 |
| Medicare only | 19.7 | 14.7 | |
| Private | 63.6 | 79.4 | |
| Median household income, $ | 33,764 | 31,156 | .26 |
| Self-reported health rating | 2.8 | 3.0 | .45 |

This table displays the baseline characteristics of the “attending physician only” group and the “attending physician plus medical student” group. Categorical comparisons were made using χ2 (or Fisher's exact), and continuous comparisons were made using Student's t test.

Time

The time that attending physicians spent in the examination room with the patient during the office visit was similar in the “attending physician only” and the “attending physician plus medical student” groups (median 22 vs 20 min; P = .26). However, attending physicians spent significantly more time with new patients in the “attending physician plus medical student” group (median 45 vs 35 min; P = .03). As expected, total visit time for all patient visits in the “attending physician plus medical student” group was significantly longer than in the “attending physician only” group (median 54 vs 22 min; P < .001).

Despite the objective differences in attending physician time and total time spent with the patients between the two groups, patients did not perceive major differences. Patients rated the time spent registering for the office visit, being checked into the examination room by the nurse, and waiting in the examination room for the physician similarly in both study groups (all P ≥ .12).

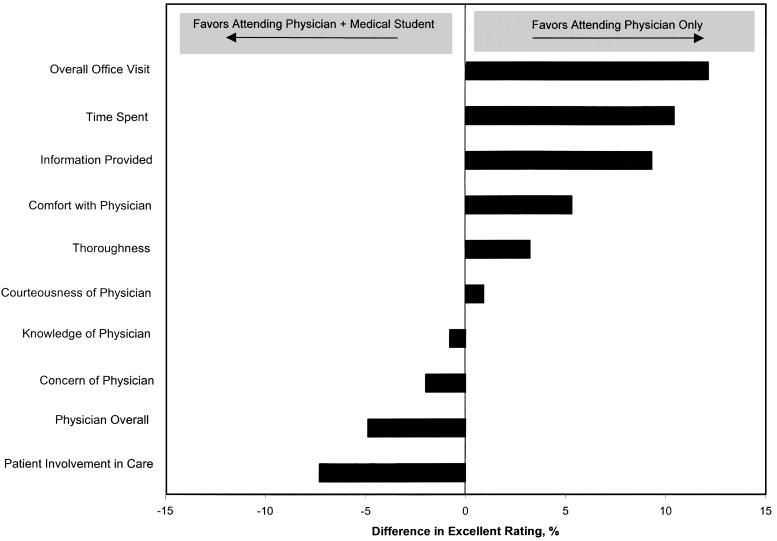

Effect on Satisfaction

Patient responses to the satisfaction survey are summarized in Table 2. Their perceptions were generally favorable, with >95% of patients rating their visit as “good,” “very good,” or “excellent” across 10 domains. Absolute differences in percent “excellent” ratings of office visit and physician characteristics are displayed in Figure 2. Ratings were more favorable for the “attending physician only” group for overall office visit (absolute difference = 12.1%), patient perception of time spent with them during the visit (absolute difference = 10.5%), and information provided during the visit (absolute difference = 9.4%). Patients favored the student group in the perception of patient self-involvement in the care provided (absolute difference = 7.6%). However, none of these differences reached statistical significance. Likewise, multiple logistic regression analysis adjusting for age, sex, race, education level, insurance status, median household income, and self-reported health rating demonstrated little or no adverse effect of students on patient perceptions, and again, none of the results were statistically significant.

Table 2.

Patient Perceptions of Office Visit and Physician Characteristics by Study Group

| Characteristic | Group | Excellent | Very Good | Good | Fair | Poor |
|---|---|---|---|---|---|---|
| Overall office visit | A | 60.6 | 25.8 | 12.1 | 1.5 | 0.0 |
| | A+S | 48.5 | 35.3 | 13.2 | 3.0 | 0.0 |
| Time spent | A | 54.6 | 24.2 | 18.2 | 3.0 | 0.0 |
| | A+S | 44.1 | 29.4 | 25.0 | 1.5 | 0.0 |
| Information provided | A | 66.7 | 22.7 | 7.6 | 3.0 | 0.0 |
| | A+S | 57.3 | 35.3 | 5.9 | 1.5 | 0.0 |
| Comfort with physician | A | 78.8 | 13.6 | 6.1 | 1.5 | 0.0 |
| | A+S | 73.5 | 23.5 | 3.0 | 0.0 | 0.0 |
| Thoroughness | A | 56.1 | 22.7 | 18.2 | 3.0 | 0.0 |
| | A+S | 52.9 | 30.9 | 13.2 | 3.0 | 0.0 |
| Courteousness of physician | A | 81.8 | 15.2 | 3.0 | 0.0 | 0.0 |
| | A+S | 80.9 | 17.6 | 1.5 | 0.0 | 0.0 |
| Knowledge of physician | A | 72.8 | 21.2 | 3.0 | 3.0 | 0.0 |
| | A+S | 73.5 | 17.6 | 7.4 | 1.5 | 0.0 |
| Concern of physician | A | 77.3 | 10.6 | 9.1 | 3.0 | 0.0 |
| | A+S | 79.4 | 13.2 | 7.4 | 0.0 | 0.0 |
| Physician overall | A | 80.3 | 15.2 | 4.5 | 0.0 | 0.0 |
| | A+S | 85.3 | 11.8 | 2.9 | 0.0 | 0.0 |
| Patient involvement in care | A | 53.0 | 28.8 | 15.2 | 3.0 | 0.0 |
| | A+S | 60.3 | 19.1 | 19.1 | 0.0 | 1.5 |

All numbers represent percentages. A = “attending only” group (N = 66) and A+S = “attending + medical student” group (N = 68). Differences in ratings between the study groups for each characteristic did not reach statistical significance.

FIGURE 2.

Absolute difference in percent “excellent” patient satisfaction ratings of office visit and physician characteristics. Comparison of patient perceptions of office visit and attending physician characteristics by study group assignment. Results reflect the difference in the percentage of patients who rated the domain as “excellent” (1 on a 5-point scale) among those assigned to the “attending physician only” group minus the percentage who rated the domain as “excellent” among those assigned to the “attending physician plus medical student” group. Thus, differences >0 favor the “attending physician only” group and differences <0 favor the “attending physician plus medical student” group. None of the results reached statistical significance.
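The quantity plotted in Figure 2 can be recomputed from the “excellent” percentages in Table 2, as in the short sketch below (the dictionary and function names are illustrative). Computed this way, the difference for patient involvement in care is 7.3 percentage points in favor of the student group, slightly different from the 7.6% quoted in the text; the source of that discrepancy is not stated.

```python
# Percent "excellent" per domain from Table 2: (attending only, attending + student).
PERCENT_EXCELLENT = {
    "Overall office visit": (60.6, 48.5),
    "Time spent": (54.6, 44.1),
    "Information provided": (66.7, 57.3),
    "Comfort with physician": (78.8, 73.5),
    "Thoroughness": (56.1, 52.9),
    "Courteousness of physician": (81.8, 80.9),
    "Knowledge of physician": (72.8, 73.5),
    "Concern of physician": (77.3, 79.4),
    "Physician overall": (80.3, 85.3),
    "Patient involvement in care": (53.0, 60.3),
}

def absolute_differences(table):
    """Attending-only minus attending-plus-student, in percentage points."""
    return {domain: round(a - a_s, 1) for domain, (a, a_s) in table.items()}

differences = absolute_differences(PERCENT_EXCELLENT)
for domain, diff in sorted(differences.items(), key=lambda item: item[1], reverse=True):
    # Positive values favor the attending-only group, negative values the student group.
    favored = "attending only" if diff > 0 else "attending + student"
    print(f"{domain:<30} {diff:+5.1f}  (favors {favored})")
```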

To minimize the possibility of confounding due to chance imbalance in the study groups, we conducted a conditional logistic regression analysis confined to the 57 complete pairs. After adjustment for age, sex, race, education level, insurance status, median household income, and self-reported health rating, patients in the “attending physician plus medical student” group appeared somewhat less likely to rate as “excellent” the overall office visit (relative odds 0.40; 95% confidence interval 0.12 to 1.4).

Only 1 pair-wise interaction reached statistical significance. Patients who rated their attending physician as “excellent” were significantly more likely to rate the overall office visit as “excellent” in the “attending physician only” group (74% vs 55%; P = .04) compared to the “attending physician plus medical student” group.

Patients' Perceptions of the Medical Students

Those assigned to the “attending physician plus medical student” group answered 6 student-specific questions and all reported being seen by medical students on previous office visits (Table 3). Twenty-six percent rated the student as “excellent” overall. When asked if they would want to be seen in a similar arrangement on the following visit with medical student involvement, 30% responded “probably not” or “definitely not.” Almost 40% responded that the medical student “probably” or “definitely” did not improve the care they received. Only three (4%) participants responded that they were annoyed about repeating information to the attending physician that had already been discussed with the medical student. Finally, 17% in the “attending physician plus medical student” group responded that they would “probably” or “definitely” not recommend a similar arrangement to a friend or family member.

Table 3.

Patient Ratings of Student-specific Aspects of the Office Visit

| Aspect of Visit | Excellent | Very Good | Good | Fair | Poor |
|---|---|---|---|---|---|
| Student overall | 25.8 | 43.9 | 25.8 | 4.5 | 0.0 |
| Comfort with student | 30.3 | 39.4 | 22.7 | 6.1 | 1.5 |

| Aspect of Visit | Definitely Yes | Probably Yes | Don't Know | Probably Not | Definitely Not |
|---|---|---|---|---|---|
| Annoyed about repeating information | 1.5 | 3.0 | 0.0 | 10.6 | 84.9 |
| Student improved care | 31.8 | 22.7 | 6.1 | 27.3 | 12.1 |
| Similar arrangement next visit | 25.8 | 37.9 | 6.0 | 21.2 | 9.1 |
| Recommend to friend/family* | 37.9 | 34.8 | 9.1 | 15.2 | 1.5 |

*One person did not answer.

All numbers represent percentages (N = 68).
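The summary percentages quoted in the text (about 30%, 40%, 17%, and 4%) come from combining the “probably” and “definitely” columns of Table 3. A minimal sketch of that arithmetic, with illustrative names, is:

```python
# Table 3 percentages: (definitely yes, probably yes, don't know, probably not, definitely not).
TABLE_3 = {
    "Annoyed about repeating information": (1.5, 3.0, 0.0, 10.6, 84.9),
    "Student improved care": (31.8, 22.7, 6.1, 27.3, 12.1),
    "Similar arrangement next visit": (25.8, 37.9, 6.0, 21.2, 9.1),
    "Recommend to friend/family": (37.9, 34.8, 9.1, 15.2, 1.5),
}

def percent_yes(row):
    """Combined 'definitely yes' and 'probably yes' responses."""
    return round(row[0] + row[1], 1)

def percent_no(row):
    """Combined 'probably not' and 'definitely not' responses."""
    return round(row[3] + row[4], 1)

annoyed = percent_yes(TABLE_3["Annoyed about repeating information"])
not_improved = percent_no(TABLE_3["Student improved care"])
not_next_visit = percent_no(TABLE_3["Similar arrangement next visit"])
not_recommend = percent_no(TABLE_3["Recommend to friend/family"])

print(f"Annoyed about repeating information: {annoyed}%")
print(f"Student did not improve care:        {not_improved}%")
print(f"Would not want student next visit:   {not_next_visit}%")
print(f"Would not recommend arrangement:     {not_recommend}%")
```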

In a multiple logistic regression model, younger age was independently associated with a greater reluctance to see a medical student on the next visit (relative odds 0.82 per 5-year decrease in age; 95% confidence interval 0.67 to 0.98). In contrast, sex, race, education level, insurance status, median household income, self-reported health rating, prior visits with student involvement, and the specific medical student did not predict responses to this question.

COMMENTS

We found no significant differences in global patient satisfaction ratings of the office visit between patients seen by a medical student and attending physician compared to those seen by an attending physician alone. Despite similar office visit ratings between the two groups, a substantial minority of patients (30% to 40%) expressed discontent with student involvement in the office visit when asked unblinded student-specific questions. The main strength of our study was the randomized trial design, which eliminated the selection bias inherent in observational studies.

Nonetheless, there were several limitations to our study. First, the small sample size limited statistical power: post hoc calculations indicate 80% power to detect a 30% between-group difference in the percentage of patients who rated the overall office visit as “excellent.” Second, conduct of the study in a single practice within one university limits generalizability. Third, despite careful design and implementation of the pair-wise randomization scheme, some patients were unmatched. However, this number was relatively small and was equally distributed in both groups; moreover, their exclusion in subsidiary analyses left our results essentially unchanged. Finally, we used only 4 medical students, all from the MD/PhD program, each paired with an attending physician in a long-term relationship in a continuity clinic. The interaction between the attending and student, as well as the uniqueness of students compared to traditional medical students, needs to be considered when interpreting our study results.

Few studies have examined the effect of medical students on patient satisfaction, and to our knowledge, no study has measured this effect in a randomized trial. Many studies lacked a comparison group13,14,17 and therefore could not estimate the effect of student involvement. Of those with a comparison group,16,18 study design was highly variable, with significant limitations, including the use of an historical comparison group,16 lack of a standard office visit format,16,18 and a lack of information on factors that might influence satisfaction ratings, such as demographic characteristics of the patients.16,18

Two recent well-designed observational studies15,19 found no difference in overall satisfaction ratings of office visits in which medical students were involved in care compared to visits not involving students. However, as with all observational studies, there was potential bias from patient selection. In one of these studies,15 more patients in the student group were non-white and had Medicaid. Previous work has demonstrated the influence of demographic and socioeconomic factors on patient satisfaction with medical care, including ratings of medical student care.14,23–25 Both studies properly attempted to reduce this bias by statistical adjustment. However, it is likely that patients selected to see students differed from those not selected in other ways (such as personality and level and type of illness), and differences in such unmeasured factors are beyond the reach of statistical adjustment and must be balanced by design, i.e., randomization.

The format of student involvement in Frank's study15 was not described, and unlike our study, average office visit times were relatively short (10 min). Simon used a format of student involvement similar to ours and found a much higher rate of “dislike” with the overall office visit than we did (10% “dislike” vs 2% “fair” in our study).19 We controlled for the visit type (new versus return patient) using our paired randomization scheme, an issue not described in either study.15,19 Finally, patient satisfaction was assessed globally in both studies without asking student-specific questions.15,19 We asked global satisfaction questions, but also included student-specific questions for the “attending physician plus medical student” group.

We found that 30% of patients in the “attending physician plus medical student” group would not choose a similar arrangement with medical student involvement at subsequent office visits. These findings are surprising considering the low rate of overall office visit dissatisfaction (2%; defined as a rating of “fair” or “poor”) and absence of attending physician dissatisfaction in our study. However, patient unwillingness to see medical trainees has been shown previously.14 In our study, age was the only predictive factor of willingness to be seen at the next office visit by a medical student, a finding consistent with previous work.14,24 No other patient factors were significantly associated, including patient report of previous experience with medical student care.

We found that patients rated the office visit similarly regardless of student involvement, but expressed some discontent when asked student-specific questions. This suggests that global office visit satisfaction questionnaires may not be sensitive enough to detect patient dissatisfaction with the office visit. The finding of no difference in office visit ratings probably means that patients are willing to tolerate the medical education process, but certainly the student-specific questions do raise some concern about this issue. Larger scale studies in this area are needed to assess patient satisfaction with medical trainee involvement in ambulatory care, but development of a more sensitive means of assessing patient satisfaction is needed prior to undertaking such studies.

Acknowledgments

We would like to thank Dr. Carol Ann Huff and Dr. Joseph Cofrancesco for their participation in this study.

Dr. Gress was supported by a training grant in Behavioral Research in Heart and Vascular Diseases from the National Institutes of Health (T32HL07180).

APPENDIX A

Office Visit and Physician-specific Questions Asked of Both Study Groups (Phase I)

How do you rate…? (5-point ordinal scale where 1 = excellent, 2 = very good, 3 = good, 4 = fair, 5 = poor)

- …your office visit overall?
- …how clear and understandable the information was about your medical problems?
- …amount of time spent with you during the office visit?
- …the thoroughness of your office visit?
- …how much you were involved in decisions about your health care?
- …the convenience of the appointment time you received?
- …the amount of time it took you to register downstairs?
- …the amount of time from when you first registered to the time the nurse saw you?
- …the amount of time you spent in the examining room waiting for the doctor?
- …how comfortable you were with your doctor?
- …how courteous your doctor was to you during the office visit?
- …your doctor's knowledge about your medical problems?
- …doctor's concern about your feelings and needs?
- …your doctor overall?

APPENDIX B

Questions Asked of Attending Physician + Student Group only (Phase II)

5-point ordinal scale where 1 = excellent, 2 = very good, 3 = good, 4 = fair, 5 = poor for the following questions:

- How do you rate the doctor in training overall?
- How do you rate how comfortable you were with the doctor in training?

5-point ordinal rating scale where 1 = definitely yes, 2 = probably yes, 3 = don't know, 4 = probably no, 5 = definitely no for the following questions:

- Did you feel annoyed about repeating information to Dr. (Attending Physician Name) that you had already discussed with the doctor in training?
- Do you think that having the doctor in training involved improved the care you received?
- In the future, would you want a similar arrangement where you would be seen by the doctor in training as well as Dr. (Attending Physician Name)?
- Would you recommend that a friend or family member be seen in a clinic where doctors in training are involved in their care?

REFERENCES

- 1. Schroeder SA, Showstack JA, Gerbert B. Residency training in internal medicine: time for a change. Ann Intern Med. 1986;104:554–61. doi: 10.7326/0003-4819-104-4-554.

- 2. Kassirer JP. Redesigning graduate medical education–location and content. N Engl J Med. 1996;335:507–9. doi: 10.1056/NEJM199608153350709.

- 3. Schroeder SA. Expanding the site of clinical education: moving beyond the hospital walls. J Gen Intern Med. 1988;(3suppl):5–14. doi: 10.1007/BF02600247.

- 4. Hunt CE, Kallenberg GA, Whitcomb ME. Medical students' education in the ambulatory care setting: background paper 1 of the Medical School Objectives Project. Acad Med. 1999;74:289–96. doi: 10.1097/00001888-199903000-00022.

- 5. Kirz HL, Larsen C. Costs and benefits of medical student training to a health maintenance organization. JAMA. 1986;256:734–9.

- 6. Moore GT. Health maintenance organizations and medical education: breaking the barriers. Acad Med. 1990;65:427–32. doi: 10.1097/00001888-199007000-00001.

- 7. Corrigan JM, Thompson LM. Involvement of health maintenance organizations in graduate medical education. Acad Med. 1991;66:656–61. doi: 10.1097/00001888-199111000-00002.

- 8. Rivo ML, Mays HL, Katzoff J, Kindig DA. Managed health care. Implications for the physician workforce and medical education. Council on Graduate Medical Education. JAMA. 1995;274:712–5. doi: 10.1001/jama.274.9.712.

- 9. Moore G, Nash D. Adding managed care to the physician education equation. Qual Lett Healthc Lead. 1999;11:10–2.

- 10. Gamble JG, Lee R. Investigating whether education of residents in a group practice increases the length of the outpatient visit. Acad Med. 1991;66:492–3. doi: 10.1097/00001888-199108000-00019.

- 11. Jones TF. The cost of outpatient training of residents in a community health center. Fam Med. 1997;29:347–52.

- 12. Kuttner R. Managed care and medical education. N Engl J Med. 1999;341:1092–6. doi: 10.1056/NEJM199909303411421.

- 13. York NL, DaRosa DA, Markwell SJ, Niehaus AH, Folse R. Patients' attitudes toward the involvement of medical students in their care. Am J Surg. 1995;169:421–3. doi: 10.1016/s0002-9610(99)80189-5.

- 14. Simons RJ, Imboden E, Martel JK. Patient attitudes toward medical student participation in a general internal medicine clinic. J Gen Intern Med. 1995;10:251–4. doi: 10.1007/BF02599880.

- 15. Frank SH, Stange KC, Langa D, Workings M. Direct observation of community-based ambulatory encounters involving medical students. JAMA. 1997;278:712–6.

- 16. O'Malley PG, Omori DM, Landry FJ, Jackson J, Kroenke K. A prospective study to assess the effect of ambulatory teaching on patient satisfaction. Acad Med. 1997;72:1015–7. doi: 10.1097/00001888-199711000-00023.

- 17. Klamen DL, Williams RG. The effect of medical education on students' patient-satisfaction ratings. Acad Med. 1997;72:57–61.

- 18. Devera-Sales A, Paden C, Vinson DC. What do family medicine patients think about medical students' participation in their health care. Acad Med. 1999;74:550–2. doi: 10.1097/00001888-199905000-00024.

- 19. Simon SR, Peters AS, Christiansen CL, Fletcher RH. Effect of medical student teaching on patient satisfaction in a managed care setting. J Gen Intern Med. 2000;15:457–61. doi: 10.1046/j.1525-1497.2000.06409.x.

- 20. Gordis L. Assessing the Efficacy of Preventive and Therapeutic Measures: Randomized Trials. Epidemiology. Philadelphia: W.B. Saunders Company; 1996. pp. 89–97.

- 21. Laine C, Davidoff F, Lewis CE, et al. Important elements of outpatient care: a comparison of patients' and physicians' opinions. Ann Intern Med. 1996;125:640–5. doi: 10.7326/0003-4819-125-8-199610150-00003.

- 22. Rubin HR, Gandek B, Rogers WH, Kosinski M, McHorney CA, Ware JE Jr. Patients' ratings of outpatient visits in different practice settings. Results from the Medical Outcomes Study. JAMA. 1993;270:835–40.

- 23. Richardson PH, Curzen P, Fonagy P. Patients' attitudes to student doctors. Med Educ. 1986;20:314–7. doi: 10.1111/j.1365-2923.1986.tb01371.x.

- 24. King D, Benbow SJ, Elizabeth J, Lye M. Attitudes of elderly patients to medical students. Med Educ. 1992;26:360–3. doi: 10.1111/j.1365-2923.1992.tb00186.x.

- 25. Adams DS, Adams LJ, Anderson RJ. The effect of patients' race on their attitudes toward medical students' participation in ambulatory care visits. Acad Med. 1999;74:1323–6. doi: 10.1097/00001888-199912000-00016.