Abstract

OBJECTIVE

The effect of care by medical residents on hospital length of stay (LOS), indirect costs, and reimbursement was last examined across a range of illnesses in 1981; the issue has never been examined at a community hospital. We studied resource utilization and reimbursement at a community hospital in relation to the involvement of medical residents.

DESIGN

This nonrandomized observational study compared patients discharged from a general medicine teaching unit with those discharged from nonteaching general medical/surgical units.

SETTING

A 620-bed community teaching hospital with a general medicine teaching unit (resident care) and several general medicine nonteaching units (no resident care).

PATIENTS

All medical discharges between July 1998 and February 1999, excluding those from designated subspecialty and critical care units.

MEASUREMENTS AND MAIN RESULTS

Endpoints included mean LOS in excess of expected LOS, mean cost in excess of expected cost, mean payments, and mean profitability (payments minus total costs). Observed values were obtained from the hospital's database and expected values from a proprietary risk–cost adjustment program. No significant difference in LOS between 917 teaching-unit patients and 697 nonteaching patients was demonstrated. Costs averaged $3,178 (95% confidence interval [CI] ± $489) less than expected among teaching-unit patients and $4,153 (95% CI ± $422) less than expected among nonteaching-unit patients. Payments were significantly higher per patient on the teaching unit than on the nonteaching units, and as a result, mean profitability was higher: $848 (95% CI ± $307) per hospitalization for teaching-unit patients and $451 (95% CI ± $327) for patients on the nonteaching units. Teaching-unit patients of attendings who rarely admitted to the teaching unit (nonteaching attendings) generated an average profit of $1,299 (95% CI ± $613), while nonteaching patients of nonteaching attendings generated an average profit of $208 (95% CI ± $437).

CONCLUSIONS

Resident care at our community teaching hospital was associated with significantly higher costs but also with higher payments and greater profitability.

Keywords: health care finance, residents, length of stay, indirect costs

Competitive pressures and a decline in federal education subsidies have encouraged many teaching hospitals to evaluate their training programs more closely from a financial perspective. Such analysis has proved difficult to accomplish in a manner convincing to both educators and financial planners.1–4 Because postgraduate medical education is conducted in close association with patient care, allocation of costs between the two is necessarily complex and subjective. Without resolving this methodologic problem, most previous studies have found resident care more costly.5–11 Likewise, current government-funded subsidies of teaching hospitals reflect the generally held belief that postgraduate medical education is a net financial burden to its sponsors.12

No recent study has examined resource use and reimbursements associated with resident care across a range of medical diagnoses, and none has done so in a community teaching hospital. At our community hospital, we compared payments and costs associated with care of general medicine patients on a teaching unit (staffed by residents and their supervising attending physicians) and on nonteaching units (where no residents were present). Our method considered differences between these two patient groups in the distribution of case complexity, diagnoses, and characteristics of the attending physicians.

METHODS

Site

This study was performed using data from Saint Barnabas Medical Center (SBMC), a 620-bed community teaching hospital in Livingston, NJ. The hospital is affiliated with Mount Sinai School of Medicine, NY. SBMC had 142 postgraduate trainees in nine residency programs at the time of the study. There were 40 residents in SBMC's internal medicine residency training program, which was fully accredited by the Accreditation Council for Graduate Medical Education (ACGME) in August 1999, reflecting performance during the period of this study.

Patients were admitted to the hospital's medical service by 487 attending staff members. There were 40 beds on a geographic general medicine teaching unit, 40 beds on a geographic specialty renal teaching unit, and up to 270 other beds including intensive care, cardiac care, and designated pulmonary, oncology, and nonteaching medical/surgical units. Patients were admitted to the geographic general medicine teaching unit based on the preference of the attending physician and patient, and on bed availability. Teaching patients were admitted exclusively to teaching units; however, when the hospital was full, nonteaching-unit patients were also placed on the teaching units.

Teaching-unit patients were under the care of residents supervised by attending physicians in accordance with requirements of the Residency Review Committee for Internal Medicine of the ACGME. Nonteaching-unit patients were cared for by attending physicians without residents, but coverage assistance was available at night and on weekends from licensed house physicians. No resident served as a house physician, and no physician assistant or nurse practitioner provided inpatient care. Nurse-to-patient ratios were the same on the geographic general medicine unit as on nonteaching medical/surgical units. Social workers and case managers were available on all nonintensive care hospital units.

A review of 100 randomly selected charts of medical patients discharged from the general medicine teaching unit during the study period indicated that supervised residents cared for 68% of patients. Review of 100 discharges from nonteaching medical/surgical units indicated that residents cared for none of these patients.

Patient Selection

Using a comprehensive patient database (Trendstar, HBOC, Inc., Atlanta, Ga), we collected data on discharged patients assigned a medical diagnosis-related group (DRG). We included all patients discharged between July 1998 and January 1999 (inclusive) from all wards of the hospital other than intensive care and specialty units. Specialty units excluded from study consisted of geographically designated renal, pulmonary, and oncology beds. Timing of the study was determined at its onset by implementation of a cost database and at its termination by changes in the geographic teaching service because of hospital construction. Both winter and summer months were represented. We excluded any patient whose attending physician at discharge was not a member of the department of medicine or family practice. Patients with a length of stay (LOS) over 30 days were classified as outliers; all endpoints were calculated with these patients included.

Data Collection

Abstracted data for each discharge included demographic information, the nursing unit from which the patient was discharged, DRG, disposition, source of admission, attending physician at discharge with departmental affiliation, LOS, principal insurer, hospital days for which payment was denied by the insurer, and payments to the hospital. Costs to the hospital were calculated for each patient using a cost allocation program and were based on the unit cost of each item, service, or procedure used for care of that patient. Salary costs were allocated to each item, service, or procedure based on the time expended on it by personnel of the relevant cost center. The hospital's capital and overhead costs (including those for its teaching programs) were distributed in accordance with Medicare step-down methodology. This methodology distributes among clinical services the overhead and other costs that are not easily attributable to a particular patient care activity.13
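The general idea of step-down allocation can be illustrated with a minimal Python sketch. It is not the hospital's cost allocation program: the department names, dollar amounts, and allocation statistics below are hypothetical, and the actual Medicare cost report prescribes its own ordering of cost centers and allocation bases. The sketch assumes only the core rule that overhead cost centers are closed one at a time and that a closed center receives no further allocations.

```python
def step_down(direct_costs, overhead_order, stats):
    """Allocate overhead cost centers to the remaining centers, one at a time.

    direct_costs:   dict of cost center -> direct cost
    overhead_order: overhead cost centers in the order they are closed
    stats:          dict of overhead center -> {receiving center: statistic}
                    (for example, square feet or staff hours; hypothetical here)
    """
    costs = dict(direct_costs)
    closed = set()
    for center in overhead_order:
        closed.add(center)
        # A center that is already closed cannot receive cost again.
        weights = {r: w for r, w in stats[center].items() if r not in closed}
        total_weight = sum(weights.values())
        pool = costs.pop(center)  # direct cost plus anything stepped down to it
        for receiver, weight in weights.items():
            costs[receiver] += pool * weight / total_weight
    return costs


# Hypothetical example: two overhead centers and two clinical services.
direct = {"admin": 100.0, "housekeeping": 50.0, "medicine": 300.0, "surgery": 200.0}
order = ["admin", "housekeeping"]
statistics_by_center = {
    "admin": {"housekeeping": 10, "medicine": 50, "surgery": 40},
    "housekeeping": {"admin": 5, "medicine": 2, "surgery": 1},
}
allocated = step_down(direct, order, statistics_by_center)
```

In this made-up example, housekeeping first absorbs part of the administrative cost and then passes its enlarged pool to the two clinical services only; total cost is conserved.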

At the time of this study, the cost allocation program in the pharmacy cost center was not fully functional. Therefore, we assessed costs based on pharmacy-specific charge-to-cost ratios determined by the cost allocation program immediately subsequent to the study period. We combined cost centers of clinically related services into seven cost areas: pharmacy, radiology, cardiopulmonary, laboratory, critical care room and board, ward room and board, and other costs.

It seemed likely that patients admitted to the teaching unit and nonteaching units might differ in clinical characteristics and DRG distribution. To compare resource utilization between these two groups, we calculated the difference between observed and predicted outcomes (“excess” cost or LOS) for each patient in each group. Predicted cost and LOS for each patient were obtained using a proprietary risk adjustment program (see below). Mean and median excess values were calculated for all teaching-unit and nonteaching-unit patients. In addition, we compared patients on the two types of unit with respect to observed costs, observed payments (at least a year after delivery of services), and the difference between them (profitability).
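The per-patient endpoints described above reduce to simple differences. The following Python sketch, using made-up values and hypothetical field names, shows how excess cost, excess LOS, and profitability could be computed for each discharge and then summarized for a group; predicted values are taken as given, since the risk adjustment program that produces them is proprietary.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class Discharge:
    observed_cost: float
    predicted_cost: float   # from the risk adjustment program (taken as given)
    observed_los: float
    predicted_los: float
    payment: float

    @property
    def excess_cost(self) -> float:
        # Negative values mean the patient cost less than predicted.
        return self.observed_cost - self.predicted_cost

    @property
    def excess_los(self) -> float:
        return self.observed_los - self.predicted_los

    @property
    def profit(self) -> float:
        # Profitability as defined in the text: payments minus total costs.
        return self.payment - self.observed_cost


def summarize(discharges):
    """Return mean and median excess values and mean profit for one group."""
    return {
        "mean_excess_cost": mean(d.excess_cost for d in discharges),
        "median_excess_cost": median(d.excess_cost for d in discharges),
        "mean_excess_los": mean(d.excess_los for d in discharges),
        "median_excess_los": median(d.excess_los for d in discharges),
        "mean_profit": mean(d.profit for d in discharges),
    }


# Made-up discharges for illustration only.
unit = [
    Discharge(6000.0, 9000.0, 4.0, 4.5, 6500.0),
    Discharge(7000.0, 9500.0, 5.0, 5.6, 8000.0),
    Discharge(5000.0, 8000.0, 3.0, 3.5, 5400.0),
]
summary = summarize(unit)
```

The same summary would be computed separately for teaching-unit and nonteaching-unit patients before the groups are compared.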

To provide a measure of clinical severity, we stratified patients by their risk of in-hospital death using All Payer Refined DRGs (APR-DRGs, 3M Health Information Systems, Wallingford, Conn). This methodology first assigns patients to an adjacent DRG (ADRG) formed by grouping individual DRGs previously split by complications and co-morbidities. Patients within each ADRG are then assigned to one of four levels of severity based on data derived from billing information.14

Risk Adjustment

Risk adjustment modeling was used in this study to determine expected LOS and costs for each patient. Calculations were performed by New Solutions, Inc. (New Brunswick, NJ), using a refinement of SysteMetrics disease-stage modeling.15–18 Models are based on multiple or logistic (for dichotomous data) regression of variables obtained from the standardized Uniform Bill data set.19 Data for model development are drawn from state and national resources, including the national Medicare Provider Analysis and Review File (MEDPAR) database.20 Models are reviewed on an annual basis and are specific for risk of particular outcomes, including cost and length of stay.

Briefly, the modeling method first assigns each patient to a DRG and then to an ADRG using standard grouper software. Patients within each ADRG are then further characterized by the presence of variables found (on univariate analysis) to be associated with the outcome under study. Clinical diagnoses are excluded from the model (even if associated with outcome) if, in the opinion of an expert physician panel, they represent complications of hospitalization rather than preadmission predictors of outcome. Finally, remaining variables (selected from all diagnoses, demographics, and the number of involved body systems) are assigned individual likelihood weights from multiple regression. Using this method, each patient is assigned a probability of the outcome under study, and a group probability can be readily calculated. Variables used for modeling are regularly reviewed for face validity by clinical experts. Models with variable weights and measures of statistical validity including R² calculations are open to inspection.

In calculating predicted cost and LOS, the risk adjustment program weighted, among other variables, the factors by which DRGs are determined. These factors include principal diagnosis, outcome, comorbidities, and age. We therefore included all patients, irrespective of DRG, in our analysis of group endpoints.

Stratification by Attending Attributes

Attending physicians who frequently admit patients to a teaching unit may differ in their utilization of resources from those who rarely admit to a teaching unit. Such differences could bias the effect of resident care. We therefore stratified patients according to the admitting habits of the attending physician. Patients of attending physicians who admitted more than half of their patients to the teaching unit (teaching attendings) were compared for all endpoints to patients with attending physicians who admitted fewer than half their patients to the teaching unit (nonteaching attendings).
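The stratification rule can be sketched in Python as follows. The attending identifiers and discharge records are hypothetical. Because the rule as stated covers only "more than half" and "fewer than half," an attending with exactly half of his or her patients on the teaching unit is labeled "unclassified" here; that handling is an assumption of the sketch, not something specified in the study.

```python
from collections import Counter, defaultdict


def classify_attendings(discharges):
    """discharges: iterable of (attending_id, unit), unit in {'teaching', 'nonteaching'}."""
    totals = defaultdict(int)
    on_teaching = defaultdict(int)
    for attending, unit in discharges:
        totals[attending] += 1
        if unit == "teaching":
            on_teaching[attending] += 1

    labels = {}
    for attending, n in totals.items():
        share = on_teaching[attending] / n
        if share > 0.5:
            labels[attending] = "teaching"
        elif share < 0.5:
            labels[attending] = "nonteaching"
        else:
            labels[attending] = "unclassified"  # exactly half: assumption of this sketch
    return labels


def stratify(discharges, labels):
    """Count discharges in each (unit, attending type) stratum."""
    return Counter((unit, labels[attending]) for attending, unit in discharges)


# Hypothetical discharge records.
sample = [
    ("A", "teaching"), ("A", "teaching"), ("A", "nonteaching"),
    ("B", "nonteaching"), ("B", "nonteaching"), ("B", "teaching"),
    ("C", "teaching"), ("C", "nonteaching"),
]
labels = classify_attendings(sample)
strata = stratify(sample, labels)
```

Crossing unit type with attending type in this way yields the four patient groups compared for each endpoint.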

Statistical Analysis

Excess costs, excess LOS, payments, and profitability were reviewed for approximation to a normal distribution. We applied nonparametric testing (Mann-Whitney U test) to data sets when the mean and median differed by more than 15%, when less than 3% (or more than 7%) of the data points fell beyond 1.96 standard deviations from the mean, or when outliers were not evenly distributed to the two tails (30%–70% in each tail). We reported z scores for nonparametric tests. For all other data sets, we reported 95% parametric confidence intervals.
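The screening rule for choosing between parametric and nonparametric reporting can be expressed as a short Python function. This is a sketch under stated assumptions: the 15% difference between mean and median is measured relative to the absolute value of the mean, and the sample standard deviation is used. Neither detail is specified in the text, and the data below are made up.

```python
from statistics import mean, median, stdev


def needs_nonparametric(values):
    """Return True if any of the three screening criteria is met."""
    m, med, sd = mean(values), median(values), stdev(values)

    # Criterion 1: mean and median differ by more than 15%
    # (relative to |mean|; an assumption of this sketch).
    if m == med:
        rel_diff = 0.0
    elif m == 0:
        rel_diff = float("inf")
    else:
        rel_diff = abs(m - med) / abs(m)
    if rel_diff > 0.15:
        return True

    # Criterion 2: fewer than 3% or more than 7% of points lie
    # beyond 1.96 standard deviations from the mean.
    upper = sum(1 for v in values if v > m + 1.96 * sd)
    lower = sum(1 for v in values if v < m - 1.96 * sd)
    tail_fraction = (upper + lower) / len(values)
    if tail_fraction < 0.03 or tail_fraction > 0.07:
        return True

    # Criterion 3: outliers are unevenly split between the tails
    # (each tail should hold 30% to 70% of them).
    upper_share = upper / (upper + lower)
    return not (0.30 <= upper_share <= 0.70)


# Made-up data sets for illustration.
skewed = [1.0] * 9 + [100.0]                          # mean far from median
balanced = [7.0] + [9.0] * 19 + [11.0] * 19 + [13.0]  # symmetric, 5% in the tails
one_sided = [10.0] * 38 + [20.0, 20.0]                # both outliers in the upper tail
```

Data sets flagged by this function would be compared with the Mann-Whitney U test; the others would be reported with parametric confidence intervals.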

RESULTS

There were 2,550 discharges meeting Health Care Financing Administration criteria for medical DRGs. Of these, 1,615 represented patients who met our inclusion criteria. They were cared for by 126 teaching attending physicians and 76 nonteaching attending physicians in the departments of medicine or family practice. There were 917 teaching-unit patients and 698 from other units. Twenty-one of these patients were outliers: 18 were teaching-unit patients and three were from the other units. One patient who was eligible was excluded because of incomplete data.

Patient Characteristics

Table 1 summarizes characteristics of patients on the teaching and nonteaching units stratified by attending physician teaching preference. Patients were similar in mean age, gender, ethnic makeup, referral source, disposition on discharge, and insurance coverage (the majority were insured by Medicare). As expected, patient groups differed in the distribution of DRGs, confirming the need for risk adjustment in comparing costs and LOS. In addition, patients on the teaching unit were more severely ill. Among teaching-unit patients, 8% were in APR-DRG category four (extreme risk of death), compared with 3% among nonteaching-unit patients (P < .05 by χ²).

Table 1.

Patient Characteristics*

| Characteristic | Teaching Unit, Teaching Attending (n = 760) | Teaching Unit, Nonteaching Attending (n = 158) | Nonteaching Unit, Teaching Attending (n = 371) | Nonteaching Unit, Nonteaching Attending (n = 325) |
|---|---|---|---|---|
| Male, % | 41 | 39 | 36 | 35 |
| Mean age, y | 68.3 | 69.1 | 67.8 | 64.5 |
| Ethnicity, % | | | | |
| White | 68 | 66 | 66 | 71 |
| African American | 18 | 20 | 18 | 18 |
| Unknown | 12 | 13 | 16 | 10 |
| Other | 0 | 0 | 2 | 1 |
| Admission type, % | | | | |
| Emergency room | 86 | 73 | 84 | 77 |
| Routine scheduled | 9 | 17 | 8 | 17 |
| Routine unscheduled | 3 | 6 | 5 | 4 |
| Other | 0 | 4 | 3 | 0 |
| Disposition, % | | | | |
| Died | 4 | 3 | 4 | 3 |
| Home self-care | 71 | 73 | 74 | 76 |
| Intermediate care | 1 | 0 | 2 | 2 |
| Other | 10 | 4 | 9 | 8 |
| Short term hospice | 1 | 1 | 1 | 1 |
| Skilled nursing facility | 13 | 18 | 9 | 7 |
| Most common DRGs (DRG number), % | | | | |
| Simple pneumonia (89) | 7 | 4 | 3 | – |
| Septicemia (416) | 6 | 7 | 4 | – |
| Heart failure (127) | 5 | – | 4 | – |
| Cerebrovascular (14) | 4 | – | – | – |
| GI hemorrhage (174) | 4 | 8 | – | – |
| Esophageal/gastric misc. (182) | – | 5 | – | 5 |
| Esophageal/gastric misc. (183) | – | 4 | 4 | 6 |
| Seizure/headache (25) | – | – | – | 5 |
| Kidney/urinary infection (320) | – | – | – | 4 |
| Medical back problem (243) | – | – | 4 | 3 |
| Severity (APR-DRG), % | | | | |
| Mild | 35 | 35 | 43 | 54 |
| Moderate | 30 | 28 | 33 | 24 |
| Major | 28 | 29 | 20 | 18 |
| Extreme | 8 | 6 | 3 | 2 |
| Most common payers, % | | | | |
| Medicare | 62 | 68 | 58 | 55 |
| Blue Cross | 5 | 7 | 6 | 9 |
| Medicare HMO | – | – | 5 | – |
| Other managed care | 5 | 5 | – | 6 |

* Stratified by attending preference for teaching. Some entries do not add up to 100 because of rounding errors. DRG indicates diagnosis-related group; GI, gastrointestinal; APR, all payer refined.

Excess Length of Stay

The distribution of excess LOS was skewed to the right. Both teaching and nonteaching groups had stays slightly shorter than predicted. Median excess LOS was −0.6 days among teaching patients and −0.5 days among nonteaching patients. The Mann-Whitney Z statistic was 0.75, indicating that the difference was not significant. Across both types of unit, excess LOS was not affected by attending preference for teaching. Median excess LOS was −0.6 days for all teaching attendings and −0.5 days for all nonteaching attendings.

Excess Costs

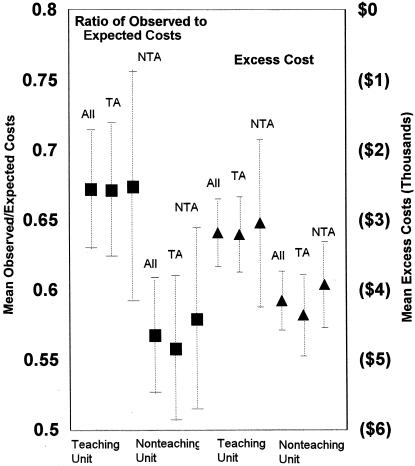

Although observed costs were skewed to the right, excess costs were normally distributed. Figure 1 shows mean excess costs and ratios of observed to expected cost per hospital discharge. All patient groups generated costs that were much lower than predicted by the risk adjustment program. On average, care of teaching-unit patients cost $3,178 (95% confidence interval [CI] ± $489) less than predicted, while care of nonteaching-unit patients cost $4,153 (95% CI ± $422) less than predicted. Teaching-unit patients cost 67% (95% CI ± 4%) as much as predicted, and nonteaching-unit patients cost 57% (95% CI ± 4%) as much as predicted, indicating a significant difference in excess cost between teaching and nonteaching units. Like LOS, costs were not greatly affected by attending preference for teaching. Across both types of unit, patients of teaching attendings cost an average of $3,504 (95% CI ± $408) less than expected, or 63% (95% CI ± 4%) of predicted. The average cost of patients cared for by nonteaching attendings was $3,632 (95% CI ± $569) less than expected, or 61% (95% CI ± 5%) of predicted.

Figure 1.

Absolute and relative excess cost for patients on the teaching unit and nonteaching units, and for teaching and nonteaching attending physicians. Means and confidence intervals are shown. TA indicates teaching attendings; NTA, nonteaching attendings.

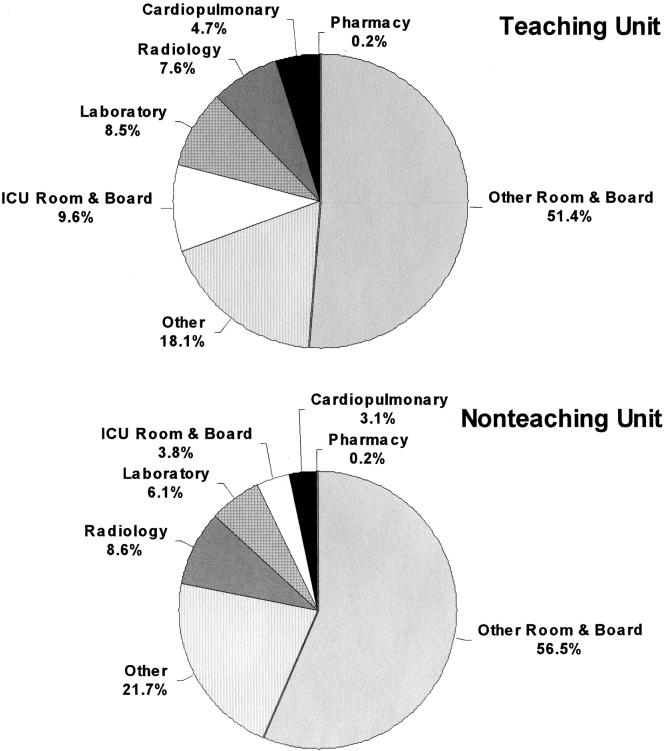

Despite variability in DRG distribution between the groups, no marked difference in percentage distribution of observed costs for most cost areas was demonstrated (Fig. 2). However, intensive care room and board accounted for 5.8% more of the total costs for teaching-unit patients than for patients discharged from nonteaching units.

Figure 2.

Distribution among cost areas of observed costs for teaching-unit and nonteaching-unit patients. ICU indicates intensive care unit.

Payments and Profitability

Distribution of observed payments was skewed to the right. The median payment among teaching patients was $6,285; for nonteaching patients, the median was $5,436. The P value for the Mann-Whitney Z statistic was less than .001, indicating a significant difference.

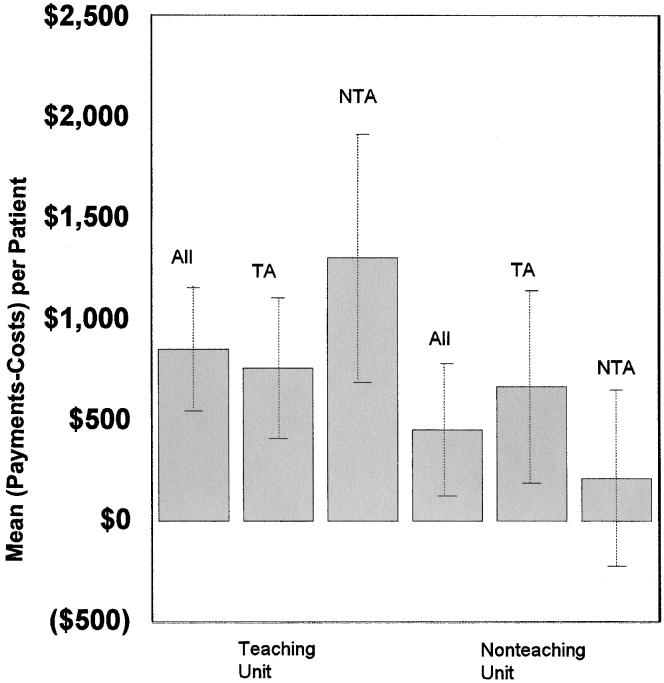

Although observed costs for teaching-unit patients were higher than for other patients, higher payments resulted in greater profitability, which was normally distributed (Fig. 3). On average, there was a profit of $848 (95% CI ± $307) per hospitalization for teaching-unit patients and $451 (95% CI ± $327) for nonteaching-unit patients. This difference can be attributed primarily to the patients of nonteaching attendings. Among these attendings, patients on the teaching unit generated an average profit of $1,299 (95% CI ± $613) per discharge, while patients on the nonteaching unit generated an average profit of $208 (95% CI ± $437). Recalculation of endpoints without the 21 outliers did not substantially alter these results.

Figure 3.

Profitability for patients on the teaching and nonteaching units, and for teaching and nonteaching attending physicians. Means and 95% confidence intervals are shown.

Table 2 summarizes the difference in payments between teaching-unit and nonteaching-unit patients. Nine of the 12 payers contributing more than 1% to reimbursements of patients on both types of unit reimbursed more for teaching-unit discharges. Median payment by Medicare, the most common payer, was $583 more for teaching unit patients than for patients from other units.

Table 2.

Median Payments by Payer and Payer Characteristics

| Payer | Payment Basis | Payment Unit | Teaching Unit: Patients, % | Teaching Unit: Median Payment, $ | Nonteaching Unit: Patients, % | Nonteaching Unit: Median Payment, $ |
|---|---|---|---|---|---|---|
| Medicare | Prospective | Per case | 63 | $6,641 | 57 | $6,057 |
| Other managed care | Prospective | Per day | 5 | $4,653 | 4 | $5,017 |
| Medicare HMO | Prospective | Per day | 3 | $5,145 | 5 | $3,316 |
| Commercial other | Fee-for-service | | 3 | $5,201 | 4 | $4,104 |
| US Healthcare | Prospective | Per day | 3 | $2,758 | 3 | $1,997 |
| Oxford | Prospective | Per day | 2 | $2,925 | 3 | $2,786 |
| Blue Cross Managed Care | Prospective | Per day | 2 | $3,608 | 2 | $2,930 |
| Self pay | Fee-for-service | | 3 | $0 | 2 | $0 |
| Prucare | Prospective | Per day | 2 | $4,396 | 3 | $1,954 |
| Medicaid | Prospective | Per case | 3 | $3,879 | 2 | $2,883 |
| FOHP | Prospective | Per day | 2 | $1,908 | 2 | $4,750 |
| Blue Cross | Prospective | Per day | 5 | $5,636 | 8 | $3,627 |

FOHP indicates First Option Health Plan.

One possible explanation for observed differences in profitability between teaching and nonteaching units is that more detailed chart documentation was typical of residents and resulted in fewer days for which reimbursement was refused or lowered by the insurer. We found that teaching-unit patients were reimbursed at a lower rate (or not at all) for 2.4% of hospital days; nonteaching-unit patients were poorly reimbursed for 3.1% of hospital days (P = .03 by χ²). The difference in poorly reimbursed days between teaching and nonteaching units was greater when only the patients of nonteaching attendings were considered (1.4% vs 2.7%; P = .02).

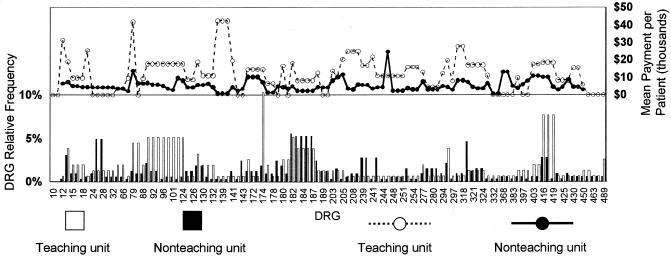

Although costs and LOS were compared between patient groups only after adjustment for expected values, no such adjustment was made for payments. Therefore, another possible reason for observed differences in profitability is that the teaching unit contained a higher proportion of more profitable DRGs, particularly among patients of nonteaching attendings. Figure 4 shows the distribution of DRGs between the two most discrepant groups with respect to profitability (teaching-unit and nonteaching-unit patients of nonteaching attendings). Although there are differences in the distribution of DRGs between the two types of unit, most DRGs received a higher reimbursement for patients on the teaching unit.

Figure 4.

Distribution of diagnosis-related groups (DRGs) for patients of nonteaching physicians on the teaching and nonteaching units. Relative frequency and median payment for each DRG are shown. The bars in the lower part of the figure indicate relative frequency of DRGs in the two patient groups. The lines in the upper part of the figure indicate median payment for each DRG in the two patient groups.

DISCUSSION

At a community teaching hospital with a large proportion of Medicare-insured patients, we found that resident care had no association with LOS but was associated with increased costs. Payments and therefore profitability were substantially higher, particularly among attending physicians who do not usually admit to a teaching service.

One contributing factor to the difference in profitability was probably a decrease in denied days for teaching-unit patients, presumably due to fuller resident documentation. Differences between teaching and nonteaching units were more marked among nonteaching attendings, both for profitability and denied days. However, the observed difference in mean per-discharge profitability of $1,091 would have required a difference in denied days far greater than 1.3% for this to be the only explanation. The finding that teaching-unit patients were at greater risk of in-hospital death suggests another explanation: elements of cost may have been more highly reimbursed in these sicker teaching patients. The utilization of intensive care unit services among teaching cases was greater and may support this hypothesis. Finally, there may have been differences in case selection that influenced reimbursement in ways we did not measure. At present, our finding is largely unexplained.

We undertook the present study for several reasons. First, most of the literature predates health care's new emphasis on cost containment. Second, data are lacking on the association between resident care and utilization of services across a range of patients in a community teaching hospital. Third, no study has addressed the possible confounding of resident resource use by differences in practice patterns between the physicians who usually supervise them and those who usually do not. Fourth, payments and profitability represent important but seldom-measured determinants of net teaching costs.

Several multicenter studies comparing teaching and nonteaching hospitals have found that costs of care and LOS are higher in institutions where residents train. These increases have been related to the level of training.5,6 The type of residency program may also be important: one study in New Jersey found that hospitals with family practice residencies had lower inpatient costs compared with nonteaching hospitals or with hospitals sponsoring other types of training programs.7

These interinstitutional comparisons have attributed increased use of resources at teaching hospitals in part to the inexperience of residents. However, one study specifically refuted this explanation by finding similar resource utilization among teaching patients admitted early and late in the academic year.21 Another proposed reason for higher costs in teaching hospitals is that these institutions may make tests and procedures available, primarily for teaching purposes, and the availability encourages overspending on both teaching and nonteaching patients. Higher costs at teaching hospitals may result not from teaching per se but from the necessary overhead of large tertiary care institutions in which teaching tends to occur. These hospitals also may care for patients who are sicker in ways not measured by DRGs.22

Comparing teaching and nonteaching services within an individual hospital narrows the focus but can minimize problems of confounding resident costs with those institutional overheads for which teaching may be a marker but not a cause. Some studies taking this approach have found that residents may actually increase efficiency. For example, expansion of a residency program in Pennsylvania in 1989 increased hospital revenue more than costs and may have decreased LOS.23 A “minor teaching hospital” in Minnesota studied LOS and high-cost interventions in patients with myocardial infarction on its teaching and nonteaching services. Even after stratifying by severity, these authors found that the mean LOS was 0.6 days shorter, the mean charges were $2,060 lower, and cardiac catheterizations were 15% less prevalent on the teaching service.22

Conversely, a comprehensive review of surgical and medical patients at Stanford's university hospital in 1981 and a focused study of four surgical DRGs at the same institution found that costs within DRGs were higher on the teaching service. Costs for surgical patients were lower when residents received closer supervision.8–10 Similarly, comparison of a teaching and a faculty-run hospital service at a major teaching institution in 1991 found higher costs for teaching patients.11

Our results were similar to those of the Stanford study with regard to costs, although differences between teaching and nonteaching patients were considerably less in our study. Our setting, period of observation, and methods were more similar to those of the Minnesota study, although we did not find lower costs. Unlike those investigators, who developed and applied their own regression model of risk adjustment within the study population, we chose a proprietary risk adjustment methodology. A possible advantage of our approach is that the risk analysis is derived from and validated on a large and separate database. Our study appears to be unusual in examining profitability, which we found to be improved by resident care despite an increased use of resources. We also found that the attending physician's teaching preference influenced differences in profitability between a teaching and a nonteaching service.

Because of current controversy over issues of residency finance, it is particularly important to identify the limitations of this study. Most importantly, we did not directly explore the net financial effect of our internal medicine training program on the hospital. All patients at SBMC assumed some of the overhead costs of teaching (based on their utilization of resources to which these costs were allocated). Similarly, all Medicare and Medicaid patients, teaching and nonteaching, were reimbursed in part by federal teaching subsidies that augmented payments to the hospital. Whether the costs or the subsidies associated with teaching were greater at our community hospital is a question that this study did not address. We found that medical residents at our institution produced more in extra payments than they expended in extra resource use. We cannot deduce whether this incremental revenue enhanced, offset, or had little effect on the difference between fixed teaching costs and current or future government subsidies.

In addition, an important subset of medical patients was not considered in this study. Patients discharged from the medical intensive care unit and the pulmonary, renal, oncology, and cardiac care wards were all excluded.

Another limitation of this study is that teaching patients were identified by the unit from which they were discharged. We found no obvious differences between this unit and other medical-surgical units in the hospital; however, it is possible that attributes of the teaching unit other than the presence of residents contributed to observed differences. Conversely, the fact that residents cared for only 68% of patients on the teaching unit probably produced an underestimate of their effect.

Finally, in this complex determination by retrospective analysis, it is possible that unmeasured sources of bias produced the observed differences in costs and payments.

We conclude that teaching hospitals should not assume an adverse effect of residency training on profitability, even if residents are shown to increase resource use. With the advent of comprehensive patient-based computer databases and the availability of risk adjustment software, even relatively small teaching hospitals may be able to review the economic consequences of their own teaching programs.

References

- 1. Cameron JM. The indirect costs of graduate medical education. N Engl J Med. 1985;312:1233–8. doi: 10.1056/NEJM198505093121906.

- 2. Anderson GF, Lave JR. Financing graduate medical education using multiple regression to set payment rates. Inquiry. 1986;23:191–9.

- 3. Goldfarb MG, Coffey RM. Case-mix differences between teaching and nonteaching hospitals. Inquiry. 1987;24:68–84.

- 4. Foderaro L. Many hospitals in New York quit plan for fewer doctors. New York Times. 1999 April 1.

- 5. The Relationship of Direct and Indirect Patient Care Costs to Graduate Medical Education in New Jersey Hospitals: Network, Inc. Study. Trenton, NJ: New Jersey Department of Health; 1989.

- 6. Frick AP, Martin SG, Schwartz M. Case-mix and cost differences between teaching and nonteaching hospitals. Med Care. 1985;23:283–95. doi: 10.1097/00005650-198504000-00001.

- 7. Tallia AF, Swee DE, Winter RO, Lichtig LK, Knabe FM, Knauf RA. Family practice graduate medical education and hospitals' patient care costs in New Jersey. Acad Med. 1994;69:747–53. doi: 10.1097/00001888-199409000-00021.

- 8. Garber AM, Fuchs VR, Silverman JF. Case mix, costs and outcomes: differences between faculty and community services in a university hospital. N Engl J Med. 1984;310:1231–7. doi: 10.1056/NEJM198405103101906.

- 9. Jones KR. The influence of the attending physician on indirect graduate medical education costs. J Med Educ. 1984;59:789–98. doi: 10.1097/00001888-198410000-00003.

- 10. Jones KR. Predicting hospital charge and stay variation: the role of patient teaching status, controlling for diagnosis-related group, demographic characteristics, and severity of illness. Med Care. 1985;23:220–35.

- 11. Simmer TL, Nerenz R, Rutt WM, Newcomb CS, Benfer DW. A randomized controlled trial of an attending staff service in general internal medicine. Med Care. 1991;29:JS31–JS40.

- 12. Lave JR. The Medicare Adjustment to the Indirect Costs of Medical Education: Historical Development and Current Status. Washington DC: Association of American Medical Colleges; 1985.

- 13. Health Care Finance Administration. Medicare Provider Reimbursement Manual Part II: Provider Cost Reporting Forms and Instructions. Sudbury, Ohio: Health Care Finance Administration; 1996. pp. 36–75.

- 14. Edwards ND, Honemann D, Burley D, Navarro M. Refinement of the Medicare diagnosis related groups to incorporate a measure of severity. Health Care Financ Rev. 1994;16:45–64.

- 15. Gonella JS, Hornbrook MC, Louis DZ. A case-mix measurement. JAMA. 1984;251:637–44.

- 16. Conklin JE, Lieberman JV, Barnes CA, Louis DZ. Disease staging: implications for hospital reimbursement and management. Health Care Financ Rev. 1984;(Suppl):13–22.

- 17. Yuen EJ, Gonella JS, Louis DZ, Epstein KR, Howell SL, Markson LE. Severity adjusted differences in hospital utilization by gender. Am J Med Qual. 1995;10:76–80. doi: 10.1177/0885713X9501000203.

- 18. Naessens JM, Leibson CL, Krishan I, Ballard DJ. Contribution of a measure of disease complexity (COMPLEX) to prediction of outcome and charges among hospitalized patients. Mayo Clinic Proc. 1992;67:1140–9. doi: 10.1016/s0025-6196(12)61143-4.

- 19. Federal Register. Rules and Regulations. 1991;58:43213–5.

- 20. Bureau of Data Management and Strategy. Data Users Review Guide 1995. Baltimore, Md: Healthcare Financing Administration, US Dept of Health and Human Services; 1995.

- 21. Buchwald D, Komaroff AL, Cook F, Epstein AM. Indirect costs for medical education. Is there a July phenomenon? Arch Intern Med. 1989;149:765–8.

- 22. Udvarhelyi IS, Rosborough T, Lofgren RP, Lurie N, Epstein AM. Teaching status and resource use for patients with acute myocardial infarction: a new look at the indirect costs of graduate medical education. Am J Public Health. 1990;80:1095–100. doi: 10.2105/ajph.80.9.1095.

- 23. Deamond HS, Fitzgerald LL, Day R. An analysis of the cost and revenue of an expanded medical residency. J Gen Intern Med. 1993;8:614–8. doi: 10.1007/BF02599717.