Abstract

We sought to determine whether an Internet-based continuing medical education (CME) program could improve physician confidence, knowledge, and clinical skills in managing pigmented skin lesions. The CME program provided an interactive, customized learning experience and incorporated well-established guidelines for recognizing malignant melanoma. During a 6-week evaluation period, 354 physicians completed the online program as well as a pretest and an identical posttest. Use of the CME program was associated with significant improvements in physician confidence, in correct answers to a 10-question knowledge test (52% vs 85% correct), and in correct answers to a 15-question clinical skills test (81% vs 90% correct). The overall improvement in clinical skills reflected a marked increase in specificity, accompanied by a small decrease in sensitivity, for evaluating pigmented lesions. User satisfaction was extremely high. This popular and easily distributed online CME program increased physicians’ confidence and knowledge of skin cancer. Remaining challenges include improving the program to increase physician sensitivity for evaluating pigmented lesions while preserving the enhanced specificity.

Keywords: medical education, distance education, melanoma, guidelines, skin cancer

Skin cancer is the most common human malignancy; however, there is no screening test other than direct skin examination by a trained professional. Numerous studies have documented that practicing physicians do not regularly perform skin cancer screening1 and are not skilled at recognizing or appropriately managing early melanomas.2,3 This may be due to a lack of training. Physicians in primary care training programs are not comfortable managing skin cancer and are not adept at distinguishing lesions that require biopsy from those that can be safely left alone or followed.4–7

Several educators have developed classroom programs to teach skin cancer management skills to physicians,4,8 but these programs are unlikely to reach the more than 200,000 primary care physicians in the United States. To address this need, we created an interactive, computer-based program on the management of melanoma, which can be distributed via the Internet.

Our initial evaluation of this program with a group of house officers and faculty showed that it could improve physicians' ability to apply a melanoma guideline and increase their confidence in managing pigmented skin lesions.9 After refining this program, we made it available to physicians via the Internet. The present study sought to determine whether this approach to continuing medical education (CME) would increase physicians' confidence in managing pigmented skin lesions, increase skin cancer knowledge, and improve physicians' decision-making skills.

METHODS

Educational Program Development

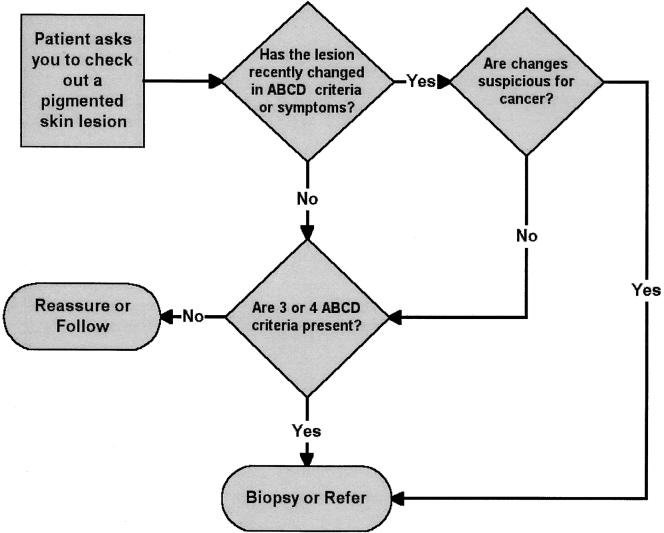

We developed the online skin cancer education program in two stages. The initial online program, The Early Recognition and Management of Melanoma, emphasized a management algorithm based on a combination of the ABCD criteria for recognizing melanoma proposed by Friedman in 1985,10,11 and the 7-point Glasgow checklist proposed by MacKie12 in 1990. The algorithm was modified during the project and the final version (Fig. 1) was incorporated into the online program described here. The algorithm and program were not altered during the evaluation period.

FIGURE 1.

The ABCD/change algorithm used to teach a decision rule for managing pigmented lesions. The ABCD criteria associated with malignancy are “Asymmetry,” “Border irregularity,” “Color irregularity,” and “Diameter (>6 mm).” See references 10 and 11 for further discussion. The teaching program provided customized feedback as users practiced applying the rule in clinical situations.

We used an interactive, problem-based teaching approach to introduce the algorithm to the user and then asked the user to apply the algorithm to nine clinical situations. Specifically, the user was presented with a clinical vignette that included distant and close-up views of a skin lesion and a brief clinical history. The user then decided whether the lesion should be biopsied or could be safely followed. As the user applied the algorithm (recommending biopsy or observation), he or she was given customized feedback. The initial development and technical characteristics of this CME program have been described elsewhere.9

During the second stage, we refined and tested this CME program, adding information on benign skin lesions and skin cancer risk factors.13 We incorporated many of the suggestions for screening and prevention discussed by Robinson14 as well as editorial and technical enhancements suggested via the Internet by physician users. The final program contained four educational modules dealing with early recognition of melanoma (based on the algorithm shown in Figure 1), management of skin cancer risk factors, skin cancer prevention strategies, and recognition of benign pigmented lesions. Each module presented the user with a number of typical clinical situations and self-assessment questions with interactive, customized responses. The complete program contained 44,000 words, 128 images, 90 references, and 19 article abstracts. It was sponsored for 6 hours of American Medical Association category 1 CME credit. Users did not have to complete the program at a single sitting; they were able to exit the program and later reenter at the same place.

Educational Program Distribution via the Internet

We deployed and tested the revised program, Melanoma Education for Primary Care, with interested Internet users via a commercial CME Web site and via a link from a commercial Internet medical portal (Physicians' Online). Although the program was freely available to any Internet user, registration was required for access. During the 6-week evaluation period in early 1999, CME credit was provided at no charge to persons completing the course.

Study Population

The program was promoted to regular users of the two Internet sites during the study period via e-mail announcements and notices on the sites' home pages. There was no other advertising. The study population included users who stated that they were physicians, who completed the program Melanoma Education for Primary Care during the study period, and who requested category 1 CME credit.

Program Evaluation

To receive CME credit, users had to complete a test before and after viewing the program. Both tests were identical and contained seven questions about confidence and attitudes (opinions), 10 questions about general skin cancer knowledge, and 15 clinical vignettes. For the vignettes, users were shown a picture of a lesion with a brief clinical history and asked to decide whether the lesion should be biopsied (or referred for biopsy) or not (provide reassurance). We recognize that in some clinical situations a physician may choose to refer a patient to a dermatologist to make this decision, but users were deliberately not given this option. Because the educational program emphasized the two choices (biopsy/reassure), we felt that presenting only these two alternatives would be the most rigorous test of the program. Users had the option of viewing enlargements of the clinical lesions. There was no time limit on the pretest or posttest. Users were given information on correct answers only on the posttest. Following the posttest, there was a voluntary online satisfaction survey to assess program effectiveness. No attempt was made to prevent users from obtaining skin cancer information from other sources between the two tests, nor was any attempt made to measure possible decay in knowledge or skills over time after completing the program.

The questions used to test skin cancer knowledge represented important teaching points from the four educational modules. The information required to answer these questions correctly was presented in the teaching program and supported by evidence from the literature. The 15 clinical vignettes used to test clinical skills were chosen to give adequate representation of malignant lesions, premalignant lesions, benign lesions that could be confused with malignancy, and benign lesions that could be safely ignored. Although a biopsy diagnosis had been obtained for every case presented in the vignettes, the “correct” answer was based on successful application of the information in the program, not on the final biopsy diagnosis. For example, a small pigmented lesion that had recently changed should be biopsied, even if the final histologic diagnosis was benign. We felt this approach best represented good clinical practice. None of the testing images were used in the education program. A description of the knowledge questions and clinical vignettes, including correct answers, is shown in the Appendix. The pretest/posttest also contained 7 questions about respondent confidence and beliefs (see Table 1).

Table 1.

Mean Confidence and Attitude Scores*

| Item | Pretest | Posttest | P Value |
|---|---|---|---|
| Question 13: I am not confident in my ability to distinguish benign pigmented lesions from early melanoma. | 3.19 | 2.55 | <.001 |
| Question 14: It is more important for primary care physicians to know when to refer out for biopsy of suspicious lesions than to accurately diagnose all types of skin cancer. | 4.06 | 4.36 | <.001 |
| Question 15: The primary care physician's major role in the management of skin cancer should be to provide the initial assessment of lesions. | 3.95 | 4.21 | <.001 |
| Question 16: My professional training provided me with a good grounding in the clinical diagnosis of skin cancers. | 3.00 | 3.08 | .0974 |
| Question 17: I am confident in my ability to provide the appropriate management (including referral) for people with suspicious pigmented lesions. | 3.53 | 4.03 | <.001 |
| Question 18: I believe that many of my patients are at risk of developing skin cancer. | 3.86 | 4.11 | <.001 |
| Question 19: I am confident in my ability to diagnose late melanoma. | 3.64 | 4.21 | <.001 |

*Mean pretest and posttest confidence and attitude scores of 354 physicians, where 1 = strongly disagree with the statement, 2 = disagree, 3 = not sure, 4 = agree, and 5 = strongly agree. P values test the difference between pretest and posttest mean scores.

Analysis

Primary study endpoints were change in confidence in managing pigmented lesions, change in skin cancer knowledge, and overall change in recommended management approaches to the 15 clinical vignettes between the pretest and posttest. Secondary endpoints were changes in the sensitivity and specificity of management decisions and measures of user satisfaction with the program.

We summarized individual responses and compared results between the pretest and posttest. Answers to the confidence survey were expressed as mean scores for each item on a 5-point Likert scale and presented as differences between pretest and posttest scores. We calculated a summary knowledge score (mean percentage correct) for all 10 knowledge questions. Answers to the management skills test were expressed as the percentage of correct responses for each question as well as the overall percentage of correct responses. Although not specified in advance, we also analyzed answers to the management skills test based on sensitivity (percentage of lesions requiring biopsy for which study participants selected “recommend biopsy”) and specificity (percentage of lesions not requiring biopsy for which study participants selected “reassure”). Paired t tests were used to assess the statistical significance of the change in scores between the pretest and the posttest; 2-sided P values are reported. We also summarized pretest and posttest responses by category of respondent (nondermatology referral practice, e.g., surgery, cardiology; dermatology; primary care; and not in active practice).
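The pretest/posttest comparison described above can be sketched in Python as follows. This is a minimal illustration, not the authors' analysis code: the function names `percent_correct` and `paired_t` and the sample scores are hypothetical. Because the Python standard library has no t-distribution CDF, the sketch computes only the paired t statistic and its degrees of freedom; a library routine such as `scipy.stats.ttest_rel` returns both the statistic and a two-sided P value.

```python
import math
import statistics


def percent_correct(answers, key):
    """Percentage of items one user answered correctly (hypothetical helper)."""
    correct = sum(1 for given, expected in zip(answers, key) if given == expected)
    return 100.0 * correct / len(key)


def paired_t(pre, post):
    """Paired t statistic and degrees of freedom for post-minus-pre differences.

    pre and post hold one score per user, listed in the same user order.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 users")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD, n - 1 denominator
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    return t_stat, n - 1


# Hypothetical knowledge-test scores (percent correct) for four users.
pre_scores = [50, 60, 40, 70]
post_scores = [80, 90, 70, 90]
t_stat, df = paired_t(pre_scores, post_scores)
print(f"t = {t_stat:.2f}, df = {df}")  # t = 11.00, df = 3
```

The test is paired because each physician serves as his or her own control: the statistic is built from each user's own change in score rather than from two independent groups.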

RESULTS

Validation of the Test Instrument

Three dermatology faculty members, two private dermatology practitioners, and three dermatology residents reviewed the knowledge and clinical vignette questions on the pretest/posttest. The expert reviewers averaged 80% (8 of 10) correct answers on the knowledge questions and 89% correct on the 15 vignettes. For 9 of the 15 clinical vignettes, all reviewers agreed with our “correct” answer. For 3 vignettes, 7 of 8 agreed with our answer. For the remaining 3 vignettes, 6 (question 23), 5 (question 26), and 3 (question 28) reviewers agreed with our answer. With no clear guidelines to decide which level of expert agreement was “acceptable,” we chose to analyze our results with and without the inclusion of data from question 28. When asked their opinion, all expert reviewers felt that the test instrument was the right length and covered clinically relevant information.

User Demographic Data

During the study period, 691 users completed the pretest and started Melanoma Education for Primary Care. Of this group, 354 users stated they were physicians, completed the entire program and posttest, and requested CME credit; these users made up the study population. Self-reported data on dermatology training and current medical practice showed that 4% of users had no dermatology training, 41% had only medical school lectures in dermatology, 24% had residency or postgraduate dermatology lectures, 29% had rotated on a dermatology service, and 2% had completed a dermatology residency. The majority of users (65%) were in active primary care practice. Most of the remaining users were in nondermatology referral specialties (22%); 10% were not in active practice, and 2% were in active dermatology practice.

Changes in Confidence and Attitudes in the Study Population

After viewing the program, physicians felt considerably more confident in their abilities to manage pigmented lesions, including melanoma. There were generally positive, although less pronounced, changes in attitude about the role of primary care physicians and the risk of skin cancer. The results for all physicians are shown in Table 1.

Changes in Skin Cancer Knowledge in the Study Population

Along with the improvement in confidence, there was a significant improvement in skin cancer knowledge between the pretest and the posttest. The overall percentage of correct answers was 52% on the pretest versus 85% on the posttest (P < .001). We also analyzed results on the knowledge test by the current medical practice of the user. Eight dermatologists (not the same persons as the expert reviewers) took the educational program and completed the pretest and posttest; they initially performed significantly better than other users on the knowledge questions. The differences among the four types of practitioners were significant for overall pretest knowledge scores (P < .001) and for overall improvement in scores (P = .03), but not for overall posttest knowledge scores (P = .10). The pretest and posttest knowledge scores, by clinical practice, are shown in Table 2.

Table 2.

Knowledge Scores*

| Group | N | Pretest Correct, % | Posttest Correct, % | P Value |
|---|---|---|---|---|
| All | 354 | 52 | 85 | <.001 |
| Nondermatology referral | 79 | 49 | 84 | <.001 |
| Dermatology | 8 | 76 | 90 | .045 |
| Primary care | 232 | 52 | 87 | <.001 |
| Not in active practice | 35 | 48 | 81 | <.001 |

*Percentage of correct answers on the 10 knowledge questions, by current practice of respondent. P values test differences between pretest and posttest scores within each class of respondent.

Changes in Clinical Decision Skills in the Study Population

When presented with 15 clinical vignettes, users improved their decision making on 11 but performed worse on 4. On the pretest, the average user answered 81% of the vignettes correctly, compared with 90% on the posttest (a 9-percentage-point improvement, P < .001). Removing question 28 (the dysplastic nevus) from the analyses changed the pretest average to 84% correct, the posttest average to 92% correct, and the difference to 8 percentage points (P < .001). There were no significant differences among the four types of practitioners in pretest vignette scores (P = .09), posttest scores (P = .35), or improvement (P = .19).

The vignettes reflected a roughly even mix of benign and potentially malignant lesions, whereas in actual clinical practice more patients with benign lesions would likely present to the physician. To further evaluate the effectiveness of our program, we estimated the sensitivity (percentage of times that a physician correctly recommended biopsy of a lesion requiring it) and specificity (percentage of times a physician correctly reassured the patient that further action was not necessary) of the physicians' clinical judgment before and after the program. We performed these analyses with and without the problematic lesion (question 28, a “negative” case). These analyses demonstrated considerable improvement in the specificity of clinical judgment whether the question was included (from 69% to 89%, P < .001) or excluded (from 72% to 92%, P < .001). However, this improvement in specificity was accompanied by a slight decrease in sensitivity (from 95% to 91%, P < .001).
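The sensitivity and specificity calculations can be sketched as below, using the program's “correct” answers for questions 20–34 from the Appendix (which, as described in Methods, reflect correct application of the teaching program rather than the biopsy diagnosis). The sketch assumes that responses are pooled across all users and vignettes; the paper does not say whether the reported figures were pooled or averaged per user, so this is one plausible reading. The function name and the sample user are hypothetical.

```python
# Program "correct" answers for the clinical vignettes (Appendix, questions 20-34).
ANSWER_KEY = {
    20: "reassure", 21: "biopsy", 22: "reassure", 23: "reassure",
    24: "biopsy", 25: "biopsy", 26: "reassure", 27: "biopsy",
    28: "reassure", 29: "reassure", 30: "biopsy", 31: "biopsy",
    32: "biopsy", 33: "reassure", 34: "reassure",
}


def sensitivity_specificity(all_responses, key=ANSWER_KEY, exclude=()):
    """Pooled sensitivity and specificity, in percent (hypothetical helper).

    all_responses: one dict per user mapping question number to
    "biopsy" or "reassure".
    exclude: question numbers to leave out of the analysis (e.g. {28}).
    """
    tp = fn = tn = fp = 0
    for responses in all_responses:
        for question, expected in key.items():
            if question in exclude:
                continue
            answer = responses[question]
            if expected == "biopsy":
                # Lesion requires biopsy: a "biopsy" answer is a true positive.
                if answer == "biopsy":
                    tp += 1
                else:
                    fn += 1
            else:
                # Lesion does not require biopsy: "reassure" is a true negative.
                if answer == "reassure":
                    tn += 1
                else:
                    fp += 1
    sensitivity = 100.0 * tp / (tp + fn)
    specificity = 100.0 * tn / (tn + fp)
    return sensitivity, specificity


# Hypothetical user who misses question 21 and over-calls question 28.
user = dict(ANSWER_KEY)
user[21] = "reassure"
user[28] = "biopsy"
sens, spec = sensitivity_specificity([user])
print(f"sensitivity {sens:.1f}%, specificity {spec:.1f}%")  # sensitivity 85.7%, specificity 87.5%
sens, spec = sensitivity_specificity([user], exclude={28})
print(f"sensitivity {sens:.1f}%, specificity {spec:.1f}%")  # sensitivity 85.7%, specificity 100.0%
```

Because question 28 is a “reassure” case, excluding it can change only the specificity figure; sensitivity depends solely on the seven vignettes whose correct answer is biopsy.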

User Satisfaction with the Program

The voluntary satisfaction survey was completed by 309 users in the study population (87% of the total). These participants were quite enthusiastic about the program. On a 5-point scale, where 5 was the most favorable rating, the average ratings (± standard deviation) for the three satisfaction questions were as follows: (1) 4.72 ± 0.51 for how well the learning objectives were met; (2) 4.50 ± 0.86 for the relevance of the information in this program to the user's clinical practice; and (3) 4.83 ± 0.41 for the overall rating of the program.

When asked to evaluate the quality of the teaching images, no users rated them “poor” or “below average.” Nineteen users felt the images were “average,” 76 felt they were “above average,” and 214 felt they were “excellent.” When asked about download/transmission times, 5 users felt that times were “poor,” 5 felt the times were “below average” (i.e., inadequate), 59 felt they were “average,” 104 felt they were “above average” (i.e., good), and 134 felt that download/transmission times were “excellent.”

DISCUSSION

This study demonstrates that CME can be effectively and efficiently distributed via the Internet. In six weeks in 1999, with very little advertising, more than 350 physicians voluntarily found and completed this complex computer-based course on skin cancer.

The study also shows that an easily distributed, online program can improve physician confidence and knowledge and, possibly, skills in managing skin cancer. This type of program could be beneficial because lack of confidence in identifying suspect lesions is a major barrier to primary care physicians performing skin cancer screening.1

One conclusion from our study is that a large (20-percentage-point) increase in user specificity (i.e., the ability to correctly decide that a pigmented lesion does not need further action) was partly offset by a small (4-percentage-point) decrease in sensitivity (i.e., the ability to correctly decide that a lesion should be biopsied). Some have noted that primary care clinicians tend to be overly sensitive and inadequately specific in their judgment of potential skin cancer15; thus, results such as ours might be considered an improvement.

However, we believe that this debate is not the central issue. The task is to devise a program that can reliably improve both the sensitivity and the specificity of a clinician's judgment. When we reevaluated our data, we found that virtually all of the decrease in sensitivity was due to a single vignette (question 21, Appendix) in which users failed to biopsy a small (<6 mm), round, symmetrical, homogeneously brown spot in a preexisting mole. In other words, this lesion met none of the ABCD criteria. The clue was that the patient had come in for “a change in color.” On biopsy, the lesion was found to be a superficial spreading melanoma. Thus, our users had correctly applied part of the algorithm, but not all of it. This information allows us to improve our program by reemphasizing the need to consider “change” as well as the static characteristics of a lesion. A particular benefit of Internet-based CME is that such programmatic changes are easy to implement and test.

As noted, the evaluation tool affects interpretation of a CME program's effectiveness. If we had substituted a different lesion for question 21, we might have shown an improvement in sensitivity as well as specificity. We measured performance against a test instrument that had been reviewed by eight dermatologists, but is this the best measure? It can be argued that specific clinical actions (with real patients) are better measures of educational interventions, but in any case, who designs the measurement tool(s)? Based on our experience, we recommend that externally developed, externally validated instruments, representing consensus gold standards, be used to evaluate CME programs. All CME developers should be able to test their work against the same external reference standards.

Another issue that exists in this and almost any other evaluation of CME is selection bias. While CME can only be evaluated in persons who choose to use it, we do not know if our group of Internet users was representative of most physicians. As early users of online CME, they may have been more receptive to computer-based education than others.

We tested this program with a commonly used but artificial device: asking knowledge questions and presenting clinical vignettes. Although improvements in knowledge and skills are prerequisites to improvement in performance, we do not know whether the changes measured on this test will persist or whether they will translate into actual practice and improved patient care. We believe these types of studies are important and are pursuing them.

To conclude, Internet-based CME, which can also be described as computer-assisted distance learning, has the potential to address the recognized shortcomings of traditional course-based CME. Reviews have shown that course-based and lecture-based CME does not improve physician performance or health outcomes. The authors of these reviews call for “interactive CME sessions that enhance participant activity and provide the opportunity to practice skills” as a better alternative.16 We believe that well-crafted Internet-based CME can meet these requirements and that it can achieve goals for CME that have been advocated for almost 40 years: a national faculty, equal opportunity for all physicians, individual learning, continuous availability, an organized curriculum, active participation by the learner, self-testing, and ongoing evaluation.17

Acknowledgments

This work was supported by grant 1 R 43 CA78056-01 from the National Cancer Institute. Its contents are solely the responsibility of its authors and do not represent the views of the National Cancer Institute.

APPENDIX

Pretest/Posttest Knowledge Questions and Clinical Vignettes

| Question | Question or Clinical History | Answer Choice or Pathologic Diagnosis | Correct Answer |
|---|---|---|---|
| 3 | Dysplastic (atypical) nevi: | 1. Should all be surgically removed | Incorrect |
| | | 2. Become melanomas over time | Incorrect |
| | | 3. Are significant markers of those prone to melanoma | Correct |
| | | 4. Are the results of multiple sunburns during childhood | Incorrect |
| | | 5. All of the above | Incorrect |
| 4 | The term “SPF” on a sunscreen refers to: | 1. The “Skin Persistence Factor” or how long the sunscreen will stay on the skin | Incorrect |
| | | 2. The percentage of the sun's ultraviolet rays that are blocked from penetrating the skin | Incorrect |
| | | 3. The increase in time one can stay in the sun before burning | Correct |
| | | 4. The ultraviolet frequencies absorbed by the sunscreen | Incorrect |
| 5 | Which skin malignancy is not related to sun exposure? | 1. Basal cell carcinoma | Incorrect |
| | | 2. Squamous cell carcinoma | Incorrect |
| | | 3. Malignant melanoma | Incorrect |
| | | 4. None of the above | Correct |
| 6 | Common acquired nevi: | 1. May be brown, black, or blue in color | Correct |
| | | 2. Typically begin as elevated nodules in childhood, becoming flatter with age | Incorrect |
| | | 3. Are more likely to arise in persons over age 40 | Incorrect |
| | | 4. Do not exhibit a “halo” (depigmentation of adjacent skin) unless they have undergone malignant degeneration | Incorrect |
| | | 5. None of the above | Incorrect |
| 7 | What is the appropriate skin cancer risk management strategy for a fair-skinned 20-year-old woman with facial freckles who burns easily and tans poorly, but has no history of skin cancer or nevi? | 1. With no other risk factors, no strategy is needed | Incorrect |
| | | 2. She should receive education on skin cancer | Incorrect |
| | | 3. She should receive education on skin cancer and sun protection techniques | Correct |
| | | 4. She should receive education and also be seen by a physician yearly for screening | Incorrect |
| 8 | The mortality rate of cutaneous melanoma is most closely related to: | 1. The anatomic site of the tumor | Incorrect |
| | | 2. The thickness of the tumor | Correct |
| | | 3. How dark the tumor is | Incorrect |
| | | 4. The gender of the patient | Incorrect |
| | | 5. The age of the patient | Incorrect |
| 9 | Which component of ultraviolet radiation is most strongly associated with skin cancer? | 1. The 100–280 nm range, UVC | Incorrect |
| | | 2. The 280–320 nm range, UVB | Correct |
| | | 3. The 320–400 nm range, UVA | Incorrect |
| 10 | Which of the following is most likely to require biopsy or removal? | 1. A 10 × 6-mm irregular waxy lesion with a stuck-on appearance that has been slowly growing and changing color in a 60-year-old man | Incorrect |
| | | 2. A 12-mm flat, irregular, brown-colored lesion in a newborn | Incorrect |
| | | 3. A newly arisen 6-mm flat, irregular, blue-brown lesion on the lower lip of a 25-year-old woman | Incorrect |
| | | 4. An 8-mm irregular, slightly raised, brown and black lesion that has been on the back of a 30-year-old man for an unknown time but has recently begun to itch | Correct |
| | | 5. All of these should be biopsied or removed | Incorrect |
| 11 | Which of the following attributes of a garment is most important in protecting against ultraviolet radiation? | 1. Tightness of the weave | Correct |
| | | 2. Color | Incorrect |
| | | 3. Fiber type | Incorrect |
| | | 4. Weight | Incorrect |
| 12 | Which risk factor is most strongly associated with malignant melanoma? | 1. Freckling and 15 dysplastic nevi on the torso | Correct |
| | | 2. African American skin type | Incorrect |
| | | 3. More than 20 benign-appearing small moles on the trunk | Incorrect |
| | | 4. Multiple blistering sunburns as a child | Incorrect |
| 20 | 18 m/o female, unchanged since birth | Congenital nevus | Reassure |
| 21 | 48 y/o male, dark spot appeared 6 months ago in long-standing mole | Superficial spreading melanoma | Biopsy |
| 22 | 24 y/o woman, persistent spot on side of neck | Compound nevus | Reassure |
| 23 | 62 y/o male, slowly enlarging lesion on trunk | Seborrheic keratosis | Reassure |
| 24 | 65 y/o male, asymptomatic, slowly enlarging blemish on left cheek | Lentigo maligna melanoma | Biopsy |
| 25 | 43 y/o female with lesion on arm, told by friend to get it checked | Superficial spreading melanoma | Biopsy |
| 26 | 60 y/o male, asymptomatic, worried about cancer | Venous lake | Reassure |
| 27 | 71 y/o male, dark spot for years, asymptomatic | Lentigo maligna melanoma | Biopsy |
| 28 | 17 y/o female, asymptomatic, several similar lesions | Dysplastic nevus | Reassure |
| 29 | 43 y/o woman, lesion present for years without change | Compound nevus | Reassure |
| 30 | 22 y/o female, dark spot appeared among freckles 3 months ago | Solar lentigo | Biopsy |
| 31 | 39 y/o male, reports that parts of a mole are getting lighter | Superficial spreading melanoma (regression) | Biopsy |
| 32 | 36 y/o male, lesion noted on physical exam, patient unaware that it was there | Superficial spreading melanoma | Biopsy |
| 33 | 58 y/o male, asymptomatic | Seborrheic keratosis | Reassure |
| 34 | 30 y/o male, asymptomatic, rubs shirtsleeve | Compound nevus, stable | Reassure |

Questions used in the online pretest/posttest to measure changes in knowledge (questions 3–12) and clinical skills (questions 20–34).

Questions 1 and 2 (demographic data) were included only on the pretest (results shown in text); questions 13–19 (opinions) are shown in Table 1.

REFERENCES

1. Kirsner RS, Muhkerjee S, Federman DG. Skin cancer screening in primary care: prevalence and barriers. J Am Acad Dermatol. 1999;41:564–6.
2. Morton CA, Mackie RM. Clinical accuracy of the diagnosis of malignant melanoma. Br J Dermatol. 1998;138:283–7. doi: 10.1046/j.1365-2133.1998.02075.x.
3. Whited JD, Hall RP, Simel DL, Horner RD. Primary care clinicians' performance for detecting actinic keratoses and skin cancer. Arch Intern Med. 1997;157:985–90.
4. Gerbert B, Bronstone A, Wolff M, et al. Improving primary care residents' proficiency in the diagnosis of skin cancer. J Gen Intern Med. 1998;13:91–7. doi: 10.1046/j.1525-1497.1998.00024.x.
5. Doolan NC, Martin GJ, Robinson JK, Rademaker AF. Skin cancer control practices among physicians in a university general medicine practice. J Gen Intern Med. 1995;10:515–9. doi: 10.1007/BF02602405.
6. Robinson JK, McGaghie WC. Skin cancer detection in a clinical practice examination with standardized patients. J Am Acad Dermatol. 1996;34:709–11. doi: 10.1016/s0190-9622(96)80093-4.
7. Stephenson A, From L, Cohen A, Tipping J. Family physicians' knowledge of malignant melanoma. J Am Acad Dermatol. 1997;37:953–7. doi: 10.1016/s0190-9622(97)70071-9.
8. Weinstock MA, Goldstein MG, Dubé CE, Rhodes AR, Sober AJ. Basic skin cancer triage for teaching melanoma detection. J Am Acad Dermatol. 1996;34:1063–6. doi: 10.1016/s0190-9622(96)90287-x.
9. Harris JM, Salasche SJ, Harris RB. Using the Internet to teach melanoma management guidelines to primary care physicians. J Eval Clin Pract. 1999;5:199–211. doi: 10.1046/j.1365-2753.1999.00194.x.
10. Friedman RJ, Rigel DS, Kopf AW. Early detection of malignant melanoma: the role of physician examination and self-examination of the skin. CA Cancer J Clin. 1985;35:130–51. doi: 10.3322/canjclin.35.3.130.
11. Rigel DS, Friedman RJ, Perelman RO. The rationale of the ABCDs of early melanoma. J Am Acad Dermatol. 1993;29:1060–1. doi: 10.1016/s0190-9622(08)82059-2.
12. MacKie R. Clinical recognition of early invasive malignant melanoma. BMJ. 1990;301:1005–6. doi: 10.1136/bmj.301.6759.1005.
13. Weinstock MA, Goldstein MG, Dubé CE, Rhodes AR, Sober AJ. Clinical diagnosis of moles vs melanoma. JAMA. 1998;280:881–2.
14. Robinson JK. Clinical crossroads: A 28-year-old fair-skinned woman with multiple moles. JAMA. 1997;278:1693–9.
15. Whited JD, Hall RP, Simel DL, Horner RD. Primary care clinicians' performance for detecting actinic keratoses and skin cancer. Arch Intern Med. 1997;157:985–90.
16. Davis D, O'Brien MA, Freemantle N, Wolf FM, Mazmanian P, Taylor-Vaisey A. Impact of formal continuing medical education: do conferences, workshops, rounds, and other traditional continuing medical education activities change physician behavior or health outcomes? JAMA. 1999;282:867–74. doi: 10.1001/jama.282.9.867.
17. Dryer BV. Lifetime learning for physicians: principles, practices, proposals. J Med Educ. 1962;37(part 2):89–92.