Abstract

OBJECTIVES

To measure the effectiveness of an educational intervention designed to teach residents four essential evidence-based medicine (EBM) skills: question formulation, literature searching, understanding quantitative outcomes, and critical appraisal.

DESIGN

Firm-based, controlled trial.

SETTING

Urban public hospital.

PARTICIPANTS

Fifty-five first-year internal medicine residents: 18 in the experimental group and 37 in the control group.

INTERVENTION

An EBM course focusing on the four essential EBM skills, taught by senior faculty and chief residents for 2 hours per week over 7 consecutive weeks.

MEASUREMENTS AND MAIN RESULTS

The main outcome measure was performance on an EBM skills test that was administered four times over 11 months: at baseline and at three time points postcourse. Postcourse test 1 assessed the effectiveness of the intervention in the experimental group (primary outcome); postcourse test 2 assessed the control group after it crossed over to receive the intervention; and postcourse test 3 assessed durability. Baseline EBM skills were similar in the two groups. After receiving the EBM course, the experimental group achieved significantly higher postcourse test scores (adjusted mean difference, 21 percentage points; 95% confidence interval, 13 to 28; P < .001). Improvements were seen in three of the four EBM skill domains (formulating questions, searching, and quantitative understanding; P < .005 for all) but not in critical appraisal skills (P = .4). After crossing over to receive the educational intervention, the control group achieved similar improvements. Both groups sustained these improvements over 6 to 9 months of follow-up.

CONCLUSIONS

A brief structured educational intervention produced substantial and durable improvements in residents' cognitive and technical EBM skills.

Keywords: evidence-based medicine, clinical trial, graduate medical education, internship and residency

Clinicians recognize the potential value of evidence-based medicine (EBM), but many feel ambivalent about its use in patient care. Major barriers to implementation include a perception that the appeal of EBM is more academic than practical; clinicians also cite their own lack of EBM skills as a contributing factor.1

Many postgraduate training programs currently attempt to teach EBM skills, primarily in journal clubs and through didactic lectures. However, published evaluations of these efforts have shown only limited effectiveness.2–8 Most of these studies have focused on critical appraisal while excluding or inadequately measuring other EBM skills.9 Consequently, there are no published studies that assess how best to teach more fundamental EBM skills: the ability to create searchable questions that arise from knowledge gaps in patient care and the ability to perform efficient literature searches to answer those questions.10 The acquisition of these skills, in addition to those of critical appraisal, is essential if EBM is to favorably influence patient outcomes.11–13

We conducted a trial to test whether an educational program could improve four essential EBM skills among first-year internal medicine residents: (1) posing well-structured, searchable questions arising from clinical cases; (2) performing efficient electronic literature searches to answer these questions; (3) understanding quantitative outcomes from published studies about diagnosis and treatment; and (4) evaluating the methodological quality of published studies and their clinical relevance to specific patients. We also carried out repeat assessments to see if these acquired skills were durable over time. These four skills are similar, but not identical, to those espoused by proponents of EBM—namely, question formulation, literature searching, critical appraisal skills, and application of the results to the patient.14

METHODS

Study Design

We performed a firm-based, controlled trial of an EBM educational course for first-year residents in the Cook County Hospital internal medicine training program. All residents and faculty in the program are assigned (without formal randomization) to one of three firms in the Department of Medicine.

There were 55 eligible first-year residents in our program at the start of the study. First-year residents in one firm (n = 18) were assigned to the experimental group; those in the remaining two firms (n = 37) were assigned to the control group. The experimental firm received a 7-week course taught twice weekly by three faculty members (BMR, ATE, and RAM) and six chief medical residents. During this time, the control firms participated in equally intensive educational curricula of identical duration, usually case-based clinical presentations in small interactive groups emphasizing clinical practice, but without formal EBM teaching.

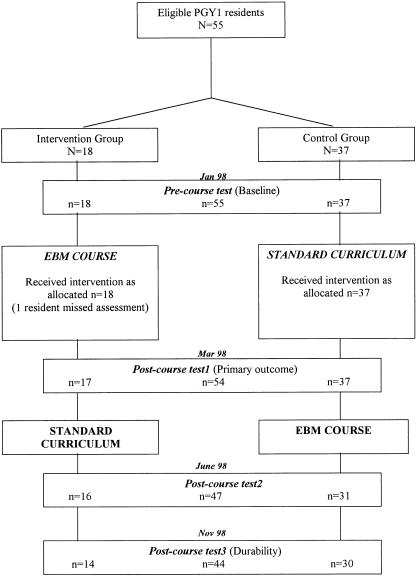

Evidence-based medicine skills were assessed before the intervention in January 1998 (precourse test); after the experimental firm completed the EBM course in March 1998 (postcourse test 1); after the control group received the EBM course in June 1998 (postcourse test 2); and a final time in November 1998 (postcourse test 3). The primary outcome was the difference in test scores at postcourse test 1. The study design is shown in Figure 1.

FIGURE 1.

Study design and timetable.

Educational Intervention

The EBM course consisted of 1-hour noon conferences twice a week for 7 consecutive weeks, plus a 1.5-hour session in a computer lab. The curriculum addressed diagnosis and treatment rather than risk, prognosis, or other content areas. The first session of each week was a didactic seminar that focused on selected components of the EBM curriculum. In the second session, these skills were practiced in an interactive format using actual patient cases (Table 1).

Table 1.

Evidence-Based Medicine Educational Curriculum and Course Design

| Week | Session 1: Didactic Seminar | Session 2: Practice |
|---|---|---|
| 1 | Introduction and question formulation | Case presentations |
| | Asking clinical questions | |
| 2 | Literature searching | Case presentation |
| | Computerized searching for diagnosis and treatment articles | Searches assigned for diagnosis questions |
| 3 & 4 | Diagnosis | Case presentations |
| | Quantitative concepts: 2 × 2 tables, sensitivity, specificity, pretest and posttest probabilities | Searches assigned for diagnosis questions (Week 3) |
| | Core validity criteria for diagnosis studies | Searches assigned for treatment questions (Week 4) |
| | Review assigned searches (diagnosis) | |
| 5 & 6 | Treatment | Case presentations |
| | Quantitative concepts: ARR, RRR, NNT, confidence intervals | Searches assigned for treatment questions (Week 5) |
| | Core validity criteria for treatment studies | Searches assigned for both diagnosis and treatment questions (Week 6) |
| | Review assigned searches (treatment) | |
| 7 | Review | Postcourse test 1 |
| | Major topics and assigned searches | |

ARR indicates absolute risk reduction; RRR, relative risk reduction; NNT, number needed to treat.

In teaching question formulation, we focused on four components: (1) a defined patient population; (2) the intervention of interest (a test or a treatment); (3) clinically meaningful outcome measures; and (4) a comparison group.15 Literature searching was taught in one didactic session and one computer lab session and was reinforced weekly during follow-up discussions. Diagnosis seminars focused on 2 × 2 tables, rather than likelihood ratios and odds, and on the core criteria needed to appraise studies of diagnostic tests.16 Treatment seminars focused on numerical measures of treatment benefits and harms (risk reductions and number needed to treat [NNT]),17 on understanding confidence intervals (CIs), and on the core criteria for appraising the validity of treatment trials.16
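The diagnosis and treatment measures named above reduce to a few lines of arithmetic. The following Python sketch is a hypothetical illustration rather than course material; the class and function names and all counts and rates are assumptions chosen for easy arithmetic. It computes sensitivity and specificity from a 2 × 2 table, converts a pretest probability to a posttest probability by applying Bayes' theorem directly to those proportions (in keeping with the emphasis on 2 × 2 tables rather than likelihood ratios), and derives ARR, RRR, and NNT from two event rates.

```python
import math
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Hypothetical counts from a diagnostic 2 x 2 table: test result vs. reference standard."""
    true_pos: int   # test positive, disease present
    false_pos: int  # test positive, disease absent
    false_neg: int  # test negative, disease present
    true_neg: int   # test negative, disease absent

    @property
    def sensitivity(self) -> float:
        # Proportion of patients with the disease who test positive.
        return self.true_pos / (self.true_pos + self.false_neg)

    @property
    def specificity(self) -> float:
        # Proportion of patients without the disease who test negative.
        return self.true_neg / (self.true_neg + self.false_pos)


def posttest_probability(pretest: float, sens: float, spec: float,
                         positive: bool = True) -> float:
    """Bayes' theorem applied to 2 x 2 proportions (no odds or likelihood ratios)."""
    if positive:
        with_disease = sens * pretest                   # true positives per patient tested
        without_disease = (1 - spec) * (1 - pretest)    # false positives per patient tested
    else:
        with_disease = (1 - sens) * pretest             # false negatives per patient tested
        without_disease = spec * (1 - pretest)          # true negatives per patient tested
    return with_disease / (with_disease + without_disease)


def treatment_effects(control_rate: float, treated_rate: float) -> dict:
    """ARR, RRR, and NNT from event rates; assumes treatment lowers the rate (ARR > 0)."""
    arr = control_rate - treated_rate
    rrr = arr / control_rate
    # NNT is conventionally rounded up; round first so float noise cannot add a patient.
    nnt = math.ceil(round(1 / arr, 6))
    return {"ARR": arr, "RRR": rrr, "NNT": nnt}


# Hypothetical diagnostic study: 90 of 100 diseased and 160 of 200 non-diseased
# patients are classified correctly.
table = TwoByTwo(true_pos=90, false_pos=40, false_neg=10, true_neg=160)
print(table.sensitivity, table.specificity)  # 0.9 0.8
print(round(posttest_probability(0.25, table.sensitivity, table.specificity), 2))  # 0.6

# Hypothetical trial: 20% of control and 15% of treated patients have the outcome.
effects = treatment_effects(0.20, 0.15)
print(effects["NNT"])  # 20
```

In this example a positive test raises a 25% pretest probability to 60%, and a 5-point absolute risk reduction (a 25% relative reduction) corresponds to treating 20 patients to prevent one outcome.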

Outcome Measures

The primary outcome measure was a written test of EBM skills; we also surveyed residents' self-reported attitudes and behaviors. The EBM skills test included five sets of questions. Each set was prefaced by a clinical case scenario and designed to evaluate one of five content areas: (1) posing well-structured clinical questions; (2) performing computerized literature searches for both diagnostic and treatment questions (using Ovid MEDLINE, the only resource available at our institution); (3) understanding quantitative aspects of published diagnostic studies (2 × 2 tables, predictive value, pretest and posttest probabilities, etc.); (4) understanding quantitative aspects of published treatment studies (relative risk reduction, absolute risk reduction, number needed to treat, confidence intervals); and (5) assessing the quality of treatment studies and their clinical relevance to particular patients. For scoring, we collapsed content areas 3 and 4 into one skill domain (quantitative understanding), yielding four tested skill domains. The total score (maximum 100%) was a weighted average of the scores in the four domains: formulating questions (16%), literature searching (36%), quantitative understanding (24%), and appraisal of study quality and clinical relevance (24%).
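The weighted total can be reproduced from the domain scores as a check on the scoring scheme. In this Python sketch the dictionary keys and function name are hypothetical; the weights are those stated above, and the inputs are the rounded group means reported in Table 3. Because the total is linear in the domain scores, weighting the group means gives the group mean total.

```python
# Domain weights stated in the Methods; they sum to 1.0 (100% of the total score).
WEIGHTS = {
    "question_formulation": 0.16,
    "literature_searching": 0.36,
    "quantitative_understanding": 0.24,
    "quality_and_relevance": 0.24,
}
assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9


def total_score(domain_pct: dict) -> float:
    """Weighted average of domain scores, each given as percent correct (0 to 100)."""
    return sum(WEIGHTS[domain] * domain_pct[domain] for domain in WEIGHTS)


# Group mean domain scores on postcourse test 1 (Table 3), already rounded.
experimental = {"question_formulation": 82, "literature_searching": 74,
                "quantitative_understanding": 59, "quality_and_relevance": 44}
control = {"question_formulation": 56, "literature_searching": 43,
           "quantitative_understanding": 46, "quality_and_relevance": 38}

print(round(total_score(experimental)), round(total_score(control)))  # 64 45
```

The reconstructed totals (64.48 and 44.6) agree with the published totals of 64% and 45% to within the rounding of the domain means; the same check on the precourse means in Table 2 gives 40% and 42%.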

The overall structure of the test, the weighting of each skill domain, and the specific content areas remained unchanged for the four tests given between January and November. However, the clinical cases were changed and the wording of test questions was varied somewhat to minimize the effect of recall on test performance. Two raters graded each exam independently, and disagreements were resolved by consensus or after consultation with a third or fourth coinvestigator. The raters were not blinded to study group assignment.

In addition, we collected basic demographic data from the residents and administered a survey of self-reported EBM skills and behavior, which was repeated each time the written test was given. To minimize the effect of testing four times in 11 months, residents received no feedback on their test performance and no answers until the study was complete.

Statistical Analysis

Our primary analysis compared mean total test scores, and scores in each of the four domains, between the control and intervention groups after controlling for baseline differences. The study had greater than 90% power to detect a 15-percentage-point difference in total test scores, assuming a standard deviation of 15 percentage points and an α of 5%.
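The power statement can be checked approximately. The sketch below assumes a two-sided test, a normal approximation, and the actual group sizes of 18 and 37; the paper does not state which method or sample sizes were used for its calculation, so the function is a hypothetical illustration. A 15-point difference with a standard deviation of 15 corresponds to an effect size of 1.0.

```python
import math
from statistics import NormalDist


def two_sample_power(delta: float, sd: float, n1: int, n2: int,
                     alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample comparison of means (normal approximation)."""
    z = NormalDist()
    se = sd * math.sqrt(1 / n1 + 1 / n2)   # standard error of the difference in means
    z_crit = z.inv_cdf(1 - alpha / 2)      # about 1.96 for alpha = 0.05
    shift = delta / se                     # expected test statistic under the alternative
    # Probability that the statistic falls beyond either critical value.
    return (1 - z.cdf(z_crit - shift)) + z.cdf(-z_crit - shift)


power = two_sample_power(delta=15, sd=15, n1=18, n2=37)
print(f"{power:.2f}")  # 0.94
```

Under these assumptions the power is about 0.94, consistent with the stated "greater than 90%"; a calculation based on the t distribution would give a slightly lower figure.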

We tested the differences in postcourse test scores with an analysis of covariance while controlling for precourse test performance. We also examined whether other baseline characteristics of study participants were possibly confounding or modifying the effect of the educational intervention.
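With a single covariate and a common slope, the analysis of covariance estimate of the group effect has a closed form: the raw difference in posttest means minus the pooled within-group slope times the difference in pretest means. The Python sketch below illustrates that formula on hypothetical scores (not study data); the function name is hypothetical, and it omits the standard errors, confidence intervals, and tests that a statistics package such as SPSS reports.

```python
from statistics import fmean


def ancova_adjusted_difference(pre_e, post_e, pre_c, post_c):
    """Posttest group difference adjusted for pretest, assuming a common slope.

    Equals the group coefficient tau in the least-squares model
    post = a + tau * group + beta * pre, where beta is the pooled within-group slope.
    """
    def cross_dev(xs, ys):
        # Sum of cross-products of deviations from each list's own mean.
        mx, my = fmean(xs), fmean(ys)
        return sum((x - mx) * (y - my) for x, y in zip(xs, ys))

    # Pooled within-group regression slope of posttest on pretest.
    beta = ((cross_dev(pre_e, post_e) + cross_dev(pre_c, post_c))
            / (cross_dev(pre_e, pre_e) + cross_dev(pre_c, pre_c)))
    raw_diff = fmean(post_e) - fmean(post_c)
    baseline_diff = fmean(pre_e) - fmean(pre_c)
    # Remove the part of the raw difference explained by baseline imbalance.
    return raw_diff - beta * baseline_diff, beta


# Hypothetical percent-correct scores, NOT study data: the experimental group starts
# 10 points lower, so the raw posttest difference (15) understates the adjusted one.
pre_e, post_e = [30, 40, 50], [45, 50, 55]
pre_c, post_c = [40, 50, 60], [30, 35, 40]
adjusted, slope = ancova_adjusted_difference(pre_e, post_e, pre_c, post_c)
print(adjusted, slope)  # 20.0 0.5
```

When the groups have equal baseline means, the adjustment term vanishes and the adjusted difference equals the raw difference.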

The demographic data and residents' self-reported EBM attitudes, skills, and behavior were analyzed using Mann-Whitney U tests for ordinal data and χ² tests for dichotomous data. All analyses were by intention-to-treat using the statistical software SPSS 8.0 (Chicago, Ill).
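For dichotomous characteristics, the Pearson χ² test on a 2 × 2 table can be written out in a few lines. The sketch below is an illustration, not the SPSS procedure the study used; the function name is hypothetical, and because the paper does not state whether a continuity correction was applied, the Yates correction is offered only as an option. It is applied to the sex distribution in Table 2 (11 of 18 experimental vs. 24 of 37 control residents were men).

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int, yates: bool = False):
    """Pearson chi-square test (1 df) for the 2 x 2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        diff = max(0.0, diff - n / 2)  # optional continuity correction
    chi2 = n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 df, chi2 = Z**2, so P(chi2 > x) = erfc(sqrt(x / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Table 2, "Male": 11 of 18 experimental vs. 24 of 37 control residents.
chi2, p = chi_square_2x2(11, 18 - 11, 24, 37 - 24)
print(f"chi2 = {chi2:.3f}, P = {p:.2f}")  # chi2 = 0.074, P = 0.79
```

The uncorrected test gives P ≈ .79, in line with the reported P = .8; other rows may not be reproduced exactly if a different variant of the test was used.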

RESULTS

Study Subjects

Baseline characteristics of the experimental and control groups are described in Table 2. Mean age was higher in the experimental group, but age was not associated with either precourse (P = .7) or postcourse test scores (P = 1.0). Among the 55 first-year residents enrolled in the study, all completed the precourse test, 54 (98%) completed postcourse test 1, 47 (85%) completed postcourse test 2, and 44 (80%) completed all four tests over the 10-month evaluation period. The overall course attendance for the initial intervention was 77%. Precourse total test scores demonstrated equivalent baseline EBM skills in the 2 groups: 40% and 42% for the experimental and control groups, respectively (P = .6).

Table 2.

Subject Characteristics at Baseline

| Characteristic | Experimental Group (n = 18) | Control Group (n = 37) | P Value |
|---|---|---|---|
| Background characteristics | | | |
| Mean age, y (range) | 31 (25–48) | 29 (25–37) | .04 |
| Male, % (n) | 61 (11) | 65 (24) | .8 |
| Previous research experience, median, y (range) | 1 (0–2) | 1 (0–4) | 1.0 |
| Previous critical appraisal training, % yes (n) | 67 (12) | 68 (25) | .7 |
| Own home computer, % yes (n) | 39 (7) | 43 (16) | .9 |
| Self-reported behavior | | | |
| Hours reading medicine/wk, median (IQ range) | 6 (3–7) | 5 (4–7) | .7 |
| Journal articles read/wk, median (IQ range) | 2 (2–2) | 2 (1–2) | .3 |
| Perform ≥3 literature searches/wk, % (n) | 17 (3) | 14 (5) | 1.0 |
| Seek an original study article, % frequently or almost always (n) | 22 (4) | 19 (7) | .6 |
| Self-reported attitudes and skills | | | |
| Importance of evaluating the medical literature, % very important or essential (n) | 78 (14) | 65 (24) | .7 |
| Ability to interpret study results, % good or very good (n) | 28 (5) | 21 (8) | .7 |
| Ability to apply study results, % good or very good (n) | 39 (7) | 38 (14) | .7 |
| Precourse test | | | |
| Question formulation, mean % correct | 52 | 46 | .6 |
| Literature searching, mean % correct | 33 | 33 | .9 |
| Quantitative understanding, mean % correct | 33 | 38 | .5 |
| Study quality and clinical relevance, mean % correct | 49 | 55 | .5 |
| Total score, mean % correct | 40 | 42 | .6 |

IQ range indicates interquartile range.

Primary Outcomes

Following the educational intervention, the experimental group achieved significantly higher mean total scores on postcourse test 1 (64%) than the control group (45%). After adjustment for precourse test scores, the mean difference was 21 percentage points (95% CI, 13 to 28; P < .001).

The experimental group's improved test scores reflected statistically significant improvements in three of the four EBM skill domains (Table 3).

Table 3.

Primary Outcomes: Total and Domain Scores in Postcourse Test 1

| Skill Domain | Experimental Group (n = 17), Mean % Correct | Control Group (n = 37), Mean % Correct | Adjusted Difference* (95% Confidence Interval) | P Value |
|---|---|---|---|---|
| Question formulation | 82 | 56 | 26% (16 to 36) | <.001 |
| Literature searching | 74 | 43 | 31% (20 to 42) | <.001 |
| Quantitative understanding | 59 | 46 | 15% (5 to 25) | .004 |
| Study quality and clinical relevance | 44 | 38 | 7% (−9 to 23) | .4 |
| Total score | 64 | 45 | 21% (13 to 28) | <.001 |

*Adjusted for precourse test score.

Secondary Outcomes

After the intervention, we found improvements in several of the residents' self-reported EBM skills and behavior. The number of residents performing three or more literature searches per week was 10 (59%) of 17 in the experimental group and only 6 (16%) of 37 in the control group (P = .003). In addition, over half of the experimental group (9 residents, 53%) reported their ability to interpret studies as “good or very good” compared with 11 (30%) in the control group (P = .04). There was no difference between groups in their self-perceived ability to apply the results of studies to patients (P = .4). None of the remaining self-reported behaviors or attitudes toward EBM was different between groups.

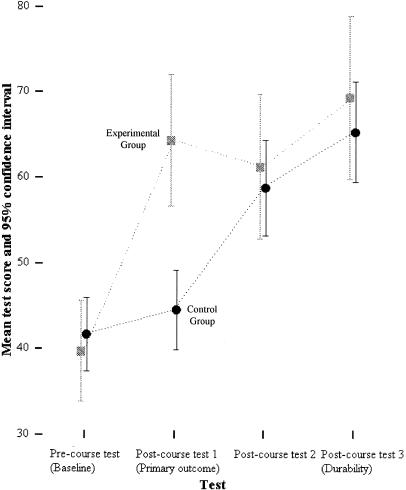

After the control group residents crossed over and received the educational intervention, their scores improved by 14 percentage points to a total of 58% (Fig. 2), similar to the score (62%) achieved by the experimental group at postcourse test 2 (P = .4). Thus, the EBM course produced similar improvements in test scores for the control group (postcourse test 2 minus postcourse test 1) and the intervention group (postcourse test 1 minus precourse test) (P = .1). When the four skill domains were examined separately for postcourse test 2, no differences between groups were found (P = .2 to .9).

FIGURE 2.

Test scores for the precourse test and the three postcourse tests, by study group. The gray squares represent the mean test scores in the experimental group for each test with 95% confidence intervals; the black circles represent the mean scores in the control group with 95% confidence intervals.

The durability of the educational intervention effect was demonstrated by scores on postcourse test 3, which was administered 9 and 6 months, respectively, after the experimental and control groups had received the EBM course (Fig. 2). At that time, both groups sustained their previously improved performance, and there was no difference between them (P = .2).

DISCUSSION

These results show that our educational intervention significantly enhanced residents' EBM skills. Compared with the control group, residents in the intervention group substantially improved their ability to pose well-structured clinical questions, to search the literature for studies pertinent to their questions, and to understand studies' quantitative results. These educational outcomes were durable over a 6- to 9-month period. We did not demonstrate an effect of the EBM course on residents' ability to evaluate studies' quality or relevance to individual patients. Taken together, these objective findings were consistent with the residents' subjective self-perceptions: after taking the EBM course, they believed they were better able to interpret study results but not better able to apply those results to patient care.

We are encouraged by these findings. Many observers have noted the difficulties inherent in applying published clinical evidence to the care of individual patients.18–21 Thus, we were not surprised to find that a brief intervention for relatively inexperienced clinicians failed to improve measures of that outcome. We suspect that acquiring expertise in this “ultimate” EBM skill may require a career-long, conscientious effort. In contrast, as shown in this study, the more fundamental EBM skills can be taught successfully even to junior physicians.

There are several potential limitations to our study. First, residents were not formally randomized. However, the two groups had similar baseline characteristics, and adjustment for baseline differences, including performance on the precourse test, did not alter the results. Second, contamination between groups was possible, but this bias would tend to reduce, not inflate, differences between groups. Third, nondifferential measurement bias might have occurred, because our EBM skills test had not been previously validated. However, the large initial improvement in the intervention group and the consistent improvement in the crossed-over control group support the instrument's validity and reliability. Finally, the two raters grading the test were not blinded to group assignment, but each scored the tests independently using strict criteria for free-text answers, and most test questions were multiple choice, minimizing the opportunity for measurement bias. In fact, the greatest improvement was seen in literature searching (multiple-choice questions), and no improvement was seen in study quality assessment (free-text answers), supporting our belief that bias was unlikely to explain our findings.

Despite these limitations, we believe that we have shown that physicians in training can achieve proficiency in seeking, finding, and understanding the “best evidence.” Clearly, further efforts are required to produce greater improvements in critical appraisal skills,22–24 with the goal of all-round competency in EBM.25 We think our results point the EBM movement toward new, more challenging terrain, such as assessing whether residents will use these new skills in caring for patients. The objective of teaching EBM should be to promote its adoption into everyday clinical practice. As technology and the availability of high-quality evidence improve, our hope is that this can be accomplished during physicians' formative training years.

Our expectation is that research and innovation in medical education will lead the way. In our own educational program, where advocacy of the principles of EBM continues to grow,26 we next plan to study the interface between “best evidence” and “best practice.” Assessing whether the application of EBM skills by practicing physicians actually improves patient care, and evaluating why physicians choose to use, or not use, published evidence relevant to the care of their patients, are important areas for future research.27–29 What can medical educators learn from those choices? These and other questions need answers before EBM can realize its full potential.

Acknowledgments

We thank Alice Furumoto-Dawson, PhD, for her help with data analysis.

The Department of Medicine of Cook County Hospital provided all funding and support for this project.

REFERENCES

1. McAlister FA, Graham I, Karr GW, Laupacis A. Evidence-based medicine and the practicing clinician. J Gen Intern Med. 1999;14:236–42. doi: 10.1046/j.1525-1497.1999.00323.x.

2. Green ML, Ellis PJ. Impact of an evidence-based medicine curriculum based on adult learning theory. J Gen Intern Med. 1997;12:742–50. doi: 10.1046/j.1525-1497.1997.07159.x.

3. Williams BC, Stern DT. Exploratory study of residents' conceptual framework for critical appraisal of the literature. Teaching and Learning in Medicine. 1997;9:270–5. doi: 10.1207/s15328015tlm0904_5.

4. Stern DT, Linzer M, O'Sullivan PS, Weld L. Evaluating medical residents' literature appraisal skills. Acad Med. 1995;70:152–4. doi: 10.1097/00001888-199502000-00021.

5. Kitchens JM, Pfeifer MP. Teaching residents to read the medical literature: a controlled trial of curriculum in critical appraisal/clinical epidemiology. J Gen Intern Med. 1989;4:384–7. doi: 10.1007/BF02599686.

6. Linzer M, Brown JT, Frazier LM, DeLong ER, Siegel WC. Impact of a medical journal club on housestaff reading habits, knowledge and critical appraisal: a randomized controlled trial. JAMA. 1988;260:2537–41.

7. Seelig CB. Affecting residents' literature reading attitudes, behaviors, and knowledge through a journal club intervention. J Gen Intern Med. 1991;6:330–4. doi: 10.1007/BF02597431.

8. Linzer M, DeLong ER, Hupart KH. A comparison of two formats for teaching critical reading skills in a medical journal club. J Med Educ. 1987;62:690–2. doi: 10.1097/00001888-198708000-00014.

9. Green ML. Graduate medical education training in clinical epidemiology, critical appraisal and evidence-based medicine: a critical review of curricula. Acad Med. 1999;74:686–94. doi: 10.1097/00001888-199906000-00017.

10. Datta D, Adler J, Sullivant J, Leipzig RM. Test instruments for assessing evidence-based medicine competency. J Gen Intern Med. 1999;14(suppl 2):132. Abstract.

11. Sackett DL, Rosenberg WMC. On the need for evidence-based medicine. J R Soc Med. 1995;88:620–4. doi: 10.1177/014107689508801105.

12. Sackett DL, Rosenberg WMC. On the need for evidence-based medicine. Health Econ. 1995;4:249–54. doi: 10.1002/hec.4730040401.

13. Sackett DL, Rosenberg WMC. On the need for evidence-based medicine. J Public Health Med. 1995;17:330–4.

14. Sackett DL, Richardson WS, Rosenberg W, Haynes RB. Evidence-based Medicine: How to Practice and Teach EBM. Edinburgh, UK: Churchill Livingstone; 1997. p. 3.

15. Richardson WS, Wilson MC, Nishikawa J, Hayward RSA. The well-built clinical question: a key to evidence-based decisions. ACP J Club. 1995;123:A12–3. Editorial.

16. Haynes RB, Sackett DL, Muir-Grey JA, Cook DL, Guyatt GH. Transferring evidence from research into practice: 2. Getting the evidence straight. ACP J Club. 1997;126:A14–6. Editorial.

17. Guyatt GH, Cook DJ, Jaeschke R. How should clinicians use the results of randomized trials? ACP J Club. 1995;122:A12–3. Editorial.

18. VanWeel C, Knottnerus JA. Evidence-based interventions and comprehensive treatment. Lancet. 1999;353:916–8. doi: 10.1016/S0140-6736(98)08024-6.

19. Culpepper L, Gilbert TT. Evidence and ethics. Lancet. 1999;353:829–31. doi: 10.1016/S0140-6736(98)09101-6.

20. Mant D. Can randomized trials inform clinical decisions about individual patients? Lancet. 1999;353:743–6. doi: 10.1016/S0140-6736(98)09102-8.

21. Rosser WW. Application of evidence from randomized controlled trials to general practice. Lancet. 1999;353:661–4. doi: 10.1016/S0140-6736(98)09103-X.

22. Linzer M. Critical appraisal: more work to be done. J Gen Intern Med. 1990;5:457–9. doi: 10.1007/BF02599700.

23. Norman GR, Shannon SI. Effectiveness of instruction in critical appraisal (evidence-based medicine) skills: a critical appraisal. CMAJ. 1998;158:177–81.

24. Sackett DL. Teaching critical appraisal: no quick fixes. CMAJ. 1998;158:203–4. Editorial.

25. Ghali WA, Lesky LG, Hershmann WY. The missing curriculum. Acad Med. 1998;73:734–6. doi: 10.1097/00001888-199807000-00006.

26. Reilly B, Lemon M. Evidence-based morning report: a popular new format in a large teaching hospital. Am J Med. 1997;103:419–26. doi: 10.1016/s0002-9343(97)00173-3.

27. Ellis J, Mulligan I, Rowe J, Sackett DL. Inpatient medicine is evidence based. Lancet. 1995;346:407–10.

28. Antman EM, Lau J, Kupelnick B, Mosteller F, Chalmers TC. A comparison of results of meta-analyses of randomized controlled trials and recommendations of clinical experts. Treatments for myocardial infarctions. JAMA. 1992;268:240–8.

29. Weingarten S, Stone E, Hayward R, et al. The adoption of preventive care guidelines by primary care physicians: do actions match intentions? J Gen Intern Med. 1995;10:138–44. doi: 10.1007/BF02599668.