Abstract

OBJECTIVE

To determine whether a brief, multicomponent intervention could improve the skin cancer diagnosis and evaluation planning performance of primary care residents to a level equivalent to that of dermatologists.

PARTICIPANTS

Fifty-two primary care residents (26 in the control group and 26 in the intervention group) and 13 dermatologists completed a pretest and posttest.

DESIGN

A randomized, controlled trial with pretest and posttest measurements of residents' ability to diagnose and make evaluation plans for lesions indicative of skin cancer.

INTERVENTION

The intervention included face-to-face feedback sessions focusing on residents' performance deficiencies; an interactive seminar including slide presentations, case examples, and live demonstrations; and the Melanoma Prevention Kit including a booklet, magnifying tool, measuring tool, and skin color guide.

MEASUREMENTS AND MAIN RESULTS

We compared the abilities of a control group and an intervention group of primary care residents, and of a group of dermatologists, to diagnose and make evaluation plans for six categories of skin lesions, including three types of skin cancer: malignant melanoma, squamous cell carcinoma, and basal cell carcinoma. At posttest, both the intervention and control groups demonstrated improved performance, with the intervention group showing significantly larger gains. The intervention group showed greater improvement than the control group across all six diagnostic categories (a gain of 13 percentage points vs 5, p < .05), and in evaluation planning for malignant melanoma (a gain of 46 percentage points vs 36, p < .05) and squamous cell carcinoma (a gain of 42 percentage points vs 21, p < .01). The intervention group performed as well as the dermatologists on five of the six skin cancer diagnosis and evaluation planning scores; the exception was the diagnosis of basal cell carcinoma.

CONCLUSIONS

Primary care residents can diagnose and make evaluation plans for cancerous skin lesions, including malignant melanoma, at a level equivalent to that of dermatologists if they receive relevant, targeted education.

Keywords: skin cancer, diagnosis, residents, dermatologists

In the United States, managed care organizations currently rely on primary care physicians to triage for many specialized areas of medicine, including dermatology. Successful triaging involves screening, making correct initial diagnosis and evaluation planning decisions, and appropriately referring to specialists. Because many of these managed care organizations use financial incentives and administrative mechanisms to encourage generalists to limit referrals to specialists,1 we must ensure that primary care physicians can screen, diagnose, and evaluate effectively with reduced access to specialists. In dermatology, primary care physicians may not need to be as skilled as dermatologists at diagnosing and planning treatment for the approximately 2,000 named skin diseases,2 but, at a minimum, they need to proficiently screen, diagnose, and evaluate lesions indicative of skin cancer.3 In this study, we focused on improving primary care residents' performance on diagnosing and making evaluation plans for lesions indicative of skin cancer.

Primary care physicians have the opportunity to play a major role in the early detection of skin cancer. Up to 79% of the U.S. population visits a primary care physician annually,4 and the vast majority of patients with skin complaints initially are seen by nondermatologists.5 Given the significant morbidity and mortality of skin cancer, primary care physicians should be able to perform skin cancer diagnosis and evaluation planning at a high level—equivalent to that of dermatologists. If this level cannot be achieved, then the barriers to referrals to dermatologists must be eliminated to maintain quality patient care.

Data suggest that many primary care physicians currently are not able to perform at a level equivalent to dermatologists in diagnosing and making evaluation plans for all forms of skin cancer, including malignant melanoma.6–9 One reason primary care physicians do not perform as well as dermatologists in diagnosing and providing evaluation plans for skin cancer is that they receive little education in this area.7, 10 Past efforts that used single-component interventions to improve medical students' dermatologic abilities have had limited success.11, 12 We designed this study to determine whether a brief, multicomponent educational intervention could improve the skin cancer diagnosis and evaluation planning performance of primary care residents for skin lesions that were selected and pointed out to them. We did not aim to improve primary care residents' ability to conduct full-body skin examinations, although the skills learned in diagnosing selected lesions may transfer to this task. We also aimed to determine whether this intervention could improve primary care residents' ability to diagnose and provide evaluation plans, especially for malignant melanoma, to an acceptable level, that is, one equivalent to that of dermatologists.

METHODS

Participants and Randomization

In November 1995, we began a randomized, controlled trial with 62 residents in primary care and family medicine, 11 residents in dermatology, and 4 attending physicians in dermatology. We recruited these primary care residents from seven San Francisco Bay Area residency programs into a brief, targeted, multicomponent intervention study. The study consisted of pretest, intervention, and posttest periods. All 62 primary care residents participated in the pretest. Following the pretest, the 62 primary care residents were randomized into two groups: 33 in the intervention group and 29 in the control group. The 11 dermatology residents and 4 attending physicians in dermatology (not including the coinvestigator dermatologists, Drs. Berger and Maurer) completed the pretest, but not the intervention, and were invited to complete the posttest.

The sample and recruitment procedures have been described previously.9 Our methods were approved by the Committee on Human Research at the University of California, San Francisco.

The Pretest

Six categories of skin lesions served as stimuli for the pretest—malignant melanoma, basal cell carcinoma, squamous cell carcinoma, actinic keratosis, seborrheic keratosis, and nevus—viewed on slides, digitized computer images, and patients.9 Participants viewed the same 25 lesions by all three methods. Because patients with lesions indicative of malignant melanoma usually require immediate care, 12 more lesions indicative of melanoma were shown only as slides and digitized computer images. Thus, we showed 25 lesions on patients and 37 lesions on slides and computer images.

The participants were randomly assigned to one of six different sequences of method presentation to control for potential order-of-presentation effects. For example, one of the six presentation sequences was slides, then computers, and then patients. Participants were asked to record the diagnosis and initial evaluation plan for each lesion. For initial evaluation planning, participants chose from the following six options: (1) reassure, no treatment needed; (2) observe, schedule follow-up; (3) topical medication; (4) liquid nitrogen; (5) shave or punch biopsies; and (6) excisional biopsy. Referral to a dermatologist was not offered as an option because the goal of the study was to determine primary care residents' own skills at diagnosing and evaluation planning for lesions.
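The six presentation sequences correspond to the 3! = 6 possible orderings of the three viewing methods. The following is a minimal sketch of such a counterbalanced assignment, not the study's actual procedure: the function name, the seed, and the choice to keep sequence counts balanced are illustrative assumptions.

```python
import itertools
import random

METHODS = ("slides", "computers", "patients")

# Every ordering of the three viewing methods: 3! = 6 sequences.
SEQUENCES = list(itertools.permutations(METHODS))


def assign_sequences(participant_ids, seed=0):
    """Randomly assign each participant to one of the six sequences.

    Assumption: assignment is balanced, so sequence counts differ by at most
    one. The article states only that assignment was random.
    """
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)  # random order of participants
    # Deal the shuffled participants across the sequences in turn.
    return {pid: SEQUENCES[i % len(SEQUENCES)] for i, pid in enumerate(ids)}


# 62 primary care residents + 11 dermatology residents + 4 attending physicians.
assignment = assign_sequences(range(1, 78))
```

Dealing a shuffled list across the sequences keeps the sequences close to equally represented, which is what allows order-of-presentation effects to average out across participants.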

The Educational Intervention

The intervention extended over 5 months from January to May 1996, yet took between 3 and 4 hours for each primary care resident to complete. The three intervention components, based on the literature of successful interventions on changing health care professionals' behaviors,13 targeted the diagnostic and evaluation planning skills of primary care residents for six categories of skin lesions including the three major types of skin cancer, malignant melanoma, squamous cell carcinoma, and basal cell carcinoma, and three of their noncancerous differential diagnoses, actinic keratosis, seborrheic keratosis, and nevus.

Face-to-Face Individualized Feedback Sessions.

Individualized feedback sessions were conducted over a 2-month period in January and February 1996. The 33 primary care residents in the intervention group each met face-to-face with a coinvestigator dermatologist (TB or TM) for approximately 20 minutes, focusing on improving their deficiencies as identified on the pretest for diagnosis and evaluation planning for six categories of skin lesions. At this meeting, the dermatologist provided individualized feedback by showing residents a table of their own pretest scores, the mean scores of all of the primary care residents, and the mean scores of the dermatologists. The dermatologist targeted the teaching using a training manual containing photographs of the pretest skin lesions and their corresponding true and differential diagnoses and treatment options.

Interactive Seminar.

An interactive seminar, conducted by the coinvestigator dermatologists, was offered four times over a 4-month period from February to May 1996 to accommodate primary care residents' busy schedules; 4 of the 33 primary care residents in the intervention group could not attend. The 2-hour seminar focused on pigmented and nonpigmented skin lesions, with emphasis on the six categories of skin lesions covered in the pretest. The seminar consisted of a standardized slide-show lecture, an 8-minute motivational videotape, and case examples as well as discussion and interaction between the dermatologist instructors and the intervention participants. The seminar also included demonstrations on how to conduct a total-body skin examination.

During the seminar, primary care residents received an educational packet including a laminated 3 × 5-index pocket card with photographs and descriptions of malignant melanomas, basal cell carcinomas, and squamous cell carcinomas; a color diagram of three skin biopsy techniques (shave, punch, and excisional); a pamphlet on skin cancer prevention and early detection prepared for patients by Scripps Memorial Hospital's Stevens Cancer Center; descriptions of the clinical features of the three major types of skin cancer; and a journal article, “Recognizing Skin Cancer,” that provides an overview of the clinical characteristics, diagnostic considerations, and treatment of malignant melanomas, basal cell carcinomas, and squamous cell carcinomas.14

Melanoma Prevention Kit.

Each of the 33 primary care residents in the intervention group received the Melanoma Prevention Kit, a 15-page booklet with information on prevention, signs of, and risks for malignant melanoma and nonmelanoma skin cancer.15 The booklet also provided a magnifier tool, measuring tool, and skin color guide to help users practice identifying malignant melanoma.

The Posttest

On May 31 and June 7, three weeks after completion of all intervention components, we administered a posttest that mirrored our pretest. Pretest and posttest stimuli were different, though we used similar numbers of each type of lesion in both. Participants viewed the same 23 lesions by all three methods. In total, they viewed 23 lesions on patients and 38 lesions on slides and computer images.

Patient participation and primary care resident test-taking procedures replicated the pretest procedures described previously.9 Participants viewed the lesions at multiple slide, computer, and patient stations in the dermatology clinic of the San Francisco Veterans Health Affairs Medical Center. For the slide and computer stations, participants could view the 38 lesion images, presented at programmed intervals of 25 to 30 seconds, as many times as they wished.

For the patient stations, participants viewed the 23 lesions selected for the study on 12 patients who had one to four lesions each. Most lesions were located on the head, neck, hands, or arms; two patients had lesions on their backs; and one patient had a lesion on her trunk. All the patients wore tags pointing to the study lesions. The patients were instructed not to talk to the physicians about their lesions.

Background Information

We measured participants' demographic characteristics (gender, age, and year of residency) and past dermatology experience (previous experience in a dermatology clinic, biopsy experience, and interim dermatology experience, defined as dermatology training received between the pretest and posttest, not including our intervention).

Statistical Analysis

Background Characteristics.

Differences in background characteristics between control and intervention primary care residents were assessed by Student's t tests (for continuous variables) and Mantel-Haenszel χ2 tests (for nominal variables).

Calculation and Comparison of Summary Scores.

All skin lesions on patients were biopsied after the posttest. The correct diagnosis and evaluation plans were determined by the consensus opinion of the coinvestigator dermatologists based on the biopsy results. For diagnoses, most participants usually recorded a single provisional diagnosis such as “actinic keratosis.” Participants could, however, offer a provisional diagnosis such as nevus and a rule-out diagnosis such as malignant melanoma for lesions they suspected to be malignant melanoma and receive credit if one of these was a correct diagnosis. This more lenient standard was believed to simulate the actual clinical decision-making process for diagnosing certain skin lesions. Some lesions had more than one possible correct evaluation plan. For example, in a few cases participants could receive credit for recommending a biopsy for a benign lesion that was judged by our experts to be potentially indicative of cancer but was found on biopsy to be benign. Participants were given credit for answering shave, punch, or excisional biopsy for lesions that were cancerous on biopsy and were small enough to be excised. Participants were not given credit for a correct answer if they gave multiple answers for evaluation plans.
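The crediting rules above can be expressed as two small functions. This is a hedged sketch rather than the scoring program used in the study: the function names and data layout are hypothetical, and for simplicity the rule-out allowance is applied to any lesion, whereas the text describes it for lesions suspected to be malignant melanoma.

```python
# Hypothetical scoring helpers. The plan labels follow the six evaluation
# options offered to participants; everything else is illustrative.
BIOPSY_PLANS = {"shave or punch biopsies", "excisional biopsy"}


def diagnosis_credit(provisional, rule_out, correct):
    """Return 1 if the provisional or the rule-out diagnosis is correct, else 0."""
    offered = {d for d in (provisional, rule_out) if d is not None}
    return int(correct in offered)


def plan_credit(answers, acceptable):
    """Return 1 only for a single answer that is among the acceptable plans."""
    if len(answers) != 1:
        return 0  # multiple (or missing) answers earn no credit
    return int(answers[0] in acceptable)


# A "nevus, rule out malignant melanoma" response to a biopsy-proven melanoma.
dx = diagnosis_credit("nevus", "malignant melanoma", "malignant melanoma")
# One biopsy option chosen for a cancerous lesion small enough to be excised.
single = plan_credit(["excisional biopsy"], BIOPSY_PLANS)
# Two options recorded for the same lesion.
double = plan_credit(["excisional biopsy", "liquid nitrogen"], BIOPSY_PLANS)
```

Under these rules the first two responses earn credit and the third does not, because more than one evaluation plan was recorded.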

To determine participants' accuracy in diagnosing and treating skin lesions, summary scores were calculated. All summary scores were calculated using participants' responses to lesions in all three viewing methods. There were diagnostic and evaluation planning summary scores for each of the six categories of lesions, and for all the lesions combined. Differences in summary scores among the three groups (control, intervention, and dermatologists) were assessed by one-way analysis of variance (ANOVA). When the F value was found to be significant, a comparison among means was performed by the Tukey-Kramer method. Differences in change scores between the control and intervention group were calculated using Student's t test. We conducted Wilcoxon tests to confirm the results of the Tukey-Kramer comparison among means and the Student's t tests.
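For readers who want to see the two main comparisons concretely, the sketch below computes a one-way ANOVA F statistic across three groups and a pooled-variance Student's t statistic for two groups, using only the Python standard library. The scores are invented for illustration and are not study data; the Tukey-Kramer follow-up, the Wilcoxon confirmation, and the p-value lookups are omitted.

```python
from math import sqrt
from statistics import mean, variance


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


def student_t(a, b):
    """Return (t, df) for a pooled-variance two-sample Student's t test."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    t_value = (mean(a) - mean(b)) / sqrt(pooled * (1 / na + 1 / nb))
    return t_value, na + nb - 2


# Invented posttest summary scores (percent correct), not study data.
control = [52.0, 58.0, 61.0, 55.0]
intervention = [66.0, 70.0, 63.0, 73.0]
dermatologists = [75.0, 80.0, 71.0, 78.0]
f_value, df_between, df_within = one_way_anova_f(
    [control, intervention, dermatologists])

# Invented pretest-to-posttest change scores for the two resident groups.
t_value, df = student_t([12.0, 15.0, 11.0, 14.0], [4.0, 6.0, 5.0, 7.0])
```

With only two groups the two statistics agree, since F equals t squared; the ANOVA is needed here because three groups are compared at once.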

Multiple Regression Analyses.

We conducted a series of full-model multiple regression analyses to determine the effect of the intervention on primary care residents' posttest scores when taking into account residents' background characteristics and pretest scores. The independent variables were resident's age, gender, year of residency, previous experience in a dermatology clinic, biopsy experience, interim dermatology experience, pretest score, and type of group (intervention vs control group). The dependent variables were each of the 14 summary score variables.

RESULTS

Description of Participants

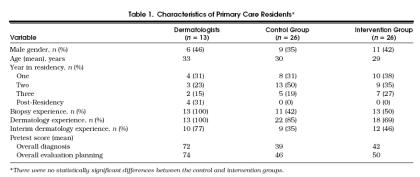

Of the 62 primary care residents who completed the pretest, 52 (26 in the control and 26 in the intervention group) also completed the posttest (retention rate of 84%); 10 primary care residents were unable to attend the posttest. Table 1 presents descriptive characteristics of the primary care residents who completed the pretest and posttest. There were no significant differences between control and intervention primary care residents on the demographic variables, dermatology experience variables, and pretest overall diagnosis and overall evaluation planning scores.

Table 1.

Characteristics of Primary Care Residents*

Nine of the 11 dermatology residents and all four of the dermatology attending physicians who were invited to take both the pretest and posttest completed the posttest. Because diagnosis and evaluation planning scores for the dermatology residents and attending dermatologists were not significantly different, these two groups were combined into one group, which we refer to as “dermatologists.” Table 1 presents the descriptive characteristics of the dermatologists.

Primary Care Residents' Skin Cancer Diagnostic and Evaluation Planning Performance

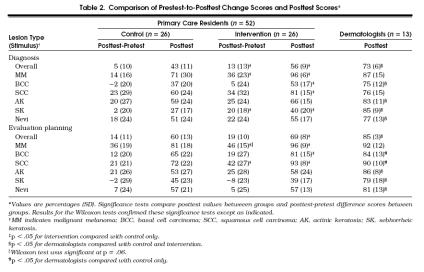

The control group, the intervention group, and the dermatologists all demonstrated improved performance over time, with the intervention group showing the largest gains (Table 2). The intervention group showed significantly greater improvement than the control group in overall diagnosis and diagnosis of malignant melanoma and seborrheic keratosis. Although the intervention did not improve primary care residents' overall evaluation planning, it did improve the evaluation planning for malignant melanoma and squamous cell carcinoma.

Table 2.

Comparison of Pretest-to-Posttest Change Scores and Posttest Scores*

To confirm these findings, we conducted multiple regression analyses that took into account the effects of primary care residents' background characteristics and pretest scores. These analyses confirmed the superior performance of primary care residents in the intervention group as described above.

Primary Care Residents' Performance Relative to Dermatologists' Performance

There were significant differences among the three groups on all 14 posttest summary scores (p < .01) (Table 2). Intervention group primary care residents performed as well as the dermatologists on five of the six skin cancer diagnosis and evaluation planning scores with the exception of the diagnosis of basal cell carcinoma. The control group performed as well as the dermatologists on three of the six skin cancer diagnosis and evaluation planning scores. The primary care residents in the intervention group performed significantly better than the control group on overall diagnosis and overall evaluation planning, diagnosis and evaluation planning of all three cancerous lesion categories, and diagnosis of seborrheic keratosis. The dermatologists had significantly higher scores than the control group in 11 of the 14 diagnosis and evaluation planning categories.

Attrition Analysis

Of the 10 primary care residents who did not complete the posttest, 5 had been assigned to the control group and 5 to the intervention group. There were no statistically significant differences in age, gender, dermatology experience, or pretest scores between those primary care residents who completed the posttest and those who did not. In order to identify any potential bias caused by subjects who had dropped out of the study, we reanalyzed the data to include the 10 subjects who had dropped out. We tested a conservative scenario in which all subjects who had dropped out were assigned a change score equivalent to the average change score attained by subjects in the control group. We were unable to assume a change score of 0 because all subjects scored higher on the posttest than the pretest. When we included in our analyses subjects who had dropped out, we found the same significant differences in performance between the control group, the intervention group, and dermatologists.
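The conservative scenario described above amounts to a simple imputation: each resident who dropped out is given the control group's mean change score, whichever group that resident had been assigned to. The sketch below illustrates the idea with invented numbers; the function name and the data are assumptions, not the authors' analysis code.

```python
from statistics import mean


def impute_dropouts(control_changes, intervention_changes,
                    n_control_dropouts, n_intervention_dropouts):
    """Append the control-group mean change score for every dropout."""
    fill = mean(control_changes)  # conservative value used for all dropouts
    control = list(control_changes) + [fill] * n_control_dropouts
    intervention = list(intervention_changes) + [fill] * n_intervention_dropouts
    return control, intervention


# Invented change scores (percentage points), not study data.
observed_control = [4.0, 6.0, 5.0, 5.0]
observed_intervention = [12.0, 14.0, 13.0, 13.0]
control_all, intervention_all = impute_dropouts(
    observed_control, observed_intervention,
    n_control_dropouts=1, n_intervention_dropouts=1)

gap_before = mean(observed_intervention) - mean(observed_control)
gap_after = mean(intervention_all) - mean(control_all)
```

Because the imputed value leaves the control mean unchanged and pulls the intervention mean toward it, the between-group gap can only shrink, which is why a difference that survives this reanalysis is unlikely to be an artifact of attrition.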

DISCUSSION

Our brief, multicomponent educational intervention improved the skin cancer diagnosis and evaluation planning test performance of primary care residents, especially for malignant melanoma. The intervention also improved residents' performance on five of the six skin cancer diagnosis and evaluation planning categories to an acceptable level—equivalent to that of dermatologists—supporting the notion that dermatologists are not born, but can be made.16 The intervention group performed as well as the dermatologists on the diagnosis and evaluation planning for malignant melanoma and squamous cell carcinoma, performing only slightly lower than the dermatologists on the diagnosis of basal cell carcinoma. These findings suggest that after a targeted, brief educational intervention primary care residents could proficiently triage cancerous lesions. This is good news, given the movement of much of health care toward managed care systems, and the fact that generalists have the opportunity and the responsibility to diagnose and evaluate many more patients for skin cancer than dermatologists.

In contrast to past efforts that have had little success in improving primary care physicians' dermatologic abilities,11, 12 our intervention included components shown to be maximally effective in changing physician behavior. In a review of the literature on the effectiveness of interventions to change physician performance, Davis et al. found that interventions with three or more components produced positive results in 79% of the studies whereas single-component interventions led to improvement in 60% of the studies.13 Several components of the interventions that produced positive results were needs assessment, academic detailing, and feedback provided by respected academic faculty.13, 17 In our study, primary care residents were exposed to multiple components: they completed a needs assessment (pretest) of their skin cancer diagnosis and evaluation planning abilities, received academic detailing and individualized feedback from respected faculty members (the coinvestigator dermatologists) targeting areas identified in the pretest as needing improvement, and participated in interactive seminars conducted by these same faculty members. These components were designed to motivate primary care residents to improve specific skills needed to triage skin cancer, especially malignant melanoma, the most deadly skin cancer.

In systems of limited referral to specialists, such as managed care organizations, quality patient care depends on the ability of generalists to triage successfully for dermatology and other medical specialties. With respect to dermatology, we defined the proficiency level that primary care physicians need to achieve in diagnosing and making evaluation plans to ensure quality patient care: that is, primary care physicians need to identify the three types of cancerous skin lesions at a level equivalent to that of dermatologists. Other medical specialties also may need to define levels of proficiency for primary care physicians, assess their abilities in these domains, and develop core curricula to target the abilities that need improvement. Although it is not possible during a 3-year residency for primary care residents to complete a rotation in each medical specialty, their education could be supplemented by brief, multicomponent interventions, such as ours, targeting and reinforcing specific skills related to triaging skin cancer.

This study represents an important step toward establishing triage performance standards and developing relevant core curricula for dermatology. The development and implementation of a core curriculum in dermatology for primary care residents, however, will not prepare primary care physicians who are currently practicing in systems that require them to triage based on correct diagnosis and evaluation planning for skin cancer. Until all primary care physicians reach a level of proficiency for skin cancer triage, managed care organizations may need to eliminate the barriers to dermatology referrals.

This study had several limitations. First, the performance of residents on our test of their skin cancer diagnosis and evaluation planning abilities may not translate into differences in residents' performance with live patients in primary care practice. For example, while we pointed out skin lesions to study participants, in practice physicians are responsible for conducting total-body skin examinations to detect skin lesions unless patients call attention to them.

Second, part of the effectiveness of this intervention may have been due to the participation of the coinvestigator dermatologists. These specialists helped plan the intervention curriculum and interacted directly with the primary care residents in individual and seminar sessions. The success of the intervention may be attributed to the skilled delivery of these specialists more than to the design and content of the intervention.

Third, we used a relatively small sample of primary care residents as our subjects; the intervention should be tested with larger and more varied samples of primary care residents and primary care physicians.

Fourth, because residents in the intervention group received the answers to the pretest lesion set as part of their feedback, we used different sets of lesions for the pretest and posttest. Although we attempted to create two lesion sets of equal difficulty, the pretest was more difficult than the posttest as evidenced by the higher posttest scores of all three groups of subjects, including the dermatologists who were not expected to improve in performance. For example, the pretest contained atypical lesions, such as an amelanotic melanoma, which lowered the pretest malignant melanoma scores for all three groups. Nonetheless, the intervention group's gains in performance far surpassed those of the other two groups.

Fifth, we presented subjects with melanoma lesions on slides and computers, but not on live patients. Although this may have cued all subjects to suspect melanoma for the additional lesions presented on slides and computers, it does not negate the intervention group's significantly improved performance in the diagnosis of malignant melanoma.

Finally, we did not measure the cost-effectiveness and feasibility of implementing multicomponent interventions into residency programs. In the short term, it could be more cost-effective and beneficial to patient health to allow patients direct access to dermatologists than to prepare primary care physicians to proficiently triage for skin cancer. Preparing primary care physicians in this area, however, could reduce the long-term costs associated with misdiagnoses and unnecessary referrals to dermatologists.

We currently are testing a computer application of our skin intervention designed to address several of these limitations. This application is delivered via the Internet and will be made widely available if proven effective by our study in progress. If this computer-based intervention is successful, it may be a more cost-effective and feasible method to implement targeted education into primary care residents' curricula and primary care physicians' continuing education. The costs of delivering the intervention by computer would be minimal because the computer would present and score the pretest, offer individualized feedback, teach to the physician's deficiencies, and deliver and score the posttest. In this computer educational program, we will focus more teaching on the harder-to-diagnose basal cell carcinoma. Although less deadly than malignant melanoma, this type of skin cancer is more prevalent than either malignant melanoma or squamous cell carcinoma.

In sum, our study suggests that primary care physicians can fulfill the skin cancer triage responsibilities required of them in managed care if they receive relevant interventions. To be successful, such interventions must be multicomponent, cost-effective, and easily disseminated. Considering the increasing incidence of skin cancer, including malignant melanoma, the secondary prevention of skin cancer by primary care physicians, detecting and triaging lesions indicative of cancer, is crucial to the health and well-being of the public.

Acknowledgements

The authors thank the following people for their contributions to this study: Nona Caspers, MFA, and Richard Carlton, MPH, for writing assistance; Sylvia Flatt, PhD, for assisting with data analysis; Lisa Velarde, BA, for assisting with data collection; Martin Weinstock, MD, Allen Dietrich, MD, and Wayne Putnam, MD, for their comments on earlier drafts of this paper; Tom Kauth for computer expertise; Barbara Bielan, NPC, Kirsten Vin-Christian, MD, Max Fung, MD, and Thea Mauro, MD, for helping recruit patients, for photographing lesions, and for allowing the use of their facility for the study; and Karen Reisch and Catherine Dolan for helping with the logistics of the intervention. The authors also thank the residency directors, staff, physician participants, and patients who participated in the study.

References

- 1. Alper PR. Primary care in transition. JAMA. 1994;274:1523–7.

- 2. Lynch PJ. Dermatology for the House Officer. The House Officer Series. 2nd ed. Baltimore, Md: Williams and Wilkins; 1987.

- 3. Weinstock MA, Goldstein MG, Dube CE, Rhodes AR, Sober AJ. Basic skin cancer triage for melanoma detection. J Am Acad Dermatol. 1996;34:1063–6. doi: 10.1016/s0190-9622(96)90287-x.

- 4. National Center for Health Statistics. Vital and Health Statistics: Current Estimates from the National Health Interview Survey. Hyattsville, Md: National Center for Health Statistics, Centers for Disease Control and Prevention, Public Health Service, U.S. Dept. of Health and Human Services; 1994.

- 5. Johnson MA. On teaching dermatology to nondermatologists. Arch Dermatol. 1994;130:850–2.

- 6. McCarthy GM, Lamb GC, Russell TJ, Young MJ. Primary care–based dermatology practice: internists need more training. J Gen Intern Med. 1991;6:52–6. doi: 10.1007/BF02599393.

- 7. Cassileth BR, Clark W, Jr, Lusk EJ, Frederick BE, Thompson CJ, Walsh WP. How well do physicians recognize melanoma and other problem lesions? J Am Acad Dermatol. 1986;14:555–60. doi: 10.1016/s0190-9622(86)70068-6.

- 8. Ramsay DL, Mayer F. National survey of undergraduate dermatologic medical education. Arch Dermatol. 1985;121:1529–30.

- 9. Gerbert B, Maurer T, Berger T, et al. Primary care physicians as gatekeepers in managed care: primary care physicians' and dermatologists' skills at secondary prevention of skin cancer. Arch Dermatol. 1996;132:1030–8.

- 10. Solomon BA, Collins R, Silverberg N, Glass AT. Quality of care: issue or oversight in health care reform? J Am Acad Dermatol. 1996;34:601–7. doi: 10.1016/s0190-9622(96)80058-2.

- 11. Allen SW, Norman GR, Brooks LR. Experimental studies of learning dermatologic diagnosis: the impact of examples. Teaching Learning Med. 1992;4:35–44.

- 12. Robinson J, McGaghie W. Skin cancer detection in a clinical practice examination with standardized patients. J Am Acad Dermatol. 1996;34:709–11. doi: 10.1016/s0190-9622(96)80093-4.

- 13. Davis DA, Thomson MA, Oxman AD, Haynes RB. Changing physician performance. A systematic review of the effect of continuing medical education strategies. JAMA. 1995;274:700–5. doi: 10.1001/jama.274.9.700.

- 14. Amonette R, Sparks D. Recognizing skin cancer. Am Fam Physician Monograph. 1993:1–16.

- 15. USC Melanoma Prevention Program. The Melanoma Prevention Kit. Los Angeles, Calif: USC School of Medicine; 1995.

- 16. Norman GR, Coblenz CL, Brooks LR, Babcook CJ. Expertise in visual diagnosis: a review of the literature. Acad Med. 1992;67:S78–83. doi: 10.1097/00001888-199210000-00045.

- 17. Davis D, Thomson MA. Implications for undergraduate and graduate education derived from quantitative research in continuing medical education: lessons learned from an automobile. J Cont Educ Health Prof. 1996;16:159–66.