Abstract

OBJECTIVE

To evaluate an innovative approach to continuing medical education, an outreach intervention designed to improve performance rates of breast cancer screening through implementation of office systems in community primary care practices.

DESIGN

Randomized, controlled trial with primary care practices assigned to either the intervention group or control group, with the practice as the unit of analysis.

SETTING

Twenty mostly rural counties in North Carolina.

PARTICIPANTS

Physicians and staff of 62 randomly selected family medicine and general internal medicine practices, primarily fee-for-service, half group practices and half solo practitioners.

INTERVENTION

Physician investigators and facilitators met with practice physicians and staff over a period of 12 to 18 months to provide feedback on breast cancer screening performance, and to assist these primary care practices in developing office systems tailored to increase breast cancer screening.

MEASUREMENTS AND MAIN RESULTS

Physician questionnaires were obtained at baseline and follow-up to assess the presence of five indicators of an office system. Three of the five indicators of office systems increased significantly more in intervention practices than in control practices, but the mean number of indicators in intervention practices at follow-up was only 2.8 out of 5. Cross-sectional reviews of randomly chosen medical records of eligible women patients aged 50 years and over were done at baseline (n = 2,887) and follow-up (n = 2,874) to determine whether clinical breast examinations and mammography were performed. Results for mammography were recorded in two ways: mention of the test in the visit note and actual report of the test in the medical record. These reviews showed an increase from 39% to 51% in mention of mammography in intervention practices, compared with an increase from 41% to 44% in control practices (p = .01). There was no significant difference, however, between the two groups in change in mammograms reported (intervention group increased from 28% to 32.7%; control group increased from 30.6% to 34.0%; p = .56). There was a nonsignificant trend (p = .06) toward a greater increase in performance of clinical breast examination in intervention versus control practices.

CONCLUSIONS

A moderately intensive outreach intervention to increase rates of breast cancer screening through the development of office systems was modestly successful in increasing indicators of office systems and in documenting mention of mammography, but had little impact on actual performance of breast cancer screening. At follow-up, few practices had a complete office system for breast cancer screening. Outreach approaches to help primary care practices implement office systems are promising but need further development.

Keywords: breast cancer screening, office systems, continuing medical education, clinical breast examination, mammography

Despite much attention over the past 10 years, rates of breast cancer screening (the performance of mammography and clinical breast examination [CBE]) in primary care practices remain suboptimal.1–3 A number of factors explaining these low rates have been considered, including physician factors, patient factors, and organizational factors.4–6 Most physicians report that they are in favor of breast cancer screening, yet attempts to increase screening by educational messages to physicians have been largely unsuccessful.7 Community-based interventions directed toward patients have met with only limited success.1, 8 There have been few patient-directed interventions based in medical practice.9–11 There has been some success with such organizational approaches as prompting,12, 13 although these have been tried primarily in academic and limited community settings. One study of community primary care practices staffed by motivated volunteers in New England used an “office systems” approach to practice organization, finding significant increases in intervention practices compared with control practices in performance of mammography and CBE.14

An office system is an organized approach within a medical practice for routinely providing a given service (such as breast cancer screening) to patients for whom this service is indicated. The key to this strategy is breaking down an activity into its component steps, and then developing and implementing a clear process involving both physicians and office staff to ensure the steps are performed for every appropriate patient.15, 16 An important characteristic of office systems is that they involve teamwork among a number of office staff, not only physicians. “Tools” such as flow sheets, chart prompts, patient education brochures, and patient-held cards may be part of an effective office system,17, 18 but most important is how these materials are integrated within the usual procedures of the practice.

Organizational approaches such as office systems to improve breast cancer screening are promising, but it is still unclear how to assist a broad range of community primary care practices (not only volunteers) to implement such systems, and whether the systems, once implemented, will be sufficient to optimize breast cancer screening within these practices. Answering these questions for breast cancer screening could help us develop more effective processes and messages for continuing medical education in general.19

We hypothesized that an active outreach program could assist community family physicians and general internists to develop office systems for breast cancer screening. We further hypothesized that implementation of office systems in these practices would lead to increased rates of breast cancer screening. The outreach was from the Lineberger Comprehensive Cancer Center at the University of North Carolina together with the North Carolina Area Health Education Centers Program (AHEC) and was cosponsored by the North Carolina chapter of the American College of Physicians, the North Carolina Academy of Family Physicians, and the Old North State Medical Society (the North Carolina chapter of the National Medical Association).

METHODS

Study Design

The North Carolina Prescribe for Health study (one of five “Prescribe for Health” projects funded by the National Cancer Institute) was a randomized, controlled trial in which the unit of randomization and analysis was the primary care practice. Practices were recruited to participate, and baseline data were collected before randomization. Randomization was performed by the project biostatistician (BQ), masked to the results of baseline data collection. These baseline data were given to all participating practices, which were then informed of their assignment to the intervention group or control group. (The control group received intervention materials after final data collection.) Baseline and follow-up data were collected by trained research assistants, masked to the purpose of the study and to the fact that there were two different groups of practices. The study was approved by the Institutional Review Committee of the School of Public Health of the University of North Carolina at Chapel Hill.

Subjects and Recruitment

Eligible primary care practices met the following criteria: at least one physician in the practice was a member of one of the three professional organizations sponsoring the project; the physicians provided primary care (at least 20 hours per week); the practice was located in one of the two AHEC areas (predominantly rural) in the state; and the practice was not considering relocating or closing owing to retirement of the physicians within the 4 years of the study. Practices were recruited in random order using a standardized approach.20 To increase the number of practices with minority physicians and patients, several primary care practices in a third geographic area of the state were also approached for participation. Overall, practices were recruited to include about half family medicine practices and half internal medicine practices, and about half solo-physician practices and half group practices with two or more physicians. Medical care in the study areas was almost entirely fee-for-service. Mammography was available within a short distance of all study practices, but only one practice (in the control group) itself provided mammography.

Intervention

The intervention, which consisted of a series of activities designed to assist primary care practices in developing and implementing individualized office systems for breast cancer screening, was conducted with intervention practices over 12 to 18 months (1993–1994). The primary focus of the intervention was on breast cancer screening by mammography and CBE, although other preventive activities were also discussed with practices.

After baseline data were collected, the physician investigators (LSK, RH) met with physicians of all intervention practices to review performance rates for mammograms and CBE. These rates were based on chart reviews of continuing care patients aged 50 years and older (described in detail below). The information was presented in colorful bar graphs specially designed to be visually interesting. Physicians received information about their practice only. The goal of the meeting, which also included office staff at the physicians' discretion, was to help the practices identify a “performance gap”21 in breast cancer screening, and to discuss the role of an organized preventive care office system in optimizing the delivery of breast cancer screening. Physicians were encouraged to establish practicewide policies (i.e., written recommendations agreed to by all physicians) for mammography, CBE, and other preventive care services.

Each intervention practice was then encouraged to continue working with the research team (2.5 full-time-equivalent trained facilitators and two physician investigators) over the course of the intervention. The research team assisted physicians and office staff in each practice in assessing the practice's approach to breast cancer screening and in planning office system changes to increase performance. An office system for breast cancer screening was considered to routinely accomplish the following activities for a high percentage of eligible patients: (1) identify patients due for mammograms and CBE; (2) recommend those procedures to the patients; (3) order or perform the procedures; (4) inform patients of the results and follow-up on abnormal results; and (5) recall patients when the procedures were next due. The research team worked with the staff to document how they were currently carrying out these steps (“current office system plan”) and how they would revise these activities to improve screening (“revised office system plan”). Each practice was encouraged to design a system tailored to its own needs and work patterns.

To facilitate the implementation of their office system plans, practices were encouraged to adopt resources (“tools”) for tracking and prompting (e.g., flow sheets, chart prompts and stickers, wall posters, card files, and patient-held records) and for patient education (e.g., brochures listing recommended preventive care for women over 50 years of age). Materials tailored for each practice were created and supplied to the practices by the project for the duration of the intervention period. Once the revised office system plans and tools were ready, each practice was advised to set a “start date” for implementing the breast cancer screening office system. The facilitators kept close communication with the practices throughout the intervention period to provide ongoing support and assistance as needed. In-service educational programs on breast cancer screening were presented to office staff members of practices that requested them.

At the beginning of the intervention period, physicians in control practices also received information about their own practice's baseline breast cancer screening rates. This information was presented, however, in a simple, black-and-white printed handout that merely listed the performance rates.

All study practices (both intervention and control) were invited to a conference at the conclusion of the project, during which study findings were presented and discussed. Several small group sessions, covering topics from recommendations for preventive care to working in teams, were included in the conference.

Data Collection

Data on performance of breast cancer screening were collected through cross-sectional reviews of randomly chosen medical records of eligible patients at two points in the study, preintervention (baseline, 1992) and postintervention (follow-up, 1995). Eligible patients were women aged 50 years and older who had at least one visit to the practice in the index year (1991 for the baseline survey and 1994 for the follow-up survey) and at least one previous visit, and did not have a previous diagnosis of cancer (other than nonmelanoma skin cancer). The number of records reviewed in each practice was based on the number of physicians in the practice. Forty records were reviewed in solo-physician practices; and in practices with two or more physicians, the number of records reviewed was 30 times the number of physicians, up to a maximum of 200 charts in practices with seven or more physicians. Medical records were selected for review at random.
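The chart-sampling rule described above can be restated as a short function. This is a minimal sketch for illustration only; the function name `records_to_review` is hypothetical and is not part of the study's materials.

```python
def records_to_review(n_physicians: int) -> int:
    """Number of charts sampled in a practice, following the rule in the text."""
    if n_physicians < 1:
        raise ValueError("a practice needs at least one physician")
    if n_physicians == 1:
        return 40  # solo-physician practices
    # 30 charts per physician, capped at 200 (the cap applies from seven physicians up)
    return min(30 * n_physicians, 200)


if __name__ == "__main__":
    for n in (1, 2, 6, 7, 10):
        print(n, records_to_review(n))
```

Under this rule, 30 charts per physician would give 210 charts for a seven-physician practice, so the stated maximum of 200 first takes effect at that size.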

The medical records were reviewed by trained research assistants, masked to the study purpose and design, using standardized data collection forms and code books. To ensure reliability of medical record reviews, the first five records in each practice and a random 5% of other records were reviewed independently by at least two research assistants and the results were compared to assess agreement. Disagreements were resolved by referring to the written code book or by discussion with the physician investigators. Finally, two physician investigators (LSK, RH) reviewed a random two to three records in each practice, comparing their results with those of the research assistants. These physicians were also continuously available by telephone for questions from the research assistants and met together with all of the research assistants periodically to reinforce coding rules.

Information collected from the medical records included patient age; race; insurance status; number of visits to the practice; physician of record; receipt of mammography and CBE; and presence, as well as use, of preventive services flow sheets. Records were reviewed for a 3-year time period (1989–1991 at baseline and 1992–1994 at follow-up). For mammography, data were recorded in two ways: “mention” of the test in the visit note in any way and actual “report” of the test result (regardless of where the test was done or who did it). Thus “mention” of mammography includes all cases of “reported” mammography plus others in which the note indicated that mammography was considered in some way. This was done to “credit” the practice for a physician recommending a test to a patient, even if the patient did not follow through with the recommendation. For CBE, data were recorded in only one way, giving credit for either completion of CBE or mention of CBE recommendation.
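The relationship between the two mammography outcomes can be made explicit with a small sketch. The class and field names below are hypothetical and do not come from the study's data collection forms; the only rule encoded is the one stated above, that every "reported" mammogram also counts as a "mention".

```python
from dataclasses import dataclass


@dataclass
class ChartFinding:
    # Hypothetical fields for one reviewed chart.
    note_mentions_mammogram: bool  # visit note refers to mammography in any way
    report_in_record: bool         # an actual mammogram result is filed in the chart


def code_mammography(finding: ChartFinding) -> dict:
    """Return the two binary outcomes; 'mention' always includes 'report'."""
    reported = finding.report_in_record
    mentioned = finding.note_mentions_mammogram or reported
    return {"mention": mentioned, "report": reported}


if __name__ == "__main__":
    # A recommendation the patient did not follow through on: mention without report.
    print(code_mammography(ChartFinding(True, False)))
```

Coded this way, the "mention" rate in a practice can never be lower than its "report" rate, which is why the two outcomes can move differently over time.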

Physicians were also asked to complete questionnaires at both baseline and follow-up time periods. They were queried on their breast cancer screening policies, perceptions of effectiveness of breast cancer screening, interest in changing their breast cancer screening practices, standard of practice for breast cancer screening in the community, and attitudes toward barriers to breast cancer screening. Practices were paid $100 per physician for their time and effort to complete the data collection.

Data Analysis

The SAS system was used in the data analysis. Logistic regression models were used in the analysis of mammography and CBE as binary outcomes. The generalized estimating equations procedure was used to take into account the correlation of outcomes within primary care practices and within physicians,22 thus allowing the practice to be the unit of analysis. Specifically, the odds ratio (OR) estimates and p values reported in Tables 3 and 5 were obtained in that manner. All hypothesis tests were done at the probability level of .05.

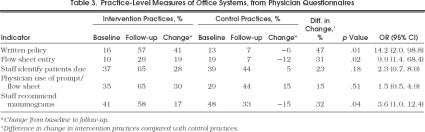

Table 3.

Practice-Level Measures of Office Systems, from Physician Questionnaires

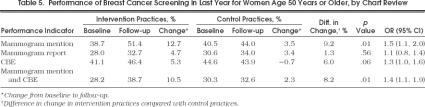

Table 5.

Performance of Breast Cancer Screening in Last Year for Women Age 50 Years or Older, by Chart Review

RESULTS

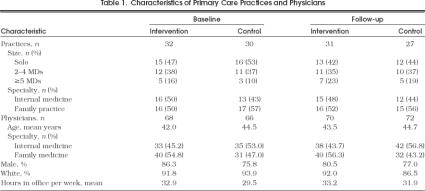

Sixty-one eligible primary care practices in the two AHEC areas of North Carolina were randomly chosen for participation and approached as described;20 58 (95%) agreed to participate and completed baseline data collection. Five minority practices in the third geographic area were approached; 4 (80%) of these also enrolled in the study, for a total of 62 intervention and control practices. Practice and physician characteristics in the intervention and control groups were similar (Table 1). Of the 62 study practices at baseline, 58 remained in the study at follow-up. One intervention practice was lost owing to retirement of the physician; the solo physician in one control practice moved out of the area; and two other control practices refused follow-up data collection. Response rates for the physician questionnaire were 95% at baseline and 88% at follow-up.

Table 1.

Characteristics of Primary Care Practices and Physicians

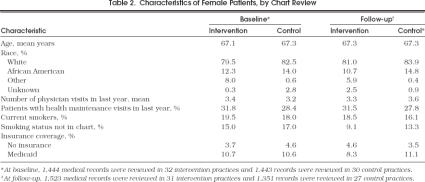

At baseline, we reviewed the medical records of 2,887 female patients who met the eligibility criteria and the records of 2,874 different women at follow-up. Patients in the two study groups were similar (Table 2).

Table 2.

Characteristics of Female Patients, by Chart Review

During the 12 to 18 months of the intervention period, physician investigators made, on average, three visits to each intervention practice. The research team facilitators conducted a mean of seven other visits per practice for 20 to 45 minutes each and communicated with the practices by telephone or by shorter, “drop-in” visits another three to four times each. Of 32 intervention practices, 30 developed revised plans for office systems, and 31 practices requested and were given customized tools for their office systems. The tools most frequently used were patient education brochures, flow sheets, reminder stickers, and wall posters.

We developed a priori five practice-level indicators for the presence of an office system, based on data from medical record reviews and physician questionnaires: (1) 50% or more of medical records in a practice have an entry in the last year on a preventive care flow sheet; (2) 50% or more of physicians in a practice report they have a written preventive care policy; (3) 50% or more of physicians in a practice report that nurses frequently or sometimes identify patients who are due for mammograms; (4) 50% or more of physicians report that they frequently use flow sheets, tickler files, or computerized reminders to identify patients due for mammograms; and (5) 50% or more of physicians report that nurses frequently or sometimes recommend mammograms to patients who are due for them.
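The five indicators amount to a threshold count per practice, which can be sketched as follows. The field names are hypothetical, and each value is assumed to be a practice-level proportion already computed from the chart reviews or physician questionnaires; this illustrates the stated definitions and is not the study's analysis code.

```python
from dataclasses import dataclass

THRESHOLD = 0.5  # "50% or more" in each indicator definition


@dataclass
class PracticeSummary:
    # Proportions between 0 and 1 (hypothetical field names).
    charts_with_flow_sheet_entry: float          # indicator 1, from chart review
    physicians_with_written_policy: float        # indicator 2
    physicians_reporting_nurses_identify: float  # indicator 3
    physicians_using_reminders: float            # indicator 4
    physicians_reporting_nurses_recommend: float # indicator 5


def count_indicators(practice: PracticeSummary) -> int:
    """Count how many of the five office-system indicators a practice meets."""
    values = (
        practice.charts_with_flow_sheet_entry,
        practice.physicians_with_written_policy,
        practice.physicians_reporting_nurses_identify,
        practice.physicians_using_reminders,
        practice.physicians_reporting_nurses_recommend,
    )
    return sum(1 for value in values if value >= THRESHOLD)


if __name__ == "__main__":
    example = PracticeSummary(0.5, 0.49, 1.0, 0.0, 0.75)  # made-up values
    print(count_indicators(example))
```

Because each indicator is dichotomized at 50%, a solo practice can only score 0 or 1 on each questionnaire-based indicator, while larger practices need agreement from at least half of their physicians.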

All indicators showed a greater increase in intervention practices compared with control practices, with significant increases in three of the five indicators (Table 3). The mean number of indicators in intervention practices increased from 1.3 at baseline to 2.8 at follow-up, while the mean number of indicators in control practices decreased from 1.5 to 1.4 (p = .0003). Nonetheless, at the end of the project, only 23% of intervention physicians reported that they had a complete office system for breast cancer screening.

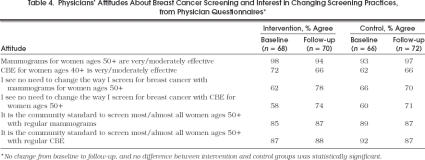

At baseline, almost all physicians in the study (88%) reported a policy advising annual mammograms for women aged 50 years and older and 95% recommended yearly CBE. Nearly all (94–98%) felt that mammograms for women aged 50 years and older were moderately to very effective, and the majority (66–72%) felt that CBEs for women aged 40 years and older were moderately to very effective (Table 4). Most physicians in both groups reported that they saw no reason to change the way they organize breast cancer screening, either mammography or CBE, in their practices. There was no significant difference in change in these attitudes over time in one group compared with the other.

Table 4.

Physicians' Attitudes About Breast Cancer Screening and Interest in Changing Screening Practices, from Physician Questionnaires

At baseline, the proportions of women's records with mention, as well as report, of yearly mammogram and CBE were similar in intervention and control practices (Table 5). Over the study period, the proportion of women's records with mention of a mammogram in the last year increased significantly more in intervention practices than in control practices (12.7% vs 3.5%, respectively; p = .014; OR 1.5; 95% confidence interval [CI] 1.1, 2.0). The proportion of women's records with mention of a CBE in the last year showed a trend toward an increase in intervention practices (5.3% increase), compared with control practices (0.7% decrease; p = .058). The proportion of women's records with both a mammogram and a CBE mentioned in the last year also improved significantly more in the intervention group than in the control group (10.5% increase vs 2.3% increase, respectively; p = .013; OR 1.4; 95% CI 1.1, 1.9). There was, however, no difference between intervention and control practices in the change in the proportion of women's records with a mammogram report in the last year (4.7% increase compared with 3.4% increase, respectively; p = .56). Fourteen practices increased by 20% or more from baseline to follow-up in the proportion of women's records with a mammogram mentioned or reported in the last year; of these, 11 were intervention practices. The increase in the number of office system indicators explained approximately one third of the intervention effect.
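The comparison of changes between groups can be illustrated with simple arithmetic on the mammogram-report percentages given in the abstract (28% to 32.7% in intervention practices; 30.6% to 34.0% in control practices). The sketch below computes a crude difference in percentage-point changes and a crude ratio of odds ratios. It is unadjusted and ignores clustering within practices, so it is not the generalized estimating equations analysis used in the study and should not be expected to reproduce the reported estimates; the function names are hypothetical.

```python
def odds(proportion: float) -> float:
    """Convert a proportion (0 < p < 1) to odds."""
    return proportion / (1.0 - proportion)


def change_in_points(baseline_pct: float, followup_pct: float) -> float:
    """Change from baseline to follow-up, in percentage points."""
    return followup_pct - baseline_pct


def crude_ratio_of_odds_ratios(interv_base, interv_follow, ctrl_base, ctrl_follow):
    """Follow-up vs baseline odds ratio in each group, then intervention / control."""
    or_intervention = odds(interv_follow / 100) / odds(interv_base / 100)
    or_control = odds(ctrl_follow / 100) / odds(ctrl_base / 100)
    return or_intervention / or_control


if __name__ == "__main__":
    # Mammogram-report percentages as stated in the abstract.
    interv = (28.0, 32.7)
    ctrl = (30.6, 34.0)
    diff_in_diff = change_in_points(*interv) - change_in_points(*ctrl)
    ratio = crude_ratio_of_odds_ratios(*interv, *ctrl)
    print(f"difference in change: {diff_in_diff:.1f} percentage points")
    print(f"crude ratio of odds ratios: {ratio:.2f}")
```

A ratio of odds ratios close to 1 corresponds to similar change in both groups, which is consistent with the nonsignificant result reported for mammogram reports.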

We examined a number of factors to identify associations with improvement in performance over time. No differences were found between family physicians and general internists, between physicians in solo practice and those in group practices, or between practices of older physicians and those of younger physicians. Physicians' change in performance over time was not associated with their beliefs in the effectiveness of breast cancer screening procedures, their stated readiness to change their breast cancer screening activities, their attitudes about barriers to breast cancer screening, or their perceptions of the community standard of practice for breast cancer screening. There also was no association between number of intervention visits and improved performance.

At follow-up, we asked all physicians whether they believed it was possible for them to screen 80% or more of their eligible women patients for breast cancer. Eighty-three percent of intervention physicians and 63% of control physicians agreed (p = .03). Agreement with this question was significantly associated with improvement in mention of mammography from baseline to follow-up (p = .04). We also asked intervention group physicians about their perceptions of the project. Of these, 88% reported that the project had had some effect on their practice; only 8% answered that the project took a lot of their time; 69% reported that the project was worth the time and effort involved; 66% said that it caused them to think more about prevention; 72% reported that it helped them document preventive care better; and 50% said it helped them deliver preventive care more efficiently.

DISCUSSION

We found that a moderately intensive outreach intervention to increase breast cancer screening in randomly selected community primary care practices in North Carolina was successful in increasing several indicators of office systems, especially use of preventive care flow sheets, development of written preventive care policies, and involvement of the office staff in recommending mammograms. At follow-up, however, no indicator was present in more than two thirds of intervention practices, the mean number of the five indicators in intervention practices was 2.8, and only 23% of intervention physicians reported that they had a complete office system. Thus, although the intervention was feasible and acceptable, it was not greatly effective in influencing the development of complete office systems within practices.

Given the lack of success in helping practices to develop office systems, it is not surprising that there was little impact on actual performance of breast cancer screening. The proportion of medical records in which there was “mention” of mammography increased more in intervention than in control practices, and there was a nonsignificant trend toward greater performance of CBE. The intervention had no effect, however, on the proportion of women with reports of completed mammograms in their medical records.

Our results contrast with some previous studies of office-based interventions. McPhee and Detmer reviewed a number of studies of office-based interventions for cancer screening activities and found that, in general, such interventions as physician reminders did increase breast cancer screening performance.12 They also noted that several studies of interventions to increase the role of office staff in cancer screening activities had shown improvements in CBE and mammography. Likewise, Dietrich et al.'s study of an office system intervention among volunteer community practices in New England showed a statistically significant improvement in breast cancer screening.14 An observational study of community practices participating in an independent practice association model HMO in Massachusetts found that practices using flow sheets and reminders had modestly higher performance of mammography than other practices, but there was no attempt to influence practices to use these tools.13 The major difference between these studies and our intervention, however, is that all of the others studied either academic or motivated volunteer community practices. We intentionally set out to recruit a generalizable sample of community primary care practices, and succeeded in doing so.

From this study, the answer to the question of whether outreach from a university (or an AHEC or a professional association) can assist practices to make organizational changes is neither a resounding yes nor a clear no. Certainly, we had hoped for greater changes, and thus greater improvement in performance. Conversely, some changes did occur in use of flow sheets, in setting a written office policy, and in enlarging the roles of office staff. These changes occurred over a fairly brief intervention period of 12 to 18 months and were achieved by only 2.5 full-time-equivalent facilitators, with intermittent assistance from two study physicians, working with 30 primary care practices scattered over a wide geographic area.

In retrospect, the intervention had several deficiencies. First, the intervention was based on the assumption that physicians already had appropriate attitudes about breast cancer screening, and that all they needed to institute change was assistance in setting up an office system. We discovered, however, that although physician attitudes toward breast cancer screening were very positive, many had difficulty understanding the need for a systems approach to accomplishing screening goals. Many physicians were either skeptical that it is possible for a busy practice to screen a high percentage of eligible patients, or inclined to solve the problem by simply “trying harder” themselves. An intervention that helps physicians and their office staff understand the potential of office systems could possibly be more successful.

Second, as this study was a randomized, controlled trial, practices next to each other in the same community could be (and often were) randomized to different groups. Yet we know that physicians often consider changes in practice with respected peers before making the change.23, 24 This study took no advantage of social factors involved in helping physicians consider change.

Third, the intervention in this study made little use of such potentially useful tools as computers, or of such potentially useful techniques of change as continuous quality improvement, or of various approaches to positive reinforcement.

Finally, although we measured few practice or physician characteristics at baseline that predicted improvement during the project, we have the strong retrospective impression that practices that improved consistently demonstrated strong leadership, either by a physician or by another respected staff member. Several practices that we initially thought had such leadership did not improve. In these practices, there were usually other respected practice members who had little interest in the project, thus counteracting the influence of the leaders.

An important strength of this study was its ability to recruit and retain a large percentage of randomly selected community primary care practices, thus making its results more widely generalizable. A limitation of its generalizability is that it was conducted in a single, mostly rural state at a time when there was low penetrance of managed care in the study practices.

There may be lessons in this study for designers of innovative continuing medical education.25, 26 Although some volunteer practices may be enthusiastic enough to make substantial changes with assistance, change for many medical practices, like change for individuals, comes slowly and with difficulty. Providing the type of assistance practices really need may take considerable time as practices work through the process of change; it may take discussions of attitudes and knowledge of populations and systems as opposed to only technical medical matters; it may take finding ways for networks of practices to support one another as they change; it may take assistance in using computers in ways other than for billing;27, 28 it may take helping practices learn the basics of continuous quality improvement; and it may take identifying and supporting leaders within practices. Finally, designers of continuing medical education programs should consider innovative ways to positively reinforce change that leads to better health care for patients and communities.25, 26

Although it is disappointing that we did not find large increases in breast cancer screening as a result of the intervention, we believe there are reasons to be encouraged about this type of intervention in the future. We are very encouraged by our finding that community primary care physicians, medical schools, and professional societies can work together to improve the quality of health care. The intervention was widely accepted. Also, the intervention clearly had an impact on the processes of care within medical practices, even though few practices developed office systems complete enough to affect performance of breast cancer screening.

The practice of high-quality medical care requires ongoing change as we discover new and better ways to care for patients. Those involved in continuing medical education should continue to work with practicing physicians to develop effective approaches to help medical practices change in appropriate ways.

Acknowledgments

This research was supported under grant CA 54343-02 from the National Cancer Institute.

The authors gratefully acknowledge the invaluable support and assistance from Tom Bacon and Barry Fox in the Mountain Area Health Education Center, from David Webb, Karen Thomas, and Brad Chappell in the Area L Area Health Education Center, and from Ann Chamberlin and Kevin Squires at the University of North Carolina. The authors are also most appreciative of all the physicians and staff members in the practices with whom they worked.

References

- 1. Fletcher SW, Harris RP, Gonzalez JJ, et al. Increasing mammography utilization: a controlled study. J Natl Cancer Inst. 1993;85:112–20. doi: 10.1093/jnci/85.2.112.

- 2. Breen N, Kessler L. Changes in the use of screening mammography: evidence from the 1987 and 1990 National Health Interview Surveys. Am J Public Health. 1994;84:62–7. doi: 10.2105/ajph.84.1.62.

- 3. Centers for Disease Control. Use of mammography services by women aged ≥ 65 years enrolled in Medicare—United States, 1991–1993. MMWR. 1995;44:777–81.

- 4. Kottke TE, Brekke ML, Solberg LI. Making “time” for preventive services. Mayo Clin Proc. 1993;68:785–91. doi: 10.1016/s0025-6196(12)60638-7.

- 5. Jaen CR, Stange KC, Nutting PA. Competing demands of primary care: a model for the delivery of clinical preventive services. J Fam Pract. 1994;38:166–71.

- 6. Belcher DW, Berg AO, Inui TS. Practical approaches to providing better preventive care: are physicians a problem or solution. Am J Prev Med. 1988;4(suppl):27–48.

- 7. Davis DA, Thomson MA, Oxman AD, Haynes RB. Evidence for the effectiveness of CME: a review of 50 randomized controlled trials. JAMA. 1992;268:1111–7.

- 8. NCI Breast Cancer Screening Consortium. Screening mammography: a missed clinical opportunity? JAMA. 1990;264:54–8.

- 9. Dietrich AJ, Duhamel M. Improving geriatric preventive care through a patient-held checklist. Fam Med. 1989;21:195–8.

- 10. Dickey LL, Petitti D. A patient-held minirecord to promote adult preventive care. J Fam Pract. 1992;34:457–63.

- 11. Margolis KL, Menart TC. A test of two interventions to improve compliance with scheduled mammography appointments. J Gen Intern Med. 1996;11:539–41. doi: 10.1007/BF02599601.

- 12. McPhee SJ, Detmer W. Office-based interventions to improve delivery of cancer prevention services by primary care physicians. Cancer. 1993;72:1100–2. doi: 10.1002/1097-0142(19930801)72:3+<1100::aid-cncr2820721327>3.0.co;2-n.

- 13. Gann P, Melville SK, Luckmann R. Characteristics of primary care office systems as predictor of mammography utilization. Ann Intern Med. 1993;118:893–8. doi: 10.7326/0003-4819-118-11-199306010-00011.

- 14. Dietrich AJ, O'Connor GT, Keller A, Carney PA, Levy D, Whaley FS. Cancer: improving early detection and prevention: a community practice randomised trial. BMJ. 1992;304:687–91. doi: 10.1136/bmj.304.6828.687.

- 15. Leininger LS, Harris R, Jackson RS, Strecher VS, Kaluzny AD. CQI in primary care. In: McLaughlin CP, Kaluzny AD, editors. Continuous Quality Improvement in Health Care: Theory, Implementation, and Applications. Gaithersburg, Md: Aspen Publishers Inc.; 1994. Chap 14.

- 16. Leininger LS, Finn L, Dickey L, et al. An office system for organizing preventive services. A report by the American Cancer Society Advisory Group on Preventive Health Care Reminder Systems. Arch Fam Med. 1996;5:108–15. doi: 10.1001/archfami.5.2.108.

- 17. Carney PA, Dietrich AJ, Keller A, Landgraf J, O'Connor GT. Tools, teamwork, and tenacity: an office system for cancer prevention. J Fam Pract. 1992;35:388–94.

- 18. Dickey LL, Kamerow DB. Primary care physicians' use of office resources in the provision of prevention care. Arch Fam Med. 1996;5:399–404. doi: 10.1001/archfami.5.7.399.

- 19. Lane DS, Burg MA. Promoting physician preventive practices: needs assessment for CME in breast cancer detection. J Contin Educ Health Prof. 1989;9:245–56. doi: 10.1002/chp.4750090409.

- 20. Carey TS, Kinsinger LS, Keyserling T, Harris R. Research in the community: recruiting and retaining practices. J Community Health. 1996;21:315–27. doi: 10.1007/BF01702785.

- 21. Kaluzny AD, Harris RP, Strecher VJ, Stearns S, Qaqish B, Leininger L. Prevention and early detection activities in primary care: new directions for implementation. Cancer Detect Prev. 1991;15:459–64.

- 22. Diggle PJ, Liang K-Y, Zeger SL. Generalized estimating equations. In: Diggle PJ, Liang K-Y, Zeger SL, editors. Analysis of Longitudinal Data. Oxford, UK: Oxford University Press; 1994. pp. 151–2.

- 23. Lomas J, Enkin M, Anderson GM, Hannah WJ, Vayda E, Singer J. Opinion leaders vs audit and feedback to implement practice guidelines. JAMA. 1991;265:2202–7.

- 24. Greco PJ, Eisenberg JM. Changing physicians' practices. N Engl J Med. 1993;329:1271–4. doi: 10.1056/NEJM199310213291714.

- 25. Moore DE, Green JS, Jay SJ, Leist JC, Maitland FM. Creating a new paradigm for CME: seizing opportunities within the health care revolution. J Contin Educ Health Prof. 1994;14:4–31.

- 26. Moore DE. Moving CME closer to the clinical encounter: the promise of quality management and CME. J Contin Educ Health Prof. 1995;15:135–45.

- 27. Frame PS, Zimmer JG, Werth PL, Martens WB. Description of a computerized health maintenance tracking system for primary care practice. Am J Prev Med. 1991;7:311–8.

- 28. Ornstein SM, Garr DR, Jenkins RG. A computerized microcomputer-based medical records system with sophisticated preventive services features for the family physician. J Am Board Fam Pract. 1993;6:55–60.