Abstract

Over the past half century economists have responded to the challenges that Allais [Econometrica (1953) 503], Ellsberg [Quart. J. Econ. (1961) 643] and others raised to neoclassicism, either by bounding the reach of economic theory or by turning to descriptive approaches. While both of these strategies have been enormously fruitful, neither has provided a clear programmatic approach that aspires, as neoclassicism did, to a complete understanding of human decision making. There is, however, growing evidence that economists and neurobiologists are now beginning to reveal the physical mechanisms by which the human neuroarchitecture accomplishes decision making. Although still in their infancy, these studies suggest both a single unified framework for understanding human decision making and a methodology for constraining the scope and structure of economic theory. Indeed, there is already evidence that these studies place mathematical constraints on existing economic models. This article reviews some of those constraints and suggests the outline of a neuroeconomic theory of decision.

1. Introduction

The history of economics has been marked by an iterative tension between prescriptive and descriptive advances. Prescriptive theories seek to define efficient or optimal decision making; descriptive advances then invariably suggest that these theories do not accurately describe human behavior. The neoclassical revolution, and the period that followed it, were no exception to this general pattern. Working from the assumption that all of human behavior could be described as a rational effort to maximize utility, the neoclassical theorists largely succeeded in developing a coherent basic mathematical framework. What followed, beginning with the work of scholars like Allais (1953) and Ellsberg (1961), was a series of descriptive insights which indicated either that humans were poor utility maximizers or that the underlying assumptions of the neoclassical revolution were flawed.

Over the last two or three decades economists have responded to the descriptive challenge raised by these post-neoclassical studies by adopting one of two basic approaches. Either they argue that rational decisions based on utility theory occur only under some conditions and that defining those conditions is of paramount importance (cf. Simon, 1947, 1983), or they argue that standard utility theory requires modifications, additions, or novel approaches (cf. Savage, 1954; Kahneman and Tversky, 1979). The fundamental problem imposed by bounding rationality is that the resultant models have little or no predictive power outside of their bounded domains. The problem modified utility theories face is that these newer models often fail to be parsimonious and often appear ad hoc or under-constrained.

One recent trend in economic thought may reconcile this tension between prescriptive and descriptive approaches. There is some hope that it may yield an economic theory that is both highly constrained and parsimonious while still offering significant predictive power under a wide range of environmental conditions. That trend is the growing interest amongst both economists and neuroscientists in the physical mechanisms by which human decisions are made within the human brain. There is reason to believe, some of these neuroeconomic scholars argue, that the basic outlines of the human decision making architecture are already known and that studies of this architecture have already revealed some of the actual computations that the brain performs when making decisions. If this is true, then a combination of economic and neuroscientific approaches may succeed in providing a methodology for reconciling prescriptive and descriptive economics by producing a highly predictive and parsimonious model based on the actual economic computations performed by the human brain.

At this time there are, however, profound differences between the approaches taken by neuroscientists and economists interested in this problem. Neuroscientists tend to underestimate the complexity of actual human decision making and thus fail to take full advantage of the existing economic corpus, studying choice under conditions that economists often see as trivial. Indeed, to economists many of the recent neurobiological studies of decision making seem to be more about reflexes than about economic behavior. Economists, in a similar way, often employ overly simplistic or outdated notions of brain function that are only weakly related to the modern consensus views held by neuroscientists. As a result, many neurobiologists dismiss the work of economists as irrelevant to the study of the human mind and brain. This often leads members of the larger economic community to reject neuroeconomics as irrelevant to advancing economic knowledge and it leads members of the broader neuroscientific community to reject neuroeconomics as outdated or overly simplistic.

The primary goal of this paper is to attempt to resolve this discrepancy between economic and neuroscientific approaches to human decision making by demonstrating, for scholars with primary expertise in economics, how neuroscientific experiments can be used as tools for developing real economic theories and models. After a brief introduction, the paper reviews a series of empirical studies that many neuroscientists believe describe the basic architecture for decision making in both human and non-human primates. Working from an understanding of this architecture, we next describe an experiment aimed at deriving the neurobiological algorithm for calculating utilities in a two-alternative lottery task. The economic model derived from this physiological experiment is then used to predict the dynamic play-by-play behavior of real subjects engaged in the actual lottery task. We hope that presenting the material in this way will demonstrate that economic theory can be used to guide neurobiological experiments which can, in turn, yield new economic theories.

Along the way, we hope to explain three central points around which future developments in neuroeconomics will likely have to be organized. First, we hope to explain how profoundly current neuroscientific and economic theories of brain function differ, and how these differences can only be reconciled if economists become familiar with the highly quantitative models of brain function that are at the core of contemporary neuroscience. Second, we hope to stress the importance, to economics, of evolutionary biology. Humans are unique organisms, but there is growing evidence that we are far less unique in the production of economic behavior than most working economists suspect. For example, monkeys can play mixed-strategy equilibrium games with the same efficiency as humans (Dorris and Glimcher, 2003) and birds can systematically alter the shape of their utility functions to adopt risk preferences appropriate for their environments (Caraco et al., 1980). There is now abundant evidence that our own economic behavior has evolved from, and is very closely related to, the economic behaviors of our animal relatives. This may be the most critical point made in this paper because it calls into question the pervasive assumption amongst economists that our decision making process is both a uniquely human faculty and a broadly rational faculty. Third and finally, we hope to show that neuroscientific studies of economic behavior can be much more than efforts to locate a brain region associated with some hypothetical faculty like ‘justice’ or ‘cooperation.’ Such studies are valuable starting points, but have troubled some economists because they provide no predictive power with regard to economic behavior. We hope to demonstrate that neuroeconomic experiments can and will reveal the nature of the economic computations brains perform.

1.1. The gap between economic and neuroscientific conceptualizations of the brain

The neoclassical revolution had two profound effects during the second half of the twentieth century: it largely revealed how a rational utility maximizer would behave, and it essentially proved that humans could not be viewed as efficient utility maximizers under all conditions. This insight led a number of economists, perhaps most notably Herbert Simon (1997), to conclude that human decision makers could be viewed as rational utility maximizers in only a bounded sense. Conditions do occur under which humans behave rationally, but there are also conditions under which humans behave in a clearly irrational manner. One result of this insight has been a growing conviction in the economic community that human decision making can often be viewed as the product of two underlying processes: a boundedly rational process well described by prescriptive economic theory and an irrational process which is best described empirically.

During the last decade a number of economists have begun to suggest that these two processes, the rational and irrational, may be instantiated within the human brain as two distinct mechanisms. Indeed, many have even suggested that irrational behavior can be uniquely attributed to limitations intrinsic to the neural architecture, while rational behavior can be viewed as the product of a conscious faculty that somehow transcends this biological limitation. Vernon Smith put it this way in his 2003 Nobel Prize lecture, when explaining the irrational effects of context on decision making: “[t]he brain, including the entire neurophysiological system, takes over gradually in the case of familiar mastered tasks and plays the equivalent of lightning chess … all without conscious thinking by the mind.” Smith and others have argued that it is the mechanical processes of the brain itself which account for the irrationality that bounds the rational processes of the conscious mind. Arguing in more detail, Camerer et al. (2003) have suggested that human decision-making can be viewed as the product of one cognitive and one affective (or emotional) system, and that these two systems co-exist as independent entities within the neural architecture because they have different evolutionary origins. These authors have even drawn on the existing neuroscientific literature to argue that each of these distinct modules for decision making can be localized to distinct anatomical regions within the human brain. For example, they suggest that “regions that support cognitive automatic activity are concentrated in the back (occipital), top (parietal) and side (temporal) parts of the brain.”

At the same time that this revolution has been occurring in economic circles, neuroscientists interested in human decision making have begun to head in a surprisingly different direction. The revolution that gave birth to modern neuroscience in the early part of the twentieth century argued that all of human behavior could be conceived of as the product of two fundamentally distinct mechanisms: a sophisticated faculty that governed complex behavior and a simpler, cruder, mechanism that could produce reliable, but unavoidably simplistic, behaviors (Sherrington, 1906; Damasio, 1995; LeDoux, 1996; Glimcher, 2003a). This simpler mechanism, which came to be identified with the notion of a reflex, was widely believed to be tractable to neurophysiological analysis and formed the core of our understanding of brain function during the first half of that century.

During the last several decades, however, ongoing empirical work has begun to suggest to many neuroscientists that this view of the neural architecture is no longer tenable. More and more biological evidence now suggests to neuroscientists an essentially unitary view of the neural architecture that is much more deeply rooted in evolutionary theory than this original dualistic conception. What is emerging in neuroscientific circles is the view that a surprisingly holistic (though multi-component) decision making process governs behavior (Parker and Newsome, 1998; Schall and Thompson, 1999; Glimcher, 2003b). The varied inputs to this decision making process, it is argued, have all been shaped by evolution in order to yield a unified pattern of behavior that maximizes the reproductive fitness of organisms within the environments in which they operate (Maynard Smith, 1982; Stephens and Krebs, 1986; Krebs and Davies, 1991). Evolution makes animals, these scientists argue, fitness maximizers. But critically, evolution performs this role on all parts of the organism simultaneously. Evolution yields a unitary organism, the global rationality of which is bounded by the requirements of the environment within which it evolved.

The economic capabilities of humans have, however, led many to conclude that we are fundamentally different from other animals in this regard: that we achieve rationality through a distinct and uniquely human mechanism that stands apart from the mechanisms possessed by other animals. On this view, the mechanisms that other animals possess may indeed still reside within our brains, but it is the irrational aspects of human behavior which can be uniquely attributed to this biological heritage. Quite compelling empirical data argue against this conclusion. First, it now seems clear that even animals with very small brains can behave in a surprisingly rational manner under a broad range of conditions. This seems to argue against the idea that in order to behave rationally humans would have needed to evolve some unique facility. Second, there is growing evidence that we share with our nearest relatives not just the ability to behave rationally but also common boundaries to our rationality. If this is true, then it is both the rational and the irrational which we share with our nearest relatives, challenging the assumption that any of these aspects of behavior involve some uniquely human process. These data argue, in essence, that we differ more in degree than in nature from our nearest living relatives.

1.2. Evolutionary biology and economics: rational choice in simpler brains

In 1982, D.G.C. Harper published an influential experiment on the rationality with which mallard ducks forage for food (Harper, 1982). Mallard ducks were an interesting choice because their avian lineage evolved from dinosaurs about 200 million years ago, and thus they are animals with an evolutionary heritage very different from our own. Further, they are animals with extremely small brains, typically less than 5 grams in weight. (In contrast, the human brain weighs about 1400 grams.) At an environmental level, these ducks live in small groups of about 10–50 individuals and normally obtain food by foraging together at the water’s edge. Finally, as with all animals that must maintain very low body weights in order to fly, they store little energy internally, and thus their ability to survive and reproduce is well correlated with their ability to obtain food on a daily basis; at least amongst flighted birds, individuals who maximize the rate at which they obtain food each day maximize their long-term reproductive fitness (Krebs and Davies, 1991).

Harper’s experiment focused on the behavior of a particular flock of 33 mallards that wintered on the main pond in the botanical gardens of Cambridge University in 1979. Harper was specifically interested in the ducks’ foraging strategies. To examine them, he conducted a series of group decision-making experiments of a kind that will be familiar to most economists. At the beginning of each day two experimenters would approach the pond, each with a sack of bread-balls all having a particular size and weight. Standing at two separate locations, the experimenters would begin throwing those bread-balls simultaneously but at different rates. The job of each duck was simply to decide in front of which experimenter to stand. On a typical day experimenter 1 would, for example, throw a 2-gram bread-ball once every 5 seconds while experimenter 2 would throw a 2-gram bread-ball once every 10 seconds. Harper measured the moment-by-moment decisions of each duck, both while these conditions were held constant and when they changed, during a foraging period that lasted tens of minutes.

If we treat the situation as a 33-duck Nash-type game and assume that the ducks employ a standard concave utility curve for bread-balls, then a single Nash equilibrium emerges under these conditions. Taking a locally linear approximation of utility over the range of bread-ball sizes from 0 to 4 grams, we can identify the precise Nash equilibrium and make quite specific predictions about what constitutes rational behavior for these animals. Under these conditions the expected utility of standing in front of experimenter 1 for any one duck should be equal to the probability of obtaining a bread-ball from that experimenter multiplied by the size of the bread-ball being thrown. If the likelihood, for any individual, of obtaining a bread-ball is a linear function of the number of other ducks standing before experimenter 1 (and Harper verified that it was), then the expected utility (EU) of standing in front of experimenter 1 is proportional to the bread per minute thrown by experimenter 1 divided by the number of ducks standing in front of experimenter 1. At Nash equilibrium the EUs of standing before either experimenter must be equal, so if experimenter 1 were throwing a 2-gram bread-ball every 5 seconds and experimenter 2 were throwing a 2-gram bread-ball every 10 seconds, then equilibrium would be reached when two-thirds of the ducks stood in front of experimenter 1 and the other one-third stood in front of experimenter 2. Equilibrium would occur when the ducks were probability matching: the fraction of the flock at each experimenter would match that experimenter’s share of the total bread thrown.
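The equilibrium arithmetic can be checked with a short sketch. It assumes, as in the text, that a duck's payoff at a site is that site's bread per minute divided by the number of ducks standing there; it ignores the rank effects Harper also observed. The function names are our own, and exact fractions are used only to avoid rounding noise. The `is_nash` helper checks the defining property of a Nash equilibrium: no single duck can raise its intake by switching experimenters.

```python
from fractions import Fraction


def grams_per_minute(ball_grams, seconds_between_throws):
    """Bread delivered per minute by one experimenter (exact arithmetic)."""
    return Fraction(ball_grams * 60, seconds_between_throws)


def equilibrium_allocation(n_ducks, site_rates):
    """Ducks per site at which every duck's intake rate is equal.

    Intake at site i is site_rates[i] / n[i]; equalizing it across sites
    requires n[i] to be proportional to site_rates[i]. Rates are assumed
    positive; for other inputs the result may be fractional.
    """
    total = sum(site_rates)
    return [n_ducks * r / total for r in site_rates]


def is_nash(site_rates, allocation):
    """True if no single duck can raise its intake by switching sites."""
    for i, n_i in enumerate(allocation):
        current = site_rates[i] / n_i
        for j, n_j in enumerate(allocation):
            # A deviating duck would share site j with the n_j ducks already there.
            if j != i and site_rates[j] / (n_j + 1) > current:
                return False
    return True


# 2 g every 5 s -> 24 g/min; 2 g every 10 s -> 12 g/min
site_rates = [grams_per_minute(2, 5), grams_per_minute(2, 10)]
allocation = equilibrium_allocation(33, site_rates)

print([int(n) for n in allocation])                           # [22, 11]
print([str(r / n) for r, n in zip(site_rates, allocation)])   # ['12/11', '12/11']
print(is_nash(site_rates, allocation))                        # True
```

Each duck then collects 12/11 grams per minute wherever it stands; moving to the other experimenter would lower that to 1 gram (toward experimenter 2) or 24/23 grams (toward experimenter 1) per minute.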

Perhaps surprisingly, Harper found that this very accurately described the behavior of the ducks under a wide range of conditions. At all of the rates and bread-ball sizes Harper explored, within about 60 seconds of the start of bread-ball throwing, the population of ducks had sorted itself into the Nash equilibrium distribution. That is, they reached this solution after as few as 6 bread-balls had been thrown by one of the experimenters. Further, when Harper and his assistants changed either their rate of throwing or the size of the bread-balls, the ducks re-sorted themselves, once again achieving a rational equilibrium within about 60 seconds. The ducks as a group behaved in a perfectly rational manner, in a manner that many economists would argue was evidence of a rational conscious process if this same behavior had been produced by humans operating under simple market conditions like these.

But perhaps just as interesting as these observations on the behavior of the group were Harper’s observations on how individual ducks behaved. Within the flock ducks have an established pecking order and conflict between the ducks continually challenges and renews this order. Harper observed that this pecking order was evident within the flock as they foraged. Not all ducks obtained the same amount of bread (the likelihood of obtaining any given bread-ball was proportional to rank) and ducks conflicted with each other for access to the bread. Mechanisms of conflict, aggression and competition were operating while this rational solution was being achieved.

The ducks behaved rationally. Does the fact that it was ducks who behaved in this way make decisions of this type uninteresting to economists or irrelevant to studies of human choice? Or do these results suggest that the classical models of rationality based in utility theory could in principle be used by biologists to study brain function in non-human animals? If such a study were undertaken could it tell us anything of interest to economics? To begin to answer those critical questions we need to re-examine two final issues before turning to the body of this paper, one issue in economics and one issue in neuroscience. First, we need to review the development of classical utility theory. Second, we need to ask how classical utility theory may be related to the neural architecture for decision making that exists in animals ranging from ducks to humans.

1.3. Using neuroscience as an economic tool

Modern utility theory has its origins in the theory of expected value first proposed by Pascal. He argued that the value of any course of action could be determined by multiplying the gain that could be realized from that action by the likelihood of receiving that gain. This product, which we now call expected value, was presumed to represent a rational decision variable. While Pascal and his colleagues recognized that not all human decision making could be accurately described with expected value theory, they argued that all rational decision making should follow this prescriptive theory (cf. Arnauld and Nicole, 1996; Pascal, 1966).
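Pascal’s decision variable can be written compactly. The notation is ours, not Pascal’s: p_k(a) is the likelihood that action a yields gain g_k(a), and the sum simply extends the single product described above to actions with several possible outcomes. On the prescriptive reading, a rational chooser selects the action a* with the largest expected value.

```latex
\mathrm{EV}(a) = \sum_{k} p_k(a)\, g_k(a), \qquad a^{*} = \arg\max_{a} \mathrm{EV}(a)
```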

By the mid-1700s, however, it was clear that the Pascalian approach did an extremely poor job of predicting human choice behavior under conditions of significant risk. Daniel Bernoulli made this point in 1738 (see Bernoulli, 1954). “To make this clear it is perhaps advisable to consider the following example: somehow a very poor fellow obtains a lottery ticket that will yield with equal probability either nothing or twenty thousand ducats. Will this man evaluate his chance of winning at ten thousand ducats? Would he not be ill-advised to sell his lottery ticket for nine thousand ducats? To me it seems that the answer is in the negative.” Bernoulli argued for a model of rational decision making in which the likelihood of a gain was multiplied by the utility, rather than the value, of that gain. His notion was that gains were represented in the decision process by a roughly logarithmic function of value that also incorporated a representation of the chooser’s wealth.
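Bernoulli’s argument can be made concrete with a minimal numerical sketch. It assumes a pure logarithmic utility, u(w) = ln(w), applied to the chooser’s total wealth (Bernoulli’s own function was only roughly logarithmic), and the two wealth levels are hypothetical, since the text does not say how poor the “very poor fellow” is. The certainty equivalent is the sure sum that yields the same expected utility as the lottery ticket.

```python
import math


def expected_value(outcomes):
    """Pascal's decision variable: sum of probability * gain."""
    return sum(p * x for p, x in outcomes)


def log_certainty_equivalent(wealth, outcomes):
    """Sure gain that gives the same expected log utility as the lottery.

    Assumes u(w) = ln(w) evaluated on total wealth (wealth + gain);
    wealth must be positive.
    """
    expected_log = sum(p * math.log(wealth + x) for p, x in outcomes)
    return math.exp(expected_log) - wealth


ticket = [(0.5, 0), (0.5, 20_000)]   # nothing or 20,000 ducats, equal odds

print(expected_value(ticket))        # 10000.0
for wealth in (100, 1_000_000):      # hypothetical wealth levels in ducats
    print(wealth, round(log_certainty_equivalent(wealth, ticket)))
# 100 1318
# 1000000 9950
```

Under these assumptions a man with 100 ducats values the ticket at roughly 1,318 ducats, far below its 10,000-ducat expected value, so selling it for 9,000 would not be ill-advised; for a millionaire the valuation approaches the expected value.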

Modern work has, however, made it abundantly clear that this theory also falls far short of the descriptive goal of predicting actual human behavior. For example, Allais (1953) demonstrated that human choice can be non-transitive, Kahneman and Tversky (cf. Kahneman et al., 1982) demonstrated that human choice behavior deviates widely from monotonicity, and most recently game theorists have even shown that under some conditions (cf. Guth et al., 1982) humans knowingly make choices that will result in losses rather than gains. All of these experiments point out the limits of classical utility theory as a tool for understanding human choice behavior. As a result, many economists have proposed that decision making is best viewed as involving the interaction of a utility-based mechanism and a second, perhaps less rational, process. The central argument that we will make in this paper is that current neurobiological data contradict this view and instead support a model of human and animal decision making more closely tied to the core insight that Pascal and Bernoulli provided.

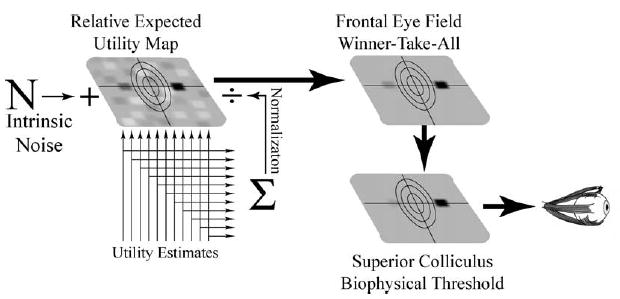

Utility theory proposes that decision makers must represent the desirability of each possible course of action using a common scale and that choosing is the process of selecting the most desirable of these possible courses of action. Pascal had argued that desirability should be computed as the product of value and likelihood of gain. Bernoulli had taken an important step forward by arguing that desirability involved a more complex computation influenced by properties intrinsic to the chooser, like current wealth. Although Bernoulli clearly meant utility theory to be an objective and prescriptive model for decision making, in this regard he came very close to introducing a subjective model (cf. Savage, 1954) for the decision making process. In Bernoulli’s model, two variables from the external world were modified by processes internal to the chooser and the product of these internal computations, expected utility, was then represented and used to make choices. Although there is still significant uncertainty about the precise form of that internal computation, current neurobiological evidence seems to strongly support this early claim. The brains of primates, almost certainly including humans, appear to represent a complex variable which under many circumstances closely parallels classical expected utility. In the final stages of decision making, the neural architecture seems to select the most desirable action from amongst representations of the desirability of all available actions by a winner-take-all process.
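The two-stage picture described above, in which each action’s desirability is computed on a common scale and a winner-take-all stage then selects the largest, can be sketched as follows. This is an illustrative toy, not the authors’ model: the two actions, their reward probabilities and magnitudes, and the square-root utility are hypothetical, and a plain argmax stands in for the competitive neural dynamics by which real circuits settle on a winner.

```python
import math


def desirability(outcomes, utility):
    """Expected utility of one action: sum of probability * utility(gain)."""
    return sum(p * utility(x) for p, x in outcomes)


def winner_take_all(actions, utility):
    """Score every action on one common scale, then pick the largest.

    The argmax abstracts the winner-take-all stage; it ignores how
    neural competition actually reaches a single winner.
    """
    scores = {name: desirability(outcomes, utility)
              for name, outcomes in actions.items()}
    return max(scores, key=scores.get), scores


# Hypothetical choice between two eye movements, each rewarded with some
# probability by an amount of juice (arbitrary units).
actions = {
    "look_left": [(0.8, 1.0)],
    "look_right": [(0.4, 3.0)],
}

print(winner_take_all(actions, utility=lambda x: x)[0])   # look_right (1.2 vs 0.8)
print(winner_take_all(actions, utility=math.sqrt)[0])     # look_left (about 0.80 vs 0.69)
```

With a linear utility (plain expected value) the larger, riskier reward wins; with the concave square-root utility the safer option wins. The selection rule is identical in both cases; only the internal transformation of value changes the choice.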

This model, which will be developed at length below, does however depart from neoclassical economic theory in an important way. Neoclassical theory has always made the famous “as if” argument: it is as if expected utility were computed by the brain. Modern neuroscience suggests an alternative, and more literal, interpretation. The available data suggest that the neural architecture actually does compute a desirability for each available course of action. This is a real physical computation, accomplished by neurons, that derives and encodes a real variable. The process of choice that operates on this variable then seems to be quite simple: it is the process of executing the action encoded as having the greatest desirability. The challenge that this emerging view poses is thus to determine exactly how this desirability is computed. It is this process which combines elements of Bernoulli’s utility theory and other operators in an evolutionary context to achieve efficient decision making in the environments in which each species evolved.

While neuroscientists are only just now beginning to describe the computation that transduces objective measures from the outside world into this representation of desirability, several points are already becoming clear. First, under many conditions, namely those under which choice appears rational, the desirability encoded by the neurons of the brain very closely approximates expected utility. Second, under conditions in which choice behavior is poorly predicted by rational choice models, these neural representations still encode the desirability of each course of action, although under these conditions desirability and expected utility are of necessity not identical. The available data suggest that the neural decision-making process is always rational with regard to these internal representations of desirability. When choosers deviate from rationality, it is this physiological encoding of desirability, which we refer to as physiological expected utility, that departs from neoclassical theory.

Together, these observations raise an intriguing possibility which forms a central subject of this paper: the neural architecture may indeed compute and represent the physiological expected utility of many possible courses of action, much as neoclassical utility theory proposes. Evolution may have shaped the neural architecture to perform efficiently under many, but not all, environmental circumstances. When choosers are efficient in the economic sense, that architecture accurately represents the expected utility of available choices. When physiological and objective utility differ, it reflects inefficiency not in the mechanism that chooses, but in the ability of the neural architecture antecedent to the choice mechanism to compute physiological expected utilities efficiently. In some cases inefficiencies of these types will arise when the most complicated cortical mechanisms for estimating likelihoods encounter problems that they did not evolve to solve. In other cases, inefficiencies will occur because simpler brainstem systems encounter problems that they did not evolve to solve efficiently. All of these biologically generated inefficiencies would therefore bound rational behavior. The available evidence thus suggests a synthesis of modern economic and neuroscientific approaches. By biologically defining the mechanisms which compute physiological expected utility we should be able to derive a mechanistically accurate economic theory which is by necessity predictive.

In the following sections we hope to present a case study of this approach. Beginning with a physiological investigation of choice mechanisms, we will derive a mathematical description of the process by which a particular class of dynamic decision making is accomplished. Having derived an algorithm for choice under these conditions we will use that surprisingly parsimonious model to predict the dynamic play-by-play choice behavior of individuals under novel conditions. The accuracy of those predictions will then be tested to assess this neurobiologically derived model. In essence, what we hope to do is to use neurobiological techniques to develop a simple economic theory that is both testable and parsimonious.

2. The neuroscience of connecting sensation and action

2.1. Overview of sensory and motor neuroscience

During the second half of the twentieth century neuroscience made huge advances, particularly towards understanding both the structure and function of the sensory systems that gather data about the outside world and the movement control systems through which all behavioral responses are generated. For the most part, these studies provided the insights upon which our current understanding of the human brain rests. They provide, essentially, a core theory of brain function which, like the neoclassical approach in economics, organizes the ways scholars address almost all questions of neural function. To see how neurobiologists approach decision making, it is therefore necessary to know a little about the organizing principles of these input and output systems.

2.1.1. Sensory systems

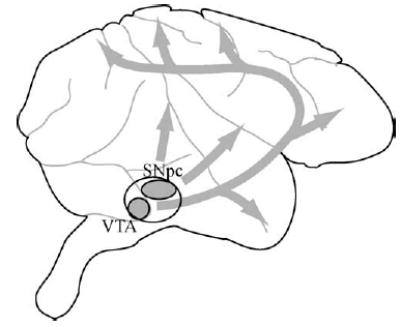

Tremendous progress has been made towards understanding all of our senses, but the brain system we understand best is the visual system. (For an introductory overview of the visual system see the vision chapter in the excellent textbook by Rosenzweig et al. (2002). For a more detailed overview see the textbook by Squire et al. (2002).) Insights from the study of this system organize neurobiological approaches not just to sensory systems but to brain function in general. The work of this system begins in the retina, a five-layer-thick sheet of cells lining the inner surface of the eyeball like a sheet of photographic film. At each location on this sheet lies a single photoreceptor, a cell which transduces individual photons of light into electrochemical signals that can be passed to the brain. These signals are, in turn, relayed by a class of retinal neurons called retinal ganglion cells, whose fibers form the optic nerve; the optic nerve leaves the eyeball and connects to the neurons of the lateral geniculate nucleus of the thalamus, which lies inside the mammalian brain (Fig. 1).

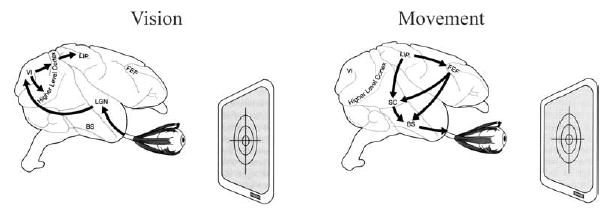

Fig. 1.

The basic flow of information in the primate visual and eye movement systems shown superimposed on a monkey brain. Vision: photons entering the eyeball activate neurons in the retina. That activity is relayed through the optic nerve to the lateral geniculate nucleus (LGN) of the thalamus. From there information passes to the primary visual cortex (V1) and on to higher level visual cortices (V2, V3, V4, MT, etc.). These signals gain access to movement control systems via a number of pathways, one of which involves the parietal cortex. One subregion of the parietal cortex, the lateral intraparietal area (LIP), is known to be of particular importance. Movement: movements of the eyes are controlled by many areas acting in concert. Of particular importance is the lateral intraparietal area (LIP) of parietal cortex. Activity in this area influences both the cortical frontal eye field (FEF) and the subcortical superior colliculus (SC). These areas in turn regulate the brainstem regions (BS) that govern the muscles that surround the eye.

The lateral geniculate nucleus in humans and monkeys is a laminar structure, composed of six pancake-like sheets of neurons stacked one on top of another. Each sheet receives a topographically organized set of projections from one of the two retinae. This topographic organization means that at a particular location in, for example, the second layer of the lateral geniculate, all the neurons receive inputs from a single fixed location in one of the two retinae. Because each location in a retina monitors a single location in visual space (like a single location on a photographic negative), each location in the geniculate is thus specialized to monitor a particular position in space.

It has also been shown that adjacent positions within any given geniculate layer receive projections from adjacent positions within the projecting retina. This adjacent topographic mapping means that each layer in the geniculate forms a complete and topographically organized map of the images that fall on the retinae. In this map, as in almost all structures made of nerve cells, information is encoded by the level of electrochemical activity of the individual cells that make up the map. Geniculate neurons that are highly activated by light falling on the portion of the retina they monitor respond by producing pulses of electrical activity, called action potentials, at a high rate. In these neurons it is essentially the rate of action potential generation that is used to encode properties like the contrast or brightness of a location in the visual world. This set of organizing principles means that each layer in the geniculate forms a complete and topographically organized screen on which are projected, as a pattern of action potentials, the visual images that fall on one of the retinae. Thus activation of a particular neuron at a particular location within the geniculate map indicates that a visual stimulus has appeared at the corresponding location in the visual world.

These geniculate maps project, in turn, to the primary visual cortex. Lying against the back of the skull, the primary visual cortex, also called area V1, is composed of several million neurons. These neurons form their own complex topographic map of the visual world, organized into patches of roughly 1 square millimeter. Each square millimeter of tissue is specialized to perform a common basic set of analyses on the light that falls on a specific region of the retina. Within these 1 mm by 1 mm chunks of cortex, individual neurons have been shown to be highly specialized in ways that allow many different analyses to proceed simultaneously. For example, some neurons in each patch become active, producing action potentials, whenever a vertically oriented boundary between light and dark falls on the region of the retina they monitor. Others are specialized for light-dark edges tilted to the right or to the left. Some respond to input exclusively from one retina, others respond equally well to inputs from either retina. Yet others respond preferentially to colored stimuli. Amongst neurophysiologists this complex pattern of sensitivities in area V1, its receptive field properties, is of tremendous conceptual importance. It suggests that information coming from the retina is sorted, analyzed and recoded before being passed on to visual areas that lie farther along in the processing stream.

The topographic, or retinotopic, map in area V1 projects to a host of other areas which also contain topographically mapped representations of the visual world. Areas with names like V2, V3, V4 and MT construct a maze of ascending and descending projections amongst what may be more than 30 mapped representations of the visual environment. Each of these maps appears to be specialized, extracting specific types of information from the visual image. One of these areas, for example, forms a topographic map that encodes the speed and direction at which visual images move across the retina. Others encode information about the presence of faces or objects. This network of maps is the neural hardware with which we perceive the visual world around us.

The most critical features of this brain organization are, first, that incoming sensory information is organized in a massively parallel topographic fashion and, second, that there seems to be an orderly progression of information from the peripheral receptors to the cerebral cortex, where some of the most complex analyses are performed. Fortunately, most of the other sensory systems follow a very similar organizational plan. The sense of touch, for example, involves the passage of signals originating in the skin to a topographically mapped nucleus in the thalamus. From there these signals pass to the topographically organized somatosensory area I of the cortex and from there to higher order somatosensory areas in the cortex. Our understanding of the visual system therefore serves as a guide for understanding how essentially all information about the outside world is gathered by the brain.

2.1.2. Motor systems

Within neurobiology, studies of movement control areas, usually referred to collectively as the components of the motor system, are segregated into two main divisions: those that regulate movements of the body, hands, feet and mouth (the skeletomuscular system) and those that move the eyes (the oculomotor system). As with the sensory systems, there seem to be strong parallels between the multiple motor systems of the brain, and, as in studies of the sensory systems, our core framework largely derives from studies of one system, in this case the oculomotor system. The oculomotor system has provided especially fertile ground for study because of the simplicity of the mechanics of the eyeball. While movements of the arm, for example, involve dozens of muscles and complex inertial moments, movements of each eye involve only 6 muscles and no detectable inertia. (For an introductory overview of the motor system see the motor chapters in Rosenzweig et al. (2002). For more detail see Squire et al. (2002).)

When an eye movement is produced, for example an orienting eye movement or saccade that rapidly shifts the point of gaze from one location to another in the outside world, the six muscles that control the position of each eye are activated by six groups of neurons that lie deep in the brainstem. These motor neurons are, in turn, controlled by two brainstem systems: one that regulates the horizontal position of the eye and one that regulates its vertical position. These two control centers receive inputs from the superior colliculus, which lies just beneath the thalamus, and the colliculus, in turn, receives its principal input from the frontal eye field of the cerebral cortex. Like the visual areas described above, the superior colliculus and the frontal eye field are constructed in topographic fashion. In this case, their constituent neurons form topographic maps of all possible eye movements. Imagine a photograph of a landscape. Now lay over it a transparent coordinate grid that shows the horizontal and vertical rotation of the eye that would be required to look directly at any point on the underlying photograph. Both the superior colliculus and the frontal eye fields contain maps very like these transparent coordinate grids. Activation of neurons at a particular location in the frontal eye field produces activation at a corresponding position in the superior colliculus, which in turn activates the brainstem areas that cause a saccade of a particular amplitude and direction to be executed. If this point of activation were to move across the cortical map of the frontal eye field, the amplitude and direction of the elicited movement would change in a lawful manner specified by the horizontal and vertical lines of the coordinate grid around which it is organized. The neurons of the superior colliculus and the frontal eye field can thus be viewed as topographically organized command arrays in which every neuron sits at a location in the map dictated by the direction and length of the saccade it produces.
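The transparent-grid analogy can be made concrete as a lookup from map position to saccade vector. This is a minimal sketch, assuming a uniform Cartesian grid with hypothetical dimensions (`n_rows`, `n_cols`) and spacing (`deg_per_site`); real collicular and frontal eye field maps are not uniformly spaced, so the function `saccade_from_map_site` is illustrative only.

```python
import math

def saccade_from_map_site(row, col, n_rows=21, n_cols=21, deg_per_site=2.0):
    """Saccade coded by the map site at (row, col) on a HYPOTHETICAL uniform grid.

    The centre site codes a zero-amplitude saccade. Returns
    (horizontal deg, vertical deg, amplitude deg, direction deg).
    """
    horizontal = (col - n_cols // 2) * deg_per_site   # positive = rightward rotation
    vertical = (n_rows // 2 - row) * deg_per_site     # positive = upward rotation
    amplitude = math.hypot(horizontal, vertical)      # length of the saccade
    direction = math.degrees(math.atan2(vertical, horizontal))  # 0 = rightward, 90 = upward
    return horizontal, vertical, amplitude, direction

# Sliding the active site across the map changes the movement lawfully.
for site in [(10, 10), (10, 15), (5, 10), (5, 15)]:
    print(site, saccade_from_map_site(*site))
```

Moving the active site one column to the right adds `deg_per_site` degrees of rightward rotation, the kind of lawful change in amplitude and direction the paragraph describes.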

Studies of the arm movement or verbal movement systems are at a comparatively early stage, but it seems fair to say that the basic features of these systems appear quite similar at this level of analysis. Again, a few critical features of the nervous system seem to emerge from this knowledge. First, outgoing signals are organized in a massively parallel topographic fashion. Second, there seems to be an orderly progression of information from higher areas like the cortex down to lower areas that ultimately control the movements of the muscles themselves.

2.2. Early studies of decision making

Over the course of the last 15 or 20 years a number of very influential studies, initially conducted in monkeys, have begun to examine the simplest possible connections between these sensory and motor architectures. As such, these studies constituted the first serious neural examination of decision making, albeit decision making of a very simple kind. Jeffrey Schall and his colleagues at Vanderbilt University conducted some of the first of these studies (cf. Hanes and Schall, 1996; Schall and Thompson, 1999), training thirsty rhesus monkeys to stare straight ahead at a centrally located spot of light presented on a video display (Fig. 2). Shortly after the monkey began staring straight ahead, eight secondary targets appeared, arranged radially around the central fixation stimulus. Seven of those targets appeared in a common color and one appeared in a different color, an oddball. If the animal looked at any of the 7 common-color targets, the play, or trial, ended immediately. If he looked at the oddball, he received a drop of fruit juice as a reward.

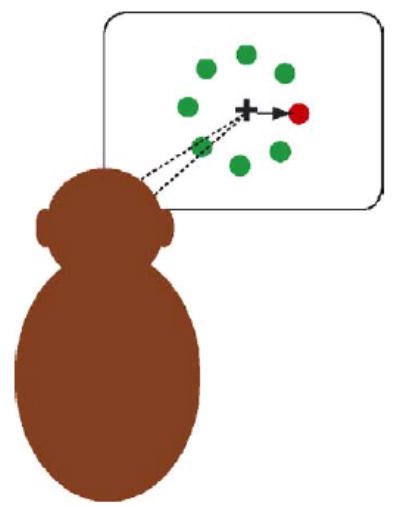

Fig. 2.

The Oddball Task. In the original Schall experiments thirsty monkeys were seated staring at a cross in the center of a blank display. Eight spots of light then illuminated, seven in one color and an eighth, the oddball, in a different color. The monkey had to decide where to look, and only if he looked at the oddball did he earn a fluid reward. While monkeys made these decisions Schall and his colleagues monitored the activity of single neurons in the frontal eye fields.

Under conditions like these, we know quite a lot about both the sensory and motor processes that must become active in the monkey’s brain. When the targets illuminate, we know that eight locations in the retinal, lateral geniculate and visual cortical maps become active, one for each of the eight visual targets. These signals propagate through the visual system towards saccadic eye movement control centers like the frontal eye fields and the superior colliculus. Only one of the 8 locations, however, represents the oddball and ultimately leads to activation of the eye movement control circuitry in those areas. So how is the translation, from 8 visual signals to one motor command, actually accomplished? To answer that question Schall and his colleagues studied the activity of single nerve cells in the saccadic movement maps of the frontal eye fields while monkeys performed this oddball detection task.¹

Schall found that the rate of action potential generation by neurons at each of the eight locations in the frontal eye field map rose to an early peak shortly after the 8 targets were illuminated, but that only after about 0.08 seconds was there evidence, in these neurons, of an underlying decision process in operation. At that point, neuronal firing rates continued to grow only in neurons at the one location encoding the oddball. After the level of activity at that location crossed an apparently fixed threshold value, the movement was produced. This led Schall to suggest the existence of a decisional threshold in each neuron in the topographic map of the frontal eye field, and raised the possibility that the topographic map was constructed in such a way that only one local cluster of neurons within the map could reach the decisional threshold at a time. The topographic map seemed to serve as an organizational framework for imposing something like a winner-take-all decision making strategy. Of course, a winner-take-all strategy is critical because one cannot usefully look in two directions at once.
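Schall's winner-take-all interpretation can be illustrated with a toy threshold race. The sketch below is an assumption-laden caricature, not a model from the studies cited: eight map locations share a non-selective early rise, and after a hypothetical 80 ms selection time only the oddball location keeps growing until it crosses a single fixed threshold. The function name, drive values, decay and threshold are all hypothetical.

```python
import numpy as np

def oddball_race(n_targets=8, oddball=3, threshold=100.0, t_select_ms=80.0,
                 early_drive=0.6, late_drive=1.0, decay=0.3,
                 noise_sd=0.0, seed=0, dt_ms=1.0, t_max_ms=500.0):
    """Toy race among eight frontal-eye-field map locations (all parameters hypothetical).

    Returns (index of the location that first crosses threshold, time in ms),
    or (None, t_max_ms) if no location crosses.
    """
    rng = np.random.default_rng(seed)
    rates = np.zeros(n_targets)                      # activity (spikes/s) at each location
    t = 0.0
    while t < t_max_ms:
        t += dt_ms
        if t <= t_select_ms:
            drive = np.full(n_targets, early_drive)  # early, non-selective rise everywhere
        else:
            drive = np.full(n_targets, -decay)       # non-oddball locations are suppressed...
            drive[oddball] = late_drive              # ...while the oddball location keeps growing
        noise = rng.normal(0.0, noise_sd, n_targets)
        rates = np.maximum(rates + drive * dt_ms + noise, 0.0)  # rates cannot go negative
        winner = int(np.argmax(rates))
        if rates[winner] >= threshold:               # one fixed threshold triggers the saccade
            return winner, t
    return None, t

print(oddball_race())
```

Only one location can reach threshold first, so the read-out is winner-take-all by construction; with large `noise_sd` a non-oddball location can in principle win, one way such a scheme could produce errors.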

Where do the sensory signals that trigger the threshold activations of these neurons originate and how, if at all, are these signals related to the more complex decisions that are the subject of economic study? William Newsome and his colleagues at Stanford University provided a critical set of data for answering that question (cf. Parker and Newsome, 1998). They were initially interested in understanding how the brain generates the perception of motion, so they began by training rhesus monkeys to watch a visual display that humans see as moving in an ambiguous fashion. They then asked their monkey subjects to report the direction in which the display appeared most likely to be moving. In these experiments the monkeys looked through a circular window at a cloud of white dots that appeared to move against a black background for two seconds. Critically, whenever the dots appeared, not all of them moved in the same direction. During any given two-second display, many of the individual dots were moving in different, randomly selected, directions. Only a small fraction of the dots actually moved in a coordinated direction, and it was this coordinated direction of movement that the monkeys were trained to detect.

Newsome hypothesized that activity in one of the cortical visual areas might be both necessary and sufficient for the perceptual experience we have when we see an object move; activity in that area might be the physical instantiation of the subjective experience of seeing motion. Quite a bit was also known about the activity of individual neurons in this topographic map, the map in cortical area MT. Each MT neuron was known to become active whenever a visual stimulus moved in a particular direction across the portion of the visual world scrutinized by that cell’s location in the MT topographic map. Each neuron thus had an idiosyncratic preferred direction. Because different neurons prefer motion in different directions, and because many neurons work together to encode motion at each location in the visual world, the population of neurons in area MT could, in principle, discriminate motion in all possible directions at all visible locations.

In a series of experiments Newsome and his colleagues (Newsome et al., 1989; Salzman et al., 1990) were able to demonstrate that area MT forms a topographic map of the visual world in which the strength of motion in the visual world is encoded by the rate at which each neuron in the map fires action potentials. In principle this map thus provides, in Newsome’s task, instantaneous and independent estimates of the strength and direction of motion at all locations in the visual world. If, for example, the animals were rewarded for correctly determining whether the spots they were watching tended to drift rightwards or leftwards, the activity in area MT encodes the information used by the animals to perform the task.

In a series of subsequent experiments and simulations Newsome and his colleague Michael Shadlen (cf. Shadlen et al., 1996; Shadlen and Newsome, 2001) sought to extend these observations by trying to determine how the signal originating in area MT was actually analyzed and used by the animal to produce eye movements that would consistently yield juice rewards in this environment, presumably by triggering the appropriate eye movement in the motor map of the frontal eye fields. It was known that neurons in area MT are functionally connected to maps in the posterior parietal cortex (Fig. 1), which are themselves connected to the maps in the frontal eye fields. This led Shadlen to propose that while the monkeys stared at the moving dot display, neurons in the posterior parietal cortex mathematically integrated the output of neurons in area MT with respect to time, yielding a new topographical map that encoded a time-averaged estimate of motion direction at each location in the visual world. Critically, it was this time-averaged estimate that should have served as the decision variable in the task their monkeys had been taught; the precise conditions of that task defined this as the optimal strategy for analyzing the visual motion. In other words, they proposed that the map in area MT made topographic connections to the posterior parietal cortex, which extracted a decision variable from the MT activity and passed this decision variable, presumably topographically, to the frontal eye fields. In their model, which was developed formally (Shadlen et al., 1996), the neurons of the posterior parietal cortex thus served as topographically organized accumulators that could be used to trigger topographically aligned neurons in the frontal eye field map, thus generating the saccade most likely to be reinforced.
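The proposed accumulator can be sketched as a bounded random walk. This is a minimal sketch under stated assumptions, not Shadlen and colleagues' formal model: the momentary evidence is taken to be the difference between rightward- and leftward-preferring MT pools, with a mean proportional to motion coherence plus Gaussian noise, and the parameters `k`, `sigma` and `bound` are hypothetical. Time steps are in milliseconds and the walk is capped at the two-second display.

```python
import numpy as np

def lip_accumulator(coherence, k=0.4, sigma=1.0, bound=20.0,
                    dt_ms=1.0, t_max_ms=2000.0, rng=None):
    """Integrate momentary MT evidence until a bound is reached (hypothetical parameters).

    Returns (+1 for a rightward choice, -1 for leftward, decision time in ms).
    """
    rng = np.random.default_rng() if rng is None else rng
    x, t = 0.0, 0.0
    while t < t_max_ms:
        # momentary evidence: (rightward pool - leftward pool), mean proportional to coherence
        x += k * coherence * dt_ms + sigma * np.sqrt(dt_ms) * rng.standard_normal()
        t += dt_ms
        if abs(x) >= bound:                    # accumulator hits a decision bound
            return (1 if x > 0 else -1), t
    return (1 if x >= 0 else -1), t            # display over: choose by sign of the integral

rng = np.random.default_rng(0)
for coh in (0.0, 0.1, 0.5):
    choices = [lip_accumulator(coh, rng=rng)[0] for _ in range(200)]
    print(coh, np.mean(np.array(choices) == 1))
```

With stronger coherence the walk reaches the bound sooner and more reliably; at zero coherence choices fall to chance.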

In a series of elegant experiments Shadlen and his colleagues went on to test this hypothesis and were able to verify the accuracy of many of their predictions. They were even able to demonstrate that the activity of neurons at each location in one of the areas of the posterior parietal cortex, area LIP, was tightly correlated with the log of the likelihood that the eye movements encoded at that location would yield a reward (Gold and Shadlen, 2001).
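Why an accumulator might track a log likelihood can be seen in the simplest case. Assuming, as an illustration rather than as the cited analysis, that each momentary evidence sample is Gaussian with mean +μ for rightward and −μ for leftward motion and a shared standard deviation σ, the per-sample log likelihood ratio is 2μx/σ², so a plain running sum of evidence is a scaled log likelihood ratio. The sketch checks that identity numerically; the function names and sample values are hypothetical.

```python
import math

def gaussian_logpdf(x, mu, sigma):
    """Log density of a normal distribution N(mu, sigma**2) at x."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)

def llr_right_vs_left(samples, mu, sigma):
    """Summed log likelihood ratio, log p(x | rightward) - log p(x | leftward)."""
    return sum(gaussian_logpdf(x, mu, sigma) - gaussian_logpdf(x, -mu, sigma)
               for x in samples)

def scaled_accumulator(samples, mu, sigma):
    """The same quantity computed as a scaled running sum of the raw evidence."""
    return (2 * mu / sigma ** 2) * sum(samples)

evidence = [0.3, -0.1, 0.5, 1.2]   # hypothetical momentary evidence samples
print(llr_right_vs_left(evidence, 0.2, 1.0), scaled_accumulator(evidence, 0.2, 1.0))
```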

2.3. Summary

These results suggest that the simplest kind of connection between sensation and action can be described as a process by which topographic parallel representations of signals from the outside world are used to trigger behavioral responses in topographically organized output maps, perhaps through the intermediate representation of a simple decision variable (see Glimcher (2003a) for a more in-depth survey of this work). This much is uncontroversial. Also uncontroversial is that these sensory-motor connections do not constitute decision making in the economic sense. Neoclassical variables like value and expected utility, which are central to formal rational decision making, do not occur in a very clear fashion during these experiments. One possibility that this raises is that these are precisely the kinds of crude and primitive processes that are responsible for economically irrational behavior. Rational choice models may break down, or be bounded, because mechanisms like these “take over.” But there is an alternative hypothesis. These mechanisms may be much more complicated than they appear from the experiments that have already been presented. Indeed, these experiments may reveal only the tip of the neurobiological iceberg. Distinguishing between these two hypotheses is, fortunately, an empirical problem. We can begin to ask whether these circuits and this general model can account for more complicated classes of decision making by examining these same neurons under conditions that more closely approximate the kinds of rational choice that are of interest to economists.

3. Economic studies of decision making in the brain

Ever since Pascal, economic analysis has focused on two variables which play an important role in rational choice: the likelihood of realizing a gain or loss and the magnitude of that gain or loss. Certainly other variables are important determinants of behavior, for example the time at which the gains will be realized, but magnitude and likelihood almost always influence rational choice. Do these variables influence the neural circuits we have already described? One of the first experiments to address that question systematically was conducted in 1999 by Platt and Glimcher. In that series of studies we asked whether the neurons in area LIP which Shadlen and his colleagues had examined might form a topographical map of either the magnitude or likelihood of gain associated with particular eye movements. Shadlen’s results had strongly suggested that the neurons in area LIP connected stimulus and response during simple perceptual decision making, and that the rate of action potential generation in these neurons might encode some kind of decision variable. We hoped to verify this hypothesis by constructing an experiment in which monkeys could make one of two possible movements while we systematically varied either the likelihood or magnitude of gain associated with each movement. This would allow us to determine whether the neurons in area LIP carried signals that might be useful for real economic decision making.

In that experiment, thirsty monkeys were trained to stare straight ahead at a central visual stimulus (Fig. 3) while two eye movement targets, which served the same role as response buttons serve in a typical economic task, were illuminated. At the end of each trial, or play, monkeys would have to choose whether to look at the left target, the right target, or to do nothing. Immediately before they had to make that decision, however, the color of the fixation light would change, identifying one of the two targets as valueless on that particular trial. The critical manipulation was that on sequential blocks of 100 trials the amount of juice that the monkeys would earn for each of the leftwards and rightwards movements was systematically manipulated. Finally, while the monkeys made decisions under these varying conditions, the activity of single neurons in area LIP was recorded.² Each neuron was examined while 5–7 different reward magnitude conditions were presented.

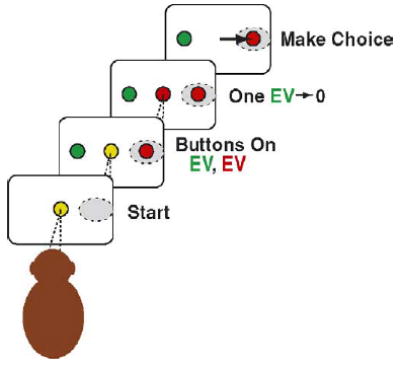

Fig. 3.

Platt and Glimcher’s Two-Choice Cued Lottery. Once again thirsty monkeys are seated staring at the center of a display. Red and green response targets, acting as buttons, then appear. At this point in the play the red and green responses have widely divergent expected values which the monkey is expected to have learned. After an imposed delay the central spot changes color identifying one of the targets as the rational choice and the other target as valueless on this trial. If the monkey looks at the target that is the same color as the central target he earns the appropriate reward.

At a theoretical level, these subjects faced an exceedingly simple task. At the end of each play the color of the fixation light indicated which movement had both the highest expected value and the highest expected utility, and a rational chooser would be expected to produce that movement. We recognized, however, that expected utilities could be computed for each response early in each play, before the color of the fixation light simplified the task. Consider a block of 100 plays during which a leftward movement would yield 0.1 ml of juice and a rightward movement would yield 0.3 ml of juice. At the beginning of each play there was a 50% chance that a leftward movement would be identified as reinforced and, similarly, a 50% chance that a rightward movement would be reinforced. At that time the expected values of the two movements would be 0.05 ml and 0.15 ml of juice, respectively. Then, once the fixation light changed color, identifying, for example, the leftward movement as rewarded, the expected values changed: from that point in the play the expected value of the leftward movement was 0.1 ml and the expected value of the rightward movement was 0 ml. We hoped to determine whether these early estimates of expected value were related to the activity of neurons in area LIP.
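The arithmetic of this example can be written out directly. A minimal sketch: the helper `expected_values` and its arguments are hypothetical, and expected value stands in for expected utility (that is, linear utility is assumed).

```python
def expected_values(reward_left_ml, reward_right_ml, p_left=0.5, cue=None):
    """Expected value (ml of juice) of the leftward and rightward movements.

    cue=None    : before the fixation light changes colour; each movement is
                  reinforced with its prior probability (p_left vs 1 - p_left).
    cue='left'  : after the cue, only the leftward movement can be rewarded.
    cue='right' : after the cue, only the rightward movement can be rewarded.
    """
    if cue is None:
        return p_left * reward_left_ml, (1 - p_left) * reward_right_ml
    if cue == "left":
        return reward_left_ml, 0.0
    if cue == "right":
        return 0.0, reward_right_ml
    raise ValueError("cue must be None, 'left' or 'right'")

early = expected_values(0.1, 0.3)              # start of the play
late = expected_values(0.1, 0.3, cue="left")   # after the cue picks the leftward movement
print(early, late)
```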

What we found was that the activity of LIP neurons was a surprisingly, though not precisely, linear function of these values (Fig. 4). Specifically, we found that both early and late in a play the firing rate for neurons associated with the leftward movement encoded:

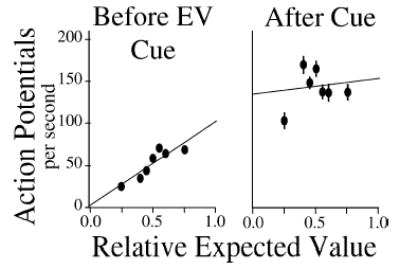

Fig. 4.

The firing rate of a neuron in area LIP is correlated with relative expected value. Early in the play, when the expected value of each of the two possible movements is non-zero, firing rate is a roughly linear function of [Reward1 ÷ (Reward1 + Reward2)]. After the cue indicates that the value of Reward2 = 0, firing rate elevates. These results, and others described in the text, suggested that firing rates in area LIP may encode either the relative expected value or the relative expected utility of movements.

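$$\text{Relative expected value}_{\text{left}} = \frac{EV_{\text{left}}}{EV_{\text{left}} + EV_{\text{right}}} \qquad (1)$$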

Early in each play the firing rates of all LIP neurons were correlated with the relative expected value of their movements with regard to other possible movements. Late in the play, after the relative expected values of all but one movement had been reduced to 0, the neurons encoding the reinforced movement rose to a fixed firing rate near the maximum for these neurons.

What this suggested was that something very close to an economic choice variable was indeed being carried by the firing rates of these neurons. We next had the monkeys perform the same task in a way that would let us determine whether the likelihood of a gain influenced LIP firing rates. To do that, we held the magnitude of the juice reward constant for both leftwards and rightwards movements across a series of blocks and varied the likelihood that at the end of each play the left or right movements would be reinforced. Thus on a block of trials in which both movements yielded 0.15 ml of juice but there was a 0.8 probability that the right movement would be reinforced and a 0.2 probability that the left movement would be reinforced, the expected values of the two movements early in each play would be 0.12 and 0.03 ml, respectively. Under these conditions the early firing rates of the neurons were again a roughly linear function of these values. Specifically, the firing rate of a neuron associated with the leftward movement was a linear function of the probability that the leftward movement would yield the juice reward. Together, these results suggested an interesting possibility: that the topographic map in area LIP encodes something like the relative expected value, or perhaps even the relative expected utility, of each possible eye movement under the conditions we had been studying.
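Both manipulations can be expressed through the same relative-expected-value quantity plotted in Fig. 4. The sketch below computes it for the magnitude block described earlier (0.1 ml vs 0.3 ml, each cued with probability 0.5) and for the probability block above; the linear read-out `predicted_rate`, with its baseline and gain, is a hypothetical illustration rather than a fitted model.

```python
def relative_ev(ev_self, ev_other):
    """Relative expected value of one movement, EV_self / (EV_self + EV_other)."""
    total = ev_self + ev_other
    return ev_self / total if total > 0 else 0.0

def predicted_rate(rel_ev, baseline_hz=10.0, gain_hz=40.0):
    """HYPOTHETICAL linear read-out; baseline and gain are illustrative, not fitted."""
    return baseline_hz + gain_hz * rel_ev

# Magnitude block: 0.1 ml (left) vs 0.3 ml (right), each cued with probability 0.5
mag_left = relative_ev(0.5 * 0.1, 0.5 * 0.3)
# Probability block: 0.15 ml on both sides, left cued with p = 0.2, right with p = 0.8
prob_left = relative_ev(0.2 * 0.15, 0.8 * 0.15)
# After the cue, the cued movement is the only one with non-zero value
cued = relative_ev(0.1, 0.0)
print(mag_left, prob_left, cued, predicted_rate(cued))
```

After the cue the cued movement's relative value becomes 1, which is consistent with the rise to near-maximal firing late in the play.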

If these neurons encoded relative expected utility under these simple conditions, what happens when behavior deviates from prescriptive economic theory, that is, when choice is only weakly related to expected value? Does some other, less rational system gain control of decision making while these neurons continue to encode prescriptive economic variables? The neurons in this area had been originally identified as a link in a very simple sensory-motor behavior, the kind of brain system that might have been expected to account for the bounds of rationality. Instead, in this experiment we had gathered evidence that these neurons might carry a signal that could be predicted by prescriptive theory. What exactly do neurons in area LIP encode and how are they related to rational and irrational choice? To answer this question we next turned to an experiment more like those employed by experimental economists.

3.1. Game theory and parietal maps

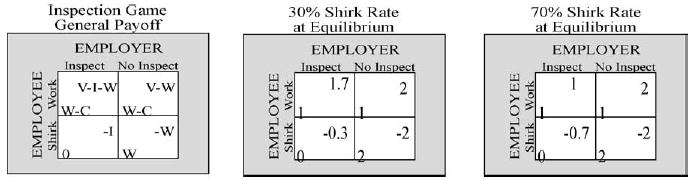

Our first goal in this next set of experiments (Dorris and Glimcher, 2004) was to develop a behavioral task which engaged humans in voluntary decision making and which could also be employed in a neurophysiological setting with monkeys. To this end, we had both human and animal subjects play the role of the employee in the classic inspection game (cf. Kreps, 1990). The general form of the 2 × 2 payoff matrix for this game is shown in Fig. 5. We selected the inspection game because the payoff matrix can be easily adjusted to yield any mixed strategy equilibrium. In our version of the game we accomplished this exclusively by varying the cost of inspection to the employer (Fig. 5, left panel, variable I), such that at equilibrium the probability of shirking for the employee ranged from 10 to 90% in randomly ordered, sequentially presented blocks of trials.

Fig. 5.

The Inspection Game. Left panel shows the game in normal form. W = wage earned by the employee. C = cost of working for employee, V = value of work to employer, I = cost of inspection to employer. Right panels show representative payoffs yielding mixed strategy Nash equilibria when a linear utility function is assumed.

Rational decision-makers should choose the option with the highest expected utility on each play. If both subjects act rationally, then a mixed strategy equilibrium will be reached when, for each player, the expected utilities of that player's two choices are equal.

Thus at Nash equilibrium for the employee:

$$EU(S) = EU(W) \qquad (2)$$

where EU(S) is the expected utility for choosing to shirk, EU(W) is the expected utility for choosing to work. If p(I) is the probability of the employer inspecting and 1 − p(I) is the probability of the employer not inspecting when at equilibrium (and we assume, for an initial analysis, that utility can be approximated as a linear function), W is the wage paid by the employer to the employee, and C is the cost of work to the employee then the payoff matrix (Fig. 5) expands to

$$p(I)\cdot 0 + \bigl(1 - p(I)\bigr)\,W = W - C \qquad (3)$$

solving for p(I):

$$p(I) = \frac{C}{W} \qquad (4)$$

Similarly for the subject acting as the employer, at Nash equilibrium (again assuming for the initial analysis a linear utility function) the expected utility for inspecting is equal to the expected utility for not inspecting. Solving for p(S):

$$p(S) = \frac{I}{W} \qquad (5)$$

where p(S) is the probability of the employee shirking when at equilibrium.

Because the employee payoffs remained the same for all blocks of trials, p(I) for the employer should remain constant at 50% at all equilibria. Between blocks, p(S) for the employee varied from 10 to 90% in 20% steps and was manipulated by varying the employer’s cost of inspection from 0.1 to 0.9 in steps of 0.2 (see Eq. (5)).
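The equilibrium conditions can be checked numerically. Because Fig. 5 is not reproduced here, this sketch assumes the standard inspection-game payoffs: a working employee earns W − C; a shirking employee earns W if not inspected and 0 if inspected; the employer earns V − W (minus I if inspecting) when the employee works, and −W or −I when the employee shirks and is not inspected or inspected. The values W = 1, C = 0.5 and V = 2 are hypothetical, chosen so that p(I) = 0.5 and the five inspection costs give the 10 to 90% shirk rates described above.

```python
def employee_eu(p_inspect, W, C):
    """(EU(shirk), EU(work)) for the employee, assuming linear utility."""
    eu_shirk = p_inspect * 0.0 + (1 - p_inspect) * W   # caught: 0; not caught: full wage
    eu_work = W - C                                     # wage minus cost of working
    return eu_shirk, eu_work

def employer_eu(p_shirk, W, V, I):
    """(EU(inspect), EU(no inspect)) for the employer, assuming linear utility."""
    eu_inspect = p_shirk * (-I) + (1 - p_shirk) * (V - W - I)
    eu_no_inspect = p_shirk * (-W) + (1 - p_shirk) * (V - W)
    return eu_inspect, eu_no_inspect

def mixed_equilibrium(W, C, I):
    """Equilibrium (p(I), p(S)) = (C / W, I / W) under the assumed payoffs."""
    return C / W, I / W

W, C, V = 1.0, 0.5, 2.0          # HYPOTHETICAL payoffs; C / W = 0.5 fixes p(I) at 50%
for I in (0.1, 0.3, 0.5, 0.7, 0.9):
    p_i, p_s = mixed_equilibrium(W, C, I)
    print(f"I = {I:.1f}: p(I) = {p_i:.2f}, p(S) = {p_s:.2f}")
```

At each equilibrium both players are indifferent between their two options, which is exactly the condition of Eq. (2) and its employer counterpart.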

3.1.1. Human vs. human

In the first set of experiments, pairs of human subjects were placed in separate rooms and played a repeated version of the inspection game. They were not told the nature of their opponent, which in this case was another human but could also have been a dynamic computer algorithm (see below). All subjects were naive to the nature of the payoff matrix and the game and were simply instructed to “make as much money as possible.” On each trial the employee used a mouse to choose one of two unlabeled buttons on a computer screen that corresponded to either working or shirking. After each play the payoff was presented on the screen along with a cumulative total of earnings over the last 10 trials. The first block of 50 trials was a practice session at a 50% shirking rate Nash equilibrium. Afterwards, 5 separate unsignaled Nash equilibrium blocks of 150 trials each were played in a randomized order over the course of two 1.5 h sessions.³ A total of 8 subjects were tested. At the end of each session, subjects were paid their cumulative earnings, which were typically about $35 US.

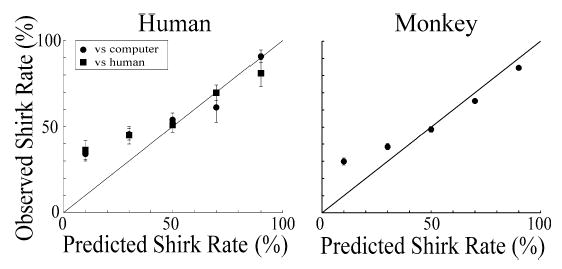

The equilibrium equations presented above gave us a crude prescriptive theory of what subjects would do, and their actual behavior provided descriptive data. Our task as neurophysiologists would be to determine which, if either, of these two approaches predicted the activity of neurons in area LIP. To begin to do that we compared the observed rates of “working” and “shirking” with the rates predicted at equilibrium, once again assuming linear utility over the range of values we examined.

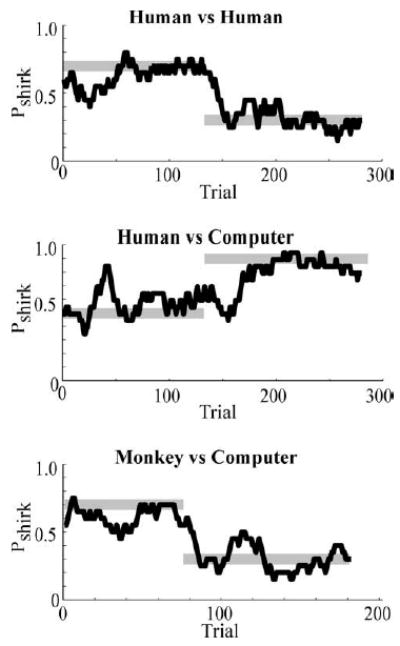

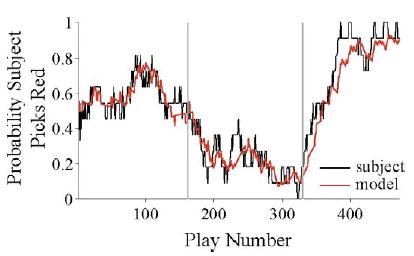

Figure 6 shows a 20-trial running average of the behavior of a human employee playing a human employer during two sequentially presented blocks. Although both players were free to choose either of two actions on every trial, we found that the overall behavior of our human subjects was surprisingly well predicted by game theory given our simple utility assumption. The gray lines show the unique Nash equilibrium solution for each block. Note that the employee quickly reached, and then fluctuated unpredictably around, these prescriptively defined equilibrium shirk rates.

Fig. 6.

Dynamic behavior of humans and monkeys playing the inspection game. Black lines plot a 20-play running average of the employee’s behavior during two equilibrium blocks. Grey lines plot Nash equilibrium solutions during both blocks assuming a linear utility curve.

To examine equilibrium behavior in greater detail we quantified, for each subject, the probability of shirking during the last half of each block. We then plotted this shirk rate against the predicted equilibrium rate (Fig. 7, Eq. (5)). We found that the responses of humans closely tracked these prescriptively defined shirk rates at behavioral equilibrium for rates above 40%. When, however, our prescriptive theory predicted rates below about 40%, we observed that subjects shirked more than predicted. There may be a temptation to conclude that this deviation reflects a non-linearity in the true underlying utility function. Were the underlying utility function for money significantly concave over the range of our measurements, as might be expected, then shirk rates would have decreased rather than increased at these lower points. A more plausible explanation is that this deviation reflects either some sort of sampling strategy that maximizes the ability of players to detect block switches, or an irrationality. In either case, what we had obtained were behavioral measures during voluntary choice, and at least some of these measures were well predicted by our prescriptive theory.

Fig. 7.

Plot of equilibrium behavior for humans and monkeys. During the last half of each block of plays we computed the average rates of shirking (±S.E.M.) for our three groups during a total of 5 equilibrium conditions.
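The equilibrium measure plotted in Fig. 7 (the shirk rate over the last half of each block, averaged across subjects with ±S.E.M.) can be sketched as below. The data layout (one list of 0/1 choices per subject for a given block) and the function names are assumptions for illustration; the S.E.M. is taken as the sample standard deviation divided by √n.

```python
import math
from statistics import mean, stdev


def last_half_shirk_rate(block_choices):
    """Fraction of shirk choices (1 = shirk, 0 = work) in the second half of a block."""
    if len(block_choices) < 2:
        raise ValueError("block must contain at least two plays")
    second_half = block_choices[len(block_choices) // 2:]
    return sum(second_half) / len(second_half)


def mean_and_sem(values):
    """Mean and standard error of the mean (sample SD / sqrt(n))."""
    if len(values) < 2:
        raise ValueError("need at least two values to estimate an S.E.M.")
    return mean(values), stdev(values) / math.sqrt(len(values))


if __name__ == "__main__":
    # Hypothetical block: three subjects, 8 plays each (real blocks were far longer).
    subjects = [
        [0, 1, 0, 1, 1, 0, 1, 0],
        [1, 1, 0, 0, 0, 1, 1, 1],
        [0, 0, 1, 0, 1, 1, 0, 1],
    ]
    rates = [last_half_shirk_rate(s) for s in subjects]
    m, sem = mean_and_sem(rates)
    print(f"shirk rate = {m:.2f} ± {sem:.2f} (S.E.M.)")
```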

The implications of the measurements that our prescriptive theory predicted poorly were, however, uncertain. As with almost all experimental economic data, such a deviation indicated either an inadequacy in our prescriptive theory or a frank irrationality in our subjects. As physiologists, however, our goal was to engage this deviation from our prescriptive theory using a neurobiological approach.

3.1.2. Human vs. computer

A second set of experiments was then conducted, identical to the human-versus-human experiment except that the role of the employer was played by a standardized computer algorithm (see http://www.cns.nyu.edu/glimcher/inspection_game for MATLAB code of the complete algorithm). This was critical because the methodological constraints of single-neuron recording experiments essentially precluded the use of a real opponent for the monkeys. Briefly, the computer algorithm worked by tracking two variables of the employee’s behavior:

the history of the employee’s choices, which gave an estimate of the overall probability that the employee would shirk (p(S));

the employee’s repetition rate (rep_actual), that is, how often the subject repeated the response of the previous play.

We calculated the expected repetition rate (rep_expected) for a given proportion of shirking, assuming that the response on each play was generated by a random process independent of previous choices:

$$\mathrm{rep}_{\mathrm{expected}} = p(S)^2 + \bigl(1 - p(S)\bigr)^2 \tag{6}$$

The difference between rep_actual and rep_expected was then used to bias the computer’s estimate of p(S) for the upcoming play:

| (7) |

in which λ was set to 0.1.

The variable p(S)_corrected represents an estimate of the probability that the employee will shirk on the current play, given his past proportion of shirking, and allows the algorithm to exploit any dependence of upcoming behavior on the action taken during the previous play. The variable p(S)_corrected was substituted for p(S) when calculating the relative expected utilities of inspecting and not inspecting (for the computer employer) on the upcoming play, and these in turn guided the computer’s choice. In addition, an exploration bonus was added that gradually increased the longer the algorithm continued to produce the same response. This was necessary so that the computer employer did not maintain a fixed p(I) (and thus a fixed expected utility calculation) after every work trial, but instead continued to search for a maximally efficient behavior throughout the changing conditions of the experiment.
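A minimal sketch of the employer’s estimate of p(S) is given below. The expected repetition rate follows directly from the independence assumption stated above: two independent plays repeat when both are shirks or both are works. The form of the correction used here is an assumption made for illustration: λ(rep_actual − rep_expected) is added to p(S) when the previous play was a shirk and subtracted when it was a work. Estimating p(S) and rep_actual from the full, equally weighted choice history is likewise assumed. The exploration bonus is omitted, and the function names are hypothetical; the authoritative version is the MATLAB code referenced above.

```python
def expected_repetition_rate(p_shirk):
    """Chance that two independent plays repeat: p^2 + (1 - p)^2."""
    return p_shirk ** 2 + (1 - p_shirk) ** 2


def corrected_shirk_estimate(history, lam=0.1):
    """Estimate p(S)_corrected from a 0/1 choice history (1 = shirk, 0 = work).

    ASSUMPTIONS (not taken from the paper): the whole history is weighted
    equally, and the repetition correction is signed by the previous choice.
    """
    if len(history) < 2:
        raise ValueError("need at least two plays to measure repetition")
    p_shirk = sum(history) / len(history)
    repeats = sum(1 for prev, cur in zip(history, history[1:]) if prev == cur)
    rep_actual = repeats / (len(history) - 1)
    rep_expected = expected_repetition_rate(p_shirk)
    # Excess repetition pushes the estimate toward repeating the last choice;
    # a deficit (over-alternation) pushes it toward switching.
    delta = lam * (rep_actual - rep_expected)
    corrected = p_shirk + delta if history[-1] == 1 else p_shirk - delta
    return min(1.0, max(0.0, corrected))  # keep it a valid probability
```

For example, an employee who strictly alternates and has just worked (`[1, 0, 1, 0]`) has p(S) = 0.5 but a corrected estimate of about 0.55, so under this assumed form the algorithm anticipates a shirk on the next play.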

Of course, the computer employer would be deterministic if it always chose the option with the highest expected utility on every trial. A human or monkey subject with a sufficiently precise play-by-play estimate of p(S) could then accurately predict the actions of the algorithm. To make the algorithm’s actions stochastic, we employed a decision rule that converted relative expected utility into a response probability. When inspecting and not inspecting had equal values, the decision rule selected the inspect and no-inspect options randomly with equal likelihood. As the expected utility of one response increased, the probability that the more valuable response would be selected increased gradually.
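The stochastic decision rule can be sketched with a logistic (two-option softmax) function, which has both properties described above: equal expected utilities give a 50/50 choice, and the more valuable option becomes gradually more likely as its advantage grows. The logistic form and its `temperature` parameter are assumptions for illustration. So is the payoff matrix in `employer_expected_utilities`, with hypothetical parameters wage, inspect_cost, and value. None of these are the paper’s published parameters; the paper’s own algorithm is in the MATLAB code referenced above.

```python
import math
import random


def employer_expected_utilities(p_shirk, wage, inspect_cost, value):
    """Expected payoffs of (inspect, no inspect) for the employer.

    ASSUMED payoff matrix (a textbook inspection game, hypothetical here):
    employee works  -> inspect: value - wage - inspect_cost, no inspect: value - wage
    employee shirks -> inspect: -inspect_cost,               no inspect: -wage
    """
    eu_inspect = (1 - p_shirk) * (value - wage - inspect_cost) - p_shirk * inspect_cost
    eu_no_inspect = (1 - p_shirk) * (value - wage) - p_shirk * wage
    return eu_inspect, eu_no_inspect


def logistic(x):
    """Numerically stable 1 / (1 + exp(-x))."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def inspect_probability(eu_inspect, eu_no_inspect, temperature=1.0):
    """0.5 when both options are equally valuable; rises smoothly as inspecting gains."""
    return logistic((eu_inspect - eu_no_inspect) / temperature)


def choose_action(eu_inspect, eu_no_inspect, temperature=1.0, rng=random):
    """Sample 'inspect' or 'no_inspect' from the logistic choice probability."""
    p = inspect_probability(eu_inspect, eu_no_inspect, temperature)
    return "inspect" if rng.random() < p else "no_inspect"
```

Under this assumed matrix, the employer’s advantage for inspecting is p_shirk × wage − inspect_cost, so supplying a shirk estimate equal to inspect_cost / wage makes the two expected utilities equal and the rule returns 0.5, the indifference point at which a mixed equilibrium sits. In the full algorithm, p(S)_corrected would be passed as `p_shirk`.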

Eight additional human subjects played this version of the inspection game, completing five 150-trial blocks over 2 sessions. As in the first experiment, they were not aware of the nature of their opponent and were simply instructed to “make as much money as possible.” Blocks of plays were presented exactly as before, and subjects were paid their cumulative earnings, which were again about $35 US.

Figure 6 shows a 20-trial running average of the dynamic behavior of a human employee playing the inspection game against the computer employer during a typical session. The gray lines show the unique Nash equilibrium solutions. Just as when playing a human employer, our human subjects quickly reached, and then fluctuated around, the shirk rates associated with the two sequentially presented Nash equilibrium states studied in this session.

During the last half of each block, once subjects had reached a stable state, we again determined the average shirk rate and plotted this against the equilibrium shirk rate prescribed by a linear utility function (Fig. 7, Eq. (4)). As was the case in the preceding experiment, these human subjects tended to deviate from the prescribed solution by over-shirking when shirk rates of 10 or 30% were predicted at equilibrium (p < 0.05, t-test against zero assuming unequal variance). Our standardized computer opponent thus elicited behavior from our employees that was statistically indistinguishable from their behavior when playing against human employers (two-way ANOVA, F = 0.22, p > 0.05, d.f. = 1).

3.1.3. Monkey vs. computer

We then trained monkeys to play a version of the inspection game against our computer employer and assessed whether their behavior was comparable to that of humans. In the monkey experiments, thirsty animals competed for a water reward delivered after each play and indicated their choice on each play with a saccadic eye movement directed to one of two eccentric visual targets. Each play began with the illumination of a centrally located yellow fixation target. Once the subject was looking at this target, two eccentric targets were illuminated: a red shirk target, positioned so that the neuron under study was active when the monkey picked that target, and a green work target that appeared opposite the red target. Halfway through each play the fixation point blinked, and when it reappeared yellow the subject had 0.75 seconds to select and execute a response.

As Fig. 6 indicates, the dynamic behavior of monkeys playing this game appeared remarkably similar to the behavior of humans. At the beginning of each block, monkeys, just like humans, quickly reached and then fluctuated unpredictably around the shirk rates associated with the Nash equilibrium states. When we examined the equilibrium behavior of the monkeys, we found that it too appeared to be very similar to the equilibrium behavior of humans, even deviating from the prescriptive predictions in a similar manner. As Fig. 7 indicates, the monkeys, like humans, tracked the Nash equilibrium solutions during the inspection game (again assuming linear utility) and deviated from those solutions when shirk rates of 30% or less were prescribed (p < 0.01). Two monkeys were studied while they played 8 sets of 100–200-trial blocks. Although the behavior of monkeys and humans was statistically distinguishable during the 70 and 90% Nash equilibrium blocks, monkeys appeared to provide a surprisingly accurate model of humans overall.

3.1.4. The physiological basis of strategic decision making

Having thus established that humans and monkeys play this strategic game in a very similar fashion, both when their behavior is predicted by the equilibrium equations we were using and when it is not, we were able to move on to our neurophysiological question: How is LIP activity related to choice during strategic decision making? One of Nash’s (1951) fundamental insights was that at a mixed-strategy equilibrium the desirabilities of the actions being mixed must be equal. This means that during the inspection game the expected utilities of working and shirking must be equal at equilibrium.
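Nash’s indifference condition can be turned directly into a calculation. In a 2 × 2 game, the employee’s equilibrium shirk rate is the one that leaves the employer indifferent between inspecting and not inspecting, and the employer’s inspection rate is the one that leaves the employee indifferent between working and shirking. The sketch below solves both conditions for arbitrary payoffs taken at face value (linear utility). The example uses a textbook inspection game with wage W, cost of working C, inspection cost I, and value of work V. These names and numbers are illustrative assumptions, not necessarily the parameterization behind Eq. (4). Under that matrix the solution reduces to p(shirk) = I/W and p(inspect) = C/W.

```python
def mixed_nash_2x2(employee, employer):
    """Interior mixed-strategy equilibrium of a 2 x 2 game by indifference.

    Both payoff tables are indexed [employee_action][employer_action], with
    employee actions 0 = work, 1 = shirk and employer actions 0 = inspect,
    1 = no inspect. Returns (p_shirk, p_inspect).
    """
    # Shirk rate q equalizes the EMPLOYER's expected payoffs:
    # (1 - q) * a + q * c == (1 - q) * b + q * d
    a, b = employer[0]
    c, d = employer[1]
    denom_q = (a - b) + (d - c)
    # Inspect rate p equalizes the EMPLOYEE's expected payoffs:
    # p * e + (1 - p) * f == p * g + (1 - p) * h
    e, f = employee[0]
    g, h = employee[1]
    denom_p = (e - g) + (h - f)
    if denom_q == 0 or denom_p == 0:
        raise ValueError("indifference conditions have no unique solution")
    q = (a - b) / denom_q
    p = (h - f) / denom_p
    if not (0 < q < 1 and 0 < p < 1):
        raise ValueError("no interior mixed-strategy equilibrium")
    return q, p


if __name__ == "__main__":
    W, C, I, V = 4.0, 1.0, 2.0, 8.0  # hypothetical wage, work cost, inspection cost, value
    employee = [[W - C, W - C],  # work: paid whether or not inspected
                [0.0, W]]        # shirk: caught -> nothing, uncaught -> wage
    employer = [[V - W - I, V - W],
                [-I, -W]]
    print(mixed_nash_2x2(employee, employer))  # (0.5, 0.25), i.e. I/W and C/W
```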

For the purposes of the foregoing analysis we had assumed a linear utility function and were thus able to define a rational equilibrium prescriptively. Our descriptive data indicated that this rational equilibrium did a fair job of predicting the behavior of our subjects under some conditions but failed under others. One could, of course, extend this prescriptive approach by incorporating a more realistic utility function and adding a learning algorithm that might even predict the over-shirking observed at low shirk rates. Such an enhanced prescriptive theory would then better account for our descriptive observations.

If, however, the neurons in area LIP are the substrate upon which actual choice is generated, and if that choice is generated at equilibrium by a process similar to the one Nash envisioned, then we might be able to take an alternative approach. The Nash approach argues, essentially, that equilibrium occurs when the desirabilities of working and shirking are equal. Economists define those desirabilities as rational when they are well predicted by the expected utilities of prescriptive theory. But rational or not, those desirabilities might well be represented at some point in the neural architecture. What we were trying to determine was whether the quantitative desirability of an action is encoded by the activity of neurons in area LIP, not just for some categories of behavior, rational or irrational, but for behavior in general. If our economic approach was sound, then at behavioral equilibrium the desirabilities of working and shirking should have been equal. If our neurobiological approach was sound, then at behavioral equilibrium the levels of neuronal activity associated with working and shirking should also have been equal. This should be true whether or not choice is rational. Put another way, if LIP encodes a physiological form of expected utility, and this physiological expected utility is the actual substrate from which choice is produced, then rational behavior could be defined as occurring when prescriptive theory accurately predicts this physiological expected utility.

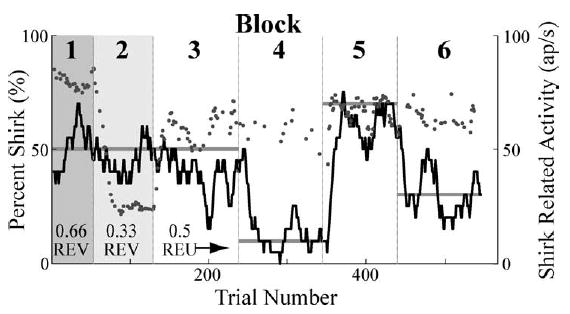

To test the validity of this schema, we began by repeating the Platt and Glimcher experiment described in the preceding section on our game-playing monkeys. During the first block of plays highlighted in grey (Fig. 8), an instructed movement to the red target yielded 0.5 ml of juice and an instructed movement to the green target yielded 0.25 ml of juice. In the second block of trials, the payoffs associated with each target were reversed. Before the change in fixation point color indicated which movement would be rewarded, the neuron responded more strongly when the red target (which would serve as the shirk target later in the session) yielded the larger reward. We refer to these as instructed trials; this difference in firing rate was typical of our population in this experiment, as it was in the Platt and Glimcher experiments (Fig. 8; p < 0.01, paired t-test for visual and delay epochs, N = 20).

Fig. 8.

Behavior of a monkey and an LIP neuron during the inspection game. The black line plots a 20-trial running average of the behavior of the monkey across six blocks of plays. In the first two blocks, highlighted in grey, no game was played but rather fixed expected values were presented. (Relative expected values of 0.66 and 0.33, respectively.) Blocks 3–6 presented 4 sequential payoff matrices corresponding to Nash mixed equilibria of 50, 10, 70, and 30% shirking. Grey dots plot the firing rate of the neuron on each play in which the monkey shirked.

Figure 8 examines the relationship between physiological expected utility, behavior, and firing rate for this neuron. The lower axis plots the play numbers during which 6 sequential blocks were presented. In the first block, instructed trials reinforced a movement to the shirk target with twice as much juice as a movement to the work target, a relative expected value⁴ of 0.66. The second block presented a relative expected value of 0.33. Blocks 3–6 presented inspection trials in which the dynamic interactions of the two players should have held the relative desirability of the two responses near 0.5. The solid gray lines initially plot the probability of the red target being rewarded during the first two instructed blocks, followed by the predicted equilibrium rate of picking the red (shirk) target during the four inspection trial blocks. At a purely behavioral level, the animal seemed to closely approximate the response strategies predicted by our simple prescriptive model: the probability of picking the red target was roughly 50% during the instructed blocks and then shifted dynamically to each of the equilibrium strategies in the subsequent 4 inspection trial blocks. The gray dots plot the neuronal firing rate after target onset. Note that when the relative expected value of a movement to the red target is high, firing rate is high; when the relative expected value of the red target is low, firing rate is low; and when the animal is engaged in a strategic conflict, the firing rate associated with this same movement is fairly constant at an intermediate level. This is the specific result that would be expected if LIP neurons encode the desirability of movements into their response fields, that is, if they instantiate the physiological expected utility of those movements.
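The relative expected values quoted for the instructed blocks can be checked with a short calculation. This sketch assumes that relative expected value (footnote 4) is the expected value of the red (shirk) target divided by the sum of the expected values of both targets, and that each target was instructed on half of the plays. Under those assumptions, the 2:1 juice ratio (0.5 ml versus 0.25 ml) gives 2/3, reported as 0.66, and the reversed block gives 1/3. The function name is hypothetical.

```python
def relative_expected_value(p_red, ml_red, p_green, ml_green):
    """EV(red) / (EV(red) + EV(green)), with EV = probability x juice volume (ml).

    ASSUMED definition, used here for illustration only.
    """
    ev_red = p_red * ml_red
    ev_green = p_green * ml_green
    total = ev_red + ev_green
    if total == 0:
        raise ValueError("at least one target must have a positive expected value")
    return ev_red / total


if __name__ == "__main__":
    # Block 1: the shirk (red) target pays twice the juice of the work (green) target.
    print(round(relative_expected_value(0.5, 0.5, 0.5, 0.25), 2))  # 0.67
    # Block 2: payoffs reversed.
    print(round(relative_expected_value(0.5, 0.25, 0.5, 0.5), 2))  # 0.33
```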

3.2. Encoding shirk targets versus work targets

For a subset of 20 neurons we also examined the effects of reversing the locations of the work and shirk targets during 50% shirking rate Nash equilibrium blocks of the inspection game. This manipulation effectively changed both the probability and the magnitude of reward associated with the target monitored by our neuron, while the relative physiological expected utility of working and shirking should have remained at equilibrium. Firing rates should differ across blocks if they reflect either of these individual decision variables, but should remain constant if they reflect physiological expected utility. The firing rates were indistinguishable (p > 0.05, paired t-test, N = 20, for all 6 epochs), a finding consistent with the hypothesis that LIP firing rates encode the physiological expected utility of choices.
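The paired comparison described above can be sketched as a paired t statistic on per-neuron differences between the two target arrangements. This is a generic textbook implementation, not the authors’ analysis code. It returns t and its degrees of freedom; a p-value would additionally require the t distribution (for example, SciPy’s `scipy.stats.ttest_rel`). The firing rates in the usage example are invented for illustration.

```python
import math
from statistics import mean, stdev


def paired_t(rates_a, rates_b):
    """Paired t statistic and degrees of freedom for matched samples.

    rates_a[i] and rates_b[i] are the same neuron's mean firing rates (spikes/s)
    in the two block types, e.g. shirk target vs. work target in the response field.
    """
    if len(rates_a) != len(rates_b) or len(rates_a) < 2:
        raise ValueError("need two equal-length samples with at least two neurons")
    diffs = [a - b for a, b in zip(rates_a, rates_b)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


if __name__ == "__main__":
    # Invented rates for four neurons (the study used N = 20).
    original_layout = [32.0, 45.5, 28.1, 51.0]
    reversed_layout = [31.2, 46.0, 27.5, 50.1]
    t, df = paired_t(original_layout, reversed_layout)
    print(f"t({df}) = {t:.2f}")
```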

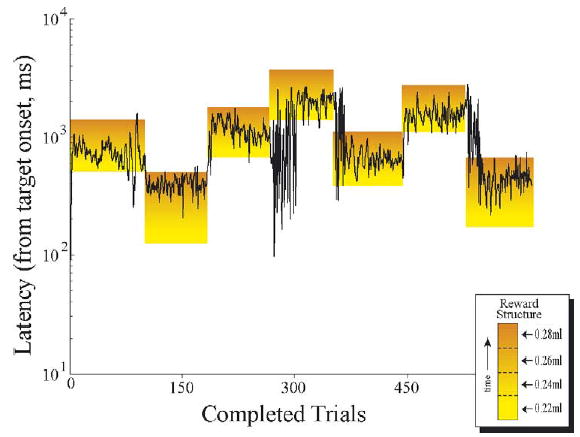

3.3. Encoding relative versus absolute desirability