Abstract

Context: Although patient safety is a major problem, most health care organizations rely on spontaneous reporting, which detects only a small minority of adverse events. As a result, problems with safety have remained hidden. Chart review can detect adverse events in research settings, but it is too expensive for routine use. Information technology techniques can detect some adverse events in a timely and cost-effective way, in some cases early enough to prevent patient harm.

Objective: To review methodologies of detecting adverse events using information technology, reports of studies that used these techniques to detect adverse events, and study results for specific types of adverse events.

Design: Structured review.

Methodology: English-language studies that reported using information technology to detect adverse events were identified using standard techniques. Only studies that contained original data were included.

Main Outcome Measures: Adverse events, with specific focus on nosocomial infections, adverse drug events, and injurious falls.

Results: Tools such as event monitoring and natural language processing can inexpensively detect certain types of adverse events in clinical databases. These approaches already work well for some types of adverse events, including adverse drug events and nosocomial infections, and are in routine use in a few hospitals. In addition, it appears likely that these techniques will be adaptable in ways that allow detection of a broad array of adverse events, especially as more medical information becomes computerized.

Conclusion: Computerized detection of adverse events will soon be practical on a widespread basis.

Patient safety is an important issue and has received substantial national attention since the 1999 Institute of Medicine (IOM) report, “To Err is Human.”1 A subsequent IOM report, “Crossing the Quality Chasm,” underscored the importance of patient safety as a key dimension of quality and identified information technology as a critical means of achieving this goal.2 These reports suggest that 44,000–98,000 deaths annually in the U.S. may be due to medical errors.

Although the “To Err is Human” report brought patient safety into the public eye, the principal research demonstrating this major problem was reported years ago, with much of the data coming from the 1991 Harvard Medical Practice Study.3,4 The most frequent types of adverse events affecting hospitalized patients were adverse drug events, nosocomial infections, and surgical complications.4 Earlier studies identified similar issues,5,6 although their methodology was less rigorous.

Hospitals routinely underreport the number of events with potential or actual adverse impact on patient safety. The main reason is that hospitals historically have relied on spontaneous reporting to detect adverse events. This approach systematically underestimates the frequency of adverse events, typically by a factor of about 20.7–9 Although manual chart review is effective in identifying adverse events in the research setting,10 it is too costly for routine use.

Another approach to finding events in general and adverse events in particular is computerized detection. This method generally uses computerized data to identify a signal that suggests the possible presence of an adverse event, which can then be investigated by human intervention. Although this approach still typically involves going to the chart to verify the event, it is much less costly than review of unscreened charts,11 because only a small proportion of charts need to be reviewed and the review can be highly focused.

This paper reviews the evidence regarding the use of electronic tools to detect adverse events, first based on the type of data, including ICD-9 codes, drug and laboratory data, and free text, and then on the type of tool, including keyword and term searches and natural language processing. We then discuss the evidence regarding the use of these tools to identify nosocomial infections, adverse drug events in both the inpatient and outpatient setting, falls, and other types of adverse events. The focus of this discussion is to detect the events after they occur, although such tools can also be used to prevent or ameliorate many events.

Electronic Tools for Detecting Adverse Events

Developing and maintaining a computerized screening system generally involve several steps. The first and most challenging step is to collect patient data in electronic form. The second step is to apply queries, rules, or algorithms to the data to find cases with data that are consistent with an adverse event. The third step is to determine the predictive value of the queries, usually by manual review.

The data source most often applied to patient safety work is the administrative coding of diagnoses and procedures, usually in the form of ICD-9-CM and CPT codes. This coding represents one of the few ubiquitous sources of clinically relevant data. The usefulness of this coding—if it is accurate and timely—is clear. The codes provide direct and indirect evidence of the clinical state of the patient, comorbid conditions, and the progress of the patient during the hospitalization or visit. For example, administrative data have been used to screen for complications that occur during the course of hospitalization.12,13

However, because administrative coding is generated for reimbursement and legal documentation rather than for clinical care, its accuracy and appropriateness for clinical studies are variable at best. The coding suffers from errors, lack of temporal information, lack of clinical content,15 and “code creep”—a bias toward higher-paying diagnosis-related groups (DRGs).16 Coding is usually done after discharge or completion of the visit; thus its use in real-time intervention is limited. Adverse events are poorly represented in the ICD-9-CM coding scheme, although some events are present (for example, 39.41 “control of hemorrhage following vascular surgery”). Unfortunately, the adverse event codes are rarely used in practice.17

Despite these limitations, administrative data are useful in detecting adverse events. Such events may often be inferred from conflicts in the record. For example, a patient whose primary discharge diagnosis is myocardial infarction but whose admission diagnosis is not related to cardiac disease (e.g., urinary tract infection) may have suffered an adverse event.
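A rule of this kind can be sketched in a few lines. The example below is illustrative only: the grouping of ICD-9-CM prefixes into "cardiac" codes and the record layout are assumptions made for this sketch, not a validated screening rule from any of the cited studies.

```python
# Minimal sketch: flag stays whose discharge diagnosis conflicts with the
# admission diagnosis. The prefix list is an illustrative assumption.
CARDIAC_PREFIXES = ("410", "411", "412", "413", "414", "427", "428")
MI_PREFIX = "410"  # acute myocardial infarction in ICD-9-CM


def is_cardiac(code: str) -> bool:
    """True if the ICD-9-CM code falls in the assumed cardiac groups."""
    return code.startswith(CARDIAC_PREFIXES)


def flag_possible_event(admission_dx: str, discharge_dx: str) -> bool:
    """Flag a primary discharge diagnosis of MI after a non-cardiac admission."""
    return discharge_dx.startswith(MI_PREFIX) and not is_cardiac(admission_dx)


# Hypothetical stays: (admission diagnosis, primary discharge diagnosis).
stays = [
    {"id": "A", "admission_dx": "599.0", "discharge_dx": "410.71"},  # UTI, then MI
    {"id": "B", "admission_dx": "413.9", "discharge_dx": "410.71"},  # angina, then MI
    {"id": "C", "admission_dx": "599.0", "discharge_dx": "599.0"},   # UTI throughout
]

flagged = [s["id"] for s in stays
           if flag_possible_event(s["admission_dx"], s["discharge_dx"])]
print(flagged)
```

Only stay A is flagged here. A flag of this kind is a signal for chart review rather than a confirmed adverse event.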

Pharmacy data and clinical laboratory data represent two other common sources of coded data. These sources supply direct evidence for medication and laboratory adverse events (e.g., dosing errors, clinical values out of range). For example, applications have screened for adverse drug reactions by finding all of the orders for medications that are used to rescue or treat adverse drug reactions—such as epinephrine, steroids, and antihistamines.18–20 Anticoagulation studies can utilize activated partial thromboplastin times, a laboratory test reflecting adequacy of anticoagulation. In addition, these sources supply information about the patient’s clinical state (a medication or laboratory value may imply a particular disease), corroborating or even superseding the administrative coding. Unlike administrative coding, pharmacy and laboratory data are available in real time, making it possible to intervene in the care of the patient.
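As a rough illustration of the rescue-medication approach, the sketch below scans a list of pharmacy orders for drugs that are commonly given to treat a reaction. The drug list, the order format, and the function name `screen_orders` are assumptions chosen for the example; a real monitor would use the institution's own formulary and order feed.

```python
from collections import defaultdict

# Illustrative rescue-drug list (an assumption, not a published rule set).
RESCUE_DRUGS = {"epinephrine", "diphenhydramine", "methylprednisolone", "naloxone"}


def screen_orders(orders):
    """Return {patient_id: sorted rescue drugs ordered} for flagged patients.

    `orders` is an iterable of (patient_id, drug_name) pairs.
    """
    hits = defaultdict(set)
    for patient_id, drug in orders:
        name = drug.strip().lower()  # normalize case and stray whitespace
        if name in RESCUE_DRUGS:
            hits[patient_id].add(name)
    return {pid: sorted(drugs) for pid, drugs in hits.items()}


# Hypothetical order stream.
orders = [
    ("p1", "Cefazolin"),
    ("p1", "Diphenhydramine"),
    ("p1", "Epinephrine"),
    ("p2", "Heparin"),
    ("p3", "Naloxone "),
]

alerts = screen_orders(orders)
print(alerts)
```

Each flagged patient still needs review, because rescue drugs are also ordered for reasons unrelated to an adverse drug reaction.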

With increasing frequency, hospitals and practices are installing workflow-based systems such as inpatient order entry systems and ambulatory care systems. These systems supply clinically rich data, often in coded form, which can support sophisticated detection of adverse events. If providers use the systems in real time, it becomes possible to intervene and prevent or ameliorate patient harm.

The detailed clinical history, the evolution of the clinical plan, and the rationale for the diagnosis are critical to identifying adverse events and to sorting out their causes. Yet this information is rarely available in coded form, even with the growing popularity of workflow-based systems. Visit notes, admission notes, progress notes, consultation notes, and nursing notes contain important information and are increasingly available in electronic form. However, they are usually available in uncontrolled, free-text narratives. Furthermore, reports from ancillary departments such as radiology and pathology are commonly available in electronic narrative form. If the clinical information contained in these narrative documents can be turned into a standardized format, then automated systems will have a much greater chance of identifying adverse events and even classifying them by cause.

A study by Kossovsky et al.22 found that distinguishing planned from unplanned readmissions required narrative data from discharge summaries and concluded that natural language processing would be necessary to separate such cases automatically. Roos et al.23 used claims data from Manitoba to identify complications leading to readmission and found reasonable predictive value, but similar attempts to identify whether or not a diagnosis represented an in-hospital complication of care based on claims data met with difficulties resolved only through narrative data (discharge abstracts).

A range of approaches is available to unlock coded clinical information from narrative reports. The simplest is to use lexical techniques to match queries to words or phrases in the document. A simple keyword search, similar to what is available on Web search engines and MEDLINE, can be used to find relevant documents.12,25–27 This approach works especially well when the concepts in question are rare and unlikely to be mentioned unless they are present.26 A range of improvements can be made, including stemming prefixes and suffixes to improve the lexical match, mapping to a thesaurus such as the Unified Medical Language System (UMLS) Metathesaurus to associate synonyms and concepts, and simple syntactic approaches to handle negation. A simple keyword search was fruitful in one study of adverse drug events based on text from outpatient encounters.17 The technique uncovered a large number of adverse drug events, but its positive predictive value was low (0.072). Negative and ambiguous terms had the most detrimental effect on performance, even after the authors employed simple techniques to avoid the problem (for example, excluding sentences with any mention of negation).
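The keyword approach with sentence-level negation filtering can be sketched as follows. The keyword lexicon, the negation word list, and the sample note are all hypothetical; the sentence splitter is deliberately naive (it would break on decimals such as "0.5 mg"), and this is not the method used in the cited study.

```python
import re

KEYWORDS = ("rash", "hives", "angioedema")                 # hypothetical lexicon
NEGATIONS = ("no", "not", "denies", "without", "negative")  # hypothetical cues


def split_sentences(text):
    """Naive sentence splitter on ., ! and ? (a simplification)."""
    return [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]


def keyword_hits(note):
    """Return keywords found in sentences that contain no negation cue."""
    hits = []
    for sentence in split_sentences(note):
        words = re.findall(r"[a-z]+", sentence.lower())
        if any(w in NEGATIONS for w in words):
            continue  # skip the whole sentence if any negation word appears
        hits.extend(k for k in KEYWORDS if k in words)
    return hits


note = ("Started amoxicillin last week. Patient developed a rash on the trunk. "
        "Denies hives or angioedema.")
print(keyword_hits(note))
```

Dropping every sentence that mentions a negation is crude: it suppresses some false positives but also discards true mentions that happen to share a sentence with a negated finding, which is consistent with the performance problems described above.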

Natural language processing28,29 promises improved performance by better characterizing the information in clinical reports. Two independent groups have demonstrated that natural language processing can be as accurate as expert human coders for coding radiographic reports and more accurate than simple keyword methods.30–32 A number of natural language processing systems are based on symbolic methods such as pattern matching or rule-based techniques and have been applied to health care.30–45 These systems have varied in approach: pure pattern matching, syntactic grammar, semantic grammar, or probabilistic methods, with different tradeoffs in accuracy, robustness, scalability, and maintainability. These systems have done well in domains, such as radiology, in which the narrative text is focused, and the results for more complex narrative such as discharge summaries are promising.36,41,46–50

With the availability of narrative reports in real time, automated systems can intervene in the care of the patient in complex ways. In one study, a natural language processor was used to detect patients at high risk for active tuberculosis infection based on chest radiographic reports.45 If such patients were in shared rooms, respiratory isolation was recommended. This system cut the missed respiratory isolation rate approximately in half.

Given clinical data sources, which may include medication, laboratory, and microbiology information as well as narrative data, the computer must be programmed to select cases in which an adverse event may have occurred. In most patient safety studies, someone with knowledge of patient safety and database structure writes queries or rules to address a particular clinical area. For example, a series of rules to address adverse drug events can be written.17 One can broaden the approach by searching for general terms relevant to patient safety or look for an explicit mention of an adverse drug event or reaction in the record. Automated methods to produce algorithms may also be possible. For example, one can create a training set of cases in which some proportion is known to have suffered an adverse event. A machine learning algorithm, such as a decision tree generator, a neural network, or a nearest neighbor algorithm, can be used to categorize new cases based on what is learned from the training set.
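To make the training-set idea concrete, the sketch below implements a small k-nearest-neighbor classifier in plain Python. The two features (count of rescue-drug orders and count of out-of-range laboratory values), the training cases, and the choice of k = 3 are invented for illustration; they are not drawn from any of the studies reviewed here.

```python
import math

# Hypothetical training cases: ((rescue_drug_orders, abnormal_labs), label).
# The label is True when chart review confirmed an adverse event.
TRAINING = [
    ((0, 0), False),
    ((0, 1), False),
    ((1, 0), False),
    ((2, 3), True),
    ((1, 4), True),
    ((3, 2), True),
]


def classify(features, training=TRAINING, k=3):
    """Majority vote among the k training cases closest to `features`."""
    nearest = sorted(training, key=lambda case: math.dist(case[0], features))[:k]
    votes = sum(1 for _, label in nearest if label)
    return votes > k / 2


print(classify((3, 3)))  # resembles the confirmed cases
print(classify((0, 0)))  # resembles the unremarkable cases
```

In practice the training set would need to be far larger and the classifier validated against chart review, as with hand-written rules.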

Finally, the computer-generated signals must be assessed for the presence of adverse events. Given the relatively low sensitivity and specificity that may occur in computer-based screening,17,51 it is critical to verify the accuracy of the system. Both internal and external validations are important. Manual review of charts can be used to estimate sensitivity, specificity, and predictive value. Comparison with previous studies at other institutions also can serve to calibrate the system.
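These measures follow directly from a two-by-two comparison of monitor alerts against chart review. The sketch below uses the standard definitions; the counts are hypothetical and chosen only to show how a monitor with good sensitivity can still have a low positive predictive value when events are uncommon.

```python
def screening_metrics(tp, fp, fn, tn):
    """Standard accuracy measures for a screening tool.

    tp: alerts confirmed as adverse events on chart review
    fp: alerts with no adverse event
    fn: adverse events the monitor missed
    tn: unflagged charts with no adverse event
    """
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


# Hypothetical counts for 1,000 reviewed charts, 50 with a true event.
m = screening_metrics(tp=40, fp=160, fn=10, tn=790)
for name, value in m.items():
    print(f"{name}: {value:.3f}")
```

Estimating sensitivity and specificity requires reviewing charts that did not generate an alert as well as those that did, since false negatives and true negatives cannot be counted from alerts alone.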

Identification of Studies Using Electronic Tools to Detect Adverse Events

To identify studies assessing the use of information technology to detect adverse events, we performed an extensive search of the literature. English-language studies involving adverse event detection were identified by searching 1966–2001 MEDLINE records with two Medical Subject Headings (MeSH), Iatrogenic Disease and Adverse Drug Reporting Systems; with the MeSH Entry Term, Nosocomial Infection; and with key words (adverse event, adverse drug event, fall, and computerized detection). In addition, the bibliographies of original and review articles were hand-searched, and relevant references were cross-checked with those identified through the computer search. Two of the authors (HJM and PDS) initially screened titles and abstracts of the search results and then independently reviewed and abstracted data from articles identified as relevant.

Studies were included in the review if they contained original data about computerized methods to detect nosocomial infections, adverse drug events, adverse drug reactions, adverse events, or falls. We excluded studies that focused on adverse event prevention strategies, such as physician order entry or clinical decision support systems, and did not include detailed information regarding methods for adverse event detection. We also excluded studies of computer programs designed to detect drug-drug interactions.

Included studies evaluated the performance of a diagnostic test (an adverse event monitor). The methodologic quality of each study was determined using previously described criteria for assessing diagnostic tests.52 Studies were evaluated for the inclusion of a “gold standard.” For the purpose of this review the gold standard was manual chart review, with the ultimate judgment of an adverse event performed by a clinician trained in adverse event evaluation. Furthermore, the gold standard had to be a blinded comparison applied to charts independently of the application of the study tool. Only studies that evaluated their screening tool against a manual chart review of records without alerts were considered to have properly utilized the gold standard.

Reviewers abstracted information concerning the patients included, the type of event monitor implemented, the outcome assessed, the signals used for detection, the performance of the monitor, and any barriers to implementation described by the authors. The degree of manual review necessary to perform the initial screening for an adverse event was assessed to determine the level of automation associated with each monitor. An event monitor using signals from multiple data sources that generated an alert that was then directly reviewed by the clinician making the final adverse event judgements was considered “high-end” automation. An event monitor that relied on manual entry of specific information into the monitor for an alert to be generated was considered “low-end.” All disagreements were settled by consensus of the two reviewers.

Twenty-five studies were initially identified for review (Table 1). Of these studies, seven included a gold standard in the assessment of the screening tool (Table 2).

Table 1.

Studies Evaluating Computerized Adverse Event Monitors

| Study | Patients | Outcome Measured | Signal Used for Detection | Gold Standard | Level of Automation |
|---|---|---|---|---|---|
| Nosocomial infections | | | | | |
| Rocha et al.62 | Newborns admitted to either the well-baby unit or the neonatal intensive care unit at a tertiary care hospital over a 2-year period (n = 5201) | NI rates = definition not specified | Microbiology data (microbiology cultures and cerebrospinal fluid cultures) | Yes | High end (preexisting integrated computer system with POE and an event monitor) |
| Evans et al.55 | All patients discharged from a 20-bed tertiary care center over a 2-month period (n = 4679) | NI rates = definition not specified | Microbiology, laboratory, and pharmacy data | Yes | High end (preexisting integrated computer system with POE and an event monitor) |
| Dessau et al.63 | Patients not described; admitted to an acute care hospital (n = not described) | NI rates = any infectious outbreak (during the study the system detected an outbreak of Campylobacter jejuni) | Microbiology data | No | High end (not reported) |
| Pittet et al.64 | All patients admitted to a 1,600-bed hospital over a 1-year period (n = not described) | NI rate = hospital-acquired MRSA infections | Microbiology and administrative data | No | High end (preexisting integrated computer system) |
| Hirschhorn et al.65 | Consecutive women admitted to a tertiary care medical center over a 17-month period for a nonrepeat, nonelective cesarean section who received prophylactic antibiotics (n = 2197) | NI rates = endometritis, wound infections, urinary tract infections, and bacteremia | Pharmacy and administrative data | Yes | High end (not described) |
| Adverse drug events | | | | | |
| Brown et al.66 | Patients not described; looked at all reports and alerts generated over a 3-month period at a Veterans Affairs Hospital (n = not reported) | ADE rate = injury related to the use of a drug; potential ADE rate = drug-related injury possible but did not actually occur | Laboratory and pharmacy data | No | High end (preexisting integrated computer system with POE) |
| Classen et al.20 | All medical and surgical inpatients admitted to a tertiary care center over an 18-month period (n = 36,653) | ADE rate = injury resulting from the administration of a drug | Laboratory and pharmacy data | No | High end (preexisting integrated computer system with POE and an event monitor) |
| Dalton-Bunnow et al.67 | All patients receiving antidote drugs (n = 419 in retrospective phase, 93 in concurrent review) | ADR rate = adverse reaction related to the use of a drug | Pharmacy data | No | Low end (manually created SQL queries of pharmacy data, printed out and cases reviewed for true ADRs) |
| Raschke et al.68 | Consecutive nonobstetric adults admitted to a teaching hospital over a 6-month period in 1997 (n = 9306) | Potential ADE rate = prescribing errors with a high potential for resulting in an ADE | Patient demographic, pharmacy, allergy, and laboratory data, as well as radiology orders | No | High end (preexisting integrated computer system with POE and an event monitor) |
| Honigman et al.17 | All outpatient visits to a primary care clinic for 1 year (n = 15,665) | ADE rate = injury resulting from the administration of a drug | Laboratory, pharmacy, and administrative data, as well as free-text electronic searches | Yes | High end (preexisting integrated computer system with electronically stored notes and an event monitor) |
| Whipple et al.69 | All patients using patient-controlled analgesia (PCA) during study period (n = 4669) | ADE = narcotic analgesic overdose | Administrative data (billing codes for PCA and naloxone orders) | No | Low end (no EMR, simple screening tool) |
| Koch57 | All admissions to a 650-bed acute care facility over a 7-month period (n = not reported) | ADR rate = adverse reaction related to the use of a drug | Laboratory, pharmacology, and microbiology data | No | Low end (10 tracer drugs were monitored, printouts were made daily, and a pharmacist manually transferred the data to a paper ADR form) |
| Bagheri et al.70 | 5 one-week blocks of inpatients from all departments (except the emergency department and visceral or orthopedic surgery) (n = 147) | ADE rate = drug-induced liver injury | Laboratory data | No | Low end (computerized methods not reported) |
| Dormann et al.71 | All patients admitted to a 9-bed medical ward in an academic hospital over a 7-month period (n = 379) | ADR rate = adverse reactions related to the use of a drug | Laboratory data | No | Low end (computerized methods not reported) |
| Levy et al.72 | Consecutive patients admitted to a 34-bed ward of an acute-care hospital over a 2-month period (n = 199) | ADR rate = adverse reactions related to the use of a drug | Laboratory data | Yes | Low end (system monitored for approximately 25 laboratory abnormalities and generated paper lists of possible ADRs used for review by clinical pharmacists) |
| Tse et al.18 | Patients not described; admitted to a 472-bed acute care community hospital (n = not described) | ADR rate = adverse reaction related to the use of a drug | Pharmacy data (orders for antidote medications) | No | Low end (no EMR, simple screening tool) |
| Payne et al.73 | Patients not described; admitted to 1 of 3 Veterans Affairs Hospitals over a 1-month period (n = not described) | ADE rate = not specifically defined | Laboratory and pharmacy data | No | High end (preexisting clinical information system with POE and an event monitor) |
| Jha et al.11 | All medical and surgical inpatients admitted to a tertiary care hospital over an 8-month period (n = 36,653) | ADE rate = injury resulting from administration of a drug | Laboratory and pharmacy data | Yes | High end (preexisting integrated computer system with POE and an event monitor) |
| Evans et al.74 | All medical and surgical inpatients admitted to a 520-bed tertiary care medical center over a 44-month period (n = 79,719) | Type B ADE rate = idiosyncratic drug reaction or allergic reaction | Laboratory and pharmacy data | No | High end (preexisting integrated computer system with POE and an event monitor) |
| Adverse events | | | | | |
| Weingart et al.75 | 1994 Medicare beneficiaries seen at 69 acute-care hospitals in 1 of 2 states (n = 1025) | AE rate = medical and surgical complications associated with quality problems | Administrative data (ICD-9-CM codes) | Yes | Low end (system not reported) |
| Bates et al.76 | Consecutive patients admitted to the medical services of an academic medical center over a 4-month period (n = 3137) | AE rate = unintended injuries caused by medical management | Billing codes | Yes | Low end (system not reported) |
| Lau et al.77 | Patients selected from 242 cases already determined to have “quality problems” based on peer review organization review (n = 100) | Diagnostic or medication errors = diagnosis determined by expert systems and not detected by physician | Potential diagnostic errors determined by discrepancies between the expert systems’ list of diagnoses and the physician’s list of diagnoses | No | Low end (data manually entered into the expert systems) |
| Andrus et al.78 | Data from all operative procedures from a Veterans Affairs Medical Center over a 15-month period (n = 6241) | AE rates = surgical complications and mortality | Not reported | No | Low end (requires all data be manually entered into database) |
| Iezzoni et al.79 | 1988 hospital discharge abstracts from 432 hospitalized adult, nonobstetric medical or surgical patients (n = 1.94 million) | AE rate = medical and surgical complications | Administrative data (ICD-9-CM codes) | No | Low end (SAS-based computer algorithm designed by authors) |
| Benson et al.80 | Data from 20,000 anesthesiologic procedures | AE rate = used German Society of Anesthesiology and Intensive Care Medicine definition | Patient (vital sign information) and pharmacy data | No | High end (system not well described but highly integrated) |

NI = nosocomial infection; MRSA = methicillin-resistant Staphylococcus aureus; AE = adverse event; ADE = adverse drug event; ADR = adverse drug reaction; PPV = positive predictive value; HIS = hospital information system.

Table 2.

Results and Barriers to Implementation of Studies Evaluating an Adverse Event Monitor Using a Gold Standard

| Study | Description of Monitor | Study Results | False Positives and/or False Negatives | Barriers to Implementation |

|---|---|---|---|---|

| Nosocomial infections | ||||

| Rocha et al.62 | An expert system using boolean logic to detect hospital-acquired infectons in newborns. The system is by positive micro-biology results (data-driven) and at specific periods of time (time-driven) to search for signals. | The computer activated 605 times, 514 times by culture results and 91 times by CSF analysis. The sensitivity of the tool was 85% and speci-ficity 93%. Compared with an expert reviewer’s judge-ment, the tool had a kappa statistic of 0.62. | There were 32 false posi-tives (7%) and 11 false negatives (16%). | The detection system would require a highly integrated and sophisticated HIS to operate. No data were pro-vided regarding the time necessary to maintain the system. |

| Evans et al.55 | A series of computer pro-grams that translate the patients’ microbiology test results into a hierarchical database. Data are then compared with a computer-ized knowledge base devel-oped to identify patients with hospital-acquired infections or receiving inappropriate antibiotic therapy. The system is time-driven, and alerts are transported to the infectious disease service for confirma-tion and investigation. | Either or both computerized detection or traditional methods identified 217 patients. 155 patients were determined to have had a nosocomial infection. The computer identified 182 cases, of which 140 were confirmed (77%). Out of all the confirmed cases (150) the computer identified 90% while traditional methods detected 76%. | 23% (42/182) of the alerts were false positives. The rate of false positives was the same as manual review. Contamination was responsible for many of the false positives. | HIS without a high level of integration might not be able to support the rule base. Infection control practitioners (traditional method) spent 130 hours on infection surveil-lance and 8 hours on collect-ing materials. Only 8.6 hours were necessary to prepare similar reports using com-puterized screening results plus an additional 15 minutes for verification in each patient, resulting in a total of 45.5 hours of surveillance time. |

| Hirschhorn et al.65 | A computer program that captures the duration and timing of postoperative anti-biotic exposure and the ICD-9-CM coded discharge diagnosis. This information was used to screen for possible nosocomial infections. | The overall incidence of infection was 9%. Eight per-cent of all patients had a coded diagnosis for infection. Exposure to greater than 2 days of antibiotics had a sensitivity of 81%, a speci-ficity of 95%, and a PPV of 61% to detect infections. The coded diagnosis had a sensitivity of 65%, a specificity of 97%, and a PPV of 74%. A combination of screens had a sensitivity of 59% and a PPV of 94%. | Based on manual review, 5% of pharmacy records were misclassified with 18% of patients being incorrectly labeled as having received greater than 2 days of antibiotics. Discharge codes missed 35% of the infections. | The monitor would not require a highly integrated HIS and would be easier to implement. No information was described regarding the level of work necessary to maintain the system. |

| Adverse drug events | ||||

| Honigman et al.17 | A computerized tool that reviewed elctronically stored records using four search strategies: ICD-9-CM codes, allergy rules, a computer event monitor, and auto-mated chart review using free-text searches. After the search was performed the data were narrowed and queried to identify incidents. | The monitor detected an esti-mated 864 (95% CI, 750-978) ADEs in 15,655 patients. For the composite tool the sensi-tivity was 58% (95% CI, 18-98), specificity 88% (95% CI, 87-88), PPV 7.5% (95% CI, 6.5-8.5), and NPV 99.2% (95% CI, 95.5-99.98). | For the composite tool the false-positive rate was 42% (637/1501) and the false-negative rate was 12% (10,619/87,013). | The monitor requires a highly integrated HIS to implement. ICD-9-E codes were not used frequently at the study insti-tution. Only a small lexicon had been developed for free-text searches. The study did not mention the amount of time that would be necessary to maintain the monitor. |

| Levy et al.72 | A data-driven monitor where automated laboratory signals (alerts) were gen-erated when a specific labora-tory value reached a pre-defined criteria. A list of alerts was generated on a daily basis and presented to staff physicians. | 32% (64/199) patients had an ADR. There were 295 alerts generated involving 69% of all admissions. Of all ADRs, 61% (43/71) were detected by the automated signals. The sensitivity of the system was 62% with a specificity of 42%. 18% (52/295) of alerts represented an ADR | Overall 82% (243/295) of the alerts were false positives. | Authors mention an “easy implementation” but imple-mentation is not described; however, the high false-posi-tive rate would add to the overall work required to maintain the system. The time necessary to maintain the system is not described. |

| Jha et al.11 | A computerized event monitor detecting events using individual signals and boolean combinations of signals involving medica-tion orders and laboratory results. The computer gen-erates a list of alerts that are reviewed to determine if further evaluation is needed. | 617 ADEs were identified during the study period. The computer monitor iden-tified 2,620 alerts of which 10% (275) were ADEs. The PPV of the event monitor was 16% over the first 8 weeks of the study but increased to 23% over the second 8 weeks after some rule modification. | The false-positive rate over the entire study period was 83%. | In hospitals without this sophisticated a IS, it might be challenging to implement the monitor. The monitor was unable to access microbiology results. To maintain the system required 1-2 hours of programming time a month and 11 person-hours a week to evaluate alerts. |

| Adverse events | ||||

| Weingart et al.75 | A computer program that searched for ICD-9-CM codes that could represent a medical or surgical com-plication. Screened positive discharge abstracts were discharge abstracts were initially reviewed by nurse reviewers, and if a quality problem was believed to have occurred, the physician reviewers then reviewed the chart. | There were 563 surgical and 268 medical cases flagged by the monitor. Judges con-firmed alerts in 68% of the surgical and 27% of the medical flagged cases. 30% of the surgical and 16% of the medical cases identified by the screening tool had quality problems associated with them. | 73% of the medical alerts and 32% of the surgical alerts were flagged with-out an actual complica-tion. 2.1% of the medical and surgical controls had quality problems associ-ated with them but were not flagged by the program. | The monitor would be rela-tively easy to implement; however, the low PPV of the tool for medical charts raises concerns about the accuracy of ICD-9-CM codes and threatens the usefulness of the tool in medical patients. The kappa scores were low for interrater reliability (0.22) con-cerning quality problems. No data were presented about the time necessary to maintain the monitor. |

| Bates et al.76 | The study evaluated five electronically available billing codes as signals to detect AEs. Medical records underwent initial manual screening followed by implicit physician review. | There were 341 AEs detected in the study group. The use of all 5 screens would detect 173 adverse events in 885 admissions. The sensitivity and specificity of this strategy were 47% and 74% with a PPV of 20%. Eliminating one poorly performing screen (the least specific) would detect 88 AEs in 289 charts with a sensitivity of 24% and specificity of 93% and a PPV of 30%. | The first strategy resulted in 712 false-positive screens out of 885 alerts (80%). The second strategy resulted in 201 false-positive screens out of 289 alerts (70%). | The monitor utilized readily available electronically stored billing data for signals, making the tool more generalizable for most institutions. Electronic screening cost $3 per admission reviewed and $57 per adverse event detected compared with $13 per admission and $116 per adverse event detected when all charts were reviewed manually. |

NI = nosocomial infection; AE = adverse event; ADE = adverse drug event; ADR = adverse drug reaction; PPV = positive predictive value; HIS = hospital information system.
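The PPV and false-positive percentages in the Bates et al. row can be reproduced from the reported alert counts. The following is a minimal sketch of that arithmetic; the function names are illustrative and not from the source, and the "false-positive" figure is computed here as the share of alerts that were not adverse events (1 − PPV), which is how the table's counts are expressed.

```python
def ppv(true_events: int, alerts: int) -> float:
    """Positive predictive value: fraction of alerts that were true events."""
    if alerts <= 0:
        raise ValueError("alerts must be positive")
    return true_events / alerts


def false_alert_share(true_events: int, alerts: int) -> float:
    """Fraction of alerts that were not true events (1 - PPV)."""
    return 1.0 - ppv(true_events, alerts)


# Counts reported for Bates et al. in the table above.
all_five_screens = (173, 885)   # adverse events found, admissions flagged
four_screens = (88, 289)        # after dropping the least specific screen

for label, (events, alerts) in [("five screens", all_five_screens),
                                ("four screens", four_screens)]:
    print(f"{label}: PPV {ppv(events, alerts):.0%}, "
          f"false alerts {false_alert_share(events, alerts):.0%}")
```

Dropping the least specific screen raises the PPV but cuts the number of adverse events found roughly in half, which is the trade-off the table describes.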

Finding Specific Types of Adverse Events

Frequent types of adverse events include nosocomial infections, adverse drug events (ADEs), and falls. Substantial work has been done to detect each by using information technology techniques.

Nosocomial Infections

For more than 20 years before the recent interest in adverse events, nosocomial or hospital-acquired infection surveillance and reporting have been required for hospital accreditation.53 In 1970, the Centers for Disease Control set up national guidelines and provided courses to train infection control practitioners to report infection rates using a standard method.54 However, the actual detection of the nosocomial infections was based mainly on manual methods, and this process consumed most of infection control practitioners’ time.

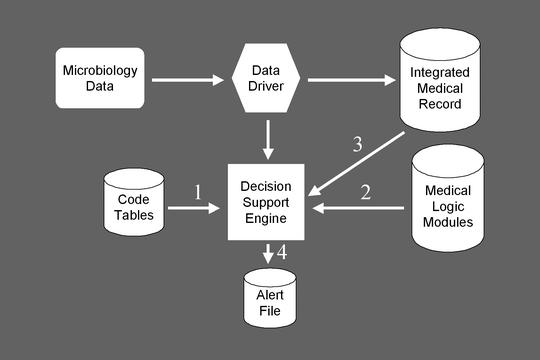

A number of groups have since developed tools to assist providers in detecting nosocomial infections, using computerized detection approaches.55,56 These tools typically work by searching clinical databases of microbiology and other data (Figure 1) and producing a report that infection control practitioners can use to assess whether a nosocomial infection is present (Figure 2). This approach has been highly effective. In a comparison between computerized surveillance and manual surveillance, the sensitivities were 90% and 76%, respectively.55 Analysis revealed that shifting to computerized detection followed by practitioner verification saved more than 65% of the infection control practitioners’ time and identified infections much more rapidly than manual surveillance. Most infections that were missed by computer surveillance could have been identified with additions or corrections to the medical logic modules.

Figure 1.

Steps involved in computerized surveillance for nosocomial infections. This figure illustrates the LDS Hospital structure for nosocomial infection surveillance, including the key modules, which must interact for successful surveillance.

Figure 2.

Example of an alert for a nosocomial infection. This report from the Infectious Disease Monitor program at LDS Hospital aggregates substantial clinical detail, which makes it easier for an infection control provider to assess rapidly whether a nosocomial infection is present.

Adverse Drug Events in Inpatients

Hospital information systems can be used to identify adverse drug events (ADEs) by looking for signals that an ADE may have occurred and then directing them to someone, usually a clinical pharmacist, who can investigate.19 Examples of signals include laboratory test results, such as a doubling in creatinine, high serum drug levels, use of drugs often used to treat the symptoms associated with ADEs, and use of antidotes.
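The signal types listed above lend themselves to simple rules. The sketch below is a hypothetical illustration, not the rule base of any system described in this review: the `PatientSnapshot` structure, the drug lists, and the serum-level cutoff are all assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class PatientSnapshot:
    """Hypothetical, simplified view of one inpatient's recent data."""
    patient_id: str
    baseline_creatinine: float                     # mg/dL
    latest_creatinine: float                       # mg/dL
    drug_levels: Dict[str, float] = field(default_factory=dict)
    active_orders: Set[str] = field(default_factory=set)


# Illustrative values only (assumptions, not a published rule base).
TOXIC_LEVELS = {"digoxin": 2.0}          # assumed cutoff, ng/mL
ANTIDOTES = {"naloxone", "flumazenil"}
SYMPTOM_TREATMENTS = {"diphenhydramine"}


def ade_signals(p: PatientSnapshot) -> List[str]:
    """Return the signals that should be routed to a reviewer."""
    signals = []
    if p.baseline_creatinine > 0 and p.latest_creatinine >= 2 * p.baseline_creatinine:
        signals.append("creatinine doubled")
    for drug, level in sorted(p.drug_levels.items()):
        limit = TOXIC_LEVELS.get(drug)
        if limit is not None and level > limit:
            signals.append(f"high serum level: {drug}")
    for drug in sorted(p.active_orders & ANTIDOTES):
        signals.append(f"antidote ordered: {drug}")
    for drug in sorted(p.active_orders & SYMPTOM_TREATMENTS):
        signals.append(f"symptom treatment ordered: {drug}")
    return signals


patient = PatientSnapshot("demo-1", 1.0, 2.1,
                          {"digoxin": 2.6}, {"naloxone", "furosemide"})
for signal in ade_signals(patient):
    print(patient.patient_id, signal)
```

A signal is only a prompt for review. As the text notes, a person still has to decide whether an ADE actually occurred.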

Before developing its computerized ADE surveillance program, LDS Hospital had only ten ADEs reported annually from approximately 25,000 discharged patients. The computerized surveillance identified 373 verified ADEs in the first year and 560 in the second year.20 A number of additional signals or flags were added to improve the computerized surveillance during the second year.

Others have developed similar programs.11,57,58 For example, Jha et al. used the LDS rule base as a starting point, assessed the use of 52 rules for identifying ADEs, and compared the performance of the ADE monitor with chart review and voluntary reporting. In 21,964 patient-days, the ADE monitor found 275 ADEs (rate: 9.6 per 1000 patient-days), compared with 398 (rate: 13.3 per 1000 patient-days) using chart review. Voluntary reporting identified only 23 ADEs. Surprisingly, only 67 ADEs were detected by both the computer monitor and chart review. The computer monitor performed better than chart review for events that were associated with a change in a specific parameter (such as a change in creatinine), whereas chart review did better for events associated with symptom changes, such as altered mental status. If more clinical data (in particular, nursing and physician notes) had been available in machine-readable form, the sensitivity of the computer monitor could have been improved. The time required for the computerized monitor was approximately one-sixth that required for chart review.

A problem with broader application of these methods has been that computer monitors use both drug and laboratory data, and in many hospitals the drug and laboratory databases are not integrated. Nonetheless, this approach can be successful in institutions with less sophisticated information systems.58 In a hospital that did not have a linkage between the drug and laboratory databases, Senst et al. downloaded information from both to create a separate database that was used to detect ADEs. Not all of the rules could be applied to this separate database, but a high proportion could be, and the resulting application successfully identified a large number of ADEs. Furthermore, the epidemiology of the events found differed from prior reports (in particular, admissions caused by ADEs in psychiatric patients were frequent), and this information proved useful in targeting improvement strategies.
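The idea of loading separate drug and laboratory extracts into one database so that rules can span both can be sketched as follows. This is an assumption-laden illustration, not the design used by Senst et al.: the table names, columns, and the example rule (a drug order combined with a laboratory value above a threshold) are hypothetical.

```python
import sqlite3


def build_linked_db(drug_rows, lab_rows):
    """Load two independent extracts into one in-memory database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE drug_orders (patient_id TEXT, drug TEXT)")
    conn.execute("CREATE TABLE lab_results (patient_id TEXT, test TEXT, value REAL)")
    conn.executemany("INSERT INTO drug_orders VALUES (?, ?)", drug_rows)
    conn.executemany("INSERT INTO lab_results VALUES (?, ?, ?)", lab_rows)
    return conn


def patients_on_drug_with_high_lab(conn, drug, test, threshold):
    """Example rule: patients ordered `drug` who have `test` above `threshold`."""
    rows = conn.execute(
        """
        SELECT DISTINCT d.patient_id
        FROM drug_orders AS d
        JOIN lab_results AS l ON l.patient_id = d.patient_id
        WHERE d.drug = ? AND l.test = ? AND l.value > ?
        ORDER BY d.patient_id
        """,
        (drug, test, threshold),
    ).fetchall()
    return [row[0] for row in rows]


# Made-up extracts standing in for the pharmacy and laboratory downloads.
drug_extract = [("p1", "gentamicin"), ("p2", "gentamicin"), ("p3", "aspirin")]
lab_extract = [("p1", "creatinine", 2.4), ("p2", "creatinine", 0.9),
               ("p3", "creatinine", 3.0)]

conn = build_linked_db(drug_extract, lab_extract)
flagged = patients_on_drug_with_high_lab(conn, "gentamicin", "creatinine", 2.0)
print(flagged)
```

Rules that need data absent from both extracts cannot be expressed this way, which is consistent with the observation that not all rules could be applied to the separate database.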

Adverse Drug Events in Outpatients

Although many studies address the incidence of ADEs in inpatients, fewer data are available regarding ADE rates in the outpatient setting. Honigman et al. hypothesized that it would be possible with electronic medical records to detect many ADEs using techniques analogous to those used in the inpatient setting. They used four approaches to detect ADEs: ICD-9 codes, allergy records, computer event monitoring, and free-text searching of patient notes for drug–symptom pairs (e.g., cough and ACE inhibitor). In an evaluation including one year’s data of electronic medical records for 23,064 patients, including 15,665 patients who came for care, 864 ADEs were identified. Altogether, 91% of the ADEs were identified using text searching, 6% with allergy records, 3% with the computerized event monitor, and only 0.3% with ICD-9 coding. The dominance of text searching was a surprise and emphasizes the importance of having clinical information in the electronic medical record, even if it is not coded.
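Free-text searching for drug–symptom pairs can be illustrated with a simple co-occurrence search. The sketch below is a hypothetical simplification, not the method used by Honigman et al.: the pair list is an assumption built around the one example given in the text, and requiring both terms in the same sentence is a design choice made for this illustration.

```python
import re
from typing import List, Tuple

# Illustrative pairs only; the text gives "cough and ACE inhibitor" as its example.
DRUG_SYMPTOM_PAIRS = {
    "ace inhibitor": ["cough"],
    "lisinopril": ["cough"],
}


def find_drug_symptom_pairs(note: str) -> List[Tuple[str, str]]:
    """Return (drug, symptom) pairs whose terms co-occur in one sentence."""
    hits = []
    for sentence in re.split(r"(?<=[.!?])\s+", note.lower()):
        for drug, symptoms in DRUG_SYMPTOM_PAIRS.items():
            if not re.search(rf"\b{re.escape(drug)}\b", sentence):
                continue
            for symptom in symptoms:
                if re.search(rf"\b{re.escape(symptom)}\b", sentence):
                    hits.append((drug, symptom))
    return hits


note = ("Pt started lisinopril last month. "
        "Reports dry cough since starting ACE inhibitor.")
print(find_drug_symptom_pairs(note))
```

Plain co-occurrence does not recognize negation (for example, "denies cough"), so every hit would still need human review.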

Falls

Inpatient falls are relatively common and are widely recognized as causing significant patient morbidity and increased costs. Several interventions have been found to decrease fall rates.59 Hripcsak, Wilcox, and Stetson used this domain as a test area for natural language processing. They began by looking for any radiology reports (e.g., x-ray, head CT, MRI) indicating that a patient fall was the reason for the exam (e.g., R/O fall, S/P fall) and occurred after the second day of hospitalization. They also counted the number of radiology reports in which a fracture was found (thus exploiting the ability of natural language processing to handle negation). They found that 1447 of 553,011 inpatient visits had at least one report to rule out a fall (2.6 falls per thousand admissions), and 14% of those involved a fracture (overall rate of injurious falls: 0.35 per thousand). The number of reports was within the range found in the literature using chart review.60
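The screening criteria and the reported rate can be sketched in a few lines. This is a keyword-based stand-in and not the natural language processing system the authors used: it does not handle negation or fracture findings, and counting the admission date as hospital day 1 is an assumption.

```python
import re
from datetime import date

# Matches reason-for-exam phrases such as "R/O fall" or "S/P fall".
FALL_REASON = re.compile(r"\b(?:r/o|s/p)\s+fall\b", re.IGNORECASE)


def is_inpatient_fall_report(reason_for_exam: str, admit: date, exam: date) -> bool:
    """True if the exam cites a fall and took place after hospital day 2."""
    hospital_day = (exam - admit).days + 1   # admission date = day 1 (assumption)
    return hospital_day > 2 and bool(FALL_REASON.search(reason_for_exam))


def per_thousand(events: int, admissions: int) -> float:
    """Events per 1,000 admissions."""
    return 1000 * events / admissions


admit = date(2001, 3, 1)
print(is_inpatient_fall_report("S/P fall, eval hip", admit, date(2001, 3, 4)))
print(is_inpatient_fall_report("R/O fall", admit, date(2001, 3, 2)))
print(round(per_thousand(1447, 553011), 1))
```

The last line reproduces the 2.6 per thousand figure from the counts reported above.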

Detection of Other Types of Adverse Events

The “holy grail” in computerized adverse event detection has been a tool to detect a large fraction of all adverse events, including not only the types of events mentioned in this report, but also other frequent adverse events such as surgical events, diagnostic failures, and complications of procedures. Such a tool could be used by hospitals for routine detection of adverse events on an ongoing basis and in real time. Preliminary studies suggest that techniques such as term searching and natural language processing in reviewing electronic information hold substantial promise for detecting a large number of diverse adverse events affecting inpatients.61 The tools would search discharge summaries, progress notes, and computerized sign-outs as well as other types of electronic data to look for signals that suggest the presence of an adverse event.

Conclusions

The current approach used by most organizations to detect adverse events—spontaneous reporting—is clearly insufficient. Computerized techniques for identifying adverse drug events and nosocomial infections are sufficiently developed for broad use. They are much more accurate than spontaneous reporting and more timely and cost-effective than manual chart review. Research will probably allow development of techniques that use tools such as natural language processing to mine electronic medical records for other types of adverse events. We believe that a key benefit of electronic medical records will be that they can be used to detect the frequency of adverse events and to develop methods to reduce the number of such events.

Acknowledgments

The authors thank Adam Wilcox, PhD, for his role in acquiring the Columbia-Presbyterian Medical Center falls data. This work was supported in part by grants from the Agency for Healthcare Research and Quality (U18 HS11046-03), Rockville, MD, and from the National Library of Medicine (R01 LM06910), Bethesda, MD.

References

- 1.Institute of Medicine. To err is human. Building a safer health system. Washington, DC, National Academy Press, 1999.

- 2.Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC, National Academy Press, 2001.

- 3.Brennan TA, Leape LL, Laird N, Hebert L, et al. Incidence of adverse events and negligence in hospitalized patients: Results from the Harvard Medical Practice Study I. N Engl J Med 1991; 324:370–376.

- 4.Leape LL, Brennan TA, Laird NM, Lawthers AG, et al. The nature of adverse events in hospitalized patients: Results from the Harvard Medical Practice Study II. N Engl J Med 1991; 324:377–384.

- 5.Seidl LG, Thornton G, Smith JW, et al. Studies on the epidemiology of adverse drug reactions: III. Reactions in patients on a general medical service. Bull Johns Hopkins Hosp 1966; 119:299–315.

- 6.California Medical Association. Report of the Medical Insurance Feasibility Study. Sacramento, CA, California Medical Association, 1977.

- 7.Cullen DJ, Sweitzer BJ, Bates DW, Burdick E, et al. Preventable adverse drug events in hospitalized patients: A comparative study of intensive care units and general care units. Crit Care Med 1997; 25:1289–1297.

- 8.Bennett BS, Lipman AG. Comparative study of prospective surveillance and voluntary reporting in determining the incidence of adverse drug reactions. Am J Hosp Pharm 1977; 34:931–936.

- 9.Edlavitch SA. Adverse drug event reporting. Improving the low US reporting rates. Arch Intern Med 1988; 148:1499–1503.

- 10.Brennan TA, Localio AR, Leape LL, Laird NM, et al. Identification of adverse events occurring during hospitalization. A cross-sectional study of litigation, quality assurance, and medical records at two teaching hospitals. Ann Intern Med 1990; 112:221–226.

- 11.Jha AK, Kuperman GJ, Teich JM, Leape L, et al. Identifying adverse drug events: development of a computer-based monitor and comparison with chart review and stimulated voluntary report. J Am Med Inform Assoc 1998; 5:305–314.

- 12.Iezzoni LI, Foley SM, Heeren T, Daley J, et al. A method for screening the quality of hospital care using administrative data: preliminary validation results. Qual Rev Bull 1992; 18(11):361–371.

- 13.Strom BL, Carson JL, Halpern AC, Schinnar R, et al. Using a claims database to investigate drug-induced Stevens-Johnson syndrome. Statist Med 1991; 10:565–576.

- 14.Jollis JG, Ancukiewicz M, DeLong ER, Pryor DB, et al. Discordance of databases designed for claims payment versus clinical information systems. Implications for outcomes research. Ann Intern Med 1993; 119:844–850.

- 15.Campbell JR, Payne TH. A comparison of four schemes for codification of problem lists. Proceedings of the Annual Symposium on Computer Applications in Medical Care 1994; 201–205.

- 16.Iezzoni LI. Assessing quality using administrative data. Ann Intern Med 1997; 127(8 Pt 2):666–674.

- 17.Honigman B, Lee J, Rothschild J, Light P, et al. Using computerized data to identify adverse drug events in outpatients. J Am Med Inform Assoc 2001; 8:254–266.

- 18.Tse CS, Madura AJ. An adverse drug reaction reporting program in a community hospital. Qual Rev Bull 1988; 14(11):336–340.

- 19.Evans RS, Pestotnik SL, Classen DC, Bass SB, et al. Development of a computerized adverse drug event monitor. Proc Ann Symp Comp Appl Med Care 1991;23–27.

- 20.Classen DC, Pestotnik SL, Evans RS, Burke JP. Computerized surveillance of adverse drug events in hospital patients. JAMA 1991; 266:2847–2851.

- 21.Landefeld CS, Anderson PA. Guideline-based consultation to prevent anticoagulant-related bleeding. A randomized, controlled trial in a teaching hospital. Ann Intern Med 1992; 116:829–837.

- 22.Kossovsky MP, Sarasin FP, Bolla F, Gaspoz JM, Borst F. Distinction between planned and unplanned readmissions following discharge from a Department of Internal Medicine. Meth Inform Med 1999; 38:140–143.

- 23.Roos LL, Cageorge SM, Austen E, Lohr KN. Using computers to identify complications after surgery. Am J Public Health 1985; 75:1288–1295.

- 24.Roos LL, Stranc L, James RC, Li J. Complications, comorbidities, and mortality: improving classification and prediction. Health Serv Res 1997; 32:229–238.

- 25.Goldman JA, Chu WW, Parker DS, Goldman RM. Term domain distribution analysis: A data mining tool for text databases. Meth Inform Med 1999; 38(2):96–101.

- 26.Rind DM, Yeh J, Safran C. Using an electronic medical record to perform clinical research on mitral valve prolapse and panic/anxiety disorder. Proc Annu Symp Comput Appl Med Care 1995;961.

- 27.Giuse DA, Mickish A. Increasing the availability of the computerized patient record. Proceedings of the AMIA Annual Fall Symposium, 1996;633–637.

- 28.Spyns P. Natural language processing in medicine: an overview. Meth Inform Med 1996; 35(4-5):285–301.

- 29.Friedman C, Hripcsak G. Natural language processing and its future in medicine. Acad Med 1999; 74(8):890–895.

- 30.Hripcsak G, Friedman C, Alderson PO, DuMouchel W, et al. Unlocking clinical data from narrative reports: A study of natural language processing. Ann Intern Med 1995; 122(9):681–688.

- 31.Hripcsak G, Kuperman GJ, Friedman C. Extracting findings from narrative reports: software transferability and sources of physician disagreement. Meth Inform Med 1998; 37(1):1–7.

- 32.Fiszman M, Chapman WW, Aronsky D, Evans RS, Haug PJ. Automatic detection of acute bacterial pneumonia from chest x-ray reports. J Am Med Inform Assoc 2000; 7:593–604.

- 33.Baud RH, Rassinoux AM, Scherrer JR. Natural language processing and semantical representation of medical texts. Meth Inform Med 1992; 31:117–125.

- 34.Haug PJ, Ranum DL, Frederick PR. Computerized extraction of coded findings from free-text radiologic reports. Work in progress. Radiology 1990; 174(2):543–548.

- 35.Friedman C, Hripcsak G, DuMouchel W, Johnson SB, Clayton PD. Natural language processing in an operational clinical information system. Nat Lang Engineer 1995; 1:83–108.

- 36.Zweigenbaum P, Bouaud J, Bachimont B, Charlet J, Boisvieux JF. Evaluating a normalized conceptual representation produced from natural language patient discharge summaries. Proceedings of the AMIA Annual Fall Symposium, 1997; 590–594.

- 37.Sager N, Friedman C, Lyman M, et al. Medical Language Processing: Computer Management of Narrative Data. Reading, MA: Addison-Wesley, 1987.

- 38.Lin R, Lenert LA, Middleton B, Shiffman S. A free-text processing system to capture physical findings: canonical phrase identification system (CAPIS). In Clayton PD (ed): Proceedings of 15th Annual SCAMC, 1992: 843–847.

- 39.Zingmond D, Lenert LA. Monitoring free-text data using medical language processing. Comput Biomed Res 1993; 26:467–481.

- 40.Moore GW, Berman JJ. Automatic SNOMED coding. Proceedings of the Annual Symposium on Computer Applications in Medical Care 1994;225–229.

- 41.Gabrieli ER. Computer-assisted assessment of patient care in the hospital. J Med Syst 1988; 12(3):135–146.

- 42.Fiszman M, Haug PJ. Using medical language processing to support real-time evaluation of pneumonia guidelines. Proceedings of the AMIA Annual Symposium, 2000;235–239.

- 43.Morris WC, Heinze DT, Warner Jr HR, Primack A, et al. Assessing the accuracy of an automated coding system in emergency medicine. Proceedings of the AMIA Annual Symposium, 2000;595–599.

- 44.Aronow DB, Fangfang F, Croft WB. Ad hoc classification of radiology reports. J Am Med Inform Assoc 1999; 6:393–411.

- 45.Knirsch CA, Jain NL, Pablos-Mendez A, Friedman C, Hripcsak G. Respiratory isolation of tuberculosis patients using clinical guidelines and an automated clinical decision support system. Infect Contr Hosp Epidemiol 1998; 19(2):94–100.

- 46.Friedman C, Knirsch C, Shagina L, Hripcsak G. Automating a severity score guideline for community-acquired pneumonia employing medical language processing of discharge summaries. Proceedings of the AMIA Annual Symposium, 1999;256–260.

- 47.Lenert LA, Tovar M. Automated linkage of free-text descriptions of patients with a practice guideline. Proceedings of the Annual Symposium on Computer Applications in Medical Care, 1993;274–278.

- 48.Hersh WR, Leen TK, Rehfuss PS, Malveau S. Automatic prediction of trauma registry procedure codes from emergency room dictations. Medinfo 1998; 9(Pt 1):665–669.

- 49.Delamarre D, Burgun A, Seka LP, Le Beux P. Automated coding of patient discharge summaries using conceptual graphs. Meth Inform Med 1995; 34:345–351.

- 50.Spyns P, Nhan NT, Baert E, Sager N, De Moor G. Medical language processing applied to extract clinical information from Dutch medical documents. Medinfo 1998; 9(Pt 1):685–689.

- 51.Hripcsak G, Knirsch CA, Jain NL, Pablos-Mendez A. Automated tuberculosis detection. J Am Med Inform Assoc 1997; 4:376–381.

- 52.Jaeschke R, Guyatt G, Sackett DL. Users’ guides to the medical literature. III. How to use an article about a diagnostic test. A. Are the results of the study valid? Evidence-Based Medicine Working Group. JAMA 1994; 271:389–391.

- 53.Joint Commission on Accreditation of Healthcare Organizations. Accreditation Manual for Hospitals. Chicago, 1981.

- 54.Centers for Disease Control. Outline of Surveillance and Control of Nosocomial Infections. Atlanta, 1979.

- 55.Evans RS, Larsen RA, Burke JP, Gardner RM, et al. Computer surveillance of hospital-acquired infections and antibiotic use. JAMA 1986; 256:1007–1011.

- 56.Kahn MG, Steib SA, Fraser VJ, Dunagan WC. An expert system for culture-based infection control surveillance. Proc Annu Symp Comput Appl Med Care 1993;171–175.

- 57.Koch KE. Use of standardized screening procedures to identify adverse drug reactions. Am J Hosp Pharm 1990; 47:1314–1320.

- 58.Senst BL, Achusim LE, Genest RP, Cosentino LA, et al. A practical approach to determining adverse drug event frequency and costs. Am J Health-Syst Pharm 2001; 58:1126–1132.

- 59.Tinetti ME, Baker DI, McAvay G, Claus EB, et al. A multifactorial intervention to reduce the risk of falling among elderly people living in the community. N Engl J Med 1994; 331:821–827.

- 60.Morgan VR, Mathison JH, Rice JC, Clemmer DI. Hospital falls: A persistent problem. AJPH 1985; 75:775–777.

- 61.Murff HJ, Forster AJ, Peterson JF, Fiskio JM, et al. Electronically screening discharge summaries for adverse medical events. J Gen Intern Med 2001; 17(Supp 1):A205.

- 62.Rocha BH, Christenson JC, Pavia A, Evans RS, Gardner RM. Computerized detection of nosocomial infections in newborns. Proceedings of the Annual Symposium on Computer Applications in Medical Care 1994;684–688.

- 63.Dessau RB, Steenberg P. Computerized surveillance in clinical microbiology with time series analysis. J Clin Microbiol 1993; 31(4):857–860.

- 64.Pittet D, Safran E, Harbarth S, Borst F, et al. Automatic alerts for methicillin-resistant Staphylococcus aureus surveillance and control: role of a hospital information system. Infect Contr Hosp Epidemiol 1996; 17(8):496–502.

- 65.Hirschhorn LR, Currier JS, Platt R. Electronic surveillance of antibiotic exposure and coded discharge diagnoses as indicators of postoperative infection and other quality assurance measures. Infect Contr Hosp Epidemiol 1993; 14:21–28.

- 66.Brown S, Black K, Mrochek S, Wood A, et al. RADARx: Recognizing, assessing, and documenting adverse Rx events. Proceedings of the AMIA Annual Symposium, 2000;101–105.

- 67.Dalton-Bunnow MF, Halvachs FJ. Computer-assisted use of tracer antidote drugs to increase detection of adverse drug reactions: a retrospective and concurrent trial. Hosp Pharm 1993; 28:746–749.

- 68.Raschke RA, Gollihare B, Wunderlich TA, Guidry JR, et al. A computer alert system to prevent injury from adverse drug events: Development and evaluation in a community teaching hospital. JAMA 1998; 280:1317–1320.

- 69.Whipple JK, Quebbeman EJ, Lewis KS, Gaughan LM, et al. Identification of patient-controlled analgesia overdoses in hospitalized patients: A computerized method of monitoring adverse events. Ann Pharmacother 1994; 28:655–658.

- 70.Bagheri H, Michel F, Lapeyre-Mestre M, Lagier E, et al. Detection and incidence of drug-induced liver injuries in hospital: A prospective analysis from laboratory signals. Br J Clin Pharmacol 2000; 50:479–484.

- 71.Dormann H, Muth-Selbach U, Krebs S, Criegee-Rieck M, et al. Incidence and costs of adverse drug reactions during hospitalisation: Computerised monitoring versus stimulated spontaneous reporting. Drug Saf 2000; 22(2):161–168.

- 72.Levy M, Azaz-Livshits T, Sadan B, Shalit M, et al. Computerized surveillance of adverse drug reactions in hospital: implementation. Eur J Clin Pharmacol 1999; 54:887–892.

- 73.Payne TH, Savarino J, Marshall R, Hoey CT. Use of a clinical event monitor to prevent and detect medication errors. Proceedings of the AMIA Annual Symposium, 2000;640–644.

- 74.Evans RS, Pestotnik SL, Classen DC, Horn SD, et al. Preventing adverse drug events in hospitalized patients. Ann Pharmacother 1994; 28:523–527.

- 75.Weingart SN, Iezzoni LI, Davis RB, Palmer RH, et al. Use of administrative data to find substandard care: validation of the complications screening program. Med Care 2000; 38:796–806.

- 76.Bates DW, O’Neil AC, Petersen LA, Lee TH, Brennan TA. Evaluation of screening criteria for adverse events in medical patients. Med Care 1995; 33:452–462.

- 77.Lau LM, Warner HR. Performance of a diagnostic system (Iliad) as a tool for quality assurance. Comput Biomed Res 1992; 25:314–323.

- 78.Andrus CH, Daly JL. Evaluation of surgical services in a large university-affiliated VA hospital: Use of an in-house-generated quality assurance data base. South Med J 1991; 84:1447–1450.

- 79.Iezzoni LI, Daley J, Heeren T, Foley SM, et al. Identifying complications of care using administrative data. Med Care 1994; 32:700–715.

- 80.Benson M, Junger A, Michel A, Sciuk G, et al. Comparison of manual and automated documentation of adverse events with an Anesthesia Information Management System (AIMS). Stud Health Technol Inform 2000; 77:925–929.