Abstract

From a twenty-first century partnership between bioethics and neuroscience, the modern field of neuroethics is emerging, and technologies enabling functional neuroimaging with unprecedented sensitivity have brought new ethical, social and legal issues to the forefront. Some issues, akin to those surrounding modern genetics, raise critical questions regarding prediction of disease, privacy and identity. However, with new and still-evolving insights into our neurobiology and previously unquantifiable features of profoundly personal behaviors such as social attitude, value and moral agency, the difficulty of carefully and properly interpreting the relationship between brain findings and our own self-concept is unprecedented. Therefore, while the ethics of genetics provides a legitimate starting point (even a backbone) for tackling ethical issues in neuroimaging, it does not suffice. Drawing on recent neuroimaging findings and their plausible real-world applications, we argue that interpretation of neuroimaging data is a key epistemological and ethical challenge. This challenge is two-fold. First, at the scientific level, the sheer complexity of neuroscience research poses challenges for integration of knowledge and meaningful interpretation of data. Second, at the social and cultural level, we find that interpretations of imaging studies are bound by cultural and anthropological frameworks. In particular, the introduction of concepts of self and personhood in neuroimaging illustrates the interaction of interpretation levels and is a major reason why ethical reflection on genetics will only partially help settle neuroethical issues. Indeed, ethical interpretation of such findings will necessitate not only traditional bioethical input but also a wider perspective on the construction of scientific knowledge.

Keywords: neuroethics, neuroimaging, neuroscience and ethics, bioethics, genetics

INTRODUCTION

What a sensation stethoscopy caused! Soon we will have reached the point where every barber uses it; when he is shaving you, he will ask: ‘Would you care to be stethoscoped, sir?’ Then someone else will invent an instrument for listening to the pulses of the brain. That will make a tremendous stir, until in fifty years' time every barber can do it. Then, when one has had a haircut and shave and been stethoscoped (for by now it will be quite common), the barber will ask, ‘Perhaps, sir, you would like me to listen to your brain-pulses?’

From a twenty-first century partnership between bioethics and neuroscience, modern neuroethics has emerged. While neuroethical discussion and debate about psychological states and physiological processes date back to the ancient philosophers, advanced capabilities for understanding and monitoring human thought and behavior enabled by modern neurotechnologies have brought new ethical, social and legal issues to the forefront. They draw on and extend anatomo-clinical approaches to cerebral localization and functional specialization that began in the 16th and 17th centuries, after a hiatus of more than two millennia from the days of Aristotle and Hippocrates (300 and 400 BCE; Marshall and Fink 2003). Some issues, akin to those surrounding modern genetics, raise critical questions regarding prediction of disease, privacy and identity. However, with new and still-evolving insights into our neurobiology and previously unquantifiable features of profoundly personal behaviors such as social attitude, value and moral agency, the difficulty of carefully and properly interpreting the relationship between brain findings and our own self-concept is unprecedented. Ways of tackling practical questions in neuroimaging will depend on how we deal with the fundamental one of interpretation, which is the principal reason that traditional bioethics analysis, as laid out in the ethics of genetics, will not suffice as a guide.

Consider, for example, the following sampling of article titles appearing in the scientific literature over the past two to three years: “The Good, the Bad and the Anterior Cingulate” (Miller 2002), “Morals and the Human Brain: A Working Model” (Moll et al. 2003), “Strategizing in the Brain” (Camerer 2003), “The Medial Frontal Cortex and the Rapid Processing of Monetary Gains and Losses” (Gehring and Willoughby 2002) or “The Neural Basis of Economic Decision-Making in the Ultimatum Game” (Sanfey et al. 2003), as well as those appearing in popular print media, such as “How the Mind Reads Other Minds” (Zimmer 2003), “Tapping the Mind” (Wickelgren 2003), “Why We're So Nice: We're Wired to Cooperate” (Angier 2002), and “There's a Sucker Born in Every Medial Prefrontal Cortex” (Thompson 2003). From these, we observe that quantitative profiles of brain function (“thought maps”), once restricted to the domain of medical research and clinical neuropsychiatry, may now have a natural relevance in our approach to daily life. This trend conceivably introduces possibilities, or at least desires, for using brain maps to assess the truthfulness of statements and memory in law, profiling prospective employees for professional and interpersonal skills, evaluating students for learning potential in the classroom, selecting investment managers to handle our financial portfolios, and even choosing lifetime partners based on compatible brain profiles for personality, interests and desires. Further, these trends bring to the foreground what would appear to be a strict epistemological challenge at the core of neuroethics: proper interpretation of neuroimaging data. The challenge will prove to be two-fold. First, at the scientific level, the sheer complexity of neuroscience research poses challenges for integration of knowledge and meaningful interpretation of data.
Second, at the social and cultural level, we find that social interpretations of imaging studies are bound by cultural and anthropological frameworks. In particular, the introduction of concepts of self and personhood in neuroimaging illustrates the interaction of interpretation levels. Addressing the challenge will involve creative human imagination and conscious awareness of scientific and cultural presuppositions.

This paper, therefore, explores the evolution of functional brain imaging capabilities that have led to bold new findings and claims about behavior in health and disease. We draw on recent neuroimaging research and its proposed applications. Taking a closer look at how genetics has been analyzed from an ethical standpoint, we compare issues raised in genetics with issues in functional neuroimaging using functional magnetic resonance imaging (fMRI) as our model. Finally, we discuss interpretation of neuroimaging data as a key epistemological and ethical challenge, inescapable for neuroethics and intertwined with the history of neuroscience.

FUNCTIONAL NEUROIMAGING

From generations of work by neurotechnologically curious and skilled scientists and engineers, powerful functional neuroimaging tools have been introduced to the modern era. The most prominent tools to date, electroencephalography (EEG), magnetoencephalography (MEG), positron emission tomography (PET), single photon emission computed tomography (SPECT) and functional magnetic resonance imaging (fMRI), have provided a continuing stream of information about human behavior.

One of the oldest approaches dates back to 1929, when neuropsychiatrist Hans Berger announced the invention of the electroencephalogram and showed that the relative signal strength and position of electrical activity generated at the level of the cerebral cortex could be measured using placement of electrodes at the scalp (Karbowski 1990). With its exquisite temporal resolution, the stimulus-evoked EEG response, or “event-related potential” (ERP), was the first tool to unveil fundamental knowledge about the working of the human brain in near real time. Over time, other imaging modalities have come to achieve this goal by capitalizing on brain signals such as extracranial electromagnetic activity (MEG), metabolic activity and blood flow (PET and SPECT), and regional blood oxygenation (fMRI) that yield different and complex measurements of functional activity (for a very readable anthropologic perspective on PET specifically, see Dumit 2004). By and large, all utilize comparison or subtraction methods between two controlled conditions, heavy statistical processing, and computer-intensive data reconstructions to produce the colorful maps with which we have become familiar. All have roots in the diagnosis of, and intervention in, the wide range of psychiatric and neurological diseases known to us, including head trauma, dementia, mood disorders, stroke, cancer, seizures, and the impact of drug abuse, to mention but a few.

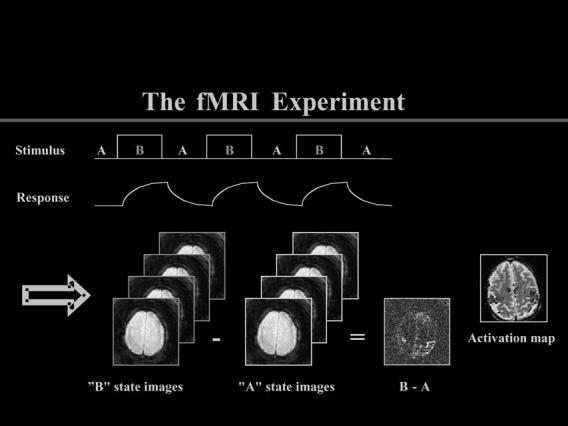

The different techniques each have relative advantages and disadvantages; these are summarized briefly in Table 1 to provide reference for this discussion. Given their technical trade-offs, fMRI stands out as likely to have the greatest enduring impact on our society outside the realm of academia and medicine. It is the widespread availability of MR scanners today and the noninvasiveness of the imaging approach enabled by MR that have set fMRI apart from other neuroimaging tools and made it a model for neuroethical discussions. The activation maps produced by fMRI reflect indirect effects of neural activity on local blood flow under constrained experimental conditions. Like PET and SPECT paradigms, a typical fMRI experiment utilizes a stimulus designed for acquiring the relative difference in brain activity between an experimental and a control (baseline) task, as illustrated in Figure 1. The surplus of oxygenated blood recruited to relatively active brain regions produces the effects measured by MR.

Table 1.

Characteristics and Trade-offs of Major Functional Neuroimaging Technologies

| Modality | Measurement | Technology | Strengths | Limitations | Notes |
|---|---|---|---|---|---|
| EEG | Electrical activity measured at scalp. | Electroencephalogram; 8 to >200 scalp electrodes. | Noninvasive, well-tolerated, low cost, sub-second temporal resolution. | Limited spatial resolution compared to other techniques. | Tens of thousands of EEG/ERP findings reported in the literature. |
| MEG | Magnetic fields measured at scalp, computed from source-localized electric current data. | Superconducting quantum interference device (SQUID); ∼80–150 sensors surrounding the head. | Noninvasive, well-tolerated, good temporal resolution. | Cost, extremely limited market and availability. | |
| PET | Regional absorption of radioactive contrast agents yielding measures of metabolic activity and blood flow. | Ring-shaped PET scanner; several hundred radiation detectors surrounding the head. | Highly evolved for staging of cancers, measuring cognitive function and evolving to a reimbursable imaging tool for predicting disease involving neurocognition such as Alzheimer's Disease. | Requires injection or inhalation of contrast agent such as glucose or oxygen; lag time of up to 30 minutes between stimulation and data acquisition; limited availability (fewer than 100 PET scanners exist in US today) given short half-life of isotopes and few locations with cyclotrons to produce them; cost. | |
| SPECT | Like PET, another nuclear medicine technique that relies on regional absorption of radioactive contrast to yield measures of metabolic activity and blood flow. | Multidetector or rotating gamma camera systems. Data can be reconstructed at any angle, including the axial, coronal and sagittal planes, or at the same angle of imaging obtained with CT or MRI, to facilitate image comparisons. | Documented uses mapping psychiatric and neurological disease including head trauma, dementia, atypical or unresponsive mood disorders, strokes, seizures, the impact of drug abuse on brain function, and atypical or unresponsive aggressive behavior. | Requires injection of contrast agent through intravenous line; cost. | Currently available in two states (CA and CO) for purchase without physician referral; emphasis is on ADHD and Alzheimer's Disease (approx. out-of-pocket cost: $3,000 per study). |
| fMRI | Surplus of oxygenated blood recruited to regionally activated brain. | MRI scanner at 1 Tesla to 7 Tesla and higher; 1.5T most common because of its wide clinical availability. | Noninvasive, study repeatability, no known risks. New applications of MR in imaging diffusion tensor maps (DTI), namely the microstructural orientation of white matter fibers, have recently been shown to correlate well with IQ, reading ability, personality and other trait measures (Klingberg et al. 2000). | Cost of equipment and physics expertise to run and maintain systems. | Rapid proliferation of research studies using fMRI alone or in combination with other modalities, growing from 15 in 1991 (13 journals) to 2,224 papers in 2003 (335 journals), representing an average increase of 56% per year. |

Note: Combined modality systems such as EEG and fMRI are becoming increasingly common. PET and SPECT are forerunners to frontier technology in molecular imaging.
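
As a rough arithmetic check on the fMRI publication growth quoted in Table 1, the short sketch below computes the constant compound rate implied by the two endpoints alone. The function name is illustrative, and the yearly counts between 1991 and 2003 are not given here, so this is only an endpoint-based estimate. It comes out at roughly 52% per year, in the same range as the reported 56% average, which was presumably derived from year-over-year changes.

```python
def compound_annual_growth(start_count, end_count, years):
    """Constant yearly growth rate that turns start_count into end_count."""
    return (end_count / start_count) ** (1.0 / years) - 1.0


# Endpoints quoted in Table 1 for papers using fMRI.
papers_1991 = 15
papers_2003 = 2224

rate = compound_annual_growth(papers_1991, papers_2003, 2003 - 1991)
print(f"Implied compound growth: {rate:.1%} per year")
```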

Figure 1.

Experimental (“B” state) images are subtracted from control (“A” state) images to achieve regional activation maps with fMRI. (Courtesy of Gary H. Glover, Lucas MRS/MRI Center, Stanford University)
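
The subtraction logic behind such activation maps can be illustrated with a toy sketch. It assumes synthetic voxel intensities, a hypothetical function name `activation_map`, and an arbitrary threshold; the difference is taken here as experimental minus control. Real fMRI analysis adds steps such as motion correction, hemodynamic modeling and correction for multiple comparisons, all of which are omitted.

```python
from math import sqrt
from statistics import mean, stdev


def activation_map(control, experimental, t_threshold=3.0):
    """Return one (difference, t, active) tuple per voxel.

    control / experimental: lists of scans, each scan a list of voxel
    intensities of equal length. All names are illustrative only.
    """
    n_voxels = len(control[0])
    results = []
    for v in range(n_voxels):
        a = [scan[v] for scan in control]        # "A" (control) samples
        b = [scan[v] for scan in experimental]   # "B" (experimental) samples
        diff = mean(b) - mean(a)                 # relative signal change
        # Welch's t statistic: difference scaled by its standard error.
        se = sqrt(stdev(a) ** 2 / len(a) + stdev(b) ** 2 / len(b))
        t = diff / se if se > 0 else 0.0
        results.append((diff, t, t > t_threshold))
    return results


# Four synthetic scans per condition, three voxels per scan.
control_scans = [
    [100.0, 100.0, 100.0],
    [101.0, 99.0, 102.0],
    [99.0, 101.0, 98.0],
    [100.0, 100.0, 100.0],
]
experimental_scans = [
    [100.0, 103.0, 101.0],
    [101.0, 102.0, 99.0],
    [99.0, 104.0, 100.0],
    [100.0, 103.0, 100.0],
]

voxel_results = activation_map(control_scans, experimental_scans)
for index, (diff, t, active) in enumerate(voxel_results):
    print(index, round(diff, 2), round(t, 2), active)
```

In this synthetic data only the second voxel, whose signal rises in the experimental condition, passes the threshold. The resulting map depends on the choice of control task and threshold, not only on the underlying signal.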

Applications of Functional Neuroimaging in Clinical Medicine, Cognitive Science and Law

Beyond the use of fMRI in mapping of salient cortical areas prior to surgical intervention for epilepsy, tumors or arteriovenous malformations, other active efforts to make the technology relevant in the clinical setting have focused on Alzheimer's Disease (AD), mental illness in adults and pediatric pathology such as attention deficit hyperactivity disorder (ADHD; Illes and Kirschen 2003). Applications of fetal MRI have also shown great promise in providing better diagnosis of structural central nervous system anomalies, and functional studies of fetal brain blood flow are not lagging far behind. At the opposite end of the life spectrum, first approaches using fMRI to determine levels of consciousness in patients in minimally conscious or vegetative states have also been attempted (e.g., Schiff et al. 2005; Giacino 2003).

Over the past ten years of fMRI development and expanding boundaries of cognitive neuroscience, the innovation has been applied to gain new non-health-related knowledge about human motivation, reasoning, and social attitudes. In a comprehensive literature review, we demonstrated a steady expansion of fMRI studies, alone or in combination with other imaging modalities, with evident social and policy implications, including studies of lying and deception, human cooperation and competition, brain differences in violent people, genetic influences, and variability in patterns of brain development (Illes et al. 2003). In one intriguing but unpublished study, Beauregard et al. (reviewed in Curran 2003) used a combination of EEG, fMRI and PET to probe neural underpinnings of religious experience. They are focusing on the phenomenon known as unio mystica, a joyous sense of union with God reportedly experienced by a group of cloistered Carmelite nuns in Montreal, Canada. Discussion of the potential meaning and practical uses of such deeply personal neuroprofiles is ripe for bioethical consideration.

Outside the arenas of medicine and cognitive science, the legal arena offers an obvious venue for attempting to translate neuroimaging into meaningful, real-world use. As Hank Greely (2002, 5) wrote:

Neuroscience may provide answers to some of the ‘oldest philosophical questions, shedding light, for example, on existence, limits, and meaning of free will.’ It may also provide new ways to distinguish truth from lies or real memories from false ones. This ability to predict behavior with the help of neuroscience could have important consequences for the judicial system as well as for society as a whole.

Greely provides an extensive review of the legal issues in his chapter “Prediction, Litigation, Privacy, and Property: Some Possible Legal and Social Implications of Advances in Neuroscience” (Greely 2004). A few cases suitable to elucidating where ethical models for brain imaging may intersect with or diverge from genetics are explored here.

After a 1985 Supreme Court holding in Ake v. Oklahoma imposed a constitutional requirement for states to provide psychiatric assistance in a criminal defense when the question of sanity is raised, criminal defendants began to argue that ‘psychiatric assistance’ should include a complete neurological evaluation including scans like PET or MRI. PET studies have shown that committed murderers, for example, as a group, have poor functioning in the prefrontal cortex, a locus of impulse control (Raine et al. 1994). In some cases, PET images have been used to argue that a defendant was biologically predisposed to committing a crime and, therefore, should be spared a conviction or death sentence. In at least one court case (People v. Jones), a homicide conviction was reversed because the state failed to provide brain scans.1

In a relatively new application of EEG, EEG-derived “brain fingerprinting” has been promoted as a tool for determining whether an individual is in possession of certain knowledge of a crime (Farwell and Smith 2001). It is the possession, or lack of possession, of the relevant facts about a crime that brain fingerprinting attempts to quantify through measures of brain-wave responses to relevant words or pictures presented at rapid rates on a computer screen. When the brain recognizes significant information, such as crime scene details, it responds with a “memory and encoding related multifaceted electroencephalographic response.” Unlike polygraph testing, which measures an individual's fear of getting caught in a lie by tracking relevant physiological markers, brain fingerprinting ostensibly measures brain waves emitted when information stored in the brain is recognized.2

New applications of fMRI that bridge cognitive science and law also have the potential to change approaches to truth verification and lie detection. Langleben et al. (2001), for example, used fMRI to study neural patterns associated with deception. In their landmark experiment, volunteers were instructed to either truthfully or falsely confirm or deny having a playing card in their possession. When subjects gave truthful answers, the fMRI showed increased activity in visual and motor cortex. When they were deliberately deceptive, additional activations were measured in areas including the anterior cingulate cortex, to which monitoring of errors and attention has been attributed. Langleben et al. concluded that “essentially, it took more mental energy to lie than to tell the truth” (Evans 2002). These results are consistent with those of Moll et al. (2003) and Heilman (1997) that implicate the temporo-polar cortex, insula, precuneus and their connections in an extended neural circuit that attributes conscious emotions and feelings, especially those with a social context, to perceptions and ideations (see also Adolphs et al. 1995; Damasio 1994; LeDoux 2003). In the future, therefore, we may not only be able to discern whether an individual is being deceptive, but also whether the deception was premeditated or not.3

PATHWAYS FROM GENETICS TO NEUROIMAGING SCIENCE

Ellen Wright Clayton (2003) provides a comprehensive review of the impact that advancements in genetics and molecular biology have had on society, and she argues for genomics as a complex phenomenon that presents specific challenges for clinicians and patients alike. Neuroscience is no less ethically complex, and neuroscientists, like geneticists and nuclear physicists even before them, are increasingly gaining awareness about the potential implications of their research at the bench, in medicine, and in the public domain (Mariani 2003).4 Drawing on some major ethical, legal and social (ELSI) variables, this section examines the extent to which the ethics of genomics can serve as a model for ethical analysis of neuroimaging.

Discrimination, Stigma

Given the growing recognition that health information is not entirely private, Clayton (2003) and others (e.g., Rothenberg and Terry 2002) have suggested that the most common fear about genetic information is its potential use in justifying denial of access to health insurance, employment, education and even financial loans to people with particular genetic characteristics or diagnoses. While these issues may not be the immediate ones for neuroimaging, as we have seen, little stands in the way of similar concerns arising about neuroprofiling with functional imaging, even as neuroimaging techniques continue to mature. While neuroscientists tease out artifacts masquerading as neural effects and develop analytic methods that provide more intuitive ways of interpreting the data than possible today, there already exists a healthy regard for the novelty and breadth of information that neuroimaging can deliver about human health, behavior and cognitive fitness. How will such technology be used advantageously to benefit people and society? Could it be used harmfully for ill-intentioned purposes? Will Canli's paradigms for imaging personality be adopted for triaging team players or weak decision-makers in the workplace (Canli and Amin 2002) or, in this post-Columbine era, at the door of our high schools to triage out students with a predisposition to unruly or violent behavior? Perhaps screening for good humor would be more acceptable (Canli et al. 2002; Mobbs et al. 2003).

It will be the moral obligation of bioethicists and neuroscientists alike to think proactively about the impact that such effects might have on people, from the point of view both of benefits such as self-knowledge (Weir et al. 1994) and personal choice, and of risks, especially for children and adolescents at critical stages in their personal and educational development (Savulescu 2001). By what means will anyone resist coercive uses of such technology if employment or educational opportunity is at stake? If the paradigms of Golby et al. (2001), Phelps et al. (2003), or Richeson et al. (2003) for studying race and social attitudes could be adopted for determining eligibility to become a police officer, a school principal, or even a national leader, would this be a legitimate allocation of public funds? Much mischief beyond discrimination and stigma may be created by over-interpretation of any such results (see also Editorial, “Scanning the Social Brain,” Nature Neuroscience, November 2003, 1239).

Privacy of Human Thought

Functional neuroimaging poses pivotal challenges to thought privacy. Should thought information have similar privacy status as genetic information? Probably not less, but perhaps more. No doubt, increased information about the neurobiology of how we think, and potentially why we think what we think, is likely to cause significant ethical dilemmas for clinicians and researchers, especially as measured thought patterns may vary as much with the hemodynamic properties of the relevant vasculature (D'Esposito et al. 2003) as with gender and day-to-day variations in mood and attention (Gur et al. 1975). Moreover, they are highly subject to variability in the culture and values of the people interpreting them (Beaulieu 2002; Dumit 2004). Watson (as cited in Mauron 2003, 245) has stated that the human genome is, at least in part, “what makes us.” The “brainome” (Kennedy 2003), then, touches more upon who we fundamentally “are”—gnarly territory, at best.

With a small leap of faith for real-world validity, the way in which these studies are edging toward biologic measurements of personhood is illustrated, for example, in a now-landmark trolley car study by Greene et al. (2001; see also Greene 2003). In this moral reasoning experiment, subjects were scanned while they made decisions about scenarios in which they could, for example, choose to save the lives of five people on a runaway trolley car by pulling a switch to send it on an adjacent track where one person stands (and who would not survive), or to push one of the people off the trolley and on to the track, thereby blocking the movement of the trolley and saving the remainder of the group. Other studies have required research participants to resolve statements of moral content (e.g., “The judge condemned the innocent man” or “The elderly are useless”) versus neutral content (“The painter used his hand as a paintbrush”; Moll et al. 2002). All such studies touch upon human thought processes that push the envelope of cognitive neuroscience into a domain of significant social concern in which privacy is a vital ingredient.

Genetic versus Neuro Determinism

Despite its probabilistic nature, genetic information tends to be viewed as a definitive form of health data. Individuals feel a sense of inevitability with regard to their genes, Clayton (2003) argues, as well as a sense of genetic determinism. Such determinism, or genomic essentialism (Mauron 2003), has become popular in our culture, especially in the way that results of behavioral genetics studies are communicated to the public. We have read reports about genes for violence, homosexuality, alcoholism and even one for language. The essentialist stance is strengthened by the fact that we have a tendency to believe that we are our brains. However, longstanding studies of developmental brain plasticity and new activation studies of reorganization after injury have amply demonstrated that any such reductionist view of complex phenotypes is incomplete without consideration of intervening external and cultural factors (Ward and Frackowiak 2004). Some have argued that the biological sciences, which deal with open systems, are ill-suited to the universal and deterministic laws that characterize physics and chemistry (Mayr 1998). Arguments that make neuroscience deterministic could well be flawed conceptually and empirically (Racine 2005). However, given the tendency to oversimplify complex genetic and brain data, discussion of meaning and practical use is a clear imperative.

Prediction of Disease, Public Health

Countless medical examples exist of symptomatic individuals who, with an inherited genetic defect for a given disorder, must carefully monitor their daily activities to ensure their own health and safety and that of others. But what of the asymptomatic individual who learns from a functional neuroimage of a predisposition to a disease of the central nervous system that ultimately affects cognitive performance and lifelong independence? What are the implications for third parties, as in the case of neurogenetic disorders, for which functional patterns may surface as sensitive predictors of disease? What is the new role of physicians in the entrepreneurial world of self-referred imaging services?

Work on imaging-based diagnosis holds enormous promise for providing new, quantitative evidence for otherwise qualitative diagnoses based on clinical findings; however, it also raises compelling questions about what cautions are needed as patients yearn for earlier and earlier diagnosis of diseases for which cures or even treatments do not yet exist. Will such data provide welcome new information or impose new burdens on families, physicians and allied health care professionals? Who will have access to this technology? How will physicians and patients incorporate these new types of data into their reasoning about treatment, compliance and life planning? Access to advanced technologies by the privileged only, whether for diagnosis, medical intervention or for a competitive neurocognitive edge, will only further upset an already delicate and hardly acceptable status quo.

Confidentiality and Responsibility

We are in an era of neuroinformatics in which sharing of genetic, brain, and other data is encouraged. In some cases of large federally sponsored research, brain data sharing is even required (Koslow and Hyman 2000). With only partial brain information now needed to identify research participants, and new imaging genomics studies (Hariri and Weinberger 2003) coupling genetic information with brain mapping (“genotyped cognition”; Hamann and Canli 2004), major new uncertainties exist about the safety and confidentiality of data stored in cyberspace. Other issues concern the protection of human subjects, including confidentiality and responsibility related to incidental findings. What if pathology is discovered unexpectedly in a shared data set? With whom does the burden of disclosure and care lie: the primary or the secondary laboratory? Beyond the laboratory, how shall commercial use of freely shared brain imaging data in the for-profit sector be defined? No doubt, countless other examples exist in research beyond these few.

As we have seen, our concept of legal responsibility may also be changed by neuroimaging. In the United Kingdom, cautions about the use of neuroimages such as PET in the courtroom have already been expressed. In 2002, for example, at a debate entitled “Neuroscience and the Law” hosted by the Royal Institution of Great Britain, forensic psychiatrist and criminal barrister Eastman argued that neuroimaging science is still too imprecise to make “an unequivocal connection between brain structure and behavior.” “Even if … psychopaths have physically different brains from other people, does it mean anything? Does an abnormal brain automatically mean abnormal behavior? Does it mean a loss of control sufficient to impact on legal responsibility? Do you abolish free will on the basis of an odd brain scan?” Even while neuroimaging cannot establish moral culpability (Kulynych 1997), the where, when, or how of a crime, or individual guilt (Committee to Review the Scientific Evidence on the Polygraph 2003), the constant stream of innovative scientific approaches aimed at deriving biologic correlates for behaviors committed in the past (Illes 2005) is unrelenting. As we seek to understand responsibility of others through their biology, it is incumbent upon us to contemplate, yet again, our own responsibilities in interpreting such information, and in protecting access and appropriate use.

Crossroads

In Table 2 we summarize areas where ELSI in genetics converge with and diverge from neuroimaging. These are the crossroads at which we can begin to transition our thinking about the ethics of making brain maps from our experience with genetics. As the discussion above and this table both show, similarities are striking and span the domains of both research and clinical ethics. They include profound practical benefits, including new knowledge about the human condition and knowledge that informs self-determination and life planning. They include negatives, such as the potential for personal and legal discrimination, inequities of access, risks to confidentiality, inaccuracies inherent to predictive testing of any nature and associated anxiety (Michie et al. 2002), and commercial use (Merz et al. 2002). Pressing issues unique to genetics but not to neuroimaging are not apparent. The reverse is noteworthy, however, inasmuch as a wide range of technical and subjective factors, including paradigmatic, physiologic and investigator biases in research, play into the interpretation of results, and the global potential for biologizing human experience reaches far beyond any previous window on individual traits.

Table 2.

Comparison of Ethical, Legal and Social Issues in Genetics and Functional Neuroimaging

| ELSI variables | Gene hunting, Gene testing | Functional neuroimaging |
|---|---|---|
| In practice: | | |
| Risk of discrimination, stigma, coercion | Yes | Not at present, but growing concern exists for the evolution of the technology and expanding use. |
| Risk to privacy | Yes | Yes |
| Distributive justice | Yes | Yes, once the technology moves into mainstream clinical medicine. |
| Diagnostic uses | Yes | Emerging |
| Prediction | Yes | Emerging |
| Commercial use | Yes | Emerging; some limited availability already exists in the direct-to-consumer marketplace. |
| In research: | | |
| Paradigmatic variables: Results subject to variability in test used | Potentially but not considered a significant risk. | Highly significant given variability in equipment, hypothesis-testing, stimulus design and approaches to data analysis. |
| Physiologic variables: Results subject to physiologic and day-to-day variations. | No | Highly significant given fluctuations, for example, in blood flow, mood, and gender-related physiology. |
| Investigator variables: Results subject to variability of interpretation. | No, but standards for testing are not widespread. | Highly significant, especially when interpretation of data interacts with individual social values and culture. |
| Global issues: | | |
| Biologization of personal thought. | Possibly in mental illness and neuro-degenerative disease. | Highly significant as complex thought becomes quantified and visualized on brain maps. |

INTERPRETATION AS A KEY NEUROETHICAL CHALLENGE

The idea that the genome is the “secular equivalent of the soul” has been legitimately criticized (Mauron 2001). New to neuroethics will be the need to tackle responsibly the inevitable and omnipresent working hypothesis (the “astonishing hypothesis,” to quote Crick 1997) that the mind is the brain. Responsible and careful interpretation of data will therefore become a crucial issue as we wrestle to untangle what we image from what we imagine. Here, genetics as a model is limited and bioethicists will have the greatest role in bringing critical thinking to the field. Fundamentally, the challenge is two-fold, as proper ethical interpretation is a crucial concern at both the scientific and the social level.

While fMRI today may surpass other neuroimaging techniques in its use for understanding human behaviors that may have practical relevance, we are witnessing a dynamic stream of new applications and new technical possibilities. As with genetic testing, models for minimizing harm that may result from false positives and from inappropriate attributions of cause and effect to otherwise correlative results are critical. Unlike genetic test results, brain maps can be readily portrayed as iconic proof of pathology to people at any level of literacy. Yet, as we have seen, the brain image represents unparalleled complexity: from the specialized medical equipment needed to acquire a scan, to the array of parameters used to elicit activations and the statistical thresholds set to draw out meaningful patterns, to the expertise required for the objective interpretation of the maps themselves. Moreover, an absence of standards of practice in the laboratory (in fact, innovation and creativity still define the state of the art in neuroimaging today) and in the medicolegal setting creates another layer of complexity for drawing conclusions about behavior, responsibility and cognitive well-being (Kulynych 1997; Nelkin and Tancredi 1989), one that will need to be penetrated with appropriately responsive ethical approaches. With dynamic images in hand, we may forget the epistemological limits of how the images were produced, including variability in research designs, statistical treatment of the data, and resolution. It is worth recalling that, in the past, various models of the brain have been proposed by great minds only to be seen later as mere imaginings of the brain's real functioning. Descartes used pneumatics as a paradigm to explain how the “animal spirits” were produced by the flow of blood from the heart to the brain (Changeux 1983). Later on, the eminent anatomist Franz Joseph Gall proposed phrenology to the courts for establishing facts and choosing appropriate sentences for convicted criminals (Lanteri-Laura 1996). In the twentieth century, Moniz's psychosurgery procedures certainly left behind an “unhappy legacy” (Gostin 1980).

Today, some scientists and philosophers urge that we adopt the computer metaphor, neural networks or other models to understand brain function. However different and in some sense far apart, these examples highlight the caution needed in the interpretation of brain findings and their intended applications. In an issue of the journal Brain and Cognition that represented a pioneering venture into ethical issues in neuroimaging (Illes 2002), the contributing authors already cited caution in interpretation as a common concern. These cautions have been reiterated by others (e.g., Gore et al. 2003), and alone justify the increasing attention to frontier neurotechnologies, their capabilities and limitations, and new ethical approaches for thinking about them (see also Blank 1999).

When links are made between neuroimaging findings and our self-concepts in particular, it is even clearer that the ethics of genetics can only partially help settle ethical issues. Genetics and genomics have provided fertile ground for many ethical reflections on human nature, but the relationship between the brain and the self is far more direct than the link between genes and personal identity (Mauron 2003). The locus for integrating behavior resides in the brain, even if discrete features are determined by our genes. Whether neurotechnology measures that behavior through imaging, or manipulates it through implants of neural tissue or devices, it will fundamentally alter the dynamic between personal identity, responsibility and free will in ways that genetics never has. Indeed, neurotechnologies as a whole are challenging our sense of personhood and providing new tools to society for judging it (Wolpe 2002).

Interpretations of neuroimaging studies are bound not only by scientific frameworks, but also by cultural and anthropological ones. Consider concepts such as “moral emotions” that are based on assumptions that some emotions are moral and others not. They illustrate the cultural aspect of the interpretation challenge, which is based on the fact that the self is defined in diverse ways. For example, central to Buddhism is the Doctrine of No-Soul, whereas in Hinduism, the self is a religious and metaphysical concept (Morris 1994). Even within Western traditions, which may appear to be monolithic, various beliefs have served as “sources of the self” (Taylor 1989). As Winslade and Rockwell (2002) wrote, “Humans are forever prone to make premature and presumptuous claims of new knowledge…. One may think that brain imagery will reveal mysteries of the human mind. But it may only help us gradually comprehend the organic, chemical and physiological features of the brain rather than provide the keys to unlock the secrets of human behavior and motivation.” Whatever the outcomes of imaging turn out to be, they will depend on scientific as well as cultural scrutiny of neuroimaging research.

In a study where neuroscientists have teamed up with Buddhist monks to understand the mind and test for insights gained by meditation (Global News Wire 2003), culturally-laden concepts such as ‘person’ and ‘emotions’ are being questioned by imaging. Some argue that consciousness and spirituality could be changed by such findings on the brain. Therefore, not only does culture penetrate neuroimaging; neuroimaging is increasingly penetrating non-scientific culture. This is why neuroethics needs to consider not only the ethics of neuroscience but also a neuroscience of ethics (Roskies 2002) and, we may add, reflection on their scientific and cultural implications.

Regardless of the functional neuroimaging technology du jour, we are left with lingering questions, about interpretation and otherwise, that any new ethical approach for brain imaging will have to address. Time, scholarly dedication and collaboration across the vested disciplines will help resolve them. Some of these questions, which will inevitably raise the bar and the challenges of interpretation, are:

Revisiting a classic dichotomy in the conduct of research, is there neuroimaging research that we can do (or will be able to do) but ought not to? If we were to accept that the biology of social processes studied within the constraints of the laboratory translates seamlessly to real-world validity, should the contents of our minds even be studied this way (Foster et al. 2003)? Who should decide and according to what scientific and cultural parameters?

What are the trade-offs between cautious research with public oversight and the potential for over-regulation in reaction to adverse or reckless events? Many lessons may be learned from genetics, but “reckless” will surely take on new meaning in discussions about the neurobiology of moral reasoning and social behavior.

How can the reductionist approach of neuroimaging to human behavior be made compatible with and complementary to approaches represented by philosophy, sociology and anthropology? How will applications based upon this approach interact with wider cultural perspectives on the self?

Will the large investment in neuroimaging be justified by new knowledge? Are there some forms of funding that should be eschewed because they may lead to methods for thought control or personal financial gain?

What new ethical challenges will neurotechnologies bring us in the future? What will the portability of near infra-red optical imaging offer? Shall transcranial magnetic stimulation be transferred from the medical arena for treating depression to the open market for boosting or fine-tuning cognition like caffeine or other over-the-counter stimulants? With advances in reporter probes (Kim 2003), what ethical approaches will be needed for managing new information and therapies brought forward from the coupling of molecular imaging, targeted either in the central nervous system or elsewhere, and gene therapy trials?

The answers to these questions will surely not be binary. As in the past, they will depend fundamentally on the individuals involved and the context in which they are confronted. Fresh thinking, especially about the relationship between the self and the brain, will have to be elaborated for these new types of brain data, as the layers of complexity of interpretation and the overall stakes are arguably far greater than ever before. Commenting on Aldous Huxley's Brave New World (1932), written approximately 100 years after Soren Kierkegaard's foresight on brain pulses, Pontecorvo wrote:

“The ethical issues raised by … feats of human engineering are qualitatively no different from those we shall have to face in the future. The difference will be quantitative: in scale and rate. Even so, the individual steps may still go on being so small that none of them singly will bring those issues forcibly to light: but the sum total is likely to be tremendous…” (as cited in Stevens 2000, pp. 81–82).

Pontecorvo was partly right: There is no doubt that the sum total is tremendous. He could never have predicted, however, the extent to which changing qualities would parallel changing quantities.

ADDRESSING THE CHALLENGE

This paper explored the evolution of functional brain imaging capabilities that have led to bold new findings and claims about behavior in health and disease. Recent neuroimaging findings and their proposed applications show that a great number of new ethical issues will be raised. ELSI variables have given us an invaluable starting point, but neuroethics will need to address issues of data interpretation in great depth at both the scientific and the cultural level. Neuroimaging illustrates this double challenge remarkably, since imaging technologies and methodologies are grounded in scientific assumptions. Meanwhile, imaging is an area where investigation of social behavior and selfhood is rapidly increasing and becoming a legitimate endeavor. Indeed, at the heart of imaging is an effort to make sense of an image in need of interpretation. Alongside the new concept of “imaging neuroethics,” others like “neuromarketing,” “neuroeconomics,” “neuroenablement” (Lynch 2004), “neurotheology” and even “neurocorrection” (Farah et al. 2004) have been spawned. All raise concerns about scientifically-warranted and culturally-sensitive interpretation and application.

Given the many views about mind and brain, neuroethics will have to foster discussions among neuroscientists whose methods may vary and whose interpretations of results may differ. These discussions will have to extend to include meaningful dialogue with scholars in the humanities about concepts like morality, moral judgments and moral emotions, concepts in need of critical appraisal before we can seriously investigate their neural correlates. Open dialogue with the public is no less necessary given that different cultural and religious perspectives subject findings to different interpretations and ethical boundaries. Responsible dissemination of information through the media and public education are also essential in closing the gap between scientists and concerned citizens, especially as the complexity and abstractness of results increase.

Interpretation necessitates creative human imagination and conscious awareness of scientific and cultural presuppositions. Hence, the new generation of neuroethicists must be committed to openly examining the epistemological limits of imagery (Racine and Illes 2004), interdisciplinary appraisal, and public perspectives on these issues. Bioethicists will continue to bring ethical knowledge to the discussion and to identify and clarify moral quandaries; bioethicists, however, will also have to work as facilitators of a broader dialogue where different perspectives can meet and contribute to a deeper understanding of the issues. Therefore, while in the past technology and ethics may have leapfrogged each other, in this new era, bioethicists and neuroscientists will be well served by working gracefully together to understand the power of a visual image and the impact it can have on people and collectively on society.

ACKNOWLEDGMENTS

We are indebted to Dr. David Magnus, Dr. HFM Van der Loos, Ms. Kim Karetsky, and Connie Stockham for their invaluable input to this paper.

Footnotes

In a 1992 New York murder case, People v. Weinstein, Weinstein was accused of strangling his wife to death and throwing her body from a twelfth-floor apartment. Weinstein's functional PET scans and structural MRI images revealed an arachnoid cyst, and the evidence was admitted in court for the purpose of establishing an insanity defense. The ruling was made despite evidence that such pathology has no known link to criminal behavior. The PET scan depicted the juxtaposition of a black lesion (the cyst) on the red and green colored areas of ‘normal’ brain activity, and was considered so striking as to prove to the court that Weinstein's brain was not functioning within normal parameters. The prosecution in this case accepted a manslaughter plea.

Brain fingerprinting played a significant role in the case of Terry Harrington, for example, whose murder conviction was reversed and a new trial ordered after he spent 22 years in prison (State of Iowa v. Terry Harrington). The case dates back to 1977, when Harrington, who was 17 years old at the time, was convicted of murdering a retired police officer. When, in 2000, Harrington underwent brain fingerprinting, his brain did not emit the expected electroencephalographic patterns in response to critical details of the murder. The results were interpreted to suggest that he was not present at the murder site, a conclusion corroborated by the fact that his brain did emit the requisite patterns in response to details of the event used as his alibi in the case. When confronted with the brain fingerprinting evidence, the original prosecution witness recanted his testimony and admitted that he had lied during the original trial, falsely accusing Harrington to avoid being prosecuted himself.

In another fMRI experiment related to deception and more broadly to lying, Schacter et al. (1998) demonstrated the potential to discern false from truthful memory. In an even more recent study, Anderson et al. (2004) showed areas of neural activation in the dorsolateral pre-frontal cortex associated with the active suppression of memory.

Even in these early stages, some ire about who is conducting what kind of research and with what motivation has already surfaced, especially with respect to research that would seem only to yield financial rewards and not deliver either scholarly or medical benefit (Gardner 2003).

DISCLOSURES

Supported by The Greenwall Foundation, NIH/NINDS R01 #NS045831 and the Social Sciences and Humanities Research Council of Canada #756-2004-0434.

REFERENCES

- Ake v. Oklahoma. 1985. 470 U.S. 68.

- Adolphs R, Tranel D, Damasio H, Damasio A. Fear and the Human Amygdala. The Journal of Neuroscience. 1995;15(9):5879–5892. doi: 10.1523/JNEUROSCI.15-09-05879.1995.

- Anderson MC, Ochsner KN, Kuhls B, Cooper J, Robertson E, Gabrieli SW, Glover GH, Gabrieli JDE. Neural systems underlying the suppression of unwanted memories. Science. 2004;303(5655):232–235. doi: 10.1126/science.1089504.

- Angier N. Why we're so nice: We're wired to cooperate. New York Times. 2002;1.

- Beaulieu A. Images are not the (only) truth: Brain mapping, visual knowledge and iconoclasm. Science, Technology and Human Values. 2002;27:53–87.

- Blank RH. Brain policy: How the new neurosciences will change our lives and our politics. Georgetown University Press; Washington, DC: 1999.

- Camerer CF. Strategizing in the brain. Science. 2003;300(5626):1673–1675. doi: 10.1126/science.1086215.

- Canli T, Amin Z. Neuroimaging of emotion and personality: Scientific evidence and ethical considerations. Brain and Cognition. 2002;50(3):431–444. doi: 10.1016/s0278-2626(02)00517-1.

- Canli T, Sivers H, Whitfield SL, Gotlib IH, Gabrieli JDE. Amygdala response to happy faces as a function of extraversion. Science. 2002;296(5576):2191. doi: 10.1126/science.1068749.

- Changeux J-P. Neuronal Man. Garey Laurence, editor. Princeton University Press; Princeton, NJ: 1997.

- Clayton EW. Ethical, legal and social implications of genomic medicine. New England Journal of Medicine. 2003;349(6):562–569. doi: 10.1056/NEJMra012577.

- Committee to Review the Scientific Evidence on the Polygraph. The polygraph and lie detection. National Academy Press; Washington, DC: 2003.

- Crick F. The astonishing hypothesis: The scientific search for the soul. Simon & Schuster; London: 1995.

- Curran P. Soul search: Emotions, spirituality and transcendence: Scientist gains notoriety for work with nuns. The Montreal Gazette. Montreal: Oct 19; p. A14.

- Damasio AR. Descartes' error. Penguin Putnam Pubs; Netcong, NJ: 1994.

- D'Esposito M, Deouell LY, Gazzaley A. Alterations in the BOLD fMRI signal with ageing and disease: A challenge for neuroimaging. Nature Reviews Neuroscience. 2003;4:863–872. doi: 10.1038/nrn1246.

- Dumit J. Picturing personhood: Brain scan and biomedical identity. Princeton University Press; Princeton, NJ: 2004.

- Editorial. Scanning the social brain. Nature Neuroscience. 2003;6(12):1239. doi: 10.1038/nn1203-1239.

- Evans JW. Functional Magnetic Resonance Images and Lie Detection. http://www.law.uh.edu/healthlawperspectives/HealthPolicy/021231Functional.html. Access date: 2005 January 31.

- Farah M, Illes J, Cook-Deegan R, Gardner H, Kandel E, King P, Parens E, Sahakian B, Wolpe PR. Neurocognitive enhancement: What can we do? What ought we not do? Nature Reviews Neuroscience. 2004;5:421–425. doi: 10.1038/nrn1390.

- Farwell LA, Smith SS. Using brain MERMER testing to detect concealed knowledge despite efforts to conceal. Journal of Forensic Sciences. 2001;46(1):1–9.

- Foster KR, Wolpe PR, Caplan AL. Bioethics and the brain. IEEE Spectrum. 2003:34–39.

- Gardner H. There's a sucker in every prefrontal cortex (Opinion Editorial). New York Times. 2003 November 30:26.

- Gehring WJ, Willoughby AR. The medial frontal cortex and the rapid processing of monetary gains and losses. Science. 2002;295(5563):2279–2282. doi: 10.1126/science.1066893.

- Gehring WJ, Karpinski A, Hilton JL. Thinking about interracial interactions. Nature Neuroscience. 2003;6(12):1241–1239. doi: 10.1038/nn1203-1241.

- Global News Wire - Asia Africa Intelligence Wire. The resonance of the mind. 2003 November 20.

- Golby AJ, Gabrieli JDE, Chiao JY, Eberhardt JL. Differential responses in the fusiform region to same-race and other-race faces. Nature Neuroscience. 2001;4:845–850. doi: 10.1038/90565.

- Gore JC, Prost RW, Hendee WR. Functional MRI is fundamentally limited by an inadequate understanding of the origin of fMRI signals in tissue. Point/Counterpoint. Medical Physics. 2003;30(11):2859–2861. doi: 10.1118/1.1619135.

- Gostin LO. Ethical considerations of psychosurgery: The unhappy legacy of the pre-frontal lobotomy. Journal of Medical Ethics. 1980;6(3):149–156. doi: 10.1136/jme.6.3.149.

- Greely HT. Neuroethics? Health Law News. 2002 July:5. Health Law & Policy Institute, University of Houston Law Center.

- Greely HT. Prediction, Litigation, Privacy, and Property: Some possible Legal and Social implications of Advances in Neuroscience. In: Garland Brett, editor. Neuroscience and the Law: Brain, Mind, and the Scales of Justice. The Dana Press; New York, NY: 2004.

- Greene JD. From neural ‘is’ to moral ‘ought’: What are the moral implications of neuroscientific moral psychology? Nature Reviews Neuroscience. 2003;4:847–850. doi: 10.1038/nrn1224.

- Greene JD, Sommerville RB, Nystrom LE, et al. An fMRI investigation of emotional engagement in moral judgment. Science. 2001;293(5537):2105–2108. doi: 10.1126/science.1062872.

- Gur RC, Mozley LH, Mozley PD, et al. Sex differences in regional cerebral glucose metabolism during a resting state. Science. 1995;267(5197):528–531. doi: 10.1126/science.7824953.

- Hamann S, Canli T. Individual differences in emotion processing. Current Opinion in Neurobiology. 2004;14(2):233–238. doi: 10.1016/j.conb.2004.03.010.

- Hariri AR, Weinberger DR. Functional neuroimaging of genetic variation in serotonergic neurotransmission. Genes, Brain, Behavior. 2003;2(6):341–349. doi: 10.1046/j.1601-1848.2003.00048.x.

- Heilman KH. The Neurobiology of Emotional Experience. In: Salloway S, Malloy P, Cummings JL, editors. The Neuropsychiatry of Limbic and Subcortical Disorders. American Psychiatric Association; Washington, DC: 1997. pp. 133–142.

- Illes J, editor. Ethical challenges in advanced neuroimaging. Brain and Cognition. 2002;50(3). Academic Press; New York, NY.

- Illes J. A fish story: Brain maps, lie detection and personhood. Cerebrum, Special Issue on Neuroethics. The Dana Press; New York: 2005.

- Illes J, Kirschen MP, Gabrieli JDE. From neuroimaging to neuroethics. Nature Neuroscience. 2003;6(3):205. doi: 10.1038/nn0303-205.

- Karbowski K. Sixty years of clinical electroencephalography. European Neurology. 1990;30(3):170–175. doi: 10.1159/000117338.

- Kennedy D. Neuroethics: An uncertain future. Proceedings of the Society for Neuroscience Annual Meeting; New Orleans, Louisiana. 2003.

- Kierkegaard S. In: Papers and journals: A selection. Hannay A, editor. Penguin Books; New York: 1846. 1996.

- Kim EE. Targeted molecular imaging. Korean Journal of Radiology. 2003;4(4):201–210. doi: 10.3348/kjr.2003.4.4.201.

- Klingberg T, Hedehus M, Temple E, Salz T, Gabrieli JD, Moseley ME, Poldrack RA. Microstructure of temporo-parietal white matter as a basis for reading ability: Evidence from diffusion tensor magnetic resonance imaging. Neuron. 2000;25(2):257–259. doi: 10.1016/s0896-6273(00)80911-3.

- Koslow SH, Hyman SE. Human brain project: A program for the new millennium. Journal of Biology and Medicine. 2000;17:7–15.

- Kulynych J. Psychiatric neuroimaging evidence: A high tech crystal ball? Stanford Law Review. 1997;49:1249–1270.

- Langleben DD, Schroeder L, Maldjian JA, Gur RC, McDonald S, Ragland JD, O'Brien CP, Childress AR. Brain activity during simulated deception: An event-related functional magnetic resonance study. NeuroImage. 2002;15(3):727–732. doi: 10.1006/nimg.2001.1003.

- Lanteri-Laura G. Examen historique et critique de l'éthique en neuropsychiatrie, dans le domaine de la recherche sur le cerveau et les thérapies. (Historical and critical examination of neuropsychiatry in brain research and therapy.) In: Huber Gérard, editor. Cerveau et psychisme humains: quelle éthique? (The Human Brain and Psyche: Ethical Considerations.) John Libbey; Paris: 1996.

- LeDoux J. The emotional brain, fear, and the amygdala. Cellular and Molecular Neurobiology. 2003;23(4–5):727–738. doi: 10.1023/A:1025048802629.

- Lynch Z. Neurotechnology and society. Annals of the New York Academy of Sciences. 2004;1013:229–233. doi: 10.1196/annals.1305.016.

- Mariani SM. Neuroethics: How to leave the cave without going astray. Medscape General Medicine. 2003;5(4):33.

- Marshall JC, Fink GR. Cerebral localization then and now. Neuroimage. 2003;20:S2–S7. doi: 10.1016/j.neuroimage.2003.09.001.

- Mauron A. Is the genome the secular equivalent of the soul? Science. 2001;291(5505):831–832. doi: 10.1126/science.1058768.

- Mauron A. Renovating the house of being: Genomes, souls and selves. Annals of the New York Academy of Sciences. 2003;1001:240–252. doi: 10.1196/annals.1279.013.

- Mayr E. Toward a new philosophy of biology. Harvard University Press; Cambridge, MA: 1988.

- Merz JF, Magnus D, Cho MK, et al. Protecting subjects' interests in genetics research. American Journal of Human Genetics. 2002;70(4):965–971. doi: 10.1086/339767.

- Michie S, Weinman J, Miller J, et al. Predictive genetic testing: High risk expectations in the face of low risk information. Journal of Behavioral Medicine. 2002;25(1):33–50. doi: 10.1023/a:1013537701374.

- Miller G. The good, the bad, and the anterior cingulate. Science. 2002;295(5563):2193–2194. doi: 10.1126/science.295.5563.2193a.

- Mobbs D, Greicius MD, Abdel-Azim E, Menon V, Reiss AL. Humor modulates the mesolimbic reward centers. Neuron. 2003;40(5):1041–1048. doi: 10.1016/s0896-6273(03)00751-7.

- Moll J, de Oliveira-Souza R, Bramati I, Grafman J. Functional networks in emotional and nonmoral social judgments. Neuroimage. 2002;26:696–703. doi: 10.1006/nimg.2002.1118.

- Moll J, de Oliveira-Souza R, Eslinger PJ. Morals and the human brain: A working model. Neuroreport. 2003;14(3):299–305. doi: 10.1097/00001756-200303030-00001.

- Morris B. Anthropology of the self. The individual in cultural perspective. Pluto Press, CO; 1994.

- Neuroscience and the Law. Proceedings from “Neuroscience and the Law,” Royal Institution. London: 2002.

- Nelkin D, Tancredi L. Dangerous diagnostics: The social power of biological information. Basic Books; New York: 1989.

- People v. Jones. 1994. (620 N.Y.S.2d 656) N.Y. App. Div.

- People v. Weinstein. (591 NYS 2d 715); (Sup. Ct. 1992)

- Phelps EA, Cannistraci CJ, Cunningham WA. Intact performance on an indirect measure of race bias following amygdala damage. Neuropsychologia. 2003;41(2):203–208. doi: 10.1016/s0028-3932(02)00150-1.

- Racine E, Illes J. Is neuroethics the heir of the ethics of genomics? Canadian Bioethics Society; Calgary, Alberta: 2004.

- Racine E. Pourquoi et comment tenir compte des neuroscience en éthique? Esquisse d'une approche neurophilosophique émergentiste et interdisciplinaire. Translation: (Why and how to take neuroscience into account in ethics? Toward an emergentist and interdisciplinary neurophilosophical approach.) Laval Théologique & Philosophique. In press.

- Raine A, Buchsbaum MS, Stanley J, Lottenberg S, Abel L, Stoddard J. Selective reductions in pre-frontal glucose metabolism in murderers. Biological Psychiatry. 1994;36:365–373. doi: 10.1016/0006-3223(94)91211-4.

- Richeson JA, Baird AA, Gordon HL, et al. An fMRI investigation of the impact of interracial contact on executive function. Nature Neuroscience. 2003;6(12):1323–1327. doi: 10.1038/nn1156.

- Roskies A. Neuroethics for a new millenium. Neuron. 2002;35(1):21–23. doi: 10.1016/s0896-6273(02)00763-8.

- Rothenberg KH, Terry SF. Human genetics. Before it's too late: Addressing fear of genetic information. Science. 2002;297(5579):196–197. doi: 10.1126/science.1075221.

- Sanfey AG, Rilling JK, Aronson JA, et al. The neural basis of economic decision-making in the ultimatum game. Science. 2003;300(5626):1755–1758. doi: 10.1126/science.1082976.

- Savulescu J. Predictive genetic testing in children. Medical Journal of Australia. 2001;175(7):379–381. doi: 10.5694/j.1326-5377.2001.tb143625.x.

- Schacter DL, Buckner RL, Koutstaal W. Memory, consciousness and neuroimaging. Philosophical Transactions of the Royal Society of London B. 1998;353:1861–1978. doi: 10.1098/rstb.1998.0338.

- Schiff ND, Rodriguez-Moreno D, Kamal A, Kim KHS, Giacina JT, Plum F, Hirsch J. FMRI reveals large-scale network activation in minimally conscious patients. Neurology. 2005;64:514–523. doi: 10.1212/01.WNL.0000150883.10285.44.

- State of Iowa v. Terry Harrington. (284 N.W.2d 244; 1979 Iowa Sup.)

- Stevens MLT. Bioethics in America: Origins and Cultural Politics. The Johns Hopkins University Press; Baltimore, MD, USA: 2000. Quoting Pontecorvo G., “Prospects for Genetic Analysis of Man,” in Sonneborn T. M. The Control of Human Heredity and Evolution (Macmillan, New York, 1965, pp. 81–82).

- Taylor C. Sources of the self: The making of modern identity. Harvard University Press; Cambridge, MA: 1989.

- Thompson C. There's a sucker born in every medial prefrontal cortex. New York Times Magazine. 2003 October 26:54.

- Ward NS, Frackowiak RS. Towards a new mapping of brain cortex function. Cerebrovascular Disease. 2004;17(Suppl 3):35–38. doi: 10.1159/000075303.

- Weir RF, Lawrence SC, Fales E. Genes and human self-knowledge: Historical and philosophical reflections on modern genetics. University of Iowa Press; Iowa City, IA: 1994.

- Wickelgren I. Tapping the mind. Science. 2003;299(5606):496–499. doi: 10.1126/science.299.5606.496.

- Winslade WJ, Rockwell JW. Bioethics. Health Law News. 2002;1. Health Law & Policy Institute, University of Houston Law Center.

- Wolpe R. The neuroscience revolution. The Hastings Center Report. 2002 July-August.

- Zimmer C. How the mind reads other minds. Science. 2003;300(5622):1079–1080. doi: 10.1126/science.300.5622.1079.