Abstract

Background

Current cognitive neuroscience models predict a right-hemispheric dominance for face processing in humans. However, neuroimaging and electromagnetic data in the literature provide conflicting evidence of a right-sided brain asymmetry for decoding the structural properties of faces. The purpose of this study was to investigate whether this inconsistency might be due to gender differences in hemispheric asymmetry.

Results

In this study, event-related brain potentials (ERPs) were recorded in 40 healthy, strictly right-handed individuals (20 women and 20 men) while they observed infants' faces expressing a variety of emotions. Early face-sensitive P1 and N1 responses to neutral vs. affective expressions were measured over the occipital/temporal cortices, and the responses were analyzed according to viewer gender. Along with a strong right hemispheric dominance for men, the results showed a lack of asymmetry for face processing in the amplitude of the occipito-temporal N1 response in women to both neutral and affective faces.

Conclusion

Men showed an asymmetric functioning of visual cortex while decoding faces and expressions, whereas women showed a more bilateral functioning. These results indicate the importance of gender effects in the lateralization of the occipito-temporal response during the processing of face identity, structure, familiarity, or affective content.

Background

Using functional magnetic resonance imaging (fMRI), Kanwisher and coworkers [1] found an area in the fusiform gyrus that was significantly more active when subjects viewed faces than when they viewed assorted common objects. The authors concluded that this area, hereafter called the fusiform face area (FFA), might be specifically involved in the perception of faces, without ruling out that other structures might play a role in this process.

Indeed, Haxby et al. (2000) provided evidence that face perception involves a distributed and hierarchically organized network of the occipito-temporal regions. In this model, the core system consists of the extrastriate visual cortex (FFA), which mediates the analysis of face structure, while the superior temporal sulcus (STS) mediates the analysis of changeable aspects of the face, such as eye gaze, facial expression, and lip movements.

Interestingly, the Kanwisher et al. study [1] showed an activation of the FFA only in the right hemisphere in about half the subjects (both men and women), whereas the other subjects showed bilateral activation. These results raised the possibility of functional hemispheric asymmetry in the FFA. Studies addressing this possibility have provided conflicting evidence: several human [2-6] and animal studies [7] found stronger activity in the right hemisphere, while other studies failed to support the notion of a strict right-lateralization (e.g., [8], performed in 5 men and 7 women).

Closer examination of several studies offers more details, but no consensus, on hemispheric asymmetry in areas devoted to face processing. Yovel and Kanwisher [9] found significantly higher fMRI responses to faces than to objects in both the left and right mid-fusiform gyrus regions, although this effect was slightly greater in the right than the left FFA. In another fMRI study [10], a region that responded more strongly to faces than to objects was found within the right fusiform gyrus in 8 subjects (both women and men); however, in 6 of these subjects the same significant pattern was also found in the left fusiform gyrus. Recently, Pourtois and coworkers [11] performed an fMRI study on face identity processing on a group of 8 men and 6 women. Results revealed a reduced response in the lateral occipital and fusiform cortex with face repetition. Specifically, view-sensitive repetition effects were found in both the left and right fusiform cortices, while the left (but not right) fusiform cortex showed viewpoint-independent repetition effects. These findings were interpreted as a sign of left hemisphere dominance in terms of the ability to link visual facial appearance with specific identity knowledge. In line with this, a case has been reported of hyperfamiliarity for unknown faces after left lateral temporo-occipital damage in a female patient [12], suggesting a possible role of the left hemisphere in identity processing. Again, a recent fMRI study [13] carried out on 8 women and 7 men provided evidence of a significant activation of right fusiform and occipital gyrus (2260 voxels), left fusiform gyrus, left inferior, and middle temporal gyrus (3022 voxels) for a face familiarity effect during gender classification, thus providing a complex lateralization pattern for processing face structures and properties.

Event-related potential (ERP) and magnetoencephalography (MEG) recordings of brain activity have provided crucial information about the temporal unfolding of neural mechanisms involved in face processing (see a list of recent papers in Table 1). In particular, these recordings have identified a posterior-lateral negative peak at a latency of approximately 170 ms (referred to as "N170"). This peak has a larger amplitude in response to faces than to other control stimuli (such as houses, objects, trees, or words), and is sensitive to face inversion (upright vs. inverted). N170 is thought to reflect processes involved in the structural encoding of faces. In addition, several studies have found that affective information modulates brain response to human faces as early as 120–150 ms [14-17]. The combination of electromagnetic and functional neuroimaging data identified the possible generator of N1 in the ventral occipito-temporal cortex (FFA and superior temporal sulcus or STS) [16,18,19,53], suggesting that N1 might be the electromagnetic manifestation of a face-processing area activity. An analysis of the relevant literature shows that the topographic distribution of the face-specific N170 is not always right-sided in right-handed individuals. Based on a thorough review of methods and subject samples used in the relevant literature (see Table 1), we hypothesized that this topographic distribution might depend on marked inter-individual differences, possibly related to viewer gender.

Table 1.

Recent ERP and MEG papers reporting P1 and N170 topographic distributions. ERP components were recorded in response to faces and other visual objects over the left and right occipital/temporal areas.

| Papers (Authors and year) | # Ss | Gender: F | Gender: M | P1 and N1 distribution and effects | Right asymmetry for face processing: P1 | Right asymmetry for face processing: N1 |
| --- | --- | --- | --- | --- | --- | --- |
| [14] Batty & Taylor (2003) | 26 | 13 | 13 | P1 larger over the RH but not sensitive to emotions or face-specific. N1 emotion-specific but bilateral. | Yes (Not face-specific) | No |
| [43] Bentin et al. (1999) | 1 | - | 1 | Prosopagnosic patient with right temporal abnormality and not face-sensitive N170. | Yes | |
| [44] Caldara et al. (2004) | 12 | 6 | 6 | N1 larger over the RH to both Asian and Caucasian faces. | - | Yes (not race-specific) |
| [45] Campanella et al. (2000) | 12 | - | 12 | Face identity sensitive N170 larger at right posterior/temporal site. | - | Yes |
| [46] Esslen et al. (2004) | 17 | 10 | 7 | N170 to neutral faces activates the right fusiform gyrus (LORETA). | - | Yes |
| [47] George et al. (2005) | 13 | 7 | 6 | Mooney faces. P1 bilateral not modulated by face inversion. N1 larger on the right hemisphere to both inverted and upright faces. | No | Yes (to both inverted and upright faces) |
| [48] Gliga et al. (2005) | 10 | 7 | 3 | N1 larger over the RH to both bodies and faces. | - | Yes |
| [15] Halgren et al. (2000) | 10 | 2 | 8 | Overall, laterality greater on the right than the left in fusiform face-selective activity, but a high level of individual variability. | No | Yes |
| [20] Harris et al. (2005) | 2 | 2 | - | 2 female prosopagnosic patients. For NM M170 not face-sensitive. Face selectivity effect (faces vs. houses) for KL >LH (RH = 17.3 fT, LH = 26.9 fT). | - | No |
| [20] Harris et al. (2005) | 3 | - | 3 | 3 male prosopagnosic patients. For EB and KNL M170 not face-sensitive. Face selectivity effect for ML > RH. (RH = 57.6 fT, LH = 35.6 fT). | - | Yes |
| [20] Harris et al. (2005) | 17 | 9 | 8 | M170 larger to faces than houses and tended to be larger at LH (p < 0.08). | No | |
| [18] Henson et al. (2003) | 18 | 8 | 10 | Face sensitive N170 was larger at superior temporal area. | - | Yes |
| [49] Herrmann et al. (2005) | 39 | 19 | 20 | Bilateral P1 and N1 larger to faces than buildings. | No | No |
| [50] Holmes et al. (2005) | 14 | 5 | 9 | Not specifically mentioned. From inspection of Fig. 2. P1 much larger on the RH to both faces and houses. N1 larger on the RH to faces only (unfiltered stimuli). | Yes (Not face-specific) | Yes |
| [51] Itier & Taylor (2004) | 36 | 18 | 18 | Face specific P1 was bilateral. N170 was larger over the RH at parietal/occipital sites. | No | Yes |
| [52] Itier & Taylor (2004) | 16 | 7 | 9 | N170 to upright faces is bilateral | - | No |
| [53] Itier & Taylor (2004) | 16 | 7 | 9 | Face-specific P1 is larger over the RH. N1 was bilateral at occipital sites. It was larger at right parietal sites to objects, inverted faces, and upright faces. | Yes | Yes/no (to objects, inverted and upright faces.) |
| [21] Jemel et al. (2005) | 15 | 10 | 5 | No hemispheric asymmetry for P1 or N1 to faces. | No | No |
| [54] Kovacs et al. (2005) | 12 | 4 | 8 | Face (vs. hand)-specific N170 was larger over the RH. | - | Yes |
| [55] Latinus et al. (2005) | 26 | 13 | 13 | Mooney faces. Bilateral or left-sided P1 not sensitive to face-inversion. N1 larger on the RH to upright faces. | No | Yes |
| [19] Liu et al. (2000) | 17 | * | * | M170 larger to faces than animal and human forms at bilateral occipital/temporal sensors. | - | No |
| [22] Meeren et al. (2005) | 12 | 9 | 3 | Face-body compound images: lead main effect (p = 0.04) for P1 amplitude with O1>O2>Oz, but post hoc tests failed to reveal significant differences. ERPs to isolated faces: P1 and N1 to angry and fearful faces were not right-sided. | No | No |
| [16] Pizzagalli et al. (2002) | 18 | 7 | 11 | N1 larger over the right fusiform gyrus and affected by face likeness. | - | Yes |
| [17] Pourtois et al. (2005) | 13 | 9 | 4 | Unfiltered faces: P1 affected by emotional content (fear vs. neutral) in both hemispheres. N1 strongly right-lateralized to upright vs. inverted faces. LAURA source estimation for P1 and N1 topography in the left extra-striate visual cortex | No | Yes (but LH generator for source estimation) |
| [23] Righart & Gelder (2005) | 12 | 10 | 2 | N170 amplitudes were more negative for faces in fearful contexts compared to faces in neutral contexts, but only significantly for electrodes in the left hemisphere. | No | No |
| [56] Rossion et al. (1999) | 14 | 5 | 9 | N1 larger at posterior temporal sites to inverted faces. | No | Yes (not upright specific) |
| [6] Rossion et al. (2003) | 16 | 6 | 10 | N170 for faces compared to words in the right hemisphere only. | No | Yes |
| [57] Rousselet et al. (2004) | 24 | 12 | 12 | P1 larger on the RH for both objects, animal and human faces. N1 much larger on the RH for face than objects, but asymmetry found for objects as well. | Yes (Not face-specific) | Yes/No (Not face-specific) |
| [24] Valkonen-Korhonen et al. (2005) | 19 | 15 | 4 | Control group: N1 Larger at T5/T6 in an emotion detection task (happy upright faces). | - | No |
| [58] Yovel et al. (2003) | 12 | 7 | 5 | N1 to symmetrical and left or right hemi-faces was larger at right temporal site. | - | Yes |

It is of great interest to note that face-specific N170 responses were found to be bilateral or even left-sided in studies involving a sample in which women were the majority [20-24]. Equally interesting, in a recent paper on prosopagnosia in which both male and female patients were considered [20], 2 out of the 3 male patients showed an M170 response which was not sensitive to faces (as opposed to houses) while the third patient showed a right-sided sensitivity to faces. As for the two female patients, one of them showed a lack of sensitivity to faces at M170 level, while the second one showed a left-sided sensitivity.
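As a rough arithmetic illustration of this observation, the following Python sketch pools the sample compositions listed in Table 1 and compares the share of women in studies coded as right-sided for N1/M170 with the share in studies coded as not right-sided. The coding and the exclusions are assumptions made here for illustration only: patient samples, the study with unreported gender, rows with a mixed "Yes/No" entry, and the row with an empty N1 cell are left out. The tally is purely descriptive and carries no inferential weight.

```python
# (study, women, men, right-sided N1/M170 as coded in the last column of Table 1)
ROWS = [
    ("Batty & Taylor 2003", 13, 13, False),
    ("Caldara et al. 2004", 6, 6, True),
    ("Campanella et al. 2000", 0, 12, True),
    ("Esslen et al. 2004", 10, 7, True),
    ("George et al. 2005", 7, 6, True),
    ("Gliga et al. 2005", 7, 3, True),
    ("Halgren et al. 2000", 2, 8, True),
    ("Henson et al. 2003", 8, 10, True),
    ("Herrmann et al. 2005", 19, 20, False),
    ("Holmes et al. 2005", 5, 9, True),
    ("Itier & Taylor 2004 [51]", 18, 18, True),
    ("Itier & Taylor 2004 [52]", 7, 9, False),
    ("Jemel et al. 2005", 10, 5, False),
    ("Kovacs et al. 2005", 4, 8, True),
    ("Latinus et al. 2005", 13, 13, True),
    ("Meeren et al. 2005", 9, 3, False),
    ("Pizzagalli et al. 2002", 7, 11, True),
    ("Pourtois et al. 2005", 9, 4, True),
    ("Righart & de Gelder 2005", 10, 2, False),
    ("Rossion et al. 1999", 5, 9, True),
    ("Rossion et al. 2003", 6, 10, True),
    ("Valkonen-Korhonen et al. 2005", 15, 4, False),
    ("Yovel et al. 2003", 7, 5, True),
]


def pooled_counts(rows, right_sided):
    """Total women and total participants across studies with the given coding."""
    selected = [r for r in rows if r[3] == right_sided]
    women = sum(r[1] for r in selected)
    total = sum(r[1] + r[2] for r in selected)
    return women, total


women_right, total_right = pooled_counts(ROWS, True)
women_other, total_other = pooled_counts(ROWS, False)
share_right = women_right / total_right
share_other = women_other / total_other
print(f"Right-sided N1: {women_right}/{total_right} women ({share_right:.1%})")
print(f"Bilateral or left-sided N1: {women_other}/{total_other} women ({share_other:.1%})")
```

Under these coding assumptions, women make up a smaller share of the pooled samples in the right-sided studies than in the remaining studies, which is the pattern the paragraph above describes.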

Many face processing studies using MEG, ERP, neuroimaging, or neuropsychological data do not take subject gender into account as a variable that might affect asymmetry in brain activation. The specific goal of this study was to investigate the timing and topography of brain activity in men and women during processing of neutral and affective faces in order to detect whether there are gender differences in lateralization. To address this question, early face-sensitive P1 and N1 responses over the occipital/temporal cortices to neutral and affective expressions were measured in strictly right-handed men and women.

Results

Behavioral data

A repeated-measures ANOVA performed on mean response times (RTs) showed no significant effect of gender on response speed (F[1,38] = 0.617; p = 0.44; women = 658 ms, men = 672 ms). Men and women did not appear to differ in accuracy either, although errors were too few to be analyzed statistically. The emotional valence of the faces affected RTs (F[1,38] = 191; p < 0.000001), which were faster to negative (distressed) expressions (613 ms) than to neutral expressions (717 ms) for all viewers.

Latency

Overall, P1 was earlier in response to distressed (111 ms, SE = 1.26) vs. neutral faces (114 ms, SE = 1.33), as shown by the significant "emotion" factor (F[1,38] = 8.65, p < 0.005). The analysis of P1 latency values also showed a strong "gender" effect (F[1,38] = 7.56; p < 0.009) with earlier P1 responses in women (111 ms, SE = 1.11) than men (115 ms, SE = 1.11), as shown in Fig. 1.

Figure 1.

Mean latency (in ms) of the P1 component recorded at the lateral occipital area (independent of hemispheric site) and analyzed according to subject gender and type of facial expression.

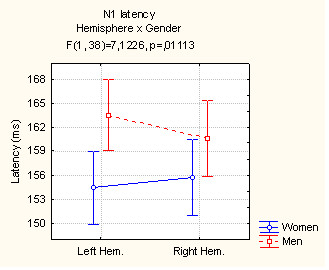

The ANOVA performed on N1 latency values showed that responses to both neutral and distressed faces were significantly faster in women (155.1 ms, SE = 1) than in men (162.1 ms, SE = 1), as shown by the significant "gender" factor (F[1,38] = 24.40; p < 0.000001). Furthermore, the "hemisphere X gender" interaction (F[1,38] = 7.12; p < 0.01) indicated a strong hemispheric asymmetry in men but not in women; men responded earlier in the right hemisphere than in the left (see Fig. 2), as confirmed by Tukey post-hoc comparisons.

Figure 2.

Mean latency (in ms) of the N1 component recorded at the left and right lateral occipital areas and analyzed according to subject gender.

Amplitude

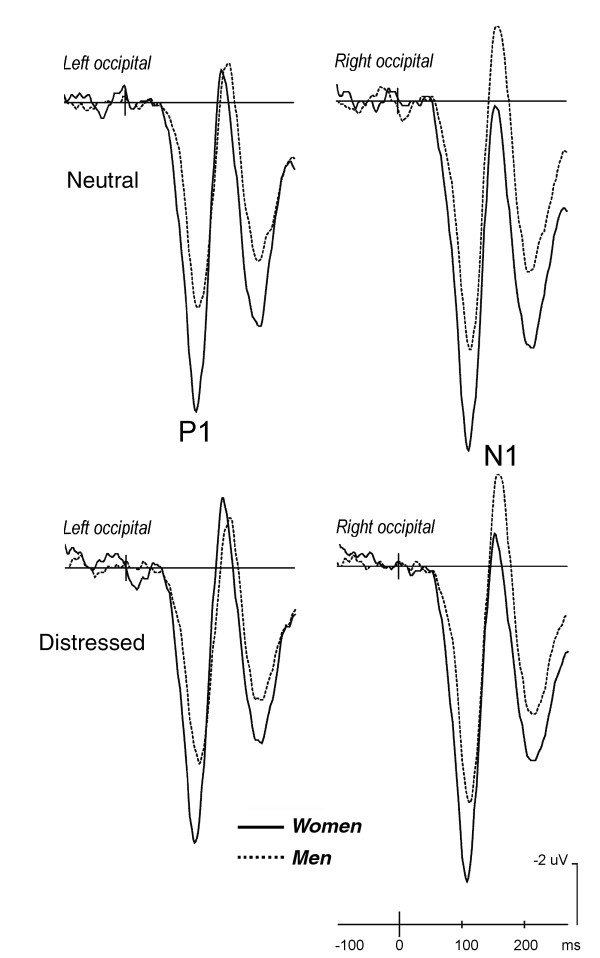

The P110 response was much larger in amplitude in women (10.9 μV, SE = 0.79) than in men (7.9 μV, SE = 0.79), as confirmed by the "gender" factor (F[1,38] = 7.11; p < 0.01), regardless of facial expression. The P1 response reached its maximum amplitude over the right occipital cortex in both genders (F[1,38] = 9.72; p < 0.0035) and was not sensitive to the affective content of the images. These effects are clearly visible in the ERP waveforms displayed in Fig. 3.

Figure 3.

Grand-average ERPs recorded at left and right occipital sites in response to neutral and affective faces according to subject gender (women = solid line, men = dashed line).

The emotional content of facial expressions significantly affected N1 amplitudes, as shown by the significant "emotion" factor (F[1,38] = 6.91; p < 0.015), indicating larger N1 responses to distressed faces (-3.22 μV, SE = 1.1) than to neutral faces (-2.67 μV, SE = 0.84).

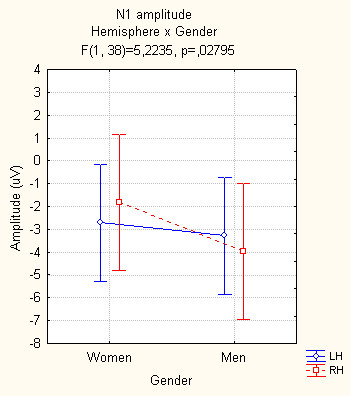

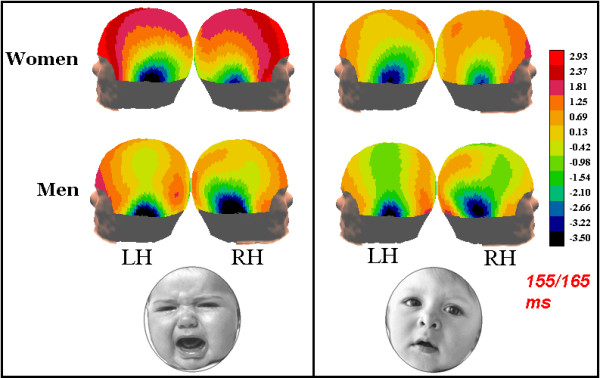

The N160 response was lateralized differently in men and women. Overall (and irrespective of facial expression), women exhibited an N1 response of comparable amplitude over the two visual areas (with a tendency to be larger over the LH), whereas N1 was significantly lateralized over the right hemisphere in men (see Fig. 4), as demonstrated by the significant "gender X hemisphere" interaction (F[1,38] = 5.22; p < 0.03). This suggests a functional characterization of the hemispheric lateralization in men, which would be more related to the analysis of structural properties of faces and expressions than to their affective content.

Figure 4.

Mean amplitude (in μV) of the N1 component recorded at left and right lateral occipital areas and analyzed according to subject gender.

Discussion

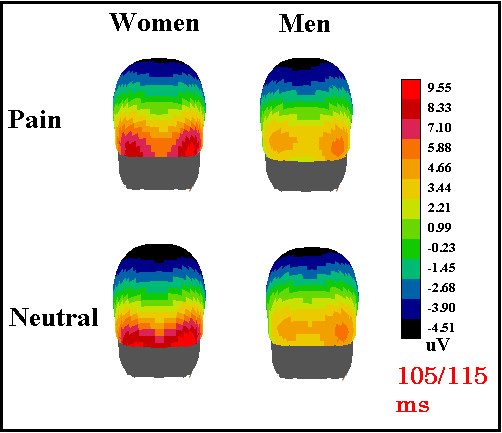

The P1 response was larger and earlier in women than in men, probably suggesting a female preference for the visual signal (infants' faces). This hypothesis is supported by a recent fMRI study showing a stronger activation of the fusiform gyrus in women (compared to men) in response to children's faces [25]. In our study, both P1 and N1 were affected by the emotional content of faces, being earlier (P110) and larger (N160) in response to distressed faces as opposed to neutral faces. These data fit with the available literature which supports the notion of early effects of emotional [14-17] and attentional factors [26-28] in the first stages of visual cortical processing. Overall, the P1 component was larger over the right occipital area in all individuals, and to all stimuli, as clearly visible from the topographic maps in Fig. 5.

Figure 5.

Back view of the scalp distribution of surface potentials recorded in the latency range of P110 according to subject gender and type of facial expression.

This effect might be due to either sensory or cognitive factors. Since all stimuli in this experiment were faces, the asymmetry cannot be unambiguously ascribed to face processing per se. Indeed, the face recognition literature does not consistently report a right lateralization of the P1 response; rather, a bilateral distribution is often reported (when P1 is considered; see Table 1). Furthermore, in studies involving visual-spatial or selective attention tasks, the P1 component is often described as larger at the right than the left lateral occipital sites both for space orienting (e.g., [29]) and processing of global configurations (e.g., [30]). In addition, P1 is always right-lateralized in response to low spatial frequency patterns even in passive viewing conditions [31,32]. For these reasons, we cannot interpret the right lateralization of P1 as an index of hemispheric dominance for face processing.

On the other hand, the face-specific N160 component was clearly lateralized differently in the men and women in our study. Indeed, a strong gender effect in the hemispheric lateralization of N1 component was observed, both in the latency and amplitude of cerebral response. This hemispheric asymmetry in men was not restricted to the processing of affective faces, and was significant in response to both neutral and distressed faces (see topographic maps in Fig. 6, displaying N1 scalp voltage distribution). Thus, a right hemispheric dominance is suggested for face processing in men but not in women. This may explain the many inconsistencies present in the relevant ERP and neuroimaging literature, which sometimes predicts a bilateral effect and other times a strong right-sided activity in regions devoted to face processing. These conclusions often rely on a mixed gender population, in which men and women are not necessarily equally represented (see Table 1).

Figure 6.

Lateral views of the scalp distribution of surface potentials recorded in the latency range of N160 according to subject gender and type of facial expression.

Our results are also in line with many studies that show gender differences in the degree of lateralization of cognitive and affective processes. Considerable data support greater hemispheric lateralization in men than women for linguistic tasks [33] and for spatial tasks [34]. Gender differences have also been found in the lateralization of visual-spatial processes such as mental rotation [35] and object construction tasks [36], in which males are typically right hemisphere (RH) dominant and females bilaterally distributed. More relevant to the present experiment are the data provided by Bourne [37], who examined the lateralization of processing positive facial emotion in a group of 276 right-handed individuals (138 males, 138 females). Subjects were asked to observe a series of chimeric faces formed with one half showing a neutral expression and the other half showing a positive expression in the left or right visual field, and to decide which face they thought looked happier. The results showed that males were more strongly lateralized than women in the perception of facial expressions, showing a stronger perceptual asymmetry in favour of the left visual field. There are also a number of studies that have found different degrees of lateralization in the cerebral response of men and women to emotional stimuli [38-41]: men tend to demonstrate an asymmetric functioning, and women a bilateral functioning [42].

Conclusion

Our study found a lesser degree of lateralization of brain functions related to face and expression processing in women than men. Furthermore, these results emphasize the importance of considering gender as a factor in the study of brain lateralization during face processing. In this light, our data may also provide an explanation of the inconsistencies in the available literature concerning the asymmetric activity of left and right occipito-temporal cortices devoted to face perception during processing of face identity, structure, familiarity or affective content.

Methods

Participants

Forty healthy individuals (20 women and 20 men) with normal or corrected-to-normal vision volunteered for this study. All participants were strictly right-handed as assessed by the Edinburgh Inventory [43] and had a strong right-eye dominance (as attested by practical tests, such as looking inside a bottle or alternately closing each eye to evaluate the extent of parallax). They were of similar age (average = 33.7 years) and socio-cultural status. Experiments were conducted with the understanding and the written consent of each participant and in accordance with ethical standards (Helsinki, 1964). The study was approved by the CNR Ethical Committee.

Materials and procedures

Participants sat about 120 cm from a computer monitor in an acoustically and electrically shielded cabin. They were instructed to focus on a small cross located in the centre of the screen and to avoid any body or eye movements. Stimuli were randomly presented in the centre of the screen for about 900 ms with an ISI of 1300–1900 ms. The stimulus set consisted of 160 high-resolution black and white photos of infants expressing neutral or affective (distressed) emotional states. The electroencephalogram (EEG) was continuously recorded and synchronized with the onset of picture presentation. The task consisted of deciding on the emotional content of the picture. Responses were to be made as accurately and quickly as possible by pressing a response key with the index finger of the right or left hand (to signal distress or well-being). The hand and experimental run orders were counterbalanced across subjects.

The EEG was continuously recorded from 28 scalp electrodes mounted on an elastic cap. The electrodes were located at frontal (Fp1, Fp2, FZ, F3, F4, F7, F8), central (CZ, C3, C4), temporal (T3, T4), posterior-temporal (T5, T6), parietal (PZ, P3, P4), and occipital scalp sites (OZ, O1, O2) of the International 10–20 System. Additional electrodes were placed halfway between anterior-temporal and central sites (FTC1, FTC2), central and parietal sites (CP1, CP2), anterior-temporal and parietal sites (TCP1, TCP2), and posterior-temporal and occipital sites (OL, OR). Vertical eye movements were recorded using two electrodes placed below and above the right eye, while horizontal movements were recorded from electrodes placed at the outer canthi of the eyes. Linked ears served as the reference lead. The EEG and the EOG were amplified with a half-amplitude band pass of 0.01–70 Hz. Electrode impedance was kept below 5 kΩ. Continuous EEG and EOG were digitized at a rate of 512 samples per second.

Computerized rejection of artefacts was performed before averaging to discard epochs in which eye movements, blinks, excessive muscle potentials, or amplifier blocking occurred. The artefact rejection criterion was a peak-to-peak amplitude exceeding ± 50 μV, and the rejection rate was about 5%. ERPs were averaged offline from 100 ms before until 1000 ms after stimulus onset. ERP trials associated with an incorrect behavioural response were excluded from further analysis. For each subject, distinct ERP averages were obtained according to the infant's facial expression. ERP components were identified and measured with reference to the mean baseline voltage over the interval from -100 ms to 0 ms. P1 and N1 peak amplitude and latency values were measured at lateral occipital sites (OL, OR), where both components reached their maximum amplitude, in the 90–140 ms and 145–175 ms time windows, respectively. The McCarthy-Wood correction, sometimes used to normalize ERP amplitudes, was not applied to our data, in line with recent findings in the literature [60].
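The averaging and peak-measurement steps described above can be sketched in a few lines of Python. This is a minimal single-channel illustration under stated assumptions, not the software used in the study: the function names, the synthetic waveform (Gaussian bumps at 112 ms and 160 ms), and the reading of the rejection criterion as a 50 μV peak-to-peak range are all hypothetical. Only the 512 Hz sampling rate, the -100 to 1000 ms epoch, the -100 to 0 ms baseline, and the two measurement windows come from the text.

```python
import math

SAMPLING_RATE_HZ = 512   # digitization rate reported in the Methods
EPOCH_START_MS = -100    # epochs start 100 ms before stimulus onset
EPOCH_END_MS = 1000
REJECT_RANGE_UV = 50.0   # assumption: criterion read as a 50 uV peak-to-peak range


def ms_to_index(t_ms):
    """Sample index (within the epoch) closest to a latency given in ms."""
    return round((t_ms - EPOCH_START_MS) * SAMPLING_RATE_HZ / 1000)


def index_to_ms(i):
    """Latency in ms (relative to stimulus onset) of sample index i."""
    return EPOCH_START_MS + i * 1000 / SAMPLING_RATE_HZ


def is_artefact(epoch):
    """Flag an epoch whose peak-to-peak range exceeds the criterion."""
    return max(epoch) - min(epoch) > REJECT_RANGE_UV


def baseline_correct(epoch):
    """Subtract the mean voltage of the -100..0 ms pre-stimulus interval."""
    n_base = ms_to_index(0)
    baseline = sum(epoch[:n_base]) / n_base
    return [v - baseline for v in epoch]


def average_erp(epochs):
    """Average the baseline-corrected epochs that pass artefact rejection."""
    kept = [baseline_correct(e) for e in epochs if not is_artefact(e)]
    return [sum(samples) / len(kept) for samples in zip(*kept)]


def peak(erp, start_ms, end_ms, positive):
    """Return (amplitude, latency in ms) of the extreme value in a window."""
    window = range(ms_to_index(start_ms), ms_to_index(end_ms) + 1)
    pick = max if positive else min
    i = pick(window, key=lambda k: erp[k])
    return erp[i], index_to_ms(i)


def synthetic_epoch(offset_uv=0.0, blink=False):
    """Hypothetical epoch: P1-like and N1-like bumps plus an optional blink."""
    epoch = []
    for i in range(ms_to_index(EPOCH_END_MS) + 1):
        t = index_to_ms(i)
        p1 = 8.0 * math.exp(-((t - 112) / 12) ** 2 / 2)
        n1 = -4.0 * math.exp(-((t - 160) / 10) ** 2 / 2)
        artefact = 120.0 if blink and 300 <= t <= 500 else 0.0
        epoch.append(offset_uv + p1 + n1 + artefact)
    return epoch


# Two clean epochs with different DC offsets and one epoch containing a blink
epochs = [synthetic_epoch(5.0), synthetic_epoch(-3.0), synthetic_epoch(0.0, blink=True)]
erp = average_erp(epochs)
p1_amp, p1_lat = peak(erp, 90, 140, positive=True)
n1_amp, n1_lat = peak(erp, 145, 175, positive=False)
print(f"P1: {p1_amp:.2f} uV at {p1_lat:.1f} ms; N1: {n1_amp:.2f} uV at {n1_lat:.1f} ms")
```

In this sketch the blink epoch is discarded, the DC offsets are removed by the baseline correction, and the peak search recovers latencies close to the simulated ones, limited by the roughly 2 ms sample spacing at 512 Hz.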

ERP amplitude and latency data were analyzed by means of 3- and 4-way repeated-measures ANOVAs; the P1 and N1 components were analyzed separately. For P1, there was one between-group factor, "gender" (women and men), and two within-group factors, "emotion" (neutral, distress) and "cerebral hemisphere" (left and right). For N1 there was the extra within-group factor "electrode site" (lateral/occipital, posterior/temporal). Behavioural data were analyzed by means of a 2-way repeated-measures ANOVA performed on mean RTs according to "gender" of viewers and emotional valence of stimuli ("emotion" factor).
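To make the structure of a "gender X hemisphere" test concrete, the sketch below uses a standard equivalence: when the within-group factor has two levels and the remaining within-group factors are averaged out per subject, the interaction F of a two-group mixed design equals the squared pooled-variance t statistic comparing the groups on right-minus-left difference scores, with 1 and N - 2 degrees of freedom (1 and 38 for 20 women and 20 men). This is an illustration of the design only, not the authors' analysis code; the function name and the example difference scores are hypothetical.

```python
import math
import statistics


def interaction_f(diffs_group_a, diffs_group_b):
    """F and degrees of freedom for a group x hemisphere interaction.

    Each argument holds one right-minus-left difference score per subject.
    """
    na, nb = len(diffs_group_a), len(diffs_group_b)
    mean_a = statistics.fmean(diffs_group_a)
    mean_b = statistics.fmean(diffs_group_b)
    # Pooled within-group variance of the difference scores
    pooled = ((na - 1) * statistics.variance(diffs_group_a)
              + (nb - 1) * statistics.variance(diffs_group_b)) / (na + nb - 2)
    t = (mean_a - mean_b) / math.sqrt(pooled * (1 / na + 1 / nb))
    return t * t, (1, na + nb - 2)


# Hypothetical difference scores (uV), three subjects per group, for illustration only
f_value, df = interaction_f([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
print(f"F[{df[0]},{df[1]}] = {f_value:.2f}")
```

With 20 difference scores per group the same function returns degrees of freedom of (1, 38), matching the F[1,38] values reported in the Results.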

Authors' contributions

AMP conceived of the study, coordinated data acquisition and analysis, interpreted the data and drafted the manuscript. SM, VB and MDZ (funded by Fondazione San Raffaele del Monte Tabor) participated in the design of the study, carried out data collection and performed statistical analyses. AZ participated in the study design and coordination and helped to draft the manuscript. All authors read and approved the final manuscript.

Acknowledgements

We thank Roberta Adorni for her kind support. The study was supported by MIUR 2003119330_003 and CNR grants to AMP and AZ which were used for data collection and analysis and writing of the manuscript.

Contributor Information

Alice M Proverbio, Email: mado.proverbio@unimib.it.

Valentina Brignone, Email: vale.bri@libero.it.

Silvia Matarazzo, Email: silv_anto@libero.it.

Marzia Del Zotto, Email: marzia.delzotto@unimib.it.

Alberto Zani, Email: alberto.zani@ibfm.cnr.it.

References

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–11. doi: 10.1523/JNEUROSCI.17-11-04302.1997. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hsiao FJ, Hsieh JC, Lin YY, Chang Y. The effects of face spatial frequencies on cortical processing revealed by magnetoencephalography. Neuroscience Letters. 2005;380:54–9. doi: 10.1016/j.neulet.2005.01.016. [DOI] [PubMed] [Google Scholar]

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends in Cogn Scie. 2000;4:223–233. doi: 10.1016/S1364-6613(00)01482-0. [DOI] [PubMed] [Google Scholar]

- Pegna AJ, Khateb A, Michel CM, Landis T. Visual recognition of faces, objects, and words using degraded stimuli: where and when it occurs. Hum Brain Map. 2004;22:300–11. doi: 10.1002/hbm.20039. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Puce A, Allison T, Asgari M, Gore JC, McCarthy G. Differential sensitivity of human visual cortex to faces, letterstrings, and textures: A functional magnetic resonance imaging study. J Neurosci. 1996;16:5205–15. doi: 10.1523/JNEUROSCI.16-16-05205.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rossion B, Joyce CA, Cottrell GW, Tarr MJ. Early lateralization and orientation tuning for face, word, and object processing in the visual cortex. Neuroimage. 2003;20:1609–24. doi: 10.1016/j.neuroimage.2003.07.010. [DOI] [PubMed] [Google Scholar]

- Pinsk MA, DeSimone K, Moore T, Gross CG, Kastner S. Representations of faces and body parts in macaque temporal cortex: a functional MRI study. PNAS. 2005;102:6996–7001. doi: 10.1073/pnas.0502605102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Loffler G, Yourganov G, Wilkinson F, Wilson HR. fMRI evidence for the neural representation of faces. Nat Neurosci. 2005;8:1386–91. doi: 10.1038/nn1538. [DOI] [PubMed] [Google Scholar]

- Yovel G, Kanwisher N. Face Perception. Domain Specific, Not Process Specific. Neuron. 2004;44:889–898. doi: 10.1016/j.neuron.2004.11.018. [DOI] [PubMed] [Google Scholar]

- Wojciulik E, Kanwisher N, Driver J. Covert visual attention modulates face-specific activity in the human fusiform gyrus: fMRI study. J Neurophysiol. 1998;79:1574–8. doi: 10.1152/jn.1998.79.3.1574. [DOI] [PubMed] [Google Scholar]

- Pourtois G, Schwartz S, Seghier ML, Lazeyras F, Vuilleumier P. Portraits or people? Distinct representations of face identity in the human visual cortex. J Cogn Neurosci. 2005;17:1043–57. doi: 10.1162/0898929054475181. [DOI] [PubMed] [Google Scholar]

- Vuilleumier P, Mohr C, Valenza N, Wetzel C, Landis T. Hyperfamiliarity for unknown faces after left lateral temporo-occipital venous infarction: a double dissociation with prosopagnosia. Brain. 2003;126:889–907. doi: 10.1093/brain/awg086. [DOI] [PubMed] [Google Scholar]

- Elfgren C, van Westen D, Passant U, Larsson EM, Mannfolk P, Fransson P. fMRI activity in the medial temporal lobe during famous face processing. Neuroimage. 2006;30:609–16. doi: 10.1016/j.neuroimage.2005.09.060. [DOI] [PubMed] [Google Scholar]

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Cogn Brain Res. 2003;17:613–20. doi: 10.1016/S0926-6410(03)00174-5. [DOI] [PubMed] [Google Scholar]

- Halgren E, Raij T, Marinkovic K, Jousmaki V, Hari R. Cognitive response profile of the human fusiform face area as determined by MEG. Cereb Cortex. 2000;10:69–81. doi: 10.1093/cercor/10.1.69. [DOI] [PubMed] [Google Scholar]

- Pizzagalli DA, Lehmann D, Hendrick AM, Regard M, Pascual-Marqui RD, Davidson RJ. Affective judgments of faces modulate early activity (approximately 160 ms) within the fusiform gyri. Neuroimage. 2002;16:663–77. doi: 10.1006/nimg.2002.1126. [DOI] [PubMed] [Google Scholar]

- Pourtois G, Dan ES, Grandjean D, Sander D, Vuilleumier P. Enhanced extrastriate visual response to bandpass spatial frequency filtered fearful faces: time course and topographic evoked-potentials mapping. Hum Brain Mapp. 2005;26:65–79. doi: 10.1002/hbm.20130. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Henson RN, Goshen-Gottstein Y, Ganel T, Otten LJ, Quayle A, Rugg MD. Electrophysiological and Haemodynamic Correlates of Face Perception, Recognition and Priming. Cereb Cortex. 2003;7:793–805. doi: 10.1093/cercor/13.7.793. [DOI] [PubMed] [Google Scholar]

- Liu J, Higuchi M, Marantz A, Kanwisher N. The selectivity of the occipitotemporal M170 for faces. NeuroRep. 2000;11:337–341. doi: 10.1097/00001756-200002070-00023. [DOI] [PubMed] [Google Scholar]

- Harris AM, Duchaine BC, Nakayama K. Normal and abnormal face selectivity of the M170 response in developmental prosopagnosics. Neuropsychologia. 2005;43:2125–36. doi: 10.1016/j.neuropsychologia.2005.03.017. [DOI] [PubMed] [Google Scholar]

- Jemel B, Pisani M, Rousselle L, Crommelinck M, Bruyer R. Exploring the functional architecture of person recognition system with event-related potentials in a within- and cross-domain self-priming of faces. Neuropsychologia. 2005;43:2024–40. doi: 10.1016/j.neuropsychologia.2005.03.016. [DOI] [PubMed] [Google Scholar]

- Meeren HKM, van Heijnsbergen CCRJ, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. PNAS. 2005;102:16518–23. doi: 10.1073/pnas.0507650102.

- Righart R, de Gelder B. Context Influences Early Perceptual Analysis of Faces – An Electrophysiological Study. Cereb Cortex. 2005. doi: 10.1093/cercor/bhj066.

- Valkonen-Korhonen M, Tarkka IM, Paakkonen A, Kremlacek J, Lehtonen J, Partanen J, Karhu J. Electrical brain responses evoked by human faces in acute psychosis. Cogn Brain Res. 2005;23:277–86. doi: 10.1016/j.cogbrainres.2004.10.019.

- Platek SM, Keenan JP, Mohamed FB. Sex differences in the neural correlates of child facial resemblance: an event-related fMRI study. Neuroimage. 2005;25:1336–44. doi: 10.1016/j.neuroimage.2004.12.037.

- Zani A, Proverbio AM. ERP signs of early selective attention effects to check size. EEG Clin Neurophysiol. 1995;95:277–292. doi: 10.1016/0013-4694(95)00078-D.

- Zani A, Proverbio AM. Attention modulation of short latency ERPs by selective attention to conjunction of spatial frequency and location. J Psychophysiol. 1997;11:21–32.

- Zani A, Proverbio AM. The timing of attentional modulation of visual processing as indexed by ERPs. In: Itti L, Rees G, Tsotsos J, editors. Encyclopedic Handbook of Neurobiology of Attention. San Diego: Elsevier; 2005. pp. 514–519.

- Fu S, Greenwood PM, Parasuraman R. Brain mechanisms of involuntary visuospatial attention: an event-related potential study. Hum Brain Mapp. 2005;25:378–90. doi: 10.1002/hbm.20108.

- Proverbio AM, Zani A. Electrophysiological indexes of illusory contours perception in humans. Neuropsychologia. 2002;40:479–91. doi: 10.1016/S0028-3932(01)00135-X.

- Proverbio AM, Zani A, Avella C. Differential activation of multiple current sources of foveal VEPs as a function of spatial frequency. Brain Topogr. 1996;9:59–69. doi: 10.1007/BF01191643.

- Kenemans JL, Baas JM, Mangun GR, Lijffijt M, Verbaten MN. On the processing of spatial frequencies as revealed by evoked-potential source modeling. Clin Neurophysiol. 2000;111:1113–23. doi: 10.1016/S1388-2457(00)00270-4.

- Shaywitz BA, Shaywitz SE, Pugh KR, Constable RT, Skudlarski P, Fulbright RK, Bronen RA, Fletcher JM, Shankweiler DP, Katz L, et al. Sex differences in the functional organization of the brain for language. Nature. 1995;373:607–9. doi: 10.1038/373607a0.

- Rilea SL, Roskos-Ewoldsen B, Boles D. Sex differences in spatial ability: a lateralization of function approach. Brain Cogn. 2004;56:332–43. doi: 10.1016/j.bandc.2004.09.002.

- Johnson BW, McKenzie KJ, Hamm JP. Cerebral asymmetry for mental rotation: effects of response hand, handedness and gender. Neuroreport. 2002;13:1929–32. doi: 10.1097/00001756-200210280-00020.

- Rasmjou S, Hausmann M, Gunturkun O. Hemispheric dominance and gender in the perception of an illusion. Neuropsychologia. 1999;37:1041–7. doi: 10.1016/S0028-3932(98)00154-7.

- Bourne VJ. Lateralized processing of positive facial emotion: sex differences in strength of hemispheric dominance. Neuropsychologia. 2005;43:953–6. doi: 10.1016/j.neuropsychologia.2004.08.007.

- Lee TM, Liu HL, Hoosain R, Liao WT, Wu CT, Yuen KS, Chan CC, Fox PT, Gao JH. Gender differences in neural correlates of recognition of happy and sad faces in humans assessed by functional magnetic resonance imaging. Neurosci Lett. 2002;333:13–6. doi: 10.1016/S0304-3940(02)00965-5.

- Kemp AH, Silberstein RB, Armstrong SM, Nathan PJ. Gender differences in the cortical electrophysiological processing of visual emotional stimuli. Neuroimage. 2004;21:632–646. doi: 10.1016/j.neuroimage.2003.09.055.

- Killgore WD, Yurgelun-Todd DA. Sex differences in amygdala activation during the perception of facial affect. Neuroreport. 2001;12:2543–7. doi: 10.1097/00001756-200108080-00050.

- Wager TD, Phan KL, Liberzon I, Taylor SF. Valence, gender, and lateralization of functional brain anatomy in emotion: a meta-analysis of findings from neuroimaging. Neuroimage. 2003;19:513–31. doi: 10.1016/S1053-8119(03)00078-8.

- Pardo JV, Pardo PJ, Raichle ME. Neural correlates of self-induced dysphoria. Am J Psychiatry. 1993;150:713–719. doi: 10.1176/ajp.150.5.713.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Bentin S, Deouell LY, Soroker N. Selective visual streaming in face recognition: evidence from developmental prosopagnosia. Neuroreport. 1999;10:823–7. doi: 10.1097/00001756-199903170-00029.

- Caldara R, Rossion B, Bovet P, Hauert CA. Event-related potentials and time course of the "other-race" face classification advantage. Neuroreport. 2004;15:905–10. doi: 10.1097/00001756-200404090-00034.

- Campanella S, Hanoteau C, Depy D, Rossion B, Bruyer R, Crommelinck M, Guerit JM. Right N170 modulation in a face discrimination task: an account for categorical perception of familiar faces. Psychophysiol. 2000;37:796–806. doi: 10.1017/S0048577200991728.

- Esslen M, Pascual-Marqui RD, Hell D, Kochi K, Lehmann D. Brain areas and time course of emotional processing. Neuroimage. 2004;21:1189–203. doi: 10.1016/j.neuroimage.2003.10.001.

- George N, Jemel B, Fiori N, Chaby L, Renault B. Electrophysiological correlates of facial decision: Insights from upright and upside-down Mooney-face perception. Cogn Brain Res. 2005;310:663–673. doi: 10.1016/j.cogbrainres.2005.03.017.

- Gliga T, Dehaene-Lambertz G. Structural encoding of body and face in human infants and adults. J Cogn Neurosci. 2005;17:1328–40. doi: 10.1162/0898929055002481.

- Herrmann MJ, Ehlis AC, Ellgring H, Fallgatter AJ. Early stages (P100) of face perception in humans as measured with event-related potentials (ERPs). J Neural Transm. 2005;112:1073–81. doi: 10.1007/s00702-004-0250-8.

- Holmes A, Winston JS, Eimer M. The role of spatial frequency information for ERP components sensitive to faces and emotional facial expression. Cogn Brain Res. 2005;25:508–20. doi: 10.1016/j.cogbrainres.2005.08.003.

- Itier RJ, Taylor MJ. Effects of repetition learning on upright, inverted and contrast-reversed face processing using ERPs. Neuroimage. 2004;21:1518–32. doi: 10.1016/j.neuroimage.2003.12.016.

- Itier RJ, Taylor MJ. Source analysis of the N170 to faces and objects. Neuroreport. 2004;15:1261–5. doi: 10.1097/01.wnr.0000127827.73576.d8.

- Itier RJ, Taylor MJ. N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cereb Cortex. 2004;14:132–42. doi: 10.1093/cercor/bhg111.

- Kovacs G, Zimmer M, Banko E, Harza I, Antal A, Vidnyanszky Z. Electrophysiological Correlates of Visual Adaptation to Faces and Body Parts in Humans. Cereb Cortex. 2005. doi: 10.1093/cercor/bhj020.

- Latinus M, Taylor MJ. Holistic processing of faces: learning effects with Mooney faces. J Cogn Neurosci. 2005;17:1316–27. doi: 10.1162/0898929055002490.

- Rossion B, Delvenne JF, Debatisse D, Goffaux V, Bruyer R, Crommelinck M, Guerit JM. Spatio-temporal localization of the face inversion effect: an event-related potentials study. Biol Psychol. 1999;50:173–89. doi: 10.1016/S0301-0511(99)00013-7.

- Rousselet GA, Mace MJ, Fabre-Thorpe M. Animal and human faces in natural scenes: How specific to human faces is the N170 ERP component? J Vis. 2004;4:13–21. doi: 10.1167/4.1.2.

- Yovel G, Levy J, Grabowecky M, Paller KA. Neural correlates of the left-visual-field superiority in face perception appear at multiple stages of face processing. J Cogn Neurosci. 2003;15:462–74. doi: 10.1162/089892903321593162.

- Urbach TP, Kutas M. The intractability of scaling scalp distributions to infer neuroelectric sources. Psychophysiol. 2002;39:791–808. doi: 10.1111/1469-8986.3960791.