SYNOPSIS

Objectives

Death certificate data are used to estimate state and national incidence of traumatic brain injury (TBI)-related deaths. This study evaluated the accuracy of this estimate in Oklahoma and examined the case characteristics of those persons who experienced a TBI-related death but whose death certificate did not reflect a TBI.

Methods

Data from Oklahoma's vital statistics multiple-cause-of-death database and from the Oklahoma Injury Surveillance System database were analyzed for TBI deaths that occurred during 2002. Cases were defined using the Centers for Disease Control and Prevention (CDC) ICD-10 code case definition. In multivariate analysis using a logistic regression model, we examined the association between case characteristics and the absence of a death certificate indicating TBI for persons who experienced a TBI-related death.

Results

Overall, sensitivity of death certificate-based surveillance was 78%. The majority (62%) of missed cases were due to listing "multiple trauma" as the cause of death. Death certificate surveillance was more likely to miss TBI-related deaths among traffic crashes, falls, and persons aged ≥65 years. After adding missed cases to cases captured by death certificate surveillance, traffic crashes surpassed firearm fatalities as the leading external cause of TBI-related death.

Conclusions

Death certificate surveillance underestimated TBI-related death in Oklahoma and might lead to national underreporting. More accurate and detailed completion of death certificates would result in better estimates of the burden of TBI-related death. Educational efforts to improve death certificate completion could substantially increase the accuracy of mortality statistics.

Traumatic brain injury (TBI) is a serious public health problem and a substantial cause of morbidity and mortality in the United States.1–8 Each year, approximately 1.4 million persons sustain a TBI; 235,000 are hospitalized and 50,000 die from their injury.7 The yearly direct and indirect cost of TBI has been estimated at $56 billion.9 Because the public health burden estimated through surveillance from mortality statistics guides public health policy, funding, and prevention, verifying the accuracy of these data is critical.

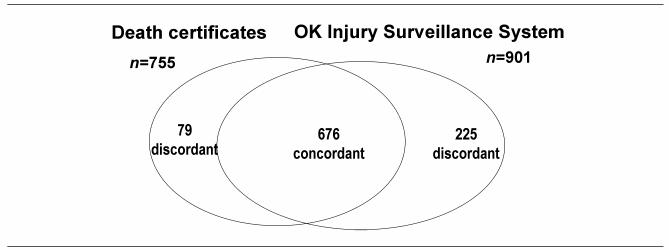

The Centers for Disease Control and Prevention's (CDC's) National Center for Injury Prevention and Control publishes standards for central nervous system injury surveillance.10 These standards include a case definition for TBI mortality that encompasses a group of International Classification of Diseases (ICD)-10 codes, used in death certificates, to identify TBI-related death (Figure 1). The standards also identify a group of ICD-9-Clinical Modification (CM) codes, used in hospital discharge records, that represent a TBI diagnosis (Figure 1).10 Nationally, death certificates are the primary tool in mortality surveillance.11 In 2002, Oklahoma was one of 11 states funded to maintain a supplemental surveillance system for TBI morbidity and mortality, drawing on hospital discharge and death certificate records.10 Oklahoma also examines medical examiner data to obtain more information on TBI mortality.

Figure 1.

ICD-10 and ICD-9-CM codes for TBI-related death

TBI = traumatic brain injury

The accuracy of death certificate data has been evaluated for a variety of diseases and injuries.12–18 For example, it has been estimated that diabetes is recorded on only 36% of death certificates, and fatal motorcycle injuries are underreported by 38%.12,19 In contrast, cardiovascular disease has been estimated to be overrepresented on death certificates by 25% to 80%.15,20

In 1993, the sensitivity of New Mexico death certificate data was evaluated for head and neck injury using medical examiner data as the gold standard. The evaluation calculated the sensitivity for ICD-9 TBI and neck injury codes on death certificates to be 76.7%.21 The accuracy of death certificate surveillance has not been evaluated using the ICD-10 coding guidelines and the case definition delineated by the CDC.

The purpose of this study was to describe the accuracy of death certificate surveillance for TBI mortality in 2002 in Oklahoma. By ascertaining the accuracy of TBI surveillance, we can better guide and evaluate prevention strategies and public policy efforts by targeting the leading causes of TBI death. By comparing deaths from the supplemental statewide injury surveillance system for fatal and nonfatal TBI in Oklahoma with the death certificate data, we calculated the sensitivity and predictive value positive and analyzed the groups most likely to be missed by death certificate-based surveillance.

METHODS

Case identification and case definition

Data from Oklahoma's vital statistics multiple-cause-of-death database were analyzed for deaths that occurred during 2002. The data were compiled from death certificates submitted to the Oklahoma State Department of Health (OSDH). Causes of death and manner of death (natural, accident, homicide, suicide, or undetermined) were recorded on the death certificate by either attending physicians or medical examiners and sent to the CDC's National Center for Health Statistics (NCHS) for coding according to World Health Organization (WHO) guidelines.22 To be included in the death certificate database, the death certificate was coded with at least one ICD-10 code representing CDC-defined TBI (Figure 1).23

Data from the supplemental Oklahoma Injury Surveillance System database were analyzed for the same timeframe. To be included in the Oklahoma Injury Surveillance System, there was either a medical examiner report that described a TBI or a hospital medical record that contained one or more ICD-9-CM hospital discharge codes indicating CDC-defined TBI in a person who died. OSDH staff epidemiologists reviewed medical records of persons with an ICD-9-CM code reflecting a TBI and recorded this information on a chart abstraction form.

To be included as a case of TBI-related death for this study, the death certificate, medical examiner report, or chart abstraction form had to have a diagnosis or description that met the CDC ICD-10 code case definition for a TBI-related death.

Data linkage

The two databases were linked using SAS LinkPro Version 3.01, a probabilistic linking software.24 They were linked using the following variables: the Soundex (a phonetic transliteration) of the last name and first name, date of death, date of birth, sex, and age.
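As an illustration of the phonetic key used in the linkage, the sketch below implements the common American Soundex rules. The article does not describe which Soundex variant LinkPro applies, so the function name `soundex` and the exact rules here are assumptions rather than a reproduction of the software's behavior.

```python
def soundex(name: str) -> str:
    """Return a 4-character American Soundex code (illustrative sketch only)."""
    groups = (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
              ("L", "4"), ("MN", "5"), ("R", "6"))
    codes = {ch: digit for letters, digit in groups for ch in letters}

    letters = [c for c in name.upper() if c.isalpha()]
    if not letters:
        return ""

    result = letters[0]                 # the first letter is kept as-is
    prev = codes.get(letters[0], "")    # its code still suppresses a repeat
    for ch in letters[1:]:
        if ch in "HW":
            continue                    # H and W do not separate equal codes
        code = codes.get(ch, "")        # vowels and Y map to no code
        if code and code != prev:
            result += code
        prev = code                     # a vowel resets the previous code
    return (result + "000")[:4]         # pad or truncate to four characters


# Names that sound alike share a key, so spelling variants can still agree.
print(soundex("Robert"), soundex("Rupert"))
```

In a probabilistic linkage, agreement on such a key would be only one of several weighted comparisons, alongside date of death, date of birth, sex, and age.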

Case classification

The linked or concordant records from the two databases were assumed to be cases of TBI-related death. To validate this assumption, we reviewed a 10% (n=70) random sample of the concordant pairs.

Death certificates, medical examiner reports, and chart abstraction forms were requested on all discordant records. Each record was examined manually to determine if the record contained TBI information in any cause-of-death field that would have met the CDC ICD-10 code case definition (Figure 1). If a death certificate was reviewed and the coding did not match the written diagnoses, the certificate was sent to NCHS for verification of the correct coding.

Sensitivity and predictive value positive (PVP) have historically been calculated in relation to a gold standard (e.g., laboratory tests). There is no traditional gold standard in TBI mortality surveillance. Therefore, we calculated the sensitivity and PVP by comparing the two databases to each other.

Sensitivity was defined as the proportion of true TBI-related deaths identified as TBI by death certificate surveillance (true positives divided by the sum of the true positives and false negatives). Predictive value positive was defined as the proportion of TBI-related deaths identified by death certificate surveillance that were true TBI-associated death events (true positives divided by the sum of the true positives and false positives).25
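Written as formulas, with TP, FN, and FP as shorthand introduced here (not the article's own notation) for true positives, false negatives, and false positives:

$$
\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad \text{PVP} = \frac{TP}{TP + FP}
$$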

Variables and analytic strategy

We compared the TBI-related deaths meeting the CDC case definition that were captured by death certificate surveillance to those captured only through the Oklahoma Injury Surveillance System. The dependent variable was the presence or absence of a death certificate indicating TBI-related death. We considered the following independent variables:

Sex: men and women

Age: 0–64 and ≥65 years

Race: white and non-white

Cause of death: traffic crashes, falls, firearm fatalities, and other causes (reference group: firearm fatalities)

Day of week of death: weekday and weekend

County of death: metropolitan and non-metropolitan as determined by the U.S. Census.26

We used logistic regression to determine whether there was an association between the variables identified above and being missed by death certificate surveillance. To create a multivariate model, we first performed univariate logistic regression analysis on each variable and retained those variables with p<0.125 by the likelihood ratio test. All the retained variables were considered in a multivariate model. With a forward selection procedure, we determined which variables could be excluded from the model. We also assessed any potential interactions. To determine how well our model fit our observations, we calculated the Hosmer and Lemeshow goodness-of-fit test and performed regression diagnostics using Pearson and deviance residual analysis.
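The univariate screening step can be sketched for a single binary variable. For a binary predictor, the likelihood ratio test comparing a logistic model with and without that predictor equals the G² test of independence on the corresponding 2×2 table, which needs only the standard library. The function name `lr_test_2x2` and all counts below are hypothetical; the study itself used SAS, and this is not its code.

```python
import math


def lr_test_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Likelihood ratio (G^2) test for a 2x2 table, 1 degree of freedom.

    a: exposed and missed      b: exposed and captured
    c: unexposed and missed    d: unexposed and captured
    """
    n = a + b + c + d
    cells = (
        (a, a + b, a + c),
        (b, a + b, b + d),
        (c, c + d, a + c),
        (d, c + d, b + d),
    )
    g2 = 0.0
    for observed, row_total, col_total in cells:
        if observed > 0:  # an empty cell contributes nothing to the sum
            g2 += 2 * observed * math.log(observed * n / (row_total * col_total))
    g2 = max(g2, 0.0)  # guard against tiny negative rounding error
    # Upper tail of a chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(g2 / 2))
    return g2, p_value


# Hypothetical counts for one candidate variable (not taken from the study).
g2, p = lr_test_2x2(30, 70, 10, 90)
retain = p < 0.125  # screening threshold stated in the text
print(f"G2={g2:.2f}, p={p:.4f}, retain={retain}")
```

Variables with more than two levels, such as cause of death, would need the general logistic regression likelihood ratio test rather than this 2×2 shortcut.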

Statistical analyses were performed using SAS 9.1.27 Associations were considered statistically significant at p<0.05.

RESULTS

Linkage results: sensitivity and predictive value positive

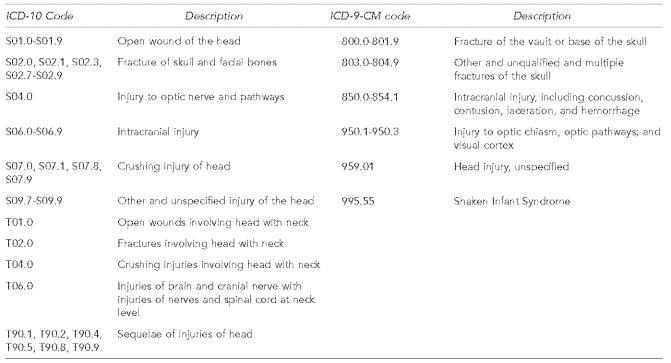

Figure 2 represents the probabilistic linkage results of the death certificate database with the Oklahoma Injury Surveillance System database. Nine hundred eighty records were identified as TBI-related deaths by either or both systems. Six hundred seventy-six records were identified by both systems (i.e., concordant records). Of the 70 concordant records randomly selected for validation of case status, 100% met case definition for this study. We identified 225 records that were captured only by the Oklahoma Injury Surveillance System and 79 records captured only by death certificate surveillance (i.e., discordant records).

Figure 2.

Results of linkage of TBI-related death records between death certificate-based surveillance and Oklahoma's Injury Surveillance System—Oklahoma 2002

TBI = traumatic brain injury

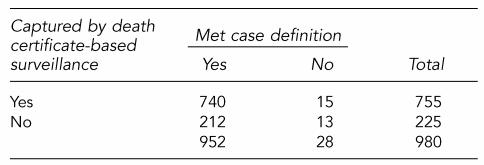

Table 1 represents the classification of cases after manual review of death certificates, medical examiner reports, and chart abstraction forms for the discordant records. A total of 952 (97%) of the records met the case definition. Of the 755 records captured by death certificate surveillance, 15 were identified as false positives. Predictive value positive of death certificate surveillance was calculated at 98%. Of the 15 false positives, 12 resulted from errors in assigning the ICD-10 code. NCHS reviewed these certificates and assigned the correct ICD-10 codes, which did not include a TBI ICD-10 code. In the remaining three, the record was never updated to reflect an amendment to the original death certificate indicating that a TBI did not occur.

Table 1.

Classification of records after manual review of discordant cases between death certificates and the Oklahoma Injury Surveillance System—Oklahoma 2002

Sensitivity: 78%

Predictive value positive: 98%

Of the 952 records that met case definition for TBI-related death, 212 were identified as false negatives (Table 1). Sensitivity of death certificate surveillance was calculated at 78%. The majority (91%) of the false negatives were due to incorrect or incomplete death certificates (n=192). Approximately two-thirds (69%) of these had the diagnosis of "multiple trauma" recorded (i.e., no specific causes of death were listed on the death certificate); 86% (n=114) of the multiple trauma records were traffic crashes. Medical examiners certified 100% of the multiple trauma records. Of the remainder of incorrect or incomplete death certificates, over half (52%) were falls and two-thirds (63%) were among persons aged ≥65 years. Eight percent (n=17) of missed records were errors in the coding process and 1% (n=3) of the death certificates could not be located. Finally, 13 records were misclassified by OSDH epidemiologists as TBI-related deaths in the Oklahoma Injury Surveillance System when the information provided in the chart or medical examiner report did not meet the CDC case definition (i.e., true negatives).
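The reported sensitivity and PVP can be checked from the counts given in this section. In the sketch below, the variable names are illustrative, and the true-positive count of 740 is not stated in the text; it is derived here as the 755 records captured by death certificate surveillance minus the 15 false positives.

```python
# Counts reported in the Results (Oklahoma, 2002).
concordant = 676         # records identified by both systems
oiss_only = 225          # Oklahoma Injury Surveillance System only
death_cert_only = 79     # death certificate surveillance only
false_positives = 15     # death certificate records without a TBI on review
false_negatives = 212    # true cases missed by death certificate surveillance
oiss_non_cases = 13      # surveillance-system records not meeting the definition

total_records = concordant + oiss_only + death_cert_only    # 980
captured = concordant + death_cert_only                     # 755
true_positives = captured - false_positives                 # 740 (derived)
cases = true_positives + false_negatives                    # 952

# Consistency checks against the figures stated in the text.
assert total_records == 980
assert cases == total_records - false_positives - oiss_non_cases == 952
assert oiss_only == false_negatives + oiss_non_cases

sensitivity = true_positives / (true_positives + false_negatives)
pvp = true_positives / (true_positives + false_positives)
print(f"sensitivity = {sensitivity:.1%}, PVP = {pvp:.1%}")
```

Both values round to the reported 78% and 98%.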

Univariate analysis

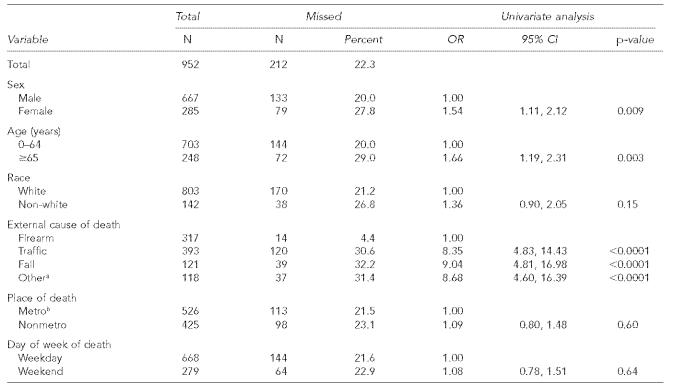

Table 2 represents the description of the population and the results from univariate analysis. Females, persons aged ≥65 years, and persons dying in traffic crashes, falls, and other external causes of death had significantly higher odds of being missed by death certificate-based surveillance. Race, place of injury (metropolitan or non-metropolitan), and day of death (weekday or weekend) were not associated with being missed by death certificate surveillance.

Table 2.

Description of TBI-related death missed by death certificate-based surveillance and univariate analysis—Oklahoma 2002

Other external causes of death include all those not listed above

Metropolitan Statistical Area (MSA) determined by U.S. Census population estimates. Available from: URL: http://www.census.gov/population/www/estimates/metrodef.html

TBI = traumatic brain injury

OR = odds ratio

CI = confidence interval

Missed fall deaths were more likely than other external causes of death to be certified as "natural" rather than "injury related" on the death certificate (odds ratio [OR]=48.0; 95% confidence interval [CI] 17.56, 131.22; p<0.0001). An attending physician completed 75% of the death certificates where a fall death was certified as natural. Eighty-five percent of missed fall deaths were persons aged 65 and older. Overall, of the 952 records that met case definition, 9% (n=84) of the death certificates were completed by an attending physician rather than by the medical examiner. The missed records were more likely to have their death certified by an attending physician than by the medical examiner (OR=3.48; 95% CI 2.14, 5.65; p<0.0001). Before adjusting for missed cases, firearm-related incidents were the leading external cause of TBI-related death, accounting for 41% of all cases. After the adjustment, traffic crashes were the leading cause, accounting for 41% of all cases, while firearm-related deaths accounted for 33%.
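For readers who want to see how an odds ratio and its 95% confidence interval arise from a 2×2 table, a minimal sketch follows. It uses the standard cross-product ratio with a Wald interval on the log scale, which matches what a logistic model with one binary predictor would give. The cell counts behind the ORs above are not given in the text, so the function name `odds_ratio_ci` and the counts in the example are hypothetical.

```python
import math


def odds_ratio_ci(a: int, b: int, c: int, d: int, z: float = 1.96):
    """Odds ratio and Wald confidence interval for a 2x2 table.

    a: exposed with outcome      b: exposed without outcome
    c: unexposed with outcome    d: unexposed without outcome
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("all four cells must be positive for this estimate")
    odds_ratio = (a * d) / (b * c)
    # Standard error of the log odds ratio.
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical counts, chosen only to show the calculation.
or_, lo, hi = odds_ratio_ci(10, 20, 5, 40)
print(f"OR={or_:.2f}; 95% CI {lo:.2f}, {hi:.2f}")
```

An interval that excludes 1, as in both ORs reported above, corresponds to a statistically significant association at the 0.05 level.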

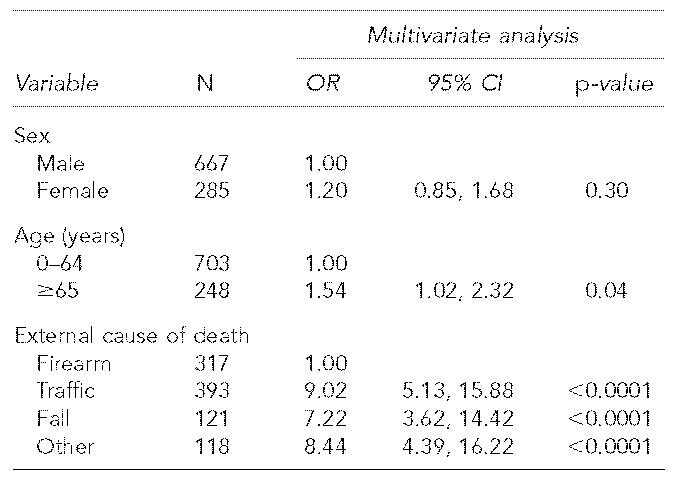

Multivariate analysis

The final multivariate model included sex, age, and external cause of death as variables (Table 3). Persons dying in traffic crashes, falls, and other external causes of death had significantly higher odds of being missed by death certificate-based surveillance when compared to firearm deaths. Persons aged ≥65 years also were more likely to be missed than those under 65. In multivariate analysis, in contrast to the univariate results, women were no longer more likely to be missed by death certificate-based surveillance. There were no significant interactions among the variables.

Table 3.

Multivariate logistic regression model of variables associated with being missed by death certificate-based surveillance—Oklahoma 2002

OR = odds ratio

CI = confidence interval

The Hosmer and Lemeshow goodness-of-fit p-value was 0.9910. In addition, the residual analysis yielded no remarkable outliers or any systematic pattern of variation.

DISCUSSION

To our knowledge, this is the first report on the sensitivity of death certificate data using the CDC case definition of TBI-related death based on ICD-10 codes. This analysis indicates that death certificate-based surveillance underestimated TBI-related deaths by 22% in Oklahoma in 2002. The sensitivity is comparable to the 77% found in a similar evaluation that examined ICD-9 data for neck and TBI-related death.21 When missed death certificates were manually reviewed, 91% of those death certificates were certified "multiple trauma" or were incompletely or incorrectly completed by the physician. This led to certain groups being underrepresented, such as persons aged ≥65 years, and persons dying from traffic crashes, falls, and other external causes of death. Our results indicate that medical examiners often record only the general diagnosis "multiple trauma" when there are several potentially fatal injuries. When these TBI-related deaths with the "multiple trauma" diagnosis were added to those already identified, traffic crashes overtook firearms as the leading cause of TBI-related death in Oklahoma. To capture these missed records, vital statistics should request that medical examiners list all major injuries on the immediate cause of death line of the death certificate so that each injury can be captured in multiple-cause coding.

Although persons who died from falls accounted for only 18% of missed TBI-related deaths, their manner of death was more likely to be certified as natural. The majority of fall-related deaths were in those aged ≥65 years. These persons had substantial co-morbidities, possibly resulting in the manner of death being certified as natural rather than injury-related. In addition, although state statute requires all injury-related deaths to be referred to the medical examiner, attending physicians completed death certificates for 9% of the 952 cases that met case definition and were more likely to complete the death certificate in missed cases. It is possible that some physicians were unaware of the requirement to notify the medical examiner about an injury-related death or that the attending physician placed more emphasis on natural co-morbidities and did not feel the need to notify the medical examiner.

The study had certain limitations. First, our analysis did not identify individuals who died of a TBI but were not identified with a TBI on their death certificate, medical examiner report, or hospital discharge record. Including these persons would lower the calculated sensitivity, albeit minimally. Second, we analyzed only one year of data and although the overall sample size was large (n=952), racial subgroups were small and we were unable to analyze individual groups. Last, accuracy in completion of death certificates might vary by state and Oklahoma's estimate might not be comparable to a national evaluation of sensitivity.

This study did not use a gold standard to calculate PVP and sensitivity but rather used Oklahoma's Injury Surveillance System with data collected from medical examiner and hospital discharge data to identify records missed by death certificate-based surveillance. Unlike traditional examples of calculating PVP and sensitivity in a laboratory setting when there is a true gold standard, single-source injury surveillance systems will never be a complete source of data due to human or computerized coding errors. Although some authors have used a medical examiner system as the gold standard,21 Dijkhuis et al. determined that 68.7% of injury-related deaths were captured through medical examiner reports and our study indicated that 9% of TBI-related deaths were not referred to the medical examiner.28 This is why the injury field in general has implemented multiple-source surveillance systems like the National Violent Death Reporting System29 and Census of Fatal Occupational Injuries.30 By linking multiple databases, we can attempt to identify all cases to gain a more accurate picture of the burden of disease or injury.18,31–34 This allows researchers to more accurately calculate the PVP and sensitivity of the surveillance system being examined.

The accuracy of death certificate data has direct consequences on research and estimation of burden of injury. If this trend is consistent across other states, national statistics are underestimating the burden of TBI-related death and undercounting certain groups. A more accurate description might lead to additional prevention efforts (e.g., focusing more resources on fall prevention in the elderly).

Death certificates can only be an accurate representation if they are completed correctly. Although physicians believe that death certificate information is important, they do not regularly receive formal instruction and many feel undertrained.35,36 Accuracy rates among physicians have been reported to range from 23% to 68%35,37–39 and simple educational campaigns can substantially improve the accuracy of death certificate completion.35,36 Statewide educational efforts training physicians on how to complete death certificates (e.g., on-line continuing education units) could also substantially improve the accuracy of mortality statistics.

Using multiple data sources can better estimate the burden of disease and injury and can facilitate prevention research and evaluation of existing data sources.31–33,40 By linking medical examiner and hospital discharge data to death certificate surveillance, we were able to assess the accuracy and completeness of death certificates as a surveillance tool and institute more quality control measures into both our surveillance systems. In addition, linked data can be better applied to quantify risk factor data. Death certificates lack risk factor information available from other sources such as medical examiner and criminal justice data (e.g., alcohol and drug use, relationship of perpetrator of homicide, and type of weapon used).18,21,31 This information is critical when planning and evaluating prevention efforts.

Evaluating public health surveillance systems is important and useful.25 Death certificate surveillance is available in all states, is readily accepted nationally for surveillance data, and is a stable source of information that readily lends itself to comparison from year to year. A finalized database, however, is not available until approximately 9–12 months after the calendar year, restricting the timeliness of the data. The standardized form of death certificates is not easily modified and coding is directed by WHO guidelines that cannot be changed at a local level.22 But while death certificate data are limited in timeliness and flexibility, death certificate surveillance continues to be a cost-effective method that all states can use to monitor TBI-related death.

Individual surveillance systems can be enhanced by linking multiple data systems across multiple jurisdictions.41 Both surveillance systems in this study serve their intended purposes and together give a more accurate picture of the burden of TBI mortality. To better evaluate national reporting of TBI-related deaths, we recommend other states with access to medical examiner and hospital discharge data replicate this evaluation to provide a more detailed and accurate description of the burden of traumatic brain injury.

Acknowledgments

This study was funded in part by CDC grant number U17/CCU611902.

The authors acknowledge Kenneth Stewart, Tracy Wendling, Derek Pate, and Sue Bordeaux for help with data management; Tabitha Garwe and Dr. Anindye De for help with data analysis; and Dr. Julie Magri for help preparing the manuscript.

REFERENCES

- 1. Adekoya N, Thurman DJ, White DD, Webb KW. Surveillance for traumatic brain injury deaths—United States, 1989–1998. MMWR Surveill Summ. 2002;51(10):1–14.

- 2. Archer P, Mallonee S, Lantis S. Horseback-riding-associated traumatic brain injuries—Oklahoma, 1992–1994. MMWR Morb Mortal Wkly Rep. 1996;45(10):209–11.

- 3. Gabella B, Hoffman R, Land G, Mallonee S, Archer P, Crutcher M, et al. Traumatic brain injury—Colorado, Missouri, Oklahoma, and Utah, 1990–1993. MMWR Morb Mortal Wkly Rep. 1997;46(1):8–11.

- 4. Adekoya N, Wallace LJD. Traumatic brain injury among American Indians/Alaska Natives—United States, 1992–1996. MMWR Morb Mortal Wkly Rep. 2002;51(14):303–5.

- 5. Cross J, Trent R, Adekoya N. Public health and aging: nonfatal fall-related traumatic brain injury among older adults—California, 1996–1999. MMWR Morb Mortal Wkly Rep. 2003;52(13):276–8.

- 6. Langlois JA, Kegler SR, Butler JA, Gotsch KE, Johnson RL, Reichard MS, et al. Traumatic brain injury-related hospital discharges: results from a 14-state surveillance system, 1997. MMWR Surveill Summ. 2003;52(4):1–20.

- 7. Langlois JA, Rutland-Brown W, Thomas KE. Traumatic brain injury in the United States. Atlanta: National Center for Injury Prevention and Control, Centers for Disease Control and Prevention; 2004.

- 8. Gabella B, Hoffman RE, Marine WW, Stallones L. Urban and rural traumatic brain injuries in Colorado. Ann Epidemiol. 1997;7:207–12. doi: 10.1016/s1047-2797(96)00150-0.

- 9. Thurman D. The epidemiology and economics of head trauma. In: Miller L, Hayes R, editors. Head trauma: basic, preclinical, and clinical directions. New York: Wiley and Sons; 2001.

- 10. Marr A, Coronado V, editors. Central nervous system injury surveillance data submission standards—2002. Atlanta: Centers for Disease Control and Prevention, National Center for Injury Prevention and Control; 2004.

- 11. Hoyert DL, Kung HC, Smith BL. Deaths: preliminary data for 2003. Natl Vital Stat Rep. 2005 Feb 28;53(15):1–48.

- 12. Lapidus G, Braddock M, Schwartz R, Banco L, Jacobs L. Accuracy of fatal motorcycle-injury reporting on death certificates. Accid Anal Prev. 1994;26:535–42. doi: 10.1016/0001-4575(94)90044-2.

- 13. Moolenaar RL, Etzel RA, Gibson Parrish R. Unintentional deaths from carbon monoxide poisoning in New Mexico, 1980 to 1988. A comparison of medical examiner and national mortality data. West J Med. 1995;163:431–4.

- 14. Kraus JF, Peek C, Silberman T, Anderson C. The accuracy of death certificates in identifying work-related fatal injuries. Am J Epidemiol. 1995;141:973–9. doi: 10.1093/oxfordjournals.aje.a117364.

- 15. Lloyd-Jones DM, Martin DO, Larson MG, Levy D. Accuracy of death certificates for coding coronary heart disease as the cause of death. Ann Intern Med. 1998;129:1020–6. doi: 10.7326/0003-4819-129-12-199812150-00005.

- 16. Coultas DB, Hughes MP. Accuracy of mortality data for interstitial lung diseases in New Mexico, USA. Thorax. 1996;51:717–20. doi: 10.1136/thx.51.7.717.

- 17. Washko RM, Frieden TR. Tuberculosis surveillance using death certificate data, New York City, 1992. Public Health Rep. 1996;111:251–5.

- 18. Comstock R, Mallonee S, Jordan F. A comparison of two surveillance systems for deaths related to violent injury. Inj Prev. 2005;11:58–63. doi: 10.1136/ip.2004.007567.

- 19. Will JC, Vinicor F, Stevenson J. Recording of diabetes on death certificates: has it improved? J Clin Epidemiol. 2001;54:239–44. doi: 10.1016/s0895-4356(00)00303-6.

- 20. Chugh SS, Jui J, Gunson K, Stecker EC, John BT, Thompson B. Current burden of sudden cardiac death: multiple source surveillance versus retrospective death certificate-based review in a large U.S. community. J Am Coll Cardiol. 2004;44:1268–75. doi: 10.1016/j.jacc.2004.06.029.

- 21. Nelson DE, Sacks JJ, Parrish RG, Sosin DM, McFeeley P, Smith SM. Sensitivity of multiple-cause mortality data for surveillance of deaths associated with head or neck injuries. MMWR Surveill Summ. 1993;42(5):29–35.

- 22. World Health Organization. International statistical classification of diseases and related health problems, 10th revision, 3 vols. Geneva: WHO; 1992. [cited 2005 Dec 26]. Also available from: URL: http://www.who.int/classifications/icd/en/

- 23. Centers for Disease Control and Prevention (US), National Center for Health Statistics. Instructions for classifying multiple causes of death, 2005. [cited 2005 Dec 26]. Available from: URL: http://www.cdc.gov/nchs/data/dvs/2b2005a.pdf.

- 24. InfoSoft Inc. The LinkPro v301. Winnipeg: InfoSoft Inc; 2004.

- 25. German RR, Lee LM, Horan JM, Milstein RL, Pertowski CA, Waller MN. Updated guidelines for evaluating public health surveillance systems: recommendations from the guidelines working group. MMWR Recomm Rep. 2001;50(RR-13):1–35.

- 26. Census Bureau (US), Population Division, Population Distribution Branch. Metropolitan statistical areas and components, December 2003. [cited 2005 Dec 26]. Available from: URL: http://www.census.gov/population/www/estimates/metrodef.html.

- 27. SAS Institute Inc. SAS 9.1. Cary (NC): SAS Institute; 2004.

- 28. Dijkhuis H, Zwerling C, Parrish G, Bennett T, Kemper HC. Medical examiner data in injury surveillance: a comparison with death certificates. Am J Epidemiol. 1994;139:637–43. doi: 10.1093/oxfordjournals.aje.a117053.

- 29. Centers for Disease Control and Prevention (US). National violent death reporting system implementation manual. Atlanta: Centers for Disease Control and Prevention, National Center for Injury Prevention and Control; 2003. [cited 2005 Dec 26]. Also available from: URL: http://www.cdc.gov/ncipc/pub-res/nvdrs-implement/default.htm.

- 30. Department of Labor (US), Bureau of Labor Statistics. Fatal occupational injuries in the United States, 1995–1999: a chartbook. Washington: Department of Labor (US), Bureau of Labor Statistics; [cited 2005 Dec 26]. Also available from: URL: http://www.bls.gov/opub/cfoichartbook/home.htm.

- 31. Lyman JM, McGwin G, Davis G, Kovandzic TV, King W, Vermund SH. A comparison of three sources of data on child homicide. Death Stud. 2004;28:659–69. doi: 10.1080/07481180490476515.

- 32. Pezzotti P, Piovesan C, Michieletto F, Zanella F, Rezza G, Gallo G. Estimating the cumulative number of human immunodeficiency virus diagnoses by cross-linking from four different sources. Int J Epidemiol. 2003;32:778–83. doi: 10.1093/ije/dyg202.

- 33. Schootman M, Harlan M, Fuortes L. Use of the capture-recapture method to estimate severe traumatic brain injury rates. J Trauma. 2000;48:70–5. doi: 10.1097/00005373-200001000-00012.

- 34. Archer PJ, Mallonee S, Schmidt AC, Ikeda RM. Oklahoma Firearm-Related Injury Surveillance. Am J Prev Med. 1998;15(3 Suppl):83–91. doi: 10.1016/s0749-3797(98)00054-3.

- 35. Messite J, Stellman SD. Accuracy of death certificate completion: the need for formalized physician training. JAMA. 1996;275:794–6.

- 36. Maudsley G, Williams EM. Death certification by house officers and general practitioners—practice and performance. J Public Health Med. 1993;15:192–201.

- 37. Myers KA, Farquhar DR. Improving the accuracy of death certification. CMAJ. 1998;158:1317–23.

- 38. Jordan JM, Bass MJ. Errors in death certificate completion in a teaching hospital. Clin Invest Med. 1993;16:249–55.

- 39. Lakkireddy DR, Gowda MS, Murray CW, Basarakodu KR, Vacek JL. Death certificate completion: how well are physicians trained and are cardiovascular causes overstated? Am J Med. 2004;117:492–8. doi: 10.1016/j.amjmed.2004.04.018.

- 40. Horan J, Mallonee S. Injury surveillance. Epidemiol Rev. 2003;25:24–42. doi: 10.1093/epirev/mxg010.

- 41. Bonnie RJ, Fulco CE, Liverman CT, editors. the Committee on Injury Prevention and Control, Institute of Medicine. Reducing the burden of injury: advancing prevention and treatment. Washington: National Academy Press; 1999. pp. 78–9.