Enormous investment has gone into computerised hospital information systems worldwide. The estimated costs for each large hospital are about $50m (£33m), yet the overall benefits and costs of hospital information systems have rarely been assessed.1 When systems are evaluated, about three quarters are considered to have failed,2 and there is no evidence that they improve the productivity of health professionals.3

To generate information that is useful to decision makers, evaluations of hospital information systems need to be multidimensional, covering many aspects beyond technical functionality.4 A major new information and communication technology initiative in South Africa5 gave us the opportunity to evaluate the introduction of computerisation into a new environment. We describe how the project and its evaluation were set up and examine where the project went wrong. The lessons learnt are applicable to the installation of all hospital information systems.

Summary points

Implementation of a hospital information system in Limpopo Province, South Africa, failed

Problems arose because of inadequate infrastructure as well as with the functioning and implementation of the system

Evaluation using qualitative and quantitative methods showed that the reasons for failure were similar to those in computer projects in other countries

Reasons for failure included not ensuring users understood the reasons for implementation from the outset and underestimating the complexity of healthcare tasks

Those responsible for commissioning and implementing computerised systems need to heed the lessons learnt to avoid further waste of scarce health resources

Development of the project

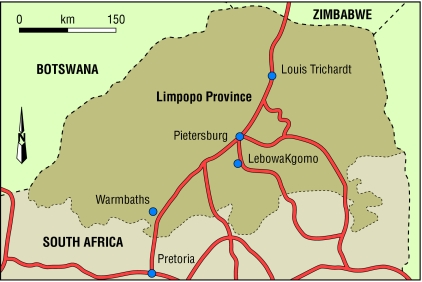

The project to install a computerised integrated hospital information system in Limpopo (Northern) Province (figure) was the biggest medical informatics project in Africa. The project was initiated in response to a national strategy to restructure health care after the 1994 elections. Changes included shifting resources from tertiary and secondary care to primary care, devolution of management to district level, and redistribution of resources in response to perceived geographical and sectoral need. The success of these initiatives required managerial, administrative, and clinical processes that were efficient, effective, and equitable. Adequate information was considered essential, not only to facilitate the tasks but also for short and long term monitoring.

Limpopo Province has 42 hospitals, comprising two mental health institutions, eight regional hospitals (two acting as a tertiary complex with teaching responsibilities), and 32 district hospitals. The area is one of the poorest in South Africa. IBM was awarded the contract for implementation at a cost of 134m Rand (nearly £14m), which represented 2.5% of the province's health and welfare annual budget.

Information system

The overall goal of the project was to improve the efficiency and effectiveness of health and welfare services through the creation and use of information for clinical, administrative, and monitoring purposes. Box 1 lists the objectives of the hospital information system and box 2 lists the functions required to support these objectives in each hospital.

Box 1.

Objectives of health information system

Box 2.

Planned functions for hospital information system

- Master patient index

- Admission, discharges, and transfers

- Patient record tracking

- Appointments

- Order entry and reporting of results

- Departmental systems for laboratory, radiology, operating theatre, other clinical services, dietary services, laundry

- Financial management

- Management information and hospital performance indicators

Each hospital would have its own server to manage all local data and distribute summary data on each patient encounter to other hospitals where the patient had been seen and to a central server at the Welfare and Health Information Technology Operations Centre in Pietersburg (now Polokwane). Demographic information and a list of health problems (coded using the International Classification of Diseases, ICD-10) for each patient would also be sent to the centre and other hospitals. The centre would therefore contain a master patient index and data down to encounter level from all 42 hospitals. This information could be used for management and epidemiological purposes.
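The planned data flow can be sketched in a few lines of Python. This is an illustrative model only, not the software that was installed: the class names (`EncounterSummary`, `HospitalServer`, `CentralServer`), the field names, the placeholder "Hospital B", the example ICD-10 code, and the idea that a hospital learns where a patient has been seen by consulting the centre's master patient index are all assumptions made for the sketch.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class EncounterSummary:
    """Summary of one patient encounter (hypothetical fields)."""
    patient_id: str
    hospital: str
    icd10_codes: tuple


@dataclass
class CentralServer:
    """Stands in for the provincial centre: master patient index plus encounter data."""
    master_patient_index: dict = field(default_factory=dict)  # patient_id -> hospitals visited
    encounters: list = field(default_factory=list)

    def receive(self, summary):
        visited = self.master_patient_index.setdefault(summary.patient_id, set())
        visited.add(summary.hospital)
        self.encounters.append(summary)


@dataclass
class HospitalServer:
    """Stands in for one hospital's local server."""
    name: str
    centre: CentralServer
    peers: dict = field(default_factory=dict)          # hospital name -> HospitalServer
    local_records: list = field(default_factory=list)  # encounters recorded here
    received: list = field(default_factory=list)       # summaries sent by other hospitals

    def record_encounter(self, patient_id, icd10_codes):
        summary = EncounterSummary(patient_id, self.name, tuple(icd10_codes))
        self.local_records.append(summary)
        # Assumption: the centre's index tells us where the patient was seen before.
        seen_at = self.centre.master_patient_index.get(patient_id, set()) - {self.name}
        for hospital_name in sorted(seen_at):
            self.peers[hospital_name].received.append(summary)
        self.centre.receive(summary)
        return summary


centre = CentralServer()
mankweng = HospitalServer("Mankweng", centre)
other = HospitalServer("Hospital B", centre)  # placeholder name
mankweng.peers["Hospital B"] = other
other.peers["Mankweng"] = mankweng

mankweng.record_encounter("P001", ["J18.9"])  # example ICD-10 code
other.record_encounter("P001", ["J18.9"])     # second visit, different hospital
```

After the second call, the centre holds both encounters and Mankweng has received a summary of the visit to the other hospital. Even this toy version depends on every server and network link being available, which is where the infrastructure problems described later were felt.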

The project was managed by a team consisting of staff from the Department of Health and Welfare, IBM, and its subcontractors. It was initiated in 1997 and a pilot system was introduced into Mankweng Hospital in September 1998. The remaining hospitals were to receive the system over the next 18 months.

Evaluation methods

We designed the evaluation programme to support the implementation (formative evaluation) as well as to assess the benefits and costs (summative evaluation).1 The summative evaluation was modelled on health technology assessment and so was more extensive than the traditional technical assessment of hardware and software. It aimed to assess daily use of the system, the clinical and managerial environment, and ultimately its effect on the quality of patient care and public health. The design drew on a range of disciplines and included representatives of all stakeholders.

The evaluation programme consisted of three separate but interlinked activities: an orientation study, creation of an evaluation framework, and designing the evaluation programme.

Orientation study

The aims of the orientation study were to identify the aspirations and expectations of potential users and give the designers a detailed understanding of what was required. We did a survey of knowledge, attitudes, and perception and asked users what questions the evaluation should include and about potential problems requiring preventive action. Interviews with 250 potential users generated 35 questions to be included in the evaluation.

Creation of evaluation framework

The 35 questions were presented to a workshop supported by the South Africa Health Systems Trust with representatives of 10 stakeholder groups. This resulted in expansion to 114 questions. Through a process of collation and distillation we incorporated the questions into 10 projects (box 3) to create an evaluation framework.

Box 3.

Projects in evaluation framework

- Assessing whether training, change management, and support are optimal

- Assessing whether the reliability of the system (including peripherals, network, hardware and software) is optimal

- Assessing the project management

- Assessing whether the system improves the communication of patient information between healthcare facilities

- Assessing whether data protection is adequate

- Assessing the quality and actual use of decision making information to support clinicians, hospital management, provincial health executives, and the public

- Assessing whether patient administration processes are more standardised and efficient

- Assessing whether costs per unit service are reduced

- Assessing whether revenue collection has improved

- Assessing whether information is used for audit or research

Designing the evaluation programme

The trust supported a second workshop to develop the evaluation programme. The workshop set out to:

- Consider the overall design of the evaluation

- Prioritise the projects in the evaluation framework

- Agree specific outcome indicators

- Provide technical advice on drafting the final protocol and proposal for submission to funding bodies

- Discuss the organisational structures required to support and implement the programme

The conclusion was that a randomised controlled trial would be the most robust method for the summative component of the evaluation together with a nested qualitative assessment. The plan was to randomise 24 similar district hospitals to an “early” or “late” implementation group and study various measures once the system had been implemented in half the hospitals. This design was acceptable to the commissioners of the system, the provincial health department, as it was rigorous and prevented them from being able to choose which hospitals would be computerised first. This was a sensitive issue at the time because of the second national elections.
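The allocation step of such a design can be illustrated with a short Python sketch. The article does not describe the actual randomisation procedure, so this assumes simple randomisation into two equal groups; the function name, the placeholder hospital names, and the seed value are all hypothetical.

```python
import random


def randomise_hospitals(hospitals, seed=None):
    """Split hospitals at random into equal 'early' and 'late' groups."""
    if len(hospitals) % 2 != 0:
        raise ValueError("need an even number of hospitals for equal groups")
    rng = random.Random(seed)   # a fixed seed makes the allocation reproducible
    shuffled = list(hospitals)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"early": sorted(shuffled[:half]), "late": sorted(shuffled[half:])}


# Placeholder names for the 24 similar district hospitals.
hospitals = [f"District hospital {i:02d}" for i in range(1, 25)]
allocation = randomise_hospitals(hospitals, seed=2024)  # arbitrary seed
```

Because the allocation is determined by the random number generator rather than by the commissioners, nobody can choose which hospitals are computerised first, and recording the seed allows the allocation to be audited afterwards.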

The ethics committee of the Medical University of Southern Africa approved the full study protocol (see bmj.com), and the health systems trust agreed to fund it. A final evaluation report was submitted to the trust in 2002.6

Failures in implementation

Problems began to occur with the implementation in 1999. These can be divided into problems related to infrastructure, application, and organisation of the implementation process. Examples of the infrastructure problems include difficulties identifying appropriate computer rooms with air conditioning and reliable power. Mankweng Hospital was on the same circuit as the local bakery, and damaging power surges occurred when the ovens were switched on early in the morning. Installation of local networks was delayed by a concurrent programme to upgrade hospitals.

Application problems related to the functionality and reliability of the system. Because there were too many proposed functions to implement in one phase, some hospitals ended up trying to run the information system in a reduced form in parallel with separate pharmacy and laboratory systems. Many of the modules that were proposed in the initial plan were not created in time. The software also used advanced features, such as replication, that staff then had to be trained in, causing delays. Certain key aspects of the system were not given adequate attention, such as reliability and ease of making printouts.

Poor organisation of the implementation left users dissatisfied. Getting the basic functions of the system working received priority over management reporting, thereby reducing the immediate benefit to decision makers. Failure to respond quickly to computer malfunctions resulted in some hospitals not having their computers functioning for up to six weeks at a time. Selective password access meant that some staff were unable to access the system, which also led to antagonism.

We modified the randomised component of the evaluation to an externally controlled before and after design as the system needed to be implemented early in four control hospitals that had adequate infrastructure. The original IBM contract ended in 2000. The system was not working in all the hospitals, and the contract was therefore retendered. In the 24 study hospitals that had the system installed, we found no significant differences in the quantitative outcome variables analysed (median time spent in outpatients, length of stay, bed occupancy, number of drug prescriptions per patient, improved revenue collection, cost per case, and number of referrals).

The new commission was awarded to Ethniks. However, rather than extending the original system, Ethniks tried to introduce a new one. Less than a year into the new contract, the modified system was continuing to fail to meet expectations. A formal review suggested irregularities in the awarding of the second contract7 and recommended that the project should be put on hold, payments be stopped, and money already forwarded recovered.

Reasons for failure

The reasons for failure of the Limpopo system are discussed below (box 4). South Africa was undergoing one of the greatest changes in the country's history, and this was reflected in the hospital information system project. Although the magnitude of change facing health systems in other countries is different, the issues are common.

Box 4.

Why are computerised health information systems prone to failure?

- Failure to take into account the social and professional cultures of healthcare organisations and to recognise that education of users and computer staff is an essential precursor

- Underestimation of the complexity of routine clinical and managerial processes

- Dissonance between the expectations of the commissioner, the producer, and the users of the system

- Implementation of systems is often a long process in a sector where managerial change is rapid and corporate memory is short

- “My baby” syndrome

- Reluctance to stop putting good money after bad

- Failure of developers to look for and learn lessons from past projects

Failure to take account of healthcare cultures

The information system was intended to drive the managerial restructuring of the whole province and a new way of delivering health care in one of the most deprived provinces in the country. Although this was understood at provincial level, local priorities remained the securing of basic ingredients of health care—that is, sufficient well trained staff with adequate access to equipment.

The information system initially increased the workload of staff, but they received insufficient education before the system was introduced. As in many other projects, our evaluation highlighted that educational efforts concentrated too much on “how” to work the system rather than “why” it should be used and were often started too late in the implementation phase.

Underestimating the complexity of healthcare processes

Most healthcare interactions occur in the context of apprehension, anxiety, and time pressure. For a patient worried about the reasons for visiting the hospital and a provider concerned with managing the clinical needs of the patient, any additional activity not considered essential to alleviating their immediate concerns will be unwelcome. This means that even the most basic administrative tasks have more complex dimensions than equivalent tasks in the non-health sector. Add in the complexity of the average patient pathway through the health system, and you begin to understand the challenges faced by anyone implementing a hospital information system.

Different expectations of commissioner, developer, and users

The degree of clash in understanding between those who commission the system, the developers, and hospital staff who use it is rarely appreciated. Paradoxically, the problem may be exacerbated by people with both clinical and computer experience. Although such people are valuable as “product champions” to support implementation, unless they are kept in balance they can inappropriately push commissioners beyond what is sustainable on a routine basis by staff with no special interest in information technology.

Long implementation in context of fast managerial change

Healthcare management is changing rapidly and staff often switch responsibility. This means that project teams overseeing extended programmes are rarely in post for the whole period. The head of department and many senior individuals who supported the original project changed during the Limpopo project.

“My baby” syndrome

Most new interventions in health care are driven by entrepreneurs who have great faith in their project. They may not be capable of standing back and taking a dispassionate view of the cost effectiveness of the interventions. In this case, the implications of an emerging national policy that was encouraging modular systems—that is, pharmacy and radiology that could be linked rather than fully integrated—were not fully assimilated.

Reluctance to stop putting good money after bad

When we look back at unsuccessful projects, it is often clear when the process started going wrong. However, it is more difficult to assess whether the subsequent worsening could have been rectified and, if not, when funding should have been withdrawn. At the time, it is often easier to continue to inject resources in an attempt to achieve a result. If fundamental underlying factors are not corrected, the project will still fail but at additional cost.

Failure to look for and learn lessons from past projects

Evaluation of expensive healthcare interventions often fails to take an overall view. Managers usually monitor costs and meeting of contractual milestones, whereas academics or health economists assess effectiveness and overall worth (cost effectiveness). This fragmentation of responsibility (often with an absence of external and unbiased observers) can result in quite large deficits being missed until it is too late.

Conclusions

The failure of implementation resulted in the failed aspirations of many dedicated information technology staff, health managers, and other professionals. Most demoralising, however, is the lost contribution that the initial £14m plus £6.2m for the second contract could have made to health care in one of the poorest regions of South Africa. Nevertheless, this story is not unique to developing countries. The United Kingdom has had its share of failed health information systems, wasted millions, and disciplinary hearings.8,9

The computer industry has flourished by portraying its products as essential for efficient and effective health care. Until this is proved by experience and sound research, scepticism is required. The errors described above will continue to be replicated until the unique nature of hospital information systems is recognised and properly designed evaluation is built into all contracts at the beginning.

Supplementary Material

Figure.

Limpopo Province in South Africa

Acknowledgments

We thank the participants of both workshops and the health systems trust for funding them.

Footnotes

Competing interests: None declared.

The protocol of the evaluation programme is available on bmj.com

References

- 1. Friedman C, Wyatt J. Evaluation methods in medical informatics. New York: Springer-Verlag; 1997.

- 2. Willcocks L, Lester S. Evaluating the feasibility of information technology, research. Oxford: Oxford Institute of Information Management; 1993. (Discussion paper DDP 93/1.)

- 3. Gibbs W. Taking computers to task. Sci Am. 1997;278:64–71.

- 4. Kaplan B. Development and acceptance of medical information systems: an historical overview. J Health Hum Resour Adm. 1988;11:9–29.

- 5. Herbst K, Littlejohns P, Rawlinson J, Collinson M, Wyatt JC. Evaluating computerised health information systems: hardware, software and human ware. Experiences from Northern Province, South Africa. J Public Health Med. 1999;21:305–310. doi: 10.1093/pubmed/21.3.305.

- 6. Health Systems Trust. Evaluation of hospital information system in the Northern Province in South Africa. www.hst.org.za/research/hisnp.htm (accessed February 2003).

- 7. Irregularities in health tender. www.news24.com/contentDisplay/level4Article/0,1113,2-1134_1123426,00.html (accessed September 2002).

- 8. Audit Commission. For your information: a study of information management and systems in the acute hospital. London: HMSO; 1995.

- 9. National Audit Office. The 1992 and 1998 IM&T strategies of the NHS Executive. London: Stationery Office; 1999. (HC371, 1998-99.)
