Abstract

Background

The long-term effect of problem-based learning (PBL) on factual knowledge has been poorly investigated. We took advantage of a previous randomised comparison between PBL and traditional teaching in a 3rd-year course to follow up the factual knowledge of the students during their 4th and 5th years of medical school training.

Methods

Third-year medical students were initially randomised to a problem-based (PBL, n = 55) or a lecture-based (LBL, n = 57) course in basic pharmacology. Summative exam results were monitored 18 months later, after completion of a lecture-based course in clinical pharmacology. Additional results of an unscheduled, formative exam were obtained 27 months after completion of the first course.

Results

Of the initial sample of 112 students, 90 participated in the second course and exam (n = 45 PBL, n = 45 LBL), and 32 (n = 17 PBL, n = 15 LBL) took the third, formative exam. Mean scores (± SD) were 22.4 ± 6.0, 27.4 ± 4.9 and 20.1 ± 5.0 (PBL), and 22.2 ± 6.0, 28.4 ± 5.1 and 19.0 ± 4.7 (LBL) in the first, second and third tests, respectively (maximum score: 40). No significant differences were found between the two groups.

Conclusion

A small-scale exposure to PBL, applied under randomised conditions but in the context of a traditional curriculum, does not substantially change long-term factual knowledge within the same discipline.

Background

Long-term retention of knowledge, a proposed strength of problem-based learning, has received little attention in educational research [1]. Randomised controlled designs have rarely included long-term follow-up. A randomised trial with long-term follow-up found no difference in indirect measures related to knowledge acquisition or maintenance [2]. The only evidence for better continuation of knowledge acquisition comes from a non-randomised study [3].

Therefore, we decided to follow up the factual knowledge of undergraduate medical students who had been randomly exposed to a small problem-based learning experience within the context of a traditional, largely lecture-based curriculum. We assessed knowledge within the same discipline, pharmacology, using a written test. We applied a similar test directly after the randomised intervention, 18 months later as a mandatory summative exam, and 27 months later as an unscheduled formative exam.

Methods

In 1997, problem-based learning was introduced into a 3rd-year course of basic medical pharmacology using a randomised, controlled design described earlier [4]. Of the 112 students who took part in that course and its summative exam (55 problem-based, PBL; 57 lecture-based, LBL), 90 (45 from the previous PBL group, 45 from the previous LBL group) took the 4th-year course and final exam in clinical pharmacology 18 months later. The clinical pharmacology course was held in the same lecture-based format as the traditional LBL course in basic pharmacology. It was supplemented by an optional PBL tutorial (3 cases, 4 sessions), which was taken by 15 students (6 from the former PBL group, 9 from the former LBL group). In October 1999, we asked all participants of a mandatory 5th-year course (not in pharmacology) to sit an additional, formative exam of the same type. Of the students attending that course, 32 (17 PBL, 15 LBL) were from the original groups and agreed to participate. One student left the lecture hall without identifying himself. To motivate participation, we explained the aim of our study to the students and offered a free dinner to the three candidates with the highest scores and to another three selected by chance.

At each of the three time points, the exam consisted of 30 questions to be answered within one hour: 20 multiple-choice questions (MCQ, 1 point each) and 10 short-essay questions (SEQ, up to 2 points each, graded at half-point intervals), giving a maximum of 40 points. The third exam contained equal numbers of questions on the contents of the basic and the clinical pharmacology curriculum. SEQ were scored by raters blinded to the students' previous training. Data are given as mean ± SD; PBL and LBL groups were compared using the two-sided Mann-Whitney U-test (α = 0.05).
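The group comparison described above can be illustrated with a minimal Mann-Whitney U sketch. It assumes the large-sample normal approximation with a correction for ties (half-point SEQ grading makes tied totals likely) and no continuity correction, so p-values may differ slightly from exact methods or from `scipy.stats.mannwhitneyu` with its default settings. The function names are hypothetical; the statistical software used in the study is not stated.

```python
import math
from typing import Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Rank values 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> tuple[float, float]:
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected
    variance, no continuity correction). Returns (U, p)."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    r1 = sum(ranks[:n1])                 # rank sum of the first group
    u1 = r1 - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    n = n1 + n2
    # Tie correction: sum of (t^3 - t) over each group of tied values.
    counts: dict[float, int] = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t**3 - t for t in counts.values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:
        return u, 1.0
    z = (u - n1 * n2 / 2) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided: 2 * (1 - Phi(|z|))
    return u, p
```

For example, `mann_whitney_u(pbl_scores, lbl_scores)` with two lists of exam totals returns U and a two-sided p-value to compare against α = 0.05; `pbl_scores` and `lbl_scores` are placeholders, not study data.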

Results

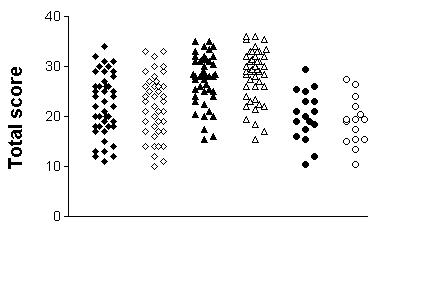

Fig. 1 depicts the scores of the two groups in the three exams. From the first to the second exam, scores increased from 22.4 ± 6.0 to 27.4 ± 4.9 (PBL) and from 22.2 ± 6.0 to 28.4 ± 5.1 (LBL). There were no significant differences between PBL and LBL students in either exam, except for slightly but significantly higher MCQ subscores of LBL students in the second exam. Further descriptive subgroup analyses of the second exam, focussing on question type (SEQ), on questions related to content already covered in the basic course, or on the students who took the additional PBL sessions in clinical pharmacology, revealed no differences between the groups.

Figure 1.

Exam results according to initial randomisation. Results of the three exams, obtained directly after the intervention (diamonds), 18 months later after the conventional clinical pharmacology course (triangles), and 27 months later without prior announcement (circles). Each symbol represents the score of an individual student. Closed symbols: original PBL group; open symbols: original LBL group.

The unannounced third exam was taken by 32 students (17 previous PBL, 15 previous LBL), who scored 20.1 ± 5.0 and 19.0 ± 4.7, respectively. In both groups, scores had dropped markedly compared with the previous summative tests. Data appear normally distributed, regardless of group or time of examination. Again, descriptive subgroup analyses revealed no differences depending on the type or curricular representation of the questions (Table 1).

Table 1.

Results of the third exam stratified according to question type and course

| Group | Multiple choice, basic pharmacology | Multiple choice, clinical pharmacology | Short essay, basic pharmacology | Short essay, clinical pharmacology |
|---|---|---|---|---|
| PBL group (n = 17) | 6.06 ± 1.95 | 6.18 ± 1.38 | 3.15 ± 1.96 | 4.74 ± 2.28 |
| LBL group (n = 15) | 6.07 ± 1.91 | 5.73 ± 1.33 | 3.13 ± 2.36 | 4.10 ± 2.08 |

Correct answers (mean ± SD) obtained in the third exam, according to question type and curricular representation, i.e., previous course which had covered the topic of the respective question. No significant differences were found between the two groups of students.
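To put the non-significant third-exam difference in perspective, a standardised effect size (Cohen's d) can be computed from the summary statistics reported in the Results. This is an illustrative calculation, not an analysis reported by the study; it assumes the pooled-SD definition of d, and the helper name is hypothetical.

```python
import math


def cohens_d(m1: float, s1: float, n1: int, m2: float, s2: float, n2: int) -> float:
    """Standardised mean difference (group 1 minus group 2) using the pooled SD."""
    pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2)
    return (m1 - m2) / math.sqrt(pooled_var)


# Third-exam totals as reported above: PBL 20.1 ± 5.0 (n = 17), LBL 19.0 ± 4.7 (n = 15).
d = cohens_d(20.1, 5.0, 17, 19.0, 4.7, 15)
print(round(d, 2))  # 0.23
```

A d of roughly 0.2 is conventionally regarded as a small effect.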

Discussion

Our data do not provide evidence for a beneficial effect of problem-based learning on factual knowledge. When students went on to advanced studies within the same discipline, students from the control group even tended to perform better. Possibly, these students were more comfortable with the traditional teaching method, which they had already experienced during their first course. However, this trend disappeared when knowledge was re-tested later in an unannounced test. Thus, PBL did not negatively affect the long-term learning outcome, although it may have suffered from drawbacks described for hybrid approaches [5], such as a rather superficial approach to learning.

Our intervention was small, consisting of just ten 2-hour PBL sessions in basic pharmacology (plus additional time for self-directed learning and 10 hours of concept lectures), although all students had further PBL experience one year later in a primary-care PBL course. They also had the option of an additional PBL tutorial in clinical pharmacology, but few students took it. Given this small scale of PBL exposure, students' attitudes towards learning may not have been sufficiently affected. Novice tutors may be unable to fully convey the "philosophy" of self-directed, reflective learning [6]. However, besides the usual two-day training workshop and their weekly supervision sessions, most of our tutors had practised PBL tutoring for at least one semester. This amount of experience has been found sufficient to improve the group process [7]. In addition, the positive evaluation of the PBL intervention [1] argues against the assumption that learning styles had remained unaltered, at least in the short term. Other short-term interventions also failed to produce lasting changes in learning habits [8]. Even the learning styles of graduates of an entire PBL curriculum were indistinguishable from those of their traditional classmates after long-term follow-up [2].

It could be argued that any scheduled summative exam measures short-term rehearsal rather than long-term knowledge. In addition, the second-exam results may have been driven by the very recent clinical pharmacology classes. To address this, students were later given an exam of similar format without prior announcement. Remarkably, 32 of the 33 potentially eligible students agreed to participate. Attrition from the original sample was nevertheless high, apparently because the majority of students had taken the mandatory 5th-year course in an earlier semester or had been absent for other reasons. This might cause a selection bias, but the limited data available (similar age, clinical year, and results in the previous tests) argue against it. The trend towards better performance of students originally randomised to traditional teaching was reversed, but the difference in favour of the PBL group was not significant. Scores were rather low in this test. Notably, the correlation between the results of the unscheduled test and those of the previous summative tests was low (Pearson r ≈ 0.2), whereas the two sequential summative tests correlated more strongly (r ≈ 0.5). This might indicate that an unscheduled test measures a different kind of knowledge.
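The correlations quoted above are Pearson coefficients between paired scores of the same students in two exams. A minimal sketch, assuming two equal-length lists in which position i holds one student's scores; the function name is hypothetical. Python 3.10 and later also provide `statistics.correlation`, which computes the same coefficient.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between paired scores (x[i] and y[i] from the same student)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    # Covariance and variances share the 1/(n-1) factor, so it cancels out.
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

Applied to the paired exam totals of students who sat both tests, this yields the kind of coefficient reported in the text (values near 0.2 indicating a weak, near 0.5 a moderate, linear association).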

One should bear in mind that the test results do not fully discriminate between retention of old knowledge, acquired by either traditional methods or PBL, and knowledge acquired after the randomised intervention. The study by Rodriguez et al. (2002) [9] suggests that such new pharmacological knowledge can be gained even without an educational intervention within the same discipline. We could not reproduce this phenomenon in our setting. Possible reasons include our longer follow-up interval, our use of novel questions rather than those from the original question pool, or a teaching environment that reinforces the acquisition of pharmacological knowledge outside the pharmacology courses less strongly. Long-term effects may be easier to test in more specialised disciplines, which should be less "contaminated" by other medical school classes [9]. Our data do, however, allow the conclusion that a single-course PBL intervention does not affect learning outcome in either direction (viewed as a combined effect of retention and new acquisition) in any substantial and sustained manner. At the very least, placing students in a PBL environment within a traditional curriculum did not compromise their factual knowledge in the long term.

A larger-scale intervention or a larger sample might have revealed a significant beneficial long-term effect of PBL. Curriculum-wide comparisons have also been performed, but their interpretation [10] has been strongly disputed [11]. The size a PBL intervention must have to be effective remains unresolved. In any case, future studies on long-term effects of problem-based learning should be designed to detect small effects on factual knowledge. Long-term follow-up of other measurable outcomes [12] may hold more promise.
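The point about small effect sizes can be made concrete with the standard sample-size approximation for comparing two group means, n ≈ 2(z₁₋α/2 + z₁₋β)²/d² students per group. A minimal sketch, assuming a normal approximation (which slightly underestimates the requirement of a t-test), α = 0.05 and 80 % power; the helper name is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate students per group for a two-sided, two-sample comparison
    of means with standardised effect size d (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # 0.84 for 80 % power
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / d**2)


for d in (0.2, 0.5):
    print(d, n_per_group(d))
# 0.2 393
# 0.5 63
```

Under these assumptions, detecting a small effect (d = 0.2) would require roughly 390 students per group, far more than the 45 to 57 per group available in this study.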

Conclusion

A small-scale randomised exposure to PBL – embedded in a largely traditional curriculum – does not affect long-term factual knowledge within the same discipline.

Competing interests

None declared.

Authors' contributions

S.H. was principal investigator, prepared the manuscript draft, and revised the manuscript according to suggestions of the coauthors. B.M., R.M.L. and W.A. were research assistants at the time the data were obtained; they collected and analysed the data and served as tutors for some of the PBL groups. W.A. made comments and revisions on the manuscript. U.B. participated in the design and scheduling of the third exam and gave comments on the manuscript draft. All authors have read and approved the final version of the manuscript.

Acknowledgements

We gratefully acknowledge the continuing support of student tutors and staff tutors of the Department of Pharmacology, University of Cologne. This study was supported by a grant from the Minister of Science and Education of Nordrhein-Westfalen.

Contributor Information

Stefan Herzig, Email: stefan.herzig@uni-koeln.de.

Ralph-Mario Linke, Email: Praxis.Linke@Arztinfo.com.

Bent Marxen, Email: Bent.Marxen@t-online.de.

Ulf Börner, Email: ulf.boerner@uni-koeln.de.

Wolfram Antepohl, Email: Wolfram.Antepohl@rehab.inr.liu.se.

References

- Moust JHC, Schmidt HG. Factors affecting small-group tutorial learning: a review of research. In: Evensen DH, Hmelo CE, editors. Problem-based learning: a research perspective on learning interactions. Mahwah, NJ: Lawrence Erlbaum; 2000. pp. 19–52.

- Peters AS, Greenberger-Rosovsky R, Crowder C, Block SD, Moore GT. Long-term outcomes of the new pathway program at Harvard medical school: a randomized controlled trial. Acad Med. 2000;75:470–479. doi: 10.1097/00001888-200005000-00018.

- Shin JH, Haynes B, Johnson ME. Effect of problem-based, self-directed undergraduate education on life-long learning. Can Med Assoc J. 1993;148:969–976.

- Antepohl W, Herzig S. Problem-based learning versus lecture-based learning in a course of basic pharmacology – a controlled, randomised study. Med Education. 1999;33:106–113. doi: 10.1046/j.1365-2923.1999.00289.x.

- Houlden RL, Collier CP, Frid PJ, John SL, Pross H. Problems identified by tutors in a hybrid problem-based learning curriculum. Acad Med. 2001;76:81. doi: 10.1097/00001888-200101000-00021.

- Maudsley G. Making sense of trying not to teach: an interview study of tutors' ideas of problem-based learning. Acad Med. 2002;77:162–172. doi: 10.1097/00001888-200202000-00017.

- Matthes J, Marxen B, Linke RM, Antepohl W, Coburger S, Christ H, Lehmacher W, Herzig S. The influence of tutor qualification on the process and outcome of learning in a problem-based course of basic medical pharmacology. Naunyn Schmiedebergs Arch Pharmacol. 2002;366:58–63. doi: 10.1007/s00210-002-0551-0.

- Ozuah PO, Curtis J, Stein RE. Impact of problem-based learning on residents' self-directed learning. Arch Pediatr Adolesc Med. 2001;155:669–672. doi: 10.1001/archpedi.155.6.669.

- Rodriguez R, Campos-Sepulveda E, Vidrio H, Contreras E, Valenzuela F. Evaluating knowledge retention of third-year medical students taught with an innovative pharmacology program. Acad Med. 2002;77:574–577. doi: 10.1097/00001888-200206000-00018.

- Colliver JA. Effectiveness of problem-based learning curricula: research and theory. Acad Med. 2000;75:259–266. doi: 10.1097/00001888-200003000-00017.

- Norman GR, Schmidt HG. Effectiveness of problem-based learning curricula: theory, practice and paper darts. Med Education. 2000;34:721–728. doi: 10.1046/j.1365-2923.2000.00749.x.

- Thomas RE. Problem-based learning: measurable outcomes. Med Education. 1997;31:320–329. doi: 10.1046/j.1365-2923.1997.00671.x.