Abstract

How do we empathize with others? A mechanism according to which action representation modulates emotional activity may provide an essential functional architecture for empathy. The superior temporal and inferior frontal cortices are critical areas for action representation and are connected to the limbic system via the insula. Thus, the insula may be a critical relay from action representation to emotion. We used functional MRI while subjects were either imitating or simply observing emotional facial expressions. Imitation and observation of emotions activated a largely similar network of brain areas. Within this network, there was greater activity during imitation, compared with observation of emotions, in premotor areas including the inferior frontal cortex, as well as in the superior temporal cortex, insula, and amygdala. We understand what others feel by a mechanism of action representation that allows empathy and modulates our emotional content. The insula plays a fundamental role in this mechanism.

Empathy plays a fundamental social role, allowing the sharing of experiences, needs, and goals across individuals. Its functional aspects and corresponding neural mechanisms, however, are poorly understood. When Theodore Lipps (as cited in ref. 1) introduced the concept of empathy (Einfühlung), he theorized the critical role of inner imitation of the actions of others in generating empathy. In keeping with this concept, empathic individuals exhibit nonconscious mimicry of the postures, mannerisms, and facial expressions of others (the chameleon effect) to a greater extent than nonempathic individuals (2). Thus, empathy may occur via a mechanism of action representation that modulates and shapes emotional contents.

In the primate brain, relatively well-defined and separate neural systems are associated with emotions (3) and action representation (4–7). The limbic system is critical for emotional processing and behavior, and the circuit of fronto-parietal networks interacting with the superior temporal cortex is critical for action representation. This latter circuit is composed of inferior frontal and posterior parietal neurons that discharge during the execution and also the observation of an action (mirror neurons; ref. 7), and of superior temporal neurons that discharge only during the observation of an action (6, 8, 9). Anatomical and neurophysiological data in the nonhuman primate brain (see review in ref. 7) and human imaging data (10–13) suggest that this circuit is critical for imitation and that within this circuit, information processing would flow as follows. (i) The superior temporal cortex codes an early visual description of the action (6, 8, 9) and sends this information to posterior parietal mirror neurons (this privileged flow of information from superior temporal to posterior parietal is supported by the robust anatomical connections between superior temporal and posterior parietal cortex) (14). (ii) The posterior parietal cortex codes the precise kinesthetic aspect of the movement (15–18) and sends this information to inferior frontal mirror neurons (anatomical connections between these two regions are well documented in the monkey) (19). (iii) The inferior frontal cortex codes the goal of the action [both neurophysiological (5, 20, 21) and imaging data (22) support this role for inferior frontal mirror neurons]. (iv) Efferent copies of motor plans are sent from parietal and frontal mirror areas back to the superior temporal cortex (12), such that a matching mechanism between the visual description of the observed action and the predicted sensory consequences of the planned imitative action can occur. (v) Once the visual description of the observed action and the predicted sensory consequences of the planned imitative action are matched, imitation can be initiated.

How is this moderately recursive circuit connected to the limbic system? Anatomical data suggest that a sector of the insular lobe, the dysgranular field, is connected with the limbic system as well as with posterior parietal, inferior frontal, and superior temporal cortex (23). This connectivity pattern makes the insula a plausible candidate for relaying action representation information to limbic areas processing emotional content.

To test this model, we used functional MRI (fMRI) while subjects were either observing or imitating emotional facial expressions. The predictions were straightforward: If action representation mediation is critical to empathy and the understanding of the emotions of others, then even the mere observation of emotional facial expressions should activate the same brain regions of motor significance that are activated during the imitation of emotional facial expressions. Moreover, a modulation of the action representation circuit onto limbic areas via the insula predicts greater activity during imitation, compared with observation of emotion, throughout the whole network outlined above. In fact, mirror areas would be more active during imitation than observation because of the simultaneous encoding of sensory input and planning of motor output (13). Within mirror areas, the inferior frontal cortex seems particularly important here, given that understanding goals is an important component of empathy. The superior temporal cortex would be more active during imitation than observation, as it receives efferent copies of motor commands from mirror areas (12). The insula would be more active during imitation because its relay role would become more important during imitation, compared with mere observation. Finally, limbic areas would also increase their activity because of the modulatory role of motor areas with increased activity. Thus, observation and imitation of emotions should yield substantially similar patterns of activated brain areas, with greater activity during imitation in premotor areas, in inferior frontal cortex, in superior temporal cortex, insula, and limbic areas.

Materials and Methods

Subjects.

Eleven healthy, right-handed subjects participated in the experiment (seven males and four females). The mean age of the group was 29 years (range, 21–39 years). All subjects were evaluated with a brief neurological examination and questionnaire to screen for any medical/behavioral disorders. Handedness was evaluated by using a modified version of the Edinburgh Handedness Inventory (24). The study was approved by the University of California at Los Angeles Institutional Review Board and was performed in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki. Written informed consent was obtained from all subjects before inclusion in the study.

Stimuli.

Stimuli were presented to subjects through magnet-compatible goggles. Three stimulus picture sets were assembled from a widely known set of facial expressions (25), each containing randomly ordered depictions of six emotions (happy, sad, angry, surprise, disgust, and afraid). Of the three stimulus sets, one contained whole faces, and the other two sets contained only eyes or only mouths, which were cropped from the same set of whole faces. All pictures, whether whole face, only mouth, or only eyes, depicted different individuals, with males and females in equal proportion. The rationale for showing only parts of faces was suggested by the cortical representation of body parts in inferior frontal cortex, where the mouth is represented but the eyes are not (26). In principle, if eye emotional expressions can be dissociated from the emotional expression of the rest of the face, one might see the predicted pattern of activity in inferior frontal cortex during imitation of the whole face or of the mouth, but not during imitation of eye emotional expressions. However, our imaging data (see below) do not support such dissociation.

Behavioral Tasks.

Subjects were presented with three runs of stimuli. Each run consisted of six blocks of 24 s each. Each block contained six pictures (of the six emotion types), and each picture was presented for 4 s. Blocks were homogeneous for stimulus type (i.e., all faces, or all eyes, or all mouths). Subjects were asked to imitate and internally generate the target emotion on the computer screen, or to simply observe. Imitate/observe conditions were counterbalanced across runs, and task blocks were separated by seven rest periods of 24 s (blank screen). The first rest period actually lasted 36 s, the additional 12 s being related to the first three brain volumes, which were discarded from the analysis due to signal instabilities.
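The block timing described above can be laid out as a simple timeline. The following is a minimal sketch, not the authors' presentation script: it assumes that each run starts and ends with a rest period (so that seven rests bracket six task blocks) and it uses the 4-s repetition time reported for the functional scans. All names are illustrative.

```python
# Timing values come from the task and imaging descriptions in the text;
# variable and function names are hypothetical.
TR_S = 4               # repetition time of the functional scans (s)
BLOCK_S = 24           # duration of a task block and of a regular rest period (s)
N_TASK_BLOCKS = 6
FIRST_REST_S = 36      # first rest: 24 s plus 12 s of discarded volumes
DISCARDED_VOLUMES = 3


def build_run_timeline():
    """Return a list of (label, onset_s, duration_s) tuples for one run.

    Assumption: rest and task alternate, starting and ending with rest.
    """
    timeline = []
    onset = 0
    for i in range(N_TASK_BLOCKS):
        rest = FIRST_REST_S if i == 0 else BLOCK_S
        timeline.append(("rest", onset, rest))
        onset += rest
        timeline.append(("task", onset, BLOCK_S))
        onset += BLOCK_S
    timeline.append(("rest", onset, BLOCK_S))  # closing rest period
    return timeline


timeline = build_run_timeline()
run_duration_s = sum(duration for _, _, duration in timeline)
total_volumes = run_duration_s // TR_S
analyzed_volumes = total_volumes - DISCARDED_VOLUMES
volumes_per_block = BLOCK_S // TR_S

print(run_duration_s)     # 324
print(total_volumes)      # 81
print(analyzed_volumes)   # 78
print(volumes_per_block)  # 6
```

Under these assumptions, each 4-s picture coincides with one brain volume, and the 12 s added to the first rest period corresponds to the three discarded volumes.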

Imaging.

Structural and fMRI measurements were performed on a General Electric 3.0 Tesla MRI scanner with advanced nuclear magnetic resonance echo-planar imaging (EPI) upgrade located in the Ahmanson-Lovelace Brain Mapping Center. Structural and functional scanning sequences performed in each subject included: one structural scan (coplanar high-resolution EPI volumes: repetition time (TR) = 4,000 ms; echo time (TE) = 54 ms; flip angle = 90°; 128 × 128 matrix; 26 axial slices; 3.125-mm in-plane resolution; 4-mm thickness; skip 1 mm) for anatomical data, and three functional scans (echo planar T2*-weighted gradient echo sequence; TR = 4,000 ms; TE = 25 ms; flip angle = 90°; 64 × 64 matrix; 26 axial slices; 3.125-mm in-plane resolution; 4-mm thickness; skip 1 mm). Each of the functional acquisitions covered the whole brain.
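As a quick consistency check on the functional acquisition parameters, the field of view and slab coverage can be derived from the reported matrix, resolution, and slice geometry. This is a sketch with illustrative names; it assumes the 1-mm skip applies only between adjacent slices.

```python
# Functional scan parameters as reported in the text.
MATRIX = 64            # in-plane matrix size (64 x 64)
IN_PLANE_MM = 3.125    # in-plane resolution (mm)
N_SLICES = 26
THICKNESS_MM = 4.0
GAP_MM = 1.0           # "skip 1 mm", assumed to lie between adjacent slices

fov_mm = MATRIX * IN_PLANE_MM
coverage_mm = N_SLICES * THICKNESS_MM + (N_SLICES - 1) * GAP_MM
acquired_voxel_mm3 = IN_PLANE_MM * IN_PLANE_MM * THICKNESS_MM

print(fov_mm)              # 200.0
print(coverage_mm)         # 129.0
print(acquired_voxel_mm3)  # 39.0625
```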

Individual subjects' functional images were aligned and registered to their respective coplanar structural images by using a rigid body linear registration algorithm (27). Intersubject image registration was performed with fifth-order polynomial nonlinear warping (28) of each subject's images into a Talairach-compatible brain magnetic resonance atlas (29). Data were smoothed by using an in-plane Gaussian filter for a final image resolution of 8.7 × 8.7 × 8.6 mm.

Image Statistics.

Image statistics were computed with analyses of variance (ANOVAs), which allowed us to factor out run-to-run variability within subjects as well as intersubject overall signal variability (12, 13, 30–33). Given the time-course of the blood oxygen level-dependent (BOLD) fMRI response, which takes several seconds to return to baseline (34), contiguous brain volumes cannot be considered independent observations (35, 36). Thus, the sum of signal intensity at each voxel throughout each task was used as the dependent variable (12, 13, 22). Significance level was set at P = 0.001 uncorrected at each voxel. To avoid false positives, only clusters larger than 20 significantly activated voxels were considered (37). Factors included in the ANOVAs were subjects (n = 11), functional scans (n = 3), state (n = 2, task/rest), task (n = 2, imitation/observation), and stimuli (n = 3, whole face, eyes, and mouth).
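Two steps of this procedure lend themselves to a short illustration: summing the signal over each block to obtain one observation per block, and discarding small clusters of suprathreshold voxels. The sketch below is not the software used in the study. The function names are hypothetical, and the use of face-adjacent (6-connected) neighbours is an assumption, because the text does not state how cluster connectivity was defined.

```python
from collections import deque


def block_sums(voxel_series, block_slices):
    """Sum one voxel's signal over each (start, stop) volume range."""
    return [sum(voxel_series[start:stop]) for start, stop in block_slices]


def cluster_extent_filter(significant, min_size=21):
    """Keep voxels in connected clusters of at least min_size voxels.

    significant: iterable of (x, y, z) integer indices of voxels that passed
    the voxelwise threshold. min_size=21 encodes "larger than 20 voxels".
    Connectivity is face adjacency (an assumption of this sketch).
    """
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0),
               (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    remaining = set(significant)
    kept = set()
    while remaining:
        seed = remaining.pop()
        cluster = {seed}
        queue = deque([seed])
        while queue:  # breadth-first flood fill from the seed voxel
            x, y, z = queue.popleft()
            for dx, dy, dz in offsets:
                neighbour = (x + dx, y + dy, z + dz)
                if neighbour in remaining:
                    remaining.remove(neighbour)
                    cluster.add(neighbour)
                    queue.append(neighbour)
        if len(cluster) >= min_size:
            kept |= cluster
    return kept


# Toy data: a 27-voxel cube survives, an isolated pair of voxels does not.
cube = {(x, y, z) for x in range(3) for y in range(3) for z in range(3)}
pair = {(10, 10, 10), (10, 10, 11)}
kept = cluster_extent_filter(cube | pair)
print(len(kept))  # 27

# Toy series: two blocks of six volumes each.
sums = block_sums([1] * 6 + [2] * 6, [(0, 6), (6, 12)])
print(sums)  # [6, 12]
```

Using one summed value per block, rather than one value per volume, is what sidesteps the temporal dependence between contiguous volumes noted above.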

Results

Preliminary ANOVAs revealed no differences in activation among the three imitation tasks, and no differences in activation among the three observation tasks. Thus, main effects of imitation, observation, and imitation minus observation are reported here. As Table 1 shows, there was a substantially similar network of activated areas for both imitation and observation of emotion. Among the areas commonly activated by imitation and observation of facial emotional expressions, the premotor face area, the dorsal sector of pars opercularis of the inferior frontal gyrus, the superior temporal sulcus, the insula, and the amygdala had greater activity during imitation than observation of emotion. To give a sense of the good overlap between the network described in this study and previously reported peaks of activation, Table 2 compares peaks of activation in the right hemisphere observed in this study with previously published peaks in meta-analyses or individual studies in regions relevant to the hypothesis tested in this study.

Table 1.

Peaks of activation in Talairach coordinates

| Hemisphere | Region | BA | Talairach x | Talairach y | Talairach z | t (Imitation) | t (Observation) | t (Imi-obs) |
|---|---|---|---|---|---|---|---|---|
| L | M1 | 4 | −52 | −4 | 32 | 9.63 | NS | 7.44 |
| R | M1 | 4 | 44 | −10 | 36 | 10.93 | NS | 7.3 |
| L | S1 | 2 | −40 | −38 | 40 | 4.42 | NS | NS |
| R | S1 | 3 | 56 | −22 | 36 | 4.14 | NS | 4.74 |
| L | PPC | 7 | −24 | −60 | 40 | 4.42 | 5.77 | NS |
| L | PPC | 40 | −40 | −46 | 50 | 4.51 | 3.72 | NS |
| R | PPC | 39 | 30 | −54 | 38 | 4.98 | 5.3 | NS |
| L | PMC | 6 | −30 | −2 | 50 | 6.14 | NS | 4.93 |
| L | PMC | 6 | −40 | 2 | 32 | 10.98 | 3.91 | 4.84 |
| L | PMC | 6 | −52 | 10 | 26 | 10.28 | 3.81 | 5.63 |
| R | PMC | 6 | 48 | 8 | 28 | 11.4 | 6.14 | 6.7 |
| R | PMC | 6 | 40 | 6 | 30 | 10.23 | 5.86 | 4.84 |
| L | Pre-SMA | 6 | 8 | 6 | 58 | 8.84 | NS | 8.05 |
| R | Pre-SMA | 6 | 0 | 4 | 52 | 9.26 | NS | 8.42 |
| L | RCZp | 32 | −4 | 14 | 44 | 7.72 | NS | 6.74 |
| L | RCZa | 32 | −8 | 30 | 26 | 4.98 | NS | 3.54 |
| R | ACC | 32 | 8 | 16 | 52 | NS | 4.28 | NS |
| R | MPFC | 9 | 6 | 54 | 34 | NS | 4.7 | NS |
| L | LPFC | 10 | −36 | 50 | 12 | 11.86 | NS | 11.26 |
| R | LPFC | 10 | 44 | 38 | 4 | 7.81 | 4.65 | 6.19 |
| R | LPFC | 10 | 34 | 42 | 6 | 8.61 | NS | 6.37 |
| L | IFG | 44 | −40 | 14 | 24 | 7.58 | 6.56 | 3.53 |
| R | IFG | 44 | 50 | 14 | 16 | 9.16 | 4.74 | 6.51 |
| L | IFG | 44 | −50 | 12 | 2 | 7.02 | NS | 9.26 |
| R | IFG | 44 | 50 | 12 | 2 | 8.98 | NS | 9.91 |
| L | IFG | 45 | −46 | 36 | 12 | NS | 5.67 | NS |
| R | IFG | 45 | 46 | 26 | 8 | 8.14 | 3.4 | 5.4 |
| L | Insula | 45 | −36 | 18 | 4 | 2.6 | NS | 4.65 |
| R | Insula | 45 | 36 | 30 | 6 | 7.91 | 3.02 | 6.09 |
| L | STS | 22 | −46 | −48 | 12 | 4.33 | 2.37 | 3.53 |
| R | STS | 22 | 46 | −44 | 12 | NS | 3.72 | NS |
| R | FFA | 19 | 39 | −64 | −14 | 11.86 | 11.86 | NS |
| L | Temp. pole | 38 | −24 | 20 | −32 | 4.74 | NS | 5.49 |
| R | Temp. pole | 38 | 36 | 26 | −28 | 8.47 | NS | 8.09 |
| L | Temp. pole | 38 | −42 | 20 | −20 | NS | 4.28 | NS |
| R | Temp. pole | 38 | 26 | 8 | −26 | NS | 3.95 | NS |
| R | Striatum | | 24 | 8 | 6 | 4.88 | NS | 3.67 |
| L | Amygdala | | −22 | 0 | −16 | NS | 3.91 | NS |
| R | Amygdala | | 22 | 0 | −10 | 4.7 | 3.16 | 3.77 |

In bold are peaks that follow the pattern predicted by the hypothesis of action representation route to empathy (i.e., activation during imitation and observation, and greater activity during imitation compared to observation). The majority of these peaks were predicted a priori, the only exception being the right dorsolateral prefrontal cortex, BA10. In italics are statistical levels approaching significance in predicted areas. NS, not significant.
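The pattern described in this note (a reported effect for imitation, for observation, and for imitation minus observation) can be screened mechanically. The sketch below transcribes only the Table 1 rows for the regions named in the hypothesis and selects those with a t value in all three columns. It is illustrative only: it applies no significance threshold of its own, so it cannot distinguish significant values from those described as approaching significance, and the names are hypothetical.

```python
# (hemisphere, region, (x, y, z), t_imitation, t_observation, t_imi_minus_obs)
# Values transcribed from Table 1; None stands for "NS".
rows = [
    ("L", "PMC", (-30, -2, 50), 6.14, None, 4.93),
    ("L", "PMC", (-40, 2, 32), 10.98, 3.91, 4.84),
    ("L", "PMC", (-52, 10, 26), 10.28, 3.81, 5.63),
    ("R", "PMC", (48, 8, 28), 11.4, 6.14, 6.7),
    ("R", "PMC", (40, 6, 30), 10.23, 5.86, 4.84),
    ("L", "IFG", (-40, 14, 24), 7.58, 6.56, 3.53),
    ("R", "IFG", (50, 14, 16), 9.16, 4.74, 6.51),
    ("L", "IFG", (-50, 12, 2), 7.02, None, 9.26),
    ("R", "IFG", (50, 12, 2), 8.98, None, 9.91),
    ("L", "IFG", (-46, 36, 12), None, 5.67, None),
    ("R", "IFG", (46, 26, 8), 8.14, 3.4, 5.4),
    ("L", "Insula", (-36, 18, 4), 2.6, None, 4.65),
    ("R", "Insula", (36, 30, 6), 7.91, 3.02, 6.09),
    ("L", "STS", (-46, -48, 12), 4.33, 2.37, 3.53),
    ("R", "STS", (46, -44, 12), None, 3.72, None),
    ("L", "Amygdala", (-22, 0, -16), None, 3.91, None),
    ("R", "Amygdala", (22, 0, -10), 4.7, 3.16, 3.77),
]


def follows_predicted_pattern(row):
    """True when all three contrasts report a t value for this peak."""
    _, _, _, t_imi, t_obs, t_diff = row
    return None not in (t_imi, t_obs, t_diff)


predicted = [row for row in rows if follows_predicted_pattern(row)]
for hemisphere, region, xyz, *_ in predicted:
    print(hemisphere, region, xyz)
print(len(predicted))  # 10
```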

Table 2.

Comparison of observed peaks of activation in predicted regions with previously reported peaks of activation in imaging meta-analyses and individual studies of action observation, imitation, and emotion

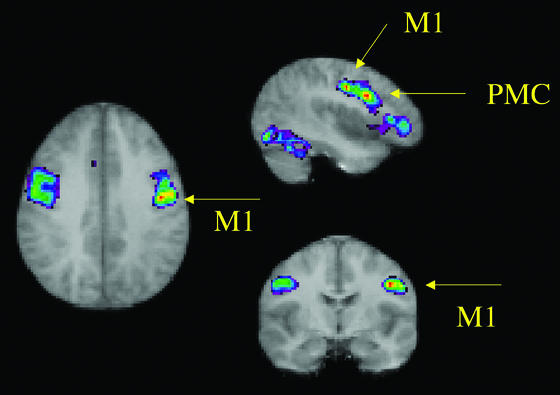

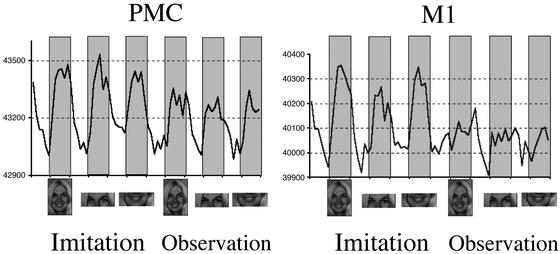

Figs. 1 and 2 show, respectively, the location and time-series of the right primary motor face area and of the premotor face area. The peaks of these activations correspond well with published data, as discussed below. Task-related activity is seen not only during imitation, but also during observation. This observation-related activity is very clear in premotor cortex but also visible in primary motor cortex (although not reaching significance in primary motor cortex).

Figure 1.

Peaks of activations in the right central (labeled M1) and precentral (labeled PMC) sulcus. The peak labeled M1 (x = 44, y = −10, z = 36) corresponds entirely (considering spatial resolution and variability factors) with meta-analytic PET data (x = 48 ± 5.2, y = −9 ± 5.6, z = 35 ± 5.5) for the mouth region of human primary motor cortex. The peak labeled PMC (x = 48, y = 8, z = 28) corresponds well with previously reported premotor mouth (x = 48, y = 0, z = 32) peaks.
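The correspondence between these peaks and the published coordinates can be quantified with a simple distance calculation. This is a minimal sketch using only the coordinates quoted in the caption above; the helper name is illustrative, and no claim is made about how the original comparison was performed.

```python
import math


def euclidean_mm(a, b):
    """Euclidean distance between two Talairach coordinates (mm)."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


m1_peak = (44, -10, 36)
m1_meta_mean = (48, -9, 35)    # meta-analytic PET mean
m1_meta_sd = (5.2, 5.6, 5.5)   # reported +/- values per axis

pmc_peak = (48, 8, 28)
pmc_reported = (48, 0, 32)     # previously reported premotor mouth peak

# Does the M1 peak fall within the reported +/- range on every axis?
m1_within_range = all(
    abs(p - m) <= s for p, m, s in zip(m1_peak, m1_meta_mean, m1_meta_sd)
)

m1_distance = euclidean_mm(m1_peak, m1_meta_mean)
pmc_distance = euclidean_mm(pmc_peak, pmc_reported)

print(m1_within_range)         # True
print(round(m1_distance, 2))   # 4.24
print(round(pmc_distance, 2))  # 8.94
```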

Figure 2.

Time-series of peaks of activity in right central (M1) and precentral (PMC) sulcus shown in Fig. 1. Task-related activity is observable not only during imitation but also during observation of emotional facial expressions, especially in PMC.

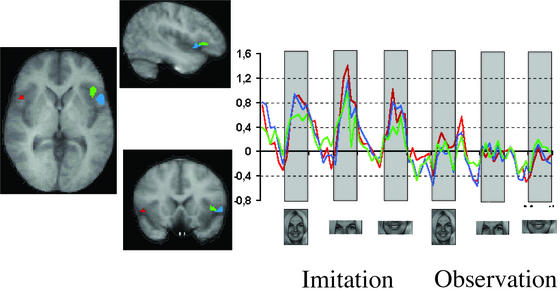

Fig. 3 shows the activations in inferior frontal cortex and anterior insula, with their corresponding time-series. The activity of these three regions is evidently correlated.

Figure 3.

Activations in the right insula (green) and right (blue) and left (red) inferior frontal cortex. Relative time-series are coded with the corresponding colors. The time-series have been normalized to the overall activity of each region. The activity profile of these three regions is extremely similar throughout the whole series of tasks.

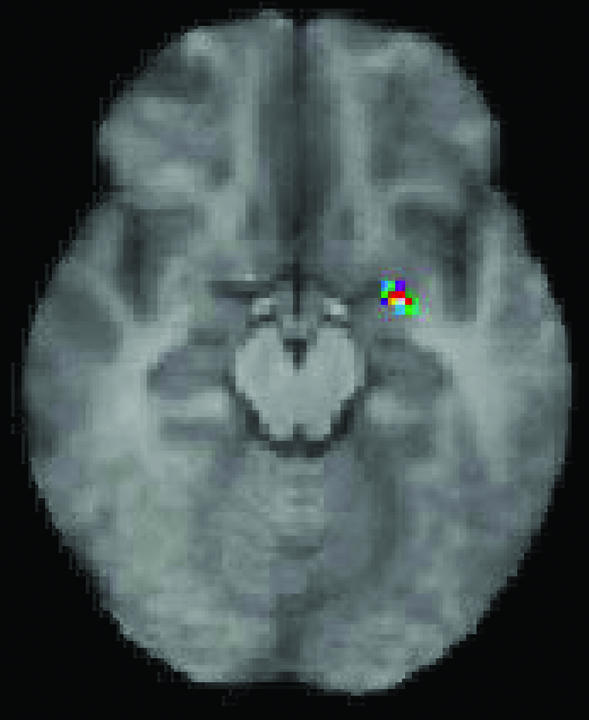

Fig. 4 shows the significantly increased activity in the right amygdala during imitation, compared with observation of emotional facial expressions.

Figure 4.

Significantly increased activity in the right amygdala during imitation of emotional facial expressions compared with simple observation.

Discussion

The results of this study support our hypothesis on the role of action representation for understanding the emotions of others. Largely overlapping networks were activated by both observation and imitation of facial emotional expressions. Moreover, the observation of emotional expressions robustly activated premotor areas. Further, fronto-temporal areas relevant to action representation, the amygdala, and the anterior insula had significant signal increase during imitation compared with observation of facial emotional expression.

The peak of activation reported here in primary motor cortex during imitation of facial emotional expressions corresponds well with the location of the primary motor mouth area as determined by a meta-analysis of published positron-emission tomography (PET) studies, by a meta-analysis of original data in 30 subjects studied with PET, and by a consensus probabilistic description of the location of the primary motor mouth area obtained by merging the results of the two previously described meta-analyses (38). This convergence confirms the robustness and reliability of the findings, despite the presence of facial motion during imitation. In fact, residual motion artifacts that were still present at the individual level after motion correction were eliminated by the group analysis. This result is likely due to the fact that each subject had different kinds of motion artifacts and, when all of the data were considered, only common patterns of activity emerged.

The data also clearly show peaks of activity in the pre-supplementary motor area (pre-SMA) face area and the face area of the posterior portion of the rostral cingulate zone (RCZp) that correspond well with the pre-SMA and RCZp face locations as determined by a separate meta-analysis of PET studies focusing on motor areas in the medial wall of the frontal lobe (39). Thus, our dataset represents an fMRI demonstration of the human primary motor and rostral cingulate face areas. With regard to premotor regions, the peaks that we observe correspond well with premotor mouth peaks described by action observation studies (40). As Fig. 2 shows, robust premotor responses during observation of facial emotional expressions were observed, in line with the hypothesis that action representation mediates the recognition of emotions in others even during simple observation.

The activity in pars opercularis shows two separate foci during imitation, a ventral and a dorsal peak. Only the dorsal peak remained activated, although at significantly lower intensity, during observation of emotion (Table 1). This pattern, with very similar peaks of activation, was also observed in a recent fMRI meta-analysis comprising more than 50 subjects performing hand action imitation and observation in our lab.§§ Pars opercularis maps probabilistically onto Brodmann area 44 (41, 42), which is considered the human homologue of monkey area F5 (43–46) in which mirror neurons were described. In the monkey, F5 neurons coding arm and mouth movements are not spatially segregated, and the human imaging data are consistent with this observation. The imaging data suggest that the dorsal sector represents the mirror sector of pars opercularis, whereas the ventral sector may be simply a premotor area for hand and face movements.

The superior temporal sulcus (STS) area shows greater activity for imitation than for observation of emotional facial expressions, as predicted by the hypothesis that action representation mediates empathy. This area also corresponds well anatomically with an STS area that responds specifically to the observation of mouth movements, as reported in different studies from different labs (47–50).

The anterior sector of the insula was active during both imitation and observation of emotion, but more so during imitation (Fig. 3), fulfilling one of the predictions of our hypothesis that action representation is a cognitive step toward empathy. This finding is in line with two kinds of evidence available on this sector of the insular lobe. First, the anterior insula receives slow-conducting unmyelinated fibers that respond to light, caress-like touch and may be important for emotional and affiliative behavior between individuals (51). Second, imaging data suggest that the anterior insular sector is important for the monitoring of agency (52), that is, the sense of ownership of actions, which is a fundamental aspect of action representation. This finding confirms a strong input onto the anterior insular sector from areas of motor significance.

The increased activity in the amygdala during imitation compared with observation of emotional facial expression (Fig. 4) reflects the modulation of the action representation circuit onto limbic activity. It has been long hypothesized (dating back to Darwin; refs. 53–55) that facial muscular activity influences people's affective responses. We demonstrate here that activity in the amygdala, a critical structure in emotional behaviors and in the recognition of facial emotional expressions of others (56–59), increases while subjects imitate the facial emotional expressions of others, compared with mere observation.

Previous and current literature on observing and processing facial emotional expression provides a rich context in which to consider the nature of the empathic resonance induced by our imitation paradigm. In general, our findings fit well with previously published imaging data on observation of facial expressions that report activation in both amygdala and anterior insula for emotional facial expressions (for a review, see ref. 57 and references therein). A study on conscious and unconscious processing of emotional facial expression (58) has suggested that the left but not the right amygdala is associated with explicit representational content of the observed emotion. Our data, showing a right lateralized activation of the amygdala during imitation of facial emotional expression, suggest that the type of empathic resonance induced by imitation does not require explicit representational content and may be a form of “mirroring” that grounds empathy via an experiential mechanism.

In this study, we treated emotion as a single, unified entity. Recent literature has clearly shown that different emotions seem related to different neural systems. For instance, disgust seems to activate preferentially the anterior insula (60), whereas fear seems to activate preferentially the amygdala (56, 57). We nevertheless adopted a unified approach because our main goal was to investigate the relationships between action representation and emotion via an imitation paradigm. Future studies may successfully employ imitative paradigms to further explore the differential neural correlates of emotions.

Taken together, these data suggest that we understand the feelings of others via a mechanism of action representation shaping emotional content, such that we ground our empathic resonance in the experience of our acting body and the emotions associated with specific movements. As Lipps noted, “When I observe a circus performer on a hanging wire, I feel I am inside him” (1). To empathize, we need to invoke the representation of the actions associated with the emotions we are witnessing. In the human brain, this empathic resonance occurs via communication between action representation networks and limbic areas provided by the insula. Lesions in this circuit may determine an impairment in understanding the emotions of others and the inability to “empathize” with them.¶¶

Acknowledgments

This work was supported, in part, by the Brain Mapping Medical Research Organization, the Brain Mapping Support Foundation, the Pierson-Lovelace Foundation, the Ahmanson Foundation, the Tamkin Foundation, the Jennifer Jones-Simon Foundation, the Capital Group Companies Charitable Foundation, the Robson Family, the Northstar Fund, and grants from the National Center for Research Resources (RR12169 and RR08655).

Abbreviations

- fMRI: functional MRI
- PET: positron-emission tomography

Footnotes

¶¶ Vicenzini, E., Bodini, B., Gasparini, M., Di Piero, V., Mazziotta, J. C., Iacoboni, M. & Lenzi, G. L. (2002) Cerebrovasc. Dis. 13, Suppl. 3, 52 (abstr.).

This paper was submitted directly (Track II) to the PNAS office.

§§ Molnar-Szakacs, I., Iacoboni, M., Koski, L., Maeda, F., Dubeau, M. C., Aziz-Zadeh, L. & Mazziotta, J. C. (2002) J. Cogn. Neurosci., Suppl. S, F118 (abstr.).

References

- 1. Gallese V. J Conscious Stud. 2001;8:33–50.
- 2. Chartrand T L, Bargh J A. J Pers Soc Psychol. 1999;76:893–910. doi: 10.1037//0022-3514.76.6.893.
- 3. LeDoux J E. Annu Rev Neurosci. 2000;23:155–184. doi: 10.1146/annurev.neuro.23.1.155.
- 4. diPellegrino G, Fadiga L, Fogassi L, Gallese V, Rizzolatti G. Exp Brain Res. 1992;91:176–180. doi: 10.1007/BF00230027.
- 5. Gallese V, Fadiga L, Fogassi L, Rizzolatti G. Brain. 1996;119:593–609. doi: 10.1093/brain/119.2.593.
- 6. Perrett D I, Harries M H, Bevan R, Thomas S, Benson P J, Mistlin A J, Chitty A J, Hietanen J K, Ortega J E. J Exp Biol. 1989;146:87–113. doi: 10.1242/jeb.146.1.87.
- 7. Rizzolatti G, Fogassi L, Gallese V. Nat Rev Neurosci. 2001;2:661–670. doi: 10.1038/35090060.
- 8. Perrett D I, Emery N J. Curr Psychol Cogn. 1994;13:683–694.
- 9. Perrett D I, Harries M H, Mistlin A J, Hietanen J K, Benson P J, Bevan R, Thomas S, Oram M W, Ortega J, Brierly K. Int J Comp Psychol. 1990;4:25–55.
- 10. Decety J, Grezes J, Costes N, Perani D, Jeannerod M, Procyk E, Grassi F, Fazio F. Brain. 1997;120:1763–1777. doi: 10.1093/brain/120.10.1763.
- 11. Grezes J, Decety J. Hum Brain Mapp. 2001;12:1–19. doi: 10.1002/1097-0193(200101)12:1<1::AID-HBM10>3.0.CO;2-V.
- 12. Iacoboni M, Koski L M, Brass M, Bekkering H, Woods R P, Dubeau M C, Mazziotta J C, Rizzolatti G. Proc Natl Acad Sci USA. 2001;98:13995–13999. doi: 10.1073/pnas.241474598.
- 13. Iacoboni M, Woods R P, Brass M, Bekkering H, Mazziotta J C, Rizzolatti G. Science. 1999;286:2526–2528. doi: 10.1126/science.286.5449.2526.
- 14. Seltzer B, Pandya D N. J Comp Neurol. 1994;343:445–463. doi: 10.1002/cne.903430308.
- 15. Kalaska J F, Caminiti R, Georgopoulos A P. Exp Brain Res. 1983;51:247–260. doi: 10.1007/BF00237200.
- 16. Lacquaniti F, Guigon E, Bianchi L, Ferraina S, Caminiti R. Cereb Cortex. 1995;5:391–409. doi: 10.1093/cercor/5.5.391.
- 17. Mountcastle V, Lynch J, Georgopoulos A, Sakata H, Acuna C. J Neurophysiol. 1975;38:871–908. doi: 10.1152/jn.1975.38.4.871.
- 18. Sakata H, Takaoka Y, Kawarasaki A, Shibutani H. Brain Res. 1973;64:85–102. doi: 10.1016/0006-8993(73)90172-8.
- 19. Rizzolatti G, Luppino G. Neuron. 2001;31:889–901. doi: 10.1016/s0896-6273(01)00423-8.
- 20. Kohler E, Keysers C, Umilta M A, Fogassi L, Gallese V, Rizzolatti G. Science. 2002;297:846–848. doi: 10.1126/science.1070311.
- 21. Umilta M A, Kohler E, Gallese V, Fogassi L, Fadiga L, Keysers C, Rizzolatti G. Neuron. 2001;31:155–165. doi: 10.1016/s0896-6273(01)00337-3.
- 22. Koski L, Wohlschlager A, Bekkering H, Woods R P, Dubeau M C, Mazziotta J C, Iacoboni M. Cereb Cortex. 2002;12:847–855. doi: 10.1093/cercor/12.8.847.
- 23. Augustine J R. Brain Res Rev. 1996;2:229–294. doi: 10.1016/s0165-0173(96)00011-2.
- 24. Oldfield R C. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.
- 25. Ekman P, Friesen W V. Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologist Press; 1976.
- 26. Gentilucci M, Fogassi L, Luppino G, Matelli M, Camarda R, Rizzolatti G. Exp Brain Res. 1988;71:475–490. doi: 10.1007/BF00248741.
- 27. Woods R P, Grafton S T, Holmes C J, Cherry S R, Mazziotta J C. J Comput Assist Tomogr. 1998;22:139–152. doi: 10.1097/00004728-199801000-00027.
- 28. Woods R P, Grafton S T, Watson J D G, Sicotte N L, Mazziotta J C. J Comput Assist Tomogr. 1998;22:153–165. doi: 10.1097/00004728-199801000-00028.
- 29. Woods R P, Dapretto M, Sicotte N L, Toga A W, Mazziotta J C. Hum Brain Mapp. 1999;8:73–79. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<73::AID-HBM1>3.0.CO;2-7.
- 30. Iacoboni M, Woods R P, Mazziotta J C. J Neurophysiol. 1996;76:321–331. doi: 10.1152/jn.1996.76.1.321.
- 31. Iacoboni M, Woods R P, Lenzi G L, Mazziotta J C. Brain. 1997;120:1635–1645. doi: 10.1093/brain/120.9.1635.
- 32. Iacoboni M, Woods R P, Mazziotta J C. Brain. 1998;121:2135–2143. doi: 10.1093/brain/121.11.2135.
- 33. Woods R P, Iacoboni M, Grafton S T, Mazziotta J C. In: Quantification of Brain Function Using PET. Myers R, Cunningham V, Bailey D, Jones T, editors. San Diego: Academic; 1996. pp. 353–358.
- 34. Aguirre G K, Zarahn E, D'Esposito M. Neuroimage. 1998;8:360–369. doi: 10.1006/nimg.1998.0369.
- 35. Zarahn E, Aguirre G K, D'Esposito M. Neuroimage. 1997;5:179–197. doi: 10.1006/nimg.1997.0263.
- 36. Aguirre G K, Zarahn E, D'Esposito M. Neuroimage. 1997;5:199–212. doi: 10.1006/nimg.1997.0264.
- 37. Forman S D, Cohen J D, Fitzgerald M, Eddy W F, Mintun M A, Noll D C. Magn Reson Med. 1995;33:636–647. doi: 10.1002/mrm.1910330508.
- 38. Fox P T, Huang A, Parsons L M, Xiong J H, Zamarippa F, Rainey L, Lancaster J L. Neuroimage. 2001;13:196–209. doi: 10.1006/nimg.2000.0659.
- 39. Picard N, Strick P L. Cereb Cortex. 1996;6:342–353. doi: 10.1093/cercor/6.3.342.
- 40. Buccino G, Binkofski F, Fink G R, Fadiga L, Fogassi L, Gallese V, Seitz R J, Zilles K, Rizzolatti G, Freund H J. Eur J Neurosci. 2001;13:400–404.
- 41. Mazziotta J, Toga A, Evans A, Fox P, Lancaster J, Zilles K, Woods R, Paus T, Simpson G, Pike B, et al. Philos Trans R Soc London B Biol Sci. 2001;356:1293–1322. doi: 10.1098/rstb.2001.0915.
- 42. Mazziotta J, Toga A, Evans A, Fox P, Lancaster J, Zilles K, Woods R, Paus T, Simpson G, Pike B, et al. J Am Med Inform Assoc. 2001;8:401–430. doi: 10.1136/jamia.2001.0080401.
- 43. Geyer S, Matelli M, Luppino G, Zilles K. Anat Embryol. 2000;202:443–474. doi: 10.1007/s004290000127.
- 44. Petrides M, Pandya D N. In: Handbook of Neuropsychology. Boller F, Grafman J, editors. Vol. 9. Amsterdam: Elsevier; 1994. pp. 17–58.
- 45. Rizzolatti G, Arbib M. Trends Neurosci. 1998;21:188–194. doi: 10.1016/s0166-2236(98)01260-0.
- 46. vonBonin G, Bailey P. The Neocortex of Macaca Mulatta. Urbana: Univ. of Illinois Press; 1947.
- 47. Campbell R, MacSweeney M, Surguladze S, Calvert G, McGuire P, Suckling J, Brammer M J, David A S. Brain Res Cogn Brain Res. 2001;12:233–243. doi: 10.1016/s0926-6410(01)00054-4.
- 48. Puce A, Allison T, Bentin S, Gore J C, McCarthy G. J Neurosci. 1998;18:2188–2199. doi: 10.1523/JNEUROSCI.18-06-02188.1998.
- 49. Allison T, Puce A, McCarthy G. Trends Cogn Sci. 2000;4:267–278. doi: 10.1016/s1364-6613(00)01501-1.
- 50. Calvert G A, Campbell R, Brammer M J. Curr Biol. 2000;10:649–657. doi: 10.1016/s0960-9822(00)00513-3.
- 51. Olausson H, Lamarre Y, Backlund H, Morin C, Wallin B G, Starck G, Ekholm S, Strigo I, Worsley K, Vallbo A B, Bushnell M C. Nat Neurosci. 2002;5:900–904. doi: 10.1038/nn896.
- 52. Farrer C, Frith C D. Neuroimage. 2002;15:596–603. doi: 10.1006/nimg.2001.1009.
- 53. Buck R. J Pers Soc Psychol. 1980;38:811–824. doi: 10.1037//0022-3514.38.5.811.
- 54. Ekman P. Darwin and Facial Expression: A Century of Research in Review. New York: Academic; 1973.
- 55. Ekman P. Handbook of Cognition and Emotion. Chichester, England: Wiley; 1999. pp. 301–320.
- 56. Phillips M L, Young A W, Scott S K, Calder A J, Andrew C, Giampietro V, Williams S C, Bullmore E T, Brammer M, Gray J A. Proc R Soc London Ser B Biol Sci. 1998;265:1809–1817. doi: 10.1098/rspb.1998.0506.
- 57. Phan K L, Wager T, Taylor S F, Liberzon I. Neuroimage. 2002;16:331–348. doi: 10.1006/nimg.2002.1087.
- 58. Morris J S, Ohman A, Dolan R J. Nature. 1998;393:467–470. doi: 10.1038/30976.
- 59. Hariri A R, Bookheimer S Y, Mazziotta J C. NeuroReport. 2000;11:43–48. doi: 10.1097/00001756-200001170-00009.
- 60. Phillips M L, Young A W, Senior C, Brammer M, Andrew C, Calder A J, Bullmore E T, Perrett D I, Rowland D, Williams S C, et al. Nature. 1997;389:495–498. doi: 10.1038/39051.