Abstract

Current models of attention typically claim that vision and audition are limited by a common attentional resource, which implies that visual performance should be adversely affected by a concurrent auditory task and vice versa. Here, we test this implication by measuring auditory (pitch) and visual (contrast) thresholds in conjunction with cross-modal secondary tasks, and we find that no such interference occurs. Visual contrast discrimination thresholds were unaffected by a concurrent chord or pitch discrimination, and pitch-discrimination thresholds were virtually unaffected by a concurrent visual search or contrast discrimination task. However, when the dual tasks were presented within the same modality, thresholds were raised by a factor of between two (for visual discrimination) and four (for auditory discrimination). These results suggest that, at least for low-level tasks such as discriminations of pitch and contrast, each sensory modality is under separate attentional control rather than being limited by a supramodal attentional resource. This has implications for current theories of attention as well as for the use of multi-sensory media for efficient information transmission.

Keywords: attention, vision, audition

1. Introduction

Since our environment provides many competing inputs to our sensory systems, filtering out irrelevant information plays an important role in ensuring that neural resources are not needlessly expended. One means of doing this is endogenous attention, the term given to our ability to voluntarily select certain stimuli from the array of input stimuli (Desimone & Duncan 1995). Once attentional selection has taken place, performance on tasks employing the selected stimuli generally improves, as limited cognitive resources are allocated to the selected location or object to enhance its neural representation. This is true both for tasks that may be considered ‘high-level’ (see Pashler 1998 for review) and for ‘low-level’ sensory tasks such as basic perceptual thresholds (Lee et al. 1999; Morrone et al. 2002). One consequence of limited cognitive resources is that performance on a given task falls below its optimal level when the task is done in parallel with a second task, as the limited resources must be divided. This paper examines the cost of dividing attention across two modalities, vision and audition, and compares it with the cost of dividing attention across two similar tasks in the same modality.

Many psychophysical studies have reported cross-modal attentional effects. In one example of cross-modal facilitation, pre-cuing observers to the location of an auditory stimulus also increases the speed of response to a visual target, and vice versa (Driver & Spence 2004). Similarly, pre-cuing observers to features or attributes within a spatial location can effectively prime performance in the non-cued modality, as shown for the direction of auditory or visual motion (Beer & Roder 2005). In another example of cross-modal facilitation, attentionally selecting (i.e. shadowing) a voice at one location while ignoring a voice at another location is improved by watching a video of moving lips at the shadowed location (Driver & Spence 1994). Performance on such a task can be made worse (cross-modal interference) by viewing a video of the distractor stream (Spence et al. 2000). Together with earlier cognitive studies demonstrating a cost for dividing attention between sensory modalities (Taylor et al. 1967; Tulving & Lindsay 1967; Long 1975; Massaro & Warner 1977), there is considerable evidence suggesting that attentional processes in audition and vision are closely linked.

However, several examples from the older cognitive and ‘human factors’ literature show substantial independence between visual and auditory attentional resources (Brown & Hopkins 1967; Swets & Kristofferson 1970; Allport et al. 1972; Treisman & Davies 1973; Shiffrin & Grantham 1974; Egeth & Sager 1977; Wickens 1980), and some more recent studies also point in this direction (Bonnel & Hafter 1998; Ferlazzo et al. 2002; Larsen et al. 2003). Treisman & Davies (1973) showed that two series of stimuli presented to separate modalities are processed with greater speed and accuracy than two series presented to the same modality, indicating a degree of independence in unimodal attentional resources. However, this literature is not unequivocal. For example, an attempt to replicate Treisman and Davies' study led to the alternative account that the original study was methodologically confounded and that its results were probably due to a lack of eye-movement control (Wickens & Liu 1988).

In another recent study arguing against cross-modal links in attention (Duncan et al. 1997), it was shown that the ‘attentional blink’ (the momentary reduction in performance on a second task when it rapidly follows a first task) is modality-specific, occurring in vision and in audition, but with very little transfer between vision and audition. However, this result has been challenged by subsequent researchers who have reported significant cross-modal effects with the attentional blink (Arnell & Jolicoeur 1999; Jolicoeur 1999). A possible explanation for the conflict may be that there is a cost in switching between task-sets or spatial location in order to carry out the two tasks (Potter et al. 1998). Indeed, a recent study that carefully controlled spatial allocation of attention and matched the responses required in task one and task two (Soto-Faraco & Spence 2002) failed to find evidence of a cross-modal attentional blink (see also Arnell & Jenkins 2004).

The neurophysiological literature on possible cross-modal links in attention, like the cognitive and psychophysical literature, provides evidence both for multi-sensory attentional processes and for unimodal processes. In support of a unitary attentional system, several reports show attentional interactions between sensory modalities in humans. In visual evoked potential (VEP) studies, potentials are enhanced when subjects attend to visual stimuli (as expected) but also (to a lesser extent) when they attend to auditory stimuli (Hillyard & Munte 1984; Eimer & Schroger 1998). More recent imaging studies have demonstrated interactions between senses not only in higher multi-modal areas (Calvert et al. 2000) but also in fairly early visual areas such as the lingual gyrus (Macaluso et al. 2000; Macaluso et al. 2002), where non-visual attention can still elevate activity to some extent in visual occipital areas. Against this, however, a variety of neural evidence suggests that attention can act unimodally at early levels, including the primary cortices. Neuroimaging and single-unit electrophysiology point to attentional modulation of both V1 (Motter 1993; Luck et al. 1997; Brefczynski & DeYoe 1999; Gandhi et al. 1999; Somers et al. 1999) and A1 (Woodruff et al. 1996; Grady et al. 1997; Jancke et al. 1999). For reviews of imaging studies on attention, see Posner & Gilbert (1999), Kanwisher & Wojciulik (2000) and Corbetta & Shulman (2002).

We decided to study cross-modal attention by measuring basic visual and auditory thresholds (contrast and pitch discrimination) with a dual-task technique, in an attempt to clarify whether attentional resources are independent at the very early stages of cortical processing thought to mediate contrast and pitch discrimination (V1 and A1, respectively; Recanzone et al. 1993; Zenger-Landolt & Heeger 2003). Our approach was to measure performance on contrast and on pitch discrimination tested alone, and then compare it with performance obtained while concurrently performing a secondary distractor task that was either intra-modal or cross-modal (visual or auditory). In both modalities, performance on the primary threshold task suffered greatly when the distractor task was intra-modal, but remained unaffected by distractors that were cross-modal. Strong within-modal interference, but no cross-modal interference, provides good evidence that for basic sensory processes such as contrast and pitch discrimination, each modality has access to its own attentional resources.

2. Material and methods

Discrimination thresholds for basic visual and auditory attributes were measured separately (alone), and then again in conjunction with a simultaneous secondary task that could be visual or auditory. The primary visual task was a forced-choice discrimination of which of two lateralized grating patches was higher in contrast, and the primary auditory task was to discriminate which of two lateralized tones was higher in pitch. The secondary (distractor) task for the visual modality was to detect whether one element in a brief central array of dots was brighter than the others, and the secondary task in audition was to identify whether a brief triad of tones formed a major or a minor chord. The subjects were asked to treat each task as equally important. For both modalities, the secondary task was designed to minimize ‘masking’ and other direct interference. The secondary tasks were always central, while the bilateral primary tasks were slightly peripheral, so that the visual stimuli excited different areas of V1; in addition, the frequencies for the primary and secondary auditory tasks differed by about an octave, to ensure that they excited different auditory frequency channels and therefore different areas of A1. Before data collection began, all subjects practised the primary and secondary tasks in each modality daily until perceptual learning was complete and their performance had reached asymptote.

Three observers (one author and two naive students) viewed a Sony Trinitron screen (40×30°, 1024×768 pixels, 100 Hz, 30 cd m−2) from a distance of 57 cm. The monitor was flanked by two high-quality speakers (Yamaha MSP5) separated by 64 cm. Stimuli for the primary tasks (both visual and auditory) were centred 8° on either side of central fixation (auditory localization was calibrated individually for each subject) and followed the same temporal profile. The auditory stimuli were digitized at a rate of 65 kHz and presented at an intensity of 75 dB. The primary auditory stimuli were 100 ms tone pips (with 10 ms raised-cosine ramps) that alternated between left and right positions at 100 ms intervals, twice in each position (e.g. LRLR). One stimulus (randomly left or right) was set at 600 Hz and the other at 600+Δf Hz, and subjects had to identify on which side the higher tone occurred. The primary visual stimuli were vertical sine-wave gratings with a spatial frequency of 0.75 c deg−1, also centred at ±8° and vignetted within a circular Gaussian 2.5° wide (full-width at half-height). They also alternated between left and right positions (twice in each), 100 ms on (with 28 ms raised-cosine ramps) and 100 ms off. The contrast of one grating was 30% and that of the other was (30+ΔC)%. Δf and ΔC were varied adaptively with the QUEST routine (Watson & Pelli 1983) to home in on the threshold level of ΔC and (in separate blocks) Δf. Each session comprised two interleaved QUEST routines of 25 trials each, and 3–5 sessions were conducted for each condition. The data from the resulting 6–10 QUEST routines were pooled into a single dataset and fitted with a cumulative Gaussian function, with thresholds calculated as the 75% correct point.
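To make the adaptive procedure concrete, the following is a minimal sketch of a QUEST-like Bayesian staircase. It is not Watson & Pelli's implementation: it assumes stimulus increments (ΔC or Δf) expressed in log10 units, a Weibull psychometric function with assumed slope (beta = 3.5), chance rate 0.5 and lapse rate 0.01, a Gaussian prior, and a simulated observer. The function names `p_correct` and `quest_like` are hypothetical. Each trial is placed at the current posterior mode, and the posterior over candidate thresholds is updated after every response.

```python
import math
import random

def p_correct(x, threshold, beta=3.5, gamma=0.5, delta=0.01):
    """Weibull 2AFC psychometric function in log10 units (assumed parameters).

    gamma is the chance rate (0.5 for two alternatives), delta the lapse rate.
    """
    return gamma + (1 - gamma - delta) * (1 - math.exp(-10 ** (beta * (x - threshold))))

def posterior_mode(grid, log_post):
    """Candidate threshold with the highest log-posterior."""
    return grid[max(range(len(grid)), key=log_post.__getitem__)]

def quest_like(true_threshold, n_trials=25, prior_mean=-1.0, prior_sd=1.0, seed=0):
    """Simplified Bayesian staircase in the spirit of QUEST (hypothetical helper).

    Keeps a log-posterior over a grid of candidate thresholds, tests each trial
    at the posterior mode and updates with a simulated observer's response.
    """
    rng = random.Random(seed)
    grid = [-3.0 + 0.01 * i for i in range(301)]  # candidate log10 thresholds
    log_post = [-0.5 * ((t - prior_mean) / prior_sd) ** 2 for t in grid]
    history = []
    for _ in range(n_trials):
        x = posterior_mode(grid, log_post)          # stimulus level for this trial
        correct = rng.random() < p_correct(x, true_threshold)
        history.append((x, correct))
        for i, t in enumerate(grid):                # Bayesian update, every candidate
            p = p_correct(x, t)
            log_post[i] += math.log(p if correct else 1 - p)
    return posterior_mode(grid, log_post), history

# One 25-trial run, as in a single QUEST routine of the text.
est, trials = quest_like(true_threshold=-1.5, n_trials=25, seed=1)
print(f"estimated log10 threshold: {est:.2f} after {len(trials)} trials")
```

Interleaving two routines, as in each session described above, would simply alternate trials between two independent posteriors. Note that in the text the final thresholds come from fitting a cumulative Gaussian to the pooled trials, not from the staircase estimate itself.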

Stimuli for the secondary tasks were presented at the centre of the screen and had a fixed level of difficulty. For the auditory distractor task, subjects were required to identify a 3-tone chord triad as major (1100+1386+1648 Hz) or minor (1100+1308+1648 Hz), ramped on and off within a Gaussian envelope with a full-width at half-height of 435 ms. The amplitude of the middle tone (which determines whether the chord is major or minor) was set in advance to a value that yielded a known level of performance. In this prior ‘calibration’ experiment, the intensity of the middle tone was varied using QUEST to find the level that gave 75% correct performance for discriminating major from minor chords. All the data were pooled and fitted with a cumulative Gaussian. From the fit, an intensity value one standard deviation above the threshold (i.e. 92% correct) was selected, and on all dual-task trials distractor difficulty remained fixed at this level. For the visual distractor task, subjects had to determine whether an array of 20 bright dots (32% contrast, 0.4° diameter) contained one brighter dot (present or absent with equal probability on each trial). The dots were randomly positioned within a circular window 4.5° in diameter and ramped in time by a Gaussian profile with a full width of 260 ms. Again, a prior ‘calibration’ experiment was run for each subject to determine the brightness increment of the odd-man-out dot that corresponded to a performance level one standard deviation above the threshold. Thus, both secondary tasks were always presented at a constant difficulty level corresponding to 92% correct, or a d′ of 2. Subjects responded first to the secondary task and then to the primary task; to ensure that they attended to the distractor task, the response to the primary task was recorded only if the secondary response was correct.
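Which middle tone produces which triad can be checked by expressing each tone as an interval above the 1100 Hz root in equal-tempered semitones, 12·log2(f/f_root). A major triad contains a major third (4 semitones) and a perfect fifth (7 semitones); a minor triad contains a minor third (3 semitones) and the same fifth. The helpers `semitones` and `classify_triad` below are our own illustration, not part of the original method.

```python
import math

def semitones(f_hz, root_hz):
    """Interval above the root in equal-tempered semitones: 12 * log2(f / f0)."""
    return 12 * math.log2(f_hz / root_hz)

def classify_triad(root, third, fifth):
    """Label a root-position triad by its (rounded) third; requires a perfect fifth."""
    if round(semitones(fifth, root)) != 7:
        return "other"
    return {4: "major", 3: "minor"}.get(round(semitones(third, root)), "other")

for middle in (1308, 1386):
    label = classify_triad(1100, middle, 1648)
    print(f"{middle} Hz: {semitones(middle, 1100):.1f} semitones -> {label}")
# 1308 Hz: 3.0 semitones -> minor
# 1386 Hz: 4.0 semitones -> major
```

Both middle tones lie within a few cents of their equal-tempered targets, and 1648 Hz is within a few cents of a perfect fifth above 1100 Hz.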

3. Results

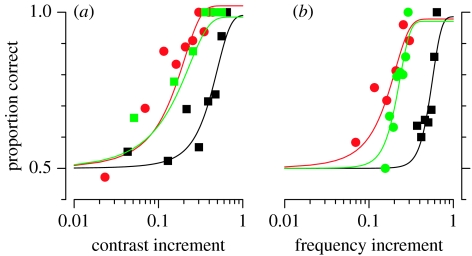

Figure 1 shows examples of psychometric functions from one observer for the primary visual task (contrast discrimination, figure 1a) and the primary auditory task (frequency discrimination, figure 1b). In each panel, red symbols represent performance on the primary task measured alone, while the two other curves show performance measured together with the secondary task in the same modality (blue symbols) or in the other modality (green symbols). The secondary task in the same modality shifts the psychometric functions markedly to the right, reflecting a large increase in the contrast (or frequency) increment required to perform the primary task. For all subjects, increment thresholds were at least twofold, and as much as fivefold, larger with intra-modal distractors. By contrast, when the distractor task was in the other modality (green symbols), psychometric functions remained very similar to the single-task results. Importantly, the psychometric functions remained orderly and of similar slope during the dual tasks, implying a genuine change in threshold. A marked change in slope or an increase in noise could have suggested that the subjects were ‘multiplexing’, alternating between tasks from trial to trial and thereby compromising their performance on the primary task.

Figure 1.

Examples of psychometric functions for one naive observer (RA) for (a) visual contrast and (b) auditory frequency discriminations. The red symbols show performance on the primary task alone, the blue symbols performance when it was performed together with the secondary task in the same modality, and the green symbols performance when it was performed with the secondary task in the other modality. Chance performance was 50% (lower dashed line). The curves are best-fitting cumulative Gaussians, from which thresholds were calculated (taken as the 75% correct point, indicated by the vertical dashed lines). The secondary task in the same modality clearly impeded performance, shifting the psychometric functions towards higher contrasts and frequencies without greatly affecting their slope or general form. In this experiment, the secondary tasks were adjusted in difficulty to produce 92% correct performance when presented alone (d′=2).
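The fitting step can be sketched as follows, assuming a two-alternative cumulative Gaussian that rises from 50% (chance) to 100%, P(x) = 0.5 + 0.5·Φ((x − μ)/σ). Under this assumed form the 75% correct point is exactly x = μ, and the level one standard deviation above threshold (x = μ + σ), used for the secondary-task calibration, gives 0.5 + 0.5·Φ(1) ≈ 92% correct. The grid-search maximum-likelihood fit and the simulated data are illustrative only; they are not the authors' software or data.

```python
import math
from statistics import NormalDist

PHI = NormalDist().cdf  # standard normal CDF

def p_correct(x, mu, sigma):
    """2AFC cumulative Gaussian: 50% at chance, 75% exactly at x = mu."""
    return 0.5 + 0.5 * PHI((x - mu) / sigma)

def neg_log_likelihood(data, mu, sigma):
    """Binomial negative log-likelihood of pooled (level, n_correct, n_trials) data."""
    nll = 0.0
    for x, k, n in data:
        p = min(max(p_correct(x, mu, sigma), 1e-9), 1 - 1e-9)  # guard log(0)
        nll -= k * math.log(p) + (n - k) * math.log(1 - p)
    return nll

def fit_cumulative_gaussian(data, mus, sigmas):
    """Maximum-likelihood (mu, sigma) by brute-force grid search."""
    return min(((mu, s) for mu in mus for s in sigmas),
               key=lambda ms: neg_log_likelihood(data, *ms))

# Hypothetical pooled data generated from mu = 2.0, sigma = 1.0 (not the paper's data).
levels = [0.5 * i for i in range(1, 9)]                    # 0.5 .. 4.0
data = [(x, round(1000 * p_correct(x, 2.0, 1.0)), 1000) for x in levels]
mu, sigma = fit_cumulative_gaussian(
    data,
    mus=[0.05 * i for i in range(81)],                     # 0.0 .. 4.0
    sigmas=[0.2 + 0.05 * i for i in range(57)],            # 0.2 .. 3.0
)
threshold_75 = mu                      # 75% correct point
calibration_level = mu + sigma         # one SD above threshold
print(threshold_75, calibration_level, round(p_correct(calibration_level, mu, sigma), 3))
# the last value is 0.921 whatever the fitted mu and sigma
```

A numerical optimizer would normally replace the grid search; the grid keeps the sketch dependency-free.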

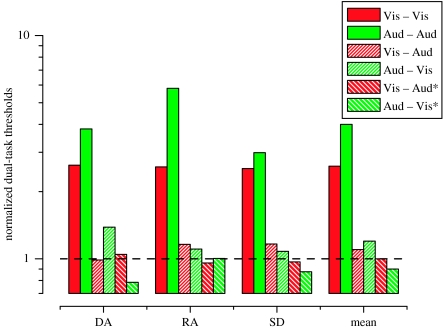

Figure 2 summarizes the primary thresholds in the dual-task conditions for three observers. The dual-task thresholds are shown as multiples of the primary thresholds measured in the single-task conditions (red curves in figure 1), so that a value of 1.0 (dashed line) would indicate no change at all. In all cases, secondary tasks in the same modality raised primary thresholds considerably, while the cross-modal secondary tasks had virtually no effect. The average increase in primary threshold produced by intra-modal distractors was a factor of 2.6 for vision and a factor of 4.2 for audition, while the average threshold increase produced by cross-modal distractors was just 1.1 for vision and 1.2 for audition.
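The normalization can be written out as a short worked calculation, where T is a primary-task threshold and R the dual-to-single ratio (our own notation, not the authors'). A ratio R corresponds to a percentage increase of 100(R − 1), so the average factors quoted above translate as follows:

```latex
\begin{aligned}
R &= \frac{T_{\mathrm{dual}}}{T_{\mathrm{single}}}, \qquad
\text{increase} = 100\,(R - 1)\,\% \\[4pt]
\text{vision, intra-modal:}\quad R = 2.6 &\;\Rightarrow\; 160\% \\
\text{audition, intra-modal:}\quad R = 4.2 &\;\Rightarrow\; 320\% \\
\text{vision, cross-modal:}\quad R = 1.1 &\;\Rightarrow\; 10\% \\
\text{audition, cross-modal:}\quad R = 1.2 &\;\Rightarrow\; 20\%
\end{aligned}
```

R = 1.0 corresponds to no change, which is the dashed reference line in figure 2.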

Figure 2.

Threshold performance for three observers (author D.A. and two naïve subjects) for visual (red bars) and auditory (green bars) discriminations, all normalized by the primary-task threshold measured as a single task. A value of 1.0 (dashed line) would therefore indicate no difference in primary-task thresholds between single- and dual-task conditions. Values greater than 1.0 indicate worse performance in the dual task, and therefore the ‘cost’ of dividing attention. Solid bars show thresholds for dual tasks in the same modality; cross-hatched bars show thresholds when the distractor task was in the other modality. Diagonal cross-hatching (marked *) indicates cross-modal dual tasks that were spatially co-located. The only large effects are for dual tasks in the same modality.

The final cluster of columns in figure 2 shows the data averaged over observers. Since the large effects of intra-modal distractors are clear, we assessed statistical significance only for the two cross-modal conditions (the two middle columns), using a bootstrap procedure (Efron & Tibshirani 1993) that re-sampled individual subjects' data and refitted the psychometric functions (5000 iterations). Although small in comparison with the effect of the within-modality distractor (a factor of 1.2 versus 4.2), the mean increase in the primary auditory threshold produced by the cross-modal (visual) distractor was statistically significant (p=0.002). Using the same analysis, the mean increase in the primary visual threshold produced by the cross-modal (auditory) distractor (a factor of 1.1) was not significantly greater than 1.0 (p>0.05).
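The bootstrap test can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: trials are resampled with replacement within each condition, both psychometric functions are refitted (here with the same assumed 2AFC cumulative Gaussian and a coarse maximum-likelihood grid), and the one-tailed p-value is taken as the fraction of resampled threshold ratios (dual/single) that are not above 1.0. The simulated observer, grid ranges and helper names (`fit_threshold`, `bootstrap_p`, `simulate`) are hypothetical.

```python
import math
import random
from statistics import NormalDist

PHI = NormalDist().cdf
MUS = [0.1 * i for i in range(1, 41)]        # candidate thresholds 0.1 .. 4.0
SIGMAS = [0.25 * i for i in range(1, 11)]    # candidate spreads 0.25 .. 2.5

def fit_threshold(trials):
    """ML 75%-correct threshold of a 2AFC cumulative Gaussian, by grid search."""
    counts = {}
    for x, correct in trials:                # aggregate (level, correct) trials
        k, n = counts.get(x, (0, 0))
        counts[x] = (k + int(correct), n + 1)

    def nll(mu, sigma):
        total = 0.0
        for x, (k, n) in counts.items():
            p = min(max(0.5 + 0.5 * PHI((x - mu) / sigma), 1e-9), 1 - 1e-9)
            total -= k * math.log(p) + (n - k) * math.log(1 - p)
        return total

    return min(((m, s) for m in MUS for s in SIGMAS), key=lambda ms: nll(*ms))[0]

def bootstrap_p(single, dual, n_boot=5000, seed=0):
    """One-tailed p: fraction of resampled ratios dual/single that are <= 1."""
    rng = random.Random(seed)
    not_raised = 0
    for _ in range(n_boot):
        s = [rng.choice(single) for _ in single]   # resample trials with replacement
        d = [rng.choice(dual) for _ in dual]
        if fit_threshold(d) / fit_threshold(s) <= 1.0:
            not_raised += 1
    return not_raised / n_boot

def simulate(mu, sigma=0.5, n_per_level=40, seed=0):
    """Hypothetical observer: (level, correct) trials at 12 fixed levels."""
    rng = random.Random(seed)
    levels = [0.25 * i for i in range(1, 13)]      # 0.25 .. 3.0
    return [(x, rng.random() < 0.5 + 0.5 * PHI((x - mu) / sigma))
            for x in levels for _ in range(n_per_level)]

single = simulate(mu=1.0, seed=1)   # hypothetical single-task data
dual = simulate(mu=1.5, seed=2)     # hypothetical dual-task data (threshold x1.5)
p_value = bootstrap_p(single, dual, n_boot=100, seed=3)
print(p_value)
```

With the 5000 iterations used in the text, this pure-Python version is slow. The example uses 100 iterations only to keep the illustration quick.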

For the results described above, the primary and secondary tasks were spatially separated to minimize potential masking and general confusion. However, as evidence suggests that cross-modal interactions may be location-specific (Driver & Spence 2004), we created a version of the experiment in which the tasks were spatially co-located. We used the same two primary tasks described above (contrast discrimination and frequency discrimination) and presented them simultaneously in time and co-located in space (again, horizontally ±8° from fixation). Subjects therefore gave two responses, one indicating which side had the higher frequency and the other indicating which side had the higher contrast. This experiment was designed to test whether cross-modal interference between the two primary tasks would arise when the stimuli overlapped spatially (and temporally). The diagonally hatched bars in figure 2 report the results and show that performing two spatially coincident cross-modal tasks did not produce an increase in threshold relative to the level measured in each modality alone. Indeed, these values tend to be slightly less than 1.0. This tendency was not statistically significant (p>0.05 in both cases) and is probably due to the fact that subjects completed this experiment after the first one and had most likely improved slightly in unimodal discrimination acuity through learning.

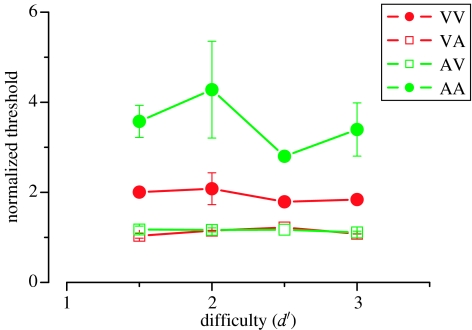

As a further control (using exactly the same design as in our first experiment), we varied the attentional load in the dual-task conditions by adjusting the difficulty of the secondary task. This tested the possibility that the lack of cross-modal effects arose because the secondary task was not difficult enough to reduce primary-task performance. From the psychometric data collected before these experiments to calibrate the difficulty of the secondary task, we chose intensity levels (for the middle tone of the chord triad) and luminance increments (for the odd-man-out dot task) that corresponded to performance ranging from d′=1.5 (85% correct) to d′=3 (98% correct). Figure 3 shows that over this range, task difficulty had very little effect on primary-task thresholds: at all levels of difficulty, the intra-modal secondary task increased thresholds by factors of 2 to 4, while the cross-modal secondary task had virtually no effect (normalized values very close to 1.0).
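The d′ and percent-correct pairings quoted in the text (d′=1.5 with 85%, d′=2 with 92%, d′=3 with 98%) appear to follow the standard relation for an unbiased observer in two-alternative forced choice, P = Φ(d′/√2). The sketch below assumes that relation, although the secondary tasks were strictly yes/no judgements. The helper names are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, sd 1

def pc_from_dprime_2afc(d_prime):
    """Proportion correct of an unbiased observer in two-alternative forced choice."""
    return STD_NORMAL.cdf(d_prime / 2 ** 0.5)

def dprime_from_pc_2afc(pc):
    """Inverse mapping; pc must lie strictly between 0 and 1."""
    return 2 ** 0.5 * STD_NORMAL.inv_cdf(pc)

for d in (1.0, 1.5, 2.0, 3.0):
    print(f"d' = {d}: {100 * pc_from_dprime_2afc(d):.1f}% correct")
```

This prints about 76.0%, 85.6%, 92.1% and 98.3%, which is within a percentage point or so of the values given in the text.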

Figure 3.

Effect of difficulty of the secondary task, averaged across subjects. Stimulus detectability varied from d′=0.5 to 3, calculated individually from the psychometric functions for the secondary task performed alone (d′=1 corresponds approximately to the 75% threshold). Task difficulty clearly has little effect on either the within-modality or the between-modality dual tasks. NR refers to the control condition in which the secondary stimulus was displayed but subjects were not required to respond to it.

Finally, we repeated our first experiment with the secondary stimulus present, but subjects were asked to ignore it. The data for this ‘no response’ condition are indicated by NR in figure 3 and show that the mere presence of the task-irrelevant distractor stimuli did not affect primary task thresholds at all. In other words, the distractor stimulus did not mask the primary stimulus, but required active attention for it to have any effect.

4. Discussion

The results of this study clearly show that basic auditory and visual discriminations of the type studied here are not limited by a common central resource. Concurrent tasks in the same modality increased thresholds substantially, as previously observed in visual contrast discriminations (Lee et al. 1999; Morrone et al. 2002), and observed here for the first time for auditory frequency discriminations. However, a concurrent task in a different modality had virtually no effect on either visual or auditory thresholds, regardless of whether the tasks were spatially superimposed or separated, and irrespective of task load. Concurrently displaying the primary and secondary stimuli, without requiring subjects to perform the secondary task, had no effect at all.

Several recent studies have explored interactions between visual and auditory attentional resources (Driver & Spence 1994; Spence & Driver 1996; Spence & Driver 1997a,b; Spence et al. 2001). These studies have generally employed speeded responses in cued-orienting experiments and have found faster orienting (shorter reaction times) in one modality even when the cue is presented in another. Since visual cues can speed auditory orienting and vice versa, these authors conclude that visual and auditory attentional resources are closely linked. Although it is difficult to compare their reaction-time data with our threshold data, their accuracy data permit a better comparison. Based on the error rates they report, the losses in accuracy are often quite small when cues are cross-modal, with d′ varying by about 0.1–0.5 at most (our d′ calculations based on their reported errors). The inter-modal cost is therefore nearly an order of magnitude smaller than the intra-modal cost we report here. Although we too found some suggestion of an inter-modal cost, it was small and reached statistical significance in only one condition (auditory thresholds measured with a visual secondary task). Even there, the threshold increase caused by the cross-modal distractor task was only about 20% (a factor of 1.2), compared with about 320% (a factor of 4.2) for the intra-modal distractor task. So, while we cannot totally exclude the existence of cross-modal attentional links, these effects must be considered very much secondary compared with the magnitude of intra-modal attentional effects.
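One way to convert reported error rates into d′ is the standard signal-detection formula d′ = z(H) − z(F). For an unbiased two-choice discrimination with error rate e on both response types, this gives H = 1 − e and F = e. The text does not say exactly how the d′ values were derived, so this conversion is an assumption, and the error rates below are hypothetical rather than taken from any cited study.

```python
from statistics import NormalDist

Z = NormalDist().inv_cdf  # z-transform (inverse standard normal CDF)

def dprime(hit_rate, false_alarm_rate):
    """Yes/no sensitivity index: d' = z(H) - z(F)."""
    return Z(hit_rate) - Z(false_alarm_rate)

def dprime_from_error(error_rate):
    """Unbiased two-choice discrimination: H = 1 - e, F = e."""
    return dprime(1 - error_rate, error_rate)

# Hypothetical error rates for two cue conditions (not data from the cited studies).
err_a, err_b = 0.10, 0.13
difference = dprime_from_error(err_a) - dprime_from_error(err_b)
print(round(difference, 2))  # 0.31
```

Rates of exactly 0 or 1 have no finite z-score and must be adjusted before conversion. A common convention is to replace them with 1/(2N) or 1 − 1/(2N) for N trials.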

Although our conclusions may seem at odds with those of Spence and Driver indicating supramodal attentional processes, they sit well with many other studies, both from the older psychological and human factors literature (Brown & Hopkins 1967; Allport et al. 1972; Swets & Kristofferson 1970; Treisman & Davies 1973; Shiffrin & Grantham 1974; Egeth & Sager 1977; Wickens 1980) and from more recent studies using psychophysical and behavioural paradigms quite different from ours (Bonnel & Hafter 1998; Ferlazzo et al. 2002; Larsen et al. 2003).

One important factor that may determine whether cross-modal attentional load affects performance is whether the subjects' responses are speeded (as in the reaction-time studies of Spence and Driver) or not (as in our threshold studies). It is conceivable that, while cross-modal attentional load does not change perceptual sensitivity, it could speed (or retard) sensory processing (Jolicoeur & Dell'Acqua 1999; Ruthruff & Pashler 2001; Prinzmetal et al. 2005). Several recent studies are consistent with ours in suggesting that cross-modal divided attention does not affect unspeeded thresholds. For example, Larsen et al. (2003) showed that subjects could report two letters (one visual, the other auditory) with the same level of performance as a single letter in either modality alone. Bonnel & Hafter (1998) found that brief visual and auditory pulses (in luminance and intensity, respectively) could be detected concurrently just as well as separately, a result consistent with independent auditory and visual resources. However, when Bonnel and Hafter changed their task from simple detection to increment or decrement discrimination, they found a clear cost of dividing attention across modalities (even though the task was unspeeded).

It should also be pointed out that not all speeded-response studies support a single supramodal attentional system. For example, Ferlazzo et al. (2002) measured reaction times in a lateralized spatial cueing paradigm in which the retinal and head-centred vertical meridians were not aligned (e.g. by fixating a point in the left head-centred hemifield). They found distinct cueing effects (RT differences between valid and invalid trials) both for stimuli presented around the visual meridian and for stimuli presented around the head-centred meridian. Separable meridian effects suggest two spatial maps and, therefore, that separate visual and auditory attentional resources operate in this endogenous orienting paradigm.

A recent proposal by Prinzmetal et al. (2005) provides a helpful framework for understanding these findings. They suggest that speeded responding affects the decision about which channel should be attended, but does not influence processing within that channel. This agrees with the studies showing an improvement in reaction times but not in thresholds. Unspeeded responding, by contrast, allows voluntary allocation of resources to the stimulus within a particular channel, facilitating perceptual enhancement of the stimulus and thereby improving accuracy and thresholds. Indeed, virtually identical experimental conditions can yield accuracy improvements or RT improvements depending on whether the task is speeded or unspeeded (Santee & Egeth 1982; Prinzmetal et al. 2005). Importantly, this proposal posits that the time-limited decision to select a channel's output in speeded responding is a late process, central with respect to within-channel sensory processes.

Recent evidence suggests that attention is not a unitary phenomenon, but acts at various cortical levels, including early levels of sensory processing and the primary cortical areas of V1 and A1 (Kanwisher & Wojciulik 2000). Attentional modulation of primary cortices is particularly relevant to our study, since the contrast and pitch discrimination tasks used in our experiment are probably mediated by primary cortical areas (Recanzone et al. 1993; Boynton et al. 1999; Zenger-Landolt & Heeger 2003). Moreover, there is now good evidence that attention acts as a signal-enhancement process in early cortical processing (Treue & Maunsell 1996; McAdams & Maunsell 1999; Seidemann & Newsome 1999), consistent with Prinzmetal et al.'s ‘channel-enhancement’ proposal. Our results are, therefore, quite consistent with the notion that each primary cortical area is modulated by its own attentional resources, with very little interaction across modalities.

We do not exclude the possibility that attentional processes could operate at higher levels, after visual and auditory information is combined, even in unspeeded trials. Indeed, depending on the nature of the task demands, the most sensible strategy might well be to employ a supramodal attentional resource. An example would be speech comprehension in a noisy auditory environment. Here, the visual and auditory speech signals are spatially co-located, so it would be sensible to attend to both via a supramodal system of spatial attention. This would allow visual input from lip and mouth movements to complement auditory speech signals and improve comprehension. More generally, given the wide variety of tasks that human observers must complete when monitoring or interacting with the environment, and the evidence for a distributed cortical network of attention, it would be highly efficient to deploy attentional resources at various levels in accordance with task requirements.

In our cross-modal conditions, observers knew that they had to make a visual and an auditory discrimination response and knew where the stimuli would occur. In these circumstances, optimal performance for visual and auditory thresholds would be achieved by deploying attention at the level of the primary cortices, the areas that probably mediate discrimination of contrast and pitch, and by focusing it on the spatial locations to be stimulated. However, in situations where the behavioural response needs to be based on information that may arrive through different sensory modalities at the same spatial location, as in the spatially lateralized paradigm typically employed by Spence and Driver, where the modality was not known or was indicated by a cue that was only partially valid (e.g. Spence & Driver 1996), the supramodal resources at the higher levels of a distributed attentional system would be best suited to the experimental task. Thus, the differences between the studies could reflect differences in task demands, with both sets of results consistent with attention being deployed to increase task efficiency.

The current results have potential practical implications, suggesting that complex datasets can be displayed more efficiently if presented in more than one modality. This approach has been explored in human factors research over the last decade or so (in applications such as aircraft cockpit design), building on audiovisual studies from the 1970s. Our data, based on robust threshold estimates, further reinforce this approach by showing that human observers can effectively multi-task without loss of performance, provided that the tasks occur in separate sensory modalities. Multi-modal presentation of information is also relevant to new technological applications such as multi-modal immersive and virtual-reality environments, where data are presented in non-visual modalities, including audition and touch. Our data also support new research applications in the area of data ‘sonification’. This refers to representing data as sound rather than in typical visual displays, and is being developed for contexts where large quantities of information need to be monitored simultaneously (e.g. complex medical procedures). Our results support the viability of data sonification as a means of freeing up visual resources, in that auditory and visual information can be monitored independently.

References

- Allport D.A, Antonis B, Reynolds P. On the division of attention: a disproof of the single channel hypothesis. Q. J. Exp. Psychol. 1972;24:225–235. doi: 10.1080/00335557243000102.

- Arnell K.M, Jenkins R. Revisiting within-modality and cross-modality attentional blinks: effects of target–distractor similarity. Percept. Psychophys. 2004;66:1147–1161. doi: 10.3758/bf03196842.

- Arnell K.M, Jolicoeur P. The attentional blink across stimulus modalities: evidence for central processing limitations. J. Exp. Psychol. Hum. Percept. Perform. 1999;25:630–648. doi: 10.1037/0096-1523.25.3.630.

- Beer A.L, Roder B. Attending to visual or auditory motion affects perception within and across modalities: an event-related potential study. Eur. J. Neurosci. 2005;21:1116–1130. doi: 10.1111/j.1460-9568.2005.03927.x.

- Bonnel A.M, Hafter E.R. Divided attention between simultaneous auditory and visual signals. Percept. Psychophys. 1998;60:179–190. doi: 10.3758/bf03206027.

- Boynton G.M, Demb J.B, Glover G.H, Heeger D.J. Neuronal basis of contrast discrimination. Vision Res. 1999;39:257–269. doi: 10.1016/s0042-6989(98)00113-8.

- Brefczynski J.A, DeYoe E.A. A physiological correlate of the ‘spotlight’ of visual attention. Nat. Neurosci. 1999;2:370–374. doi: 10.1038/7280.

- Brown A.E, Hopkins H.K. Interactions of the auditory and visual sensory modalities. J. Acoust. Soc. Am. 1967;41:1–7. doi: 10.1121/1.1910318.

- Calvert G.A, Campbell R, Brammer M.J. Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Curr. Biol. 2000;10:649–657. doi: 10.1016/s0960-9822(00)00513-3.

- Corbetta M, Shulman G.L. Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 2002;3:201–215. doi: 10.1038/nrn755.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu. Rev. Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Driver J, Spence C. Spatial synergies between auditory and visual attention. In: Umiltà C, Moscovitch M, editors. Attention and performance: conscious and nonconscious information processing. MIT Press; Cambridge, MA: 1994. pp. 311–331.

- Driver J, Spence C. Crossmodal spatial attention: evidence from human performance. In: Spence C, Driver J, editors. Crossmodal space and crossmodal attention. Oxford University Press; Oxford, UK: 2004. pp. 179–220.

- Duncan J, Martens S, Ward R. Restricted attentional capacity within but not between sensory modalities. Nature. 1997;387:808–810. doi: 10.1038/42947.

- Efron B, Tibshirani R.J. An introduction to the bootstrap. Chapman & Hall; New York, NY: 1993.

- Egeth H.E, Sager L.C. On the locus of visual dominance. Percept. Psychophys. 1977;22:77–86.

- Eimer M, Schroger E. ERP effects of intermodal attention and cross-modal links in spatial attention. Psychophysiology. 1998;35:313–327. doi: 10.1017/s004857729897086x.

- Ferlazzo F, Couyoumdjian M, Padovani T, Belardinelli M.O. Head-centred meridian effect on auditory spatial attention orienting. Q. J. Exp. Psychol. A. 2002;55:937–963. doi: 10.1080/02724980143000569.

- Gandhi S.P, Heeger D.J, Boynton G.M. Spatial attention affects brain activity in human primary visual cortex. Proc. Natl Acad. Sci. USA. 1999;96:3314–3319. doi: 10.1073/pnas.96.6.3314.

- Grady C.L, Van Meter J.W, Maisog J.M, Pietrini P, Krasuski J, Rauschecker J.P. Attention-related modulation of activity in primary and secondary auditory cortex. Neuroreport. 1997;8:2511–2516. doi: 10.1097/00001756-199707280-00019.

- Hillyard S.A, Munte T.F. Selective attention to color and location: an analysis with event-related brain potentials. Percept. Psychophys. 1984;36:185–198. doi: 10.3758/bf03202679.

- Jancke L, Mirzazade S, Shah N.J. Attention modulates activity in the primary and the secondary auditory cortex: a functional magnetic resonance imaging study in human subjects. Neurosci. Lett. 1999;266:125–128. doi: 10.1016/s0304-3940(99)00288-8.

- Jolicoeur P. Restricted attentional capacity between sensory modalities. Psychonom. Bull. Rev. 1999;6:87–92. doi: 10.3758/bf03210813.

- Jolicoeur P, Dell'Acqua R. Attentional and structural constraints on visual encoding. Psychol. Res. 1999;62:154–164.

- Kanwisher N, Wojciulik E. Visual attention: insights from brain imaging. Nat. Rev. Neurosci. 2000;1:91–100. doi: 10.1038/35039043.

- Larsen A, McIlhagga W, Baert J, Bundesen C. Seeing or hearing? Perceptual independence, modality confusions, and crossmodal congruity effects with focused and divided attention. Percept. Psychophys. 2003;65:568–574. doi: 10.3758/bf03194583.

- Lee D.K, Itti L, Koch C, Braun J. Attention activates winner-take-all competition among visual filters. Nat. Neurosci. 1999;2:375–381. doi: 10.1038/7286.

- Long J. Reduced efficiency and capacity limitations in multidimensional signal recognition. Q. J. Exp. Psychol. 1975;27:599–614. doi: 10.1080/14640747508400523.

- Luck S.J, Chelazzi L, Hillyard S.A, Desimone R. Neural mechanisms of spatial selective attention in areas V1, V2, and V4 of macaque visual cortex. J. Neurophysiol. 1997;77:24–42. doi: 10.1152/jn.1997.77.1.24.

- Macaluso E, Frith C.D, Driver J. Modulation of human visual cortex by crossmodal spatial attention. Science. 2000;289:1206–1208. doi: 10.1126/science.289.5482.1206.

- Macaluso E, Frith C.D, Driver J. Directing attention to locations and to sensory modalities: multiple levels of selective processing revealed with PET. Cereb. Cortex. 2002;12:357–368. doi: 10.1093/cercor/12.4.357.

- Massaro D.W, Warner D.S. Dividing attention between auditory and visual perception. Percept. Psychophys. 1977;21:569–574.

- McAdams C.J, Maunsell J.H. Effects of attention on orientation-tuning functions of single neurons in macaque cortical area V4. J. Neurosci. 1999;19:431–441. doi: 10.1523/JNEUROSCI.19-01-00431.1999.

- Morrone M.C, Denti V, Spinelli D. Color and luminance contrasts attract independent attention. Curr. Biol. 2002;12:1134–1137. doi: 10.1016/s0960-9822(02)00921-1.

- Motter B.C. Focal attention produces spatially selective processing in visual cortical areas V1, V2, and V4 in the presence of competing stimuli. J. Neurophysiol. 1993;70:909–919. doi: 10.1152/jn.1993.70.3.909.

- Pashler H.E. The psychology of attention. MIT Press; Cambridge, MA: 1998.

- Posner M.I, Gilbert C.D. Attention and primary visual cortex. Proc. Natl Acad. Sci. USA. 1999;96:2585–2587. doi: 10.1073/pnas.96.6.2585.

- Potter M.C, Chun M.M, Banks B.S, Muckenhoupt M. Two attentional deficits in serial target search: The visual attentional blink and an amodal task-switch deficit. J. Exp. Psychol. Learn. Mem. Cogn. 1998;24:979–992. doi: 10.1037/0278-7393.24.4.979.

- Prinzmetal W, McCool C, Park S. Attention: reaction time and accuracy reveal different mechanisms. J. Exp. Psychol. Gen. 2005;134:73–92. doi: 10.1037/0096-3445.134.1.73.

- Recanzone G.H, Schreiner C.E, Merzenich M.M. Plasticity in the frequency representation of primary auditory cortex following discrimination training in adult owl monkeys. J. Neurosci. 1993;13:87–103. doi: 10.1523/JNEUROSCI.13-01-00087.1993.

- Ruthruff E, Pashler H.E. Perceptual and central interference in dual-task performance. In: Shapiro K, editor. The limits of attention: temporal constraints in human information processing. Oxford University Press; Oxford, UK: 2001. pp. 100–123.

- Santee J.L, Egeth H.E. Do reaction time and accuracy measure the same aspects of letter recognition? J. Exp. Psychol. Hum. Percept. Perform. 1982;8:489–501. doi: 10.1037/0096-1523.8.4.489.

- Seidemann E, Newsome W.T. Effect of spatial attention on the responses of area MT neurons. J. Neurophysiol. 1999;81:1783–1794. doi: 10.1152/jn.1999.81.4.1783.

- Shiffrin R.M, Grantham D.W. Can attention be allocated to sensory modalities? Percept. Psychophys. 1974;15:460–474.

- Somers D.C, Dale A.M, Seiffert A.E, Tootell R.B. Functional MRI reveals spatially specific attentional modulation in human primary visual cortex. Proc. Natl Acad. Sci. USA. 1999;96:1663–1668. doi: 10.1073/pnas.96.4.1663.

- Soto-Faraco S, Spence C. Modality-specific auditory and visual temporal processing deficits. Q. J. Exp. Psychol. 2002;55A:23–40. doi: 10.1080/02724980143000136.

- Spence C, Driver J. Audiovisual links in endogenous covert spatial attention. J. Exp. Psychol. Hum. Percept. Perform. 1996;22:1005–1030. doi: 10.1037/0096-1523.22.4.1005.

- Spence C, Driver J. Audiovisual links in exogenous covert spatial orienting. Percept. Psychophys. 1997a;59:1–22. doi: 10.3758/bf03206843.

- Spence C, Driver J. On measuring selective attention to an expected sensory modality. Percept. Psychophys. 1997b;59:389–403. doi: 10.3758/bf03211906.

- Spence C, Ranson J, Driver J. Cross-modal selective attention: on the difficulty of ignoring sounds at the locus of visual attention. Percept. Psychophys. 2000;62:410–424. doi: 10.3758/bf03205560.

- Spence C, Nicholls M.E, Driver J. The cost of expecting events in the wrong modality. Percept. Psychophys. 2001;63:330–336. doi: 10.3758/bf03194473.

- Swets J.A, Kristofferson A.B. Attention. Annu. Rev. Psychol. 1970;21:339–366. doi: 10.1146/annurev.ps.21.020170.002011.

- Taylor M.M, Lindsay P.H, Forbes S.M. Quantification of shared capacity processing in auditory and visual discrimination. Acta Psychol. 1967;27:223–229. doi: 10.1016/0001-6918(67)99000-2.

- Treue S, Maunsell J.H. Attentional modulation of visual motion processing in cortical areas MT and MST. Nature. 1996;382:539–541. doi: 10.1038/382539a0.

- Treisman A.M, Davies A. Divided attention to ear and eye. In: Kornblum S, editor. Attention and Performance. Academic Press; New York, NY: 1973. pp. 101–117.

- Tulving E, Lindsay P.H. Identification of simultaneously presented simple visual and auditory stimuli. Acta Psychol. 1967;27:101–109. doi: 10.1016/0001-6918(67)90050-9.

- Watson A.B, Pelli D.G. QUEST: a Bayesian adaptive psychometric method. Percept. Psychophys. 1983;33:113–120. doi: 10.3758/bf03202828.

- Wickens C.D. The structure of attentional resources. In: Attention and Performance. Erlbaum; Hillsdale, NJ: 1980.

- Wickens C.D, Liu Y. Codes and modalities in multiple resources: a success and a qualification. Hum. Factors. 1988;30:599–616. doi: 10.1177/001872088803000505.

- Woodruff P.W, Benson R.R, Bandettini P.A, Kwong K.K, Howard R.J, Talavage T, Belliveau J, Rosen B.R. Modulation of auditory and visual cortex by selective attention is modality-dependent. Neuroreport. 1996;7:1909–1913. doi: 10.1097/00001756-199608120-00007.

- Zenger-Landolt B, Heeger D.J. Response suppression in V1 agrees with psychophysics of surround masking. J. Neurosci. 2003;23:6884–6893. doi: 10.1523/JNEUROSCI.23-17-06884.2003.