Abstract

ReportTutor is an extension of our work on Intelligent Tutoring Systems for visual diagnosis. ReportTutor combines a virtual microscope and a natural language interface, allowing students to visually inspect a virtual slide as they type a diagnostic report on the case. The system monitors both actions in the virtual microscope interface and text created by the student in the reporting interface, and provides feedback about the correctness, completeness, and style of the report. ReportTutor uses MMTx with a custom data source created from the NCI Metathesaurus. A separate ontology of cancer-specific concepts structures the domain knowledge needed to evaluate the student's input, including co-reference resolution. As part of the early evaluation of the system, we collected data from 4 pathology residents who typed their reports without the tutoring aspects of the system, and compared their responses with those of an expert dermatopathologist. We analyzed the resulting reports to (1) identify the error rates and distribution among student reports, (2) determine the performance of the system in identifying features within student reports, and (3) measure the accuracy of the system in distinguishing between correct and incorrect report elements.

INTRODUCTION

We have previously described a general method for developing intelligent tutoring systems to teach visual classification problem solving 1,2, based on a cognitive model of expertise 3. SlideTutor is an instantiation of this general architecture in the domain of inflammatory diseases of the skin. The first formative evaluation of SlideTutor demonstrated a substantial benefit after a single use 4: diagnostic performance increased significantly on both multiple-choice and case diagnostic tests after four hours of working with the system, and learning gains were undiminished at retention testing one week later. In addition to the virtual microscope interface, SlideTutor includes a diagrammatic reasoning interface that lets students create graphical arguments for their diagnosis. The tutor corrects errors and provides help as students search for evidence, create hypotheses, and relate evidence to hypotheses, moving toward a final or differential diagnosis.

SlideTutor can be used with either a case-structured interface or a knowledge-structured interface 1,4. The case-structured interface emphasizes relationships within an individual case, whereas the knowledge-structured interface incorporates a unifying knowledge representation across all cases. However, both interfaces rely on invented notations for the hypothetico-deductive method. Natural language interfaces (NLI) provide a more natural way for students to interact with the system. Several intelligent tutoring systems that use NLI methods have been developed 5–7, including a sophisticated system for teaching cardiovascular physiology that is based on a method for supporting dialogue between student and system 8. Here we report on the development of an NLI that specifically analyzes and provides feedback on one of the most ubiquitous artifacts in clinical reasoning: the diagnostic report. ReportTutor is designed to help students learn how to analyze cases of Melanoma and write accurate and complete diagnostic reports of their findings. Melanoma incidence and death rates are increasing globally 9, and proper identification of pathologic prognostic factors is critical to providing correct therapy 10.

COGNITIVE TASK

As in SlideTutor, the central assumption of our architecture is that human cognition can be modeled by production rules. This assumption is based on a framework of human cognition that has accumulated abundant empirical validation 11. In particular, our Medical Tutoring Systems assume that mastery of clinical skills such as visual diagnosis can be dissected into component sub-skills, which the tutor can analyze to provide feedback and error correction. In ReportTutor, we extend our work beyond visual classification problem solving to the somewhat simpler task of reporting. Once the diagnosis has been determined, the student must identify disease-specific prognostic factors and report the required features in the Surgical Pathology Report. Almost all academic departments of Pathology now use the College of American Pathologists (CAP) Checklists 12 as a source of data elements (features) that must be included in cancer reports, such as those for Melanoma biopsies and resections. The CAP Cancer Checklists are required for accreditation by the American College of Surgeons Commission on Cancer. Table 1 shows some of the required elements for a surgical pathology report on a skin excision for Melanoma. The task in ReportTutor is to correctly identify and document all relevant prognostic factors.

Table 1.

Example CAP Data Elements and ReportTutor Goals

| Data Element | Value Domain Data Type | Events required to complete goal |
|---|---|---|
| Diagnosis (Histologic Subtype) | Enumerated | Tumor must be viewed and value correctly identified in report |
| Ulceration | Boolean | Feature must be viewed and value correctly identified in report |
| Breslow Thickness | Float | Feature must be measured at deepest extent using the micrometer and value correctly identified in report |
| Perineural Invasion | Boolean | Feature must be viewed and value correctly identified in report |
| Mitotic Index | Integer | Feature must be measured by counting mitoses in ten non-overlapping high-power fields and value (within error tolerance) correctly reported |
| Status of margin | Enumerated | Feature must be viewed in all locations and value correctly identified in report |
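Each data element in Table 1 carries a value domain type. As a minimal sketch, assuming typed report text is converted to a value of that type before being compared with the goal, the hypothetical helper below (`parse_value`) reads Boolean, Integer, Float and Enumerated values. The Boolean vocabulary and the enumerated value sets are assumptions, since the enumerated values themselves are not listed in the table.

```python
from typing import Optional, Set, Union

Value = Union[bool, int, float, str]

# Hypothetical vocabulary for Boolean data elements; a real system would take
# these terms from the domain ontology rather than a hard-coded table.
BOOLEAN_TERMS = {"present": True, "identified": True,
                 "absent": False, "not identified": False}


def parse_value(domain: str, text: str,
                allowed: Optional[Set[str]] = None) -> Optional[Value]:
    """Convert typed report text to a value of the data element's domain type.

    Returns None when the text cannot be read as a value of that type.
    """
    t = text.strip().lower()
    if domain == "Boolean":
        return BOOLEAN_TERMS.get(t)
    if domain == "Integer":
        return int(t) if t.isdigit() else None
    if domain == "Float":
        try:
            return float(t)
        except ValueError:
            return None
    if domain == "Enumerated":
        # The permitted values would come from the checklist; here they are passed in.
        return t if allowed and t in allowed else None
    raise ValueError(f"unknown value domain: {domain}")
```

Returning None rather than raising lets a caller treat unreadable text as a missing value, which maps naturally onto the "missing" category used in the error analysis.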

SYSTEM ARCHITECTURE AND IMPLEMENTATION

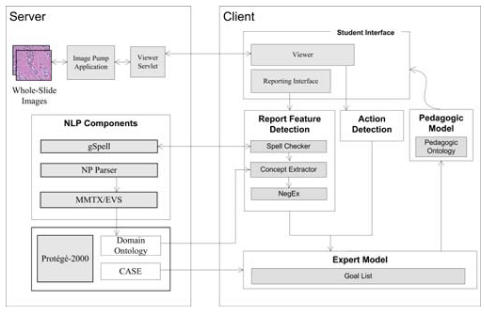

ReportTutor is implemented as a client-server system written in the Java programming language. The system architecture is shown in Figure 1. Virtual slides for ReportTutor are scanned with a commercial robotic scanner 13 and stored in the ReportTutor database. Before they can be used as ReportTutor cases, virtual slides must be authored with our existing SlideAuthor system. SlideAuthor is a Protégé 14 plug-in we developed that enables authors to annotate slides by creating a frame-based representation (CASE) of the salient features in each case. The ontology used to guide case authoring is identical to the one used by the tutoring system to analyze student input and to structure feedback in response to student errors and requests for help.

Figure 1.

System Architecture

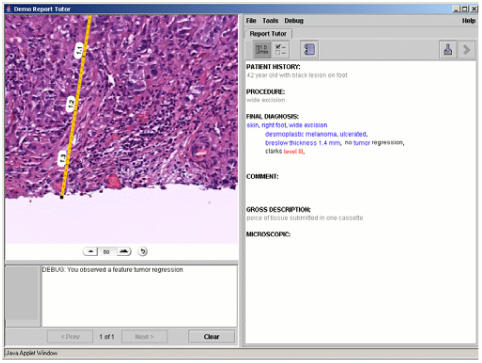

The ReportTutor student interface incorporates a commercial Java viewer (Figure 2 – left) that enables the student to pan and zoom within a very large image file, simulating the use of a traditional microscope. We added an embedded measuring tool that serves as a surrogate for the standard micrometer. The action detection component of the tutoring system monitors the student's actions to determine whether the student has observed particular features or performed particular actions (such as measuring) correctly.
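The paper does not specify how the action detection component decides that a feature has been "observed". A minimal sketch, assuming the viewer logs each screen state as a viewport rectangle in slide coordinates plus a magnification, and that a feature counts as observed when any logged viewport at or above a required magnification overlaps the feature's annotated region. `Rect`, `ViewEvent` and `feature_observed` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in slide pixel coordinates."""
    x: float
    y: float
    w: float
    h: float

    def intersects(self, other: "Rect") -> bool:
        return (self.x < other.x + other.w and other.x < self.x + self.w
                and self.y < other.y + other.h and other.y < self.y + self.h)


@dataclass(frozen=True)
class ViewEvent:
    """One logged viewer state: what was on screen and at what magnification."""
    viewport: Rect
    magnification: float


def feature_observed(events: Iterable[ViewEvent], region: Rect,
                     min_magnification: float) -> bool:
    """True if any logged view overlapped the annotated feature region
    at or above the required magnification."""
    return any(e.magnification >= min_magnification and e.viewport.intersects(region)
               for e in events)
```

Requiring a minimum magnification reflects the idea that some features (for example mitoses) cannot be judged at scanning power; the threshold per feature would be an authoring decision.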

Figure 2.

Student Interface showing measuring tool

The pedagogic model of the tutoring system can also control the viewer to point out particular features or perform particular actions as they are needed in the pedagogic exchange.

The student interface also incorporates an interactive text entry area (Figure 2 – right) that displays the major section headings of a generic pathology report. Some sections are static and predefined from the case representation (e.g., clinical history, gross description), while others are empty, editable text fields for the student to complete (final diagnosis, microscopic description, comment). The report feature detection component of the tutoring system monitors the report text entered by the student to determine whether the student has identified particular features, by extracting known concepts from the typed text and matching them to the domain ontology. These concepts are first organized into features and related attributes (name and value) and then converted to a data element representation. Data elements are checked for correctness against the data elements in the goal list, and the pedagogic model provides appropriate feedback.

The action detection component monitors the student's virtual microscope actions, such as measuring with the micrometer or observing a prognostic feature at a particular location and magnification. These actions are also converted to the data element representation and treated by the system as another feature attribute. For example, when the student measures depth of invasion with the micrometer in the virtual microscope and then types "depth of invasion is 1.42 mm" into the report, the system constructs a single data element with the feature "depth of invasion", a size attribute of type "float" with value "1.42", a unit attribute "mm", and an action attribute "measure depth". Each part of this data element is then compared with the correct data element in the goal list, and appropriate feedback is generated if any part is incorrect or missing.
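A minimal sketch of the data element described above, assuming a data element is simply a feature name plus a dictionary of attributes, and that the microscope action is stored as one extra `action` attribute. `DataElement`, `merge_action` and `compare` are hypothetical names, not ReportTutor's classes, and values are compared exactly here for brevity.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class DataElement:
    """A feature name plus named attributes (e.g. size, unit, action)."""
    feature: str
    attributes: Dict[str, Any] = field(default_factory=dict)


def merge_action(element: DataElement, action: Optional[str]) -> DataElement:
    """Add a virtual-microscope action to a report-derived data element
    as one more attribute."""
    attrs = dict(element.attributes)
    if action is not None:
        attrs["action"] = action
    return DataElement(element.feature, attrs)


def compare(student: DataElement, goal: DataElement) -> Dict[str, str]:
    """Map each problematic part of the student's element to 'missing' or
    'incorrect'; an empty dict means the element matches the goal.
    Values are compared exactly; numeric tolerance is left out."""
    if student.feature != goal.feature:
        return {"feature": "incorrect"}
    problems: Dict[str, str] = {}
    for name, expected in goal.attributes.items():
        if name not in student.attributes:
            problems[name] = "missing"
        elif student.attributes[name] != expected:
            problems[name] = "incorrect"
    return problems
```

For the depth-of-invasion example, the typed text yields `{"size": 1.42, "unit": "mm"}` and the micrometer use adds `"action": "measure depth"`; each key that is absent or different from the goal becomes a candidate for a specific feedback message.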

The pedagogic model provides context-dependent feedback to students. When the student requests a hint, the pedagogic model guides the student toward completing the goal highest in the goal stack. When students make mistakes, bug messages help remediate the error and guide the student back to the solution path. Hints and bug messages are defined, for each generic situation in which they apply, in a separate Protégé ontology.
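The hint policy can be sketched as a stack whose top element is always the target of the next hint. This is a minimal illustration, assuming goals are plain strings and that the hint text is a placeholder; in ReportTutor the actual messages come from the hint ontology. `GoalStack` is a hypothetical name.

```python
from typing import List, Optional


class GoalStack:
    """Case goals ordered so that the last element is the top of the stack."""

    def __init__(self, goals: List[str]):
        self._goals = list(goals)

    def complete(self, goal: str) -> None:
        """Remove a goal once it has been achieved."""
        if goal in self._goals:
            self._goals.remove(goal)

    def hint(self) -> Optional[str]:
        """Return a (placeholder) hint for the highest remaining goal, or None."""
        if not self._goals:
            return None
        return f"Hint: work on the goal '{self._goals[-1]}'"
```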

At the beginning of each case, ReportTutor creates a list of goals from the Protégé CASE representation. The goal list is not displayed to the student, and goals are removed once completed. Individual goals may relate to actions only, to actions combined with report features, or to report features only. As the student types the report, the report feature detection component sends each sentence (delimited by a period) or sentence fragment (delimited by a comma or carriage return) to the server, where it is spell-checked, parsed into noun phrases, and matched to the controlled terminology. Spell-checking is performed with gSpell 15, and noun phrases are matched to the NCI Metathesaurus with MMTx 16. The resulting concepts are filtered through our domain ontology, and all recognized concepts are sent back to the report feature detection component. Values of numeric attributes are tagged, and NegEx is used on the client side to determine the presence or absence of concepts. Text that matches concepts in the domain ontology is highlighted in blue when correct and in red when incorrect. When the student mouses over highlighted text, additional information from the controlled terminology is displayed.
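The two client-side text steps can be sketched as follows; the gSpell and MMTx server steps are not reproduced. Segmentation follows the rule in the text (period for a sentence, comma or carriage return for a fragment), with one added assumption: a period between two digits is treated as a decimal point so that "1.42 mm" stays intact. `is_negated` is a drastically simplified stand-in for NegEx, not NegEx itself; the trigger lists and the five-word window are assumptions, and the real algorithm uses a much larger curated trigger set.

```python
import re
from typing import List, Sequence

# A period ends a sentence unless it sits between two digits (a decimal point);
# a comma or line break ends a fragment.
_BOUNDARY = re.compile(r"(?<!\d)\.|\.(?!\d)|[,\n]")

# Tiny, hypothetical trigger lists; real NegEx uses a much larger curated set.
PRE_TRIGGERS = ("no", "without", "negative for")
POST_TRIGGERS = ("absent", "not identified", "not seen")


def segments(text: str) -> List[str]:
    """Split report text into the sentences/fragments sent to the server."""
    return [s.strip() for s in _BOUNDARY.split(text) if s.strip()]


def _has_trigger(words: List[str], triggers: Sequence[str]) -> bool:
    span = " ".join(words)
    return any(re.search(rf"\b{re.escape(t)}\b", span) for t in triggers)


def is_negated(fragment: str, concept: str, window: int = 5) -> bool:
    """Simplified NegEx-style test: is the concept preceded or followed,
    within `window` words, by a negation trigger?"""
    text = fragment.lower()
    idx = text.find(concept.lower())
    if idx < 0:
        return False
    before = text[:idx].split()[-window:]
    after = text[idx + len(concept):].split()[:window]
    return _has_trigger(before, PRE_TRIGGERS) or _has_trigger(after, POST_TRIGGERS)
```

Usage: `segments("Malignant melanoma, superficial spreading type. Depth of invasion is 1.42 mm\nNo ulceration")` produces four fragments, and `is_negated("No ulceration", "ulceration")` flags the concept as absent.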

Data elements extracted from the report and the virtual microscope are continuously sent to the expert model, which evaluates them against the goal list. This complex task can be performed in many sequences. Students typically go back and forth between viewing the slide and typing the report, but may perform individual steps in many different orders. Thus, ReportTutor cannot always rely on the sequence of events to infer what the student is trying to do. For example, the student might look at the slide to determine all or some of the values in Table 1 before including any of them in the report. ReportTutor therefore takes a best-case view of student performance: if the student has performed the actions associated with a particular goal, the goal is considered completed once the corresponding text feature, attribute and value have been entered. As a result, we may miss cognitive errors that happen to be associated with correct responses (guesses); however, this was considered preferable to being overly critical when student actions were ambiguous.

The expert model evaluates input from both the viewer action detection and report feature detection components to determine their relationship to the goal list. For example, once the student has viewed an area of ulceration and has indicated that ulceration is present, the goal of identifying ulceration is achieved and removed from the goal stack. Correctly identified features, attribute names and attribute values turn blue in the reporting interface as they are recognized, whereas incorrectly identified features turn red and generate feedback specific to the kind of error. Current error categories and example messages are shown in Table 2. Errors related to inaccurate searching and feature identification in the virtual microscope can therefore be distinguished from those involving incorrect interpretation or poor reporting practices.
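The best-case, order-independent policy described in the two paragraphs above can be sketched as a goal that records which required events have been seen and is removed as soon as all of them have occurred, in whatever order they arrived. This is a minimal sketch under that assumption; `Goal` and `ExpertModel` are hypothetical names, and events are reduced to a goal name rather than full data elements.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Goal:
    name: str
    needs_action: bool   # e.g. feature must be viewed or measured
    needs_report: bool   # feature/attribute/value must appear in the report
    seen_action: bool = False
    seen_report: bool = False

    def done(self) -> bool:
        return ((not self.needs_action or self.seen_action)
                and (not self.needs_report or self.seen_report))


class ExpertModel:
    """Order-independent goal tracking: a goal is completed by whichever
    required event arrives last, and is then removed."""

    def __init__(self, goals: List[Goal]):
        self.goals: Dict[str, Goal] = {g.name: g for g in goals}

    def on_action(self, name: str) -> Optional[str]:
        return self._record(name, "seen_action")

    def on_report(self, name: str) -> Optional[str]:
        return self._record(name, "seen_report")

    def _record(self, name: str, flag: str) -> Optional[str]:
        goal = self.goals.get(name)
        if goal is None:
            return None           # not a (remaining) case goal
        setattr(goal, flag, True)
        if goal.done():
            del self.goals[name]  # achieved: remove from the goal list
            return name
        return None
```

Covering all three goal kinds (action only, report only, or both) with two flags keeps the policy uniform; the trade-off, as the text notes, is that a correct value typed without any real inspection of the slide is only caught if the goal also demands an action.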

Table 2.

Error categories and example errors

| Process | Error Remediated by Tutor |
|---|---|
| Feature Identification (Feature) | Prognostic feature is not present in this case |
| | Prognostic feature was not observed or measured on the slide |
| | Prognostic feature was not measured correctly |
| | Prognostic feature was not observed or measured completely |
| | Prognostic feature is not specific enough |
| Feature Refinement (Attributes) | No attribute values were provided |
| | This attribute is not associated with this feature |
| | Incorrect value typed for the correct attribute |
| | Given attribute is not specific enough |
| Feature Observation or Measurement (Action) | Measurement performed in incorrect location |
| | Right tissue is being measured, but not in the optimal location |
| | Right tissue is being measured, but micrometer did not reach tissue borders |
| | Not all regions belonging to this feature were observed |
| | Fewer than 10 high-power fields were counted |

When students are lost, they can ask for help and receive context-specific hints; the tutor directs its hints to the highest goal in the stack. Hint states are similar to those previously described for SlideTutor. When students have completed the report, they must submit it to finalize the interaction. Upon submission, ReportTutor checks the report for completeness and style. Errors that can be identified at submission include missing features and attributes, non-standard ordering of features, and excessive wordiness. Each case concludes with summary feedback on student performance.
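The three submission-time checks (missing features, non-standard ordering, wordiness) can be sketched as below. The paper does not say how ordering or wordiness are measured, so this assumes a standard feature order (for example, checklist order), treats wordiness as a word count above an arbitrary placeholder limit of 150, and reports messages as plain strings. `check_submission` is a hypothetical name.

```python
from typing import List, Sequence


def check_submission(reported_features: Sequence[str],
                     required: Sequence[str],
                     standard_order: Sequence[str],
                     word_count: int,
                     max_words: int = 150) -> List[str]:
    """Return style/completeness messages for a submitted report.

    reported_features must be listed in the order they appear in the report.
    max_words is a placeholder threshold, not a value from the paper.
    """
    messages: List[str] = []
    missing = [f for f in required if f not in reported_features]
    if missing:
        messages.append("Missing: " + ", ".join(missing))
    # Ordering: the ranks of reported features should be non-decreasing.
    rank = {f: i for i, f in enumerate(standard_order)}
    ranks = [rank[f] for f in reported_features if f in rank]
    if ranks != sorted(ranks):
        messages.append("Features are not in the standard order")
    if word_count > max_words:
        messages.append("Report is too wordy")
    return messages
```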

PILOT STUDY OF REPORT FEATURE DETECTION

In our other interfaces to SlideTutor, students must unambiguously specify each feature and its attributes from a set of ontologies. In ReportTutor, they use a natural language interface that may under-detect or over-detect features, and may assign attributes and values to these features incorrectly. ReportTutor and SlideTutor also target different cognitive tasks. For a system like ReportTutor, evaluation is complex because performance on ‘gold-standard’ expert reports cannot be assumed to reflect performance on student reports. Therefore, our first pilot study of ReportTutor was designed to (1) investigate errors and omissions made by students in reporting features, and (2) determine the precision and recall of the report feature detection component.

METHODS

Subjects

We recruited four pathology residents from different residency programs to participate in the study. The use of human subjects was approved by the University of Pittsburgh Institutional Review Board (protocol # 0307023).

Materials

Students viewed three different cases of Melanoma – two cases of invasive melanoma and one case of in-situ melanoma.

Data collection

Students used a version of the tutoring system that provided no feedback at all: they viewed the virtual slide and typed their report without the benefit of tutoring, so that we could analyze both naturally occurring student errors and the performance of the report feature detection system without confounding the data. We maintained a separate log file that captured all student actions and input text.

As a gold standard for student performance, we asked an expert dermatopathologist (DJ) to view the same three cases with the virtual microscope. The expert first dictated a diagnostic report that we captured as a text file. After all cases were dictated, he separately indicated the features, attribute names and attribute values that ReportTutor is designed to detect (based on the CAP protocol data elements). These values were used as the “gold standard” against which we measured student performance in identifying features, attributes and values.

Using the discrete data obtained from the expert, we asked a second pathologist (not a member of our research group) to manually code each student report for (1) whether each feature was present, (2) whether the attribute for the feature was present, and (3) whether the value for the attribute was correct. These data were then used to measure the performance of the system in detecting features and distinguishing correct from incorrect data elements.

We determined error frequencies for all of ReportTutor’s features and attributes, computed the precision and recall of the report feature detection component, and analyzed the kinds of false positive and false negative system errors.

RESULTS

All students completed all cases in the study. Student reports had a mean word count of 53.3 ± 64.9. Reports ranged from extremely sparse to very wordy. Students used a variety of narrative structures, ranging from short sentence fragments separated by carriage returns to complete paragraphs of prose.

Table 3 shows student performance in identifying key features present in the expert report. For each feature, the table shows the total number of times the feature was mentioned in any relevant case, regardless of whether its value was correct, incorrect, or missing. With the exception of the overall diagnosis, depth of invasion and margin status, most students did not mention important prognostic factors at all, including ulceration, growth phase, venous invasion, perineural invasion, lymphocytic response, and regression.

Table 3.

Student Identification of Features

| Feature | Identified |
|---|---|
| Diagnosis | 12/12 (100%) |
| Depth of Invasion | 6/8 (75%) |
| Margins | 6/12 (50%) |
| Mitotic Index | 2/8 (25%) |
| Solar Elastosis | 3/12 (25%) |
| Clark's Level | 2/12 (17%) |
| Nevus remnant (pre-existing) | 2/12 (17%) |
| Ulceration | 1/12 (8%) |
| Growth phase | 0/8 (0%) |
| Venous Invasion | 0/8 (0%) |
| Perineural Invasion | 0/8 (0%) |
| Tumor-Infiltrating Lymphocytes | 0/8 (0%) |
| Tumor Regression | 0/12 (0%) |
| Tumor Staging (pTNM) | 0/12 (0%) |

Many features in Table 3 require more than one cognitive step. For example, depth of invasion requires both a size (generally reported to the 2nd significant digit) and a unit of measure (usually mm). For these features, we separately determined whether students mentioned the attribute for each step (for example, any depth) and whether the student's response was within the error threshold of the gold-standard value (e.g., 0.3 ± 0.1 mm). Results for features with multiple attributes are shown in Table 4. Errors were frequent in student reports and included both missing and incorrect values.
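The error-threshold comparison can be sketched for depth of invasion, using the 0.3 ± 0.1 mm example. This is a minimal sketch, assuming the reported value is converted to millimetres first and that a missing or unrecognized unit counts as incorrect; the unit table and the name `depth_correct` are hypothetical. It also shows why a naive `abs(a - b) <= tol` test is fragile with floating-point values at the boundary.

```python
import math

# Hypothetical conversion table to millimetres.
TO_MM = {"mm": 1.0, "cm": 10.0, "um": 0.001, "µm": 0.001}


def depth_correct(value: float, unit: str, gold_mm: float, tol_mm: float) -> bool:
    """True if a reported depth, converted to mm, lies within +/- tol_mm of the
    gold-standard value. A missing or unrecognized unit counts as incorrect."""
    factor = TO_MM.get(unit.strip().lower())
    if factor is None:
        return False
    diff = abs(value * factor - gold_mm)
    # isclose() absorbs floating-point error exactly at the boundary,
    # e.g. abs(0.4 - 0.3) evaluates to 0.10000000000000003.
    return diff <= tol_mm or math.isclose(diff, tol_mm)
```

Table 4 scores size and units separately; a fuller version would return two verdicts instead of folding the unit check into one boolean.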

Table 4.

Student Identification of Attributes and Values

| Feature Identified | Attribute | Total N | Correct | Incorrect | Missing |
|---|---|---|---|---|---|
| Diagnosis | Major Diagnosis | 12 | 10 | 2 | 0 |
| | Histologic Subtype | 12 | 1 | 2 | 9 |
| | Invasiveness | 20 | 7 | 0 | 13 |
| Depth of Invasion | Size | 8 | 4 | 2 | 2 |
| | Units | 8 | 4 | 2 | 2 |
| Margins | Location | 24 | 7 | 0 | 17 |
| | Invasiveness of margin lesion | 12 | 0 | 0 | 12 |
| Mitotic Index | Count | 8 | 1 | 1 | 6 |
| | Units | 8 | 1 | 1 | 6 |
| Clark's Level | Level | 12 | 2 | 0 | 10 |

In the 12 student reports, we identified a total of 95 concepts, including features, attributes and values. The total included all elements present, regardless of whether they were case goals and whether they were correct. We determined the performance of the Report Feature Detector (RFD) in recognizing these concepts. The precision of the RFD was 0.97 and the recall was 0.65. We further analyzed the recall errors and identified a set of potential causes, including unresolved synonyms, concepts split across multiple noun phrases, concepts implied by the report context, and concepts merged into a single string. For comparison, we also computed performance metrics for the RFD against the expert's dictated reports, which contained a richer set of concepts. Precision was predictably lower at 0.87, and recall was nearly equivalent at 0.65.
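Precision and recall here follow the standard definitions. Writing TP for concepts present in a report and detected by the RFD, FP for detections that do not correspond to a concept present in the report, and FN for present concepts that the RFD missed:

$$
\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}
$$

Assuming the 95 coded concepts form the recall denominator (TP + FN = 95), a recall of 0.65 corresponds to roughly 0.65 × 95 ≈ 62 detected concepts, while the high precision means very few detections had no counterpart in the coded reports.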

We also measured the ability of the system to determine the correctness of the student’s response. A total of 41 of the 95 recognized concepts were case goals. Most of these (92%) were coded by the expert coder as correct. Over 20% of these correct concepts were erroneously judged incorrect by the RFD (marked with asterisks in Table 5). The main reason the system failed to recognize correct attributes was that it did not detect the feature concept to which those attributes belonged. For example, if the "depth of invasion" concept was not detected, any of its attributes that were detected, such as size, were marked as incorrect because they did not belong to any known feature. This problem accounts for almost 95% of all failures. Further work is needed to limit false errors that would produce inappropriate bug messages.
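The dominant failure mode, attributes left without a parent feature, can be illustrated with a simple attachment rule. This sketch assumes that each detected attribute is attached to the most recently detected feature it is allowed to belong to; the actual RFD uses the domain ontology and co-reference resolution, which this does not reproduce. `attach` and the `allowed` mapping are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def attach(concepts: List[tuple],
           allowed: Dict[str, Set[str]]) -> Tuple[Dict[str, dict], List[tuple]]:
    """Attach detected attributes to the most recently detected feature.

    concepts: in text order, either ("feature", name) or ("attribute", name, value).
    allowed:  attribute name -> features it may belong to (stand-in for the ontology).
    Returns (elements, orphans); orphans have no known feature and would be
    flagged as incorrect even when their values are right.
    """
    elements: Dict[str, dict] = {}
    orphans: List[tuple] = []
    current = None
    for kind, name, *rest in concepts:
        if kind == "feature":
            current = name
            elements.setdefault(name, {})
        elif current is not None and current in allowed.get(name, set()):
            elements[current][name] = rest[0]
        else:
            orphans.append((name, rest[0]))
    return elements, orphans
```

If "depth of invasion" is missed, the correct size and unit both become orphans, which is exactly the false-error pattern reported above.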

DISCUSSION

We have described the early development of an intelligent tutoring system that uses a natural language interface. The work extends our ongoing efforts to develop highly individualized training systems for the education of health professionals. The architecture of the system is general and could be used in any area of pathology where reporting guidelines or standards have been developed. In particular, it should be feasible to extend the paradigm to other areas covered by the CAP protocols with little additional programming effort.

In our first pilot evaluation of the system, we observed a high error rate among students when the content of their reports was compared with a gold standard using expert human coding. Student errors included complete absence of features, incorrect features, absent attributes, incorrect attributes, absent values and incorrect values. ReportTutor is designed to remediate all of these errors with specific error messages and advice.

For this limited pilot study, the Report Feature Detector performed within an acceptable range; however, more work is needed to increase recall and to limit errors in assessing correctness. The high precision we measured is likely to decline as more complex documents containing more complex features are included in the test set. The current study was limited to evaluating the RFD without system interaction: students did not receive tutoring feedback. In general, this scenario may provide a lower bound on performance, if use of the tutoring system ultimately drives students toward reporting practices that are easier for the system to parse and recognize.

LIMITATIONS

This was a pilot study and is therefore limited by the relatively small pool of subjects and cases. Further work is needed to determine whether the present data generalize to other document sets and other users. Additionally, the system was not tested in its fully interactive mode, which requires a more complex and potentially error-prone text parse.

FUTURE WORK

ReportTutor will continue to undergo frequent evaluation and versioning targeting both the natural language and the human-computer interaction aspects of the system. Additional NLP tools are scheduled to be added. In the short term, the system is expected to be fully integrated into SlideTutor and evaluated for its effect on learning by late 2005. Our long-term goal is to improve our methods for interpreting and evaluating student report text, and then to begin incorporating methods for true system-student dialogue.

Table 5.

Detection of Correct and Incorrect Features

| Correctly recognized concepts | N (%) |
|---|---|
| Coded by pathologist as correct | 38 (92%) |
| System recognized as correct | 30 (78%) |
| System recognized as incorrect** | 8 (22%) |
| Coded by pathologist as incorrect | 3 (8%) |
| System recognized as correct | 0 (0%) |
| System recognized as incorrect | 3 (100%) |

ACKNOWLEDGEMENTS

Work on ReportTutor is supported by a grant from the National Cancer Institute (R25 CA101959). This work was conducted using the Protégé resource, which is supported by grant LM007885 from the United States National Library of Medicine. We gratefully acknowledge the contribution of the SPECIALIST NLP tools provided by the National Library of Medicine. We thank Olga Medvedeva, Elizabeth Legowski, Jonathan Tobias, and Maria Bond for their help with this project.

REFERENCES

- 1. Crowley RS, Medvedeva O. An intelligent tutoring system for visual classification problem solving. Artificial Intelligence in Medicine (in press).

- 2. Crowley RS, Medvedeva OP. A General Architecture for Intelligent Tutoring of Diagnostic Classification Problem Solving. Proc AMIA Symp. 2003:185–189.

- 3. Crowley RS, Naus GJ, Stewart J, Friedman CP. Development of Visual Diagnostic Expertise in Pathology – An Information Processing Study. J Am Med Inform Assoc. 2003;10(1):39–51. doi: 10.1197/jamia.M1123.

- 4. Crowley RS, Legowski E, Medvedeva O, Tseytlin E, Roh E, Jukic DM. An ITS for medical classification problem-solving: Effects of tutoring and representations. Submitted to AIED 2005.

- 5. VanLehn K, Jordan PW, Rosé CP, et al. The architecture of Why2-Atlas: A coach for qualitative physics essay writing, in: S. A. Cerri, G. Gouardères, and F. Paraguaçu, eds., Proc. ITS 2002; Springer-Verlag, Berlin, pp 158–167, 2002.

- 6. Graesser AC, Wiemer-Hastings K, Wiemer-Hastings P, Kreuz R, the Tutoring Research Group. AutoTutor: A simulation of a human tutor. Journal of Cognitive Systems Research. 1999;1:35–51.

- 7. Aleven V. Using background knowledge in case-based legal reasoning: A computational model and an intelligent learning environment. Artificial Intelligence. 2003;150:183–237.

- 8. Kim N, Evens MW, Michael JA, Rovick AA. CIRCSIM-TUTOR: An intelligent tutoring system for circulatory physiology, in: H. Mauer, ed., Computer Assisted Learning, Proceedings of the International Conference on Computer Assisted Learning; Springer-Verlag, Berlin, pp 254–266, 1989.

- 9. Lens MB, Dawes M. Global perspectives of contemporary epidemiological trends of cutaneous malignant melanoma. Br J Dermatol. 2004;150:179–85. doi: 10.1111/j.1365-2133.2004.05708.x.

- 10. Scolyer RA, Thompson JF, Stretch JR, Sharma R, McCarthy SW. Pathology of melanocytic lesions: new, controversial, and clinically important issues. J Surg Oncol. 2004;86(4):200–11. doi: 10.1002/jso.20083.

- 11. Anderson JR. Rules of the Mind. Hillsdale, NJ: Lawrence Erlbaum Associates; 1993.

- 12. http://www.cap.org/apps/docs/cancer_protocols/protocols_index.html

- 13. http://www.aperio.com/

- 14. http://protege.stanford.edu/

- 15. http://specialist.nlm.nih.gov/nls/GSpell_web/

- 16. Aronson A. Effective Mapping of Biomedical Text to the UMLS Metathesaurus: The MetaMap Program. Proc AMIA Symp 2001, pp 17–21.