Abstract

Background

Evidence-based medicine (EBM) attempts to narrow the gap between knowledge and practice, but ready access to evidence-based resources remains a challenge to practicing physicians.

Objective

To describe a new EBM information delivery service, the design of the trial evaluating it, and baseline data.

Methods

McMaster PLUS (Premium Literature Service), composed of a continuously updated database and web-based interface, delivers scientifically rigorous and clinically relevant research literature matched to individual physicians’ clinical interests. A cluster randomized controlled trial is currently underway, comparing 2 versions of the PLUS system.

Results

As of Feb 2005, the PLUS database contained over 5700 scientifically sound, clinically relevant articles, almost all published from 2001 to the present. Sixty-eight percent of articles have full text links. Over 200 physicians in Northern Ontario have been cluster-randomized, by community, to one of 2 PLUS interfaces.

Conclusion

McMaster PLUS has been designed to help physicians home in on high-quality research that is highly relevant and important to their own clinical practice.

Introduction

Clinical encounters generate many questions about the treatment, diagnosis, prognosis and cause of disease1. Increasingly, physicians turn to the Internet to satisfy their information needs2,3.

Many attempts have been made to provide physicians with online EBM information resources via digital libraries4 and web-based products and services5,6, including those geared to rural and remote physicians7. Physicians want quick, easy access to well-organized resources that offer both summary and full formats and content derived from reputable sources2,8.

A standardized approach to evaluating digital libraries is still lacking9; however, several initiatives are working toward this end10,11. A variety of evaluation methods have been employed, including self-reported satisfaction by survey, interview or focus group12, controlled testing in laboratories13, log analysis of system use4,7, formative and summative usability testing, and cognitive task analysis14. Fewer evaluations of health information systems employ what Friedman et al15 call the objectivist approach, which includes comparison-based, controlled trial studies. It is widely held that a multi-method approach to evaluation produces valuable, well-rounded evidence of a system's effectiveness16.

We have created a new evidence-based information delivery service, McMaster Premium Literature Service (PLUS), to address physicians’ need for current best evidence. PLUS is unique in that the only articles presented to end users are those that have been critically appraised for scientific methodology by expert researchers and then rated for clinical relevance and newsworthiness by an international panel of clinician raters. PLUS uses both push (email alerts) and pull (search engine) mechanisms to distribute clinical literature, and users can focus either mechanism on particular disciplines within primary care, internal medicine and its subspecialties.

PLUS is being piloted with physicians in Northern Ontario in collaboration with the Northern Ontario Virtual Library (NOVL). A cluster randomized controlled trial comparing 2 versions of the PLUS system is currently underway.

Methods

McMaster PLUS

PLUS extends the activities associated with producing 2 evidence-based summary journals, Evidence-Based Medicine and ACP Journal Club (ACPJC). Research staff in the Health Information Research Unit at McMaster University, Hamilton, Ontario, Canada perform weekly hand searches of more than 120 clinical journals (about 50,000 articles/year). Articles are selected if they address one or more of the following purposes: etiology, prognosis, diagnosis, therapy and prevention, clinical prediction, economics, quality improvement, or differential diagnosis, and if they pass explicit criteria for scientific merit17. The article selection process has high reproducibility (kappa >0.80) and undergoes periodic quality assurance checks17. This critical appraisal process filters out about 95% of published studies because they are off topic, methodologically weak, or lack clinical endpoints, leaving about 2500 “pass” articles per year.

Each “pass” article’s citation, abstract and National Library of Medicine Medical Subject Headings (MeSH) enter the McMaster Online Rating of Evidence (MORE) database through an online request to PubMed for the article’s MEDLINE record. Each eligible article is tagged with one or more clinical disciplines for which it might be pertinent (e.g., internal medicine) and assigned a purpose category (e.g., treatment).
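The text does not say which PubMed interface the MORE database uses for its MEDLINE request. As a minimal sketch, assuming NCBI's E-utilities `efetch` endpoint with `rettype=medline`, the request URL and a parser for the returned MEDLINE-format text could look like this. The function names and the sample record are hypothetical, and no network call is made.

```python
from urllib.parse import urlencode

# NCBI E-utilities efetch endpoint (an assumption; the paper only says "an online request to PubMed")
EFETCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/efetch.fcgi"


def medline_request_url(pmid: str) -> str:
    """Build a hypothetical efetch URL returning one record in MEDLINE text format."""
    params = {"db": "pubmed", "id": pmid, "rettype": "medline", "retmode": "text"}
    return f"{EFETCH_URL}?{urlencode(params)}"


def parse_medline(text: str) -> dict:
    """Parse MEDLINE-format text into {tag: [values]}.

    Each field starts with a tag padded to 4 characters followed by "- ";
    continuation lines are indented with 6 spaces and are appended to the
    previous value. Repeated tags (e.g. MH for MeSH headings) become lists.
    """
    fields = {}
    last_tag = None
    for line in text.splitlines():
        if line.startswith("      ") and last_tag is not None:
            fields[last_tag][-1] += " " + line.strip()  # continuation line
        elif len(line) >= 6 and line[4:6] == "- ":
            last_tag = line[:4].strip()
            fields.setdefault(last_tag, []).append(line[6:].strip())
    return fields


# Illustrative record only (placeholder PMID, not a real citation)
sample = """PMID- 00000000
TI  - An example trial title that is long enough
      to wrap onto a second line.
AB  - Example abstract text.
MH  - Humans
MH  - Primary Health Care
"""
record = parse_medline(sample)
```

The citation (`TI`), abstract (`AB`) and MeSH headings (`MH`) are the three pieces of data the text says enter MORE; discipline tags and purpose category are added afterwards by staff and are not part of the MEDLINE record.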

The MORE rating panel currently comprises more than 2000 practicing physicians in primary care, internal medicine and its subspecialties, with some representatives from other major specialties. MORE raters receive email notification and use the MORE online rating interface to access full text articles in their discipline; they submit their ratings and optional personal comments through the same interface. If the average ratings for both clinical relevance and newsworthiness are 3 or above (on a 7-point scale) for at least one discipline, the article is moved from the MORE database to the PLUS database. The rating process further distills the 2500 “pass” articles per year (already a 95% “noise” reduction) to about 20 of the most important articles per year for a given clinical discipline, an overall “noise” reduction of 99.96% (20 of 50,000 articles retained).
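The promotion rule and the "noise" figures above can be checked with a short sketch. The function names are hypothetical, and the sketch assumes each discipline's ratings are simply averaged; the minimum number of raters required before a decision is not stated in the text and is not modelled.

```python
from __future__ import annotations

from statistics import mean

THRESHOLD = 3.0  # ratings are on a 1-7 scale; both averages must reach 3


def promote_to_plus(ratings: dict[str, list[tuple[int, int]]]) -> bool:
    """Return True if the article qualifies for the PLUS database.

    `ratings` maps a clinical discipline to the (relevance, newsworthiness)
    pairs submitted by raters in that discipline. One qualifying discipline
    is enough.
    """
    for pairs in ratings.values():
        if not pairs:
            continue  # no ratings yet for this discipline
        relevance = mean(r for r, _ in pairs)
        newsworthiness = mean(n for _, n in pairs)
        if relevance >= THRESHOLD and newsworthiness >= THRESHOLD:
            return True
    return False


def noise_reduction(kept: int, screened: int) -> float:
    """Percentage of screened articles that are filtered out."""
    return 100 * (1 - kept / screened)


appraisal_only = noise_reduction(2500, 50_000)  # critical appraisal step
overall = noise_reduction(20, 50_000)           # appraisal plus rating, per discipline
```

Requiring both averages in the same discipline, rather than the best relevance in one discipline and the best newsworthiness in another, is the reading assumed here.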

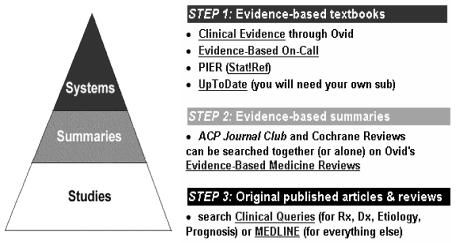

PLUS end users access McMaster PLUS through a web interface whose key features include personal profile setup, email alerts, a search engine and the Pyramid of Evidence, a hierarchical approach to organizing evidence-based resources. PLUS users register online and create a personal profile composed of basic contact information, clinical discipline selection(s) and CME credit selection.

PLUS email alerts point users to new content (original articles and systematic reviews) that matches their clinical discipline(s). Alerts can be delivered at intervals of 1 to 7 days. An interactive tool in PLUS shows the expected number of articles per month at a given cutoff level for clinical relevance and newsworthiness, based on the user’s clinical discipline profile. Users can then select cutoff scores to receive a manageable volume of alerted articles.
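The text does not say how the interactive tool computes its estimate. One plausible approach, sketched here under that assumption, is to count how many past PLUS articles would have passed the chosen cutoffs for the user's disciplines and divide by the number of months of history. The `Rating` record and `expected_per_month` function are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rating:
    article_id: str
    month: str             # month the article entered PLUS, e.g. "2004-11"
    discipline: str
    relevance: float       # mean rater score for this discipline, 1-7 scale
    newsworthiness: float  # mean rater score for this discipline, 1-7 scale


def expected_per_month(history: list[Rating], profile: set[str],
                       relevance_cutoff: float, news_cutoff: float) -> float:
    """Average monthly number of past articles that would have been alerted."""
    months = {r.month for r in history}
    if not months:
        return 0.0
    # An article rated in several of the user's disciplines is alerted only once.
    alerted = {
        r.article_id
        for r in history
        if r.discipline in profile
        and r.relevance >= relevance_cutoff
        and r.newsworthiness >= news_cutoff
    }
    return len(alerted) / len(months)
```

Raising either cutoff can only shrink the `alerted` set, so the estimate falls monotonically as the user tightens the filter, which is what lets a user dial in a manageable volume.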

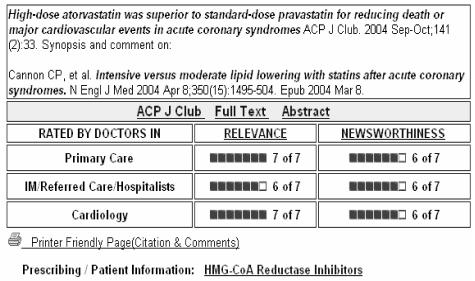

Email alerts display hyperlinked article titles that, when clicked, take the user into the PLUS system via an embedded user ID and password. Details of the alerted article include its citation, all clinical ratings for relevance and newsworthiness, comments submitted by raters, and links to the PubMed abstract, the full text article (if available free or through NOVL’s Ovid license), the full text ACP Journal Club synopsis (if prepared) and MedlinePlus® drug information pages (if appropriate) (figure 1).

Figure 1.

PLUS article record

The PLUS search engine allows users to “pull” specific information from the accumulated pool of high quality evidence-based research stored in the PLUS database. Basic and advanced search options allow for input of search terms and strategy refinement, respectively. Users have the opportunity to search the database for items that were rated by physicians in their own clinical discipline, or to search the entire database. Each search strategy is mapped to a synonym table to increase the yield of relevant records. Google’s spell checker is embedded in the search function. Search results are displayed in the same article record format as alerted articles.
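The synonym mapping and the "own discipline" option can be illustrated with a small sketch. The synonym table contents, the record fields and the matching rules (all user terms required, any synonym of a term accepted, whole-word matching) are assumptions for illustration; the spell-checking step is omitted.

```python
from __future__ import annotations

import re

# Hypothetical synonym table; the real PLUS table is not described in the text.
SYNONYMS: dict[str, set[str]] = {
    "heart attack": {"myocardial infarction"},
    "high blood pressure": {"hypertension"},
}


def expand(term: str) -> set[str]:
    """Return the lower-cased term plus any synonyms."""
    term = term.lower()
    return {term} | SYNONYMS.get(term, set())


def matches(text: str, phrase: str) -> bool:
    """Whole-word (or whole-phrase) match."""
    return re.search(rf"\b{re.escape(phrase)}\b", text) is not None


def search(records: list[dict], terms: list[str], discipline: str | None = None) -> list[dict]:
    """AND across the user's terms, OR across each term's synonyms.

    If `discipline` is given, keep only records rated in that discipline;
    otherwise search the entire database.
    """
    expanded = [expand(t) for t in terms]
    hits = []
    for rec in records:
        if discipline is not None and discipline not in rec["disciplines"]:
            continue
        text = f"{rec['title']} {rec['abstract']}".lower()
        if all(any(matches(text, s) for s in group) for group in expanded):
            hits.append(rec)
    return hits
```

Searching for "heart attack" here retrieves a record that only ever says "myocardial infarction", which is the yield gain the synonym table is meant to provide.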

The “Pyramid of Evidence” (figure 2) depicts a hierarchy of evidence-based information and is meant to help physicians find the most evolved evidence-based resource to address their clinical questions18.

Figure 2.

Pyramid of Evidence

To facilitate data collection for the trial, the PLUS system records when users log in, whether through the dedicated login page or an alerted article. It also records when alerted articles are viewed, when users run the search engine, and when they follow links in an article record reached from either an alert or the search engine.
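The tracked events map naturally onto a simple event log. The sketch below is hypothetical: the event names and the in-memory `UsageLog` are illustrative, and the text does not describe how PLUS actually stores its logs.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Event(Enum):
    LOGIN_PAGE = "login via login page"
    LOGIN_ALERT = "login via alerted article"
    ALERT_VIEW = "alerted article viewed"
    SEARCH = "search engine used"
    LINK_CLICK = "link in article record followed"


@dataclass
class UsageLog:
    entries: list[tuple[str, Event, datetime, str | None]] = field(default_factory=list)

    def record(self, user_id: str, event: Event, source: str | None = None) -> None:
        """Append one time-stamped event; `source` ("alert" or "search") applies to link clicks."""
        self.entries.append((user_id, event, datetime.now(timezone.utc), source))

    def counts(self, user_id: str) -> Counter:
        """Tally events for one user, e.g. as a per-participant usage outcome."""
        return Counter(event for uid, event, _, _ in self.entries if uid == user_id)
```

Keeping the `source` of each link click makes it possible to separate usage driven by "push" (alerts) from usage driven by "pull" (search), a distinction central to comparing the two trial interfaces.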

Before the launch of the PLUS system, usability testing with volunteer participants was conducted to refine system features. Screen shots and simultaneous “think aloud” verbalizations by participants were captured using CyberCam software (SmartGuyz Inc.), a microphone and a sound mixer. Project staff reviewed the data, and decisions about system changes were reached by consensus.

PLUS Trial Participants

The Canadian Medical Association directory (MD Select) and the College of Physicians and Surgeons of Ontario registries were used to create a physician contact database for trial recruitment, containing 600 email addresses and 1700 surface mail addresses for physicians in Northern Ontario. Participants were recruited from Northern Ontario communities through mail-outs, email, fax and presentations.

Eligibility requirements were: 1) NOVL registration; 2) being a general or family physician, internist, or internal medicine subspecialist who spends at least 20% of their clinical practice time in general practice or general internal medicine; 3) fluency in English; 4) expecting to maintain a similar practice in the same community for at least one year (not moving or retiring); and 5) an email account that is used at least once per month. PLUS trial registration, including eligibility screening and consent, was conducted online.
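Because eligibility was determined online at registration, the five criteria amount to a single predicate over the registration answers. A minimal sketch, in which the field names and specialty labels are hypothetical:

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical labels for the eligible practice groups named in criterion 2
ELIGIBLE_SPECIALTIES = {
    "general practice",
    "family practice",
    "internal medicine",
    "internal medicine subspecialty",
}


@dataclass
class Applicant:
    novl_registered: bool
    specialty: str
    pct_time_gp_or_gim: float        # % of clinical time in general practice or general internal medicine
    fluent_in_english: bool
    staying_at_least_one_year: bool  # not moving or retiring
    email_checks_per_month: int


def is_eligible(a: Applicant) -> bool:
    """Apply the five eligibility criteria listed in the text."""
    return (
        a.novl_registered
        and a.specialty in ELIGIBLE_SPECIALTIES
        and a.pct_time_gp_or_gim >= 20
        and a.fluent_in_english
        and a.staying_at_least_one_year
        and a.email_checks_per_month >= 1
    )
```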

PLUS Intervention

The PLUS trial comprises a 6-month baseline period followed by an 18-month trial period. The baseline period served to collect data on participants’ typical use of traditional, licensed digital resources. A baseline interface was built that presented users with the following searchable resources: Ovid (including Clinical Evidence, the Evidence-Based Medicine Reviews database, selected full text journals, and the Medline database), Stat!Ref (a suite of electronic textbooks) and MD Consult (full text of certain journals and textbooks; offered only until Sept 2004).

Two PLUS intervention interfaces (self-serve and full-serve versions) were developed, each offering features beyond those of the baseline version. The self-serve version includes a subset of the full-serve features and requires users to conduct their own searches for EBM literature, with the Pyramid of Evidence available for guidance. The full-serve version provides the same self-serve searching option plus a personally customized email alerting service and a searchable database, both of which draw exclusively on the PLUS database of quality-filtered, relevance-rated literature.

PLUS Trial Outcomes

Our primary research questions are: Does the addition of McMaster PLUS to a digital medical library service (NOVL) for family physicians and internists in Northern Ontario result in: 1) a change in the patterns and frequency of utilization of the digital library and other Internet resources; 2) improvements in meeting practitioners’ perceived information needs (utility); 3) an increase in the use of relevant evidence-based information; and 4) reported improvements in patient care and outcomes (usefulness)? Our secondary research questions are: 1) What are the relative frequencies of use of the various medical resources in the digital library? 2) In which environments and by which methods do practitioners access, search and retrieve information from the digital medical library? 3) What are the physician and practice determinants of utilization, utility, use and usefulness of the PLUS service and the digital medical library?

Randomization and Blinding

Practicing communities were selected as the unit of trial analysis to minimize contamination (sharing of information and resources provided by the PLUS search engine or alerting service) between the 2 groups. Trial participants were grouped into 4 large and 6 small community clusters, maximizing the distance between clusters and minimizing differences in the number of participants per cluster. Clusters were randomized to either the self-serve or the full-serve trial interface by rank ordering them by size and assigning each an odd or even number from a table of random numbers reported by Fleiss19. Interface assignment was recorded in the PLUS database so that each user was presented with the appropriate trial-period interface on login. Participants were not told which group they were assigned to, and the 2 intervention-period interfaces were similar in appearance and navigation.
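The allocation procedure can be sketched as follows. Two assumptions are made: a seeded pseudo-random generator stands in for the printed random-number table in Fleiss, and odd maps to self-serve while even maps to full-serve (the text does not say which parity went to which arm). Cluster names and sizes are hypothetical.

```python
from __future__ import annotations

import random


def randomize_clusters(sizes: dict[str, int], seed: int) -> dict[str, str]:
    """Allocate community clusters to the two PLUS trial interfaces.

    Clusters are rank-ordered by size (largest first); each then receives the
    next random digit, and the digit's parity decides the arm.
    """
    rng = random.Random(seed)
    ranked = sorted(sizes, key=sizes.get, reverse=True)
    allocation = {}
    for name in ranked:
        digit = rng.randint(0, 9)  # stands in for the next digit of the random-number table
        allocation[name] = "self-serve" if digit % 2 == 1 else "full-serve"
    return allocation


# Hypothetical clusters (4 large, 6 small), not the trial's actual communities
clusters = {"Large-1": 40, "Large-2": 35, "Large-3": 30, "Large-4": 28,
            "Small-1": 9, "Small-2": 8, "Small-3": 7, "Small-4": 6,
            "Small-5": 5, "Small-6": 4}
allocation = randomize_clusters(clusters, seed=2004)
```

Simple parity assignment like this does not by itself guarantee equal numbers of clusters per arm; rank ordering by size matters mainly when it is combined with some form of balancing, which the text does not describe.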

Statistical Analysis

The unit of analysis for the trial is the community cluster. P values less than 0.05 will be considered significant, without adjustment for multiple comparisons in the primary analysis and with adjustment for multiple testing in secondary analyses.

Results

PLUS Database

As of Feb 21, 2005, PLUS houses 5741 article records and grows by 35–60 articles each week. Articles span publication years 1999–2005 (Table 1).

Table 1.

Publication year of PLUS database articles

| Publication year | 1999–2000 | 2001 | 2002 | 2003 | 2004 | 2005 | Total |
|---|---|---|---|---|---|---|---|
| Count | 3 | 462 | 1101 | 1770 | 2226 | 179 | 5741 |
| % | 0.1 | 8.0 | 19.2 | 30.8 | 38.8 | 3.1 | 100 |

Purpose categories across the 5741 articles in the PLUS database are distributed as follows: Therapy/Prevention (75.5%), Etiology (8.8%), Prognosis (4.7%), Diagnosis (3.7%), Clinical Prediction Guide (2.9%), Economics (1.7%), Quality Improvement (1.2%) and multiple categories (1.5%).

Full text links have been established for 68.3% of the 5741 PLUS database articles (Ovid medical journals, 34.9%; Ovid Cochrane Reviews, 18.0%; free web links, 15.4%). In addition, 7.5% of the articles link to an ACP Journal Club structured abstract and 30.9% link to a MedlinePlus® drug information page.

PLUS Trial

Between Sept 2003 and Mar 2004, 420 individuals expressed interest in participating in the McMaster PLUS trial. Of these, 76 were determined to be ineligible. The remaining 344 potential participants were sent the online trial consent form; 134 did not respond and 7 refused consent. By the spring of 2004, 203 eligible physicians had provided consent. On April 1, 2004, 6 small and 4 large communities were randomized to either the self-serve or the full-serve trial interface. Since then, one participant asked to be withdrawn from the trial because of limited computer skills, and another was withdrawn because his practice moved outside the eligible trial boundaries.
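The recruitment figures are internally consistent, as a quick tally shows. The consent rate is derived here and is not reported in the text.

```python
# Recruitment counts reported in the text
expressed_interest = 420
ineligible = 76
no_response = 134
refused_consent = 7

sent_consent_form = expressed_interest - ineligible            # 344
consented = sent_consent_form - no_response - refused_consent  # 203
consent_rate = consented / sent_consent_form                   # derived, about 0.59
```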

Demographic data were collected via a registration form. Of the 196 participants who reported their gender, 146 (74%) were male and 50 (26%) were female. Mean age was 43.9 y (range 29–71 y; n = 198). Mean year of graduation among all 203 participants was 1989 (range 1959–2003). Most participants (62%) selected 1 clinical discipline to represent their clinical interests, while 33% chose 2–4 disciplines and 5% chose 5 or more. The most frequently selected disciplines were General Practice (GP)/Family Practice (FP) (48%), Emergency Medicine (41%), GP/FP/Obstetrics (14%) and GP/FP/Mental Health (12%). On the baseline questionnaire, most respondents reported high-speed Internet access at home, in the office and at the hospital where they work; high-speed access was less common in clinics (Table 2).

Table 2.

Type of Internet connection reported by 168 of 203 PLUS trial participants (percentages are of all 203 participants)

| Location | No access, n (%) | Dial-up, n (%) | High speed, n (%) | Unaware, n (%) |
|---|---|---|---|---|
| Home | 2 (1.0) | 54 (26.6) | 112 (55.2) | 0 (0.0) |
| Office | 39 (19.2) | 30 (14.8) | 89 (43.8) | 10 (4.9) |
| Clinic | 65 (32.0) | 18 (8.9) | 67 (33.0) | 18 (8.9) |
| Hospital | 18 (8.9) | 12 (5.9) | 125 (61.6) | 13 (6.4) |
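The percentages in Table 2 use all 203 enrolled participants as the denominator, while each row's counts sum to the 168 who answered. Recomputing the high-speed column against both denominators makes the difference explicit; the function name is hypothetical and the counts are copied from the table.

```python
from __future__ import annotations

# Counts from Table 2: (no access, dial-up, high speed, unaware)
TABLE_2 = {
    "Home": (2, 54, 112, 0),
    "Office": (39, 30, 89, 10),
    "Clinic": (65, 18, 67, 18),
    "Hospital": (18, 12, 125, 13),
}
ENROLLED = 203     # all trial participants
RESPONDENTS = 168  # participants who answered the question


def high_speed_percentages() -> dict[str, tuple[float, float]]:
    """Return (% of enrolled, % of respondents) with high-speed access, per location."""
    result = {}
    for location, counts in TABLE_2.items():
        assert sum(counts) == RESPONDENTS  # each row accounts for all respondents
        high_speed = counts[2]
        result[location] = (round(100 * high_speed / ENROLLED, 1),
                            round(100 * high_speed / RESPONDENTS, 1))
    return result
```

Measured against respondents, high-speed access exceeds 50% at home, in the office and at the hospital, but not in clinics.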

Discussion

We have created a new evidence-based information delivery service, McMaster PLUS. PLUS features include: 1) high-quality clinical research studies, hand-searched from more than 120 clinical journals; 2) organization by clinical discipline; 3) ratings of quality-filtered articles for clinical relevance and newsworthiness by frontline clinicians; 4) electronic “push” of high-quality, highly relevant articles to registered physicians; and 5) a cumulative database that takes registered users’ discipline profiles into account when they search (“pull”).

McMaster PLUS is currently being evaluated in a cluster randomized controlled trial with 203 physicians allocated to 2 different versions of PLUS. Multiple data collection methods are being applied, including log analysis of user logins and system usage (alerts, search engine and other links), short online satisfaction questionnaires, and qualitative interviews probing satisfaction and overall perception of the system. We anticipate a rich data set from which to gain insight into the uptake of, and satisfaction with, McMaster PLUS among practicing clinicians in Northern Ontario.

Conclusion

McMaster PLUS is a robust, customizable, web-based information service featuring an email alerting service and searchable database of quality filtered, clinical-relevance rated literature. A formal evaluation of McMaster PLUS is currently underway, seeking to determine how PLUS affects the use and usefulness of evidence from research, in comparison with unassisted digital library access. PLUS trial results will help identify best design features for an evidence-based information delivery service geared to physicians practicing in remote and rural areas.

Acknowledgments

The opinions stated here are solely those of the authors. This work is supported by research grants from the Ontario Ministry of Health and Long-Term Care and the Canadian Institutes of Health Research. We gratefully acknowledge our collaborators at NOVL and the other members of the McMaster PLUS team: Chris Cotoi, Dawn Jedras, James McKinlay, Ann McKibbon, Rick Parrish, Leslie Walters and Nancy Wilczynski.

References

- 1. Ely JW, Osheroff JA, Ebell MH, Chambliss ML, Vinson DC, Stevermer JJ, et al. Obstacles to answering doctors’ questions about patient care with evidence: qualitative study. BMJ. 2002 Mar 23;324(7339):710–6. doi: 10.1136/bmj.324.7339.710.

- 2. Bennett NL, Casebeer LL, Kristofco RE, Strasser SM. Physicians’ Internet information-seeking behaviors. J Contin Educ Health Prof. 2004 Winter;24(1):31–8. doi: 10.1002/chp.1340240106.

- 3. Martin S. Two-thirds of physicians use Web in clinical practice. CMAJ. 2004 Jan 6;170(1):28.

- 4. Turner A, Fraser V, Muir Gray JA, Toth B. A first class knowledge service: developing the National electronic Library for Health. Health Info Libr J. 2002 Sep;19(3):133–45. doi: 10.1046/j.1471-1842.2002.00392.x.

- 5. Magrabi F, Coiera EW, Westbrook JI, Gosling AS, Vickland V. General practitioners’ use of online evidence during consultations. Int J Med Inform. 2005 Jan;74(1):1–12. doi: 10.1016/j.ijmedinf.2004.10.003.

- 6. McDuffee DC. AHEC library services: from circuit rider to virtual librarian. Area Health Education Centers. Bull Med Libr Assoc. 2000 Oct;88(4):362–6.

- 7. D’Alessandro MP, D’Alessandro DM, Galvin JR, Erkonen WE. Evaluating overall usage of a digital health sciences library. Bull Med Libr Assoc. 1998 Oct;86(4):602–9.

- 8. Ely JW, Osheroff JA, Chambliss ML, Ebell MH, Rosenbaum ME. Answering physicians’ clinical questions: obstacles and potential solutions. J Am Med Inform Assoc. 2005 Mar-Apr;12(2):217–24. doi: 10.1197/jamia.M1608.

- 9. Cullen R. Evaluating digital libraries in the health sector. Part 1: measuring inputs and outputs. Health Info Libr J. 2003 Dec;20(4):195–204. doi: 10.1111/j.1471-1842.2003.00456.x.

- 10. E-Metrics: measures for electronic resources [homepage on the Internet]. Washington, DC: Association of Research Libraries; c2005 [updated 2005 Feb 2; cited 2005 Mar 1]. Available from: www.arl.org/stats/newmeas/emetrics/index

- 11. eLib: the electronics library program [homepage on the Internet]. UK: Electronic Libraries Programme; c2005 [updated 2005 Jan 13; cited 2005 Mar 1]. Available from: www.ukoln.ac.uk/services/elib

- 12. Martin S. Using SERVQUAL in health libraries across Somerset, Devon and Cornwall. Health Info Libr J. 2003 Mar;20(1):15–21. doi: 10.1046/j.1471-1842.2003.00404.x.

- 13. Westbrook JI, Coiera EW, Gosling AS. Do online information retrieval systems help experienced clinicians answer clinical questions? J Am Med Inform Assoc. 2005 Jan 31 [Epub ahead of print].

- 14. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform. 2004 Feb;37(1):56–76. doi: 10.1016/j.jbi.2004.01.003.

- 15. Friedman CP, Owens DK, Wyatt JC. Evaluation and technology assessment. In: Shortliffe EH, Perreault LE, Wiederhold G, Fagan LM, editors. Medical informatics: computer applications in health care and biomedicine. New York: Springer; 2001.

- 16. Cullen R. Evaluating digital libraries in the health sector. Part 2: measuring impacts and outcomes. Health Info Libr J. 2004 Mar;21(1):3–13. doi: 10.1111/j.1471-1842.2004.00455.x.

- 17. Wilczynski NL, McKibbon KA, Haynes RB. Enhancing retrieval of best evidence for health care from bibliographic databases: calibration of the hand search of the literature. Medinfo. 2001;10(Pt 1):390–3.

- 18. Haynes RB. Of studies, summaries, synopses, and systems: the “4S” evolution of services for finding current best evidence. ACP Journal Club. 2001 Mar-Apr;134:A11–A13.

- 19. Fleiss JL. Statistical methods for rates and proportions. 2nd ed. New York: Wiley; 1981.