Abstract

In this paper, we present the G-DEE system, a document engineering environment aimed at clinical guidelines. This system represents an extension of current visual interfaces for guidelines encoding, in that it supports automatic text processing functions which identify linguistic markers of document structure, such as recommendations, thereby decreasing the complexity of operations required by the user. Such markers are identified by shallow parsing of free text and are automatically marked up as an early step of document structuring. From this first representation, it is possible to identify elements of guidelines contents, such as decision variables, and produce elements of GEM encoding, using rules defined as XSL style sheets. We tested our automatic structuring system on a set of sentences extracted from French clinical guidelines. As a result, 97% of the occurrences of deontic operators and their scopes were correctly marked up. G-DEE can be used for various purposes, from research into guidelines structure to assisting the encoding of guidelines into a GEM format or into decision rules.

INTRODUCTION

Clinical guidelines have become an important medium for the standardization and dissemination of medical knowledge, and their processing has therefore attracted considerable research interest. The ‘document-centric approach’ has been introduced to facilitate the use of guideline knowledge. The best-known instance of this approach is the Guideline Elements Model (GEM),1 an XML framework based on a hierarchy of concepts describing the guideline contents, information for their use, and meta-information (such as guideline objectives, intended audience and authors). Each GEM element corresponds to specific labels, some of which are normalized through a controlled vocabulary (for instance, the one defined by the National Guideline Clearinghouse[a]).

Encoding a clinical guideline with the GEM framework consists of structuring the textual guideline document using the set of XML markups provided by the framework. This can be a complex process, as it requires an in-depth analysis of the guideline contents and, simultaneously, constant reference to the GEM framework. For instance, the physician needs to analyze text sections in depth to properly identify decision variables prior to their GEM encoding. As a consequence, substantial variation is observed in the GEM encoding of a given clinical guideline by different users.2 A pilot study has shown that three out of five participants in a GEM encoding experiment reported difficulties with the identification of decision variables. In particular, the amount of text that participants inserted into certain GEM elements varied considerably. This suggests that the complexity of manual analysis can affect the structure of the encoded document.

To tackle these problems and support the manual marking up of guideline documents, the GEM-Cutter[b],3 application was developed. It is an XML editor designed to facilitate the marking up of textual guidelines. GEM-Cutter is essentially a user-friendly interface for text manipulation, markup selection and document encoding. It contains a complete browser of GEM concepts that facilitates access to the proper categories. While it does not identify the contents of the document itself, it decreases the cognitive load of the user by offering on-line information on GEM categories and by supporting an incremental process of document marking up.

The GEM-Cutter interface is based on a multiple windowing system that displays the original clinical guideline document, a GEM category browser and the corresponding GEM-encoded text. The interface is operated by selecting a portion of text and dragging it over a GEM category; this generates a corresponding entry in the GEM-encoded document, consisting of that text portion marked up with the category over which it was dragged.

From a similar perspective, Svatek et al.4 developed the “Stepper” system, which supports the encoding of clinical guidelines by marking up text through a stepwise formalisation process. The early steps consist of marking up the guideline text, while the final ones generate knowledge representations.5 Stepper is also supported by an interface similar to GEM-Cutter, whose purpose is to “minimize information loss during the encoding process”. However, both interfaces still support a manual encoding process; they do not provide tools to assist such encoding on a content basis, for instance through the automatic recognition of relevant textual information.

In this paper, we introduce the G-DEE environment (Guideline Document Engineering Environment), a software environment for the study of clinical guidelines that incorporates automatic text processing functions such as the identification of linguistic markers of recommendations. This environment can be used for various purposes, from research into guidelines structure to assisting with the actual encoding of guidelines into a GEM format.

APPROACH

One limitation of manual encoding is the number of operations the user has to perform at different levels of abstraction. Even with the help of a graphic interface for textual manipulation, such operations put considerable cognitive load on the user.

More specifically, the user has to recognize the structure of textual recommendations (identifying condition structures from their linguistic forms) and simultaneously analyze their meaning (extracting medical contents and decision variables) to properly encode the document. Her work could be considerably simplified if the interface provided some form of automatic recognition of text contents to assist encoding. While free-text understanding is beyond the state of the art, shallow Natural Language Processing (NLP) techniques can be used to build an automatic aid to document structuring. These techniques specifically target the recognition of appropriate markers of textual structure, relieving the user of the early steps of document structure recognition.

Linguistic markers of guidelines structure

Clinical guidelines belong to the generic category of normative texts, to which much research has been dedicated. For instance, Moulin and Rousseau6 have described a method to automatically extract knowledge from legal texts based on the hypothesis that these texts are naturally structured through the occurrence of specific linguistic expressions, known as “deontic operators”. These operators manifest themselves through such verbs as “pouvoir” (“to be allowed to or may”), “devoir” (“should or ought to”), “interdire” (“to forbid”). These verbs correspond to traditional deontic modalities: permission, obligation and interdiction, which have been found by Kalinowski7 to be the most characteristic linguistic structures of normative texts.

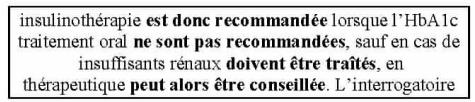

We adapted Moulin and Rousseau’s approach to clinical guidelines by identifying equivalent deontic elements specific to the kind of recommendations they contain. Our underlying hypothesis is that the automatic identification of such operators would facilitate the early steps of document structuring and encoding. Examples of deontic operators are: “is then recommended” (in French, “est donc recommandée”), “are not recommended” (“ne sont pas recommandées”), “should be treated” (“doivent être traités”) and “may then be advised” (“peut alors être conseillée”). To analyze the occurrences of such deontic expressions in clinical guidelines, we used a concordance analysis program, the “Simple Concordance Program (release 4.07)”[c], which produces keywords in context (Figure 1).

As a result, we collected a set of syntactic variants for the various deontic operators. The moderate variability of deontic expressions (65 syntactic patterns) suggested that appropriate syntactic coverage could be achieved by encoding these variants in a simple syntactic formalism, such as Finite-State Transition Networks (FSTN).8
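As an illustration of how several syntactic variants of one operator can be folded into a single network, the Python sketch below hand-builds a small non-deterministic FSTN for variants of “recommander” (optional adverb, negation). The `FSTN` class, its states and its word sets are hypothetical and do not reproduce the 65 patterns of the actual grammar.

```python
from dataclasses import dataclass, field

# Word classes for a hypothetical "recommander" network (illustrative only).
AUX = {"est", "sont"}
ADVERB = {"donc", "alors"}
RECOMMANDE = {"recommandé", "recommandée", "recommandés", "recommandées"}


@dataclass
class FSTN:
    """Non-deterministic finite-state transition network over word tokens."""
    start: int = 0
    accepting: set = field(default_factory=set)
    # transitions[state] -> list of (set of accepted words, next state)
    transitions: dict = field(default_factory=dict)

    def add(self, src: int, words: set, dst: int) -> None:
        self.transitions.setdefault(src, []).append((words, dst))

    def accepts(self, tokens: list) -> bool:
        """True if the whole token sequence ends in an accepting state."""
        states = {self.start}
        for tok in tokens:
            # Follow every transition whose word set contains the token.
            states = {dst
                      for s in states
                      for words, dst in self.transitions.get(s, [])
                      if tok.lower() in words}
            if not states:
                return False
        return bool(states & self.accepting)


def build_recommander_net() -> FSTN:
    net = FSTN(accepting={3})
    net.add(0, AUX, 1)           # "est" / "sont"
    net.add(0, {"ne"}, 4)        # negation "ne ... pas"
    net.add(4, AUX, 5)
    net.add(5, {"pas"}, 1)
    net.add(1, ADVERB, 2)        # optional "donc" / "alors"
    net.add(1, RECOMMANDE, 3)
    net.add(2, RECOMMANDE, 3)
    return net


net = build_recommander_net()
print(net.accepts("est donc recommandée".split()))      # True
print(net.accepts("ne sont pas recommandées".split()))  # True
print(net.accepts("est pas recommandé".split()))        # False
```

Each new variant only adds transitions to the same network, which is one reason a finite-state encoding stays manageable when variability is moderate.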

Our aim is to recognize linguistic markers of deontic expressions by partial parsing of free text. The markers identified in the text can then be used to structure the document accordingly. Techniques for shallow analysis and recognition of specific text contents have mainly been developed in the field of Information Extraction (IE). For instance, the FASTUS system9 has demonstrated how FSTN can be tuned to recognize specific descriptions (such as actions or characters). We therefore adapted IE techniques to the identification of deontic expressions, regardless of the surface form of their textual occurrence.8 The recognition of deontic operators is, however, not sufficient to structure the document: the scope of the operator’s arguments must also be recognized. In terms of document structure, scopes correspond to text segments structured by deontic expressions. A scope that precedes a deontic operator is called the front-scope, whereas the back-scope is the scope that follows the operator.
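The notion of front-scope and back-scope can be made concrete with a minimal Python sketch. It assumes the operator has already been located (here with a single hypothetical regular expression rather than an FSTN cascade) and simply splits the sentence around it; it ignores the voice of the expression, which the text notes also affects where the arguments appear.

```python
import re

# Hypothetical single-operator pattern; G-DEE uses a cascade of FSTN instead.
OPERATOR = re.compile(r"il est recommandé de \w+")


def scopes_for(sentence: str):
    """Split a sentence into front-scope, operator and back-scope.

    Returns None when no operator is found. Voice is not taken into account.
    """
    m = OPERATOR.search(sentence)
    if m is None:
        return None
    return {
        "front_scope": sentence[:m.start()].strip(" ,;:"),   # text before the operator
        "operator": m.group(0),
        "back_scope": sentence[m.end():].strip(" ,;:."),     # text after the operator
    }


s = ("En cas de signe évocateur ou d’antécédent d’infection urinaire, "
     "il est recommandé de pratiquer un ECBU (accord professionnel).")
print(scopes_for(s))
```

On the example sentence used elsewhere in this paper, the front-scope is the condition (“En cas de signe évocateur ou d’antécédent d’infection urinaire”) and the back-scope is “un ECBU (accord professionnel)”.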

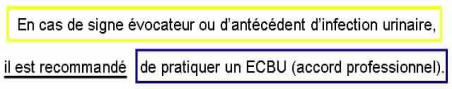

Figure 2 illustrates the scopes of the deontic operator “il est recommandé” (“it is recommended”), which is underlined.

Figure 2.

Front- and back-scope for the “recommended” deontic operator.

THE GRAPHICAL ENVIRONMENT

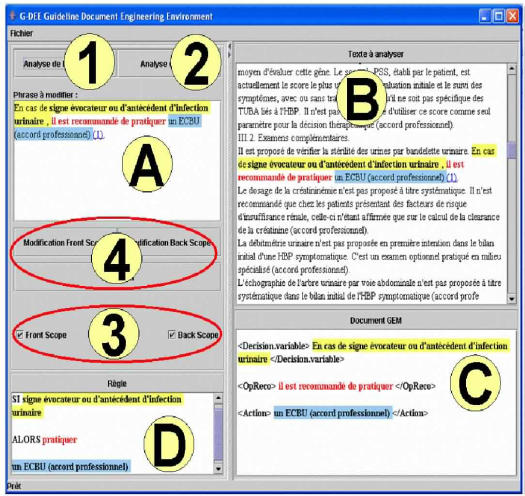

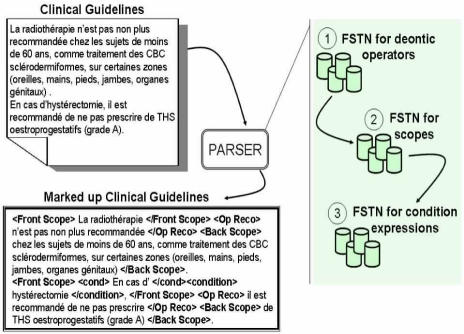

An overview of the G-DEE interface is presented in Figure 3-a. This environment supports manipulation of the guideline text through a combination of features: some deal with text display and text manipulation, while others trigger text structuring functions based on text analysis.

Figure 3-a.

The G-DEE interface (see text).

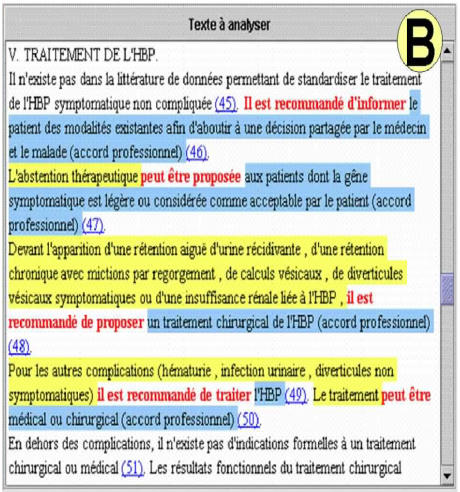

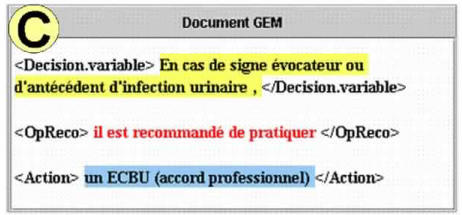

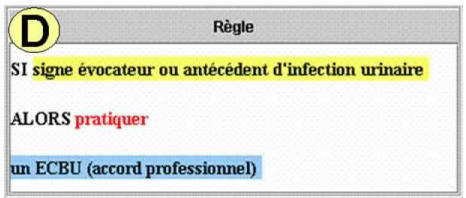

The interface supports the graphical selection of text sections, which can then be automatically analyzed for deontic operators (button 1) and marked up accordingly, as shown in Figure 3-b (window B) for the sentence “En cas de signe évocateur ou d’antécédent d’infection urinaire, il est recommandé de pratiquer un ECBU (accord professionnel).”[d] The whole document can be analyzed by pressing button 2. The resulting markup can be validated interactively by the user (button 4 of the interface). In addition, G-DEE can display the contents of decision.variable and action GEM elements, as well as deontic operators, in window C. Window D displays decision rules automatically derived from the marked-up text, which can be used for knowledge extraction or for analysis of text coherence.

Figure 3-b.

Recognition of deontic operators.

The Text Processing Engine

One of the most important functions of the interface is its automatic assistance to document encoding based on text analysis. We developed an ad hoc parsing technology inspired by IE, which parses the selected text (or the whole document) for deontic operators and automatically marks them up together with their corresponding scopes.10 Text parsing is based on a cascade of FSTN (Figure 4) and uses a tailor-made parsing algorithm that we developed, in particular for the efficient handling of patterns shared between FSTN. The first step consists of analyzing the text for deontic expressions using a set of 1106 FSTN, which correspond to 65 syntactic patterns with their morphological variations. Note that the syntactic phenomena corresponding to deontic operators are largely common to French and English, despite minor variations in syntactic expression.
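The paper does not detail its tailor-made algorithm for shared patterns. One common way to avoid re-matching shared prefixes is to merge all patterns into a single prefix tree and scan the text once, keeping the longest match at each position; the Python sketch below illustrates that idea. It is a swapped-in technique, not the authors' parser, and `PATTERNS`, `build_trie` and `find_operators` are hypothetical names with illustrative patterns.

```python
import re

END = "<END>"  # key marking the end of a pattern; never produced by the tokenizer

# Hypothetical operator patterns (the real grammar has 65 patterns / 1106 FSTN).
PATTERNS = {
    "recommandation": ["il est recommandé de", "est recommandé",
                       "est donc recommandé", "est donc recommandée"],
    "recommandation_negative": ["ne sont pas recommandées"],
    "permission": ["peut être", "peut alors être conseillée"],
    "obligation": ["doivent être traités"],
}


def build_trie(patterns: dict) -> dict:
    """Merge all token patterns into one prefix tree so shared prefixes are stored once."""
    root: dict = {}
    for label, variants in patterns.items():
        for variant in variants:
            node = root
            for tok in variant.split():
                node = node.setdefault(tok, {})
            node[END] = label
    return root


def tokenize(text: str) -> list:
    # Words (including accented letters) or single punctuation characters.
    return re.findall(r"\w+|[^\w\s]", text.lower())


def find_operators(text: str, trie: dict) -> list:
    """Left-to-right scan returning (label, matched text), preferring the longest match."""
    tokens = tokenize(text)
    found, i = [], 0
    while i < len(tokens):
        node, best, j = trie, None, i
        while j < len(tokens) and tokens[j] in node:
            node = node[tokens[j]]
            j += 1
            if END in node:
                best = (node[END], j)
        if best is None:
            i += 1
        else:
            label, end = best
            found.append((label, " ".join(tokens[i:end])))
            i = end
    return found


TRIE = build_trie(PATTERNS)
print(find_operators("Une antibiothérapie peut alors être conseillée.", TRIE))
```

Because “peut être” and “peut alors être conseillée” share the prefix “peut”, that token is stored and tested once, and the longest-match rule selects the full four-token operator.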

Upon identification of a deontic operator, the corresponding deontic expression is tagged with appropriate markups, also taking into account the voice of that expression, since voice affects the position of the deontic operator’s arguments (front-scope and back-scope). After the text has been tagged for deontic operators, a second step uses a specialized FSTN to delimit (and mark up) the scopes of each deontic operator, using the previously recorded information about the operator’s voice. This first step of document structuring produces an XML file, which can serve as a pivot representation for further processing, supporting GEM encoding functions or knowledge extraction. For that purpose, we developed a set of tools based on an XSLT processor, able to transform the original XML file. Transformation rules are encoded in an XSL style sheet that the XSLT processor applies to the original XML document. For instance, we defined an XSL style sheet that prompts the XSLT processor to extract, from XML documents tagged for deontic operators and their scopes, the portions of text corresponding to decision.variable and action elements in the GEM hierarchy (Figure 5). Similarly, the XSLT processor can extract decision rules in an IF-THEN format (Figure 6) from the markup of deontic operators and their scopes. The identification of decision.variable is also used to automatically extract IF-THEN decision rules,11 where the contents of the front-scope and back-scope can be mapped to IF-THEN clauses.
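To make the pivot-to-GEM transformation concrete, the following Python sketch uses the standard `xml.etree.ElementTree` module as a stand-in for the XSL style sheet and XSLT processor described above. The pivot tag and attribute names (`sentence`, `front-scope`, `operator`, `back-scope`, `voice`) are assumptions, since the paper does not list G-DEE's markup, and the mapping ignores the voice- and condition-based rules discussed later.

```python
import xml.etree.ElementTree as ET

# Hypothetical pivot markup produced by the parsing cascade.
PIVOT = (
    '<sentence voice="passive">'
    "<front-scope>En cas de signe évocateur ou d’antécédent d’infection urinaire</front-scope>, "
    '<operator type="recommandation">il est recommandé de pratiquer</operator> '
    "<back-scope>un ECBU (accord professionnel)</back-scope>."
    "</sentence>"
)


def to_gem_fragment(pivot_xml: str) -> str:
    """Stand-in for an XSL template: copy the scopes into GEM-style elements."""
    src = ET.fromstring(pivot_xml)
    rec = ET.Element("recommendation")
    ET.SubElement(rec, "decision.variable").text = src.findtext("front-scope")
    ET.SubElement(rec, "action").text = src.findtext("back-scope")
    return ET.tostring(rec, encoding="unicode")


print(to_gem_fragment(PIVOT))
```

In the actual environment this mapping would live in an XSL template matched on the scope elements; the Python version only shows the shape of the input and output.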

Figure 5.

Automatic derivation of “decision.variable” and “action” GEM elements.

Figure 6.

Automatic derivation of IF-THEN decision rules.

EXAMPLE APPLICATIONS

Computer-Aided Guideline Editing

We defined specific highlighting functions to identify deontic operators and their associated front-scope and back-scope. This highlighting is defined in an XSL style sheet applied by an XSLT processor. The front-scope is highlighted in yellow and the back-scope in blue. An example is shown in Figure 5, where the front-scope corresponds to “En cas de signe évocateur ou d’antécédent d’infection urinaire”[e]. The text highlighted in blue corresponds to the back-scope, i.e. “un ECBU (accord professionnel)”[f]. The deontic operator itself, “il est recommandé de pratiquer”[g], appears in bold red font.
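The colour scheme described above can be sketched in Python as a rendering of a tagged sentence into HTML spans. This is an illustrative substitute for the XSL style sheet, assuming the same hypothetical pivot tags as before (`front-scope`, `operator`, `back-scope`) and a flat, single-level markup.

```python
import html
import xml.etree.ElementTree as ET

# Colours follow the text; the tag names are hypothetical.
STYLES = {
    "front-scope": "background:yellow",
    "operator": "color:red;font-weight:bold",
    "back-scope": "background:lightblue",
}


def highlight(pivot_xml: str) -> str:
    """Render a flat tagged sentence as HTML, colouring each marked-up segment."""
    root = ET.fromstring(pivot_xml)
    parts = [html.escape(root.text or "")]
    for child in root:
        text = html.escape(child.text or "")
        style = STYLES.get(child.tag)
        parts.append(f'<span style="{style}">{text}</span>' if style else text)
        parts.append(html.escape(child.tail or ""))  # spaces/punctuation between segments
    return "".join(parts)


PIVOT = (
    "<sentence>"
    "<front-scope>En cas de signe évocateur ou d’antécédent d’infection urinaire</front-scope>, "
    "<operator>il est recommandé de pratiquer</operator> "
    "<back-scope>un ECBU (accord professionnel)</back-scope>."
    "</sentence>"
)
print(highlight(PIVOT))
```

Keeping the element tails preserves the original spacing and punctuation, so removing the spans gives back the source sentence unchanged.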

Encoding GEM Condition Variables

The markup of deontic operators and their scopes can serve as a basis for identifying a Conditional element of a GEM document. The first step consists of introducing another level of markup by tagging, within the front-scope or back-scope, those elements which correspond to decision.variable and action elements. This is achieved through the FSTN-based recognition of syntactic markers of conditional expressions (e.g. “en cas de” (“in case of”), “lorsque” (“when”), etc.). These conditional markers make it possible to identify decision.variable within scopes. The rationale, once again, is that conditional statements have a finite number of syntactic expressions in a given text genre. Decision variables are extracted through a set of rules[h] that match the structure of conditional expressions to decision variables; these rules are included in an XSL style sheet. They also take into account the voice of the corresponding sentence. For instance, whenever a conditional expression occurs in the front-scope and the sentence is in the passive voice, the front-scope corresponds to decision.variable and the back-scope to action, as shown in Figure 5.
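The passive-voice rule stated above can be sketched in Python as follows. The regular expression for conditional markers is an assumption covering only the two markers named in the text (“en cas de/d’” and “lorsque/lorsqu’”), and `assign_gem_roles` is a hypothetical helper, not part of G-DEE.

```python
import re

# Hypothetical conditional markers: "en cas de" / "en cas d’" and "lorsque" / "lorsqu’".
CONDITIONAL = re.compile(r"\b(?:en cas d(?:e\b|’)|lorsqu(?:e\b|’))", re.IGNORECASE)


def assign_gem_roles(front_scope: str, back_scope: str, voice: str) -> dict:
    """Apply the passive-voice rule to one sentence's scopes."""
    if voice == "passive" and CONDITIONAL.search(front_scope):
        return {"decision.variable": front_scope, "action": back_scope}
    return {}  # other voice/position combinations would need their own rules


print(assign_gem_roles("En cas de signe évocateur ou d’antécédent d’infection urinaire",
                       "un ECBU (accord professionnel)", "passive"))
```

The empty result for the other cases reflects that the paper describes a family of rules, of which only one is reproduced here.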

Extraction of Decision Rules

The same mechanism that extracts decision variables can be used to extract decision rules from the marked-up document, by matching the contents of IF-THEN clauses to the previously identified front-scope and back-scope. We again defined specific XSL style sheets that instantiate the corresponding decision rule, which can be displayed in a dedicated window of the interface (Figure 6). For example, when a conditional expression is part of the front-scope and the sentence is in the passive voice, the IF-clause corresponds to the front-scope, while the THEN-clause contains the marked-up action verb together with the textual content of the back-scope.
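The rule construction described above can be sketched in Python. Two choices here are assumptions rather than the paper's method: the action verb is taken to be the last word of the operator, and the leading conditional marker is stripped from the IF-clause for readability. `to_if_then` is a hypothetical helper.

```python
import re

# Hypothetical: a conditional marker at the start of the front-scope.
LEADING_CONDITION = re.compile(
    r"^\s*(?:en cas d(?:e\b|’)|lorsqu(?:e\b|’))\s*", re.IGNORECASE)


def to_if_then(front_scope: str, operator: str, back_scope: str, voice: str):
    """Build an IF-THEN rule from one marked-up sentence, or return None."""
    if voice != "passive" or not LEADING_CONDITION.match(front_scope):
        return None
    condition = LEADING_CONDITION.sub("", front_scope, count=1)
    action_verb = operator.split()[-1]  # e.g. "pratiquer" in "il est recommandé de pratiquer"
    return f"IF {condition} THEN {action_verb} {back_scope}"


print(to_if_then("En cas de signe évocateur ou d’antécédent d’infection urinaire",
                 "il est recommandé de pratiquer",
                 "un ECBU (accord professionnel)",
                 "passive"))
```

For the running example this yields a rule whose IF-clause is the urinary-infection condition and whose THEN-clause is “pratiquer un ECBU (accord professionnel)”.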

EVALUATION

We tested our text processing system on 276 sentences extracted from 5 randomly selected clinical guidelines. None of these guidelines had been used to define our grammar of deontic operators, so the test set is independent of the grammar. For this evaluation, we focused mainly on the correct identification of deontic expressions based on the following verbs: “recommander” (“to recommend”), “devoir” (“should or ought to”), “pouvoir” (“to be allowed to or may”) and “convenir” (“to be appropriate”).

This test set contains 304 deontic operators, which had previously been identified manually together with their respective front-scope and back-scope. This manual identification serves as the benchmark for our evaluation. To evaluate system performance, we compared the system’s automatic markup with the manually encoded benchmark (an example of such markup is shown in Figure 3-b).
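The comparison against the manual benchmark can be sketched as a simple scoring function. The paper does not state how partially correct scopes were counted, so this Python sketch assumes exact agreement on the operator and both scopes; the `Annotation` type and the toy data are hypothetical and are not drawn from the evaluation set.

```python
from typing import NamedTuple


class Annotation(NamedTuple):
    """One deontic operator with its scopes (hypothetical representation)."""
    sentence_id: int
    operator: str
    front_scope: str
    back_scope: str


def proportion_correct(system: list, benchmark: list) -> float:
    """Share of benchmark operators reproduced exactly (operator and both scopes)."""
    if not benchmark:
        return 0.0
    predicted = set(system)
    hits = sum(1 for gold in benchmark if gold in predicted)
    return hits / len(benchmark)


# Toy data: the second system annotation has a wrong back-scope.
gold = [
    Annotation(1, "il est recommandé de pratiquer", "En cas de signe évocateur", "un ECBU"),
    Annotation(2, "doivent être traités", "Les patients symptomatiques", "par antibiotiques"),
]
pred = [
    Annotation(1, "il est recommandé de pratiquer", "En cas de signe évocateur", "un ECBU"),
    Annotation(2, "doivent être traités", "Les patients symptomatiques", "par antibiotiques pendant"),
]
print(proportion_correct(pred, gold))  # 0.5
```

A looser criterion (for example, token overlap between scopes) would give higher scores on the same output, so the matching rule should be reported alongside any accuracy figure.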

As a preliminary result, our automatic structuring system correctly marked up 97% of the occurrences of deontic operators and their associated scopes on this test set of 276 sentences not used in the initial grammar definition. This preliminary evaluation does not consider the usability of the overall G-DEE environment; it is limited to a performance analysis of the text processing tools.

CONCLUSION

In this paper, we presented the G-DEE environment dedicated to the study of clinical guidelines. G-DEE incorporates automatic text processing functions that recognize recommendations from free text. This process is possible through the recognition of specific linguistic expressions called deontic operators.

This approach decreases the cognitive load of the user by reducing the number of tasks she must carry out to encode clinical guidelines. The user can focus solely on the identification of contents, because the system provides her with a first level of document structuring. The approach thus facilitates access to text contents by providing automatic assistance in the early phases of document structuring. Additional functions are currently under development, for example functions for modifying the sentences marked up in the text (part A of Figure 3-a), which should assist the authoring of clinical guidelines.

All of these processing steps rely on a linguistic analysis centered on a limited number of deontic markers and conditional structures. This should ensure the scalability of the approach, within the limits of current document processing techniques. The current limitation of the approach lies in the syntactic coverage required to identify deontic operators. Although extensive coverage can be achieved through corpus analysis (because of the specific nature of deontic operators), new texts will occasionally introduce previously unseen variants, which require extending the grammar.

This paper presented early results from the implementation of our G-DEE environment. A more extensive evaluation of the system is currently being undertaken by expert physicians with extensive experience in guidelines definition and encoding.

A valuable extension of this approach would consist in further processing of the textual contents of a deontic operator’s scopes, which would identify relevant content such as medical treatments. Such processing can be based on terminological recognition or information extraction methods such as named entity recognition.

Figure 1.

Occurrences of deontic operators and their syntactic variations (negation, passive voice, etc.).

Figure 4.

The various steps of text processing.

Footnotes

[b] Download from: http://gem.med.yale.edu/

[c] Downloadable from: http://www.download.com

[d] “In case of symptoms of urinary tract infection, it is recommended to perform urinalysis (expert agreement).”

[e] “In case of symptoms of urinary tract infection”

[f] “urinalysis (expert agreement).”

[g] “it is recommended to perform”

[h] These rules have been defined for French texts.

REFERENCES

- 1. Shiffman R, Karras B, Agrawal A, Chen R, Marenco L, Nath S. GEM: A proposal for a more comprehensive guideline document model using XML. J Am Med Inform Assoc. 2000 Sep–Oct;7(5):488–98. doi: 10.1136/jamia.2000.0070488.

- 2. Karras B, Nath S, Shiffman R. A preliminary evaluation of guideline content mark-up using GEM--An XML Guideline Elements Model. Proc AMIA Symp. 2000:413–7.

- 3. Shiffman R, Michel G, Essaihi A. Bridging the guideline implementation gap: a systematic approach to document-centered guideline implementation. J Am Med Inform Assoc. 2004 Sep–Oct;11(5):418–26. doi: 10.1197/jamia.M1444.

- 4. Svatek V, Ruzicka M. Step-by-step mark-up of medical guideline documents. Int J Med Inform. 2003 Jul;70(2–3):329–35. doi: 10.1016/s1386-5056(03)00041-8.

- 5. Svatek V, Kroupa T, Ruzicka M. Guide-X - a step-by-step, markup-based approach to guideline formalisation. First European Workshop on Computer-based Support for Clinical Guidelines and Protocols. 2001:97–114.

- 6. Moulin B, Rousseau D. Knowledge acquisition from prescriptive texts. ACM. 1990:1112–1121.

- 7. Kalinowski G. La Logique Déductive: Presses Universitaires de France (in French); 1996.

- 8. Roche E, Schabes Y. Finite-State Language Processing: MIT Press; 1997.

- 9. Hobbs J, Appelt D, Bear J, et al. FASTUS: A cascaded finite-state transducer for extracting information from natural language text. Finite State Devices for Natural Language Processing. 1996:383–406.

- 10. Georg G, Colombet I, Jaulent M-C. Structuring clinical guidelines through the recognition of deontic operators. Proceedings of Medical Informatics Europe 2005, in press.

- 11. Georg G, Séroussi B, Bouaud J. Does GEM-encoding clinical practice guidelines improve the quality of knowledge bases? A study with the rule-based formalism. AMIA Annu Symp Proc. 2003:254–8.