Abstract

A risk analysis framework was used to examine the barriers that may hamper the successful implementation of interorganizational clinical information systems (ICISs). In terms of study design, an extensive literature review was first performed in order to elaborate a comprehensive model of project risk factors. To test the applicability of the model, we next conducted a longitudinal multiple-case study of two large-scale ICIS demonstration projects carried out in Quebec, Canada. Variations in the levels of several risk dimensions measured throughout the duration of the projects were analyzed to determine their impact on successful implementation. The analysis shows that the proposed framework, composed of five risk dimensions, was very robust and suitable for conducting a thorough risk analysis. The results also show links between the quality of risk management and the level of project outcomes. To be successful, implementation efforts must be distributed in proportion to the importance of each risk factor. Furthermore, because the risks evolve dynamically, there is a need for high responsiveness to emerging implementation problems. Thus, implementation success lies in the ability of the project management team to be aware of and to manage several risk threats simultaneously and coherently, since they evolve dynamically through time and interact with one another.

Introduction

Most industrialized countries have undertaken major reforms of their health care systems during the last few years. These reforms have attempted to strengthen the primary health care sector and favor the emergence of integrated health care networks as a new model of care organization. 1–3 Within the context of this new model, patients need to have access to various points of care, where both the best intervention and the lowest cost structure are available, according to the severity of the illness. Given the geographic dispersion of these points of care, the interaction of many health specialists and the need to balance the complex sequence of events required to care for each patient, the emerging network organizations cannot be efficient and productive without substantial application of information technology (IT). Clinical information must follow the patient so that the health specialists can coordinate their activities and offer efficient integrated care.

To fully benefit from these new models of care, interorganizational clinical information systems (ICISs) must be developed and used. Several countries have undertaken various forms of such large-scale interorganizational initiatives. For instance, in 2004, the U.S. Department of Health and Human Services released a strategic framework that calls for the creation of Regional Health Information Organizations that would provide local leadership for the development, implementation, and application of secure health information exchange across care settings. 2 A similar road map was worked out in Canada a few years ago and gave birth to large pilot projects of health information networks such as interorganizational diagnostic imaging systems and regional master patient index projects. 3 Great Britain has also made large investments to deploy electronic medical records across its entire health care system. On a smaller scale, France just launched a call to tender for an electronic medical record demonstration project for its public health care system.

As reported in the scientific and professional press, many ICIS projects have recently failed for reasons such as inadequate buy-in and conflicting organizational missions, the need for centralized databases, data ownership issues, lack of financing, deep-seated professional and institutional resistance, and the high cost of network technology. 1,2,4–7 Recent improvements in IT, notably network technology and clinical information standards, have removed some of these barriers, creating a more favorable environment. However, the development of interorganizational systems remains very complex and their implementation highly risky.

In the present paper, we use a risk analysis approach to broaden and strengthen our understanding of ICIS project implementation barriers. We build upon information systems project risk literature and medical informatics literature to increase knowledge in this domain. In pursuit of this objective, this paper proposes a risk analysis framework and then examines its applicability. To this end, we analyzed how the variation in several risk factor levels throughout the duration of a given ICIS project had an impact on the success of its implementation. The empirical test of the risk analysis framework was conducted with two large-scale Canadian experiments conducted between 2001 and 2004 in Quebec, Canada. The first project was deployed within a pediatric network of three hospitals and four private medical clinics geographically dispersed over three regions while the second project served an interorganizational network linking a public hospital and a private laboratory setting to over 100 general practitioners (GPs) practicing in ten primary care private clinics. Both projects were confronted with important issues such as governance among competing organizations, patient matching, and data standards that are critical to clinical information exchange.

Risk Typology

Identifying the risks associated with the implementation of an ICIS can be a major challenge for managers, clinicians, and IT specialists, as there are numerous ways in which they can be described and categorized. Risks vary in nature, severity, and consequence, so it is important that those considered to be high-level risks be identified, understood, and managed. The proposed taxonomy is aimed at organizing in a meaningful way the vast number of information system-related success factors or risk factors that have been identified in the literature. 8 As listed in Table 1, we suggest five risk dimensions according to which we have categorized a series of risk factors most often cited in the IT and medical informatics literature. A fundamental risk dimension for any IT project is, of course, the technological risk, to which we add four other dimensions, namely: the human risk, the usability risk, the managerial risk, and the strategic/political risk. According to the proposed framework, the success of such systems lies in the capability to put in place a series of proactive strategies that minimize the potential impact of the various risks that are specific to a particular project. We thus adopt the definition that reflects the manner in which most of the IT literature views risk, in terms of negative outcomes relative to management goals and user expectations. 8 Each risk dimension presented in Table 1 is discussed in detail below.

Table 1. Risk Dimensions, Risk Factors, and Empirical Support

| Risk Dimensions | Risk Factors | Empirical Support |
|---|---|---|
| Technological | | Ahmad et al. 2002; Barki et al. 1993; Doolan et al. 2003; Ferratt et al. 1996, 1998; Miller & Sim 1994; Morrell & Ezingeard 2001; Overhage et al. 2005; Payton & Ginzberg 2001; Sherer & Alter 2004 |
| Human | | Anderson 1997; Attewell 1992; Barki et al. 1993; Bhattacherjee 2001; Cash et al. 1992; Ferratt et al. 1998; Gibson 2003; Jiang et al. 2001; Karahanna et al. 1999; Keil et al. 1998; Kling & Iacono 1989; Kukafka et al. 2003; Lapointe & Rivard 2005; Leonard-Barton 1988; Markus 1983; Miller & Sim 1994; Paré 2002, 2006; Pierce et al. 1991; Poon et al. 2004; Sicotte et al. 1998a; Srinivasan & Davis 1987 |
| Usability | | Ahmad et al. 2002; Anderson & Aydin 1997; Baccarini 2004; Davis 1989; Doolan et al. 2003; Kukafka et al. 2003; Lee et al. 1996; Overhage et al. 2001, 2005; Shu et al. 2001; Siau 2003; Sicotte et al. 1998a,b; Van Der Meijden et al. 2003; Venkatesh & Davis 2000; Weiner et al. 1999 |
| Managerial | | Ahmad et al. 2002; Jarvenpaa & Ives 1991; Kanter 1983; Sherer & Alter 2004; Zaltman et al. 1973 |
| Strategic & political | | Ferratt et al. 1996; Morrell & Ezingeard 2001; Overhage et al. 2005; Payton & Ginzberg 2001; Sherer & Alter 2004; Stead et al. 2005 |

Technological Risk

Hardware and software complexity has long been considered a critical IT project risk factor. 9–10 In the health care sector, the novelty of computer-based patient record software exacerbates the level of this risk factor. 11–13 Software complexity also refers to the interdependence of the new application and existing information systems within the organization. This risk factor is further increased in the context of a necessary network infrastructure that represents an additional layer of technological risk. Indeed, in the ICIS context, the presence of diverse and more or less compatible hardware and software systems specific to the participating organizations makes matters worse in terms of systems integration, processing speed and security. 1,2,5,6,14–15

Human Risk

Resistance to change is a phenomenon so pervasive and widely recognized that it scarcely requires documentation. 16 Previous IT research has revealed that the major concerns include the users' openness to change, users' attitudes toward the new system, 17–19 and users' expectations. 20,21 From this perspective, organizational history or memory of previous technological initiatives might affect the way a new technology is framed and hence have an influence on the extent of implementation success. 22 Also, previous IT research underlined the importance of users' computer skills and knowledge, 23,24 and user ownership. 24–26 Indeed, IT project risk increases as the project team's familiarity with hardware, operating systems, databases, application software, and communications technology decreases. 5,9,27,28 IT research specific to physicians and other health care professionals shows similar results. Physicians have long been accused of being reluctant to use computer-based information systems and several studies have shown evidence of computer resistance among physicians. 11,29–33 Therefore, physicians and other health professional users should be recognized as having a major influence on ICIS implementation risks, particularly when one takes into account the high level of responsibility and autonomy given to professionals in their practice. 11,34

Usability Risk

Perceived usefulness represents one of the most studied constructs in the IT literature. Davis (1989) defines it as the “degree to which a person believes that using a particular system would enhance his or her job performance.” 35 This risk factor should be especially important in the case of sensitive organizational activities such as clinical care. In this context, the validity and pertinence of information should be of paramount importance, as should factors such as system downtime and display speed. 12,13,36,37 In the same manner, another important factor in the literature is the extent to which the new system is perceived as user friendly. 35,38 The complexity of interface development remains one of the barriers to widespread diffusion of the computer-based patient record. 34,39 Furthermore, substantial investments in IT have delivered disappointing results, largely because organizations tend to use technology to mechanize old ways of doing business. 40,41 Simply laying technology on top of existing work processes trivializes its potential. To assure the effective use of the technology, organizational redesign must become synonymous with system deployment. The implementation of clinical information systems carries certain risks in this domain, especially if it interferes with traditional practice routines. 33,42–44 This is often the case for the implementation of electronic data entry, which often entails time-consuming work for the clinical team, particularly the physicians. 37,45–47 Thus, process redesign is a significant risk factor that should be planned with a view to offering the greatest possible added value to all users. Finally, it is important to underscore that the perceived usefulness risk needs to be assessed in terms of its alignment not only with local clinical business processes but also with interorganizational business processes. 1,2

Managerial Risk

In an ideal IT project situation, all of the necessary resources are made available as needed. These resources include not only money, but also people and equipment. 10,47,48 In this respect, selecting and assembling a group of people with enough power to lead the change effort and encouraging the group to work together as a team are two important factors exercising a high influence on the project outcome, especially in complex organizations such as health care establishments. 44 The leading group, composed of consistent and dedicated staff, must create a vision to help direct the project and develop strategies to help achieve the set goals. 44,49 From this perspective, upper executive support represents a meaningful factor that can also diminish managerial risk. 50 Associated with these elements, the time frame in which the health information project needs to be executed is also a barrier to successful implementation. 12 ICIS projects, like most large transformation ventures, risk losing momentum if there are no short-term goals to meet and celebrate. Without short-term wins, too many people might give up or actively join the ranks of those who have been resisting change. 44,49 We expect these factors to hold true in the ICIS context where several organizations need to act together.

Strategic/Political Risk

ICIS implementation projects pose numerous strategic difficulties. The collaboration of several groups of professionals and organizations is essential in a context in which the amplitude of organizational change is high and in which the demands on human and financial resources are great. Political factors arising from conflicting professional and organizational interests and objectives will tend to impede the progress of network implementation. 1,2,6,51,52 In this context, the degree of participation and adherence of each participating group and organization is influenced by the value associated with the collective project with regard to their own objectives. 14,15 A strategic stance is needed so the project may pursue common objectives. This is particularly true in a context in which antagonism or enmity may easily occur between parties as a result of misunderstandings, unanticipated changes in the scope of the project, missed or delayed delivery dates, or other disputes that may polarize partners into opposing camps. 10

Methods

Given that the implementation of interorganizational information systems is a complex process involving multiple actors and that it is influenced by events that happen unexpectedly, qualitative methods were used to identify a broad range of risk factor issues and to generate useful insights for theory building. In terms of study design, an extensive review of the IT and medical informatics literature was first conducted. This was necessary in order to elaborate a comprehensive model of project risk factors suitable for the implementation of ICIS projects. Then, in line with Eisenhardt's (1989) work, 53 these risk factors were used as a starting point to conduct a multiple-case study 54 to empirically identify risk level variations during the implementation stage and study the links of these variations with implementation success.

ICIS projects vary in scope, formality and expected outcomes. According to the Markle Foundation's Connecting for Health, 52 interorganizational networks can be differentiated by several factors outlined in Table 2, including: provider or system network, type of technology solution, and targeted users. In the present analysis, two longitudinal case studies of ICIS implementation were conducted. The first case took place over a 3-year period while the second occurred over a 2-year period. The study was approved by the appropriate institutional review boards.

Table 2. Profile of the Case Studies

| Name of project | Rainbow Network | Primary Care Network |
|---|---|---|
| Type of IT system | Regional EMR data warehouse | Regional EMR data warehouse |
| Network size | 7 organizations | 12 organizations |
| Targeted users | 39 pediatricians | 105 general practitioners |
| Project length | 40 months (ending date: June 2004) | 26 months (ending date: December 2003) |
| Total budget | 11 million $ (Cdn) | 14.8 million $ (Cdn) |
| Project success | | |
| | High | High |
| | Low | High |
| | Low | Moderate |

The first case, referred to as the Rainbow Network, consisted of an interorganizational results reporting system primarily aimed at accelerating the transmission of health data within a health information network composed of seven organizations serving a pediatric clientele in Quebec, Canada (Table 2). These organizations included a large pediatric teaching hospital, the pediatric departments of two mid-sized adult community hospitals, and four affiliated pediatric medical clinics. A total of 39 pediatricians volunteered to use the new system during a seven-month demonstration period. The second case study, referred to as the Primary Care Network, involved a similar interorganizational results reporting system, also located in Quebec, Canada (Table 2). The network consisted of ten medical clinics in which 105 GPs agreed to participate as users of the new system. IT solutions were provided by a major computer systems supplier in the first case and by a small IT firm specializing in medical informatics in the second. These systems were sub-components of larger interorganizational Electronic Medical Record (EMR) systems. More precisely, the health data exchange in both projects was limited to results reporting of laboratory tests and radiology exams. Physician ordering was planned for a subsequent implementation phase. Both technological solutions relied on a data warehouse infrastructure able to combine individual patient health data already contained in the existing legacy computer systems of the participating organizations. Several technical interfaces had to be developed to compensate for the lack of data standards between the proprietary systems and the data warehouses.

A unique network identifier was also attributed to each patient to ensure that patient matching and medical terminology used in particular organizations was recognized by the network system through the development of an interorganizational master patient index and a shared medical thesaurus. Interorganizational data exchange was technically feasible between the participating organizations because they all had access to a proprietary high-band secure intranet previously deployed by the Quebec Department of Health. The exchange of clinical data was further secured to the extent that each user needed a password and access to the system was restricted to dedicated workstations. The implementation objectives were similar in both projects: the participating physicians were expected to use the new system in their clinical practice on a regular basis.

The two ICIS projects were significant cases because of their importance and for the corresponding efforts they generated in terms of project design and implementation. Indeed, both cases consisted of the largest initiatives undertaken at the time within the Quebec Health Care System to develop integrated health care networks. The Rainbow project was financed by both the Quebec Health and Industry Departments. The relevance from the point of view of the Industry Department was to financially support an emerging growth sector of the economy, while the Health Department aimed at supporting an essential organizational model in the spirit of the reform promoting greater continuity of care. The Primary Care Network also benefited from substantial state support. Within a National Canadian initiative aimed at developing interoperable EMRs across Canada, both the federal government and Quebec's provincial authority—provincial authorities are responsible for delivering health care according to the Canadian constitution—invested in the project. Given their scale, both projects enjoyed high public visibility in the health care industry where they were perceived as major innovations. In summary, both ICISs were large-scale projects with sufficient investment in financial and human resource terms to give birth to meaningful interorganizational clinical health information network building initiatives. Additionally, a portion of each project's budget was set aside as a research grant for the academic research team, which we headed, responsible for conducting an independent and thorough implementation evaluation. As such, we had access to all of the material necessary to conduct the present cross-case analyses.

A similar data collection strategy was pursued in both cases. Multiple sources were used. First, semi-structured interviews were carried out with several users and project team members who participated in the development and implementation of the systems. A total of 52 interviews were conducted for the Rainbow Network project (27) and the Primary Care Network project (25). Second, structured questionnaires were used to measure users' attitudes toward, and satisfaction with the new system. These questionnaires were distributed to all participating users before and after system deployment (quantitative results not reported here). Third, system usage was objectively measured using the log history journal of both systems. This very robust and non-intrusive measure allowed us to identify each user and to determine the frequency and duration of each work session with the new system. Fourth, data were collected through observation during steering committee and user committee meetings. Lastly, all documents relevant to the present study, including organizational charts, administrative documents, minutes of meetings, newsletters, and training material were also collected and analyzed for both cases.
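The log-based usage measure described above can be illustrated with a short sketch. This is not the analysis code used in the study: the record layout (user identifier, login time, logout time), the function name `summarize_sessions`, and the sample rows are all assumptions introduced here to show how frequency and duration of work sessions per user might be derived from a log history journal.

```python
from collections import defaultdict
from datetime import datetime


def summarize_sessions(log_rows):
    """Count sessions and total minutes of use per user.

    log_rows is an iterable of (user_id, login, logout) tuples, with
    timestamps as ISO 8601 strings. This layout is hypothetical; the
    paper does not describe the format of the actual log journal.
    """
    totals = defaultdict(lambda: {"sessions": 0, "minutes": 0.0})
    for user_id, login, logout in log_rows:
        start = datetime.fromisoformat(login)
        end = datetime.fromisoformat(logout)
        totals[user_id]["sessions"] += 1
        totals[user_id]["minutes"] += (end - start).total_seconds() / 60
    return dict(totals)


# Made-up rows for illustration only; not data from either project.
sample_log = [
    ("md_01", "2003-05-01T09:00:00", "2003-05-01T09:12:00"),
    ("md_01", "2003-05-02T14:30:00", "2003-05-02T14:35:00"),
    ("md_02", "2003-05-01T10:00:00", "2003-05-01T10:03:00"),
]
usage = summarize_sessions(sample_log)
```

Aggregating per user rather than per organization keeps the measure non-intrusive while still allowing adoption to be compared across sites.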

We began data analysis with a thorough analysis of each case and then conducted a cross-case comparison to develop the theoretical insights used for theory building. This comparison was especially relevant given that the circumstances created a situation of contrasting cases. Indeed, the first case was considered a failure while the other led to a successful implementation, as reported in Table 2. This situation offered a unique opportunity to analyze the links between the risk factors and implementation success in a contrasting fashion. Finally, in an effort to synthesize the results of a rich qualitative analysis, we assessed the risk levels of the five dimensions of risk on a five-level scale varying from “Very Low” to “Very High.” Each of these risk levels was determined for each ICIS project at two critical moments: at project onset (the initial risk level) and at the end of the implementation phase (the final risk level). The difference between the initial and final risk levels indicates the relative performance of each network in overcoming a threat. To ensure the validity of this risk assessment, various methods were used. The diverse and rich sources of data used for our case studies allowed us to use a triangulation method in the assessment of the degree of risk. Also, the two principal investigators independently conducted a risk assessment analysis of each case. This process resulted in a few minor discrepancies that were later resolved by consensus. The results of the risk assessment were presented to, and validated by, the management team of each project at the end of the experimentation period. In this paper, we report the main elements that support the risk analysis assessment conducted in each project. The reader can judge the soundness of the risk levels attributed to each risk dimension in both cases.
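The comparison of initial and final risk levels can be sketched as a simple ordinal difference. The sketch below is illustrative only. The paper names just the endpoints of the scale ("Very Low" and "Very High"), so the intermediate labels, the numeric coding from 1 to 5, the function names, and the sample ratings are all assumptions made here and do not reproduce the study's assessments.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    # Only VERY_LOW and VERY_HIGH are named in the paper; the three
    # intermediate labels and the 1-5 coding are assumptions.
    VERY_LOW = 1
    LOW = 2
    MODERATE = 3
    HIGH = 4
    VERY_HIGH = 5


def risk_change(initial, final):
    """Final minus initial level: negative means the risk was reduced."""
    return int(final) - int(initial)


def summarize(assessment):
    """Map each risk dimension to its change between onset and end."""
    return {
        dimension: risk_change(initial, final)
        for dimension, (initial, final) in assessment.items()
    }


# Hypothetical ratings for one project, for illustration only.
example = {
    "technological": (RiskLevel.HIGH, RiskLevel.MODERATE),
    "human": (RiskLevel.MODERATE, RiskLevel.HIGH),
}
changes = summarize(example)
```

Because the scale is ordinal, the difference is best read as a direction and rough magnitude of change rather than as a precise quantity.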

Results

Prior to presenting the results of the risk analysis, the evidence that allowed us to determine the level of implementation success is presented. First, both cases can be qualified as successful in technological terms to the extent that both electronic network data transmission infrastructures were made operational and usable during the course of the implementation process. However, the level of success was very different in terms of the physicians' adoption and use. The Rainbow project failed to meet its own expectations. The use of the results reporting system was mainly limited to the teaching hospital where 13 physicians out of 22 occasionally used the new system on a weekly basis. In the other organizations, use was even more disappointing. In one of the community hospitals, only one of the physicians occasionally used the new system while none were able to use it in the other simply because the system had not been deployed in time. None of the physicians used the new system in the four clinics. In these clinics, the medical secretaries used the system but only to print the data that was, as always, filed in the paper file. In contrast, physician use of the new system was far more impressive in the Primary Care Network. In the eleventh month following deployment, 69% of the physicians were using the new system intensively. Since the end of the data collection, the evolution of both projects has been consistent with their respective initial paths. On the one hand, the Rainbow project still allows the exchange of patient information among the seven health care organizations but physicians' use remains anemic. On the other hand, the number of users in the Primary Care Network has expanded both in terms of physicians (from 105 to 130) and medical clinics (from 10 to 13).

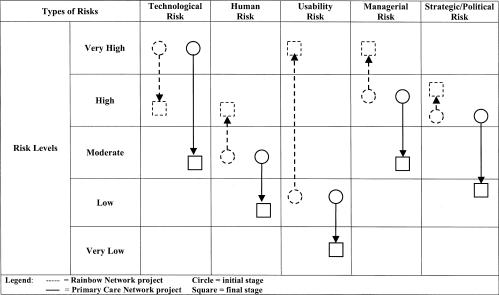

The risk analysis is summarized in Figure 1. On a five-level scale, the initial risk levels (represented by circles) and the final levels (represented by squares) for each project are shown. The difference between the initial and final risk levels indicates the relative performance of each network in overcoming its own risk.

Figure 1. Assessment of the evolution of the level of risk in terms of five dimensions of risk.

Technological Risk

As depicted in Figure 1, technical feasibility was perceived as a main challenge in both projects. The initial high level of risk was principally associated with the newness of the network software and infrastructure. At the time, interoperable EMRs were not widely used in the Canadian health care industry and network or shared EMRs were even less widespread. Several vendors offered electronic solutions but none had gained enough market success to ascertain their long-term viability. The number of failures and business bankruptcies was still high. In the same manner, the novelty of the interorganizational health information infrastructure was perceived as risky in terms of network reliability and security, especially in a context in which data integration needed to be achieved between the various existing IT systems of several organizations.

Accordingly, the largest part of the implementation effort in terms of time, money, and human resources was invested in the acquisition, parameter setup, and installation of the network solution and infrastructure. There was a clear need to hire outside IT experts to compensate for the in-house resource shortage, both in the number of IT specialists and in the level of expertise. In addition, both projects chose a technical solution intended to diminish their risks. Both systems were data warehouse solutions able to integrate the data of different systems within a single database. This technological solution thus made it possible to keep in place all the existing systems of the participating organizations. The project's overall cost and the scale of organizational change were diminished accordingly. However, this solution carried its own risk due to the lack of a common data standard that would have made possible the transfer of clinical data between the existing systems and the new network data warehouse. Because the legacy systems in place did not support HL7, a relatively new data standard created to this end, homemade technical interfaces had to be developed. This risk led to different implementation outcomes. In the Rainbow project, the complexity of the work was underestimated and the schedule did not allow enough time for its completion. The development of the technical interfaces fell behind and the project suffered a twelve-month delay. The final deadline was missed, and although the integration of clinical data was possible, the cost was high in terms of time delay, additional budget, and user perception. The last impact was especially negative in terms of physicians' adoption and use, and consequently implementation success was severely impaired.

Despite being confronted with the same level of initial risk, the Primary Care Network project made a more realistic implementation plan. Its time frame and efforts were more aligned with the complexity of the task and the technical interfaces were developed according to the planned schedule. The risk level was diminished and the users' commitment to the project remained unaffected. The implementation outcomes were accordingly more favorable in terms of physicians' adoption and use of the new system.

Network infrastructure risk was well managed in both cases. The use of external expertise, as well as the presence of a pre-existing secure and reliable telecommunication network, was helpful for conceiving and deploying the infrastructures. Indeed, an earlier massive IT investment made by the Quebec Department of Health had given them access to a proprietary high band secure intranet. Interorganizational data exchanges were thus technically feasible before the launch of both projects.

Human Risk

Resistance to change has been regularly invoked as a factor explaining electronic clinical information systems implementation difficulties among physicians. This was not the case for the projects under study, at least not at the outset. First, it must be stressed that all the physicians agreed to participate in the implementation stage on a voluntary basis. Accordingly, physicians' initial expectations and attitudes were positive toward the new system. The physicians thought that the new system was likely to offer improvements on the usual paper system. However, at the same time, they were realistic about the large efforts needed to carry out this change and their collaboration was not taken for granted. They were ready to make efforts but, at the same time, they had specific expectations about the pace of the implementation progress and the performance of the new system since they had experienced IT failures in the past. Indeed, the Rainbow Network's teaching hospital had suffered a recent unsuccessful EMR implementation, 31 while the Primary Care Network had undergone a similar failure during the implementation of a regional health smart card system. Thus, the initial level of human risk was relatively moderate in both projects to the extent that user resistance was weak and their expectations were realistic.

However, despite similar initial human risk levels, the implementation efforts were different. The Rainbow Network project's efforts were very limited in terms of relations with the system users. First, a committee of physicians was formed to analyze their information requirements, but their suggested modifications were never really taken into account. The consultation was conducted very early in the implementation process, when the physicians had only a vague vision of the new system and the context of use remained undetermined. Thus, the committee had little effect on the setup of system parameters. Second, a physician from the teaching hospital was recruited to act as a project champion. However, the strategy was unsuccessful to the extent that his influence on the physicians remained limited to his own hospital and his efforts to modify the new system were in vain. In fact, this physician acted more as a project opinion leader than a system champion.

In stark contrast, the Primary Care Network project made impressive and sustained implementation efforts to build strong and lasting relations with the physicians. In each participating clinic, a physician acted as project champion. Their responsibilities were numerous: detailed clinical information analyses; experimental use to test the system interface in laboratory settings; configuration of the new system to adapt it to their respective clinics' needs; and acting as “super users” to help their own colleagues. All these activities gave them a very good understanding of the new system, and their requests for system modifications were taken into account and often implemented. Thus, the users really influenced the implementation process.

These differences between the two projects in terms of human risk awareness and management led to opposite implementation outcomes. As shown in ▶, the Rainbow Network suffered an increase of its human risk level while the Primary Care Network succeeded in diminishing its own risk level. During the course of the implementation, the level of skepticism increased among the physicians of the Rainbow Network while the Primary Care Network physicians became increasingly confident in the success of the new system. Furthermore, several Primary Care Network physicians, namely the project champions, developed a significant sense of ownership vis-à-vis the new system. 25

Usability Risk

Initially, the awareness of the project management team regarding the importance of the usability risk was low in both projects. The physicians' perception of the usefulness of the new system was taken for granted and only the system's user friendliness was perceived as a significant risk factor. During the course of the implementation, the former became a major issue while the latter did not entail negative implementation outcomes to the extent that both systems were deemed easy to use by the physicians.

At first, the strategy to use a data warehouse type of results reporting system was a good move. The existing systems offered a way to access large amounts of electronic data, such as laboratory and radiology results. The level of usability risk was thus relatively low. But, in the course of the implementation process, the usefulness of the new system became a major issue that was managed differently for each project, resulting in an opposite variation of the level of risk. The implementation success measured in terms of physician use was severely impacted. The system's usefulness to the physicians became a critical risk in two domains: information usability and work usability. The former corresponded to the information content offered by the ICIS application. The latter concerned the alignment between the electronic system use and the physicians' work routines.

The analysis showed three dimensions belonging to information usability: data scope, quality, and critical mass. First, the reduction of the scope of available data to laboratory and radiology results was well accepted by the physicians, reducing technological risk while offering enough system usability. Despite their expectation for a more complete ICIS, the physicians accepted this solution as temporary and the level of usability risk remained low. The second issue was in relation to data quality that was of paramount importance for the physicians. Fortunately, this risk factor also remained low in the course of the projects. Once again, the choice of a data warehouse technology meant that the clinical data were directly transferred as is from the existing systems. Users' prior positive confidence in the quality of the data was transferred to the new system. It was the third dimension of information usability that made a difference. The critical mass of information was an important cause for concern given the physicians' expectations in both projects for a results reporting system that offered laboratory and radiology results for all of their clientele. In the Rainbow project, to counter external stakeholder concerns regarding confidentiality issues, it was decided to develop a state-of-the-art, stand-alone electronic system aimed at managing patient consent and ensuring information sharing across the interorganizational network. Unfortunately, the new system development incurred severe delays and the patients' consent recruitment campaign began late so too few patients had the chance to give their consent. Consequently, the number of patients for whom information was made available in the network database was not sufficient to interest the physicians.

In contrast, although the Primary Care Network was confronted with similar external stakeholder pressures regarding confidentiality issues, they kept their focus on usability and worked to improve the security functions already existing in the IT vendor system. Although the security level of their project was weaker than the one reached by the Rainbow project, their solution was quickly made operational. They obtained the patients' consent in time and consequently their network database offered information for almost all of the patients. The physicians using the new system were thus sure to have the information they were looking for.

The second usability risk issue that had a significant impact concerned differences between the physicians' usual work routines and the new ones introduced by the use of the results reporting system. The Rainbow Network project adopted a minimalist implementation strategy and decided to simply ask the physicians to use the new system without giving them any additional support. All of the existing business processes supporting the patient paper system were left in place. It was a risky strategy to the extent that it remained much easier and less time consuming for the physicians to use the old paper system, particularly since it offered all of the information for all patients. In this manner, the low level of perceived usefulness both in terms of information usability and work usability fuelled an anemic use of the new system.

In contrast, the Primary Care Network project team was more proactive in its implementation efforts. Even before its deployment, the application's usability was tested among future users in a simulated environment. These tests led to several corrections to the software in order to better satisfy users. A similar proactive approach was adopted at the very beginning of the implementation to quickly solve a problem of response time. Poor response time was a serious usability risk that had a negative impact on the time spent by physicians using the new system. The project team made intensive efforts to remedy the situation with such actions as software optimization, upgrading clinical information systems, and even the replacement of the network server. In fact, the project management team acknowledged throughout the implementation that the usability problem was unbearable from the users' point of view and that a solution needed to be found at all costs. Accordingly, significant modifications were made to the application to render it more usable and user friendly. In this manner, the usability risk was largely overcome, and its level decreased during the implementation process. Despite the implementation difficulties, physicians continued to participate because they saw that no efforts were being spared to find solutions to their problems.

In summary, the initial level of the usability risk was similar in the two projects but a different attitude toward risk management during implementation affected the outcome. In fact, the two projects resulted in different implementation outcomes in large part due to contrasting achievements in usability risk management.

Managerial Risk

So far, the analysis has shown that ICIS projects' risk levels evolve in accordance with the project management teams' attitudes and actions towards risk. The quality of the management team is thus a potential risk in itself. It needs to be taken into account if one wants to conduct a thorough analysis of risk.

The analysis showed that the managerial risk was initially high in both cases. Three risk factors were mainly at play: team size, variety of expertise, and time constraints. The comparison of the two cases revealed that these factors interacted with one another during the implementation process and were significant in affecting the levels of project risk and, consequently, the implementation issues. On the one hand, the Rainbow Network's managerial risk increased during the implementation process because its project management team, in comparison with the Primary Care Network team, was smaller, possessed less expertise in terms of both IT and project management, and had far less time to devote to the management of the project. On the other hand, the Primary Care Network's managerial efforts were more intensive and consequently more responsive to project risk. Its project management team was larger and composed of full-time employees dedicated to the project who offered broad IT and project management expertise.

These differences in the composition and time availability of the project management team explained the imbalance in terms of implementation efforts observed between the two projects. The more homogeneous composition of the Rainbow Network team diminished the depth of its risk awareness. Because of its smaller size, the team's efforts were monopolized by technological problems at the expense of other important risks, namely the human and usability risks. Also, its small size made it vulnerable to changes in personnel. Accordingly, the departure of a key team member midway through the project severely disrupted the project's implementation.

The analysis also revealed two other factors that caused an increase in the level of managerial risk. From the beginning, the schedule and budget for both projects were very tight, especially considering the inherent complexity of implementing first-time IT projects aimed at multiple organizations.

Strategic/Political Risk

While the installation of an information network infrastructure is considered a technological risk, the development of collaborative relations between several organizations is considered a strategic/political risk. From the start, the two projects were considered high-risk. The analysis showed that it was the network's diverse composition rather than its size that affected risk levels. Due to the experimental nature of both projects, the number of organizations was rather small and quite manageable. However, network homogeneity made a significant difference between the two cases. The Rainbow Network was more heterogeneous in terms of organizational size and mission. The network brought together a large teaching hospital and small clinics that exclusively treated children and two community hospitals that principally treated adult patients. In comparison, the Primary Care Network, composed solely of GPs, was far more homogeneous. Further, the Rainbow Network organizations were spread across three different health regions while the Primary Care Network brought together GPs practicing in the same region, who were members of the same regional chapter of the GPs' national professional association. In summary, differences were far less reconcilable in the Rainbow Network than in the Primary Care Network. Thus, network cohesion and consequently implementation outcomes were impacted. The level of strategic/political risk remained high in the Rainbow Network while the Primary Care Network managed to diminish this risk over time as shown in ▶.

Discussion

The study findings add several new perspectives and practical insights to the existing body of knowledge in the field of medical informatics. First, the analysis shows that project risk analysis is a helpful tool to assess this type of project. The validity of the proposed framework appeared very robust for each of the two cases studied. The study framework was composed of five risk dimensions and was adequate to conduct a thorough analysis of risk. The analysis did not reveal any phenomena associated with project risk that we could not classify within the typology. The list was complete while remaining parsimonious. In addition, the specificity of the proposed factors was satisfactory. The risk factors were both specific and easily distinguishable from one another.

Secondly, the presence of several types of risk shows that project risk analysis is fundamentally multidimensional. This supports a finding regularly identified in the IS literature. 8,17,34 The framework strengthened the stance that a deterministic view of technology as the main and only driving force behind change fails to grasp the complex reality of an IT project. The risk analysis showed that the technological aspect covers only one dimension of project risk. The four other risk dimensions are essential to understanding the dynamics of implementation. In the same manner, the results also clearly demonstrate that a thorough analysis of risk is helpful to account for human factors that will affect implementation. The impact of the usability risk in both projects with respect to implementation success underscores this result. Although it is true that the success of an implementation process lies in the availability of a sound and effective technology, this dimension alone is insufficient. The other risk dimensions are equally important. In other words, even if controlling technological risk remains an essential condition for success, it is insufficient on its own.

In this manner, the importance given to the other risk dimensions allows for a more thorough analysis and makes it possible to clearly establish the necessary corrective actions. From this perspective, we believe that a thorough analysis allows better distribution of the implementation efforts. To be successful, it is important that these efforts be distributed proportionally according to the importance of each of the risk factors. Furthermore, because the risks evolve dynamically, there is a need for high responsiveness to emerging implementation problems. Implementation success lies in the ability of the project management team to be aware of and to manage several possible threats simultaneously and coherently since they evolve dynamically through time and interact with one another. Thus, to be successful, the project management team needs to demonstrate flexibility in implementation management.

The research results also show that project risk analysis is a helpful tool for increasing the level of implementation success. In terms of practical insights, the results suggest that successful ICIS implementations require a proactive stance where the implementation risks must be anticipated as early as possible. To do so, an analysis of the implementation situation at the start of the project should help in the anticipation and clear identification of the upcoming challenges. Furthermore, risk factors are dynamic and evolve over time. Accordingly, the risk analysis should be dynamic and constant throughout the implementation stage. Health care managers could use the multi-level risk framework presented in this study as a checklist to guide their assessment of the ICIS implementation scenario. A comprehensive vision of the entire project is needed. The involvement of key actors with complementary knowledge and expertise who are fully dedicated to the project is of key importance. The management team's challenge is to preserve the fundamental characteristics of the project while being flexible and innovative so as to reconcile multiple and divergent issues emerging at various stages of project implementation. It may be tempting to suggest that all of the implementation risks encountered reflect a failure to plan properly for the implementation of change. However, many examples have shown that organizations that rely solely on prepared, detailed plans still fail. The findings suggest that a good plan should start with the challenges or risks to be overcome, rather than with the actions to be taken and the decisions to be made. In other words, before thinking about how to implement such systems, it is important to spend some time analyzing the existing situation, which dictates the issues to be addressed and risks to be surmounted.

Lastly, the analysis of both cases also showed some interdependence between the risk factors, since ripple effects from one risk factor to another were observed. For instance, the technological, human, and usability risks appeared especially prone to closely interact. This suggests that risk dimension interdependence needs to be more thoroughly investigated. While such an analysis is outside the scope of this paper, we firmly believe that a next step would be to develop a process theory of IT risk management. We encourage researchers interested in this phenomenon to pursue their efforts in this direction.

Conclusion

ICISs make it possible to store and retrieve patient medical information in ways that were previously not feasible. 1,2,6,14 They are an important tool for helping a community to improve continuity of care while controlling costs. However, the risks to the development and acceptance of interorganizational systems are significant, as shown in the cases reported here. In short, health care organizations participating in such complex projects should assess their readiness for data sharing by conducting a rigorous review of their technological, human, and usability capabilities. They must also evaluate the availability of local leadership to spearhead the effort and the anticipated difficulties of securing cooperation among institutions. We firmly believe that each of these elements represents a critical success factor in building and managing ICIS projects.

Clearly, additional case studies must be conducted to increase the validity of the findings of this study. Future research efforts should be pursued in order to explain how risk factors interact and how they influence ICIS project outcomes. Although managers and clinicians in other communities can learn from the experiences described above, they should carefully consider how their own reality differs from the one presented here. Other researchers could build upon the risk framework developed in this study in order to help community leaders and managers better understand the risks they will face and the steps they will need to take in order to deploy successful ICISs.

Footnotes

The Canadian Institutes of Health Research and the Social Sciences and Humanities Research Council of Canada are gratefully acknowledged for providing financial support for this research project.

References

- 1. Starr P. Smart technology, stunted policy: developing health information networks. Health Affairs 1997;16,3:91-105.

- 2. Overhage JM, Evans L, Marchibroda J. Communities' readiness for health information exchange: The national landscape in 2004. J Am Med Inform Assoc 2005;12,2:107-112.

- 3. Canada Health Infoway. Canada Health Infoway's 10-Year Investment Strategy: Pan-Canadian Electronic Health Record. 2005. Available at: http://www.infoway-inforoute.ca/en/resourcecenter/resourcecenter.aspx. Accessed July 27th 2006.

- 4. Wakerly R. Open systems drive health information networks. Computers in Healthcare 1993;14,3:30-35.

- 5. Ferratt TW, Lederer AL, Hall SR, Krella JM. Surmounting health information network barriers: The greater Dayton area experience. Health Care Management Review 1998;23,1:70-76.

- 6. Payton FC, Ginzberg MJ. Interorganisational health care systems implementations: An exploratory study of early electronic commerce initiatives. Health Care Management Review 2001;26,2:20-32.

- 7. Pemble KR. Regional health information networks – The Wisconsin health information network: A case study. Proc Annu Symp Comput Appl Med Care 1994(Suppl):401-405.

- 8. Alter S, Sherer SA. A general, but readily adaptable model of information system risk. Comm AIS 2004;14:1-28.

- 9. Barki H, Rivard S, Talbot J. Toward an assessment of software development risk. J Manag Inform Syst 1993;10,2:203-225.

- 10. Sherer SA, Alter S. Information systems risks and risk factors: Are they mostly about information systems? Comm AIS 2004;14:29-64.

- 11. Miller RH, Sim I. Physicians' use of electronic medical records: Barriers and solutions. Health Affairs 2004;23,2:116-126.

- 12. Ahmad A, Teater P, Bentley TD, et al. Key attributes of a successful physician order entry system implementation in a multi-hospital environment. J Am Med Inform Assoc 2002;9:16-24.

- 13. Doolan DF, Bates DW, James BC. The use of computers for clinical care: A case series of advanced U.S. sites. J Am Med Inform Assoc 2003;10,1:94-107.

- 14. Ferratt TW, Lederer AL, Hall SR, Krella JM. Swords and Plowshares: Information Technology for Collaborative Advantage. Inform Manag 1996;30,3:131-142.

- 15. Morrell M, Ezingeard JN. Revisiting Adoption Factors of Interorganisational Information Systems in SMEs. Log Inform Manag 2001;15,1/2:46-57.

- 16. Markus ML. Power, Politics, and MIS Implementation. Comm ACM 1983;26,6:430-444.

- 17. Gibson CF. IT-Enabled Business Change: An Approach to Understanding and Managing Risk. MIS Quart Exec 2003;2,2:104-115.

- 18. Jiang J, Klein G, Discenza R. Information System Success as Impacted by Risks and Development Strategies. IEEE Trans Eng Manag 2001;48,1:46-55.

- 19. Karahanna E, Straub DW, Chervany NL. Information Technology Adoption across Time: A Cross-Sectional Comparison of Pre-Adoption and Post-Adoption Beliefs. MIS Quart 1999;23,2:183-213.

- 20. Bhattacherjee A. Understanding Information Systems Continuance: an Expectation-confirmation Model. MIS Quart 2001;25,3:351-370.

- 21. Srinivasan A, Davis JG. A Reassessment of Implementation Process Models. Interfaces 1987;17,3:64-71.

- 22. Kling R, Iacono S. The Institutional Character of Computerised Information Systems. Technol People 1989;5,1:7-28.

- 23. Attewell P. Technology Diffusion and Organizational Learning: The Case of Business Computing. Org Sci 1992;3:1-19.

- 24. Leonard-Barton D. Implementation and Mutual Adaptation of Technology and Organization. Res Pol 1988;17,5:1-17.

- 25. Paré G, Sicotte C, Jacques H. The Effects of Creating Psychological Ownership on Physicians' Acceptance of Clinical Information Systems. J Am Med Inform Assoc 2006;13,2:197-205.

- 26. Pierce JL, Rubenfeld SA, Morgan S. Employee Ownership: A Conceptual Model of Process and Effects. Acad Manag Rev 1991;16:121-144.

- 27. Cash JI, McFarlan FW, McKenney JL, Applegate LM. Corporate Information Systems Management. 3rd edition. Homewood, IL: Irwin; 1992.

- 28. Keil M, Cule P, Lyytinen K, Schmidt R. A Framework for Identifying Software Project Risks. Comm ACM 1998;41,11:76-83.

- 29. Anderson JG. Clearing the Way for Physicians' Use of Clinical Information Systems. Comm ACM 1997;40,8:83-90.

- 30. Paré G. Implementing Clinical Information Systems: A Multiple-case Study within a US Hospital. Health Serv Manag Res 2002;15:1-22.

- 31. Poon EG, Blumenthal D, Jaggi T, et al. Overcoming Barriers to Adopting and Implementing Computerized Physician Order Entry Systems in U.S. Hospitals. Health Affairs 2004;23,4:184-189.

- 32. Lapointe L, Rivard S. A Model of Resistance to Information Technology Implementation. MIS Quart 2005;29,3:461-491.

- 33. Sicotte C, Denis JL, Lehoux P, Champagne F. The Computer Based Patient Record Challenges Towards Timeless and Spaceless Medical Practice. J Med Syst 1998;22,4:237-256.

- 34. Kukafka R, Johnson SB, Linfante A, Allegrante JPM. Grounding a New Information Technology Implementation Framework in Behavioural Science: a Systematic Analysis of the Literature on IT Use. J Biomed Inform 2003;36:218-227.

- 35. Davis FD. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Quart 1989;13,3:319-340.

- 36. Lee F, Teich JM, Spurr CD, Bates DW. Implementation of Physician Order Entry: User Satisfaction and Self-reported Usage Patterns. J Am Med Inform Assoc 1996;3:42-55.

- 37. Overhage JM, Perkins S, Tierney WM, McDonald CJ. Controlled Trial of Direct Physician Order Entry: Effects on Physicians' Time Utilization in Ambulatory Care Internal Medicine Practices. J Am Med Inform Assoc 2001;8,4:361-371.

- 38. Venkatesh V, Davis FD. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Manag Sci 2000;46,2:186-204.

- 39. Van Der Meijden MJ, Tange HJ, Troost J, Hasman A. Determinants of Success of Inpatient Clinical Information Systems: a Literature Review. J Am Med Inform Assoc 2003;10,3:235-243.

- 40. Baccarini D, Salm G, Love ED. Management of Risks in Information Technology Projects. Industl Manag Data Syst 2004;104,3/4:286-295.

- 41. Siau K. Interorganizational Systems and Competitive Advantages: Lessons from History. J Comp Inform Syst 2003;44,1:33-39.

- 42. Anderson JG, Aydin CE. Evaluating the Impact of Health Care Information Systems. Int J Technol Assess Health Care 1997;13,2:380-393.

- 43. Sicotte C, Denis JL, Lehoux P. The Computer Based Patient Record: A Strategic Issue in Process Innovation. J Med Syst 1998;22,6:431-443.

- 44. e-Health Initiative and Foundation. Lessons learned from the Nine Communities Funded through the Connecting Communities for Better Health Program. 2006; 33 pages.

- 45. Shu K, Boyle D, Spurr C, Horsky J, Heiman H, O'Connor P, Lepore J, Bates DW. Comparison of Time Spent Writing Orders on Paper with Computerised Physician Order Entry. In Patel V, et al. (eds) Medinfo 2001. Amsterdam: IOS Press.

- 46. Weiner M, Gress T, Thiemann DR, Jenckes M, Reel SL, Mandell SF, Bass EB. Contrasting Views of Physicians and Nurses about an Inpatient Computer-based Provider Order-entry System. J Am Med Inform Assoc 1999;6,3:234-244.

- 47. Kanter RM. The Change Masters. New York: Simon and Schuster Inc; 1983.

- 48. Zaltman G, Duncan R, Holbek J. Innovations and Organizations. New York: John Wiley; 1973.

- 49. Kotter JP. Leading Change: Why Transformation Efforts Fail. Harv Bus Rev 1995;Mar/Apr:59-67.

- 50. Jarvenpaa SL, Ives B. Executive Involvement and Participation in the Management of Information Technology. MIS Quart 1991;15,2:205-228.

- 51. Stead WW, Kelly BJ, Kolodner RM. Achievable Steps toward Building a National Health Information Infrastructure in the United States. J Am Med Inform Assoc 2005;12,2:113-120.

- 52. Markle Foundation's Connecting for Health. Financial, Legal and Organizational Approaches to Achieving Electronic Connectivity in Healthcare. 2004. October, 87 pages.

- 53. Eisenhardt KM. Building Theories from Case Study Research. Acad Manag Rev 1989;14,4:532-550.

- 54. Yin RK. Case Study Research, Design and Methods. Beverly Hills, CA: Sage Publications; 2003.