Short abstract

Evidence based medicine insists on rigorous standards to appraise clinical interventions. Failure to apply the same rules to its own tools could be equally damaging.

The advent of evidence based medicine has generated considerable interest in developing and applying methods that can improve the appraisal and synthesis of data from diverse studies. Some methods have become an integral part of systematic reviews and meta-analyses, with reviewers, editors, instructional handbooks, and guidelines encouraging their routine inclusion. However, the evidence for using these methods is sometimes lacking, as the reliance on funnel plots shows.

What is a funnel plot?

The funnel plot is a scatter plot of the component studies in a meta-analysis, with the treatment effect on the horizontal axis and some measure of weight, such as the inverse variance, the standard error, or the sample size, on the vertical axis. Light and Pillemer proposed in 1984: “If all studies come from a single underlying population, this graph should look like a funnel, with the effect sizes homing in on the true underlying value as n increases. [If there is publication bias] there should be a bite out of the funnel.”1 Many meta-analyses display funnel plots or apply various tests of funnel plot asymmetry, and then interpret the results directly as evidence for or against the presence of publication bias.
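To make the definition concrete, here is a minimal Python sketch that turns two-arm trial counts into funnel plot coordinates, with the log odds ratio on the horizontal axis and precision (1/SE) on the vertical axis. The `Study` class, the example counts, and the 0.5 continuity correction for zero cells are illustrative assumptions rather than choices made in this article; drawing the points is left to any plotting library.

```python
import math
from dataclasses import dataclass


@dataclass
class Study:
    """Hypothetical two-arm trial summarised as a 2x2 table."""
    events_treated: int
    n_treated: int
    events_control: int
    n_control: int


def log_odds_ratio(study):
    """Return (log odds ratio, standard error) using Woolf's formula.

    If any cell is zero, 0.5 is added to every cell (one common
    continuity correction, assumed here; others exist)."""
    a = study.events_treated
    b = study.n_treated - study.events_treated
    c = study.events_control
    d = study.n_control - study.events_control
    if 0 in (a, b, c, d):
        a, b, c, d = a + 0.5, b + 0.5, c + 0.5, d + 0.5
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return log_or, se


def funnel_points(studies):
    """One (x, y) point per study: x = log odds ratio, y = precision (1/SE)."""
    points = []
    for study in studies:
        log_or, se = log_odds_ratio(study)
        points.append((log_or, 1 / se))
    return points


# Invented counts, for illustration only
studies = [Study(10, 100, 20, 100), Study(40, 500, 60, 500), Study(0, 30, 3, 30)]
for x, y in funnel_points(studies):
    print(f"log OR = {x:+.3f}, precision = {y:.2f}")
```

Swapping `1 / se` for `se`, `1 / se ** 2`, or the total sample size gives the other vertical axes mentioned above.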

The plot's wide popularity followed an article published in the BMJ in 1997.2 That pivotal article has already received over 800 citations (as of December 2005) in the Web of Science. With two exceptions, this is more citations than for any other paper published by the BMJ in the past decade. The authors were careful to state many reasons why funnel plot asymmetry may not necessarily reflect publication bias. However, apparently many readers did not go beyond the title of “Bias in meta-analysis detected by a simple, graphical test.”

The influential Cochrane Handbook adopts a relatively conservative view and acknowledges that there are problems with the concept.3 Yet it devotes more than four pages to this subject, far more than for any other test of bias and heterogeneity in meta-analysis. Whereas the widely accepted quality of reporting of meta-analysis (QUOROM) statement simply requires in its proposed checklist a description of “any assessment for publication bias,”4 its equally accepted counterpart for meta-analyses of observational studies in epidemiology (MOOSE) states that “methods should be used to aid in the detection of publication bias, eg, fail safe methods or funnel plots.”5 In an article on quantitative synthesis in systematic reviews commissioned by the American College of Physicians, even we advocated funnel plots and devoted a figure and considerable text to them.6

Use and abuse

Hand searching of the BMJ from July 2003 to June 2005 shows that funnel plots were mentioned in 20 of the 47 systematic reviews that included some quantitative data synthesis (see bmj.com for full details). In all 20 cases, the plots were mentioned specifically as tests for evaluating publication bias. Four of the 20 systematic reviews eventually did not perform these tests because they felt too few studies were available (maximum 3 to 10 per meta-analysis), one made no further mention besides the methods, and only one performed the tests and acknowledged that “the funnel plot may not detect publication bias when the number of studies is small.” The other 14 systematic reviews did not question the inferences from these tests and typically made categorical statements about conclusively finding or excluding publication bias with these methods.

A total of 34 meta-analyses had been evaluated with these methods: 14 of them had nine or fewer studies and 18 of them had significant between-study heterogeneity; only five of the 34 meta-analyses had 10 or more studies and no significant between-study heterogeneity. Although 10 studies is not an adequate number for the funnel plot,7 we chose it as a cut-off to show that systematic reviewers did not meet even this liberal criterion.
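The screening rule used above (at least 10 studies and no significant between-study heterogeneity) can be written down explicitly. This sketch computes Cochran's Q under fixed effect, inverse-variance weighting and its chi-square p-value using only the standard library. The significance level of 0.10 is an assumption (a common convention for the low-powered Q test), since the text does not state the threshold; the function names are hypothetical.

```python
import math


def cochran_q(effects, ses):
    """Cochran's Q statistic under fixed effect, inverse-variance weighting."""
    weights = [1 / se ** 2 for se in ses]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))


def chi2_sf(x, df):
    """Upper-tail chi-square probability using only the standard library.

    Uses the series for the regularised lower incomplete gamma function
    P(df/2, x/2); adequate for moderate x (very large x can overflow)."""
    if x <= 0:
        return 1.0
    a, z = df / 2, x / 2
    term = total = 1 / a
    n = 1
    while term > 1e-15 * total:
        term *= z / (a + n)
        total += term
        n += 1
    lower = total * math.exp(-z + a * math.log(z) - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def meets_liberal_criterion(effects, ses, alpha=0.10):
    """True if there are at least 10 studies and Q is not significant at alpha.

    alpha = 0.10 is an assumed convention, not a value stated in the text."""
    k = len(effects)
    if k < 10:
        return False
    return chi2_sf(cochran_q(effects, ses), k - 1) >= alpha


# Hypothetical meta-analyses: identical effects vs effects alternating in sign
homogeneous = meets_liberal_criterion([0.2] * 10, [0.3] * 10)
heterogeneous = meets_liberal_criterion([1.0, -1.0] * 5, [0.5] * 10)
print(homogeneous, heterogeneous)
```

A non-significant Q is weak reassurance when studies are few, which is part of why the cut-off is described as liberal.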

Interpretations were notably inconsistent between different tests in the same meta-analysis. For example, in a meta-analysis of breastfeeding and blood pressure in later life,w3 the results said: “evidence of such [publication bias] was provided by a funnel plot. The Egger test was significant (P = 0.033), but not the Begg test (P = 0.186)” and a figure shows “Begg's funnel plot (pseudo 95% confidence limits).” Interpretations were inconsistent even for the same test between the results and the discussion. For example, in a meta-analysis of metformin for polycystic ovary syndrome,w4 the results stated that “the funnel plot implies publication bias” whereas the discussion concluded that “these data seem robust with no evidence of major publication bias.”
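The Begg test quoted above is the rank correlation test of Begg and Mazumdar (reference 13). A minimal sketch of its core idea follows: standardise each effect against the inverse-variance pooled estimate, then compute Kendall's tau between these standardised deviates and the variances, using a normal approximation for the p-value. This version omits the tie correction and is an illustration under those simplifications, not a validated implementation. Because it works on ranks whereas Egger's test fits a regression, the two tests can disagree on the same data.

```python
import math


def begg_test(effects, variances):
    """Begg and Mazumdar rank correlation test (sketch, no tie correction).

    Returns (Kendall tau, z, two-sided p) for the association between
    standardised effects and their variances."""
    k = len(effects)
    weights = [1 / v for v in variances]
    pooled = sum(w * t for w, t in zip(weights, effects)) / sum(weights)
    var_pooled = 1 / sum(weights)
    # Var(t_i - pooled) = v_i - var_pooled under the fixed effect model
    deviates = [(t - pooled) / math.sqrt(v - var_pooled)
                for t, v in zip(effects, variances)]
    s = 0  # concordant minus discordant pairs; tied pairs add nothing
    for i in range(k):
        for j in range(i + 1, k):
            prod = (deviates[i] - deviates[j]) * (variances[i] - variances[j])
            s += (prod > 0) - (prod < 0)
    tau = s / (k * (k - 1) / 2)
    z = s / math.sqrt(k * (k - 1) * (2 * k + 5) / 18)  # normal approximation
    p = math.erfc(abs(z) / math.sqrt(2))                # two-sided p-value
    return tau, z, p


# Invented data in which larger variances go with larger effects
tau, z, p = begg_test([0.0, 0.1, 0.2, 0.3, 0.4], [0.01, 0.04, 0.09, 0.16, 0.25])
print(f"tau = {tau:.2f}, z = {z:.2f}, p = {p:.3f}")
```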

Accuracy of test

The evaluation of a methodological test is directly analogous to the evaluation of a clinical diagnostic test. Fryback and Thornbury have proposed a six level model for evaluating a diagnostic test,8 which provides a useful framework for discussion. The six expectations of a clinical diagnostic test are technical feasibility, diagnostic accuracy, diagnostic effect, treatment effect, effect on patient outcome, and societal effect. If the conclusions of evidence based medicine rest on poor tests, the eventual harm may be considerable. At a minimum, therefore, we must closely examine the technical feasibility and diagnostic accuracy of these methods.
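The diagnostic accuracy level can be summarised with the usual 2x2 measures. The sketch below presupposes a reference standard for whether bias is truly present (for example, the known status of simulated meta-analyses), which real data usually lack; the function name and all counts are hypothetical.

```python
def diagnostic_accuracy(tp, fp, fn, tn):
    """Accuracy summaries of a bias test against a reference standard.

    tp: test says "bias" and bias truly present; fp: says "bias" but absent;
    fn: says "no bias" but present; tn: says "no bias" and absent."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    youden_j = sensitivity + specificity - 1  # 0 for a test no better than chance
    return {"sensitivity": sensitivity, "specificity": specificity,
            "accuracy": accuracy, "youden_j": youden_j}


# Hypothetical counts: a "test" that flags half of biased and half of
# unbiased meta-analyses performs exactly at chance.
print(diagnostic_accuracy(tp=25, fp=25, fn=25, tn=25))
```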

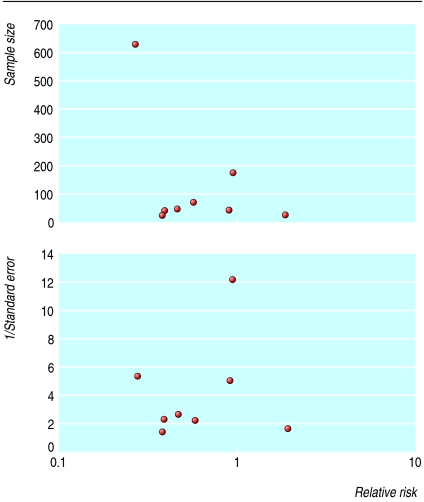

An evaluation of the technical feasibility of the funnel plot shows many problems that are difficult to solve. Strong empirical evidence exists that the appearance of the plot may be affected by the choice of the coding of the outcome (binary versus continuous),9 the choice of the metric (risk ratio, odds ratio, or logarithms thereof), and the choice of the weight on the vertical axis (inverse variance, inverse standard error, sample size, etc).10,11 Figure 1 gives an example of how these choices can make a difference.

Fig 1.

Effect of measure of precision (plotted on y axis) on appearance of funnel plots. Redrawn from Tang and Liu11
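The point about the vertical axis can be reproduced with simple arithmetic. In the hypothetical pair of trials below (counts invented for illustration, Woolf variance of the log odds ratio, no zero cells assumed), a large trial with rare events outranks a small trial with common events on sample size but not on inverse variance, so the two studies swap vertical positions depending on which axis is chosen.

```python
import math


def axis_values(events_t, n_t, events_c, n_c):
    """Candidate vertical-axis values for one study's log odds ratio."""
    a, b = events_t, n_t - events_t
    c, d = events_c, n_c - events_c
    var = 1 / a + 1 / b + 1 / c + 1 / d  # Woolf variance; assumes no zero cells
    se = math.sqrt(var)
    return {
        "sample size": n_t + n_c,
        "standard error": se,
        "inverse SE": 1 / se,
        "inverse variance": 1 / var,
    }


# Hypothetical studies: large trial with rare events vs small trial with common events
large_rare = axis_values(8, 1000, 10, 1000)
small_common = axis_values(50, 200, 60, 200)
for axis in ("sample size", "inverse variance"):
    higher = "large_rare" if large_rare[axis] > small_common[axis] else "small_common"
    print(f"{axis}: {higher} plotted as more precise")
```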

Even in the unlikely event that agreement is reached on what metric and what expression of weight to use on the axes, enormous uncertainty and subjectivity remain in the visual interpretation of the same plot by different researchers. Our team recently designed a survey to examine this question using simulated plots with or without publication bias.12 The ability of researchers to identify publication bias using a funnel plot was practically identical to chance (53% accuracy).

Formal statistical tests may eliminate the subjectivity in visual inspection of asymmetry. Investigators commonly use the rank correlation test13 or one of many tests based on regression.2,7,10,11,14 The validity of these tests depends on assumptions often unmet in practice, however, and the choice of test introduces further subjectivity into the procedure. The methods theoretically require a considerable number of available studies, generally at least 30 for sufficient power. But the number needed depends on the size of the studies and on the true treatment effect—for example, for an odds ratio of 0.67, even 60 studies are not adequate.7 Most meta-analyses of clinical trials, however, have far fewer studies. For instance, the average Cochrane meta-analysis has fewer than 10.15 Thus the tests typically have low power16 and may be inappropriate.
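For the regression family, a minimal sketch of the basic Egger test (reference 2) is shown below: the standard normal deviate (effect divided by its SE) is regressed on precision (1/SE) by ordinary least squares, and an intercept far from zero is read as asymmetry. The example data are invented, with an intercept of 2 built in. The sketch returns only the t statistic; a p-value would come from a t distribution with k - 2 degrees of freedom (for example `scipy.stats.t.sf`, if SciPy is available, which is not used here). It also assumes the points do not lie exactly on a line, otherwise the intercept's standard error is zero.

```python
import math


def egger_test(effects, ses):
    """Basic Egger regression test (sketch).

    Ordinary least squares of the standard normal deviate (effect / SE)
    on precision (1 / SE). Returns (intercept, SE of intercept, t, df)."""
    k = len(effects)
    if k < 3:
        raise ValueError("need at least three studies")
    y = [e / s for e, s in zip(effects, ses)]  # standard normal deviates
    x = [1 / s for s in ses]                    # precision
    mean_x, mean_y = sum(x) / k, sum(y) / k
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residuals = [yi - intercept - slope * xi for xi, yi in zip(x, y)]
    sigma2 = sum(r * r for r in residuals) / (k - 2)  # residual variance
    se_intercept = math.sqrt(sigma2 * (1 / k + mean_x ** 2 / sxx))
    df = k - 2
    return intercept, se_intercept, intercept / se_intercept, df


# Hypothetical data: deviate = 2 + 0.5 * precision + scatter uncorrelated with precision
ses = [1.0, 0.5, 1 / 3, 0.25, 0.2]
noise = [1.0, -2.0, 0.0, 2.0, -1.0]
effects = [(2.0 + 0.5 / s + r) * s for s, r in zip(ses, noise)]
intercept, se_int, t, df = egger_test(effects, ses)
print(f"intercept = {intercept:.2f}, t = {t:.2f} on {df} df")
```

With only five studies the built-in intercept of 2 gives t of about 1.04, far from significance, which illustrates the power problem described above.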

Even ignoring statistical concerns of power and choice of metric and weights, it is still unclear if funnel plots really diagnose publication bias. Strictly speaking, funnel plots probe whether studies with little precision (small studies) give different results from studies with greater precision (larger studies). Asymmetry in the funnel plot may therefore result not from a systematic under-reporting of negative trials but from an essential difference between smaller and larger studies that arises from inherent between-study heterogeneity. For example, small studies may focus on high risk patients, for whom the treatment is more effective because such patients have more events that could potentially be prevented17; or studies with small weight may generally have shorter follow-up and differ because the treatment effect decreases with time.18 Early studies may target different populations (with different effect sizes) than subsequent studies,19 and subsequent studies may be much larger, trying to test the concept on less selected patients. Variation in quality can affect the shape of the funnel plot, with smaller, lower quality studies showing greater benefit of treatment.20
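The high risk example can be made concrete with a hypothetical calculation. Assume every trial has the same relative risk (0.75, an invented value), that small trials enrol patients with a 40% control event rate and large trials patients with a 10% rate, and that no trial is suppressed. On the risk difference scale, the small, imprecise trials then show a larger absolute benefit purely because their patients have more events to prevent. Expected values are used and sampling error is ignored, so this is an idealised sketch rather than a simulation.

```python
import math

RELATIVE_RISK = 0.75  # hypothetical: identical relative effect in every trial


def risk_difference(control_risk, n_per_arm, rr=RELATIVE_RISK):
    """Expected risk difference and its SE when the relative risk is constant."""
    treated_risk = control_risk * rr
    rd = treated_risk - control_risk
    se = math.sqrt(treated_risk * (1 - treated_risk) / n_per_arm
                   + control_risk * (1 - control_risk) / n_per_arm)
    return rd, se


# Hypothetical design: small trials enrol high risk patients, large trials
# low risk ones. Every trial is "published"; nothing is suppressed.
trials = [(0.40, 50), (0.40, 80), (0.10, 1000), (0.10, 2000)]
for control_risk, n in trials:
    rd, se = risk_difference(control_risk, n)
    print(f"n={n:5d} per arm  SE={se:.3f}  risk difference={rd:+.3f}")
```

With risk difference on the horizontal axis, the small trials sit further toward benefit, the pattern usually read as a bite caused by publication bias. On the relative risk scale the same four trials are perfectly symmetric by construction.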

Summary points

Methods used by evidence based medicine should be evaluated with rigorous standards

The funnel plot is widely used in systematic reviews and meta-analyses as a test for publication bias

Asymmetry of the funnel plot, either visually interpreted or statistically tested, does not accurately predict publication bias

Inappropriate or misleading use of funnel plot tests may do more harm than good

Heterogeneity may sometimes be both statistically and clinically obvious—that is, studies may be examining different questions.21 Yet the authors of a meta-analysis, such as the one investigating the relation between garlic consumption and cancer,21 may still pool all studies together when it comes to the funnel plot, even though they have analysed them separately for the main analysis. In other cases, it may not be possible to identify a source for the existing heterogeneity.22 Simulation studies of funnel plots have found that bias may be incorrectly inferred if studies are heterogeneous.21,23

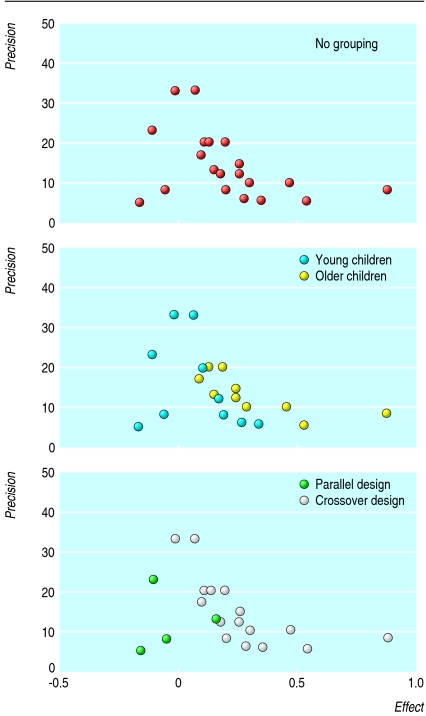

For example, figure 2 shows the funnel plot for a meta-analysis of inhaled disodium cromoglicate as maintenance therapy in children with asthma.24 The authors found both statistical and clinical heterogeneity, yet they published a funnel plot (fig 2, top), stating: “Studies with low precision and negative outcome are under-represented, indicating publication bias.” Grouping the studies according to age of participants (middle) and study design (bottom) creates a different impression.

Fig 2.

Effect of grouping of data on impression of funnel plot for meta-analysis of inhaled disodium cromoglicate that found significant heterogeneity24
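The effect of grouping can be mimicked with invented numbers (these are not the cromoglicate data). Within each hypothetical subgroup the effect (a log ratio, negative meaning benefit) shows no trend with standard error, yet pooling the subgroups produces a strong negative rank correlation between effect and SE, the kind of association that rank correlation tests flag as asymmetry.

```python
from itertools import combinations


def kendall_s(x, y):
    """Concordant minus discordant pairs (tied pairs contribute 0)."""
    s = 0
    for (xi, yi), (xj, yj) in combinations(zip(x, y), 2):
        prod = (xi - xj) * (yi - yj)
        s += (prod > 0) - (prod < 0)
    return s


# Hypothetical (effect, SE) pairs in two clinically distinct subgroups
group_a = [(-0.60, 0.30), (-0.40, 0.35), (-0.40, 0.40), (-0.60, 0.45)]  # small studies
group_b = [(-0.10, 0.05), (0.10, 0.08), (0.10, 0.10), (-0.10, 0.12)]   # large studies

for label, group in (("pooled", group_a + group_b),
                     ("subgroup A", group_a), ("subgroup B", group_b)):
    effects = [e for e, _ in group]
    ses = [se for _, se in group]
    n_pairs = len(group) * (len(group) - 1) // 2
    print(f"{label}: Kendall tau = {kendall_s(ses, effects) / n_pairs:+.2f}")
```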

Finally, we have no gold standard against which to compare the results of funnel plot tests. A true standard measure of publication bias would require prospective registries of trials with detailed knowledge of which studies have been published and which remain unpublished. It would then be feasible to test whether tests of publication bias accurately capture the presence of unpublished studies and whether one variant performs better than others. Given that efforts at study registration have only recently started,25 such an evaluation is currently difficult. Although a large number of alternative tests for publication bias exist,26 none has been validated against such a standard.

Prevention of bias

In conclusion, evidence based methods, including the funnel plot, should be evidence based. If treatment decisions are made on the basis of misleading methodological tests, the costs to patients and society could be high. Decisions guided by the easy assurance of a symmetrical funnel plot may overlook serious bias. Equally, it may be misleading to discredit and abandon valid evidence simply because of an asymmetrical funnel plot. The prevention of publication bias is much more desirable than any diagnostic or corrective analysis.

Supplementary Material

Details of BMJ systematic reviews mentioning funnel plots are on bmj.com

Contributors and sources: The authors have worked for a long time on methodological research in systematic reviews and meta-analyses. The idea was generated by JL and expanded by the other authors. The manuscript was written by JPAI and JL and commented on critically by the other authors. All authors approved the final version. JL is the guarantor.

Funding: Supported in part by AHRQ grant R01 HS10254. Competing interests: None declared.

References

- 1. Light RJ, Pillemer DB. Summing up. The science of reviewing research. Cambridge, MA: Harvard University Press, 1984.

- 2. Egger M, Davey Smith G, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ 1997;315:629-34.

- 3. Alderson P, Green S, Higgins JPT, eds. Cochrane reviewers' handbook 4.2.2 [updated March 2004]. In: Cochrane Library, Issue 1. Chichester: Wiley, 2004.

- 4. Moher D, Cook DJ, Eastwood S, Olkin I, Rennie D, Stroup DF. Improving the quality of reports of meta-analyses of randomised controlled trials: the QUOROM statement. Quality of Reporting of Meta-analyses. Lancet 1999;354:1896-900.

- 5. Stroup DF, Berlin JA, Morton SC, Olkin I, Williamson GD, Rennie D, et al. Meta-analysis of observational studies in epidemiology: a proposal for reporting. Meta-analysis Of Observational Studies in Epidemiology (MOOSE) group. JAMA 2000;283:2008-12.

- 6. Lau J, Ioannidis JP, Schmid CH. Quantitative synthesis in systematic reviews. Ann Intern Med 1997;127:820-6.

- 7. Macaskill P, Walter SD, Irwig L. A comparison of methods to detect publication bias in meta-analysis. Stat Med 2001;20:641-54.

- 8. Fryback DG, Thornbury JR. The efficacy of diagnostic imaging. Med Decis Making 1991;11:88-94.

- 9. Hrobjartsson A, Gotzsche PC. Is the placebo powerless? Update of a systematic review with 52 new randomized trials comparing placebo with no treatment. J Intern Med 2004;256:91-100.

- 10. Sterne JA, Egger M. Funnel plots for detecting bias in meta-analysis: guidelines on choice of axis. J Clin Epidemiol 2001;54:1046-55.

- 11. Tang JL, Liu JL. Misleading funnel plot for detection of bias in meta-analysis. J Clin Epidemiol 2000;53:477-84.

- 12. Terrin N, Schmid CH, Lau J. In an empirical evaluation of the funnel plot, researchers could not visually identify publication bias. J Clin Epidemiol 2005;58:894-901.

- 13. Begg CB, Mazumdar M. Operating characteristics of a rank correlation test for publication bias. Biometrics 1994;50:1088-99.

- 14. Peters JL, Sutton AJ, Jones DR, Abrams KR, Rushton L. Comparison of two methods to detect publication bias in meta-analysis. JAMA 2006;295:676-80.

- 15. Higgins JPT, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ 2003;327:557-60.

- 16. Sterne JA, Gavaghan D, Egger M. Publication and related bias in meta-analysis: power of statistical tests and prevalence in the literature. J Clin Epidemiol 2000;53:1119-29.

- 17. Schmid CH, Lau J, McIntosh MW, Cappelleri JC. An empirical study of the effect of the control rate as a predictor of treatment efficacy in meta-analysis of clinical trials. Stat Med 1998;17:1923-42.

- 18. Ioannidis JP, Cappelleri JC, Lau J, Skolnik PR, Melville B, Chalmers TC, et al. Early or deferred zidovudine therapy in HIV-infected patients without an AIDS-defining illness. Ann Intern Med 1995;122:856-66.

- 19. Ioannidis J, Lau J. Evolution of treatment effects over time: empirical insight from recursive cumulative meta-analyses. Proc Natl Acad Sci USA 2001;98:831-6.

- 20. Sterne JAC, Egger M, Davey Smith G. Investigating and dealing with publication and other biases. In: Egger M, Davey Smith G, Altman DG, eds. Systematic reviews in health care: meta-analysis in context. London: BMJ Books, 2001.

- 21. Terrin N, Schmid CH, Lau J, Olkin I. Adjusting for publication bias in the presence of heterogeneity. Stat Med 2003;22:2113-26.

- 22. Schmid CH, Stark PC, Berlin JA, Landais P, Lau J. Meta-regression detected associations between heterogeneous treatment effects and study-level, but not patient-level, factors. J Clin Epidemiol 2004;57:683-97.

- 23. Schwarzer G, Antes G, Schumacher M. Inflation of type I error rate in two statistical tests for the detection of publication bias in meta-analyses with binary outcomes. Stat Med 2002;21:2465-77.

- 24. Tasche MJ, Uijen JH, Bernsen RM, van der Wouden JC. Inhaled disodium cromoglycate as maintenance therapy in children with asthma: a systematic review. Thorax 2000;55:913-20.

- 25. DeAngelis CD, Drazen JM, Frizelle FA, Haug C, Hoey J, Horton R, et al. Clinical trial registration: a statement from the International Committee of Medical Journal Editors. JAMA 2004;292:1363-4.

- 26. Rothstein HR, Sutton AJ, Borenstein M, eds. Publication bias in meta-analysis. Prevention, assessment and adjustments. Sussex: John Wiley and Sons, 2005.
