Abstract

Objective:

Severely limited organ resources mandate maximum utilization of donor allografts for orthotopic liver transplantation (OLT). This work aimed to identify factors that impact survival outcomes for extended criteria donors (ECD) and to develop an ECD scoring system to facilitate graft-recipient matching and optimize utilization of ECDs.

Methods:

Retrospective analysis of over 1000 primary adult OLTs at UCLA. Extended criteria (EC) considered included donor age (>55 years), donor hospital stay (>5 days), cold ischemia time (>10 hours), and warm ischemia time (>40 minutes). One point was assigned for each extended criterion. A Cox proportional hazards regression model was used for multivariate analysis.

Results:

Of 1153 allografts considered in the study, 568 organs exhibited no extended criteria (score of 0), while 429, 135, and 21 donor allografts had an EC score of 1, 2, and 3, respectively. Overall 1-year patient survival rates were 88%, 82%, 77%, and 48% for recipients with EC scores of 0, 1, 2, and 3, respectively (P < 0.001). Adjusting for recipient age and urgency at the time of transplantation, multivariate analysis identified ascending mortality risk ratios of 1.4 and 1.8 for EC scores of 1 and 2, respectively, compared with a score of 0 (P < 0.01). In contrast, an EC score of 3 was associated with a mortality risk ratio of 4.5 (P < 0.001). Further, advanced recipient age linearly increased the death hazard ratio, while urgent recipient status increased the risk ratio of death by 50%.

Conclusions:

Extended criteria donors can be scored using readily available parameters. Optimizing perioperative variables and matching ECD allografts to appropriately selected recipients are crucial to maintaining acceptable outcomes and represent a preferable alternative both to high waiting list mortality and to a potentially futile transplant that uses an ECD for a critically ill recipient.

We retrospectively evaluated outcomes for donor grafts in over 1000 liver transplants to develop a donor scoring system. An extended criteria donor score of 3 was associated with high risk of graft failure and recipient mortality. Such risk was doubled when extended criteria donors were utilized in urgent recipients. Our results strongly argue for donor-recipient matching.

Over the last 20 years, orthotopic liver transplantation (OLT) has become a routinely applied therapy for an expanding group of patients with end-stage liver disease. Organ availability during that same time period has increased at a much slower rate and appears to have reached a plateau at approximately 6000 liver grafts per year. This disparity has led to a large expansion in the UNOS liver transplant waiting list and a 5-fold increase in deaths while awaiting OLT.1

Multiple strategies for expansion of the donor pool are being pursued concurrently. These include the use of living donors for both pediatric and adult recipients, splitting of cadaveric livers for 2 recipients, and the use of “extended criteria donors” (ECD).2 A precise, accepted definition of what constitutes an ECD for liver transplantation remains elusive. Conceptually, a graft from such a donor carries an increased risk of early failure (ie, primary nonfunction or delayed graft function) or of inferior graft or patient survival.

Several single-center studies have attempted to define donor variables that are associated with initial poor function or subsequent graft failure and patient death post-OLT.3–6 However, these studies have exhibited wide variability and seemingly contradictory results in the reported factors that influenced graft function post-OLT. Such variability may have resulted from the type of analysis performed (univariate vs. multivariate), definitions of graft nonfunction, the examined donor parameters, and donor populations that were used by the center.3 Furthermore, operative factors such as warm and cold ischemia and the condition of the recipient may further influence the outcome,7 since transplantation of an extended criteria liver graft in a stable recipient may prove to be successful, while utilization of a similar graft in an urgent patient may be associated with graft failure and death.

A collective review of the literature revealed at least 15 donor variables that may be associated with poor graft survival and increased risk of recipient death. Such variables included donor age, sex, race, weight, gender, ABO status, cause of brain death, length of hospital stay, pulmonary insufficiency, use of pressors, cardiac arrest, blood chemistry, cold preservation time, graft steatosis, and donor hypernatremia.3 We recently analyzed outcomes in our single-center experience with 3200 liver transplants and assessed the impact of these factors.8 In that report, multivariate analysis showed that the extended donor characteristics of advanced age and prolonged hospital stay affected recipient mortality risk. Operative parameters of cold and warm ischemia times (CIT and WIT) also showed independent significance, as did the recipient characteristics of age and urgency at the time of transplantation. We now examine these extended criteria more closely to assess the cumulative degree of risk they impart to a recipient and the extent to which they can be scored and “matched” to a recipient to maintain optimum graft and patient survival outcomes.

MATERIALS AND METHODS

Patient Inclusion Criteria

A retrospective analysis of adult allografts used for adult recipients at our institution was performed. The data set used in this analysis is taken from the UCLA registry of 3200 transplants performed on 2662 patients through 2001, as reported previously.8 Beginning with these 3200 transplants, we excluded retransplants (538 cases) and donors whose age was less than or equal to 18 years (1020 cases). From the remaining pool, we excluded transplants in which the recipient age was less than or equal to 18 years (169 cases), transplants in which the recipient received a split or reduced-size graft (45 cases), and any graft lacking complete recipient, donor, and operative data (275 cases). The analysis therefore included 1153 graft-recipient pairs.
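The exclusion counts above can be checked with a short running tally. This is an illustrative sketch only. It assumes the exclusions were applied in the order listed, and the step labels are paraphrases rather than registry field names.

```python
# Exclusion steps as reported in the text, applied sequentially to the
# 3200 transplants in the UCLA registry (order assumed as listed).
START = 3200
EXCLUSIONS = [
    ("retransplants", 538),
    ("donor age <= 18 years", 1020),
    ("recipient age <= 18 years", 169),
    ("split or reduced-size graft", 45),
    ("incomplete recipient, donor, or operative data", 275),
]


def remaining_after_exclusions(start, exclusions):
    """Return (reason, excluded, remaining) for each step of the tally."""
    remaining = start
    steps = []
    for reason, n in exclusions:
        remaining -= n
        steps.append((reason, n, remaining))
    return steps


if __name__ == "__main__":
    for reason, n, left in remaining_after_exclusions(START, EXCLUSIONS):
        print(f"excluded {n:>4} ({reason}); {left} remain")
```

The final count is 1153, which matches the analyzed cohort. The count left after removing retransplants (2662) equals the registry's patient count, as expected when each patient contributes one primary transplant.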

For the purpose of this study, OLT candidates were classified as urgent or nonurgent recipients according to their medical condition prior to transplantation, as defined by the United Network for Organ Sharing (UNOS) categories. Urgent patients included recipients requiring support in the intensive care unit prior to OLT or those designated urgent by UNOS criteria. The current Model for End-Stage Liver Disease (MELD) scoring system had not yet been implemented during the period of this study.

Calculation of Donor Score

Previous analysis8 has shown that advanced donor age and prolonged donor hospital stay negatively affect recipient survival. The perioperative variables of cold and warm ischemia time have also been shown to increase relative mortality risk in our multivariate analyses.7,8 Finally, recipient age and urgency status at the time of transplant are statistically significant predictors of poor outcome after liver transplant. We therefore calculated an extended criteria “Donor Score” (DS) by assigning one point each for donor age greater than 55 years, donor hospital stay greater than 5 days, CIT greater than 10 hours, and WIT greater than 40 minutes. The DS can therefore range from 0 to 4. However, only 1 patient in this data set had a score of 4, and that patient was grouped with those scoring 3, so the analyzed range is 0 to 3.
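The scoring rule can be written as a small function, shown here as a minimal sketch. The `DonorGraft` record and its field names are hypothetical containers for the four parameters. The thresholds are strict inequalities, as in the text, so a 55-year-old donor or a WIT of exactly 40 minutes earns no point.

```python
from dataclasses import dataclass


@dataclass
class DonorGraft:
    """Hypothetical record holding the four scored parameters."""
    donor_age_years: float
    donor_hospital_stay_days: float
    cold_ischemia_hours: float
    warm_ischemia_minutes: float


def donor_score(graft: DonorGraft) -> int:
    """One point per extended criterion; every threshold is strict (>)."""
    criteria = (
        graft.donor_age_years > 55,
        graft.donor_hospital_stay_days > 5,
        graft.cold_ischemia_hours > 10,
        graft.warm_ischemia_minutes > 40,
    )
    return sum(criteria)  # each True counts as 1, giving a score of 0-4


def analysis_group(score: int) -> int:
    """Pool a score of 4 with 3, as was done for the single such graft."""
    return min(score, 3)
```

For example, a 62-year-old donor with a 7-day stay, 8.5 hours of CIT, and 35 minutes of WIT scores 2. A graft exceeding all four thresholds scores 4 and falls into the pooled group 3.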

Statistical Analysis of Graft and Patient Survival

Kaplan-Meier methods were used to estimate patient and graft survival as a function of time. The log-rank test was used for univariate comparisons of Kaplan-Meier curves, one factor at a time, eg, comparing patient survival when the donor was ≤55 years old versus >55 years old. Univariate empirical hazard ratios were computed and reported. Here the hazard is the number of events (deaths for patient survival; graft failures plus deaths for graft survival) divided by the number of person-months of follow-up. The Cox proportional hazards model was used to evaluate the impact of the donor score (0, 1, 2, 3) on patient mortality and graft failure, controlling for urgent UNOS status (yes or no) and recipient age. The median follow-up was 68 months.
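The two univariate quantities described above can be sketched in a few lines. The first is the crude hazard (events per person-month) and its ratio between two groups. The second is the Kaplan-Meier product-limit estimate. This is a generic textbook implementation, not the authors' software. The toy inputs in the checks below are made up for illustration and are not study data.

```python
from typing import List, Sequence, Tuple


def empirical_hazard(events: int, person_months: float) -> float:
    """Crude hazard: observed events per person-month of follow-up."""
    if person_months <= 0:
        raise ValueError("person_months must be positive")
    return events / person_months


def empirical_hazard_ratio(events_a: int, pm_a: float,
                           events_b: int, pm_b: float) -> float:
    """Ratio of crude hazards, group A relative to group B."""
    return empirical_hazard(events_a, pm_a) / empirical_hazard(events_b, pm_b)


def kaplan_meier(times: Sequence[float],
                 observed: Sequence[bool]) -> List[Tuple[float, float]]:
    """Product-limit estimate S(t) at each distinct event time.

    times[i] is subject i's follow-up time; observed[i] is True if the
    event (death, or graft failure) occurred then, False if censored.
    Subjects censored at t stay in the risk set for events at t.
    """
    data = sorted(zip(times, observed))
    at_risk = len(data)
    survival = 1.0
    curve: List[Tuple[float, float]] = []
    i = 0
    while i < len(data):
        t = data[i][0]
        events_at_t = 0
        leaving_at_t = 0
        # Group every subject whose follow-up ends at time t.
        while i < len(data) and data[i][0] == t:
            events_at_t += int(data[i][1])
            leaving_at_t += 1
            i += 1
        if events_at_t:
            survival *= 1.0 - events_at_t / at_risk
            curve.append((t, survival))
        at_risk -= leaving_at_t
    return curve
```

In practice a statistics package would be used, and it would also supply the log-rank test and the Cox model. The sketch only makes the definitions concrete.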

RESULTS

Predictors of Graft Failure and Recipient Survival

Over the 18-year period of this study, 2662 patients underwent 3200 OLTs, with a median follow-up of 6.7 years. Complete donor and recipient data sets were available for analysis on 1153 adult donor-recipient pairs who received whole-organ deceased donor grafts.

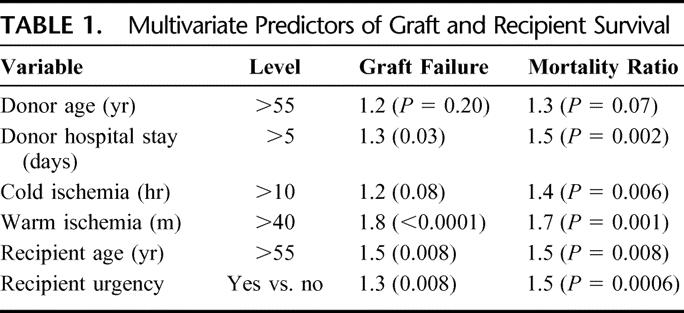

We performed univariate analysis on this group of 1153 pairs, focusing on donor and recipient factors previously shown in our entire cohort to predict posttransplant outcome.7,8 Donor hypernatremia (serum Na > 155 mEq/L) was not associated with worse outcome after OLT, consistent with our previously reported findings. Next, multivariate analysis using a Cox proportional hazards model was undertaken with those factors found significant in univariate analysis (Table 1). Recipient survival after OLT was adversely affected by the donor factors of age over 55 years (hazard ratio [HR] = 1.3, P = 0.07) and hospital stay over 5 days (HR = 1.5, P = 0.002). CIT over 10 hours adversely affected patient survival (HR = 1.4, P = 0.006), as did WIT over 40 minutes (HR = 1.7, P = 0.001). Recipient age over 55 years was significant (HR = 1.5, P = 0.008), as was urgent recipient status (HR = 1.5, P = 0.008). Similarly, on multivariate analysis, graft failure was predicted by prolonged donor hospital stay (HR = 1.3, P = 0.03), prolonged CIT (HR = 1.2, P = 0.08), prolonged WIT (HR = 1.8, P = 0.0001), recipient age over 55 years (HR = 1.5, P = 0.008), and urgent recipient status (HR = 1.3, P = 0.008).

TABLE 1. Multivariate Predictors of Graft and Recipient Survival

Calculation of Extended Criteria Donor Score

Based on the results of the multivariate analysis of ECD characteristics and perioperative variables as predictors of recipient outcome, we next examined the cumulative effect of multiple unfavorable prognostic indicators. We established an ECD score in which each unfavorable factor (donor age over 55 years, hospital stay greater than 5 days, CIT greater than 10 hours, and WIT greater than 40 minutes) was assigned 1 point. Points were totaled to a possible score of 0, 1, 2, 3, or 4 (Table 2). Of the 1153 donors in this study set, 568 had an ECD score of 0, 429 had a score of 1, 135 had a score of 2, and 21 had a score of 3. The last group includes the single donor with a score of 4, which was pooled with it.

TABLE 2. Calculation of Extended Criteria Donor Score

Graft and Patient Survival After Transplant as a Function of Donor Score

We next assessed the impact of an increasing ECD score on 1-year graft and patient survival, controlling for recipient age and urgency status in the Cox model. As the donor score increased from 0 to 1, 2, and 3, the hazard ratio for graft failure rose progressively to relative risks of 1.2, 1.6, and 3.2, respectively (Table 3). These values were all statistically significant, and the greatest increase in risk of graft loss occurred in the transition from a DS of 2 to 3. Similarly, patient mortality risk increased as the DS increased: donor scores of 1, 2, and 3 had relative risks of 1.4, 1.8, and 4.5, respectively. All were statistically significant, with a large increase in accrued risk as the DS went from 2 to 3.
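One way to make the "largest jump" explicit is to divide each hazard ratio by the one for the score just below it. The sketch below does this with the point estimates quoted in the text. It is illustrative only: ratios of point estimates carry no confidence intervals and are not a formal test of the step.

```python
# Adjusted hazard ratios by donor score (DS) as quoted in the text;
# DS 0 is the reference category.
GRAFT_FAILURE_HR = {0: 1.0, 1: 1.2, 2: 1.6, 3: 3.2}
MORTALITY_HR = {0: 1.0, 1: 1.4, 2: 1.8, 3: 4.5}


def stepwise_increase(hr_by_score: dict) -> dict:
    """Hazard ratio at each score relative to the score one point lower."""
    scores = sorted(hr_by_score)
    return {s: hr_by_score[s] / hr_by_score[prev]
            for prev, s in zip(scores, scores[1:])}


if __name__ == "__main__":
    for label, table in (("graft failure", GRAFT_FAILURE_HR),
                         ("mortality", MORTALITY_HR)):
        steps = stepwise_increase(table)
        print(label, {s: round(r, 2) for s, r in steps.items()})
```

The earlier steps multiply the hazard by roughly 1.2 to 1.4. Moving from a DS of 2 to 3 doubles the graft failure hazard (3.2 / 1.6) and multiplies the mortality hazard by 2.5 (4.5 / 1.8).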

TABLE 3. One-Year Graft and Patient Survival as a Function of Donor Score

Graft and Recipient Matching

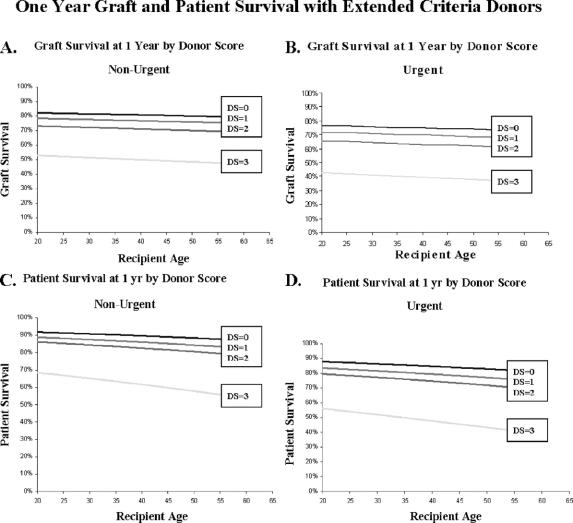

We next examined the success of our attempts to optimize results with extended criteria donors by matching them with appropriate recipients. We used the above Cox model based on our previously calculated DS and examined outcomes in terms of graft failure or patient mortality as a function of age and urgency status at time of transplantation. These results are shown in Figure 1. Panels A and B examine nonurgent and urgent status patients for graft survival at 1 year and show a decrement with each successive increase in DS. The differences between DS of 0, 1, and 2 are small but statistically significant, whereas the difference between a DS of 2 and 3 is larger, with 1-year graft survival rates for a recipient of age 40 years falling from 71% to 50%. These large differences are seen for each age group, and increasing recipient age has a negative effect upon 1-year graft survival.

FIGURE 1. One-year graft and patient survival with extended criteria donors. A and B, Graft survival at 1 year as a function of recipient age for nonurgent and urgent recipients, respectively. Outcomes for recipients of grafts with Extended Criteria Donor Scores (DS) of 0, 1, 2, and 3 are shown. C and D, One-year patient survival versus recipient age for nonurgent and urgent recipients, respectively, for allografts of varying donor scores.

Increasing DS has a similar negative effect on patient mortality (Fig. 1C, D). Again, decreases in survival with increasing DS of 0, 1, and 2 are significant but small in degree, whereas a DS of 3 has a more profound impact on 1-year survival in both nonurgent and urgent status recipients. A 40-year-old nonurgent recipient of an allograft with a DS of 0 had a 1-year survival of 90%, compared with a 62% 1-year survival after receiving an allograft with a DS of 3. A 60-year-old urgent recipient receiving an allograft with a DS of 3 had a 1-year survival of 39%.
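Under a proportional hazards model, a survival curve shifts with covariates according to S(t) = S_ref(t) ** HR. Here S_ref is survival for a reference profile and HR is the hazard ratio relative to that profile. The sketch below applies this relation to the values quoted above. It assumes that recipient age and urgency are held fixed, so that only the donor score changes the hazard, and that the adjusted mortality hazard ratios from the text apply. This is an illustrative consistency check, not necessarily how the published curves were generated.

```python
def cox_adjusted_survival(reference_survival: float, hazard_ratio: float) -> float:
    """Survival for a profile whose hazard is `hazard_ratio` times the
    reference profile's, under proportional hazards: S = S_ref ** HR."""
    if not 0.0 < reference_survival <= 1.0:
        raise ValueError("reference_survival must be in (0, 1]")
    if hazard_ratio <= 0.0:
        raise ValueError("hazard_ratio must be positive")
    return reference_survival ** hazard_ratio


# Adjusted mortality hazard ratios by donor score, as quoted in the text.
MORTALITY_HR_BY_DS = {0: 1.0, 1: 1.4, 2: 1.8, 3: 4.5}

if __name__ == "__main__":
    # Reference profile: 40-year-old nonurgent recipient, DS 0, 90% at 1 year.
    s_ref = 0.90
    for ds, hr in MORTALITY_HR_BY_DS.items():
        s = cox_adjusted_survival(s_ref, hr)
        print(f"DS {ds}: predicted 1-year survival {s:.2f}")
```

For a DS of 3 this gives 0.90 ** 4.5, or about 0.62, which agrees with the 62% reported for the same recipient profile. The 39% figure for a 60-year-old urgent recipient cannot be reproduced this way, because the age and urgency coefficients of the underlying model are not reported here.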

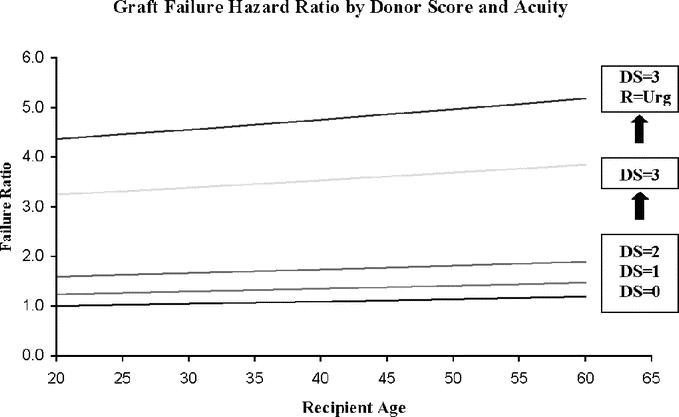

The relative risk of graft failure increases with donor score, recipient age, and acuity, as shown in Figure 2. For nonurgent recipients, the hazard ratio increases only slightly as the DS increases to 2, then rises more abruptly at a DS of 3. For a 40-year-old nonurgent recipient who received an allograft with a DS of 0, this value is 1.1, but it increases to 3.5 with an allograft assigned a DS of 3. A final curve shows another abrupt elevation for urgent recipients who receive an allograft with a DS of 3, although urgent status and increasing recipient age elevate the graft failure hazard ratio at every DS value.
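In a Cox model, covariate effects combine multiplicatively, because exp(b1*x1 + b2*x2) = exp(b1*x1) * exp(b2*x2). The sketch below applies this to the figures quoted above. It assumes the Figure 2 curves come from the same model that yields the reported graft failure hazard ratio of 3.2 for a DS of 3 versus 0. The variable names are illustrative.

```python
from math import prod

# Adjusted graft failure hazard ratios by donor score, as quoted in the text.
GRAFT_FAILURE_HR_BY_DS = {0: 1.0, 1: 1.2, 2: 1.6, 3: 3.2}


def combined_hazard_ratio(*ratios: float) -> float:
    """Under proportional hazards, independent covariate effects multiply."""
    return prod(ratios)


# Value read from the figure for a 40-year-old nonurgent recipient at DS 0.
hr_40y_nonurgent_ds0 = 1.1
# Same recipient profile, graft with DS 3: multiply by the DS 3 effect.
hr_40y_nonurgent_ds3 = combined_hazard_ratio(hr_40y_nonurgent_ds0,
                                             GRAFT_FAILURE_HR_BY_DS[3])
```

The product 1.1 × 3.2 = 3.52 is consistent with the value of 3.5 read from the figure. Under this model, the age and urgency terms shift every DS curve by the same multiplicative factor.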

FIGURE 2. Graft failure risk based on donor score and recipient acuity. The risk of graft loss at 1 year as a function of recipient age is shown for allografts with Donor Scores (DS) of 0, 1, 2, and 3. The lower curves represent nonurgent recipients. The uppermost curve represents the graft failure hazard ratio in urgent recipients who are transplanted with allografts with a DS of 3.

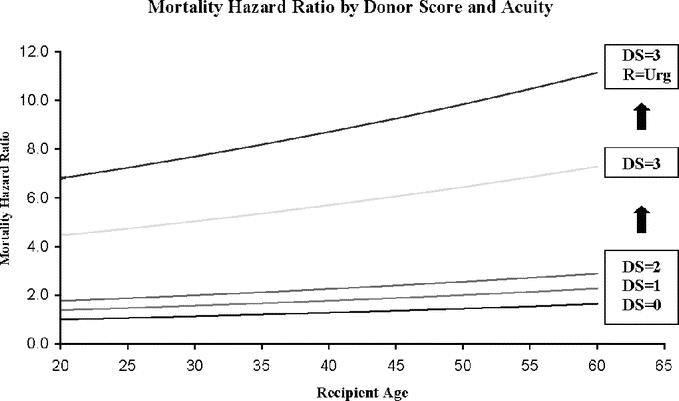

Patient mortality risk is shown in Figure 3. Hazard ratios increase with the DS, and for nonurgent patients the largest jump is between DSs of 2 and 3. An additional curve demonstrates another large increase in mortality for urgent recipients who receive a graft with a DS of 3. Relative mortality is increased by urgent status and older recipient age at each DS value.

FIGURE 3. Mortality risk based on Donor Score and recipient acuity. Overall recipient mortality at 1 year after transplant is shown versus recipient age for varying Donor Scores (DS). The lower curves represent recipients with a nonurgent status at the time of transplant. The uppermost curve shows the mortality risk when an urgent recipient receives an allograft with a Donor Score of 3.

DISCUSSION

Advances in perioperative management, surgical technique, and immunosuppression have significantly improved results in liver transplantation and expanded applications of the procedure over the past 20 years. Efforts to expand the donor pool to match this growth have led many centers to liberalize their criteria for cadaveric donor eligibility with respect to donor age, length of hospital stay, and other potentially unfavorable characteristics. Efforts to quantify the effects of such extended donors on outcome have suffered from a lack of accepted criteria for ECDs and have produced conflicting results and conclusions.9 When UNOS adopted the MELD formula in February 2002, waiting time was removed from the organ allocation system, and liver grafts were subsequently directed toward patients with quantifiably greater decompensation. As these 2 trends intersect, the risk of using ECD grafts in sicker recipients seems obvious but has awaited specific demonstration.

Rigorous definition of the extended criteria liver donor has remained elusive. Multiple donor characteristics have been examined independently, and some studies have shown resultant higher rates of subsequent graft dysfunction and patient death.10–13 Scoring systems or formulae for ECDs have been developed but not widely adopted, as they have proven either cumbersome or not widely applicable.14,15 Moreover, other studies have reported acceptable outcomes using such “high-risk” organs.16,17 The inconsistent experience with ECD grafts has delayed clear quantification of the graft-related risk factors that predict poor graft performance after transplantation. This inconsistency between graft characteristics and posttransplant graft function may have resulted from attempts to isolate a single criterion that affects graft function. In reality, a complex interplay of donor, operative, and recipient factors collectively determines the fate of the transplant. For example, although donor hypernatremia has been identified as a prognostic indicator for graft survival after transplantation,13 the current study did not identify hypernatremia as an independent risk factor when other factors were considered simultaneously. These results are consistent with recent experience in which hypernatremia has been adequately managed in donors.12

Donor liver steatosis has been associated with increased rates of primary nonfunction and initial poor function.11 While most authors agree that grafts with severe steatosis impose a significant risk of graft failure and probably should be avoided,3 grafts with moderate steatosis (>30% and <60%) may impose a relative risk on posttransplant survival.11 Surgical precision and expertise during the procurement of such organs are essential for successful outcomes. At UCLA, we have routinely avoided the utilization of moderately steatotic allografts in urgent recipients. Furthermore, we have not performed systematic biopsies of donor livers to assess fat content, since previous experience from our center has shown that physical inspection at the time of procurement was equivalent to biopsy in assessing the fat content as a determinant for graft failure. Thus, expert donor selection is critical for successful outcomes.

As unfavorable characteristics accumulate in a donor, other nondonor factors become increasingly important. In the present analysis of 1153 primary adult liver transplants, we show that advanced donor age and extended donor hospitalization prior to procurement were predictors of overall worse graft and patient survival. To view such characteristics in a vacuum may be an oversimplification. Perioperative factors certainly contributed to outcomes with ECDs, and our analysis revealed extended CITs and WITs to be associated with worse graft and patient survival. To assess the dynamic interplay of these factors in the ECD scenario, we generated an ECD score, which assesses the accumulation of unfavorable parameters and their interplay with recipient acuity. The calculated DS predicted graft failure and patient mortality at 1 year, with an abrupt worsening of outcomes with scores over 2.

Rather than viewing ECD grafts as simply “usable” or “unusable,” they may be more appropriately viewed as existing on a spectrum that can be scored and matched to an appropriate recipient for optimal utilization in a time of scarcity. Graft-recipient matching focuses on monitoring and scoring accumulating unfavorable donor characteristics and minimizing other factors that the transplant surgeon can control, such as CITs and WITs and recipient selection. Our analysis attempts to examine the interplay of these donor, operative, and recipient factors. As extended donor criteria accumulate, minimal ischemia times yield acceptable outcomes with appropriately selected recipients. We report here that prolonged CIT and WIT in these scenarios as well as use of grafts with higher donor scores in older or more urgent recipients give predictably lower 1-year graft and patient survivals. These elevated hazard ratios for graft and patient loss with ECDs used in higher acuity patients are consistent with our institutional experience, and it has been our practice to match such grafts to younger, less acute patients (typically nonhospitalized patients with compensated cirrhosis and/or hepatocellular cancer). Herein we report acceptable outcomes if ECD grafts are matched to such recipients.

With ECD grafts, perioperative CIT and WIT can be minimized to mitigate unfavorable donor characteristics. Furthermore, such grafts can be matched to appropriate recipients. Younger recipient age and nonurgent recipient status minimize the risks of primary graft nonfunction. Marginal donor quantification, as previously attempted by isolated preprocurement laboratory values, is probably a less helpful approach. More productive is the concept of calculated risk in graft-recipient matching, in which an integrated picture of DS and recipient acuity must be considered to maintain acceptable outcomes. Unfortunately, the current MELD allocation system distributes all grafts, including ECDs, to patients based solely on recipient MELD scores. This blind organ distribution that disregards the quality of the offered organ as well as operative variables often forces placement of ECD in urgent recipients. Such MELD policy, which excludes the surgeon from donor-recipient matching, predisposes to futile placement of ECD grafts in marginal recipients, producing poor results with suboptimal utilization of a scarce donor resource. We therefore strongly urge policy-makers to consider a policy revision to distribute ECD allografts to better-matched recipients rather than patients with the highest MELD.

CONCLUSION

We have identified extended criteria donor factors that predict poor outcome after OLT; these include donor age over 55 years and length of donor hospitalization over 5 days. Perioperative factors that compound the deleterious effects of ECDs include CIT > 10 hours and WIT > 40 minutes. In an effort to maximize successful use of ECDs, we have developed a strategy of calculated risk in which each of these factors was assigned a point, generating an ECD score from 0 to 4. In over 1000 transplants, the rates of graft failure and patient mortality after OLT were low with ECD scores of 2 or less but increased considerably in recipients whose graft had an extended criteria score of 3. This difference was further increased when recipients with higher acuity received grafts with a donor score of 3. To optimize effective utilization of our limited donor resources, ECD grafts must be considered as part of an appropriately matched graft-recipient pair rather than as isolated entities.

Discussions

Dr. Paul C. Kuo (Durham, North Carolina): Dr. Busuttil and his group have one of the largest experiences in the country, if not the world, of liver transplantation. This paper is really quite seminal in that it objectifies the use of these extended criteria donors. Many times at 2:00 in the morning when we are getting these calls, we must guess as to what should be used and should not be used. The beauty of this paper is that it objectifies with a scoring system those grafts that could and could not be used. And certainly, again, as I mentioned earlier, in this era of ever-increasing levels of government oversight about graft acceptance rates, this will certainly be of great value.

I have a number of questions. First, with the advent of the MELD, have you applied this scoring system in the MELD era to better differentiate between recipients? The scoring system in this retrospective review leaves out the informal screening that sometimes occurs before you send out a procurement team, and I was wondering if you could talk a little bit about the informal screen that you utilize at UCLA or in your region before you send out your procurement team. I was wondering if you have any financial or length of stay data with regard to ECD donors. Finally, any plans to move ahead with further validation in a prospective multicenter trial?

Dr. Ronald W. Busuttil (Los Angeles, California): In regard to if we have correlated our data with a current MELD score, this is a data set that ended in 2001, and MELD actually didn't go into effect until 2002. However, we have gone back and looked at these cases and tried to apply the MELD scoring system to them. And I can tell you from a preliminary observation that the patients who have a MELD score of 30 or greater, which is roughly equivalent to the old UNOS system of a UNOS 2-A, there is a clear-cut difference in recipient survival versus those that have a MELD score less than 30.

Your second question talks about informal screening. I am not trying to be facetious, but our informal screening when we get a call about a donor organ at UCLA is to find out the address of the donor hospital. We basically go out and inspect virtually all donor livers because we feel that inspecting the organ is much more fruitful in determining whether it is good, fair, or bad. But I can tell you that the BMI of the donor really doesn't make a difference, because we have used many organs from donors with BMIs of >35 that you would think would be totally steatotic, and they are actually not and function very well. So we really believe that an experienced surgeon is paramount and trumps biopsy. In fact, we rarely even biopsy organs to look for fat content since, in our experience, visual inspection is as good as a biopsy.

You asked something about financial issues. Well, there is no question that those patients that have higher graft loss with higher patient morbidity and mortality are going to be in the hospital longer and will require greater resources. To avoid this undesirable scenario, a low-risk donor is best matched with either a moderate or high-risk recipient as opposed to the converse.

Regarding expansion of this study, I acknowledge that these data are derived from a single-center study and, although it is a large data set, it needs to be verified by other centers. And I do truly believe that a multicenter trial is essential. If a multicenter trial confirms these data, perhaps we can get a modification of the MELD system to more appropriately match donors to recipients.

Dr. Ravi S. Chari (Nashville, Tennessee): Dr. Busuttil and colleagues identify and address a critical shortcoming of our organ allocation scheme. Currently, recipient severity of illness is the sole factor driving organ allocation. There is no provision under the current system for a surgeon to exercise his or her discretion so that recipient factors can be included. The authors correctly conclude that optimized allocation would consider not only organ donor features but also the environment the organ is going into, namely, the recipient, and, as such, a new paradigm must be established to maximize our use of all available organs. With that, I would like the authors to comment on three areas within their paper.

The first is a philosophical question. I would like to challenge their use of the words “extended criteria donor.” They have identified features that are associated with poorer outcomes for some organs when all donors over the 18-year period of their study were included. However, it is also clear from their analysis that these same extended criteria donors would work better when placed in the appropriate recipient. As such, I infer that in the proposed optimized system there would no longer be extended criteria per se but optimized allocation. Thus, as a point of clarification, I would ask them to comment on whether their proposed system of maximizing organ use would apply only to the so-called extended criteria donors or to all donors.

Number 2. From their analysis, they made an a priori decision of features that were commensurate with extended criteria status. They selected these features rather than perform a statistical analysis where the features were determined from this analysis. As such, in contradistinction to a predictive index scoring analysis such as that used to derive our current MELD system used for organ allocation, there was no weighting of the respective factors. Rather, each was assigned equal weighting with a score of 1. Do the authors believe that further analysis should be performed to weight their factors or do they feel comfortable with the current one, and do they feel that a system that looks at scoring that was based on information available at the time of the offer rather than perioperative features such as cold ischemic time could be derived to assist in prospective donor allocation?

Number 3. In their analysis of donor and recipient factor interaction, there seems to be a disparity. For instance, when they scored organs and looked at graft and patient outcome based on the points, they were able to have statistical separation of their quartiles, validating the discriminating capacity of their scoring system. However, when they extended this analysis to include recipient factors, there was not that same degree of quartile separation and only those that had 3 donor points were statistically separated. As such, it would appear that this system did not discriminate beyond the donor features when recipient factors were included. What would they propose to be an appropriate system or mechanism to more clearly incorporate recipient factors in such a way that they could have more separation to validate the donor-recipient optimized scoring system?

Dr. Ronald W. Busuttil (Los Angeles, California): First of all, I think that this is not going to apply to all donors or to all recipients. If the MELD system is changed, the schema I have presented should apply to high-risk donors and to high-risk recipients.

I am hoping that we would be able to direct those high-risk donors to better-risk recipients rather than to the ICU-bound patient. And what I would hope to achieve is that, when an organ is offered to the center, the decision on whom the organ goes to is made by the transplant team rather than by the number on the list.

Your second question regarded the weight of each of the variables. If you look at the previous slides, there was not great statistical variation between any of the five variables. The ones that had the highest power were recipient urgency and warm ischemia time. So I would submit to you again that we need to direct those donors into patients based on recipient urgency.

We also have to look very carefully at who you are going to let do the transplant because if it is going to take you an hour or so to sew in the liver, it is not going to work very well.

The final question was very similar to what was stated before. Yes, I think this needs to be applied to more centers because that is the only way we are going to get the MELD system changed.

Dr. William C. Chapman (St. Louis, Missouri): Just 3 days ago, the International Liver Transplant Society held a Consensus Conference in Philadelphia on this very topic of extended criteria donors and the development of optimal strategies to maximize donor use and transplant outcomes. So this is clearly something we are going to be hearing a lot more about in the near future.

I have only a couple of questions that haven't already been asked, and they are a little bit variations on what has been stated.

First, do you plan in the near future to apply this analysis to an independent data set, for example the UNOS data set or the SRTR? I think these could be accessed fairly easily to examine outcomes using your predictors.

The second question is: do you factor in steatosis in some objective way? It is not listed as a variable, but I am sure it is an important factor that you consider in your patients. Were you able to include it in your analysis, or could it not be incorporated objectively, and is that why we don't see it?

Dr. Ronald W. Busuttil (Los Angeles, California): Validating this with the SRTR or the UNOS database is important. My problem with these large national databases is that the data sets are incomplete; there is a great deal of missing information in many areas. I think this validation needs to be done, but I am skeptical about what those data will actually show.

Regarding steatosis, we did not include it specifically because we do not perform routine liver biopsies. Should this be done? Yes, it should be done to further validate the information I have presented. But as I mentioned before, we believe that an experienced surgeon performing the procurement can provide data as important as a biopsy.

Dr. Tomoaki Kato (Miami, Florida): We all agree that the use of extended criteria donors is a very important issue. However, some have raised concern about using these grafts in hepatitis C recipients. Do you have any data on the use of these grafts in hepatitis C recipients?

Dr. Ronald W. Busuttil (Los Angeles, California): We have indeed looked at that. We have just submitted an abstract that examines precisely the nonviral factors that predict hepatitis C recurrence. Many of these involve the same kinds of donor issues I have discussed today. A marginal donor placed into a hepatitis C recipient unequivocally results in a higher and earlier incidence of hepatitis C recurrence.

Dr. Alan W. Hemming (Gainesville, Florida): One of the largest components of your scoring system seems to be warm ischemic time. It is also the least predictable factor when you are offered an organ, since you know the warm ischemic time only after you have sewn the liver in. How do you propose to use warm ischemic time for matching, given that this information is not available when the organ is matched to the recipient?

Dr. Ronald W. Busuttil (Los Angeles, California): That is obviously a very individual matter. Less experienced centers, if you will, may have a much longer warm ischemia time than more experienced ones. That is probably the Achilles heel of this study, because it cannot be controlled, whereas essentially all of the other factors can. So I would submit that, in your center, if you are going to use a marginal donor, the most experienced surgeon should be involved in the transplant.
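Dr. Hemming's point can be made concrete with a minimal sketch, assuming the four extended criteria and thresholds given in the abstract (donor age >55 years, donor hospital stay >5 days, cold ischemia time >10 hours, warm ischemia time >40 minutes, one point each). Because warm ischemia time is known only after implantation, a score computed at the time of the offer can only be bounded. The names `DonorOffer` and `ec_score_range` are hypothetical, and treating cold ischemia time as an estimate at offer time is likewise an assumption, not part of the authors' method.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Thresholds as listed in the abstract; one point per criterion exceeded.
AGE_YEARS = 55
HOSPITAL_DAYS = 5
CIT_HOURS = 10
WIT_MINUTES = 40


@dataclass
class DonorOffer:
    """Hypothetical record of what is known when an organ is offered."""
    age_years: float
    hospital_days: float
    est_cold_ischemia_hours: float  # assumed to be an estimate at offer time
    warm_ischemia_minutes: Optional[float] = None  # unknown until implantation


def ec_score_range(offer: DonorOffer) -> Tuple[int, int]:
    """Return the (minimum, maximum) EC score consistent with what is known."""
    known = sum([
        offer.age_years > AGE_YEARS,
        offer.hospital_days > HOSPITAL_DAYS,
        offer.est_cold_ischemia_hours > CIT_HOURS,
    ])
    if offer.warm_ischemia_minutes is None:
        # The warm ischemia point may or may not be added after implantation.
        return known, known + 1
    wit_point = int(offer.warm_ischemia_minutes > WIT_MINUTES)
    return known + wit_point, known + wit_point
```

For example, a 60-year-old donor with a 7-day hospital stay and an estimated 8-hour cold ischemia time scores between 2 and 3 at offer time; the upper bound is reached only if the warm ischemia time exceeds 40 minutes, which is the component Dr. Busuttil suggests controlling through surgeon experience.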

Footnotes

Supported in part by the Dumont Foundation, Pfleger Liver Institute, and the Joanne Barr Foundation.

Reprints: Andrew M. Cameron, MD, PhD, Dumont-UCLA Transplant Center, UCLA School of Medicine, 10833 LeConte Ave., 77-132 CHS, Los Angeles, CA 90095. E-mail: acameron@mednet.ucla.edu.

REFERENCES

- 1. United Network for Organ Sharing website (www.unos.org), accessed November 2005.

- 2. Busuttil RW, Tanaka K. The utility of marginal donors in liver transplantation. Liver Transpl. 2003;9:651–663.

- 3. Strasberg SM, Howard TK, Molmenti EP, et al. Selecting donor livers: risk factors for poor function after orthotopic liver transplantation. Hepatology. 1994;20:829–838.

- 4. Mor E, Klintmalm GB, Gonwa TA, et al. The use of marginal donors for liver transplantation: a retrospective study of 365 liver donors. Transplantation. 1992;53:383–386.

- 5. Freeman R, Wiesner RH, Edwards E, et al. Results of the first year of the new liver allocation plan. Liver Transpl. 2004;10:7–15.

- 6. Onaca NN, Levy MF, Sanchez EQ, et al. A correlation between pretransplantation MELD score and mortality in the first two years after liver transplantation. Liver Transpl. 2003;9:117–123.

- 7. Ghobrial RM, Gornbein J, Steadman R, et al. Pretransplant model to predict posttransplant survival in liver transplant patients. Ann Surg. 2002;236:315–323.

- 8. Busuttil RW, Farmer DG, Yersiz H, et al. Analysis of long term outcomes of 3200 liver transplantations over two decades: a single center experience. Ann Surg. 2005;241:905–918.

- 9. Maring JK, Klompmaker IJ, Zwaveling JH, et al. Poor initial graft function after orthotopic liver transplantation: can it be predicted and does it affect outcome? An analysis of 125 adult primary transplantations. Clin Transplant. 1997;11:373–379.

- 10. Berenguer M, Prieto M, San Juan F, et al. Contribution of donor age to the recent decrease in patient survival among HCV-infected liver transplant recipients. Hepatology. 2002;36:201–210.

- 11. Verran D, Kusyk T, Painter D, et al. Clinical experience gained from the use of 120 steatotic donor livers for orthotopic liver transplantation. Liver Transpl. 2003;9:500–505.

- 12. Totsuka E, Dodson F, Urakami A, et al. Influence of high donor serum sodium levels on early postoperative graft function in human liver transplantation: effect of correction of donor hypernatremia. Liver Transpl Surg. 1999;5:421–428.

- 13. Figuras J, Busquets J, Grande L, et al. The deleterious effect of donor high plasma sodium and extended preservation in liver transplantation: a multivariate analysis. Transplantation. 1996;61:410–413.

- 14. Briceno J, Solorzano G, Pera C. A proposal for scoring marginal liver grafts. Transpl Int. 2000;13(suppl):249–252.

- 15. Tekin K, Imber CJ, Atli M, et al. A simple scoring system to evaluate the effects of cold ischemia on marginal liver donors. Transplantation. 2004:411–416.

- 16. Oh CK, Sanfey HA, Pelletier SJ, et al. Implication of advanced donor age on the outcome of liver transplantation. Clin Transplant. 2000;14:386–390.

- 17. Yersiz H, Shaked A, Olthoff K, et al. Correlation between donor age and the pattern of liver graft recovery after transplantation. Transplantation. 1995;60:790–794.