Abstract

Objective:

To determine if prior training on the LapMentor™ laparoscopic simulator leads to improved performance of basic laparoscopic skills in the animate operating room environment.

Summary Background Data:

Numerous influences have led to the development of computer-aided laparoscopic simulators: a need for greater efficiency in training, the unique and complex nature of laparoscopic surgery, and the increasing demand that surgeons demonstrate competence before proceeding to the operating room. The LapMentor™ simulator is expensive, however, and its use must be validated and justified prior to implementation into surgical training programs.

Methods:

Nineteen surgical interns were randomized to training on the LapMentor™ laparoscopic simulator (n = 10) or to a control group (no simulator training, n = 9). Subjects randomized to the LapMentor™ trained to expert criterion levels 2 consecutive times on 6 designated basic skills modules. All subjects then completed a series of laparoscopic exercises in a live porcine model, and performance was assessed independently by 2 blinded reviewers. Time, accuracy rates, and global assessments of performance were recorded with an interrater reliability between reviewers of 0.99.

Results:

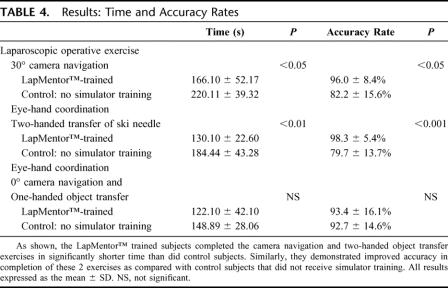

LapMentor™ trained interns completed the 30° camera navigation exercise in significantly less time than control interns (166 ± 52 vs. 220 ± 39 seconds, P < 0.05); they also achieved higher accuracy rates in identifying the required objects with the laparoscope (96% ± 8% vs. 82% ± 15%, P < 0.05). Similarly, on the two-handed object transfer exercise, task completion time for LapMentor™ trained versus control interns was 130 ± 23 versus 184 ± 43 seconds (P < 0.01) with an accuracy rate of 98% ± 5% versus 80% ± 13% (P < 0.001). Additionally, LapMentor™ trained interns outperformed control subjects with regard to camera navigation skills, efficiency of motion, optimal instrument handling, perceptual ability, and performance of safe electrocautery.

Conclusions:

This study demonstrates that prior training on the LapMentor™ laparoscopic simulator leads to improved resident performance of basic skills in the animate operating room environment. This work marks the first prospective, randomized evaluation of the LapMentor™ simulator, and provides evidence that LapMentor™ training may lead to improved operating room performance.

A randomized, double-blinded study was undertaken to determine if surgical interns undergoing prior training on the LapMentor™ laparoscopic simulator would demonstrate superior intraoperative performance as compared with a control group of their peers that did not receive simulator training. The simulator-trained interns were able to complete the animate intraoperative exercises in less time with a higher degree of accuracy, and outperformed the control group with regard to camera navigation skills, efficiency of motion, optimal use of instrumentation, and perceptual ability.

Numerous influences have led to the development of computer-aided simulation to facilitate training in minimally invasive surgery (MIS). These forces include the unique and complex nature of MIS procedures, the requirement for greater efficiency of surgical training due to resident duty hour restrictions and the stringent financial reality of the operating room environment, and most importantly, the legitimate increasing public demand to demonstrate some level of procedural competence prior to performing procedures in the human operating room. Ziv et al have suggested that simulation-based medical education is an ethical imperative, and that the use of simulation in training sends a message that patients are to be protected whenever possible and are not to be used as a convenience of training.1 Simulation-based training has long provided the framework for training in many other complex, high-risk professions (ie, aviation, nuclear power, and the military) with the goal of maximizing safety during training and minimizing risk. It is only recently, however, that simulation has been embraced in the healthcare environment as a possible means of facilitating safer surgical training.

Laparoscopic procedures require psychomotor mapping of 3-dimensional space while interacting with a 2-dimensional image. It is this fact combined with the evolution of MIS which has provided the perfect platform and opportunity for the development of computer-aided simulation. Performance of laparoscopic procedures also requires complex psychomotor skills and utilization of optics and instrumentation that are vastly different from those used in conventional open surgery. Additionally, it is far more difficult for the mentor to directly guide the hand of the student and control the course of the laparoscopic procedure, thus often leading to frustration for both learner and instructor. Increasingly, evidence suggests that a well-structured curriculum, which incorporates virtual reality training for laparoscopic surgery, improves performance in both the animate2–6 and human7–10 operating rooms. The majority of these studies, however, have evaluated only 2 of the commercially available laparoscopic simulators (MIST VR™3,6,8,10 or LapSim™2,5), and very few of the other simulators currently on the market have undergone rigorous evaluation and validation.

The LapMentor™ (Simbionix USA Corp., Cleveland, OH) is a high-fidelity, computer-aided simulator that provides a laparoscopic training curriculum comprised of basic skills, tutorial procedural tasks, and full procedures (such as laparoscopic cholecystectomy). At the completion of a task or procedure, the simulator provides immediate feedback to the trainee with measures of time, accuracy rate, efficiency of motion, and safety parameters displayed on the screen. The LapMentor™ is also equipped with a high-end technological haptic system, which transmits resistance when tissues or objects are encountered during the simulated task, a feature lacking in many other computer-aided simulators but obviously present in the operating room. While all of these features are highly attractive, the LapMentor™ costs in excess of $100,000, and effective transfer of laparoscopic skills from this simulator to the operating room has not yet been demonstrated. Therefore, the purpose of this study was to determine if training on the LapMentor™ laparoscopic simulator improves resident performance of basic MIS skills in the operating room environment as compared with residents who did not receive any prior training.

METHODS

Subjects

Twenty-one surgical interns in the University of Michigan Department of Surgery were recruited to participate in this study. All aspects of the study were approved by the University of Michigan Institutional Review Board (IRB), and all subjects gave informed consent. All subjects were in good standing within the surgical training program, denied prior experience with laparoscopic or other simulators, and reported playing computer or video games for fewer than 5 hours per week, both currently and during childhood.

The study was made available to all interns as stipulated by our IRB, and all categorical General and Plastic Surgery interns participated (n = 9), as did all undesignated preliminary (n = 3) and Urology interns (n = 3). The remaining subjects (n = 6) were designated preliminary interns in Neurosurgery, Otolaryngology, or Orthopedics. Eleven subjects were randomized to the LapMentor™ training group (LMT): 3 categorical General Surgery, 2 Plastic Surgery, 1 Urology, 2 undesignated preliminary, and 3 designated preliminary interns. One subject (an undesignated preliminary intern), however, failed to complete all required simulator exercises prior to the porcine laboratory assessment and was therefore excluded from the study (final n = 10, LMT). Likewise, one subject (a designated preliminary intern) out of 10 in the control group (CTRL) failed to complete the animate operating room assessment and was also excluded from the study (final n = 9, CTRL). The CTRL group interns were comprised of 3 categorical General Surgery, 1 Plastic Surgery, 2 Urology, 1 undesignated preliminary, and 3 designated preliminary interns.

LapMentor™ Training

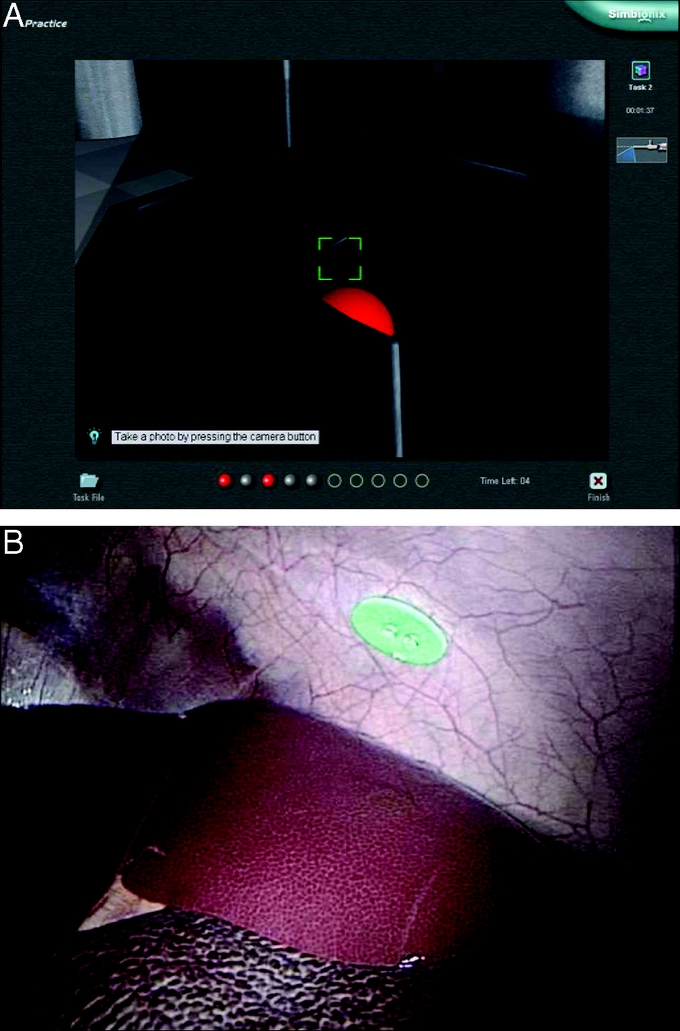

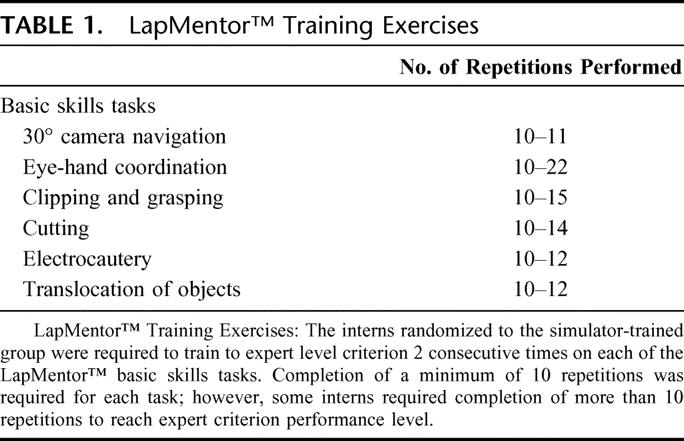

Those subjects randomized to train on the LapMentor™ (Simbionix USA Corp.) underwent a group orientation to the simulator and the required basic skills tasks: 30° camera navigation (Fig. 1A), eye-hand coordination, clipping and grasping, cutting, electrocautery, and translocation of objects. Each subject then performed a single, supervised practice repetition to orient themselves to the simulator and task. Six faculty laparoscopic surgeons performed 6 repetitions of each task, and the mean of their performance on each task was designated as the expert performance criterion level. LMT subjects were required to train to expert criterion level 2 consecutive times for each task, with a minimum of 10 repetitions/task required. The minimum was set at 10 repetitions because construct validity data for the LapMentor™ metrics had not been established at the time of this study. A prior study demonstrated that the learning curve for junior surgeons reached a plateau around 8 repetitions;11 therefore, a minimum of 10 repetitions was required to ensure that trainees received adequate training on the simulator exercises. The numbers of repetitions performed by the LMT subjects are shown in Table 1.
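The training-to-criterion rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the simulator's own logic: the function names, the example scores, and the choice of a time-based metric (lower is better) are hypothetical, and the sketch assumes that the 2-consecutive-repetition requirement and the 10-repetition minimum are checked independently.

```python
from statistics import mean


def expert_criterion(expert_scores):
    """Criterion level for one task: mean of all expert repetitions."""
    return mean(expert_scores)


def repetitions_required(scores, criterion, lower_is_better=True,
                         min_reps=10, streak_needed=2):
    """Return the 1-based repetition at which training may stop, or None.

    Assumption: training stops once the trainee has met the criterion on
    `streak_needed` consecutive repetitions at any point AND has completed
    at least `min_reps` repetitions.
    """
    streak = 0
    criterion_met = False
    for rep, score in enumerate(scores, start=1):
        ok = score <= criterion if lower_is_better else score >= criterion
        streak = streak + 1 if ok else 0
        if streak >= streak_needed:
            criterion_met = True
        if criterion_met and rep >= min_reps:
            return rep
    return None


# Hypothetical completion times in seconds (not data from this study).
criterion = expert_criterion([60, 58, 62, 64, 56, 60])
trainee_times = [120, 100, 90, 80, 70, 65, 59, 58, 61, 57]
print(repetitions_required(trainee_times, criterion))
```

For a metric where higher values are better, such as an accuracy rate, the same function can be called with `lower_is_better=False`.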

FIGURE 1. LapMentor™ 30° camera navigation exercise and corresponding animate operating room laparoscopic exercise. A, The LapMentor™ screen shot for the camera navigation exercise demonstrates the requirement for the subject to use the 30° optics of the laparoscope to identify the red balls within the containers. The trainee is required to manipulate the scope until the green square is centered on the red ball. The square will then turn red and the ball will disappear. The subject must then find the next red ball. B, The in vivo operative assessment similarly required subjects to optimally use a 30° laparoscope to identify 5 foam “dots” on the abdominal wall of the pig. This image shows the appearance of one of these “dots.”

TABLE 1. LapMentor™ Training Exercises

Animate Operating Room Assessment

LapMentor™ subject training occurred over 4 weeks within the first 2 months of internship. Upon completion of simulator training, both LMT and CTRL subjects were assessed performing a series of laparoscopic skills exercises in anesthetized male pigs weighing approximately 20 kg. Animal use for this study was approved by the University of Michigan's Animal Care and Use Committee, with adherence to the “Guiding Principles of Laboratory Animal Care” as promulgated by the American Physiological Society. The porcine model was chosen as it closely recapitulates the human operating room environment and allows utilization of the same laparoscopic instrumentation and equipment used in our hospital, thereby creating a very realistic intraoperative environment. The in vivo tasks were chosen based upon deconstruction of laparoscopic procedures, with the basic techniques of laparoscopy evaluated: camera navigation, efficient and coordinated use of laparoscopic instrumentation, perceptual ability or the ability to convert two-dimensional visual information into manipulation in three-dimensional space, and safe placement of clips and application of electrocautery.

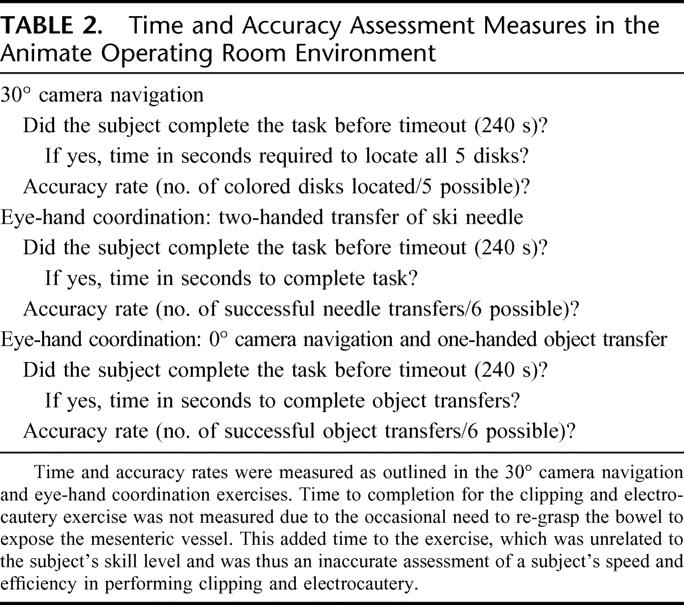

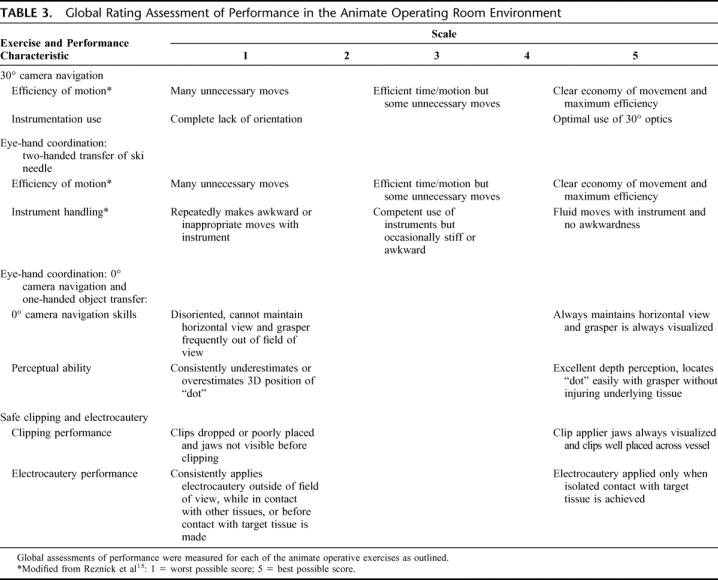

All subjects were oriented to the animate laparoscopic tasks by one of the primary investigators using scripted instructions and demonstration of optimal performance for each task. Each subject’s performance was captured on DVD. The tasks included a 30° camera navigation exercise, 2 eye-hand coordination exercises, and a clipping and electrocautery exercise. The 30° camera navigation exercise required the subject to find and focus on 5 foam “dots” measuring 1 cm in diameter, which were placed at various preselected locations within the abdomen (Fig. 1B). The placement of the “dots” required the subject to use the 30° optics of the laparoscope to successfully locate the objects within the abdomen. The first eye-hand coordination exercise focused on two-handed transfers utilizing 2 laparoscopic graspers. One of the investigators held the laparoscope for this exercise. The subject stood at the foot of the pig and was required to transfer a ski needle (USSC 3–0 Silk, United States Surgical Corporation) with a left-handed grasper from the right lobe of the liver up into the air to be grasped by the right-handed grasper, and then placed gently down on the left lobe of the liver. This procedure was then reversed and was repeated 3 times. The second eye-hand coordination exercise evaluated one-handed object transfer and zero degree camera navigation skills. The subjects were asked to handle the laparoscope first with their left hand and to transfer a 1-cm foam “dot” with a grasper in their right hand from the liver to the spleen while standing on the pig's left side. This procedure was then reversed with the subject standing on the pig's right side, and they were then asked to handle the laparoscope with their right hand while using a grasper to transfer the “dot” from the spleen back to the liver. The subjects were asked to keep the grasper in view at all times during the transfers. Lastly, a safe clipping and electrocautery exercise was performed. A segment of bowel was suspended by 2 assistants and a window was created in the mesentery on either side of a mesenteric vessel. The subject was then asked to handle the laparoscope with their left hand and to place a clip proximally and distally on the vessel and to divide the vessel between them using electrocautery. Safe clipping and electrocautery techniques were emphasized for this exercise. Tables 2 and 3 outline the outcome measures assessed for each exercise.

TABLE 2. Time and Accuracy Assessment Measures in the Animate Operating Room Environment

TABLE 3. Global Rating Assessment of Performance in the Animate Operating Room Environment

The recorded DVD performance for each subject was reviewed by 2 surgeon-investigators blinded to the training status and identity of the subject. A true laparoscopic novice (undergraduate student), junior resident (PGY3), and laparoscopic faculty member were also recorded performing the exercises. These performances were then viewed by the 2 blinded reviewers and used to set benchmarks for performance. For review of the subjects’ recorded performance, each task was then viewed separately by both reviewers for all subjects (ie, the camera navigation exercise was reviewed for all subjects followed by the first eye-hand coordination exercise for all subjects). This allowed for focused evaluation of a specific skill set for all subjects, rather than viewing the entire porcine laboratory performance for 1 subject at a time. Interrater reliability was determined for the global assessment outcome measures.

Data Analysis

Mean performance was compared between the LMT and CTRL subject groups using one-way ANOVA. The interrater reliability analysis for the global assessment scale was conducted using an alpha model in SPSS. This model calculates the mean percentage agreement between the ratings for each scale item. All data are expressed as the mean ± the standard deviation and statistical significance was set at a level of P < 0.05. SPSS v13.0 was used for all statistical analyses (SPSS, Inc., Chicago, IL).
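As an illustration of the group comparison, a one-way ANOVA F statistic can be reconstructed from published summary statistics alone. The sketch below is not the SPSS analysis performed on the raw data; it uses the rounded 30° camera navigation times and group sizes reported in the abstract, so the result is approximate. The function name is hypothetical, and the critical value is taken from standard F tables.

```python
def one_way_anova_from_summary(groups):
    """Compute the one-way ANOVA F statistic from (mean, sd, n) per group.

    Returns (F, df_between, df_within).
    """
    total_n = sum(n for _, _, n in groups)
    grand_mean = sum(m * n for m, _, n in groups) / total_n
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(n * (m - grand_mean) ** 2 for m, _, n in groups)
    # Within-group sum of squares recovered from each group's sample SD.
    ss_within = sum((n - 1) * sd ** 2 for _, sd, n in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Camera navigation time in seconds: (mean, SD, n) as reported in the abstract.
lmt = (166.0, 52.0, 10)
ctrl = (220.0, 39.0, 9)

f_stat, df_b, df_w = one_way_anova_from_summary([lmt, ctrl])
F_CRIT_05 = 4.45  # tabulated critical F(1, 17) at alpha = 0.05
print(f"F({df_b}, {df_w}) = {f_stat:.2f}; exceeds critical value: {f_stat > F_CRIT_05}")
```

With only two groups, this F statistic equals the square of the pooled-variance t statistic, so the one-way ANOVA here is equivalent to an unpaired two-sample t test.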

RESULTS

LMT subjects outperformed CTRL subjects on the camera navigation and two-handed object transfer exercises, and in the performance of safe electrocautery. As shown in Table 4, the LMT subjects completed the 30° camera navigation exercise in significantly less time than did CTRL subjects, and with a higher degree of accuracy. Similarly, the LMT subjects demonstrated greater accuracy and more expeditious completion of a series of two-handed transfers of an object between right- and left-handed graspers than did CTRL subjects (Table 4). Additionally, when one examines the accuracy rate assessments for the camera navigation and two-handed grasping eye-hand coordination exercise, there is less variability noted in the LMT subjects’ performance, with a more narrow distribution observed in their accuracy rates. This suggests greater consistency in accurate performance following simulator training for these basic skills. Significant differences in performance were not identified between LMT and CTRL subjects for the zero degree camera navigation/one-handed object transfer exercise, or in the assessment of safe clipping practices.

TABLE 4. Results: Time and Accuracy Rates

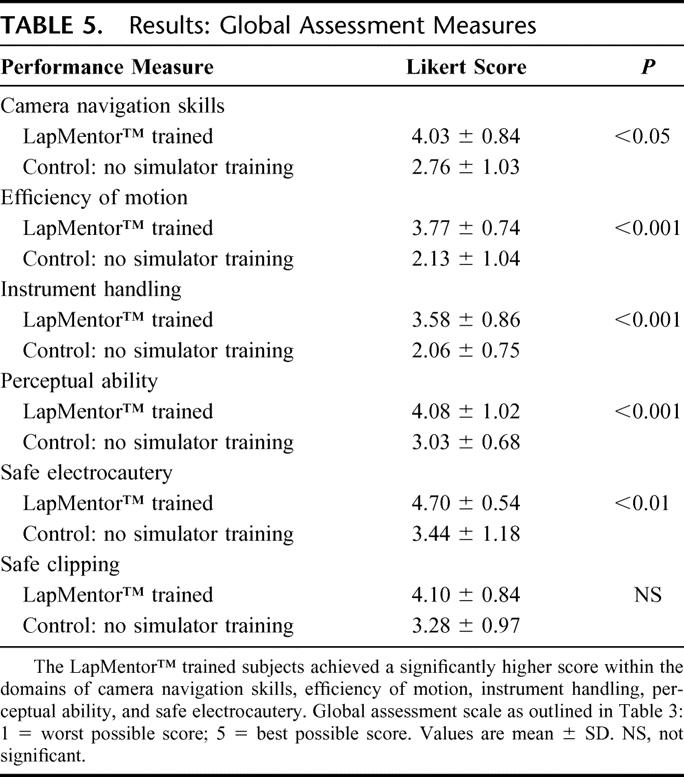

Global assessment measures of several laparoscopic surgery component skills were completed by 2 blinded surgeon-investigators with an interrater reliability between reviewers 1 and 2 of 0.99. Internal consistency for the scale was 0.94 for reviewer 1 and 0.95 for reviewer 2, indicating good internal reliability. Table 3 outlines the scale and anchors used to measure camera navigation skills (30° instrumentation use and zero degree camera navigation skills), efficiency of motion, instrument handling, perceptual ability, clipping performance, and electrocautery performance. As demonstrated in Table 5, the LMT subjects significantly outperformed CTRL subjects within the domains of camera navigation skills, efficiency of instrument motion, optimal instrument handling, perceptual ability, and the performance of safe electrocautery. Although quantification of errors was not specifically measured in this study, the improved perceptual ability and greater efficiency of instrument motion observed in the LMT group led to a decreased incidence of adjacent organ injury (liver and spleen) during the two-handed object transfer exercise, and a decreased incidence of applying electrocautery while not in contact with the target tissue during the electrocautery exercise.
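Internal consistency of a multi-item rating scale is commonly summarized with Cronbach's alpha. The following minimal sketch shows that calculation, assuming one reviewer's ratings are arranged as one row per subject and one column per scale item. The ratings are hypothetical and are not data from this study, and the function is an illustration rather than the SPSS procedure used by the authors.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a list of per-subject rows of item scores."""
    k = len(ratings[0])  # number of scale items
    # Sample variance of each item across subjects.
    item_vars = [variance([row[i] for row in ratings]) for i in range(k)]
    # Sample variance of each subject's total score.
    total_var = variance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical 1-to-5 ratings: 5 subjects (rows) by 4 scale items (columns).
hypothetical_ratings = [
    [4, 4, 3, 4],
    [2, 3, 2, 2],
    [5, 4, 5, 5],
    [3, 3, 3, 2],
    [1, 2, 1, 2],
]
print(round(cronbach_alpha(hypothetical_ratings), 2))
```

Values close to 1 indicate that the items move together across subjects, which is the sense in which the reported values of 0.94 and 0.95 indicate good internal reliability.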

TABLE 5. Results: Global Assessment Measures

DISCUSSION

Minimally invasive surgery requires a unique skills set as evidenced by the lack of correlation between open surgical experience and the performance of laparoscopic surgical skills.12 This lack of transfer of training emphasizes the need for specialized training in minimally invasive surgery and supports the concept of virtual reality training for the development of laparoscopic skills. Satava first suggested the use of virtual reality simulators for surgical training over a decade ago;13 however, it has only been in the last 5 years that simulation has been widely embraced by the surgical community as a valid and valuable training tool. This has led to a proliferation in computer-aided laparoscopic simulators for training surgeons in MIS skills. The LapMentor™ represents one of the newer laparoscopic virtual reality simulators with modules for component laparoscopic skills practice and assessment, as well as complete surgical procedures. It is this latter factor along with an emphasis on fidelity of tissue appearance and interaction that provides market discrimination. The LapMentor™ additionally provides haptic feedback to the trainee, a condition that more closely mimics the true operative environment. To date, however, the LapMentor™ has not been rigorously evaluated to determine if it is an effective training tool that translates into improved performance in the in vivo operative environment. As Youngblood et al have demonstrated, different simulators have varied training strengths, and careful evaluation is required so that these simulators can be appropriately used for both training and assessment purposes.2

Thus, the present study marks the first evaluation of the LapMentor™ laparoscopic simulator and demonstrates evidence for transfer validity from simulator training to the animate operating room environment for the following basic laparoscopic skills: camera navigation, efficient instrument motion and handling, perceptual ability, and safe electrocautery practices. This study, however, failed to demonstrate improved performance on a zero degree camera navigation exercise requiring one-handed object transfer, and in the assessment of safe clipping practices. This may be due to a lack of transfer validity for these particular skills from the simulator to the in vivo environment, or secondary to oversimplification of the operating room exercise leading to an inability to detect small differences in performance. The small size of the study population also raises the possibility of a type II error, and a larger trial may identify significant differences in performance for these tasks. Additionally, this study only evaluated the impact of simulator training on the in vivo performance of basic laparoscopic skills. The transfer of skills gained in the virtual environment was tested in the animate operating room because it allowed consistent recreation of laparoscopic test skills for all subjects. Assessing skill in the human operative environment can be substantially more challenging due to a multitude of patient and attending surgeon factors. Future studies will need to address whether LapMentor™ training leads to improved performance on more complex surgical procedures such as laparoscopic cholecystectomy or appendectomy. Indeed, the larger and as yet unanswered question about the value of simulation in procedural training is whether such educational interventions can ultimately be linked to improved patient outcomes. This is, of course, the ultimate goal of improving surgical education processes and outcomes.

The use of virtual reality for the training of MIS skills allows for the development of a “pretrained novice” so that basic psychomotor and visual-spatial laparoscopic skills have been developed and become reasonably automatic before proceeding to the operating room.7 This allows for more efficient and safer use of operating room training time, such that intraoperative training can be focused on clinical decision making and avoidance of errors rather than learning how to use and manipulate the equipment.7,8 As evidence continues to emerge in support of using virtual reality in the training of MIS skills, more and more surgical training programs will be looking to the market to buy laparoscopic simulators. While studies such as this one should help guide the educational consumer, there are several important considerations a hospital or institution must address. When considering implementation of virtual reality simulation into a curriculum, it is most important to realize that VR simulation is most effective when incorporated into a comprehensive, well-designed curriculum for the training of MIS skills with close supervision and participation of expert MIS surgeons and trainers. Increased fidelity of simulation has the potential to increase the “appeal” and degree of learner engagement; however, high-fidelity simulators like the LapMentor™ may be cost prohibitive for most training programs and “high-tech” trainers do not necessarily guarantee a superior training experience. Matsumoto et al examined the effect of bench model fidelity on endourological training and demonstrated a significant improvement in the performance of a midureteric stone extraction for subjects receiving prior training on a bench model. There was not a difference, however, in performance on the in vivo task between those subjects trained on a low-fidelity model as compared with the high-fidelity training model, although the high-fidelity training model was significantly more expensive.14 In contrast, recent work done by Youngblood et al demonstrated improved performance on live surgical tasks following training on a virtual reality part-task trainer as compared with a traditional box trainer.2 This suggests that certain skills will likely be best learned in the virtual reality environment, while others may be learned equally as well or even better in less expensive, lower-fidelity models.

It is clear that rigorous evaluation of each of the virtual reality trainers on the market is needed so these simulators can be appropriately used and optimally introduced into surgical training curriculums. In particular, if these simulators are to be used for high-stakes assessment in the future, or even physician credentialing, validation studies such as this one are critical.

Discussions

Dr. William G. Cioffi, Jr. (Providence, Rhode Island): Most of us in this room were trained during the era of “see one, do one, teach one,” and for a variety of reasons which include patient safety issues, malpractice concerns, OR and surgeon efficiency, and perhaps most importantly, I hope, the realization that this may not be the best educational technique have abandoned this. This, however, leaves us with a conundrum of how best to teach technical skills to our residents. This issue is further compounded by the explosion of minimally invasive surgical techniques being applied to ever more complex operations. It is further hampered by work-hour restrictions which limit resident availability for educational endeavors. Thus, papers like this are quite important.

The authors have shown that prior training on a high-fidelity laparoscopic trainer led to marked improvement in basic skill acquisition. The strengths of the article include the randomized nature, the validity constructs used, the fact that both time and accuracy were studied and measured, and that there was follow-up testing in a porcine model. However, there are some weaknesses.

First is the cost, both in terms of dollars, the trainer that they studied cost $100,000, and then there is the issue of both faculty and resident time. How much time did each resident spend? How did you work this into the 80-hour week? Box trainers are much less expensive. Madden and colleagues have suggested equivalency between boxes and more expensive models. Why did you not have a box control?

What kind of training did your controls get? Rege and colleagues have suggested that both groups should get some baseline testing so that there is equal familiarity at least with the instrument prior to the educational training. There were 21 interns randomized; 19 completed the study. How many were categorical general surgical interns versus other subspecialties? Did this matter? Were there gender differences? This might be important as we put our programs together.

Criticisms of previous studies have included the fact that study measures have little to do with real laparoscopic surgery. What were the mistakes made other than the one example that you showed us? In the porcine part of the study, was there a reduction in dangerous moves that all of us would like to avoid on our patients?

Finally and probably the most important question is the durability of the training. Have you done any follow-up studies at the end of the internship year to compare the groups to see that there was at least a 6- to 12-month durable response? And more importantly, what happens as we go on to teach these individuals more complex operations?

Despite these criticisms, this is an important study. I compliment the authors on bringing it to us. All of us will need to improve the measures by which we educate our residents in these techniques.

Dr. Rebecca M. Minter (Ann Arbor, Michigan): The high cost of these simulators is a big consideration, and for this reason the University of Michigan has chosen to set up our simulation center as an institutional resource rather than solely within the Department of Surgery. Because of this, we have buy-in from all of the departments in the medical center, including nursing, and this has allowed us to purchase a number of high-end simulators with the cost spread across a number of departments. This may not be a feasible model at every institution. The high cost associated with these computer-aided simulators is one of the major reasons that we feel that these simulators must be clearly validated before they are implemented into a surgical curriculum. We were one of the first institutions to obtain the LapMentor™ and therefore undertook this study to determine how this simulator should be used and if its use could be validated.

Though this study was designed as a transfer validity study, the question of how this simulator compares to the cheaper box trainers is an important one. Other studies have shown that the box trainers are superior to computer-aided simulators for certain skills like intracorporeal suturing and knot tying where a true three-dimensional environment and haptic feedback are very important features. The LapMentor™ does have haptic feedback, but it is not perfect yet and hopefully they will continue to develop this feature of the simulator. We did not specifically look at the box trainers as a control as this was designed as a transfer validity study for this particular simulator, rather than a comparison between the box trainer and the LapMentor™, but this will certainly be an important thing to examine in future studies.

The time required for completion of the simulator exercises was approximately 4 hours for each of the interns. All of the interns were oriented as a group to the simulator tasks by myself and shown how to perform them properly. They then each performed a single repetition of each task with direction to be certain that they were performing the tasks properly. Just sending them to use a simulator without instruction is not the purpose of these simulators, and I think that is an important point. They must be implemented as a tool, as a part of a larger curriculum, not just as an expensive video game.

In terms of the interns, we were already dealing with the difficulty of a small study population so we could not limit recruitment to only the general surgery interns. Additionally, it was a requirement of our IRB that this study be made available to all interns. What we did do though, was to control the randomization of the categorical, designated preliminaries, and undesignated preliminaries to the two study groups, as well as the gender of the interns randomized. We had three female interns in each group. The numbers were really too small to perform subgroup analyses to look at gender differences and differences by handedness. Both of these issues would be very interesting to look at but would have to be done on a multi-institutional level. All of our categorical General Surgery and Urology interns participated, so there were three categorical interns in each group, two Urology interns, and the rest were either designated or undesignated preliminaries from other specialty groups.

The mistakes that were made in the animate operating room exercises, aside from the untrained subjects just taking longer, were largely related to inefficient use of the instrumentation and poor perceptual ability. They would frequently pass point and hit the liver or the spleen, or they would take forever getting there because they were very hesitant and weren't sure of the location of the three-dimensional object represented in the two-dimensional image. On the clipping and cautery exercises, we often observed the untrained interns starting to apply cautery prior to establishing contact with the vessel and standing on the cautery constantly until they reached the vessel, clearly an unsafe practice. This suggests that the simulator-trained subjects had improved perceptual ability as they did not apply the cautery until they had established contact with the target vessel.

The durability of training is an excellent question. Danny Scott's group at Tulane and a number of others have begun to try to address this question. It is not something that we evaluated in this particular study, but it would be interesting to do so in the future. We performed this study at the beginning of the academic year so that we could capture the interns at a time when they were truly untrained, and it would be interesting to follow up at the end of the year to see if these differences persist.

Dr. Ronald Clements (Birmingham, Alabama): I think it is safe to say that advanced minimally invasive surgery is no longer the surgery of the future; it is here today, and we need to train our residents to be proficient in these skills.

The unique environment of laparoscopy, as was pointed out in the manuscript, provides us with the ability to have simulation training prior to the resident participating in these cases, which hopefully will diminish their hesitancy and improve their performance in the operating room.

This presentation shows us the results of a randomized trial on a commercially available simulator versus no simulation training. As you have already seen, the subjects were randomized and were taught six previously designated skills.

I really have 3 subjects about which I would like to question the authors. Some of these have already been covered by Dr. Cioffi.

First, in relation to the study itself, did any of the interns have significant laparoscopic operative experience prior to the animal lab? I think that may explain why there was no difference in the zero-degree camera navigation and the one-hand transfer. As you are well aware, that is probably the skill that the intern is most likely to accomplish in the operating room. Also, the small numbers in the study may explain the fact that there was no difference there.

You also questioned each of the participants about their video game usage, and all of the subjects reported essentially none. I suspect that is a function of their busy schedules as surgery residents. Perhaps a better question to ask, and I would like to hear your comments on this, is what about their usage of video games in childhood and in their teen years, when perhaps our circuits aren't quite as hard-wired to think in the concrete world but are more open to the virtual world? Certainly, I know my sons can attain higher scores on their video games than I can, and that fact was well demonstrated prior to my departure for this meeting.

Regarding the expensive price of the LapMentor™ system, and you touched on this a little bit, but could this same result be achieved with a lower-tech alternative? And I suppose the point of the question is really, do we require high fidelity virtual trainers with their high price to get the same skills transferred to the interns?

You already addressed this somewhat, but have you noticed an improvement in actual operations based on this training system? Has it led to better instructor satisfaction and decreased frustration in the operating room by the teacher? If so, then the $100,000 price tag may be a good value.

Finally, in regard to implementing the training modality, has your residency program now required this of interns and required perhaps even more senior residents to spend time and train to these expert levels prior to operating on patients?

I am certainly interested in your ideas of how to incorporate virtual reality training into the 80-hour workweek. The fact that we have fewer residents on service to accomplish more operations and the increased demands for proficiency demonstration prior to credentialing makes it more difficult to mandate the residents spend more time in simulation training. My personal thoughts on the value of this technology are, as it is more clearly demonstrated to be of value, that program directors will place a greater emphasis on virtual reality training and the time spent mastering the virtual skill set. Perhaps also in the future, as we are trying to attract medical students into surgery, this may be a tool that they have grown up with (ie, the video game generation), and this might actually be an instrument we can use to attract better medical students into surgery training.

Dr. Rebecca M. Minter (Ann Arbor, Michigan): As to how we quantified the subjects’ prior experience, we had all subjects complete a one-page sheet capturing demographic data. They were asked how many cases they had driven a laparoscope for as a medical student and how many cases they had participated in as a resident. As it was the beginning of their intern year, none of the interns had logged any laparoscopic cases as residents. With regard to their experience as medical students, they all listed a number, but it was based on memory and only an estimate since they had not logged this information as students; they really weren't sure. Therefore, rightly or wrongly, we just had to assume that their medical school experience was likely similar and was not significantly different between interns.

We also asked them about their video game experience, both as a child and currently. For whatever reason, none of our interns had any significant video game experience, either prior or current. We do have one resident who is actually a national video game champion, and he did not participate.

The issue of whether or not the LapMentor™ is worth the expense if residents prefer its use is a good one. Certainly, the haptic feedback and the superb three-dimensional graphics are big draws for the residents. Additionally, on the procedural modules, this simulator demonstrates very high fidelity. When you strip the fat down off the cystic duct on the lap chole module, there is bleeding. If you tear the duct, there is a bile leak. If you avulse the artery, there is bleeding. The residents love the realistic look and feel of these procedural modules. We have other computer-aided simulators in our Simulation Center and the residents will stand in line to use the LapMentor™ because of its high fidelity. I think this contributes to its increased use as compared to some of our other simulators and the box trainers. The box trainers will typically stand empty while the computer-aided simulators get used all the time unless a faculty member specifically takes a resident to the box trainer to work on a specific task like intracorporeal suturing. This is probably due to the very reason that you suggest: the residents prefer these high-end, high-fidelity computer-aided simulators. I think, though, that studies such as this one are critically important as we look at implementing these simulators into the curriculum. Different simulators have varied strengths, and a curriculum should be built around data from studies such as these, not just based upon which simulators the residents prefer to use.

The way we are currently using these data at the University of Michigan is to build our laparoscopic curriculum, drawing on these data as well as data from other studies performed at our institution to select which exercises are the best for teaching specific skill sets. Additionally, we are using these data to develop focused and targeted remediation programs for any resident who may have a particular deficiency in the operating room. We can build a specific remediation plan using the LapMentor™, the LapSim™, and the box trainers based on data from this study and others that is focused and targeted to their weakness. It is a much more efficient and effective means of practice than just saying, “Go spend some time in the simulation center and come back to the OR when you are ready.” We are also developing a mandatory curriculum that we will require our residents to complete before they come to the OR.

With regard to how we are addressing simulator use time in the context of the 80-hour workweek, any time in the simulation center that is required is counted towards their 80 hours; however, independent practice does not count. Dr. Britt can correct me if we are wrong in our interpretation of these rules, but we see independent practice in the simulation center as no different from reading a textbook. We have an expectation that our trainees will prepare before they come to the operating room, whether it is reading about possible complications in a textbook, reviewing an atlas, or practicing in the simulation center. This is a critical aspect of independent, lifelong learning, not a duty hour issue. If we say, “Okay, everyone is going to go to the simulation center today to practice knot tying,” then we count those hours towards their duty hours.

Dr. Thomas R. Russell (Chicago, Illinois): I would like to ask you a question about LapMentor™. Are they leaders in this field? There is obviously a potentially huge market for developing such simulators in the future.

Secondly, you focused on resident training. Do you see any role for this sort of simulator in training people actually in practice? I am looking to the future with recertification and maintenance of certification, where all of us may have to become involved in this sort of simulator-based training to be eligible for renewal of license and certification.

Finally, do you think that you will be able to develop simulators that will basically replace the animal model?

Dr. Rebecca M. Minter (Ann Arbor, Michigan): We actually recently presented our data at the College in October on the construct validity of this particular simulator. I think the biggest shortfall of many of these computer-aided simulators is that the companies have put all of their time and money into developing the high-fidelity interface and the haptics, and have not spent nearly enough time and energy in developing the metrics. This is actually the reason that we set a minimum of 10 repetitions for each of our subjects. Our construct validity study was going on simultaneously with this study and actually demonstrated that the out-of-the-box metrics for the LapMentor™ are relatively insensitive for detecting differences in performance. The company is currently working on fixing the metrics based on this feedback. However, I think, if you are going to talk about using these simulators for high-stakes assessment like certification, these simulators will have to be rigorously validated, particularly with respect to the validity of the metrics. The LapMentor™ currently falls short in this department and is not ready for use in processes like maintenance of certification.

In terms of its face validity and how it looks and feels, it looks and feels very real and both our residents and faculty enjoy using it. There are simulators coming onto the market from all directions currently, and each is going to have to be carefully evaluated, particularly if they are going to be used for high-stakes assessment and certification processes. I don't think we are quite there yet.

Regarding the use of simulators to replace animal models, I think we may ultimately get there, but cost will be a huge issue. We currently use total human simulators in our Simulation Center for placement of chest tubes, managing airways, central line placement, and to practice codes. These human simulators are highly realistic but can also be used over and over again for these exercises, so the cost can be justified. Additionally, the lack of a true three-dimensional environment with computer-aided laparoscopic simulators will have to be addressed. The computer-aided simulators create the feeling of three dimensions, but they are still two-dimensional environments. How far the technology will progress with respect to the creation of a three-dimensional environment I do not know.

Dr. Michael L. Hawkins (Augusta, Georgia): Tell me about this video game. Do you assume that video game players are skilled and therefore exclude them from the study? Is there really any evidence that there is carry-over or cross-over from mortal combat or flight simulator and those types of games to what is hopefully not mortal combat in the operating room?

If so, that brings us back to a question I believe Dr. Flint raised about gender differences. Since it is probably more likely that more males play those games than females, what does this mean for the future of surgery, where currently perhaps as many as 50% of surgical residents are female?

Dr. Rebecca M. Minter (Ann Arbor, Michigan): Well, I would say that you are in very good shape if 50% of your surgical residents are female. We are not quite there yet. The gender differences question is an interesting one, and I believe that one study has evaluated this in medical students, but given that only approximately one third of the surgical residents in a program are female, this would really need to be done on a multi-institutional level to achieve sufficient numbers to make these sorts of comparisons.

With regard to the video game effect, two studies have demonstrated that this is a real effect. There is a positive correlation between an individual's video game experience and their laparoscopic performance. I assume this is not related to the mortal combat side of things but rather to the eye-hand coordination issue. Like any other technical exercise, this represents repetitive practice of a technical skill obtained while playing hours of video games.

Footnotes

Supported in part by a research grant from the Association for Surgical Education Foundation's Center for Excellence in Surgical Education, Research, and Training (Springfield, IL), and by the United States Surgical Corporation (Norwalk, CT).

Reprints: Rebecca M. Minter, MD, University of Michigan, Department of Surgery, 1500 East Medical Center Drive, Taubman Center TC2920H, Ann Arbor, MI 48109-0331. E-mail: rminter@med.umich.edu.

REFERENCES

- 1. Ziv A, Wolpe PR, Small SD, et al. Simulation-based medical education: an ethical imperative. Acad Med. 2003;78:783–788.

- 2. Youngblood PL, Srivastava S, Curet M, et al. Comparison of training on two laparoscopic simulators and assessment of skills transfer to surgical performance. J Am Coll Surg. 2005;200:546–551.

- 3. Jordan JA, Gallagher AG, McGuigan J, et al. Virtual reality training leads to faster adaptation to the novel psychomotor restrictions encountered by laparoscopic surgeons. Surg Endosc. 2001;15:1080–1084.

- 4. Fried GM, Derossis AM, Bothwell J, et al. Comparison of laparoscopic performance in vivo with performance measured in a laparoscopic simulator. Surg Endosc. 1999;13:1077–1081; discussion 1082.

- 5. Hyltander A, Liljegren E, Rhodin PH, et al. The transfer of basic skills learned in a laparoscopic simulator to the operating room. Surg Endosc. 2002;16:1324–1328.

- 6. Ahlberg G, Heikkinen T, Iselius L, et al. Does training in a virtual reality simulator improve surgical performance? Surg Endosc. 2002;16:126–129.

- 7. Gallagher AG, Ritter EM, Champion H, et al. Virtual reality simulation for the operating room: proficiency-based training as a paradigm shift in surgical skills training. Ann Surg. 2005;241:364–372.

- 8. Seymour NE, Gallagher AG, Roman SA, et al. Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Ann Surg. 2002;236:458–463; discussion 463–464.

- 9. Schijven MP, Jakimowicz JJ, Broeders IA, et al. The Eindhoven laparoscopic cholecystectomy training course. Improving operating room performance using virtual reality training: results from the first E.A.E.S. accredited virtual reality trainings curriculum. Surg Endosc. 2005;19:1220–1226.

- 10. Grantcharov TP, Kristiansen VB, Bendix J, et al. Randomized clinical trial of virtual reality simulation for laparoscopic skills training. Br J Surg. 2004;91:146–150.

- 11. Grantcharov TP, Bardram L, Funch-Jensen P, et al. Learning curves and impact of previous operative experience on performance on a virtual reality simulator to test laparoscopic surgical skills. Am J Surg. 2003;185:146–149.

- 12. Figert PL, Park AE, Witzke DB, et al. Transfer of training in acquiring laparoscopic skills. J Am Coll Surg. 2001;193:533–537.

- 13. Satava RM. Virtual reality surgical simulator: the first steps. Surg Endosc. 1993;7:203–205.

- 14. Matsumoto ED, Hamstra SJ, Radomski SB, et al. The effect of bench model fidelity on endourological skills: a randomized controlled study. J Urol. 2002;167:1243–1247.

- 15. Reznick R, Regehr G, MacRae H, et al. Testing technical skill via an innovative ‘bench station’ examination. Am J Surg. 1997;173:226–230.