Abstract

Four pigeons were exposed to two tandem variable-interval differential-reinforcement-of-low-rate schedules under different stimulus conditions. The values of the tandem schedules were adjusted so that reinforcement rates in one stimulus condition were higher than those in the other, even though response rates in the two conditions were nearly identical. Following this, a fixed-interval schedule with a value shorter than, longer than, or equal to that of the baseline schedule was introduced in each of the two stimulus conditions. Response rates during those fixed-interval schedules typically were higher in the presence of the stimuli previously correlated with the lower reinforcement rates than were those in the presence of the stimuli previously correlated with the higher reinforcement rates. Such effects of the reinforcement history were most prominent when the value of the fixed-interval schedule was shorter. The results are consistent with both incentive contrast and response strength conceptualizations of related effects. They also suggest methods for disentangling the effects of reinforcement rate on subsequent responding from those of the response rate with which it is confounded in many conventional schedules of reinforcement.

Keywords: behavioral history, reinforcement rates, within-subject comparison, fixed-interval schedules, differential-reinforcement-of-low-rate schedules, incentive contrast, key peck, pigeons

The study of behavioral history effects often involves a comparison of two different history-building conditions, the effects of which then are compared against those observed during identical final schedules of reinforcement in a history-testing condition. Using this type of procedure, differential effects of past reinforcement schedules on present performance have been demonstrated using both humans and other animals as subjects (e.g., Barrett, 1977; Freeman & Lattal, 1992; Nader & Thompson, 1987; Urbain, Poling, Millam, & Thompson, 1978; Weiner, 1964). Freeman and Lattal (1992), for example, reported differential fixed-interval (FI) performance by pigeons in the presence of different stimuli as a function of a history of responding according to either differential-reinforcement-of-low-rate (DRL) or fixed-ratio (FR) schedules. Because different schedules of reinforcement maintain different patterns, rates of response, and, often, rates of reinforcement, any or all of these variables could contribute to the performance dubbed a “history effect.” In the present experiment, we attempted to isolate the historical effects on current performance of different rates of reinforcement, while holding constant the rate of responding.

Precedent for considering the potential role of reinforcement history in present performance may be found generally in the incentive contrast literature (Crespi, 1942; Flaherty, 1982) and in Nevin's analysis of behavioral momentum (Nevin, 1974, 1979). Such precedent, in addition, may be found specifically in an earlier experiment conducted by Tatham, Wanchisen, and Yasenchack (1993). Several experiments have shown that after training with a small reinforcer magnitude, changing to a condition with a larger magnitude of reinforcement increases response rates over those observed in control animals that received only the large-magnitude reinforcer. Conversely, switching from a large- to small-magnitude reinforcer results in lower rates than those observed in a control group receiving only the small-magnitude reinforcer (Crespi, 1942; Flaherty, 1982). Although the results with reinforcer magnitude do not necessarily extrapolate directly to reinforcer rate, the incentive contrast effect does illustrate how changing a reinforcement parameter from the history-building (i.e., the conditions establishing the initial responding) to history-testing conditions (i.e., those conditions where the effects of the different history conditions are assessed) may differentially influence present responding.

An extensive body of experiments beginning with the seminal work of Nevin (1974) shows how current responding under a common contingency may be influenced by past conditions of reinforcement. For example, Nevin found that pigeons trained on a multiple variable-interval (VI) 30-s VI 120-s schedule responded more persistently in the previous VI 30-s component when extinction replaced both VI schedules. These findings may be cast as a behavioral history effect, one that Nevin has attributed to differences in reinforcement rate. It also is the case that different reinforcement contingencies controlling different response rates, but maintained by identical rates of reinforcement, are differentially resistant to change when a common disruptor is intruded (Lattal, 1989). Relative resistance to change of responding under an identical schedule following a history of exposure to two different schedules, however, has not been studied as a function of holding both the contingency and response rate constant while varying reinforcement rate during the history-building condition.

Tatham et al. (1993) suggested that large differences in reinforcement rate between history-building and history-testing schedules might diminish the effects of the previous conditions because larger differences in reinforcement rate presumably would be more discriminable. After three groups of rats were exposed to DRL 10-s, 30-s, or 60-s schedules, an FI 30-s schedule was put into effect. The most persistent DRL-like responding was expected following the DRL 30-s schedule history, because the change in reinforcement rates from the past to the current contingencies should have been least discriminable. Contrary to expectations, rats exposed to the DRL 60-s schedule that obtained an average of 0.37 reinforcers per min, and not those exposed to the DRL 30-s schedule with 0.91 reinforcers per min, had the most persistent low response rates during FI. Because response rates varied with reinforcement rates, response rates in the final history-building sessions were considerably lower under the DRL 60-s schedule than those under the DRL 10-s and 30-s schedules. The results suggest that differential response rates during history-building, and not the discriminative properties of reinforcement rate per se, influenced subsequent responding under the same reinforcement contingency.

Nonetheless, the findings of Tatham et al. (1993), in conjunction with those from studies of behavioral momentum and incentive contrast, invite consideration of the possibility of a reinforcement history effect, that is, an effect on subsequent responding as a function of reinforcement parameters rather than response parameters. These three perspectives each predict differences in responding in the history-testing condition as a function of differential reinforcement histories. Behavioral momentum theory does so on the basis of events in the history-building conditions. Other things being equal, it would predict less of a history effect when reinforcement rates in the history-building condition are higher, that is, these rates would be more resistant to change. The predictions of both discrimination and incentive contrast accounts consider reinforcement rate in the history-testing condition to be critical because the relative change between it and the history-building condition determines the effects. With incentive contrast, for example, relative response rates in the history-testing condition will change as a function of the relative shifts in reinforcement rate. Thus, going from more- to less-, or less- to more-frequent reinforcement between the two conditions will result in differential responding in the history-testing condition, with higher rates in the less- to more-frequent reinforcement shift. The discrimination account would seem to be more general, suggesting that the more discriminable the two conditions are because of differences in reinforcement rate (cf. Commons, 1979), the smaller would be the effects of the past schedule.

A more empirical rationale also exists for considering the potential role of reinforcement rate differences between the history-building and history-testing conditions. In previous experiments, as noted above, such potential effects have been confounded because different parameters of the reinforcer, such as magnitude or rate, generate different response rates. Several studies of behavioral history have examined performance differences in the testing condition as a function of different schedules, and thus response rates, during training. This analysis has been made while holding reinforcement rate constant across the history-building and history-testing conditions (e.g., Freeman & Lattal, 1992; LeFrancois & Metzger, 1993). In the present experiment, the opposite question was posed: How does exposure to different rates of reinforcement affect subsequent responding when response rates are held constant? We label such possible effects as reinforcement history effects because, if manifest, they would result from the interactions of history-building and history-testing reinforcement rates with equal response rates. The present experiment combined the observations of the different theoretical and empirical issues described above as the framework for examining the effects of different reinforcement rate histories on subsequent responding on a common schedule. To accomplish this experimentally, a tandem VI DRL schedule was used that allowed constant response rates across two disparate reinforcement rates during a history-building condition that was followed by exposure to FI schedules that arranged more or less frequent reinforcement in the history-testing condition than did the tandem schedules. The interest was in determining whether a reliable difference in response rates in a history-testing condition might develop as a function of different reinforcement rate histories.

Method

Subjects

Four experimentally naive male White Carneau pigeons were maintained at 80% of their free-feeding weights. Water and health grit were freely available in the home cage.

Apparatus

A Gerbrands Model G7105 operant conditioning chamber was housed in a Gerbrands Model G7210 sound- and light-attenuating enclosure. A response key (1.5-cm diameter), centered on the front panel of the work area and operated by a force of about 0.15 N, was transilluminated white or green by different 28-V DC bulbs covered with colored caps. Two 28-V DC bulbs covered by white caps and located toward the rear of the ceiling provided general illumination. Reinforcement was 2-s access to grain from a hopper located behind a circular aperture (6 cm diameter) centered on the work panel, with the lower edge 7 cm from the chamber floor. The food aperture was illuminated by two 28-V DC bulbs during reinforcement. Noise from a ventilation fan located on the back of the enclosure masked extraneous sounds. Control and recording operations were accomplished with a microcomputer (Dell 425s/NP) using MedPC experiment-control software and connected to the chambers by a MedPC interfacing system. A Gerbrands cumulative recorder (Model C-3) also was used.

Procedure

Hand-shaping of the key-peck response was followed by one session in which each key peck was reinforced. Following this, a VI schedule was introduced in which the average interreinforcer interval (IRI) was increased from 10 s to 120 s progressively over 12 sessions in the presence of the white keylight and the constant houselight. Sessions ended after 40 reinforcers. A constant-probability progression (Fleshler & Hoffman, 1962) consisting of 20 intervals was used to generate the VI schedules.
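As an illustration of how such a VI schedule can be generated, the following is a minimal sketch of the constant-probability progression in the form usually attributed to Fleshler and Hoffman (1962). The function name `fleshler_hoffman` and its parameters are hypothetical; the source does not give the program used in the experiment, and in practice the resulting intervals would be presented in an irregular order.

```python
import math


def fleshler_hoffman(mean_s: float, n: int = 20) -> list[float]:
    """Return n interval values (in seconds) whose arithmetic mean is mean_s.

    Uses the commonly cited closed form
        t_i = T * [1 + ln N + (N - i) ln(N - i) - (N - i + 1) ln(N - i + 1)],
    with 0 * ln(0) taken as 0. Hypothetical helper, not the authors' code.
    """
    def xlogx(x: int) -> float:
        # Convention: 0 * ln(0) = 0.
        return 0.0 if x == 0 else x * math.log(x)

    return [
        mean_s * (1 + math.log(n) + xlogx(n - i) - xlogx(n - i + 1))
        for i in range(1, n + 1)
    ]


# Example: the 20 intervals of a VI 120-s schedule, shortest to longest.
intervals = fleshler_hoffman(120.0, 20)
```

The bracketed terms telescope, so the 20 values average exactly to the nominal VI value while the conditional probability of reinforcement stays roughly constant over time since the last reinforcer.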

Thereafter, a series of history-building sessions was in effect in which different reinforcement rates were scheduled in two different stimulus conditions while response rates were equated (described below). This history-building was followed by a series of history-testing sessions in the presence of FI schedules with either a short or long IRI. Following a return to the history-building condition, a final series of history-testing sessions was conducted in which the FI schedule values were reversed from the previous history-testing series to allow within-subject comparisons of the effects of different FI values on the appearance of behavioral history effects.

During each of the history-building and history-testing conditions, two daily 20-min sessions occurred. The two sessions were associated with distinct stimuli: a flashing (on for 0.5 s and then off for 0.5 s) versus a constant houselight combined with a green versus a white response key. The order of the two daily sessions was determined by a coin toss, with the restriction that the same order could not occur for more than 3 consecutive days. The sessions were separated by 2 hr, during which time each pigeon was returned to its home cage. The stimulus combinations in each session, the final schedule values, and the IRIs during each condition are shown in Table 1.
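The constrained coin toss described above can be sketched as follows. The labels `"shorter-first"` and `"longer-first"`, the function name, and the way the restriction is enforced (forcing the opposite order once a run of three has occurred) are assumptions for illustration; the text specifies only that the same order could not occur on more than 3 consecutive days.

```python
import random


def session_orders(n_days: int, max_run: int = 3, rng=None) -> list[str]:
    """Draw a daily session order by coin toss, capping runs at max_run days.

    Hypothetical helper: when the toss would extend a run beyond max_run,
    the opposite order is used instead (an assumed enforcement rule).
    """
    rng = rng or random.Random()
    options = ("shorter-first", "longer-first")
    orders: list[str] = []
    for _ in range(n_days):
        choice = rng.choice(options)
        recent = orders[-max_run:]
        if len(recent) == max_run and all(o == choice for o in recent):
            # A fourth identical order in a row is not allowed; switch.
            choice = options[1] if choice == options[0] else options[0]
        orders.append(choice)
    return orders


# Example: orders for a 75-day condition with a fixed seed.
example_orders = session_orders(75, rng=random.Random(0))
```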

Table 1. Reinforcement schedules and median interreinforcer intervals (IRIs; ranges in parentheses), in seconds, over the last 10 sessions of the two history-building conditions (Build 1 and Build 2) and over the first 10 sessions of the history-testing conditions (Test 1 and Test 2). The houselight conditions (f = flashing; c = constant) and keylight colors (g = green; w = white) are indicated in parentheses below the labels Shorter (interreinforcer interval) and Longer (interreinforcer interval).

| Condition | Measure | Pigeon 1114: Shorter (f-g) | Pigeon 1114: Longer (c-w) | Pigeon 1117: Shorter (c-w) | Pigeon 1117: Longer (f-g) |
| --- | --- | --- | --- | --- | --- |
| Build 1 | Schedule | VI 20-s DRL 4-s | VI 120-s DRL 7-s | VI 20-s DRL 3.2-s | VI 120-s DRL 7-s |
| Build 1 | Median IRI | 32.7 | 218.0 | 29.5 | 198.0 |
| Build 1 | Range | (30.4–39.3) | (98.0–298.0) | (27.2–39.3) | (98.0–598.0) |
| Test 1 | Schedule | FI 218-s | FI 218-s | FI 29-s | FI 29-s |
| Test 1 | Median IRI | 221.6 | 222.8 | 30.2 | 30.1 |
| Test 1 | Range | (219.6–222.2) | (221.4–289.3) | (29.5–30.9) | (29.3–30.8) |
| Build 2 | Schedule | VI 20-s DRL 4-s | VI 120-s DRL 7-s | VI 20-s DRL 2-s | VI 120-s DRL 8-s |
| Build 2 | Median IRI | 33.8 | 131.3 | 24.6 | 398.0 |
| Build 2 | Range | (30.4–40.8) | (118.0–398.0) | (24.0–27.2) | (298.0–1198.0) |
| Test 2 | Schedule | FI 33-s | FI 33-s | FI 398-s | FI 398-s |
| Test 2 | Median IRI | 34.5 | 34.2 | 400.5 | 399.5 |
| Test 2 | Range | (34.2–35.1) | (33.6–36.0) | (399.5–402.0) | (398.5–402.5) |

During the first history-building condition, tandem VI DRL schedules were in effect in both daily sessions. The mean value of the IRI of the VI schedule was short (20 s) in one session and long (120 s) in the other to yield differences in reinforcement rates. The values of the DRL schedules in the two sessions also were adjusted across days to equate response rates in the two daily sessions. The final values of the tandem schedules for each pigeon are shown in Table 1. This condition was in effect for 75 days for Pigeons 1114, 928, and 933; and 85 days for Pigeon 1117.

Next, FI schedules were implemented to test the effects of the previous history-building condition. Except for the substitution of two identical FI schedules for the tandem VI DRL schedules, the procedure and stimuli were otherwise identical to the previous condition. The FI values, shown in Table 1, were determined by the median IRI of each of the final 10 shorter IRI history-building sessions for Pigeons 1117 and 928, and of the final 10 longer IRI history-building sessions for Pigeons 1114 and 933. Here and subsequently, the IRIs of the tandem VI DRL schedules were determined by dividing session time, excluding reinforcer access time, by the number of reinforcers. This history-testing condition was in effect for 30 days for each pigeon.
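The IRI calculation described above is simple arithmetic, sketched below. It assumes that the 20-min session duration includes the 2-s reinforcer access periods, which the text implies but does not state outright; the function name and the list of reinforcer counts are hypothetical. Under that assumption, whole-number reinforcer counts give values such as 98.0 s (12 reinforcers), 198.0 s (6), and 398.0 s (3), which match entries in Table 1.

```python
from statistics import median

SESSION_S = 20 * 60  # 20-min session, in seconds
ACCESS_S = 2         # reinforcer access time per reinforcer, in seconds


def obtained_iri(n_reinforcers: int,
                 session_s: float = SESSION_S,
                 access_s: float = ACCESS_S) -> float:
    """Session time, excluding reinforcer access time, per reinforcer."""
    if n_reinforcers <= 0:
        raise ValueError("IRI is undefined for a session without reinforcers")
    return (session_s - n_reinforcers * access_s) / n_reinforcers


# Hypothetical reinforcer counts for the final 10 sessions of one condition.
counts = [6, 5, 6, 4, 12, 6, 5, 5, 6, 5]
median_iri = median(obtained_iri(c) for c in counts)  # candidate FI value
```

Taking the median rather than the mean keeps a single session with very few reinforcers (for example, 1 reinforcer, IRI = 1198.0 s) from dominating the FI value chosen for the test.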

Following the history-testing condition, the previous history-building condition was reinstated, but, as before, the values of the DRL component of the tandem schedule were modified as required to equate response rates in the two daily sessions. Table 1 shows the final values of the tandem VI DRL schedules for each pigeon in the second history-building condition. This condition was in effect for 50 days for each pigeon.

The procedure of the second history-testing condition was the same as that of the first except that the large FI values were in effect for pigeons previously exposed to the small FI values in the first test, and vice versa. Table 1 shows the final value of the FI schedules for each pigeon in the second history-testing condition, which was determined as during the first history-testing condition. This second history-testing condition was in effect for 30 days for each pigeon.

Results

The data in Table 1 show that the median IRIs during the last 10 days in each history-building condition in the two multiple-schedule components (i.e., the two daily sessions) were markedly different from one another. This effect was consistent across all four pigeons. The data also show that the median FI IRIs in each history-testing condition for each pigeon were approximately equal to the appropriate preceding baseline tandem VI DRL IRI across stimulus conditions.

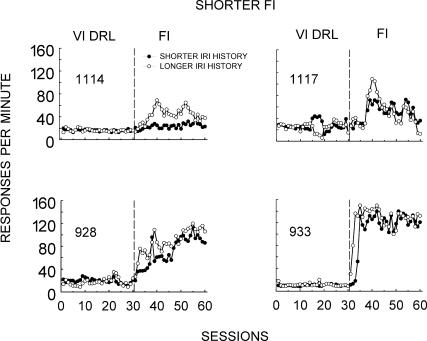

Figure 1 shows session-by-session response rates of each pigeon in each component for the last 30 tandem VI DRL history-building sessions and each of the history-testing sessions when the shorter FI schedule was in effect. During the tandem VI DRL schedules, response rates in the shorter IRI and the longer IRI components were approximately equal. As a further assessment of the differences in response rates between the two components during this history-building condition, sign tests were conducted separately for each subject. Table 2 shows the number of sessions of the last 15 in which response rates in the longer IRI condition were higher than those in the shorter IRI condition, and the statistical significance. For no subject did the number deviate from chance. Thus, the statistical tests are consistent with the visual impression that response rates in the shorter IRI and the longer IRI components did not differ systematically.
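For readers unfamiliar with the sign test, the following minimal sketch computes an exact binomial tail probability for counts of the kind reported in Table 2. Whether the reported tests were one- or two-tailed is not stated in the text; the sketch returns the one-tailed probability in the observed direction as an assumption, and the function name is hypothetical. Tied sessions are assumed to have been dropped before counting, as the table note describes.

```python
from math import comb


def sign_test_tail(k: int, n: int) -> float:
    """One-tailed exact sign-test probability under chance (p = 0.5).

    k: sessions in which the longer IRI history rate was the higher one.
    n: sessions counted (ties and missing sessions already excluded).
    Returns P(X >= k) when k is at least half of n, otherwise P(X <= k).
    """
    if n <= 0 or not 0 <= k <= n:
        raise ValueError("require n > 0 and 0 <= k <= n")
    tail = range(k, n + 1) if 2 * k >= n else range(0, k + 1)
    return sum(comb(n, i) for i in tail) / 2 ** n


# Example: 14 of 15 sessions in one direction.
p_14_of_15 = sign_test_tail(14, 15)  # 16 / 32768, about 0.0005
```

A two-tailed value, if preferred, is twice the one-tailed value (capped at 1) because the chance distribution is symmetric.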

Fig 1. Response rates of each subject for all sessions in which a shorter FI schedule was in effect and for the preceding 30 sessions in which tandem VI DRL schedules were in effect.

Filled circles represent responding under the stimuli correlated with a tandem VI DRL schedule that produced shorter IRIs, whereas open circles represent responding under the stimuli correlated with a tandem VI DRL schedule that produced longer IRIs.

Table 2. Number of sessions (numerators) in which response rates in the longer IRI history condition were higher than those in the shorter IRI history condition during the last 15 sessions of the history-building conditions, and the first and last 15 sessions of the history-testing conditions.

| Condition | Sessions | Pigeon 1114 | Pigeon 1117 | Pigeon 928 | Pigeon 933 |
| --- | --- | --- | --- | --- | --- |
| Shorter FI, Build | Last 15 | 6/12 | 9/14 | 9/15 | 5/9 |
| Shorter FI, Test | First 15 | 14/15** | 10/13* | 14/15** | 14/15** |
| Shorter FI, Test | Last 15 | 14/14** | 1/14**, a | 12/14** | 7/15 |
| Longer FI, Build | Last 15 | 1/12**, a | 6/14 | 2/15**, a | 7/13 |
| Longer FI, Test | First 15 | 0/14**, a | 11/14* | 9/13 | 8/15 |
| Longer FI, Test | Last 15 | 1/14**, a | 14/15** | 15/15** | 11/14* |

Note. Denominator values less than 15 indicate that the data of at least one session were excluded because the response rates in both conditions were equal (sign tests do not count such data) or data of one of the two conditions were absent.

a Statistically significant but in the reverse direction, indicating that sessions in which response rates in the longer IRI history condition were higher than those in the shorter IRI history condition were significantly fewer than would be expected by chance.

*p < .05.

**p < .01.

When identical shorter FI schedules were introduced in both daily sessions in the history-testing condition, response rates in the two stimulus conditions were, with the exception of the FI following the shorter IRI history for Pigeon 1114, higher than those in the history-building condition, an effect of the change from a low-rate contingency requirement to an FI schedule. To the extent that a history effect is present, it should be seen (both here and in Figure 2 that follows) in response rate differences between the two identical FI components. Response rates usually were higher in the FI component whose stimuli previously were correlated with the longer IRI history. This occurred on the first day of the FI exposure for Pigeons 928 and 933, and from the second day for Pigeons 1114 and 1117. Higher rates thereafter were observed in the former longer IRI component for 28 of the 30 sessions for Pigeon 1114, and for 26 of the 30 sessions for Pigeon 928. The results were more complex with Pigeons 933 and 1117. For the first 14 sessions with Pigeon 933, and for 11 of the first 16 sessions with Pigeon 1117, rates were higher in the stimulus condition previously correlated with the longer IRI history. Thereafter, however, the rates in the two historical conditions converged for Pigeon 933, and the rates for Pigeon 1117 reversed such that during the last 14 sessions rates were higher in the former shorter IRI component. These transient effects are consistent with other results showing behavioral history effects to be transient across sessions (e.g., Freeman & Lattal, 1992).

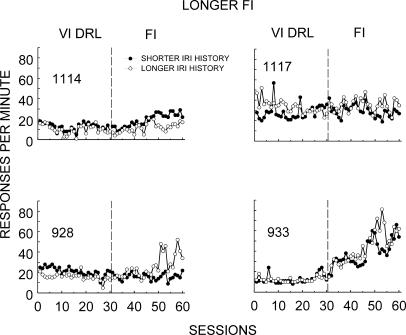

Fig 2. Response rates of each subject for all sessions in which a longer FI schedule was in effect and for the preceding 30 sessions in which tandem VI DRL schedules were in effect.

Filled circles represent responding under the stimuli correlated with a tandem VI DRL schedule that produced shorter IRIs, whereas open circles represent responding under the stimuli correlated with a tandem VI DRL schedule that produced longer IRIs.

Because effects of the reinforcement history were transient within the 30 testing sessions for two of the four pigeons, sign tests were conducted separately for the first and last 15 sessions. For each pigeon during the first 15 test sessions, the number of sessions in which response rates in the longer IRI history condition were higher than those in the shorter IRI history condition was significantly greater than would be expected by chance (Table 2). These results support the preceding descriptions of the results that response rates in the longer IRI history condition were higher than those in the shorter IRI history condition during at least the first 14 sessions of the shorter FI history-testing condition. Results of the sign tests for the last 15 history-testing sessions also are consistent with those from the visual inspections. That is, sessions in which response rates in the longer IRI history condition were higher than those in the shorter IRI history condition were significantly more frequent than would be expected by chance for Pigeons 1114 and 928, significantly less frequent for Pigeon 1117, and not significantly different from chance for Pigeon 933.

Figure 2 shows session-by-session response rates of each pigeon for the last 30 tandem VI DRL history-building sessions and each of the 30 history-testing sessions when the longer FI schedule was in effect. In the latter sessions of the history-building condition, response rates in the shorter IRI and longer IRI conditions were approximately equal for Pigeons 1117 and 933. For Pigeons 1114 and 928, the rates in the shorter IRI condition were somewhat higher than those in the longer IRI condition, but the absolute magnitude of the differences in the response rates between the two conditions was minimal. The median number of responses per min (ranges in parentheses) for the last 15 shorter IRI and longer IRI history-building sessions for Pigeons 1114 and 928, respectively, were 14.0 (5.0 to 18.0) and 11.5 (1.0 to 17.0), and 19.5 (10.0 to 22.0) and 15.0 (5.0 to 25.0). Results of the sign tests shown in Table 2 support the above descriptions. The number of sessions where response rates in the longer IRI condition were higher than those in the shorter IRI condition was within the range of what would be expected by chance for Pigeons 1117 and 933, whereas the number was significantly fewer than would be expected by chance for Pigeons 1114 and 928.

When identical longer FI schedules were introduced in both daily sessions in the history-testing condition, response rates in the two FI components increased reliably for only Pigeon 933, a reversal of the finding of increases in seven of eight cases when the transition was to the shorter FI schedule. This difference thus seems to be largely the result of the differences in reinforcement rates between the two conditions. In terms of differences in response rates between the two stimulus conditions, the rates were higher in the FI in the stimulus condition previously correlated with the longer IRI history from the second day of the FI exposure for Pigeon 1117, and from the third day with Pigeon 928. For these two pigeons, higher response rates during the previously longer IRI component were observed for 24 or 26 of the 30 sessions during which the condition was in effect. Pigeon 933 had higher response rates in the formerly longer IRI component during 17 of the last 21 sessions in this condition. Unlike Pigeons 1117 and 928, however, this effect for this pigeon became manifest only after several sessions of higher rates in the formerly short IRI component. Unlike the other three subjects, Pigeon 1114 consistently had higher response rates in the formerly short IRI component.

Results of sign tests generally support the preceding descriptions of the results (Table 2). There were significantly more sessions in which response rates in the longer IRI history condition were higher than those in the shorter IRI history condition than would be expected by chance during the first and last 15 sessions for Pigeon 1117, and only during the last 15 sessions for Pigeons 928 and 933. This number of sessions was significantly fewer than would be expected by chance for Pigeon 1114 during both the first and last 15 sessions.

Thus, to summarize the response rate findings, when the transition was to a short-valued FI schedule, response rates were significantly higher in the longer IRI history component. When the transition was to a long-valued FI schedule, two of the four subjects showed higher rates in the longer IRI history component, one showed indifferent rates, and the fourth showed higher rates in the shorter IRI history component. In all cases where there were differences in response rates in the two IRI history components, the differences tended to be small.

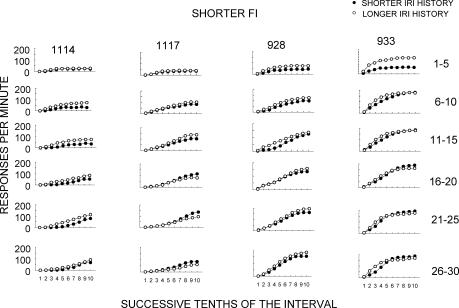

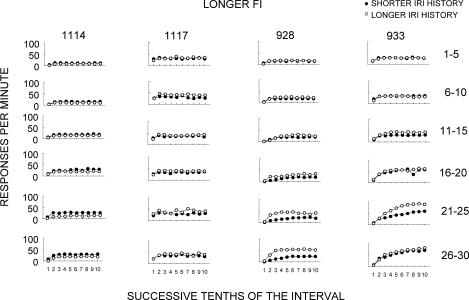

Figures 3 and 4 show response rates in successive tenths of the fixed interval for each pigeon under the shorter and longer FI schedules, respectively, averaged over successive five-session blocks of the FI history-testing conditions. Response rates usually increased and, in the case of the shorter FI schedule, often became more positively accelerated over successive blocks of sessions (although on many occasions response rates reached asymptote before the final tenths of the intervals). In the case of the longer FI schedules, response rates were lower at the beginning of the interval and increased somewhat thereafter, but only in the case of Pigeon 933, from session 16 onward, was there evidence of successively increasing responding of the sort shown more or less consistently during the shorter FI condition. The data in these figures also reiterate the overall response rate findings described for Figures 1 and 2, but now with response rates distributed across the FI. For all cases except for Pigeon 1114 in the longer FI history-testing condition, slightly but more or less consistently higher rates of responding occurred in the presence of the stimuli previously correlated with the longer IRI during the history-building condition.
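The tenths-of-the-interval measure can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used in the experiment: response times are assumed to be recorded in seconds from the start of each fixed interval, responses at or beyond the nominal FI value are ignored, and the function and parameter names are hypothetical.

```python
def rates_by_tenth(response_times_s: list[float],
                   fi_s: float,
                   n_intervals: int = 1) -> list[float]:
    """Responses per min in each successive tenth of a fixed interval.

    response_times_s: times since interval onset, pooled over n_intervals.
    fi_s: nominal FI value in seconds.
    """
    width = fi_s / 10  # duration of one tenth, in seconds
    counts = [0] * 10
    for t in response_times_s:
        if 0 <= t < fi_s:
            # Clamp to the last bin to guard against float rounding.
            counts[min(int(t / width), 9)] += 1
    # Convert counts to responses per minute of time spent in each tenth.
    return [c * 60.0 / (width * n_intervals) for c in counts]


# Example: four responses in a single FI 30-s interval.
example_rates = rates_by_tenth([1.0, 4.0, 4.5, 29.0], fi_s=30.0)
```

Averaging over a five-session block, as in Figures 3 and 4, would amount to pooling the response times from all intervals in the block and passing the total number of intervals as `n_intervals`.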

Fig 3. Response rates during successive tenths of the shorter FI for each subject.

From top to bottom, averaged values for Sessions 1 through 5, 6 through 10, 11 through 15, 16 through 20, 21 through 25, and 26 through 30 of the history-testing condition, respectively, are shown. Filled circles represent responding under the stimuli previously correlated with a tandem VI DRL schedule that produced shorter IRIs, whereas open circles represent responding under the stimuli previously correlated with a tandem VI DRL schedule that produced longer IRIs.

Fig 4. Response rates during successive tenths of the longer FI for each subject.

From top to bottom, averaged values for Sessions 1 through 5, 6 through 10, 11 through 15, 16 through 20, 21 through 25, and 26 through 30 of the history-testing condition, respectively, are shown. Filled circles represent responding under the stimuli previously correlated with a tandem VI DRL schedule that produced shorter IRIs, whereas open circles represent responding under the stimuli previously correlated with a tandem VI DRL schedule that produced longer IRIs.

The present FI pattern results could have been related to the fact that, because each session lasted for 20 min, a larger number of reinforcers occurred during the shorter FI schedules than during the longer FI schedules. We therefore evaluated the development of FI responding across successive reinforcers, rather than across successive sessions. With one exception, however, this analysis did not add usefully to what already has been discussed with respect to the effects of past reinforcement rates on present performance. When the number of reinforcers provided the basis for the comparison, the development of positively accelerated response rates across successive tenths of the FI generally was comparable for the longer and shorter-valued FI schedules. As noted, however, during the very early exposure to the FI schedules, response patterns across the IRI were flatter under the longer FI schedules, whereas they were accelerated under the shorter FI schedules. Ferster and Skinner (1957, pp. 142–185) studied transitions from continuous reinforcement to various values of FI schedules ranging from 1 to 45 min. Under shorter FI schedules, reinforcers tended to occur while response rates were high, whereas reinforcers with early exposure to longer FI schedules usually occurred after the rate had fallen to low levels. Although the range of the FI values was relatively small in the present experiment, and the transition was from tandem VI DRL to FI, the present experiment also illustrates subtle differences in the early development of FI responding as a function of values of an FI schedule.

Discussion

Unlike previous studies of behavioral history where response and reinforcement rates during the history-building conditions have either covaried (e.g., Tatham et al., 1993) or the latter have been held constant while allowing response rates to change (e.g., Freeman & Lattal, 1992; LeFrancois & Metzger, 1993), the present procedure examined historical effects of different reinforcement rates unconfounded by disparate response rates. In most studies of behavioral history effects, too, different rates of responding are developed in the two history-building conditions, which then are assessed in terms of their rate of convergence to a common level following introduction of the common, history-testing schedule (e.g., Baron & Leinenweber, 1995; Wanchisen, Tatham, & Mooney, 1989). The effects examined in the present experiment were the opposite of this. That is, the response rates were equalized during the history-building condition and divergence then became the test of the behavioral history effect.

The behavioral history effects observed here were small and transient relative to those observed when reinforcement rate is held constant and response rates are varied. The divergences from one another during the history-testing conditions were sufficient, however, to reach statistical significance in most instances. The rate and pattern of divergence varied across subjects and conditions. In some instances response rates remained similar immediately following the change to the history-testing condition (e.g., Pigeon 928 under the longer FI schedule), but in other cases the separation started early and continued at approximately the same level throughout (e.g., Pigeon 1117 during the longer FI condition and Pigeon 1114 during the shorter FI condition). Nonetheless, in most instances, the divergence was greater in the FI condition correlated with the lower reinforcement rate during the history-building condition. We label such effects as reinforcement history effects, to distinguish them from the more frequently discussed behavioral history effects (Freeman & Lattal, 1992) whereby different rates of responding in training persist in a subsequent common testing condition. The small effects obtained when reinforcement rate rather than response rate is the basis for a differential history suggest that the latter contributes more to behavioral history effects than does the former when the two are varied together, as in some of the earlier behavioral history studies (e.g., Weiner, 1964).

With Pigeons 1114 and 1117, the IRIs in the two long-IRI baseline conditions preceding the long and short FIs differed considerably (218 vs. 131 for Pigeon 1114 and 398 vs. 198 for Pigeon 1117); for the other two pigeons they did not. Ideally, the reinforcement rates would have been similar between the two baseline conditions; however, variation was an inevitable consequence of having to adjust the value of the DRL components of the tandem schedules to keep the response rates the same in the two components. Despite the differences in baseline reinforcement rates, similar results were obtained with Pigeons 1117, 928, and 933. Pigeon 1114 under the long FI showed the opposite effect from the other pigeons. Whether this difference was related to the shifting baselines is not known, but it remains a possibility.
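The size of these baseline discrepancies can be made concrete with a little arithmetic. The following is a minimal sketch, not part of the original analysis: it assumes the IRIs quoted above are expressed in seconds (the passage does not state the unit), and the helper names `reinforcers_per_hour` and `iri_ratio` are hypothetical, introduced only for illustration.

```python
# Illustrative only: converts the long-IRI baseline values quoted in the text
# into reinforcement rates and compares the two baselines for each pigeon.
# ASSUMPTION: IRIs are in seconds; the passage does not state the unit.

def reinforcers_per_hour(iri_seconds: float) -> float:
    """Reinforcement rate implied by a mean interreinforcer interval (IRI)."""
    return 3600.0 / iri_seconds

def iri_ratio(iri_a: float, iri_b: float) -> float:
    """Ratio of the longer to the shorter of two baseline IRIs."""
    return max(iri_a, iri_b) / min(iri_a, iri_b)

# The two long-IRI baseline values reported for each pigeon, in the order given.
baseline_iris = {"1114": (218, 131), "1117": (398, 198)}

for pigeon, (first, second) in baseline_iris.items():
    print(
        f"Pigeon {pigeon}: "
        f"{reinforcers_per_hour(first):.1f} vs {reinforcers_per_hour(second):.1f} "
        f"reinforcers/hr (IRI ratio {iri_ratio(first, second):.2f})"
    )
```

Under that unit assumption, the longer of the two baseline IRIs is roughly 1.7 times the shorter for Pigeon 1114 and roughly 2 times the shorter for Pigeon 1117.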

Response rates during the FI schedules increased from the preceding baselines in seven of eight cases when the transition was to the shorter FI schedule. Under the longer FI schedule, they remained unchanged or increased only slightly or inconsistently in six of eight cases, Pigeon 933 being the exception. Removing a contingency of differential reinforcement of long IRTs often increases response rates (cf. Freeman & Lattal, 1992, Experiment 3). If this were the case here, then response rates in both the short and long FI components might be expected to be higher than those in the previous tandem VI DRL component because the same IRT was removed simultaneously from both components. Increases relative to the previous tandem VI DRL schedule, however, occurred more consistently in the shorter FI component. This differential increase suggests that eliminating the IRT requirement was not the critical variable in increasing subsequent FI response rates. Rather, the differential increase is more reasonably attributed to the interaction between the reinforcement rate in the history-building condition and the temporal parameter of the FI schedule in the history-testing condition.

The data in Figures 3 and 4 show that, despite the small but consistent response rate differences, there were no consistent differences in patterns of FI responding as a function of these different histories, a finding in accord with that of Freeman and Lattal (1992). In Freeman and Lattal, one of the history-building conditions was an FR schedule, which yields a break-and-run pattern of responding not unlike the pause–respond pattern obtained on FI schedules. Thus, in the history-testing condition when the FI schedule replaces the FR schedule, the pattern of responding is likely to be at least qualitatively similar to the previously established FR break-and-run pattern. Such a similarity might be reason to predict that an FR history would result in more rapid adjustment of the response patterns to the fixed-reinforcer interval of the FI schedule than would a DRL history where such a break-and-run pattern was not observed. There was, however, no evidence of such an effect in Freeman and Lattal. The absence of differential temporal control in the present experiment therefore was not surprising. Unlike the similar history-building FR and history-testing FI patterns in Freeman and Lattal, nothing in the pigeons' history of responding on the history-building tandem schedules, which were identical except for DRL value, suggested a basis for differential pattern control during the subsequent FI history-testing condition.

Previous experiments analyzing the role of different reinforcement rates on subsequent responding have yielded mixed results. As described in the Introduction, Tatham et al. (1993) found no systematic differences in the effects of different reinforcement rates in the history-building condition, arranged by varying the value of a DRL schedule, on subsequent FI responding, a result made difficult to interpret because of the failure to separate response and reinforcement rates. Nevin (1974, 1979) has found consistently that lower reinforcement rates yield less resistance to extinction than do higher reinforcement rates. Because Nevin used VI schedules to generate different reinforcement rates, response rates varied with different reinforcement rates. To the extent that transitions to extinction can be compared to transitions to any other schedule or other disrupter (i.e., changing to either extinction or prefeeding are potential disrupters in the same sense that changing to either a relatively rich or relatively lean FI schedule is a potential disrupter), the present results are comparable to Nevin's findings that responding maintained by higher reinforcement rates is more resistant to change. Here, however, such effects occurred when response rates maintained by the two different reinforcement rates were equal. Indeed, when reinforcement rates are held constant, differences in persistence occur as a function of differences in rate-controlling contingencies (Lattal, 1989). The present results complement these latter findings by showing a subtle but consistent effect of reinforcement rate unconfounded by response-rate differentials. The small size of the effects suggests that perhaps much of what has been previously described as an effect of reinforcement rate should be tempered by acknowledging that reinforcement rate differences by themselves make only a small contribution.

The role of the contingencies during the testing conditions may be related to both the discrimination hypothesis (Tatham et al., 1993) and incentive contrast effects (Crespi, 1942; Flaherty, 1982), in that both consider the changed-to conditions when predicting behavioral effects. In those cases where the shift was from the history-building condition to the shorter FI schedule in the testing condition, the discrimination hypothesis would predict more persistence in the stimulus condition associated with the shorter rather than the longer IRI during the history-building condition; that is, rates will be more like baseline in the shorter IRI history condition. This was observed with all four pigeons. Generalizing from the incentive contrast findings, relatively higher rates in the longer IRI history condition would be predicted because the pigeon is getting more frequent food delivery in the testing than in the history-building condition. By the same token, response rates in the component with the shorter IRI history should be lower than those in the component with the longer IRI history. These effects also were observed in all four animals. In those cases where the shift was from the history-building condition to the longer FI schedule, the discrimination hypothesis predicts more persistence in the components with the longer than with the shorter IRI history; that is, rates would be more like those in the component with the longer IRI during the history-building condition. Except for Pigeon 1114, such effects were not observed with any of the subjects. From the standpoint of incentive contrast, lower rates would be expected in the shorter IRI history component than in the longer one. This effect was obtained in three of four pigeons. Thus, the results offer only mixed support for the discrimination hypothesis, but are for the most part consistent with the incentive contrast findings.

The use of a within-subject design to assess behavioral history effects (cf. Freeman & Lattal, 1992; Ono & Iwabuchi, 1997) allowed the isolation of small but consistent differences in responding as a function of different rates of reinforcement. One potential difficulty with such designs, however, is the possibility of behavioral interactions between the two components of the schedule, that is, between the early and late sessions within a day. Response rates in the two daily sessions tended to covary, as the data in Figures 1 and 2 show. Such covariation might mask incentive contrast effects by causing the two response rates to track one another more closely than they might in the absence of the other stimulus condition. Even if such induction between components was operative here, the systematic differences in the FI schedules still occurred as a function of the past reinforcement rates and the interactions between the past and present reinforcement rates.

References

- Baron A, Leinenweber A. Effects of a variable-ratio conditioning history on sensitivity to fixed-interval contingencies in rats. Journal of the Experimental Analysis of Behavior. 1995;63:97–110. doi: 10.1901/jeab.1995.63-97.

- Barrett J.E. Behavioral history as a determinant of the effects of d-Amphetamine on punished behavior. Science. 1977;198:67–69. doi: 10.1126/science.408925.

- Commons M.L. Decision rules and signal detectability in a reinforcement-density discrimination. Journal of the Experimental Analysis of Behavior. 1979;32:101–120. doi: 10.1901/jeab.1979.32-101.

- Crespi L.P. Quantitative variation of incentive and performance in the white rat. American Journal of Psychology. 1942;55:467–517.

- Ferster C.B, Skinner B.F. Schedules of reinforcement. New York: Appleton-Century-Crofts; 1957.

- Flaherty C.F. Incentive contrast: A review of behavioral changes following shifts in reward. Animal Learning and Behavior. 1982;10:409–440.

- Fleshler M, Hoffman H.S. A progression for generating variable-interval schedules. Journal of the Experimental Analysis of Behavior. 1962;5:529–530. doi: 10.1901/jeab.1962.5-529.

- Freeman T.J, Lattal K.A. Stimulus control of behavioral history. Journal of the Experimental Analysis of Behavior. 1992;57:5–15. doi: 10.1901/jeab.1992.57-5.

- Lattal K.A. Contingencies on response rate and resistance to change. Learning and Motivation. 1989;20:191–203.

- LeFrancois J.R, Metzger B. Low-response-rate conditioning history and fixed-interval responding in rats. Journal of the Experimental Analysis of Behavior. 1993;59:543–549. doi: 10.1901/jeab.1993.59-543.

- Nader M.A, Thompson T. Interaction of methadone, reinforcement history, and variable-interval performance. Journal of the Experimental Analysis of Behavior. 1987;48:303–315. doi: 10.1901/jeab.1987.48-303.

- Nevin J.A. Response strength in multiple schedules. Journal of the Experimental Analysis of Behavior. 1974;21:389–408. doi: 10.1901/jeab.1974.21-389.

- Nevin J.A. Reinforcement schedules and response strength. In: Zeiler M.D, Harzem P, editors. Reinforcement and the organization of behaviour. Chichester, England: Wiley; 1979. pp. 117–158.

- Ono K, Iwabuchi K. Effects of histories of differential reinforcement of response rate on variable-interval responding. Journal of the Experimental Analysis of Behavior. 1997;67:311–322. doi: 10.1901/jeab.1997.67-311.

- Tatham T.A, Wanchisen B.A, Yasenchack M.P. Effects of differential-reinforcement-of-low-rate schedule history on fixed-interval responding. The Psychological Record. 1993;43:289–297.

- Urbain C, Poling A, Millam J, Thompson T. d-Amphetamine and fixed-interval performance: Effects of operant history. Journal of the Experimental Analysis of Behavior. 1978;29:385–392. doi: 10.1901/jeab.1978.29-385.

- Wanchisen B.A, Tatham T.A, Mooney S.E. Variable-ratio conditioning history produces high- and low-rate fixed-interval performance in rats. Journal of the Experimental Analysis of Behavior. 1989;52:167–179. doi: 10.1901/jeab.1989.52-167.

- Weiner H. Conditioning history and human fixed-interval performance. Journal of the Experimental Analysis of Behavior. 1964;7:383–385. doi: 10.1901/jeab.1964.7-383.