Abstract

An observing procedure was used to investigate the effects of alterations in response–conditioned-reinforcer relations on observing. Pigeons responded to produce schedule-correlated stimuli paired with the availability of food or extinction. The contingency between observing responses and conditioned reinforcement was altered in three experiments. In Experiment 1, after a contingency between the observing response and conditioned reinforcement was established in baseline, it was removed, and the schedule-correlated stimuli were presented independently of responding according to a variable-time schedule. The rate of stimulus presentations under the variable-time schedule was yoked to the rate obtained in baseline. The removal of the observing contingency reliably reduced rates of observing. In Experiment 2, resetting delays to conditioned reinforcement were imposed between observing responses and the schedule-correlated stimuli they produced. Delay values of 0, 0.5, 1, 3, and 10 s were examined. Rates of observing varied inversely as a function of delay value. In Experiment 3, signaled and unsignaled resetting delays between observing responses and schedule-correlated stimuli were compared. Baseline rates of observing were decreased less by signaled delays than by unsignaled delays. Disruptions in response–conditioned-reinforcer relations thus produce behavioral effects similar to those found with primary reinforcement.

Keywords: observing, conditioned reinforcement, response-reinforcer relations, treadle press, key peck, pigeon

Techniques used to explore the effects of altering response–primary-reinforcer relations (e.g., Gleeson & Lattal, 1987; A. Williams & Lattal, 1999) on the maintenance of behavior have included removing the response–reinforcer dependency and introducing delays between the response and the primary reinforcer (e.g., food). Response rates decrease in a negatively accelerated fashion as delay value increases (e.g., Gleeson & Lattal, 1987; Reilly & Lattal, 2004; Schaal & Branch, 1990; Sizemore & Lattal, 1978), and signaled delays maintain higher response rates than unsignaled delays of equal duration (e.g., Richards, 1981; Schaal & Branch, 1988). When the dependency between a response and a primary reinforcer is removed, but the consequence continues to occur independently of responding, response rates decrease, sometimes to zero (e.g., Lattal & Maxey, 1971; Rescorla & Skucy, 1969; Zeiler, 1968; cf. Lieving & Lattal, 2003; Neuringer, 1970).

Response–conditioned reinforcer relations have been examined within traditional procedures for investigating conditioned reinforcement effects. Royalty, Williams, and Fantino (1987) introduced delays to response-produced discriminative stimuli in three-component chained variable-interval (VI) schedules. The effects of delays on responding to produce these stimuli were comparable to the effects of delay to primary reinforcement.

Marr and Zeiler (1971) investigated the effects of altering the dependency between responding and conditioned reinforcement. Brief stimulus presentations were superimposed on fixed-interval (FI) schedules, and response rates were enhanced locally (i.e., in the early parts of the FI). When brief stimulus presentations were delivered independently of responding, response rates were suppressed (locally) relative to conditions that arranged response-dependent stimulus presentations. The conjoint schedules used by Marr and Zeiler, however, share a methodological shortcoming with chained-schedule procedures: the response whose frequency is interpreted as changing because of conditioned reinforcement is the same response that ultimately produces the primary reinforcer. More importantly, the effects attributed to conditioned reinforcement in that study were not strong enough to affect overall response rates, the most common measure of reinforcer efficacy in this literature. Although local patterning was affected by brief stimulus presentations, suppression of responding by response-independent brief stimulus presentations occurred only during the early portions of the FI schedule, and for only 1 of the 2 subjects. The results therefore did not show unequivocally the effects of altering response–conditioned-reinforcer relations by comparing response-dependent and response-independent conditioned-reinforcer delivery. Similarly, B. Williams and Dunn (1991) superimposed stimulus presentations on a concurrent-chains procedure. Additional presentations of stimuli that were typically followed by food delivery were made contingent on choice, but these additional presentations were not followed by food. Under these conditions, choice was a function of the relative frequency of the additional stimulus presentations, which provided evidence for their conditioned-reinforcing effect on choice responding within a chained-schedule context.

In contrast to chained schedules and second-order schedules, the observing procedure uncouples the two sources of reinforcement (primary and conditioned) by requiring two operants for their production (Wyckoff, 1952). Stimulus changes correlated with the presence or absence of a reinforcement contingency, for example, are made contingent on one response, and the production of the primary reinforcer (e.g., food) is made contingent on a second response, as in Wyckoff's (1952) procedure and in variations of that method (e.g., Bowe & Dinsmoor, 1983; Branch, 1970, 1973; Kelleher, Riddle, & Cook, 1962; Shahan, 2002; Shahan, Magee, & Dobberstein, 2003). For this reason, the observing procedure may permit more definitive conclusions about the effects of conditioned reinforcement on behavioral maintenance than procedures that measure a single response producing both primary and conditioned reinforcement. The observing procedure allows response–reinforcer relations to be examined with less confounding by the effects of primary reinforcement on the response in question (see B. Williams, 1994, for a review).

In the present study, the behavioral effects of altering the relation between responding and conditioned reinforcement were examined by using an observing procedure. This relation was altered by (1) manipulating the presence or absence of a dependency between observing and conditioned reinforcement, (2) imposing resetting delays between observing responses and the conditioned reinforcers they produced, and (3) adding signals to resetting delays between observing responses and the conditioned reinforcers they produced.

Experiment 1

The effect of removing the response–conditioned reinforcer dependency on behavior maintained by conditioned reinforcement was investigated in Experiment 1. This was achieved by comparing baselines in which behavior produced conditioned reinforcement to conditions in which response-independent conditioned reinforcement was delivered at rates equal to those in the response-dependent reinforcement baseline.

Method

Subjects

Three White Carneau pigeons with experimental histories were used (Pigeons 200, 166, and 35). Each was maintained at 80% of ad libitum weight via postsession feedings. Free access to water and health grit was available in each pigeon's home cage.

Apparatus

A standard two-key pigeon operant chamber was used. The chamber was housed in a sound-attenuating enclosure, with a ventilation fan that masked extraneous noise. The right response key could be transilluminated red, green, or white (the left key remained dark and inoperative throughout Experiments 1 and 2), and required approximately 0.15 N to operate. A 5-cm-wide rodent response lever protruded 2 cm from the work panel and was located 8 cm from the floor and 6 cm left of the hopper. During some conditions, an L-shaped treadle was suspended from the response lever. The treadle was 5 cm wide at the lever and widened to 7 cm at the foot. The foot of the treadle protruded 5 cm from the base and was approximately 2 cm from the floor of the chamber. Pigeons could step onto the treadle and release it. A microswitch was operated when the treadle was released, and its closure constituted the treadle-press response. Reinforcement was 3-s access to a solenoid-operated hopper that elevated into an aperture centered on the work panel 2 cm above the floor. General illumination (except during reinforcement) was provided throughout the sessions by a houselight located behind a 4 cm × 4 cm translucent cover at the bottom right corner of the work panel. A microcomputer operating with Med-PC software was used to schedule contingencies and record experimental events.

Procedure

Pretraining

For each pigeon, key pecking was maintained on a multiple VI 60-s extinction (Ext) schedule. The key was transilluminated green during VI and red during Ext (i.e., green = S+, red = S−). The VI schedule consisted of 20 intervals generated by the progression described by Fleshler and Hoffman (1962), with each interval selected without replacement. The VI and Ext components were 80 s in duration and alternated quasi-randomly with the restriction that a particular component could not occur more than three times in succession. Sessions were 80 min in duration and were conducted daily. During this condition, the treadle was unavailable.
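The Fleshler and Hoffman (1962) progression has a closed form, and the component-alternation rule is easy to state in code. The Python sketch below generates the 20 intervals for a given mean, draws them without replacement, and builds a quasi-random VI/Ext sequence in which neither component occurs more than three times in succession. The function names are hypothetical, and the rule used to enforce the run limit (scheduling the other component whenever a fourth repeat would occur) is an assumption; the original control programs may have enforced the restriction differently.

```python
import math
import random
from typing import List, Optional


def fleshler_hoffman(mean_interval: float, n: int = 20) -> List[float]:
    """Return the n intervals (s) of the Fleshler-Hoffman (1962) progression.

    t_k = T * [1 + ln N + (N - k) ln(N - k) - (N - k + 1) ln(N - k + 1)]
    for k = 1..N, with 0 * ln(0) taken as 0. The list is in ascending order
    and its mean equals T because the log terms telescope.
    """
    def xlnx(x: float) -> float:
        return x * math.log(x) if x > 0 else 0.0

    return [
        mean_interval * (1.0 + math.log(n) + xlnx(n - k) - xlnx(n - k + 1))
        for k in range(1, n + 1)
    ]


def shuffled(intervals: List[float], rng: random.Random) -> List[float]:
    """One pass through the intervals in random order (selection without replacement)."""
    order = list(intervals)
    rng.shuffle(order)
    return order


def component_sequence(n_components: int, max_run: int = 3,
                       rng: Optional[random.Random] = None) -> List[str]:
    """Quasi-random VI/Ext order with no more than max_run of one component in a row.

    Assumed rule: if a random draw would extend a run past max_run,
    the other component is scheduled instead.
    """
    rng = rng if rng is not None else random.Random()
    seq: List[str] = []
    for _ in range(n_components):
        choice = rng.choice(["VI", "Ext"])
        if len(seq) >= max_run and all(c == choice for c in seq[-max_run:]):
            choice = "Ext" if choice == "VI" else "VI"
        seq.append(choice)
    return seq


# An 80-min session of 80-s components contains 60 components; VI 60 s has a 60-s mean.
vi_intervals = shuffled(fleshler_hoffman(60.0), random.Random(0))
components = component_sequence(60, rng=random.Random(0))
```

Forcing the alternative component slightly biases the sequence toward alternation; a rejection-sampling rule would be an equally plausible reading of "quasi-randomly."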

After a minimum of 20 sessions, a discrimination ratio was calculated for each of the last 3 sessions by dividing the number of pecks during the VI by the total number of pecks during the session. Pretraining was completed when this ratio equaled or exceeded .95 for 3 consecutive sessions, and overall rates of pecking were judged stable on visual inspection for the last 6 sessions.
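The ratio criterion is simple arithmetic on per-session peck counts. A minimal sketch, assuming each session is summarized as a (VI pecks, total pecks) pair; the additional requirement that overall rates be stable on visual inspection is not modeled, and the function names are hypothetical.

```python
from typing import List, Tuple


def discrimination_ratio(vi_pecks: int, total_pecks: int) -> float:
    """Pecks during the VI component divided by all pecks in the session."""
    if total_pecks == 0:
        return 0.0  # assumption: a session with no pecks fails the criterion
    return vi_pecks / total_pecks


def meets_ratio_criterion(sessions: List[Tuple[int, int]], criterion: float = 0.95,
                          consecutive: int = 3, min_sessions: int = 20) -> bool:
    """True when at least min_sessions have been run and each of the last
    `consecutive` sessions has a ratio at or above the criterion."""
    if len(sessions) < min_sessions:
        return False
    recent = sessions[-consecutive:]
    return all(discrimination_ratio(vi, total) >= criterion for vi, total in recent)
```

After treadle-press training, the same check would apply with `min_sessions=10`.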

Four treadle-press-training sessions then were conducted to establish the treadle-press response using 3-s access to mixed grain as the reinforcer. During these sessions, the keylights were extinguished, and the houselight was illuminated. Following treadle-press training, the multiple VI Ext schedule again was in place for key pecking and the treadle was removed from the chamber. Discrimination sessions were continued for a minimum of 10 additional sessions, and until the criteria described above were met. Following discrimination training, observing contingencies were imposed and withdrawn as described below. The order of conditions for each pigeon and number of sessions conducted within each condition are summarized in Table 1.

Table 1. Sequence of conditions for Experiment 1 and the number of sessions conducted for each.

| Pigeon | Contingency for conditioned reinforcement | Number of sessions |
|---|---|---|
| 200 | FR 1 | 53 |
| | VT 41.8 s | 22 |
| | FR 1 | 28 |
| | VT 46.1 s | 26 |
| 166 | FR 1 | 54 |
| | VT 47.3 s | 22 |
| | FR 1 | 31 |
| | VT 19.0 s | 20 |
| 35 | FR 1 | 37 |
| | VT 18.1 s | 52 |
| | FR 1 | 41 |

Observing Condition

During this condition the treadle was reintroduced into the chamber, the stimuli correlated with the VI and Ext components were turned off, and the key light was transilluminated white (i.e., a mixed VI 60-s Ext schedule was in effect). Treadle presses during the VI component of this and all subsequent conditions changed the key color from white to green for 5 s and initiated a 3-s changeover delay (COD), during which food reinforcement was unavailable; each treadle press during the COD reset it. When the COD elapsed, VI food reinforcement again was available for key pecks. If an Ext component was scheduled to begin during the 5-s stimulus presentation, the key color reverted to white with the onset of the Ext component, so the obtained S+ duration could be less than 5 s. Treadle presses during the Ext component had no programmed consequence. All other aspects of the procedure remained the same as in pretraining. In this and all subsequent experiments, conditions were changed when at least 20 sessions had been conducted and the number of observing responses per session was judged to be stable on visual inspection for 4 to 5 consecutive sessions.
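The resetting COD and the truncation of S+ by an Ext onset can each be expressed as a small check. In this hypothetical sketch, times are in seconds from session start and `press_times` holds treadle presses during the VI component in ascending order. Whether a treadle press during an ongoing S+ restarts the S+ is not specified in the procedure, so it is not modeled.

```python
import bisect
from typing import List


def cod_active(t: float, press_times: List[float], cod: float = 3.0) -> bool:
    """True if a food-key peck at time t falls within the resetting COD.

    Because every treadle press restarts the COD, only the most recent
    press at or before t matters.
    """
    i = bisect.bisect_right(press_times, t)
    if i == 0:
        return False  # no treadle press has occurred yet
    return t - press_times[i - 1] < cod


def obtained_s_plus(press_time: float, ext_onset: float, s_plus: float = 5.0) -> float:
    """Obtained S+ duration: the scheduled S+ ends early if Ext begins first."""
    return max(0.0, min(s_plus, ext_onset - press_time))
```

For presses at 0, 1, 2, and 10 s, a peck at 4.9 s still falls within the COD started by the press at 2 s, whereas a peck at 5.0 s can be reinforced.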

Response-independent Conditioned Reinforcement

Following completion of the observing condition, the mean obtained interval between S+ presentations (i.e., the inter-conditioned-reinforcer interval) was derived for each pigeon from the times between S+ presentations across the final six sessions of the observing condition, excluding the time that Ext was in effect. The inter-conditioned-reinforcer times were summed and divided by the total number of S+ presentations. This value then was used as the mean of a 20-interval variable-time (VT) schedule generated from a Fleshler-Hoffman (1962) distribution.
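The yoking computation, and the suspension of the VT timer during Ext, can be sketched as follows. This assumes the inter-S+ times have already had Ext time removed, and it assumes (our assumption, not stated in the procedure) that the VT timer keeps running during the S+ presentations themselves. The function names are hypothetical.

```python
from typing import Iterable, List, Sequence, Tuple


def yoked_vt_mean(inter_s_plus_s: Sequence[Sequence[float]],
                  s_plus_counts: Sequence[int]) -> float:
    """Mean inter-S+ interval (s): summed inter-S+ times (Ext time excluded)
    over the final sessions, divided by the total number of S+ presentations."""
    total_time = sum(sum(session) for session in inter_s_plus_s)
    total_s_plus = sum(s_plus_counts)
    if total_s_plus == 0:
        raise ValueError("no S+ presentations to yoke to")
    return total_time / total_s_plus


def vt_onsets(vt_intervals: Iterable[float],
              vi_periods: Sequence[Tuple[float, float]]) -> List[float]:
    """Session times of response-independent S+ onsets when the VT timer runs
    only during VI periods, given as (start, end) in s, and is suspended in Ext."""
    onsets: List[float] = []
    intervals = iter(vt_intervals)
    remaining = next(intervals, None)
    for start, end in vi_periods:
        t = start
        while remaining is not None and t + remaining <= end:
            t += remaining
            onsets.append(t)
            remaining = next(intervals, None)
        if remaining is not None:
            remaining -= end - t  # carry the unelapsed part into the next VI period
    return onsets
```

The VT intervals passed to `vt_onsets` would be the 20 Fleshler-Hoffman values computed from the yoked mean, drawn without replacement.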

The mixed VI Ext schedule of food reinforcement continued to operate as in the previous condition, but treadle presses had no programmed consequences. When the VI schedule was in effect, S+ presentations were delivered independently of responding according to the yoked VT schedule described above. Operation of the VT timer was suspended during Ext. All other aspects of the procedure remained the same.

When treadling rate stabilized, the observing contingency was reinstated and conditioned reinforcers were delivered on a fixed-ratio (FR) 1 schedule. For Pigeon 166, baseline levels of observing were not recovered following exposure to the VT schedule of conditioned reinforcer delivery. This pigeon reliably paused for 4–5 s following the onset of S+, presumably because of the COD. In an attempt to recover observing, the duration of the S+ was changed from 5 to 10 s for this pigeon only. After increasing the duration of S+, Pigeon 166 began to peck the food key during S+, occasionally producing food. Observing then quickly recovered to baseline levels. For the remainder of the experiment, the duration of S+ presentations was 10 s for this subject.

Pigeons 200 and 166 completed a fourth condition in which the yoking procedure used to remove the contingency between observing and conditioned reinforcement was reinstated.

Results and Discussion

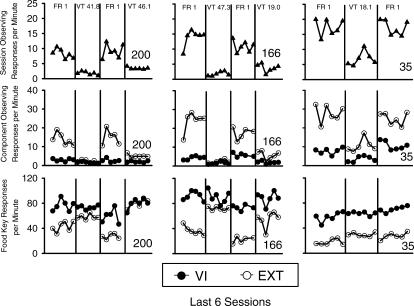

Observing and food-key response rates across the last six sessions of each condition are shown for each pigeon in Figure 1. Overall observing responses per min are shown in the top three panels, and observing responses per min separated by component (VI or Ext) are shown in the middle three panels. Food-key responses per min separated by component are shown in the bottom three panels. Overall rates of observing were calculated by dividing the total number of observing responses by the adjusted session time (total session time minus food-delivery time). Component (i.e., separated) rates were calculated by dividing the number of responses in a component by the session time during which that component was in effect; for the VI component, food-delivery time was subtracted as for overall rates.

Fig 1. Overall observing rates (top three panels), observing rates separated by multiple-schedule component (middle three panels), and food-key response rates (bottom three panels) during each of the final six sessions of each condition of Experiment 1.

The condition headings refer to the schedule of conditioned reinforcement in effect. The filled circles denote response rates during the VI component of the multiple VI Ext schedule, and empty circles denote response rates during the Ext component. Dissociated rates of observing (middle) and food key (bottom) responding were lost for the sixth session in the third condition and the fifth session of the third condition for Pigeons 200 and 166, respectively.
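The overall and component rate calculations described above amount to dividing a response count by a corrected time base. A minimal sketch with hypothetical function names, assuming times in seconds and rates in responses per minute:

```python
def overall_rate(responses: int, session_s: float, food_s: float) -> float:
    """Responses per min, with food-delivery (hopper) time removed from the session."""
    return responses / ((session_s - food_s) / 60.0)


def component_rate(responses: int, component_s: float, food_s: float = 0.0) -> float:
    """Responses per min during one component; pass food_s only for the VI
    component, because no food is delivered during Ext."""
    return responses / ((component_s - food_s) / 60.0)
```

For example, an 80-min (4,800-s) session with twenty 3-s food deliveries leaves 79 min of adjusted session time.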

When the dependency between the observing response and conditioned reinforcement was removed, overall rates of observing decreased reliably both within and between subjects. This finding is consistent with studies that have demonstrated this effect with primary reinforcement (e.g., Lattal & Maxey, 1971; Rescorla & Skucy, 1969; Zeiler, 1968). The removal of the dependency did not eliminate observing, which also is consistent with studies that have found continued responding under time-based schedules following a history of response-dependent reinforcement (e.g., Lieving & Lattal, 2003; Neuringer, 1970), and with findings that observing sometimes persists even under extinction (e.g., Branch, 1970).

Although there was no consequence for observing during the Ext component, rates of observing were consistently higher in that component than in the VI component, as can be seen in the middle three panels of Figure 1. The removal of the dependency between the observing response and S+ presentations decreased observing in both components reliably.

Response rates on the food key tended to be more dissociated across the VI and Ext components when the observing contingency was in place, although this effect was slight for Pigeon 35. Food key response rates during the VI component were relatively unaffected by the presence or absence of the observing contingency. Thus, the dissociation between food key response rates across the VI and Ext components was largely due to changes in responding during the Ext component.

Overall, when response-dependent conditioned reinforcers were made response-independent across conditions, both observing rates and food key rates were affected. Rates of observing decreased, and food key response rates were higher in Ext when the observing contingency was not in effect. When the observing contingency was in effect, rates of observing were high in the Ext component and low in the VI component, suggesting competition to some extent between key pecks (food) and treadle presses (schedule-correlated stimuli).

In Experiment 1, the dependency between responding and conditioned reinforcement was removed. In Experiment 2, the response–reinforcer dependency remained intact, but the response–conditioned reinforcer relation was altered by imposing resetting delays between observing responses and conditioned reinforcement.

Experiment 2

The effects of disrupting response–reinforcer temporal contiguity on behavior maintained by conditioned reinforcement were investigated in Experiment 2. This was done by introducing unsignaled, resetting delays (i.e., tandem FR 1 differential-reinforcement-of-other-behavior, or DRO, schedules) between an observing response and the conditioned reinforcer it produced.

Method

Subjects

Two White Carneau pigeons (Pigeons 114 and 117) were maintained at 80% of ad libitum weight via postsession feedings. Free access to water and health grit was available in each pigeon's home cage. Both had an experimental history that included treadle pressing.

Apparatus

The apparatus was the same as in Experiment 1, with one exception: a Plexiglas barrier was placed between the response key and the treadle. The barrier extended from the top of the work panel to the top left edge of the food aperture (on the treadle side of the hopper), and 12.7 cm (5 in.) into the chamber. This arrangement forced the pigeons to maneuver under or around the barrier to change over to the other operandum, thus serving as a further obstacle (in addition to the 3-s COD) to the development of adventitious temporal contiguity among key pecks, treadle presses, and food deliveries. Such adventitious contiguities have been common enough in this type of experimental arrangement to potentially obscure the interpretation of behavioral effects in other studies in our laboratory (e.g., Lieving, 1998; Lieving & Lattal, 2003).

Procedure

The pretraining and observing conditions were as in Experiment 1, with two exceptions: because both pigeons had a history of treadle pressing, treadle-training sessions were not conducted during pretraining, and the S+ duration was 10 s. Once observing was established and maintained reliably, the treadle-press response was extinguished before an observing baseline was reestablished and the delayed-conditioned-reinforcement conditions began. This was done to ensure that the observing contingency alone was responsible for maintaining treadle pressing. Extinction was in effect for at least 10 sessions and until treadle pressing was zero or near zero for three consecutive sessions. For Pigeon 117, treadle pressing did not recover following extinction. Because this pigeon's rates of treadle pressing had been low from the outset, the S+ duration was decreased from 10 s to 5 s to increase the possible number of S+ presentations per session. Training continued in the observing condition with the 5-s S+, and observing recovered. The effects of delays were compared to this baseline, and the 5-s S+ was used for this pigeon throughout the remainder of the experiment. The data from the baseline with 10-s immediate conditioned reinforcement are displayed in the Results section as the initial baseline.

Delayed Conditioned Reinforcement

Following the reestablishment of the observing baseline, unsignaled resetting delays to conditioned reinforcement were arranged. The schedule of conditioned reinforcement in effect on the treadle was a tandem FR 1 DRO x, where x was 0, 0.5, 1.0, 3.0, or 10 s; the DRO constituted an unsignaled resetting delay to conditioned reinforcement. The sequence of conditions, the number of sessions in each condition, and the nominal conditioned-reinforcer duration for each pigeon are summarized in Table 2. Each treadle press during the VI component started the delay (or restarted it, if a delay was already timing), and the conditioned reinforcer was delivered when the delay elapsed, provided that the VI component was still in effect. If an Ext component began during a delay, the scheduled conditioned reinforcer was canceled. Conditioned reinforcement, as in baseline, consisted of a change in key color from white to green for 10 s (5 s for Pigeon 117). A return to baseline was implemented for Pigeon 114 following the final delay condition (10 s).
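The tandem FR 1 DRO x contingency described above can be sketched for a single VI component. The function name is hypothetical, and one simplification is ours: presses during an ongoing S+ presentation are treated like any other press, because the procedure does not say how they were handled.

```python
from typing import List, Sequence


def tandem_fr1_dro(press_times: Sequence[float], delay: float, vi_end: float) -> List[float]:
    """Conditioned-reinforcer (S+) onset times under a tandem FR 1 DRO `delay` s.

    A treadle press starts an unsignaled delay, and any press before the delay
    elapses restarts it. The S+ is delivered once `delay` s pass without a
    press, unless the VI component has ended first (an Ext onset cancels the
    pending S+). With delay == 0 the schedule reduces to FR 1.
    """
    onsets: List[float] = []
    pending = None  # scheduled S+ onset while a delay is timing
    for t in sorted(press_times):
        if t >= vi_end:
            break  # presses during Ext have no programmed consequence
        if pending is not None and t >= pending:
            onsets.append(pending)  # the delay elapsed before this press
        pending = t + delay  # start, or reset, the delay
    if pending is not None and pending <= vi_end:
        onsets.append(pending)
    return onsets
```

With a 3-s delay, presses at 0, 1, 2, and 10 s yield S+ onsets at 5 and 13 s; a press 2 s before the VI component ends yields no S+.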

Table 2. Sequence of baseline and delay conditions for Experiment 2, the number of sessions conducted for each, and the conditioned reinforcer duration for each pigeon.

| Pigeon | Contingency for conditioned reinforcement | Number of sessions | Conditioned reinforcer duration (s) |
|---|---|---|---|
| 114 | FR 1 | 20 | 10 |
| | EXT | 22 | 10 |
| | FR 1 | 22 | 10 |
| | Tandem FR 1 DRO 3 s | 24 | 10 |
| | Tandem FR 1 DRO 1 s | 16 | 10 |
| | Tandem FR 1 DRO 10 s | 16 | 10 |
| | FR 1 | 12 | 10 |
| 117 | FR 1 | 20 | 10 |
| | EXT | 14 | 10 |
| | FR 1 | 26 | 5 |
| | Tandem FR 1 DRO 3 s | 20 | 5 |
| | Tandem FR 1 DRO 1 s | 29 | 5 |
| | Tandem FR 1 DRO 0.5 s | 19 | 5 |

Results and Discussion

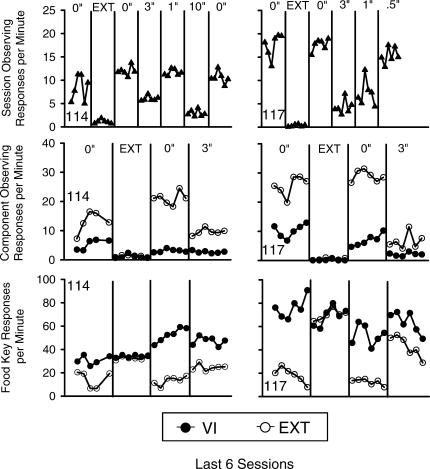

Observing and food-key response rates across the final six sessions of each condition are shown for each pigeon in Figure 2. Overall rates of observing were calculated by dividing the number of observing responses by the adjusted session time, which was the nominal 80-min session minus all food-delivery time and all delay time. Delay time included the infrequent delays that did not end with a conditioned reinforcer (e.g., when the Ext component began during the delay).

Fig 2. Overall observing rates (top two panels), observing rates separated by multiple-schedule component (middle two panels), and food-key response rates (bottom two panels) during the final six sessions of each condition of Experiment 2.

Dissociated response rates are shown only for the first four conditions. The condition headings refer to the DRO value in effect for the tandem FR 1 DRO x schedule of conditioned reinforcement. The heading EXT refers to the condition during which the observing contingency was removed. The filled circles denote response rates during the VI component of the multiple VI Ext schedule, and empty circles denote response rates during the Ext component. The data for dissociated rates of observing (middle) and food-key (bottom) responding were lost for the fifth session in the first condition for Pigeon 114.

Overall observing responses per min are shown in the top two panels of Figure 2, and are shown separated by component (VI or Ext) in the middle two panels. Food key responses per min separated by component are shown in the bottom two panels. Component (i.e., separated) rates were calculated by dividing the number of responses by the amount of session time that component was in effect (minus reinforcement and delay time in the VI component). These separated response rates for both observing and food key responding are shown only for the first four conditions (baseline, extinction, baseline, and 3-s delay). The data for the remaining conditions were lost because the medium on which they were stored was corrupted.
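The adjusted session time used for the Experiment 2 rates can be sketched as below. The function name is hypothetical, and we assume that a delay cut short by an Ext onset is counted only up to that onset, because the text does not say how much of such a delay was subtracted.

```python
from typing import Sequence, Tuple


def adjusted_minutes(food_durations_s: Sequence[float],
                     delay_intervals: Sequence[Tuple[float, float]],
                     nominal_min: float = 80.0) -> float:
    """Nominal session minus all food-delivery time and all delay time, in min.

    delay_intervals holds (start_s, end_s) for every delay, including delays
    canceled by an Ext onset (assumed to end at that onset).
    """
    delay_s = sum(end - start for start, end in delay_intervals)
    return (nominal_min * 60.0 - sum(food_durations_s) - delay_s) / 60.0
```

Dividing the session's observing responses by this value gives the overall observing rate; the VI-component rate is computed analogously from VI time minus the food and delay time that fell within it.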

Treadle pressing was maintained in each condition where the conditioned reinforcement contingency was in effect, and low rates of treadle pressing were obtained when treadle pressing was extinguished. During the second exposure to immediate conditioned reinforcement, rates of observing were less variable for both pigeons, indicating an increase in control by the observing contingency. The subsequent comparisons between immediate and delayed conditioned reinforcement, therefore, were made with respect to the second baseline.

Overall rates of observing varied inversely as a function of delay value. That is, observing tended to decrease as delay value increased. As in Experiment 1, rates of observing were higher in the multiple-schedule Ext component when the observing contingency was in effect (middle panels of Figure 2), and the imposition of a 3-s delay to conditioned reinforcement decreased observing in the Ext component more than in the VI component. Also as in Experiment 1, food key response rates were undifferentiated when the observing contingency was not in effect, and food key rates in the Ext component were decreased when the observing contingency was in place. When conditioned reinforcement was delayed by 3 s, food key rates in Ext increased for both pigeons.

Unsignaled resetting delays to conditioned reinforcement decreased observing for both pigeons in a manner similar to that reported previously with delays between responding and primary reinforcement (e.g., Sizemore & Lattal, 1978; B. Williams, 1976). In addition, removing the dependency between observing and conditioned reinforcement (extinction) decreased treadle pressing to near zero for both pigeons.

Experiment 3

In Experiment 3, the relative effects of signaled and unsignaled delays to conditioned reinforcement on observing were compared. Previous work has shown that the addition of signals to delays between a response and reinforcer attenuates the rate-decreasing effects of those delays (e.g., Lattal, 1984; Richards, 1981; Schaal & Branch, 1988). In Experiment 3, we attempted to extend this finding to response–reinforcer relations involving behavior maintained by conditioned reinforcement.

Method

Subjects

Four White Carneau pigeons with experimental histories were used (Pigeons 276, 3504, 1794, and 516). Each was maintained at 80% of ad libitum weight via postsession feedings. Free access to water and health grit was available in each pigeon's home cage.

Apparatus

The apparatus was as in Experiment 1, with the following exception: the chamber did not contain the treadle during any of the conditions. The observing response consisted of a key peck on the left key, which required the same force as the food key to operate and was transilluminated white during observing conditions.

Procedure

The pretraining condition was as in Experiments 1 and 2. The sequence of conditions for each pigeon and number of sessions conducted within each condition are summarized in Table 3.

Table 3. Sequence of baseline and delay conditions for Experiment 3, and the number of sessions conducted for each.

| Pigeon | Contingency for conditioned reinforcement | Number of sessions |
|---|---|---|
| 276 | FR 1 | 22 |
| | Tandem FR 1 DRO 5 s (unsignaled) | 20 |
| | FR 1 | 40 |
| | Tandem FR 1 DRO 5 s (signaled) | 20 |
| | FR 1 | 30 |
| 3504 | FR 1 | 23 |
| | Tandem FR 1 DRO 5 s (unsignaled) | 20 |
| | FR 1 | 45 |
| | Tandem FR 1 DRO 5 s (signaled) | 20 |
| | FR 1 | 29 |
| 1794 | FR 1 | 29 |
| | Tandem FR 1 DRO 5 s (unsignaled) | 20 |
| | FR 1 | 30 |
| | Tandem FR 1 DRO 5 s (signaled) | 20 |
| | FR 1 | 31 |
| 516 | FR 1 | 22 |
| | Tandem FR 1 DRO 5 s (unsignaled) | 20 |
| | FR 1 | 38 |
| | Tandem FR 1 DRO 5 s (signaled) | 20 |
| | FR 1 | 28 |

Observing Condition

The observing condition differed from that in Experiments 1 and 2 only in that each response on the left key (observing key) produced 30 s of the multiple-schedule stimuli, regardless of whether VI or Ext was in effect on the mixed VI Ext food schedule. Thus, both the S+ and the S− could be produced. Both stimuli were made available in order to maximize contact between the observing response and the schedule-correlated stimuli. If the component changed from VI to Ext, or vice versa, during the 30-s conditioned-reinforcer presentation, the color of the right key (food key) changed accordingly.

Signaled Versus Unsignaled Delays to Conditioned Reinforcement

In both delay conditions, 5-s resetting delays were programmed (i.e., conditioned reinforcement was produced according to a tandem FR 1 DRO 5-s schedule). During the signaled delay condition, the observing key was dark for the duration of the delay; during the unsignaled delay condition, the observing key remained white during the delay. In both conditions, further pecks on the observing key during the delay reset the DRO schedule. Each condition was in effect for a minimum of 15 sessions. An immediate conditioned-reinforcement condition was imposed between and after the delayed conditioned-reinforcement conditions.
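The only difference between the two delay conditions is what happens to the observing key while the delay times. The hypothetical event log below makes that explicit. Two details are our assumptions: the signaled key is relit at the moment the 30-s schedule-correlated stimulus begins, and pecks during the 30-s stimulus are not modeled separately.

```python
from typing import List, Sequence, Tuple


def observing_events(peck_times: Sequence[float], delay: float = 5.0,
                     signaled: bool = True) -> List[Tuple[float, str]]:
    """Event log for a tandem FR 1 DRO `delay` on the observing key.

    In both conditions a peck during a delay restarts it. With signaled=True
    the observing key goes dark when a delay starts and is relit when the
    stimulus begins (assumption); with signaled=False it stays white.
    """
    events: List[Tuple[float, str]] = []
    pending = None  # time at which the current delay will elapse

    def deliver(at: float) -> None:
        events.append((at, "stimulus on"))
        if signaled:
            events.append((at, "observing key white"))

    for t in sorted(peck_times):
        if pending is not None and t >= pending:
            deliver(pending)  # delay elapsed before this peck
            pending = None
        if pending is None:
            events.append((t, "delay start"))
            if signaled:
                events.append((t, "observing key dark"))
        else:
            events.append((t, "delay reset"))
        pending = t + delay
    if pending is not None:
        deliver(pending)
    return events
```

Because the reset rule is identical in both modes, any difference in observing between conditions in this sketch can come only from the key-light events.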

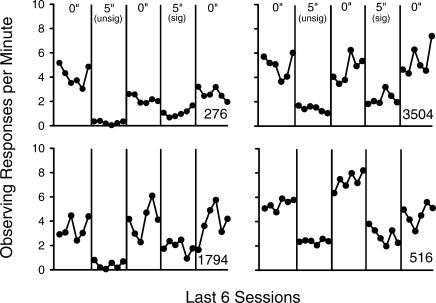

Results and Discussion

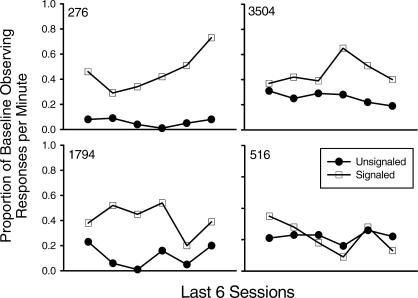

Response rates on the observing key for each pigeon across the final six sessions of each condition are shown in Figure 3. Rates of observing were higher with immediate conditioned reinforcement than with delayed conditioned reinforcement. Because baseline rates of observing under immediate conditioned reinforcement varied from baseline to baseline, the data from the delay conditions are presented in Figure 4 as proportions of their immediately preceding baselines, to facilitate a comparison between signaled and unsignaled delays. Figure 4 shows that, for 3 of the 4 pigeons, 5-s signaled delays to conditioned reinforcement tended to reduce rates of observing less than their unsignaled counterparts did.

Fig 3. Observing responses per min across the final six sessions of each condition of Experiment 3.

Fig 4. Observing responses per min from Figure 3, expressed as a proportion of the immediately preceding baseline (i.e., no delay).
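The normalization used for Figure 4 can be sketched as follows. The text does not state whether each delay session was divided by the baseline mean or whether condition means were compared; this hypothetical function assumes the former, using the last six sessions of each condition as plotted in Figure 3.

```python
from statistics import mean
from typing import List, Sequence


def proportions_of_baseline(delay_rates: Sequence[float],
                            baseline_rates: Sequence[float],
                            n_last: int = 6) -> List[float]:
    """Each of the last n_last delay-condition rates divided by the mean of the
    last n_last sessions of the immediately preceding baseline."""
    base = mean(baseline_rates[-n_last:])
    if base == 0:
        raise ValueError("baseline rate is zero; proportion undefined")
    return [r / base for r in delay_rates[-n_last:]]
```

A value of 1.0 would indicate no change from baseline, so a signaled delay that reduces observing less than an unsignaled delay yields proportions closer to 1.0.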

Signaled delays typically maintained higher rates of observing than unsignaled delays. This result extends previous findings obtained with signaled and unsignaled delays to primary reinforcement. Schaal and Branch (1988, 1990) and others (e.g., Reilly & Lattal, 2004; B. Williams & Dunn, 1994) have suggested that signals maintain responding through delay periods because of a conditioned-reinforcing function acquired through their close temporal relation with primary reinforcement, particularly with nonresetting delays. The results of Experiment 3, therefore, suggest a possible effect of second-order conditioned reinforcement: a stimulus (the delay signal) acquires a reinforcing function because it reliably precedes another stimulus (S+) that in turn derives its reinforcing function from a primary reinforcer (food). Analogous results have been found in respondent conditioning experiments (e.g., Holland & Rescorla, 1975; Yin, Barnet, & Miller, 1994), which have demonstrated relatively weak but reliable eliciting properties of a previously neutral stimulus after it has been paired with an effective conditioned stimulus (CS) that in turn derives its eliciting properties from its contingent association with an unconditioned stimulus (US).

General Discussion

The present results complement previous experiments that investigated the effects of altering response–reinforcer relations involving the delivery of unconditioned, or primary, reinforcers. The current experiments extend these findings by showing similar effects on behavior maintained by conditioned reinforcement arranged through an observing procedure. The overall pattern of behavioral effects resembled that found with primary reinforcement in previous studies, despite the relatively low rates of behavior maintained by conditioned reinforcement in the current study. These relatively low rates are consistent with previous work showing that conditioned reinforcement contingencies maintain lower rates of responding than their primary reinforcement counterparts (e.g., Zimmerman, 1969). The results of Experiment 1 complement the finding of Marr and Zeiler (1974): when the contingency between a response and the conditioned reinforcement that maintains that response is degraded, the rate of that response decreases. In the study by Marr and Zeiler, some of the stimulus events that functioned as conditioned reinforcers were made response independent; in the present study, all of those events were made response independent. The results from Experiments 2 and 3 support the general finding that response rates decrease when conditioned reinforcement is delayed (Royalty et al., 1987). In Experiment 3, unsignaled delays produced proportionally greater reductions in response rate than signaled delays, a result that also is consistent with previous examinations of the behavioral effects of delayed primary reinforcement (e.g., Richards, 1981; Schaal & Branch, 1988). Overall, then, the results of the current investigation are consistent with previous research and reaffirm the utility of the observing procedure for examining issues of conditioned reinforcement, as a complement to procedures that use chained schedules, concurrent chains, or second-order schedules.
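
Experiment 1 removed the observing contingency by presenting the schedule-correlated stimuli on a variable-time schedule yoked to the baseline presentation rate. One way to build such a schedule is sketched below in Python. It assumes, without confirmation from the text, that intervals were generated with the Fleshler and Hoffman (1962) progression and that yoking means matching the mean inter-presentation interval to the baseline rate; `yoked_vt_schedule` and its parameters are hypothetical.

```python
import math
import random
from typing import List, Optional


def xlogx(x: float) -> float:
    # Convention used in the progression: 0 * ln(0) is taken as 0.
    return 0.0 if x == 0 else x * math.log(x)


def fleshler_hoffman(mean_interval: float, n: int) -> List[float]:
    """n intervals (ascending) whose mean is mean_interval:
    t_k = T[1 + ln N + (N - k) ln(N - k) - (N - k + 1) ln(N - k + 1)]."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return [
        mean_interval * (1 + math.log(n) + xlogx(n - k) - xlogx(n - k + 1))
        for k in range(1, n + 1)
    ]


def yoked_vt_schedule(baseline_presentations: int, baseline_minutes: float,
                      n: int = 20, seed: Optional[int] = None) -> List[float]:
    """Hypothetical yoking rule: response-independent (VT) intervals whose mean
    equals the mean time between stimulus presentations in baseline."""
    if baseline_presentations <= 0:
        raise ValueError("baseline must contain at least one presentation")
    mean_s = baseline_minutes * 60 / baseline_presentations
    intervals = fleshler_hoffman(mean_s, n)
    random.Random(seed).shuffle(intervals)  # randomize interval order
    return intervals
```

Because the intervals are fixed in advance rather than triggered by responses, stimulus presentations continue at roughly the baseline rate while the observing response no longer has any programmed consequence.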

The results also appear to be consistent, albeit indirectly, with previous studies demonstrating that the production of S− might function as a conditioned punisher (e.g., Mulvaney, Dinsmoor, & Jwaideh, 1974; Purdy & Peel, 1988). Although the limited number of subjects and the lack of within-subject comparisons in the present study preclude definitive generalizations, the rates of observing for immediate conditioned reinforcement in Experiments 1 and 2 (in which only the S+ could be produced) were appreciably higher than in Experiment 3 (in which the observing response could produce both the S+ and the S−). Observing rates during the VI schedule components in Experiments 1 and 2 were comparable with overall rates of observing in Experiment 3. Observing rates during the Ext components, however, typically were about 3 times higher than in the VI components. This result suggests that the production of S− may have functioned as a punisher for the observing response in Experiment 3. This conclusion is strengthened by the fact that the observing response was a treadle press in Experiments 1 and 2 but a key peck in Experiment 3. Key pecks can be expected to occur at higher rates than treadle presses when maintained by primary reinforcement (e.g., Green & Holt, 2003); the higher rates of treadle pressing obtained in Experiments 1 and 2, therefore, are consistent with the suggestion that the S− had acquired aversive properties. Similarly, the procedure used in Experiments 1 and 2 (S+ only) may have functioned not as a simple FR 1 schedule that was available intermittently, but more as a general intermittent schedule of observing, because of the intervening, unsignaled Ext components during which observing had no consequences. This possibility is supported by the fact that, when the observing contingency was removed, the observing response extinguished in both the VI and Ext components.
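
The contrast between the S+-only procedure of Experiments 1 and 2 and the S+/S− procedure of Experiment 3 can be stated as a small lookup, sketched here in Python. It is a simplification under stated assumptions: each response is classified only by the component in effect (VI or Ext), responses made while a stimulus is already on are ignored, and the function names are hypothetical. It illustrates why, from the responder's side, the S+-only arrangement is intermittent: during Ext components, observing produces nothing.

```python
from typing import Optional, Sequence


def observing_consequence(component: str, procedure: str) -> Optional[str]:
    """Stimulus produced by an observing response (simplified).

    component: "VI" (food available) or "Ext" (extinction).
    procedure: "S+ only" (Experiments 1 and 2) or "S+ and S-" (Experiment 3).
    """
    if component not in ("VI", "Ext"):
        raise ValueError(f"unknown component: {component}")
    if procedure == "S+ only":
        # During Ext, an observing response has no programmed consequence.
        return "S+" if component == "VI" else None
    if procedure == "S+ and S-":
        return "S+" if component == "VI" else "S-"
    raise ValueError(f"unknown procedure: {procedure}")


def fraction_producing_stimulus(components: Sequence[str], procedure: str) -> float:
    """Fraction of observing responses that produce any stimulus, given the
    component in effect at the time of each response."""
    if not components:
        raise ValueError("no responses given")
    produced = sum(observing_consequence(c, procedure) is not None
                   for c in components)
    return produced / len(components)
```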

The present experiments demonstrate the potential utility of the observing procedure for examining conditioned reinforcement effects in a manner complementary to other procedures such as single and concurrent-chained schedules. Although the general method has existed for more than half a century (Wyckoff, 1952), observing responses have received relatively little attention in contemporary experimental analyses of conditioned reinforcement effects, apart from a handful of recent studies (e.g., Gaynor & Shull, 2002; Shahan, 2002; Shahan et al., 2003; Shahan & Podlesnik, 2005; Shahan, Podlesnik, & Jimenez-Gomez, 2006) and the work of Dinsmoor and colleagues (e.g., Bowe & Dinsmoor, 1983; Dinsmoor, 1985; Dinsmoor, Mueller, Martin, & Bowe, 1982). When it is useful to separate the effects of primary and conditioned reinforcement, the observing procedure has proven to be effective.

The observing procedure may be a useful method for examining phenomena such as the potential second-order conditioned reinforcement effects shown in Experiment 3. Although the effect was slight in terms of overall response rates, it was reliable. The potential ubiquity of these behavioral effects in the maintenance of behavior in natural environments, with both humans and nonhumans, provides a strong rationale for continuing to delineate experimentally the variables that control and modify conditioned reinforcement effects in terms of the response–conditioned-reinforcer relation. Methods such as the observing procedure should play a pivotal role in this task because, when a distinct operant is required to produce the conditioned reinforcer, conditioned reinforcement as a process can be identified with relative certainty.

Stimuli that signal the availability of reinforcement are ubiquitous both in and out of the laboratory. Under some circumstances, these stimuli may acquire reinforcing properties of their own and, as such, can control and maintain behavior through their rate-increasing properties. The current experiments demonstrate that, as is the case with primary reinforcement effects (A. Williams & Lattal, 1999), the locus of this behavioral maintenance lies in the dependency, or response–reinforcer relation, between the response and the conditioned reinforcer alone rather than in some other behavioral process. Some of the implications of this outcome are straightforward and intuitive: the prediction and control of behavior maintained by conditioned reinforcement can be achieved by altering the relation between the response that produces a conditioned reinforcer and that stimulus, without altering the dependency that the conditioned reinforcer signals (i.e., the response–primary-reinforcer relation). Response–conditioned-reinforcer relations also may be sufficient for the acquisition of new behavior (see, for example, Snycerski, Laraway, & Poling, 2005), even when the dependency involves delayed conditioned reinforcement. Further elucidation of these response–conditioned-reinforcer relations, and of how those relations are manifested in complex laboratory and nonlaboratory settings, will lead to more thorough descriptions of behavioral control by conditioned reinforcers and thus to more complete analyses of operant behavior.

Acknowledgments

Mark P. Reilly is now at the Department of Psychology at Central Michigan University. Gregory A. Lieving is now at the Behavioral Psychology Department at the Kennedy Krieger Institute. Address correspondence to the first author, Behavioral Psychology Department, The Kennedy Krieger Institute, 707 N Broadway, Baltimore, MD 21205.

References

- Bowe C.A, Dinsmoor J.A. Spatial and temporal relations in conditioned reinforcement and observing behavior. Journal of the Experimental Analysis of Behavior. 1983;39:227–240. doi: 10.1901/jeab.1983.39-227.

- Branch M.N. The distribution of observing responses during two VI schedules. Psychonomic Science. 1970;20:5–6.

- Branch M.N. Observing responses in pigeons: Effects of schedule component duration and schedule value. Journal of the Experimental Analysis of Behavior. 1973;20:417–428. doi: 10.1901/jeab.1973.20-417.

- Dinsmoor J.A. The role of observing and attention in establishing stimulus control. Journal of the Experimental Analysis of Behavior. 1985;43:365–381. doi: 10.1901/jeab.1985.43-365.

- Dinsmoor J.A, Mueller K.L, Martin L.T, Bowe C.A. The acquisition of observing. Journal of the Experimental Analysis of Behavior. 1982;38:249–263. doi: 10.1901/jeab.1982.38-249.

- Fleshler M, Hoffman H.S. A progression for generating variable-interval schedules. Journal of the Experimental Analysis of Behavior. 1962;5:529–530. doi: 10.1901/jeab.1962.5-529.

- Gaynor S.T, Shull R.L. The generality of selective observing. Journal of the Experimental Analysis of Behavior. 2002;77:171–187. doi: 10.1901/jeab.2002.77-171.

- Gleeson S, Lattal K.A. Response-reinforcer relations and the maintenance of behavior. Journal of the Experimental Analysis of Behavior. 1987;48:383–393. doi: 10.1901/jeab.1987.48-383.

- Green L, Holt D.D. Economic and biological influences on key pecking and treadle pressing in pigeons. Journal of the Experimental Analysis of Behavior. 2003;80:43–58. doi: 10.1901/jeab.2003.80-43.

- Holland P.C, Rescorla R.A. Second-order conditioning with food unconditioned stimulus. Journal of Comparative and Physiological Psychology. 1975;88:459–467. doi: 10.1037/h0076219.

- Kelleher R.T, Riddle W.C, Cook L. Observing responses in pigeons. Journal of the Experimental Analysis of Behavior. 1962;5:3–13. doi: 10.1901/jeab.1962.5-3.

- Lattal K.A. Signal functions in delayed reinforcement. Journal of the Experimental Analysis of Behavior. 1984;42:239–253. doi: 10.1901/jeab.1984.42-239.

- Lattal K.A, Maxey G.C. Some effects of response-independent reinforcers in multiple schedules. Journal of the Experimental Analysis of Behavior. 1971;16:225–231. doi: 10.1901/jeab.1971.16-225.

- Lieving G.A. Acquisition of observing responses with delayed conditioned reinforcement. Unpublished master's thesis, West Virginia University; 1998. On-line abstract available: http://etd.wvu.edu/templates/showETD.cfm?recnum=307.

- Lieving G.A, Lattal K.A. Recency, repeatability, and reinforcer retrenchment: An experimental analysis of resurgence. Journal of the Experimental Analysis of Behavior. 2003;80:217–233. doi: 10.1901/jeab.2003.80-217.

- Marr M.J, Zeiler M.D. Schedules of response-independent conditioned reinforcement. Journal of the Experimental Analysis of Behavior. 1974;21:433–444. doi: 10.1901/jeab.1974.21-433.

- Mulvaney D.E, Dinsmoor J.A, Jwaideh A.R. Punishment of observing by the negative discriminative stimulus. Journal of the Experimental Analysis of Behavior. 1974;21:37–44. doi: 10.1901/jeab.1974.21-37.

- Neuringer A.J. Superstitious key pecking after three peck-produced reinforcements. Journal of the Experimental Analysis of Behavior. 1970;13:127–134. doi: 10.1901/jeab.1970.13-127.

- Purdy J.E, Peel J.L. Observing response in goldfish (Carassius auratus). Journal of Comparative Psychology. 1988;102:160–168.

- Reilly M.P, Lattal K.A. Within-session delay-of-reinforcement gradients. Journal of the Experimental Analysis of Behavior. 2004;82:21–35. doi: 10.1901/jeab.2004.82-21.

- Rescorla R.A, Skucy J.C. Effect of response-independent reinforcers during extinction. Journal of Comparative and Physiological Psychology. 1969;67:381–389.

- Richards R.W. A comparison of signaled and unsignaled delay of reinforcement. Journal of the Experimental Analysis of Behavior. 1981;35:145–152. doi: 10.1901/jeab.1981.35-145.

- Royalty P, Williams B.A, Fantino E. Effects of delayed conditioned reinforcement in chain schedules. Journal of the Experimental Analysis of Behavior. 1987;47:41–56. doi: 10.1901/jeab.1987.47-41.

- Schaal D.W, Branch M.N. Responding of pigeons under variable-interval schedules of unsignaled, briefly signaled, and completely signaled delays to reinforcement. Journal of the Experimental Analysis of Behavior. 1988;50:33–54. doi: 10.1901/jeab.1988.50-33.

- Schaal D.W, Branch M.N. Responding of pigeons under variable-interval schedules of signaled delayed reinforcement: Effects of delay-signal duration. Journal of the Experimental Analysis of Behavior. 1990;53:103–121. doi: 10.1901/jeab.1990.53-103.

- Shahan T.A. Observing behavior: Effects of rate and magnitude of primary reinforcement. Journal of the Experimental Analysis of Behavior. 2002;78:161–178. doi: 10.1901/jeab.2002.78-161.

- Shahan T.A, Magee A, Dobberstein A. The resistance to change of observing. Journal of the Experimental Analysis of Behavior. 2003;80:273–293. doi: 10.1901/jeab.2003.80-273.

- Shahan T.A, Podlesnik C.A. Rate of conditioned reinforcement affects observing rate but not resistance to change. Journal of the Experimental Analysis of Behavior. 2005;84:1–17. doi: 10.1901/jeab.2005.83-04.

- Shahan T.A, Podlesnik C.A, Jimenez-Gomez C. Matching and conditioned reinforcement rate. Journal of the Experimental Analysis of Behavior. 2006;85:167–180. doi: 10.1901/jeab.2006.34-05.

- Sizemore O.J, Lattal K.A. Unsignalled delay of reinforcement in variable-interval schedules. Journal of the Experimental Analysis of Behavior. 1978;30:169–175. doi: 10.1901/jeab.1978.30-169.

- Snycerski S, Laraway S, Poling A. Response acquisition with immediate and delayed conditioned reinforcement. Behavioural Processes. 2005;68:1–11. doi: 10.1016/j.beproc.2004.08.004.

- Williams A.M, Lattal K.A. The role of the response-reinforcer relation in delay-of-reinforcer effects. Journal of the Experimental Analysis of Behavior. 1999;71:187–194. doi: 10.1901/jeab.1999.71-187.

- Williams B.A. The effects of unsignalled delayed reinforcement. Journal of the Experimental Analysis of Behavior. 1976;26:441–449. doi: 10.1901/jeab.1976.26-441.

- Williams B.A. Conditioned reinforcement: Experimental and theoretical issues. The Behavior Analyst. 1994;17:261–285. doi: 10.1007/BF03392675.

- Williams B.A, Dunn R. Preference for conditioned reinforcement. Journal of the Experimental Analysis of Behavior. 1991;55:37–46. doi: 10.1901/jeab.1991.55-37.

- Williams B.A, Dunn R. Context specificity of conditioned-reinforcement effects on discrimination acquisition. Journal of the Experimental Analysis of Behavior. 1994;62:157–167. doi: 10.1901/jeab.1994.62-157.

- Wyckoff L.B. The role of observing responses in discrimination learning. Psychological Review. 1952;59:431–442. doi: 10.1037/h0053932.

- Yin H, Barnet R.C, Miller R.R. Second-order conditioning and Pavlovian conditioned inhibition: Operational similarities and differences. Journal of Experimental Psychology: Animal Behavior Processes. 1994;20:419–428.

- Zeiler M.D. Fixed and variable schedules of response-independent reinforcement. Journal of the Experimental Analysis of Behavior. 1968;11:405–414. doi: 10.1901/jeab.1968.11-405.

- Zimmerman J. Meanwhile . . . back at the key: Maintenance of behavior by conditioned reinforcement and response-independent primary reinforcement. In: Hendry D.P, editor. Conditioned reinforcement. Homewood, IL: Dorsey Press; 1969. pp. 91–124.