Abstract

Four experiments examined the effects of delays to reinforcement on key-peck sequences of pigeons maintained under multiple schedules of contingencies that produced variable or repetitive behavior. In Experiments 1, 2, and 4, in the repeat component only the sequence right-right-left-left earned food, and in the vary component four-response sequences different from the previous 10 earned food. Experiments 1 and 2 examined the effects of nonresetting and resetting delays to reinforcement, respectively. In Experiment 3, in the repeat component sequences had to be the same as one of the previous three, whereas in the vary component sequences had to be different from each of the previous three to earn food. Experiment 4 compared postreinforcer delays to prereinforcement delays. With immediate reinforcement, sequences occurred at a similar rate in the two components, but were less variable in the repeat component. Delays to reinforcement decreased the rate of sequences similarly in both components, but affected variability differently. Variability increased in the repeat component, but was unaffected in the vary component. These effects occurred regardless of the manner in which the delay to reinforcement was programmed or the contingency used to generate repetitive behavior. Furthermore, the effects were unique to prereinforcement delays.

Keywords: Variability, repetition, stereotypy, operant, resistance to change, multiple schedule, key peck, pigeon

Variability appears to be an operant dimension of behavior, much like the force, location, and rate of responses, in that it can be influenced by consequences (see Neuringer, 2002, 2004, for reviews). For example, Page and Neuringer (1985) examined the variability of response sequences of pigeons. In one condition of Experiment 5, sequences of eight responses distributed in any manner across two keys produced food if the present sequence differed from the previous 50 sequences (Lag 50). In another, yoked, condition, a sequence produced food if on the corresponding trial during the Lag 50 condition food had been earned. Thus, in the Lag 50 condition, sequences had to differ from previous ones to earn food, but in the yoked condition, sequences produced food regardless of their relation to previous sequences. The pigeons produced about 75% of the total number of different possible sequences (256) in the Lag 50 condition, but only about 20% in the yoked condition. An increase in variability when reinforcers depend on variable behavior has been demonstrated in a number of species in addition to pigeons, including dolphins, rats, and human children and adults (e.g., Goetz & Baer, 1973; Pryor, Haag, & O'Reilly, 1969; Stokes, Mechner, & Balsam, 1999; van Hest, van Haaren, & van De Poll, 1989). Furthermore, a variety of different procedures have been used to produce variability with several different response topographies (e.g., Blough, 1966; Goetz & Baer, 1973; Machado, 1989; Morgan & Neuringer, 1990; Pryor et al., 1969).

Disruption by some means typically affects reinforced repetition more than reinforced variability, as measured by the change from the baseline level of variability. For example, greater persistence of response sequence variability over repetition has been reported with disruptors that are imposed without changing the maintaining schedule of reinforcement (see Harper & McLean, 1992), like prefeeding and within-session response-independent food (Doughty & Lattal, 2001) and ethanol administration (Cohen, Neuringer, & Rhodes, 1990). Greater disruption of repetition also has been reported with disruptors that change some aspect of the maintaining schedule of reinforcement, like extinction (Neuringer, Kornell, & Olufs, 2001) and increasing minimum interresponse times between responses in a sequence (Neuringer, 1991). Together, these results suggest that selective disruption of reinforced repetition is a common outcome.

To further assess the generality of differential disruption of repetition and variability, we examined the effects of delays to reinforcement on reinforced variability and repetition. Unlike previous manipulations used to disrupt variable and repetitive behavior, delays to reinforcement disrupt the temporal contiguity between the sequence and the reinforcer, thus providing a novel method of disruption by changing the schedule contingencies. In prior research with single responses, rather than sequences of responses, delays to reinforcement have been widely shown to reduce the rate of key pecking (e.g., Sizemore & Lattal, 1977, 1978; Williams, 1976; see Schneider, 1990, for review). What effects would delays to reinforcement have on response sequence variability and repetition? On the one hand, if results are similar to those obtained with other disruptors, then sequence repetition would be more disrupted than variability. On the other hand, if repetition and variability per se are the functional operants, then delays to reinforcement may disrupt response sequence variability as well as repetition.

In four experiments we examined the effects of delaying food delivery after the completion of a four-response sequence. Pigeons responded under a multiple schedule that required variable key-peck sequences to earn food in one component and repetitive key-peck sequences in the other. Across experiments, we examined the effects of different procedures for delaying reinforcers and different contingencies for generating variable and repetitive sequences. The goal was to determine the effects of delayed reinforcement on sequence variability and rate when reinforcers are dependent on variability or repetition.

Experiment 1

In this experiment, we examined the effects of signaled nonresetting delays to reinforcement (0–30 s) on sequence variability and rate under a multiple schedule of food delivery for variable and repetitive sequences. With signaled delays to reinforcement, the response that produces the reinforcer also produces a stimulus change that is present until reinforcer delivery (see Lattal, 1987). In the present case, the last peck in a sequence meeting the schedule requirement turned off the key lights for the duration of the delay prior to reinforcer delivery. Any pecks occurring during the delay had no programmed consequences (i.e., the delay was nonresetting; see Lattal, 1987). In the component requiring variability (hereafter the vary component), sequences that differed from the previous 10 sequences produced food (lag 10). In the component requiring repetition (hereafter the repeat component), only a sequence of pecks on the right, right, left, and then left key (RRLL) produced food.

Method

Subjects

Four adult White Carneau pigeons, weighing between 464 and 609 g at their free-feeding weight, served as subjects. Pigeons were maintained at 80% (± 15 g) of free-feeding weights through postsession feeding as necessary. All pigeons were experimentally naive except P154, which had previous experience with a variety of unrelated procedures. When not in experimental sessions, the pigeons were individually housed in a temperature-controlled colony under a 12:12 hr light/dark cycle and had free access to water and digestive grit.

Apparatus

Four similar BRS/LVE sound-attenuating chambers, constructed of painted metal with aluminum front panels, were used. The chambers were 35 cm across the front panel, 30.7 cm from the front panel to the back wall, and 35.8 cm high. Each panel had three translucent plastic response keys that were 2.6 cm in diameter and 24.6 cm from the floor. The left and right keys could be lit from behind with either red or white light. The center key was dark and pecks to this key had no programmed consequences. In three of the chambers the center-to-center distance between keys was 8.2 cm; in the fourth chamber (P154) the distance was 6.4 cm. A lamp (28 V 1.1 W) was mounted 4.4 cm above the center key and served as the houselight. A rectangular aperture 9 cm below the center key provided access to a solenoid-operated hopper filled with pelleted pigeon diet. During hopper presentations, the hopper aperture was lit and the key and houselights extinguished. White noise and chamber ventilation fans masked extraneous noise. Contingencies were programmed and data were collected by microcomputers using Med Associates® interfacing and software located in an adjacent room.

Procedure

Experimental sessions were conducted daily at approximately the same time. Key pecks were initially autoshaped (Brown & Jenkins, 1968) to each of the key color and location combinations (red left, red right, white left, white right) used in the experiment.

Multiple Schedule

The final procedure was a multiple vary repeat schedule. Each component had an equal probability of starting the session, after which the components alternated following every fifth food delivery. There was a 10-s blackout between components during which all chamber lights were off and pecks had no programmed consequences. Sessions ended after 40 food deliveries, 200 trials, or 60 min, whichever occurred first. A four-peck sequence was required in both components, with a resetting 0.5-s interresponse interval imposed between each peck. The keys were dark and the houselight was lit during the interresponse interval. During both components, the center key remained dark and pecks to this key had no programmed consequences.
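The component alternation and session-termination rules described above can be sketched in Python. This is an illustrative sketch only, not the Med Associates program used in the study; the function and constant names are hypothetical, and blackouts and interresponse intervals are not modeled.

```python
# Illustrative sketch of the multiple-schedule bookkeeping (names are hypothetical).
FOODS_PER_COMPONENT = 5   # components alternate after every fifth food delivery
MAX_FOODS = 40            # session ends after 40 food deliveries ...
MAX_TRIALS = 200          # ... or 200 trials ...
MAX_MINUTES = 60          # ... or 60 min, whichever occurs first


def current_component(first_component, foods_delivered):
    """Return the component in effect after `foods_delivered` food deliveries."""
    other = "repeat" if first_component == "vary" else "vary"
    block = foods_delivered // FOODS_PER_COMPONENT
    return first_component if block % 2 == 0 else other


def session_over(foods_delivered, trials_completed, elapsed_min):
    """Return True once any of the three termination criteria is met."""
    return (foods_delivered >= MAX_FOODS
            or trials_completed >= MAX_TRIALS
            or elapsed_min >= MAX_MINUTES)
```

Under these rules, a session that ends at the 40-food limit contains 20 food deliveries in each component, whichever component starts the session.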

In the vary component, the side keys were lit red. Sequences differing from the previous 10 sequences in the vary component resulted in 2-s access to food (lag 10). Sequences that were the same as one of the previous 10 produced a flashing houselight for 2 s. At the beginning of each session, the 10 last sequences from the vary component in the previous session were used for comparison.

In the repeat component, the side keys were lit white. The sequence RRLL produced 2-s access to food. All other sequences produced a flashing houselight for 2 s. This procedure was in effect for at least 30 sessions, after which delays to reinforcement were introduced when behavior across the last six sessions was judged stable by visual inspection.
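The vary (lag 10) and repeat (RRLL) contingencies can be sketched as follows. This is a minimal illustration under stated assumptions, not the program used in the study: sequences are coded as four-character strings such as "RRLL", the function names are hypothetical, and every completed sequence, whether or not it produced food, is assumed to enter the comparison window.

```python
from collections import deque

LAG = 10          # number of previous sequences compared in the vary component
TARGET = "RRLL"   # the only sequence that produced food in the repeat component


def repeat_earns_food(sequence):
    """Repeat component: only the target sequence produces food."""
    return sequence == TARGET


def vary_outcomes(sequences, carried_over=()):
    """Vary component: return True (food) or False (flashing houselight) per trial.

    `carried_over` holds the last sequences from the previous session's vary
    component, which were used for comparison at the start of a session.
    """
    recent = deque(carried_over, maxlen=LAG)  # keeps only the last LAG sequences
    outcomes = []
    for seq in sequences:
        outcomes.append(seq not in recent)    # must differ from all of them
        recent.append(seq)                    # assumption: all sequences enter the window
    return outcomes
```

For example, `vary_outcomes(["RRLL", "RRLL", "LLLL"])` marks the second trial as unreinforced because it repeats the first sequence.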

Pretraining

A pretraining procedure similar to that used previously for repetitive sequences (e.g., Cohen et al., 1990) was used. Initially, only the repeat component was presented. In the first phase of training, the sequence RR produced food. Next, RRL was required for food, then RRLL. The houselight flashed and the trial terminated if a response other than what was required occurred at any point in the sequence during these initial phases. The requirement was changed to the next phase when at least 35% of trials resulted in food in a session. Repeat pretraining was conducted for 12 to 18 sessions across subjects.

After repeat pretraining was complete, the vary component was also presented during sessions. Components alternated as described previously. In the first session with the vary component, the first sequence produced food. The second sequence had to differ from the first to produce food, the third sequence had to differ from the first two to produce food, and so on, until a lag 5 was reached (i.e., sequences had to differ from the previous five to produce food). For each subsequent session, the last five sequences of the vary component from the previous session were used for comparison. The lag 5 was in effect for 40 sessions, after which the lag was changed to 10 in an effort to make the percentage of trials ending in food more similar for the vary and repeat components.

Delays to Reinforcement

Table 1 lists the order of conditions and the number of sessions for each pigeon. During conditions with delayed reinforcement, when a sequence met the contingency in effect (either vary or repeat, depending on the component), food delivery was delayed after the last response of the sequence. Incorrect sequences resulted in the houselight flashing for 2 s, as described previously. During the delay, the keys were dark and the houselight was lit. Pecks to dark keys during the delay had no programmed consequences (i.e., the delay was nonresetting). Delays of 5, 15, and 30 s were implemented, with a return to immediate reinforcement between each. The order of delays was counterbalanced across subjects. After all conditions with delayed reinforcement were completed, the 15-s delay was repeated. Conditions were in effect for a minimum of 15 sessions and until dependent measures for each component (described below) were stable as judged by visual inspection (minimal variability and trend) across the last six sessions. In three cases conditions with delays to reinforcement were discontinued prior to stability because responding practically ceased; data from these conditions were not included in the analyses.

Table 1. Programmed delay to reinforcement, number of sessions in each condition, and the mean (SD) rate of key pecking per min during delays to reinforcement for the vary and repeat components for each pigeon in Experiment 1.

| Subject | Delay (s) | Sessions | Pecks/min during delay (Vary) | Pecks/min during delay (Repeat) |
|---|---|---|---|---|
| P92 | 0 | 31 | – | – |
| | 30 | 6 | * | * |
| | 0 | 38 | – | – |
| | 15 | 19 | 0.83 (0.66) | 0.23 (0.41) |
| | 0 | 19 | – | – |
| | 5 | 21 | 20.50 (3.02) | 35.50 (5.63) |
| | 0 | 17 | – | – |
| | 15 | 36 | 0.56 (0.43) | 0.13 (0.16) |
| P98 | 0 | 43 | – | – |
| | 15 | 15 | 13.01 (2.86) | 16.20 (5.07) |
| | 0 | 26 | – | – |
| | 5 | 17 | 14.60 (2.62) | 16.60 (3.00) |
| | 0 | 33 | – | – |
| | 30 | 20 | * | * |
| | 0 | 29 | – | – |
| | 15 | 24 | 9.49 (5.65) | 12.43 (5.18) |
| P99 | 0 | 51 | – | – |
| | 15 | 4 | * | * |
| | 0 | 19 | – | – |
| | 5 | 37 | 123.00 (4.23) | 82.10 (9.48) |
| | 0 | 49 | – | – |
| | 30 | 50 | – (–)ᵃ | – (–)ᵃ |
| | 0 | 21 | – | – |
| | 15 | 26 | 75.23 (10.53) | 82.03 (10.55) |
| P154 | 0 | 31 | – | – |
| | 30 | 26 | 5.35 (3.65) | 6.11 (1.94) |
| | 0 | 23 | – | – |
| | 15 | 18 | 6.30 (1.76) | 3.23 (0.73) |
| | 0 | 19 | – | – |
| | 5 | 25 | 16.70 (2.72) | 1.20 (0.54) |
| | 0 | 38 | – | – |
| | 15 | 30 | 4.97 (1.18) | 1.83 (1.09) |

Note: Dashes indicate conditions that did not have a programmed delay. Asterisks indicate conditions in which behavior did not reach stability. ᵃ Data files necessary for this analysis were accidentally overwritten.

Dependent Measures

All dependent measures were taken from the last six sessions of each condition. Variability was assessed separately for the vary and repeat components with the U-value statistic, $U = -\sum_{i=1}^{16} \left[ RF_i \log_2 (RF_i) \right] / \log_2 (16)$, where $RF_i$ represents the relative frequency of each of the 16 possible sequences (Miller & Frick, 1949; Page & Neuringer, 1985). U-values range from 0 to 1.0, with higher values indicating greater variability. A U-value of 0 indicates only one sequence occurred across the session; a value of 1.0 indicates all 16 possible sequences occurred equally often. The percentage of trials ending in food was calculated separately for each component by dividing the total number of trials that produced food by the total number of trials completed during a session. The joint analysis of U-value and percentage of trials ending in food is useful because U-value is a relatively molar (i.e., session-level) measure of variability, whereas percentage of trials with food reflects more molecular aspects (i.e., the local pattern of response sequences) of variability (see Page & Neuringer, 1985, for discussion). To obtain a measure of response rate, we calculated trials/min. Trials/min was computed for each session by dividing the total number of trials completed in a component by the total time spent in that component, excluding any time during delays. Rate of reinforcement under conditions with immediate reinforcement also was calculated, separately for the vary and repeat components, by dividing the number of reinforcers delivered in each component by the total session time spent in that component (excluding any time during delays). Time spent during reinforcer delivery was included in the calculation of total component time for both trials/min and reinforcement rate.
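The dependent measures can be illustrated with a short Python sketch. It is offered only as an illustration of the definitions given here, not as the analysis code used in the study; the function names and the coding of sequences as strings are assumptions. Sequences that never occurred contribute nothing to the sum, following the usual convention that 0 · log2(0) is taken as 0.

```python
import math
from collections import Counter

N_SEQUENCES = 16  # two keys, four responses per sequence: 2 ** 4


def u_value(sequences):
    """U-value for a list of emitted sequences (0 = one sequence only, 1 = uniform)."""
    if not sequences:
        raise ValueError("at least one sequence is required")
    counts = Counter(sequences)
    total = sum(counts.values())
    uncertainty = 0.0
    for count in counts.values():
        rf = count / total                 # relative frequency of this sequence
        uncertainty -= rf * math.log2(rf)
    return uncertainty / math.log2(N_SEQUENCES)


def percent_trials_with_food(n_food_trials, n_trials):
    """Percentage of completed trials that ended in food."""
    return 100.0 * n_food_trials / n_trials


def trials_per_min(n_trials, component_seconds, delay_seconds=0.0):
    """Trials per minute of component time, excluding time spent in delays."""
    return n_trials / ((component_seconds - delay_seconds) / 60.0)
```

For instance, a component in which only RRLL occurred gives a U-value of 0, whereas one in which two sequences occurred equally often gives 1 bit of uncertainty out of a possible 4, or 0.25.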

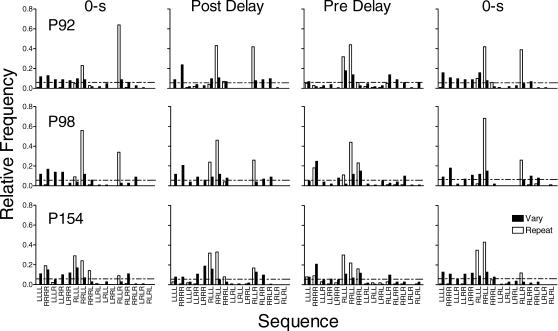

To examine the effects of delays to reinforcement on emission of specific response sequences, we calculated the relative frequency of each possible sequence (e.g., Cohen et al., 1990; Doughty & Lattal, 2001; Neuringer et al., 2001). Relative frequencies (shown in Figures 2, 4, & 6) were calculated for each condition by pooling data across the last six sessions of any and all replications of a condition and dividing the number of occurrences of each sequence by the total number of trials completed for each condition for each subject separately for each component.

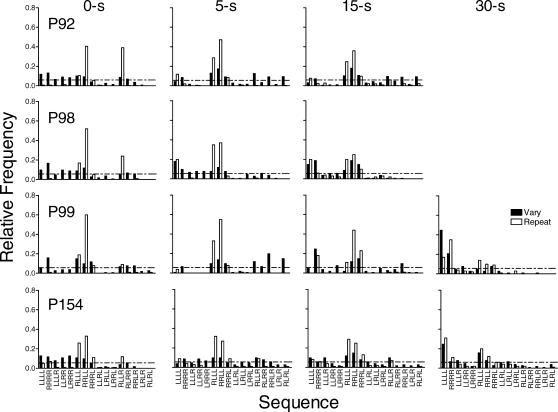

Fig 2. The relative frequency of each of the possible 16 sequences for each pigeon under immediate reinforcement and at each delay to reinforcement in Experiment 1.

Filled and unfilled bars represent the data from the vary and repeat components, respectively. The horizontal dashed line indicates the relative frequency of each possible sequence predicted by chance.

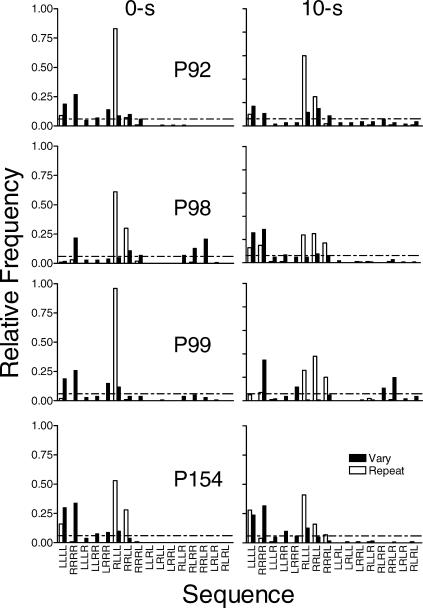

Fig 4. The relative frequency of each of the possible 16 sequences for each pigeon under immediate reinforcement and at each delay to reinforcement in Experiment 2.

Other details as in Figure 2.

Fig 6. The relative frequency of each of the possible 16 sequences for each pigeon under immediate reinforcement and at the 10-s delay to reinforcement in Experiment 3.

Other details as in Figure 2.

Results

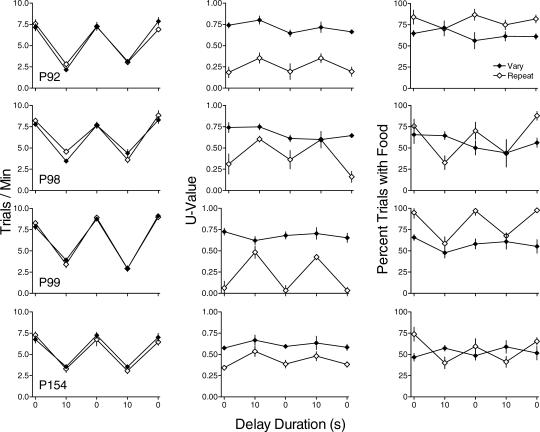

Figure 1 shows trials/min (left column), U-value (center column) and percentage of trials with food (right column) separately for the vary and repeat components for all pigeons. Data are means of the last six sessions of each condition. Because effects were similar across the four replications, data for the 0-s delay (baseline) are averaged across replications. Replications of the condition with the 15-s delay to reinforcement are shown by unconnected points. Under immediate reinforcement, trials/min (left column) was somewhat greater during the vary than during the repeat component for 3 of the 4 pigeons. Trials/min was not systematically affected by the 5-s delay to reinforcement, but decreased similarly in the vary and repeat components at longer delays. Decreases in trials/min were similar across replications of the 15-s delay for the vary and repeat components.

Fig 1. The effect of nonresetting delays to reinforcement on trials/min (left column), U-value (center column), and percentage of trials with food (right column) for each pigeon in Experiment 1.

Filled and unfilled circles represent data from the vary and repeat components, respectively. Replications of the condition with the 15-s delay are shown as unconnected points. Data are averaged across the last six sessions of each condition for each subject. Error bars indicate ± one standard deviation.

U-value (center column) was higher in the vary component than in the repeat component with immediate reinforcement and remained so across all delays, with the exception of P99 at the longest delay. In the repeat component U-value increased as a function of increasing delays to reinforcement. The effects of delays on U-value in the vary component were usually small and unsystematic compared to the effects in the repeat component, with some increases evident at delays of 5 s for 2 pigeons. U-value for the replication of the 15-s delay was similar to that during the first exposure to the 15-s delay in both the vary and repeat components.

During immediate reinforcement, percentage of trials with food (right column) was higher in the repeat component than in the vary component for 2 pigeons and the same across components for the other 2. In the repeat component, the percentage of trials ending in food decreased with longer delays to reinforcement for 3 of 4 pigeons. This measure was less affected in the vary component, with slight decreases evident for 3 of 4 pigeons. Similar effects occurred across replications of the 15-s delay in the vary and repeat components.

Figure 2 shows the relative frequencies of the 16 possible sequences in the vary and repeat components for each pigeon. The sequences are plotted along the x-axis beginning with sequences that require the fewest changeovers between keys and ending with sequences that require the most changeovers (cf. Doughty & Lattal, 2001). The horizontal dashed line indicates the relative frequency of each possible sequence predicted by chance (i.e., 1 divided by 16, or 0.0625). Data were pooled across the last six sessions of each replication of each condition.
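The pooling of relative frequencies and the ordering of sequences by number of changeovers can be sketched as follows. This is an illustration under stated assumptions: the function names are hypothetical, and the order of sequences that share the same number of changeovers is arbitrary here because the text does not specify it.

```python
from collections import Counter
from itertools import product


def changeovers(sequence):
    """Number of switches between keys within a sequence (e.g., RRLL has 1)."""
    return sum(a != b for a, b in zip(sequence, sequence[1:]))


# All 16 four-response sequences on two keys, fewest changeovers first.
ALL_SEQUENCES = ["".join(p) for p in product("LR", repeat=4)]
ORDERED = sorted(ALL_SEQUENCES, key=changeovers)

# Relative frequency of each sequence predicted by chance.
CHANCE = 1 / len(ALL_SEQUENCES)


def relative_frequencies(sessions):
    """Pool sequences across sessions and return the relative frequency of each.

    `sessions` is a list of sessions, each a list of sequence strings.
    """
    pooled = Counter(seq for session in sessions for seq in session)
    total = sum(pooled.values())
    return {seq: pooled[seq] / total for seq in ORDERED}
```

Comparing each value returned by `relative_frequencies` with `CHANCE` corresponds to comparing a bar with the dashed line in the figure.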

During immediate reinforcement in the repeat component the target sequence (RRLL) occurred most frequently. The sequence with an early right-to-left switch (RLLL), and the sequence which when repeated makes up the target sequence (RLLR), also occurred at frequencies above those predicted by chance. As the delay to reinforcement increased, the frequency of the target sequence decreased but remained above chance. The frequency of sequences with no switches increased in the repeat component across delays. In the vary component, during immediate reinforcement sequence frequency was fairly evenly distributed among sequences with zero or one switch for all pigeons, with some 2- and 3-switch sequences also occurring. There was no systematic effect of the 5- and 15-s delays, but at the 30-s delay in the vary component the most frequent response sequences required zero switches for the 2 pigeons that completed the condition.

Table 1 shows the rate of key pecking during delays to reinforcement for each pigeon averaged across the last six sessions for each condition. The delay rate reflects the number of key pecks summed across all three dark keys divided by the time spent during the delay. The rate of key pecking during the delay varied substantially across subjects and delays from 0.13 pecks/min to 123 pecks/min. The rate of key pecking during the delay was not systematically different for the vary and repeat components.

Table 2 shows that in the repeat component the rate of reinforcement under immediate reinforcement was higher than in the vary component for 3 of 4 pigeons. The rate of reinforcement reflects trials/min (response rate) and the percentage of trials ending in food. In terms of the total number of reinforcers earned per session, all pigeons earned all available reinforcers (20 vary and 20 repeat) across the last six sessions of each condition except as noted here. P98 earned an average of 19.67 and 20.00 reinforcers in the vary and repeat components, respectively, in the condition with the 15-s delay. In the condition with the 30-s delay, P99 earned 13.67 vary and 14.67 repeat reinforcers. P154 earned 17.83 vary and 19.00 repeat reinforcers in the condition with the 30-s delay.

Table 2. Mean reinforcers earned per min under baseline (no delay) conditions in the vary and repeat components for each subject in each experiment.

| Subject | Reinforcers/min (Vary) | Reinforcers/min (Repeat) |
|---|---|---|
| Experiment 1 | | |
| P92 | 2.93 | 3.28 |
| P98 | 2.87 | 3.66 |
| P99 | 3.36 | 4.71 |
| P154 | 2.33 | 2.15 |
| Experiment 2 | | |
| P92 | 2.77 | 3.27 |
| P98 | 2.78 | 4.54 |
| P99 | 3.77 | 6.04 |
| P154 | 2.35 | 3.42 |
| Experiment 3 | | |
| P92 | 4.63 | 6.38 |
| P98 | 5.11 | 6.21 |
| P99 | 5.14 | 7.86 |
| P154 | 3.16 | 5.37 |
| Experiment 4 | | |
| P92 | 2.82 | 2.53 |
| P98 | 2.76 | 4.36 |
| P154 | 2.44 | 2.37 |

Discussion

With immediate reinforcement, U-value was higher in the vary component than the repeat component. This result replicates the common finding that sequence variability is high when variability is required, but relatively low when variability is not required or when a particular sequence is required for food (e.g., Denney & Neuringer, 1998; Page & Neuringer, 1985). Trials/min (i.e., response rate) decreased in both the vary and repeat components as a function of increasing delays to reinforcement for each subject. The decrease in trials/min was not systematically different across the two components. These results with sequences of responses are similar to those obtained with signaled delays to reinforcement with simple operant behavior (e.g., reinforcement of a single key peck): typically, the longer the delay to reinforcement, the greater the decrease in response rates (e.g., Lattal, 1984; Richards, 1981; Schaal & Branch, 1988, 1990). With longer delays to reinforcement, responding sometimes ceased in both components. This finding is similar to that of Ferster (1953) who found that when long delays to reinforcement for single responses of pigeons were implemented abruptly, as in the present study, responding was not well maintained.

In terms of the variability of the sequences, however, the effects of delays to reinforcement differed for the vary and repeat components. For each pigeon, U-value increased in the repeat component as a function of delay, indicating that overall sequence variability increased in this component. Similarly, percentage of trials with food decreased in the repeat component, reflecting the fact that the repetitive contingency was less likely to be satisfied (i.e., the sequence RRLL decreased in frequency) as the delay to reinforcement increased. In the vary component, however, U-value and percentage of trials with food were relatively unaffected by delays to reinforcement, showing that overall sequence variability and the likelihood of meeting the variability contingency, respectively, were generally unchanged. These effects likely are not due to differences in reinforcement rate between the two components (Table 2), because sequences in the repeat component usually had a higher rate of reinforcement, which should render them more resistant to change, rather than less (see Nevin & Grace, 2000, for review).

The relative frequency of each of the possible 16 sequences also was affected differently by delays to reinforcement in the two components. In the repeat component, with immediate reinforcement the distribution of sequences was largely restricted to the target sequence (RRLL) and one or two common errors that were similar to the target. Delays to reinforcement shifted the distribution in the repeat component to sequences with fewer switches between keys and ultimately flattened it. In the vary component, with immediate reinforcement the distribution of sequences was comparatively even across the possibilities. Shorter delays to reinforcement had relatively little impact on the distribution. During the condition with the longest delay (30 s), which only 2 pigeons completed, the distribution of sequences in the vary component shifted to sequences with relatively few switches between keys, and was largely indistinguishable from that generated during the repeat component. This result could have occurred because the 30-s delay occupied much of the time during each trial, and the vary and repeat components became more difficult to discriminate because the key lights were not lit during the delay.

Experiment 2

In terms of the variability of sequences, delays to reinforcement degraded behavior in the repeat component more than behavior in the vary component in Experiment 1. One explanation of this result could be the key pecking during delays to reinforcement in both components (see Table 1). Although there were substantial differences in rates of key pecking during the delay across conditions and pigeons (cf. Schaal & Branch, 1988, 1990), this key pecking could be expected to degrade sequence integrity during the repeat component more so than during the vary component for the following reasons. To the extent that pecks during the delay were relatively contiguous with food delivery, those key pecks could have been reinforced. If so, the chance that these reinforced pecks differed from the target sequence in the repeat component is relatively high. In the vary component, reinforced extraneous pecks could be argued to have little impact on performance, given that all 16 possible sequences of pecks were eligible for reinforcement.

Experiment 2 examined the role played by contiguity of pecking during the delay with the reinforcer in the differential effects of delayed reinforcement on variable and repetitive sequences. To this end, we used resetting delays to reinforcement, in which each peck during the delay restarted the delay duration (see Lattal, 1987). Such a delay controls the time between the last response and the delivery of the reinforcer, thereby reducing responding during delays.

Method

Subjects and Apparatus

The pigeons from Experiment 1 served as subjects. Sessions were conducted at approximately the same time each day. Care, housing, and the experimental apparatus were as described in Experiment 1.

Procedure

A multiple vary (lag 10) repeat (RRLL) schedule was in effect. No pretraining was required because the current experiment occurred immediately following the last condition of Experiment 1 (P99) or Experiment 4 (P92, P98, & P154). The general procedure of signaled delays to reinforcement from Experiment 1 was used. Table 3 lists the number of sessions in each condition for each subject in Experiment 2. The order of delay durations for each subject was 0, 5, 15, 5, and 0 s. The other aspects of the contingencies and the delay stimuli in the vary and repeat components were as in Experiment 1. Unlike Experiment 1, pecks during the delay reset the delay. The food was not delivered until the delay duration elapsed with no pecks to any of the three dark keys. Conditions were changed when behavior was stable as defined in Experiment 1.
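The difference between the nonresetting delay of Experiment 1 and the resetting delay used here can be sketched in Python. This is an illustration only; the function name and the representation of pecks as times (in seconds since the last peck of the sequence) are assumptions, and a peck occurring exactly when food is due is treated as occurring after delivery.

```python
def food_delivery_time(delay_s, peck_times, resetting):
    """Return the time of food delivery, in s since the sequence was completed.

    `peck_times` lists pecks to the dark keys after the sequence ended.
    With a nonresetting delay these pecks have no effect; with a resetting
    delay each peck before delivery restarts the delay.
    """
    deadline = delay_s
    if resetting:
        for t in sorted(peck_times):
            if t >= deadline:
                break                  # food was already delivered
            deadline = t + delay_s     # peck restarts the delay
    return deadline
```

With a 5-s resetting delay and pecks at 2 s and 4 s, food arrives 9 s after the sequence (the obtained delay), but exactly 5 s after the last peck.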

Table 3. Programmed delays to reinforcement (s), number of sessions in each condition, mean (SD) rate of pecking per min during delays, and mean (SD) obtained delays to reinforcement (s) for the vary and repeat components for each pigeon in Experiment 2. Other details as in Table 1.

| Subject | Programmed delay (s) | Sessions | Pecks/min during delay (Vary) | Pecks/min during delay (Repeat) | Obtained delay in s (Vary) | Obtained delay in s (Repeat) |
|---|---|---|---|---|---|---|
| P92 | 0 | 64 | – | – | – | – |
| | 5 | 30 | 0.00 (0.00) | 0.10 (0.24) | 5.00 (0.00) | 5.00 (0.01) |
| | 15 | 25 | 0.00 (0.00) | 0.00 (0.00) | 15.00 (0.00) | 15.00 (0.00) |
| | 5 | 71 | 0.38 (0.59) | 0.19 (0.30) | 5.10 (0.15) | 5.06 (0.09) |
| | 0 | 31 | – | – | – | – |
| P98 | 0 | 47 | – | – | – | – |
| | 5 | 38 | 10.88 (3.39) | 14.70 (2.99) | 5.36 (0.08) | 5.49 (0.15) |
| | 15 | 30 | 3.60 (0.57) | 7.10 (1.13) | 15.70 (0.71) | 15.90 (0.27) |
| | 5 | 56 | 13.09 (4.61) | 13.00 (2.13) | 5.31 (0.12) | 5.41 (0.16) |
| | 0 | 43 | – | – | – | – |
| P99 | 0 | 69 | – | – | – | – |
| | 5 | 27 | 21.16 (3.66) | 20.61 (1.34) | 6.83 (0.78) | 7.05 (0.50) |
| | 15 | 17 | 8.76 (1.78) | 7.85 (1.85) | 16.17 (0.30) | 15.71 (0.14) |
| | 5 | 27 | 20.69 (3.60) | 29.92 (3.67) | 6.73 (0.26) | 8.16 (0.43) |
| | 0 | 69 | – | – | – | – |
| P154 | 0 | 73 | – | – | – | – |
| | 5 | 32 | 6.20 (2.10) | 1.09 (0.79) | 5.22 (0.07) | 5.03 (0.05) |
| | 15 | 25 | 1.63 (1.02) | 0.48 (0.25) | 15.36 (0.17) | 15.54 (0.61) |
| | 5 | 45 | 8.35 (1.98) | 0.97 (0.76) | 5.5 (0.20) | 5.10 (0.14) |
| | 0 | 45 | – | – | – | – |

Results

Figure 3 shows trials/min (left column), U-value (middle column) and percentage of trials with food (right column) for each pigeon. Trials/min in both the vary and repeat components decreased with increases in delay duration with the exception of the repeat component for the 5-s delay conditions for P98. The second exposure to the 5-s delay to reinforcement produced rates similar to those during the initial exposure. There were no systematic differences in response rates between the vary and repeat components across pigeons.

Fig 3. The effect of resetting delays to reinforcement on trials/min (left column), U-value (center column), and percentage of trials with food (right column) for each pigeon in Experiment 2.

Other details as in Figure 1.

The center column of Figure 3 shows that U-value in the repeat component was less than U-value in the vary component at all delays. In the repeat component, U-value increased with increasing delays to reinforcement for 3 of 4 pigeons. For P92, U-value was higher during the first exposure to the 5-s delay than with immediate reinforcement but similar at other delays. In the vary component, there was no systematic change in U-value as a function of delay. The replication of the 5-s delay resulted in values similar to those from the initial exposure in both the vary and repeat components except for P92 as noted above.

The right column of Figure 3 shows that in the repeat component, the percentage of trials with food decreased with delayed reinforcement for 3 of 4 pigeons. In the vary component the percentage of trials with food was not systematically affected by delay. As with the other measures, the replication of the 5-s delay resulted in values similar to those from the initial exposure.

Figure 4 shows that in the repeat component during immediate reinforcement the distributions of response sequences were similar to those from Experiment 1, with the target sequence occurring most frequently. During conditions with delays to reinforcement, the frequency of the target sequence in the repeat component decreased, while the frequency of other sequences with one right to left switch (i.e., RLLL, RRRL) increased. In the vary component during immediate reinforcement, the sequence distributions were relatively flat and also similar to that in Experiment 1. As the delay to reinforcement increased, there was no systematic change in the distribution of sequences in the vary component.

Table 3 shows the rate of key pecking during delays to reinforcement and the average obtained delay for each subject across the last six sessions for each condition. The obtained delay was calculated as the average amount of time between the last peck in the 4-response sequence and the delivery of the reinforcer for the last six sessions of each condition. If pecking occurred after the sequence was completed (during the delay), then the time between the last peck during the delay and the delivery of the reinforcer was always the programmed delay as shown in Table 3. The average obtained delay in both components and for all delay durations was within 1 s of the programmed delay for 3 subjects. The 4th subject (P99) experienced average obtained delays that were between 1.5 and 3 s longer than the programmed delay because of pecking during the delay. Table 2 shows that under immediate reinforcement, the rate of reinforcement (reflecting trials/min and percentage of trials with food) was higher in the repeat component than in the vary component. All pigeons collected all available reinforcers (20 vary and 20 repeat) in each of the last six sessions of each condition.
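The relation between pecking during a resetting delay and the obtained delay can be illustrated with a minimal sketch. It assumes that every peck made during the delay restarts the delay timer, which is consistent with the description above; the function name and the example peck times are hypothetical and are not taken from the experimental software or data.

```python
def obtained_delay(programmed_delay, delay_peck_times):
    """Time (s) from the last peck of the sequence (t = 0) to food delivery.

    delay_peck_times: times (s, measured from the end of the sequence) of
    pecks made during the delay, in ascending order. Assumption: each such
    peck restarts the delay timer (resetting delay).
    """
    food_time = programmed_delay  # food is due this long after the sequence ends
    for t in delay_peck_times:
        if t >= food_time:
            break  # food has already been delivered; later pecks are irrelevant
        food_time = t + programmed_delay  # the peck restarts the timer
    return food_time


# Hypothetical trials with a 5-s programmed delay
print(obtained_delay(5.0, []))          # no delay pecks
print(obtained_delay(5.0, [2.0]))       # one peck 2 s into the delay
print(obtained_delay(5.0, [2.0, 6.0]))  # a second peck after the first reset
```

Under this arrangement the interval between the last delay peck and food always equals the programmed delay, while the obtained delay measured from the end of the sequence grows with pecking during the delay, as it did for P99.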

Discussion

The results of Experiment 2, in which the effects of a resetting delay to reinforcement were investigated, were similar to those obtained in Experiment 1, in which the effects of a nonresetting delay to reinforcement were investigated. In both cases, delays to reinforcement decreased the rate of sequences in the vary and repeat components in a similar manner. Sequence variability was disrupted more in the repeat component than in the vary component. Delays to reinforcement increased U-value and decreased the percentage of trials with food in the repeat component, but did not systematically affect these measures in the vary component. The relative frequency of the 16 possible sequences also was affected differently by delays to reinforcement in the two components. Delays to reinforcement shifted the distribution in the repeat component to sequences with fewer switches between keys and ultimately flattened it, but in the vary component delays to reinforcement had relatively little impact on the distribution of sequences.

Unlike in Experiment 1, in Experiment 2 the time between the last peck during the signaled delay period and the delivery of the reinforcer was controlled. Therefore, contiguity between key pecks during the delay and the delivery of food could not have contributed to the results obtained in Experiment 2. In addition, rates of key pecking during the delay decreased substantially from those in Experiment 1 for all pigeons. Thus, accidental reinforcement of nontarget pecks during the delay does not appear to account for the differential effects of delays to reinforcement on sequences during the vary and repeat components. As in Experiment 1, these effects appear unlikely to be related to differences in reinforcement rate between the two components, because reinforcement rate was higher in the repeat component than in the vary component (Table 2).

Experiment 3

In Experiments 1 and 2, both resetting and nonresetting delays to reinforcement changed the variability of sequences more in the repeat component than in the vary component. In these two experiments, the vary and repeat components differed in several ways. Most obviously, the repeat component required sequence repetition for food delivery, whereas the vary component required sequence variability for food delivery. More subtly, however, the repeat contingency was defined by a single response sequence, whereas the vary contingency was defined by the relation between sequences. Perhaps the effects observed in the first two experiments were related to this difference, rather than the repetitive versus variable requirements of the contingencies. Furthermore, the effects on sequence variability in the repeat component could be unique to the particular sequence, RRLL, chosen as the target.

To examine the generality of the effects observed in the first two experiments, we investigated the effects of a 10-s nonresetting delay to reinforcement when the contingencies in the repeat and vary component were both defined by the relation of the current sequence to previous sequences. Specifically, in the repeat component, a sequence produced food if it was the same as one of the previous three (lag 3 same), but in the vary component, a sequence produced food if it was different from the previous three (lag 3 different; cf. Cherot, Jones, & Neuringer, 1996; Neuringer, 1992).

Method

Subjects and Apparatus

The pigeons from the previous two experiments served as subjects. Care and housing were as described previously, and sessions were conducted at approximately the same time each day in the apparatus described for Experiment 1. This experiment occurred after Experiment 4 for each pigeon.

Procedure

A lag 3 procedure was used for both the vary and repeat components. In the vary component, a four-response sequence that was different from the previous three resulted in 2-s access to food, and a sequence that was the same as one of the last three resulted in a 2-s flashing houselight. In the repeat component a response sequence that was the same as one of the last three response sequences resulted in food delivery, and a sequence in the repeat component that was different from the last three resulted in the houselight flashing (see Neuringer, 1992, for a similar procedure). In both the vary and repeat components, RRRR and LLLL were never eligible for reinforcement (cf. Cherot et al., 1996). Table 4 shows the number of sessions at each delay and the order of conditions. Once responding stabilized with immediate reinforcement, a 10-s nonresetting delay to reinforcement was introduced in both the vary and repeat components. Exposure to this delay was repeated after a return to immediate reinforcement. Conditions were changed based on the stability criteria described in the first experiment.
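The two lag 3 contingencies can be sketched as follows. This is an illustrative reading of the procedure rather than the authors' control program: it assumes that each component keeps its own history, that every completed sequence enters that history regardless of its outcome, and that a sequence at the start of a history (fewer than three prior sequences) is compared with whatever is available. The names `earns_food` and `run_trials` are hypothetical.

```python
from collections import deque

EXCLUDED = {"RRRR", "LLLL"}  # never eligible for food in either component


def earns_food(sequence, history, component):
    """Return True if a four-response sequence meets the lag contingency."""
    if sequence in EXCLUDED:
        return False
    repeated = sequence in history  # same as one of the previous sequences?
    # Repeat component: food for a match; vary component: food for no match.
    return repeated if component == "repeat" else not repeated


def run_trials(sequences, component, lag=3):
    """Apply the contingency to successive sequences within one component."""
    history = deque(maxlen=lag)  # holds only the previous `lag` sequences
    outcomes = []
    for seq in sequences:
        outcomes.append(earns_food(seq, history, component))
        history.append(seq)  # assumption: all sequences enter the history
    return outcomes


trials = ["RLLL", "RLLL", "RRRR", "RLRL"]
print(run_trials(trials, "repeat"))
print(run_trials(trials, "vary"))
```

Because RRRR and LLLL still enter the history in this sketch, they can serve as the sequences from which later ones must differ even though they never produce food themselves.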

Table 4. Number of sessions in each condition, programmed delay to reinforcement, and the mean (SD) rate of key pecking per min during delays to reinforcement for the vary and repeat components for each pigeon in Experiment 3. Other details as in Table 1.

| Subject | Sessions | Delay (s) | Pecks/min during delay (Vary) | Pecks/min during delay (Repeat) |
|---|---|---|---|---|
| P92 | 63 | 0 | – | – |
| | 31 | 10 | 0.15 (0.12) | 0.00 (0.00) |
| | 40 | 0 | – | – |
| | 24 | 10 | 1.30 (0.78) | 1.82 (1.63) |
| | 29 | 0 | – | – |
| P98 | 42 | 0 | – | – |
| | 25 | 10 | 3.55 (0.78) | 2.26 (0.73) |
| | 63 | 0 | – | – |
| | 34 | 10 | 3.96 (2.63) | 3.92 (2.20) |
| | 40 | 0 | – | – |
| P99 | 36 | 0 | – | – |
| | 49 | 10 | 34.35 (5.04) | 60.26 (9.72) |
| | 33 | 0 | – | – |
| | 36 | 10 | 63.59 (10.34) | 37.42 (10.75) |
| | 33 | 0 | – | – |
| P154 | 34 | 0 | – | – |
| | 39 | 10 | 44.84 (11.54) | 1.10 (0.79) |
| | 45 | 0 | – | – |
| | 60 | 10 | 3.65 (1.56) | 0.15 (0.16) |
| | 58 | 0 | – | – |

Results

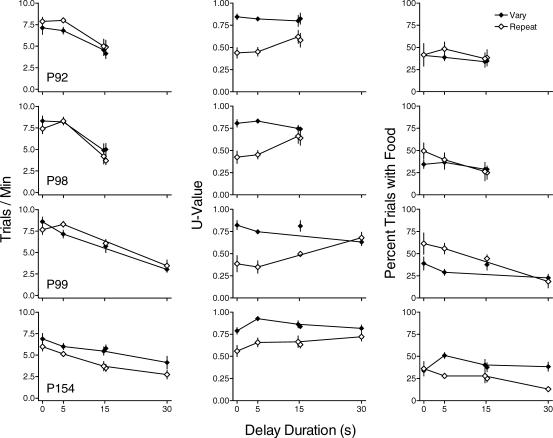

Figure 5 shows trials/min (left column), U-value (center column) and the percentage of trials with food (right column) for each pigeon. Response rates were higher during immediate reinforcement than during the 10-s delay in both the vary and repeat components. There was no systematic difference in response rates between the vary and repeat components. The replications of the conditions with immediate and delayed reinforcement resulted in similar rates of responding to those obtained during the initial exposures.

Fig 5. The effect of nonresetting delays to reinforcement on trials/min (left column), U-value (center column), and percentage of trials with food (right column) for each pigeon in Experiment 3.

Other details as in Figure 1.

U-value (center column, Figure 5) was lower in the repeat component than in the vary component across all conditions with the exception of the second exposure to the 10-s delay for P98. In addition, U-value in the repeat component was lower during immediate reinforcement and increased during the 10-s delay. U-value in the vary component was not systematically affected by delays to reinforcement. Results were similar during the replications of conditions for each pigeon with the exception of P98. Following the first exposure to the 10-s delay to reinforcement condition, U-value in the vary component remained somewhat lower for this pigeon for the remainder of the experiment.

The percentage of trials with food (right column) under immediate reinforcement was higher in the repeat component than in the vary component. The 10-s delay decreased the percentage of trials with food in the repeat component, but had no systematic effect on this measure in the vary component. As with the other measures, replications of conditions produced data similar to those from the initial exposure for both components.

Figure 6 shows that in the repeat component with immediate reinforcement, RLLL was the most frequent sequence for all pigeons. The only other sequences produced at levels above those predicted by chance in the repeat component with immediate reinforcement were RRLL (the previous target sequence) and LLLL. With the 10-s delay to reinforcement the distribution flattened and sequences with one right-to-left switch occurred more frequently in the repeat component than predicted by chance. During the 10-s delay there also was a tendency for more sequences with no switches than during immediate reinforcement. In the vary component, during immediate reinforcement the distribution of sequences was flatter than in the repeat component, but clustered around sequences with zero or one switch, with some 2-switch sequences. There was no systematic difference in the distribution of sequences in the vary component between conditions with immediate reinforcement and the 10-s delay to reinforcement.

Table 4 shows the rate of key pecking during delays to reinforcement for each pigeon averaged across the last six sessions for each condition. The rate of key pecking during the delay was not systematically different for the vary and repeat components. The data in Table 2 show that the rate of reinforcement with no delay was higher in the repeat component than in the vary component. All pigeons collected all available reinforcers (20 vary and 20 repeat) in each of the last six sessions of each condition.

Discussion

Overall, the behavior generated by the lag 3 same and different contingencies with immediate reinforcement was similar to that maintained by the target sequence (repeat) and lag 10 (vary) contingencies used in the first two experiments. In the immediate reinforcement conditions, response rates (trials/min) were not systematically or substantially different across components or across experiments. Variability, as indexed by the more global measure U-value and by the sequence distributions, was higher in the component with the lag 3 different (vary) contingency than in the component with the lag 3 same (repeat) contingency. The U-values for the vary component in Experiment 3 were similar to those obtained in Experiments 1 and 2. U-values for the repeat component in the first two experiments, however, were higher than those obtained in Experiment 3. In addition, reinforcement rates in Experiment 3 were higher in both components than those in Experiments 1 and 2.
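For readers who want to reproduce the global variability measure, the sketch below computes U-value as it is conventionally defined in this literature: the entropy of the relative frequencies of the possible sequences, divided by the maximum entropy for that number of sequences. That this conventional formula matches the calculation used for the figures is an assumption here, and the function name is hypothetical.

```python
import math
from collections import Counter


def u_value(sequences, n_possible=16):
    """Normalized entropy of a list of emitted sequences.

    0 means a single sequence was always emitted; 1 means all n_possible
    sequences were emitted equally often. Sequences that never occur
    contribute nothing to the sum.
    """
    counts = Counter(sequences)
    total = sum(counts.values())
    entropy = -sum((c / total) * math.log2(c / total) for c in counts.values())
    return entropy / math.log2(n_possible)


# Hypothetical sessions: a stereotyped bird and a mostly repetitive one
print(u_value(["RLLL"] * 40))
print(u_value(["RLLL"] * 30 + ["RRLL"] * 6 + ["LLLL"] * 4))
```

Because the measure pools over a whole session, it is insensitive to the order in which sequences occur, which is one reason the sequence distributions and the percentage of trials with food are reported alongside it.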

The sequence distributions show that in the lag 3 same component in Experiment 3, largely one sequence was emitted. This finding is interesting, because the pigeons could have repeated different sequences across time, but instead emitted one in particular. The sequences RRRR and LLLL were not eligible for reinforcement. All pigeons repeated RLLL, which is similar to the target sequence (RRLL) from the previous experiments, but with an earlier switch from the right to left key. The percentage of trials ending in food was higher for the repeat component in Experiment 3 than in the first two experiments.

In the vary component, the sequence distributions in Experiment 3 were not as flat as those in the first two experiments. This difference probably is due to the fact that a lag 3, rather than a lag 10, contingency was in effect, and replicates the frequent finding that the level of variability is sensitive to that required by the contingency (e.g., Machado, 1989; Page & Neuringer, 1985). It was somewhat surprising that RRRR and LLLL occurred frequently, given that they were not eligible for reinforcement. These sequences could, however, make up the previous three sequences from which the last one had to differ, which could be why they still occurred so frequently.

The effects of delaying reinforcement were similar across the three experiments. In all cases, delaying reinforcement decreased trials/min for both the vary and repeat component. U-value was relatively unaffected in the vary component, but was increased in the repeat component, regardless of the contingency used to define repetitive behavior across experiments. The more local measure of success at meeting the contingencies, the percentage of trials with food, also decreased in the repeat component but changed little in the vary component in all three experiments.

Experiment 4

In the first three experiments, delayed reinforcement disrupted sequence variability more in the repeat component than in the vary component. In all these experiments, however, inserting a delay between the end of the sequence and the delivery of the reinforcer has two effects: the contiguity between the end of the sequence and the reinforcer is decreased, and the overall rate of reinforcement is decreased. Decreasing the rate of reinforcement without reducing sequence-reinforcer contiguity can increase sequence variability when variability is relatively low (e.g., Grunow & Neuringer, 2002), as it was in the repeat component in the first three experiments. Furthermore, with single key pecks response rate is usually lower with lower reinforcement rates (e.g., Catania & Reynolds, 1968), so the decrease in trials/min with delays to reinforcement also could be related to the decrease in reinforcer rate.

To evaluate the role of decreases in reinforcement rate per se, we compared the effects of pre- and postreinforcer delays on repetitive and variable sequences. Prereinforcer delays occur between the last response in a sequence and the delivery of the reinforcer, whereas postreinforcer delays occur between the delivery of the reinforcer and the start of the next trial. Both delays decrease overall reinforcement rate, but postreinforcer delays do not decrease contiguity between the sequence and the reinforcer. If changes in contiguity produced the effects in the first three experiments, then the postreinforcer delay should have little effect on sequence variability. If the decrease in reinforcement rate increased variability in repetitive behavior, however, then both pre- and postreinforcer delays should have similar effects.
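The logic of this comparison can be made concrete with a small timing sketch for a single reinforced trial. It is a simplification under stated assumptions: the time taken to emit the sequence (`sequence_time`) is a hypothetical value held constant across conditions, there is no additional intertrial interval, and food access lasts 2 s as in the procedure.

```python
def trial_timeline(sequence_time, delay, delay_type, food_time=2.0):
    """Return (sequence_to_food_interval, cycle_duration) in seconds.

    delay_type is "none", "pre" (delay before food), or "post" (delay
    after food and before the next trial). Applies to a reinforced trial.
    """
    if delay_type not in {"none", "pre", "post"}:
        raise ValueError("delay_type must be 'none', 'pre', or 'post'")
    pre = delay if delay_type == "pre" else 0.0
    post = delay if delay_type == "post" else 0.0
    cycle = sequence_time + pre + food_time + post
    return pre, cycle


# Hypothetical 4-s sequence with a 15-s delay
for kind in ("none", "pre", "post"):
    gap, cycle = trial_timeline(4.0, 15.0 if kind != "none" else 0.0, kind)
    print(kind, gap, cycle)
```

In this sketch the pre- and postreinforcer delays lengthen the trial cycle by the same amount, so they lower the programmed reinforcement rate equally, but only the prereinforcer delay separates the end of the sequence from food.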

Method

Subjects and Apparatus

Pigeons 92, 98, and 154 from the prior experiments were used. Pigeon 99 did not participate in this experiment because of the length of time required for it to finish the previous experiment. Care and housing were the same, and sessions were conducted at approximately the same time each day in the apparatus described for Experiment 1.

Procedure

Pretraining was not required because this experiment occurred immediately following the last condition of Experiment 1. The same basic multiple schedule of vary (lag 10) and repeat (RRLL) described in Experiment 1 was used here.

Table 5 shows the order of conditions and the number of sessions at each for each subject. As in prior experiments, the prereinforcer delay occurred between the completion of a sequence meeting the required contingencies and the reinforcer delivery, and was signaled by dark keys and the lit houselight. In the postreinforcer delay conditions, sequences meeting the required contingencies immediately produced food, and the programmed delay then occurred after reinforcer delivery and before the start of the next trial. During this delay, too, all the keys were dark and the houselight was on. The pre- and postreinforcer delays were nonresetting. Within subjects, the pre- and postreinforcer delay durations were the same (30 s for P154 and 15 s for P92 and P98).

Table 5. Programmed delay to reinforcement, number of sessions in each condition, and the mean (SD) rate of key pecking per min during delays to reinforcement for the vary and repeat components for each pigeon in Experiment 4. Other details as in Table 1.

| Subject | Delay (s) | Sessions | Pecks/min during delay (Vary) | Pecks/min during delay (Repeat) |
|---|---|---|---|---|
| P92 | 0 | 21 | – | – |
| | post-15 | 29 | 0.17 (0.20) | 0.00 (0.00) |
| | pre-15 | 30 | 0.17 (0.15) | 0.07 (0.16) |
| | 0 | 64 | – | – |
| P98 | 0 | 20 | – | – |
| | post-15 | 25 | 0.93 (0.48) | 1.30 (0.69) |
| | pre-15 | 22 | 12.53 (2.52) | 15.10 (5.97) |
| | 0 | 45 | – | – |
| P154 | 0 | 17 | – | – |
| | post-30 | 17 | 1.60 (1.51) | 1.50 (1.21) |
| | pre-30 | 20 | 3.60 (1.34) | 2.86 (3.46) |
| | 0 | 73 | – | – |

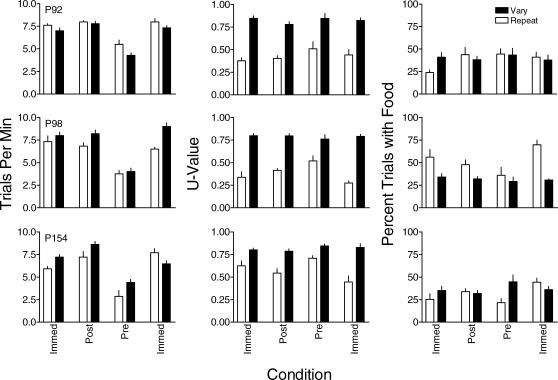

Results

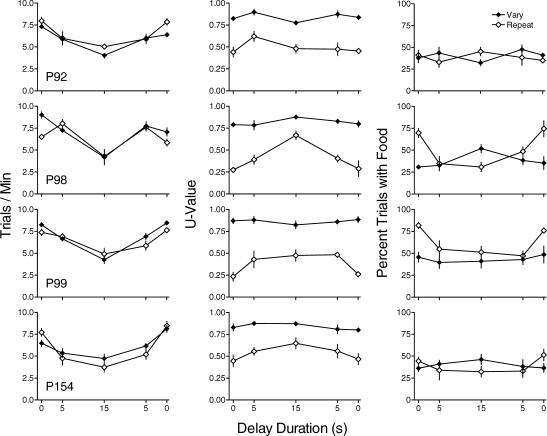

The left column of Figure 7 shows that trials/min in both components was higher under immediate reinforcement and postreinforcer delays than under prereinforcer delays. The postreinforcer delays increased response rates in the vary component above those maintained by immediate reinforcement for 2 pigeons. Response rates were not systematically different between the vary and repeat components across conditions and were generally similar during the first and second exposure to immediate reinforcement.

Fig 7. The effect of pre- and postreinforcer delays on trials/min (left column), U-value (center column), and percentage of trials with food (right column) for each pigeon in Experiment 4.

Filled and unfilled bars represent data from the vary and repeat components, respectively. Error bars indicate one standard deviation above the mean.

The center column of Figure 7 shows that U-values were consistently higher in the vary component than in the repeat component across all conditions. There was no systematic difference in U-value between conditions with immediate reinforcement and postreinforcer delays in the repeat component. Prereinforcer delays increased U-value compared to immediate reinforcement in the repeat component. U-value remained generally unaffected by either the pre- or postreinforcer delays in the vary component. The right column of Figure 7 shows that there was no systematic effect of either pre- or postreinforcer delays to reinforcement on the percentage of trials with food in either the vary or repeat components. There also was no systematic difference in the percentage of trials with food between the vary and repeat components.

Figure 8 shows that in the repeat component, the target sequence (RRLL) occurred at levels above what is predicted by chance with immediate reinforcement and delayed reinforcement. The frequency of sequences with one early or late switch increased with the prereinforcer delay. The two-switch sequence RLLR, which occurred at above-chance levels with immediate reinforcement, decreased in frequency with the prereinforcer delay but was not systematically affected by the postreinforcer delay. Response sequences in the vary component during immediate reinforcement were fairly evenly distributed among the sequence options that included no switch or one switch, with a limited number of 2-switch sequences. In the vary component there were no systematic differences in the sequence distributions with either the pre- or postreinforcer delay.

Fig 8. The relative frequency of each of the possible 16 sequences for each pigeon under immediate reinforcement and at the pre- and postreinforcer delay in Experiment 4.

Other details as in Figure 2.

Table 5 shows the rate of key pecking during delays to reinforcement for each pigeon averaged across the last six sessions for each condition. The rate of key pecking during the delay was not systematically different for the vary and repeat components, but was higher for 2 of 3 pigeons during the prereinforcer delay than during the postreinforcer delay condition. Table 2 shows that the rate of reinforcement with no delay did not differ systematically between the repeat and vary components across pigeons. All pigeons collected all available reinforcers in each of the last six sessions of each condition.

Discussion

Prereinforcer delays decreased trials/min similarly in the vary and repeat component. Postreinforcer delays, however, did not decrease trials/min. Instead, there was sometimes a small increase in trials/min, particularly in the vary component. The decrease in trials/min with delayed reinforcement, therefore, seems related to the disruption of contiguity between the last response in the sequence and the delivery of the reinforcer, rather than the decrease in overall reinforcement rate introduced by delays to reinforcement.

Similarly, prereinforcer delays increased sequence variability (U-value) in the repeat but not in the vary component. In contrast, postreinforcer delays had no substantial or systematic effects on U-value in either component. In terms of the relative frequency of individual sequences, in the repeat component prereinforcer delays flattened the distribution somewhat and shifted it toward sequences with fewer switches, whereas in the vary component there was no large or systematic effect. Postreinforcer delays had relatively little effect in either component. The percentage of trials with food was relatively unaffected by postreinforcer delays, but generally decreased in the repeat component with prereinforcer delays. The increase in sequence variability in the repeat component, therefore, also seems to be related to the decrease in contiguity between the last response in the sequence and the delivery of the reinforcer, rather than the decrease in reinforcement rate introduced by delays to reinforcement.

General Discussion

When variability in sequences was required for reinforcement, variability was relatively high compared to when repetition was required for reinforcement. This general result held true for the U-value statistic and the relative frequency of sequences, and occurred regardless of whether repetition was defined by a target sequence (Experiments 1, 2, & 4) or by the relation of the current sequence to previous ones (Experiment 3). Furthermore, the degree of variability was under discriminative control: the differences occurred within subject, within session, in a multiple schedule. In 67 out of 69 conditions across experiments and pigeons, mean U-value was lower during the repeat component than during the vary component. These results are similar to those obtained previously with various species and procedures (e.g., Cohen et al., 1990; Denney & Neuringer, 1998; Miller & Neuringer, 2000; Page & Neuringer, 1985).

The percentage of trials ending with food delivery also indicates relatively successful control by the contingencies. In the vary component, 37% of trials ended with food, averaged across all pigeons and all immediate reinforcement conditions in Experiments 1, 2, and 4, in which a lag 10 contingency was in effect. We conducted a 10,000-trial simulation in which a computer randomly selected a left or right response for each position of a 4-response sequence (the sequence length in the current experiments). Under a lag 10 contingency, the simulated random responder received a reinforcer on 52% of trials. The difference between the pigeons' performance and the random emitter was similar to that obtained in previous experiments and simulations (see Page & Neuringer, 1985). In the repeat component with the target sequence RRLL, 50% of trials ended in food across pigeons for all immediate reinforcement conditions in Experiments 1, 2, and 4. This percentage of trials with food is similar to that obtained by rats for the target sequence LLRR in previous research with a multiple vary-repeat schedule (Cohen et al., 1990). The percentage of trials with food was lower with the target sequence RRLL (Experiments 1, 2, & 4) than when the pigeons chose the sequence to repeat, which in all cases was RLLL (Experiment 3). Sequences with later switches may be more difficult than sequences with earlier switches because the stimulus control function of the number of responses in a sequence increases in variability with increasing response number (see e.g., Gallistel, 1990; Machado, 1997).
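The random-responder benchmark can be approximated with a short simulation. The sketch below is not the original simulation code; it assumes equiprobable, independent left and right responses and a history that includes every sequence regardless of outcome, and the function name and seed are arbitrary. Under those assumptions the expected proportion of reinforced trials is also available in closed form: a sequence must differ from each of 10 independent earlier sequences, each of which matches it with probability 1/16, giving (15/16)^10, or roughly 0.52.

```python
import random
from collections import deque


def simulate_random_responder(n_trials=10_000, seq_len=4, lag=10, seed=0):
    """Proportion of trials meeting a lag contingency for a random emitter."""
    rng = random.Random(seed)
    history = deque(maxlen=lag)  # the previous `lag` sequences
    reinforced = 0
    for _ in range(n_trials):
        seq = "".join(rng.choice("LR") for _ in range(seq_len))
        if seq not in history:  # differs from each of the previous `lag`
            reinforced += 1
        history.append(seq)  # assumption: all sequences enter the history
    return reinforced / n_trials


# Closed-form expectation for 16 equiprobable sequences and a lag of 10
expected = (15 / 16) ** 10

print(round(expected, 3))
print(simulate_random_responder())
```

The first few trials of the simulation face a history shorter than 10 sequences, which inflates the estimate slightly; over 10,000 trials the effect is negligible.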

In each of the four experiments, delayed reinforcement decreased trials/min similarly in components requiring variable and repetitive behavior. This effect occurred with nonresetting (Experiments 1, 3, & 4) and resetting (Experiment 2) delays, and with repetitive behavior maintained under a target sequence procedure (Experiments 1, 2, & 4) as well as under a lag contingency more similar to that maintaining variable behavior (Experiment 3). Longer delays to reinforcement decreased trials/min more than shorter ones. Our results for sequence rates are similar to those found by delaying reinforcement for relatively simple behavior, such as single key pecks (e.g., Lattal, 1984; Pierce, Hanford, & Zimmerman, 1972; Richards, 1981; Schaal & Branch, 1988, 1990; Sizemore & Lattal, 1977, 1978; Williams, 1976; see Schneider, 1990, for review). Furthermore, the decreases in response rates do not seem related to decreases in overall reinforcement rate produced by delays to reinforcement, because postreinforcer delays of equivalent duration also decreased programmed reinforcement rate but did not decrease response rates (Experiment 4).

The effects of delaying reinforcement were similar in terms of the rate of sequences, but they differed for the structure of variable and repetitive sequences. U-value increased in the repeat component, but was largely unaffected in the vary component, as a function of delay to reinforcement. U-value in the repeat component was not affected by equivalent postreinforcer delays (Experiment 4), so these changes do not appear likely to be related to the decrease in reinforcement rate produced by delays to reinforcement (cf. Grunow & Neuringer, 2002). Reinforcement rates under immediate reinforcement also were generally higher in the repeat component than in the vary component (Table 2), which, if anything, should make repetitive behavior more resistant to change, rather than less (see Nevin & Grace, 2000). The relative frequency distributions of sequences also were more disrupted in the repeat component than in the vary component. On the whole, the variability of variable behavior seems to have been more resistant to disruption by delays to reinforcement than the repetitiousness of repetitive behavior. This result is similar to those showing greater disruption of repetitive behavior with a variety of manipulations in previous experiments (e.g., Cohen et al., 1990; Doughty & Lattal, 2001; Neuringer, 1991; Neuringer et al., 2001).

The present results seem contrary to what would be expected if repetition and variability were the functional operants in the repeat and vary components, respectively. Contiguity between a response and a reinforcer is widely regarded as a fundamental aspect of the maintenance of operant behavior (e.g., Lattal, 1987; Nevin, 1973, 1974), so reducing the contiguity between a sequence and its consequence should weaken the functional operant. In the repeat component, when the reinforcer was delayed sequences were less repetitive, but in the vary component, when the reinforcer was delayed sequences did not become less variable. One interpretation of these results is that variability is not the functional operant in this experimental paradigm.

Another possibility is that variability is the functional operant, but that delaying reinforcement has two opposing effects. One effect could be to decrease operant variability, but another could be to elicit variability (see Neuringer et al., 2001; Wagner & Neuringer, in press). With single key-peck responses maintained by variable-interval schedules of reinforcement, for example, delays to reinforcement decrease mean response rates and increase the variability in interresponse time distributions (Schaal, Shahan, Kovera, & Reilly, 1998; Shahan & Lattal, 2005). Wagner and Neuringer (in press) provide evidence for this interpretation of the effects of delayed reinforcement on operant and elicited variability with sequences of responses. In their experiments with rats, three-response sequences were required to have either a low, moderate, or high level of variability for food. When the food was delayed from the end of the sequence, response rates decreased for all groups. The effects of delayed reinforcement on variability, however, depended on the baseline level of variability: low levels of variability increased, moderate levels were unchanged, and high levels decreased. This result is what would be expected if delaying reinforcement 1) decreased operant variability and 2) elicited variability. This interpretation also could explain the results of the present experiment. For the repeat component, if operant repetition was decreased and variability elicited, then variability would go up. For the vary component, if moderate levels of operant variation were decreased and variability elicited, then variability could remain unchanged. Thus, another interpretation is that the present results do not provide evidence against the operant nature of variability per se.

Although the disruptive effects of delays to reinforcement in the present experiments were similar to the effects of other disruptors on variable and repetitive behavior, the mechanism of disruption by delays to reinforcement and other factors is not clear. One potential mechanism by which delays to reinforcement might disrupt variable and repetitive performance is by interfering with remembering. Remembering previous responses may be required for accurate repetitive performance, but not for variable performance. For example, Neuringer (1991) found that imposing longer minimum interresponse intervals interfered with repetitive but not variable sequences. Similarly, delays to reinforcement might decrease remembering of previous sequences in both components, but might selectively decrease performance in the repeat component due to the memorial requirement. Although this interpretation may explain the current results and those in which interresponse intervals were manipulated (Neuringer, 1991), it is less clear how it would apply to other types of disruptors, like prefeeding and extinction (e.g., Doughty & Lattal, 2001; Neuringer et al., 2001). Thus, although reinforced variable and repetitive behavior is differentially susceptible to disruption by various manipulations, the reason for this difference remains to be elucidated.

Acknowledgments

Portions of these data were presented at the 27th annual meeting of the Association for Behavior Analysis, New Orleans, May 2001. We thank Allen Neuringer for interesting discussions that led to these experiments and Tim Shahan for helpful discussion during the conduct of the experiments. They each provided valuable comments on previous drafts of this manuscript. Thanks also to Carrie DeHaven for her help in the conduct of the first experiment.

References

- Blough D.S. The reinforcement of least-frequent interresponse times. Journal of the Experimental Analysis of Behavior. 1966;9:581–591. doi: 10.1901/jeab.1966.9-581.

- Brown P.L, Jenkins H.M. Auto-shaping of the pigeon's key-peck. Journal of the Experimental Analysis of Behavior. 1968;11:1–8. doi: 10.1901/jeab.1968.11-1.

- Catania A.C, Reynolds G.S. A quantitative analysis of the responding maintained by interval schedules of reinforcement. Journal of the Experimental Analysis of Behavior. 1968;11:327–383. doi: 10.1901/jeab.1968.11-s327.

- Cherot C, Jones A, Neuringer A. Reinforced variability decreases with approach to reinforcers. Journal of Experimental Psychology: Animal Behavior Processes. 1996;22:497–508. doi: 10.1037//0097-7403.22.4.497.

- Cohen L, Neuringer A, Rhodes D. Effects of ethanol on reinforced variations and repetitions by rats under a multiple schedule. Journal of the Experimental Analysis of Behavior. 1990;54:1–12. doi: 10.1901/jeab.1990.54-1.

- Denney J, Neuringer A. Behavioral variability is controlled by discriminative stimuli. Animal Learning & Behavior. 1998;26:154–162.

- Doughty A.H, Lattal K.A. Resistance to change of operant variation and repetition. Journal of the Experimental Analysis of Behavior. 2001;76:195–216. doi: 10.1901/jeab.2001.76-195.

- Ferster C.B. Sustained behavior under delayed reinforcement. Journal of Experimental Psychology. 1953;45:218–224. doi: 10.1037/h0062158.

- Gallistel C.R. The organization of learning. Cambridge, MA: MIT Press; 1990. pp. 317–350.

- Goetz E.M, Baer D.M. Social control of form diversity and the emergence of new forms in children's blockbuilding. Journal of Applied Behavior Analysis. 1973;6:209–217. doi: 10.1901/jaba.1973.6-209.

- Grunow A, Neuringer A. Learning to vary and varying to learn. Psychonomic Bulletin and Review. 2002;9:250–258. doi: 10.3758/bf03196279.

- Harper D.N, McLean A.P. Resistance to change and the law of effect. Journal of the Experimental Analysis of Behavior. 1992;57:317–337. doi: 10.1901/jeab.1992.57-317.

- Lattal K.A. Signal functions in delayed reinforcement. Journal of the Experimental Analysis of Behavior. 1984;42:239–253. doi: 10.1901/jeab.1984.42-239.

- Lattal K.A. Considerations in the experimental analysis of reinforcement delay. In: Commons M.L, Mazur J.E, editors. Quantitative analyses of behavior: Vol. 5. The effect of delay and of intervening events on reinforcement value. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc; 1987. pp. 107–123.

- Machado A. Operant conditioning of behavioral variability using a percentile reinforcement schedule. Journal of the Experimental Analysis of Behavior. 1989;52:155–166. doi: 10.1901/jeab.1989.52-155.

- Machado A. Increasing the variability of response sequences in pigeons by adjusting the frequency of switching between two keys. Journal of the Experimental Analysis of Behavior. 1997;68:1–25. doi: 10.1901/jeab.1997.68-1.

- Miller G.A, Frick F.C. Statistical behavioristics and sequences of responses. Psychological Review. 1949;56:311–324. doi: 10.1037/h0060413.

- Miller N, Neuringer A. Reinforcing variability in adolescents with autism. Journal of Applied Behavior Analysis. 2000;33:151–165. doi: 10.1901/jaba.2000.33-151.

- Morgan L, Neuringer A. Behavioral variability as a function of response topography and reinforcement contingency. Animal Learning & Behavior. 1990;18:257–263.

- Neuringer A. Operant variability and repetition as functions of interresponse time. Journal of Experimental Psychology: Animal Behavior Processes. 1991;17:3–12.

- Neuringer A. Choosing to vary and repeat. Psychological Science. 1992;3:246–250.

- Neuringer A. Operant variability: Evidence, functions, and theory. Psychonomic Bulletin & Review. 2002;9:672–705. doi: 10.3758/bf03196324.

- Neuringer A. Reinforced variability in animals and people. American Psychologist. 2004;59:891–906. doi: 10.1037/0003-066X.59.9.891.

- Neuringer A, Kornell N, Olufs M. Stability and variability in extinction. Journal of Experimental Psychology: Animal Behavior Processes. 2001;27:79–94.

- Nevin J.A. The maintenance of behavior. In: Nevin J.A, Reynolds G.S, editors. The study of behavior: Learning, motivation, emotion, and instinct. Glenview, IL: Scott, Foresman; 1973. pp. 201–236.

- Nevin J.A. Response strength in multiple schedules. Journal of the Experimental Analysis of Behavior. 1974;21:389–408. doi: 10.1901/jeab.1974.21-389.

- Nevin J.A, Grace R.C. Behavioral momentum and the Law of Effect. Behavioral and Brain Sciences. 2000;23:73–130. doi: 10.1017/s0140525x00002405.

- Page S, Neuringer A. Variability is an operant. Journal of Experimental Psychology: Animal Behavior Processes. 1985;11:429–452. doi: 10.1037//0097-7403.26.1.98.

- Pierce C.H, Hanford P.V, Zimmerman J. Effects of different delay of reinforcement procedures on variable-interval responding. Journal of the Experimental Analysis of Behavior. 1972;18:141–146. doi: 10.1901/jeab.1972.18-141.

- Pryor K.W, Haag R, O'Reilly J. The creative porpoise: Training for novel behavior. Journal of the Experimental Analysis of Behavior. 1969;12:653–661. doi: 10.1901/jeab.1969.12-653.

- Richards R.W. A comparison between signaled and unsignaled delay of reinforcement. Journal of the Experimental Analysis of Behavior. 1981;35:145–152. doi: 10.1901/jeab.1981.35-145.

- Schaal D.W, Branch M.N. Responding of pigeons under variable-interval schedules of unsignaled, briefly signaled, and completely signaled delays to reinforcement. Journal of the Experimental Analysis of Behavior. 1988;50:33–54. doi: 10.1901/jeab.1988.50-33.

- Schaal D.W, Branch M.N. Responding of pigeons under variable-interval schedules of signaled-delayed reinforcement: Effects of delay signal duration. Journal of the Experimental Analysis of Behavior. 1990;53:103–121. doi: 10.1901/jeab.1990.53-103.

- Schaal D.W, Shahan T.A, Kovera C.A, Reilly M.P. Mechanisms underlying the effects of unsignaled delayed reinforcement on key pecking of pigeons under variable-interval schedules. Journal of the Experimental Analysis of Behavior. 1998;69:103–122. doi: 10.1901/jeab.1998.69-103.

- Schneider S.M. The role of contiguity in free-operant unsignaled delay of positive reinforcement: A brief review. The Psychological Record. 1990;40:239–257.

- Shahan T.A, Lattal K.A. Unsignaled delay of reinforcement, relative time, and resistance to change. Journal of the Experimental Analysis of Behavior. 2005;83:201–219. doi: 10.1901/jeab.2005.62-04.

- Sizemore O.J, Lattal K.A. Dependency, temporal contiguity, and response-independent reinforcement. Journal of the Experimental Analysis of Behavior. 1977;27:119–125. doi: 10.1901/jeab.1977.27-119.

- Sizemore O.J, Lattal K.A. Unsignaled delay of reinforcement in variable-interval schedules. Journal of the Experimental Analysis of Behavior. 1978;30:169–175. doi: 10.1901/jeab.1978.30-169.

- Stokes P.D, Mechner F, Balsam P.D. Effects of different acquisition procedures on response variability. Animal Learning & Behavior. 1999;27:28–41.

- van Hest A, van Haaren F, van de Poll N.E. Operant conditioning of response variability in male and female Wistar rats. Physiology & Behavior. 1989;45:551–555. doi: 10.1016/0031-9384(89)90072-3.

- Wagner K, Neuringer A. Operant variability when reinforcement is delayed. Learning & Behavior. in press. doi: 10.3758/bf03193187.

- Williams B.A. The effects of unsignalled delayed reinforcement. Journal of the Experimental Analysis of Behavior. 1976;26:441–449. doi: 10.1901/jeab.1976.26-441.