Abstract

Adaptive control of reaching depends on internal models that associate states in which the limb experienced a force perturbation with motor commands that can compensate for it. Limb state can be sensed via both vision and proprioception. However, adaptation of reaching in novel dynamics results in generalization in the intrinsic coordinates of the limb, suggesting that the proprioceptive states in which the limb was perturbed dominate the representation of limb state. To test this hypothesis, we considered a task in which the position of the hand during a reach was correlated with patterns of force perturbation. This correlation could be sensed via vision, proprioception, or both. As predicted, learning was significantly better when the correlations could be sensed only via proprioception than when they could be sensed only via vision. Learning with visual correlations produced subjects who could verbally describe the patterns of perturbation, but this awareness was never observed in subjects who learned the task with only proprioceptive correlations. We manipulated the relative values of the visual and proprioceptive parameters and found that the probability of becoming aware depended strongly on the correlations that subjects could visually observe. In all conditions, aware subjects demonstrated a small but significant advantage in their ability to adapt their motor commands. Proprioceptive correlations produced an internal model that strongly influenced reaching performance yet did not lead to awareness. Visual correlations strongly increased the probability of becoming aware, yet had a much smaller, though still significant, effect on reaching performance. Therefore, practice resulted in the acquisition of both implicit and explicit internal models.

Keywords: reaching, arm movements, awareness, adaptation, force fields, vision, proprioception, computational models, motor control, motor learning

The brain can sense the state of a system via multiple sensory channels. For example, visual and proprioceptive sensors often simultaneously encode arm position (Cohen and Andersen, 2000; Rossetti et al., 1995). Both visual and auditory channels may encode the location of an object. It has been suggested that when such redundant information is available, the brain forms a single estimate by weighting each modality according to its measurement precision (Ernst and Banks, 2002; van Beers et al., 1999). However, a recent study challenged this view. Sober and Sabes (2003) considered a reaching task in which the state of the limb could be estimated using both vision and proprioception. They described two important psychophysical results. First, the estimate of limb state used for kinematic planning of a reach relied mostly on a visual representation of limb state. This is consistent with the idea that the plan for a reaching movement forms in the posterior parietal cortex in terms of vectors that represent hand and target positions with respect to fixation (Buneo et al., 2002). The difference between these two vectors is a vector that points from the hand to the target. In the premotor cortex, the representation of this vector remains largely independent of the proprioceptive state of the limb (Kakei et al., 2001). Second, the estimate used for transforming the planned movement into motor commands relied mostly on the limb’s proprioceptive state. This is consistent with the finding that, for a given movement direction, the proprioceptive state of the arm strongly affects the discharge of motor cortical cells (Scott and Kalaska, 1997). Therefore, it is plausible that visual and proprioceptive information regarding limb state differentially affect planning vs. execution of a reach.

When novel forces that depend on the state of the limb act on the hand, for example while reaching in a force field (Shadmehr and Mussa-Ivaldi, 1994), the brain learns to alter the maps that describe the execution of the reach and not the maps that describe reach plans. For example, when people practice reaching in a force field, the brain appears to associate the limb states in which forces were experienced with the motor commands necessary to negate those forces (Conditt et al., 1997). The coordinate system in which limb state is represented in this computation is joint-like rather than Cartesian, resulting in generalization patterns that are in the intrinsic coordinates of the arm (Shadmehr and Moussavi, 2000; Malfait et al., 2002). This pattern of generalization is consistent with the hypothesis that the brain relies primarily on proprioception to represent limb state for the purpose of transforming a movement plan into motor commands.

Two recent results, however, are inconsistent with this view. An internal model of dynamics that represents the state of the arm in terms of proprioception predicts that when one arm experiences a pattern of force during reaching, there should be no transfer of learning to the contralateral arm. However, there is small but significant generalization from one arm to the other, and the coordinate system of this transfer appears to be Cartesian (Criscimagna-Hemminger et al., 2003; Malfait and Ostry, 2004). Moreover, a recent study showed that when observers view a video of an actor reaching in a force field, they gain information that has a small but significant effect on their own performance (Mattar and Gribble, 2005).

To reconcile these results, we considered the possibility that sensory information about the state of the limb might contribute to performance in two fundamentally different ways. First, visual and proprioceptive information might combine to form an implicit internal model of dynamics. This implicit model would likely depend on an estimate of limb state as sensed through proprioception. Second, visually observed patterns might help form an explicit internal model that predicts the direction or other characteristics of the perturbation. This might result in awareness. The two components of knowledge would combine to guide performance. Thus, in this study we attempted to elucidate three points: 1) the relative contribution of vision and proprioception to implicit force field learning, 2) the relative contribution of vision and proprioception to awareness of the force field pattern, and 3) the role of awareness in force field learning. The results suggest a revision of existing computational models of adaptation for reaching.

Methods

Fifty-four healthy individuals (18 women and 36 men), all naïve to the experiment, participated in this study. Average age was 25 years (range: 19 to 35 years). The study protocol was approved by the JHU School of Medicine IRB and all subjects signed a consent form. Subjects sat on a chair in front of a 2D robotic manipulandum and held its handle. Their upper arm rested on an arm support attached to the chair, and they reached in the horizontal plane at shoulder height. We spread a sheet of heavy black cloth horizontally above the movement plane. This sheet occluded the view of the entire body below the neck (including the moving arm). A vertical monitor was placed about 75 cm in front of subjects and displayed a cursor (2×2 mm) representing hand position and squares (6×6 mm) representing start and target positions of reaching. The relationship between this visual display and hand position varied across the five experimental groups (as shown in figure 1 and detailed below).

Figure 1.

Experiment design and post-experiment interview. A. The top row displays the cursor trajectory. The bottom row displays the corresponding hand trajectory. The curved paths represent movements perturbed by the force field, either clockwise or counter-clockwise. Matched group: the cursor position was aligned with the subject’s hand position. Proprioceptive cue group: hand position varied but not cursor position. Visual cue group: cursor position varied but not hand position. Flipped group: the cursor was displayed on the opposite side from the hand position. B. Assessment of force field awareness. After completing the experiment, each subject was asked these questions to determine whether they were consciously aware of the force field pattern. C. Examples from two subjects. Some subjects were able to accurately describe the force field pattern with diagrams or in writing.

Task

A cursor on the monitor represented hand position. It always moved with a velocity identical to hand velocity. On each trial, one of three velocity-dependent force fields, $\mathbf{f} = B\dot{\mathbf{x}}$ where $\dot{\mathbf{x}}$ is hand velocity, acted on the hand: a clockwise (CW) curl field (B = [0 13; −13 0] N·s/m), a counter-clockwise (CCW) curl field (B = [0 −13; 13 0] N·s/m), or a null field. These fields pushed the hand perpendicular to the direction of motion. As the movements were always toward a target at 180° (i.e., downward), a CW field pushed the hand to the left while a CCW field pushed the hand to the right. We generated a 420-trial pseudo-random sequence of fields and used the same sequence in all subjects. This sequence also determined the start position of each reach. For example, in the “matched” group, when the trial indicated a CW field, the robot positioned the hand left of center to start the reach and a cursor was displayed left of center on the screen. When the trial indicated a CCW field, the robot positioned the hand right of center and a cursor was displayed right of center on the screen. When the trial indicated a null field, the robot positioned the hand at the middle and the cursor was also displayed in the middle of the monitor. In contrast, in the “proprioceptive cue” group, the robot positioned the hand at left, center, or right according to the field in that trial, but the cursor starting position was always at center. In the “visual cue” group, the robot always positioned the hand at center, but the cursor was displayed at left, center, or right depending on the field in that trial. Note, however, that cursor velocity always matched hand velocity; therefore, in all groups a deflection of the hand to the right or left during a movement was accurately displayed on the screen.
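The field definitions above can be checked numerically. The following is a minimal Python sketch, assuming a coordinate frame the text does not specify (+x to the subject's right, +y away from the subject, so a reach toward the body has negative y velocity); the function name `field_force` and the 0.3 m/s example speed are hypothetical. It computes f = Bẋ and shows that the CW field pushes a toward-the-body reach to the left, the CCW field pushes it to the right, and both forces are perpendicular to the velocity.

```python
import numpy as np

# Curl-field viscosity matrices from the Task description (units: N·s/m).
FIELDS = {
    "CW": np.array([[0.0, 13.0], [-13.0, 0.0]]),
    "CCW": np.array([[0.0, -13.0], [13.0, 0.0]]),
    "null": np.zeros((2, 2)),
}


def field_force(field: str, hand_velocity) -> np.ndarray:
    """Force f = B @ x_dot (N) for a hand velocity x_dot (m/s).

    Assumed frame (not stated in the text): +x points to the subject's right,
    +y points away from the subject, so a reach toward the body has vy < 0.
    """
    return FIELDS[field] @ np.asarray(hand_velocity, dtype=float)


# Hypothetical mid-reach velocity toward the body at 0.3 m/s.
v = np.array([0.0, -0.3])
f_cw = field_force("CW", v)    # x-component negative: hand pushed left
f_ccw = field_force("CCW", v)  # x-component positive: hand pushed right
```

Because B is skew-symmetric, f·ẋ is always zero, which is why these fields only deflect the hand sideways and never speed it up or slow it down along the reach.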

The task was to reach to a displayed target (displacement of 10 cm) within 500±50 ms. Targets were always displayed on a vertical line, straight down from start positions, which represented reaching directly toward the subject. Onset of movement was determined using an absolute velocity threshold of 0.03 m/s. Feedback on performance was provided immediately after target acquisition. If the target was acquired within a 100 ms window around the required movement time (450–550 ms), the target exploded and the computer made a sound. If the target location was acquired too slowly or too quickly, the target turned blue or red, respectively. After this reward was presented the robot moved the subject’s hand to a new start position. During this transition period, the cursor feedback indicating hand position was blanked until the hand was within 2 cm of the start position for the next trial. After the hand became stationary within 5 mm of the new start position for 300 ms, the video monitor displayed the next target.
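The onset and timing rules above amount to two small decisions. This sketch assumes that movement time is measured from onset to target acquisition and that the 450 ms and 550 ms boundaries count as on time (neither is stated explicitly); the function names are hypothetical.

```python
import numpy as np

ONSET_SPEED = 0.03   # m/s, absolute velocity threshold for movement onset
TARGET_MT_MS = 500   # required movement time (ms)
HALF_WINDOW_MS = 50  # accepted window: 450-550 ms


def movement_onset_index(velocity) -> int:
    """Index of the first sample whose hand speed exceeds ONSET_SPEED, or -1.

    velocity: N x 2 array of hand velocity samples (m/s).
    """
    speed = np.linalg.norm(np.asarray(velocity, dtype=float), axis=1)
    above = np.flatnonzero(speed > ONSET_SPEED)
    return int(above[0]) if above.size else -1


def timing_feedback(movement_time_ms: float) -> str:
    """Map movement time (onset to target acquisition, ms) to the feedback shown."""
    if movement_time_ms > TARGET_MT_MS + HALF_WINDOW_MS:
        return "blue"     # too slow
    if movement_time_ms < TARGET_MT_MS - HALF_WINDOW_MS:
        return "red"      # too fast
    return "explode"      # on time: target explodes and a sound plays
```

Working in integer milliseconds keeps the window boundaries exact, avoiding floating-point edge cases at 450 and 550 ms.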

Experiment 1

We compared performance in three conditions: in one condition, visual information that indicated the position of the reaching movement correlated with the patterns of force perturbation during the reach. In another condition, proprioceptive information that indicated the position of the reach correlated with the pattern of forces. In a final condition, both proprioceptive and visual information correlated with the forces.

In the matched group (n=8), at the start of each reach the robot positioned the hand at one of three locations (left, center, or right; Figure 1a). These locations were evenly spaced (7 cm apart from each other) on a line about 48 cm in front of the subject and centered on the subject’s midline. If the field for a trial was CW, the robot positioned the hand at the left start location; at the right if the field was CCW; and at the center if the field was null. The cursor was similarly positioned at left (−7 cm), center (0 cm), and right (+7 cm) to match the starting hand position.

In the proprioceptive cue group (n=7) at the start of each reach the robot positioned the hand at left, center, or right depending on the field condition for that trial (same hand position-field correspondence as in the matched group). However, the cursor displayed the starting hand position always at the center. When the movement began, the velocity vector for the cursor matched hand velocity. That is, if the hand was pushed to the right, the cursor moved to the right by exactly the amount that the hand was pushed. This matching of cursor velocity and hand velocity was maintained in all conditions for all groups.

In the visual cue group (n=7), at the start of each reach the robot always positioned the hand at the middle of the workspace. However, the cursor was displayed at left, center, or right depending on the field condition for that trial (same cursor position-field correspondence as in the matched group). As in the matched group, adjacent cursor start positions were 7 cm apart (−7 cm, 0 cm, +7 cm).

Experiment 2

Results of Experiment 1 suggested to us that when there was a correlation between proprioceptively sensed movement positions and perturbations, subjects learned the task well. However, when this correlation was sensed through vision, there was a good chance of becoming aware of the patterns of perturbation. Did awareness have a significant impact on performance? To answer this question, we recruited a large number of new subjects into a flipped group (n=16) in which, at the start of each reach, the robot positioned the hand at left, center, or right according to the field in that trial. However, the cursor that indicated starting hand position was displayed opposite to the location of the hand. For example, when the hand was resting at the right starting position, the cursor was displayed to the left of the monitor midline. As before, once the movement began the cursor velocity matched hand velocity.

Experiment 3

The results of Experiment 2 demonstrated that aware subjects performed significantly better than unaware subjects. To determine the extent of this advantage, we considered a condition in which we expected nearly all subjects to become aware. We again recruited a new group of subjects (n=12) and trained them in a paradigm identical to the visual cue group described above, except that the cursor indicating hand position was 14 cm to the left or right of the center of the screen. As before, in all trials the robot placed the hand at the center start location.
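For reference, the start-position layouts of the conditions described above can be summarized as a lookup table. This is an illustrative sketch with hypothetical group keys; it assumes the flipped group used the same 7 cm offset for the cursor as for the hand, mirrored to the opposite side, which the text implies but does not state numerically.

```python
# Field -> start side, as in Experiment 1: CW -> left, CCW -> right, null -> center.
SIDE = {"CW": -1, "null": 0, "CCW": +1}

# Hypothetical group keys -> (hand offset, cursor offset) in cm per unit of side.
LAYOUT_CM = {
    "matched": (7.0, 7.0),
    "proprioceptive_cue": (7.0, 0.0),   # also used by the aware proprioceptive cue group
    "visual_cue": (0.0, 7.0),
    "flipped": (7.0, -7.0),             # assumes the cursor mirrors the 7 cm hand offset
    "visual_cue_14cm": (0.0, 14.0),
}


def start_positions(group: str, field: str) -> tuple:
    """Return (hand_x, cursor_x) start offsets from the midline, in cm."""
    hand_cm, cursor_cm = LAYOUT_CM[group]
    side = SIDE[field]
    return hand_cm * side, cursor_cm * side
```

Only the start offsets differ between groups; once a reach begins, the cursor moves with exactly the hand's velocity in every condition.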

Experiment 4

Based on the results of Experiments 1–3, we constructed a model. To directly test the predictions of our model, we considered a situation in which subjects were explicitly instructed regarding the relationship between the start position of the reach and the patterns of force. In the aware proprioceptive cue group (n=4), we informed subjects of this relationship at the beginning of the experiment and conducted the experiment under the same conditions as in the proprioceptive cue group.

General experiment procedures

To familiarize the volunteers with the task, subjects began with three sets of 84 movements in the null field. Following this, subjects performed five force field sets of 84 movements in which fields were applied as described above. During training in the field, occasional pseudo-randomly designated trials were performed without forces (i.e., catch trials); approximately one in seven trials was a catch trial. Note that subjects in the “aware proprioceptive cue” group performed 80 additional null-field movements, during which they practiced distinguishing the three start positions before the main experiment started. Also, in the aware proprioceptive cue group only, and during the null field sets only, the words “left”, “center”, or “right” were displayed at the beginning of each trial to help subjects differentiate the three start positions.
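The set structure above can be sketched as a schedule generator. This is a hypothetical reconstruction, not the sequence actually used: it assumes equal numbers of left, center, and right starts per set (28 each) and exactly 12 catch trials per field set (6 left, 6 right), which gives the stated one-in-seven rate; all names are illustrative. A fixed seed reproduces the same sequence for every subject, as the Task section requires.

```python
import random

SET_SIZE = 84        # movements per set (Methods)
N_NULL_SETS = 3      # familiarization sets in the null field
N_FIELD_SETS = 5     # force-field sets: 5 x 84 = 420 trials
CATCH_PER_SIDE = 6   # hypothetical: 12 of 84 trials (1 in 7) are catch trials

# Start location -> field applied on a non-catch trial (Experiment 1 mapping).
FIELD_AT = {"left": "CW", "center": "null", "right": "CCW"}


def make_set(rng: random.Random, with_fields: bool) -> list:
    """One set of trials; each trial records its start location, field, and catch flag."""
    starts = ["left", "center", "right"] * (SET_SIZE // 3)
    rng.shuffle(starts)
    trials = [{"start": s, "field": FIELD_AT[s] if with_fields else "null", "catch": False}
              for s in starts]
    if with_fields:
        for side in ("left", "right"):
            idx = [i for i, t in enumerate(trials) if t["start"] == side]
            for i in rng.sample(idx, CATCH_PER_SIDE):
                trials[i]["field"] = "null"   # catch trial: forces switched off
                trials[i]["catch"] = True
    return trials


def make_schedule(seed: int = 0) -> list:
    """Three null-field sets followed by five field sets, from a fixed seed."""
    rng = random.Random(seed)
    return ([make_set(rng, False) for _ in range(N_NULL_SETS)]
            + [make_set(rng, True) for _ in range(N_FIELD_SETS)])
```

Catch trials are drawn only from left and right starts, because a center start already carries the null field.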

After the experiment, subjects completed a written questionnaire (Fig. 1b). The form contained questions about self-evaluation of performance, notice of any force field patterns, use of any strategy to succeed in the task, and level of attention during the experiment. The purpose of the questionnaire was to help us categorize subjects into “aware” or “unaware” groups. All subjects who answered “yes” to the first question, “Did you notice any relationship between the force direction and movement start position?”, and correctly described the force pattern (as in the box in Fig. 2) were assigned to the “aware” group. Only one subject marked “no” to the first question and then answered “yes” to the second question, “Did you realize that when the movement starts on the right, force direction was rightward and when the movement starts on the left, force direction was leftward?” This subject was also categorized as “aware”.
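The assignment rule above can be written as a small function. This is a sketch with hypothetical argument names; it turns the single “no, then yes” case reported in the text into a general rule, and it assumes that a subject who answered “yes” to the first question but described the pattern incorrectly was classified as unaware regardless of the second answer, a case the text does not address.

```python
def classify_awareness(noticed_relationship: bool,
                       described_pattern_correctly: bool,
                       confirmed_pattern: bool) -> str:
    """Label a subject 'aware' or 'unaware' from questionnaire answers.

    noticed_relationship: "yes" to Q1 (any relationship between force direction
        and start position?)
    described_pattern_correctly: the written or drawn description matched the pattern
    confirmed_pattern: "yes" to Q2 (the explicit left/right statement of the pattern)
    """
    if noticed_relationship and described_pattern_correctly:
        return "aware"
    if not noticed_relationship and confirmed_pattern:
        return "aware"  # the single "no, then yes" case reported in the text
    return "unaware"
```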

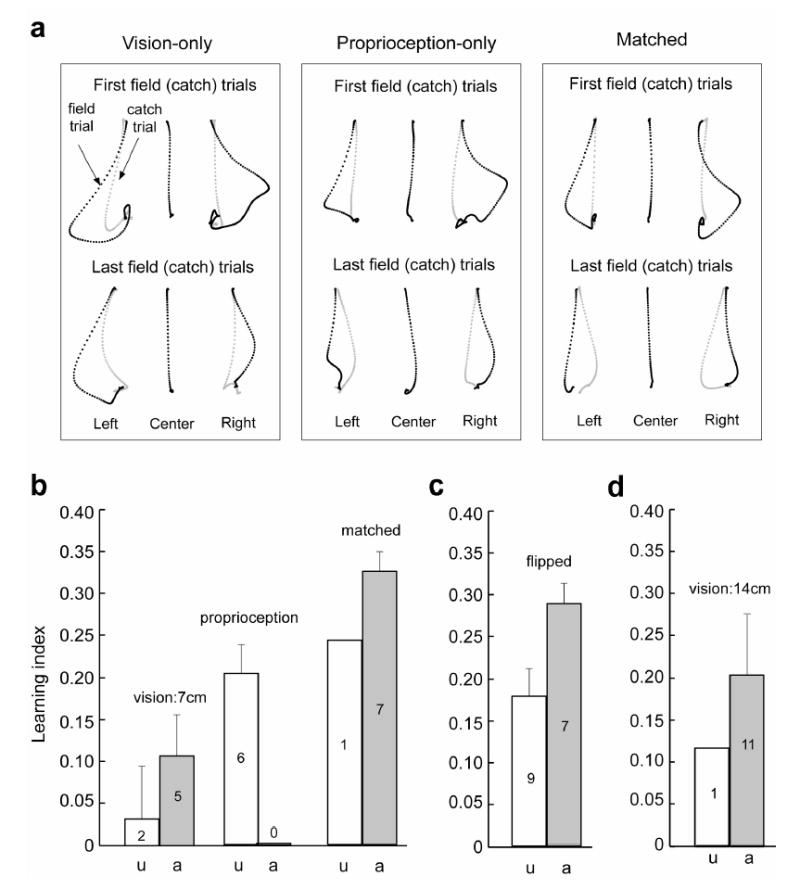

Figure 2.

Implicit learning depends primarily on proprioception but awareness improves learning. A. Hand paths of a typical subject in the vision, proprioception, and matched groups. The top panel shows the first field (black) or catch (grey) trials and the bottom panel shows the last field or catch trials for each location. B. Results of Experiment 1. Learning index of the vision, proprioception, and matched groups, with sub-groups indicating awareness classification based on the post-experiment written questionnaire. The index is an average of performance over all 5 sets of training. See supplementary materials for data for each set. The number inside each bar indicates the number of subjects. Error bars are standard errors of the mean. C. Results of Experiment 2, flipped condition. D. Results of Experiment 3, vision: 14 cm condition.

Some subjects became consciously aware of the pattern of forces. These subjects were able to describe or confirm the correct relationship between upcoming force direction and the start position of a movement, whereas unaware subjects either described the relationship incorrectly or were unable to confirm the correct relationship (Fig. 1c). When the subjects were asked to describe the relationship between the force direction and the start position, most subjects drew a diagram. The typical diagram included boxes at the three start locations and subsequent hand paths. The hand paths were curved toward the force direction, often with arrows indicating the force direction (Fig. 1c). Some subjects reported verbally, e.g., “when presenting targets on the right, the robot pushed right, when presenting targets on the left, the robot pushed left and there was no resistance in the middle.”

Performance Measures

As a measure of error, we used the displacement perpendicular to the target direction at 250 ms into the movement (perpendicular error, p.e.). Using p.e. at 250 ms, we computed a learning index (Smith and Shadmehr, 2005). When learning occurs, the trajectories of field trials become straight while the trajectories of catch trials become skewed; therefore, the error in field trials decreases and the error in catch trials increases. We quantified the learning index as:

$$\text{learning index} = \frac{\lvert \mathrm{p.e.}_{\text{catch}} \rvert}{\lvert \mathrm{p.e.}_{\text{catch}} \rvert + \lvert \mathrm{p.e.}_{\text{field}} \rvert}$$

For each set, the mean perpendicular errors of the catch trials and of the field trials at each location were used to compute the learning index for left and right movements separately, and the mean of these two indices was taken as the learning index for that set. For each subject, we averaged these indices across the five adaptation sets to obtain a single overall learning index.
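The two performance measures can be sketched in Python. The sketch assumes the commonly used form of the learning index, |mean catch p.e.| / (|mean catch p.e.| + |mean field p.e.|), which rises from 0 toward 1 as field errors shrink and catch errors grow; it also assumes that sampled trajectories are time-aligned to movement onset and interpolated linearly to 250 ms. Function names and the dictionary layout are hypothetical.

```python
import numpy as np


def perpendicular_error(t, pos, target_dir, t_eval=0.250):
    """Signed hand displacement perpendicular to the target direction at t_eval.

    t: sample times (s) from movement onset; pos: N x 2 hand positions (m)
    relative to the start position. Positive values point 90 deg counter-clockwise
    from target_dir (to the right for a reach toward the body, +x = right).
    """
    pos = np.asarray(pos, dtype=float)
    x = np.interp(t_eval, t, pos[:, 0])
    y = np.interp(t_eval, t, pos[:, 1])
    d = np.asarray(target_dir, dtype=float)
    d = d / np.linalg.norm(d)
    normal = np.array([-d[1], d[0]])
    return float(x * normal[0] + y * normal[1])


def learning_index(pe_catch, pe_field):
    """Assumed form: |mean catch p.e.| / (|mean catch p.e.| + |mean field p.e.|)."""
    c = abs(float(np.mean(pe_catch)))
    f = abs(float(np.mean(pe_field)))
    return c / (c + f) if c + f > 0 else float("nan")


def set_learning_index(pe):
    """pe maps (side, kind) -> p.e. values; side in {'left','right'}, kind in {'catch','field'}."""
    return float(np.mean([learning_index(pe[(s, "catch")], pe[(s, "field")])
                          for s in ("left", "right")]))


def subject_learning_index(sets):
    """Average the per-set indices over the adaptation sets (five in this study)."""
    return float(np.mean([set_learning_index(pe) for pe in sets]))
```

Averaging the signed errors before taking absolute values keeps the catch-trial aftereffect and the residual field-trial error on a common, sign-free scale for the left and right locations.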

Statistical Tests

To test the statistical significance of performance differences among multiple groups, we performed a one-way or two-way ANOVA followed by Tukey's Least Significant Difference (TLSD) multicomparisons using the MATLAB Statistics Toolbox. Because the number of subjects in each group was not equal, we performed an unbalanced ANOVA using the toolbox function anovan. Moreover, anovan allowed us to test only the main effects using a linear model and to perform an ANOVA even when one of the cells in the design matrix was empty. In the post-hoc comparisons, we considered p<0.05 to be statistically significant.

Results

Experiment 1: Proprioceptively sensed state of the limb dominated adaptive control

In the proprioceptive-only condition, visual cues indicated that the reaches were performed in the same location, while proprioception indicated that the reaches took place in three different locations (separated by 7 cm). The proprioceptively sensed positions correlated with force perturbations. In the visual cue condition, proprioception indicated that the reaches were performed in the same location, while vision indicated that they took place in three different locations (separated by 7 cm). The visually sensed positions correlated with force perturbations. In the matched group, both visual and proprioceptive cues indicated that the reaches were performed in three different locations (separated by 7 cm). If the brain adaptively controls reaching by forming an association between the proprioceptively sensed positions of the limb where perturbations were experienced and the motor commands that can overcome them, then performance of the matched and proprioceptive cue groups should be similar, while performance of the proprioceptive cue group should be significantly better than that of the visual cue group.

While we quantified the effect of vision and proprioception on performance, we also considered the possibility that performance depended not just on an implicit internal model, but also on an explicit component. We tried to gauge this explicit component via awareness of the relationship between the end-effector position and patterns of force. We gave each subject a written questionnaire immediately after completion of the task (details described in Methods and Figure 1b). Subjects were classified as aware if they were able to describe or confirm the correct relationship between starting positions and force directions (Figure 1c).

Figure 2a displays hand paths of a typical subject from the matched, visual cue, and proprioceptive cue groups. In the first set of field trials, hand paths were strongly disturbed by forces at the left and right locations in all three groups. After training, in the matched and proprioceptive cue groups the field trials were straighter and the catch trials were curved opposite to the direction of the fields, indicating that subjects formed internal models of the external perturbations. However, in the visual cue group, training resulted in smaller changes in both field and catch trials than in the other two groups.

We quantified performance for each subject using a learning index (see Methods). Figure 2b shows averaged performance across subjects in the three experimental conditions. [Supplementary materials provide the detailed time course of this index for each subject group.] We found that group membership significantly affected performance (one-way ANOVA, F(2,18)=13.2, p<10⁻³). The TLSD post-hoc multi-comparison revealed that the performance difference was significant between the visual cue and proprioceptive cue groups, visual cue and matched groups, and proprioceptive cue and matched groups (see Methods).

Performance in the proprioceptive cue group was significantly better than the visual cue group. Therefore, if the subjects could not see the relationship between movement positions and perturbations, but could proprioceptively sense this correlation, they learned the task significantly better than if the correlation could be sensed via vision only.

Performance of the matched group was significantly better than that of the proprioceptive cue group. Therefore, when the correlation between movement positions and perturbations could be sensed via both vision and proprioception, there were performance gains relative to when only one of these modalities was available. The difference between the matched and proprioceptive cue groups is an estimate of the influence of visual information. These results reject our hypothesis that adaptation was due solely to an association of the proprioceptively sensed state of the limb with motor errors. Rather, the results suggest that both cues affected performance, but that proprioception had a dominant role.

We next examined the performance of the aware and unaware subjects in each group and made two observations. First, a majority of subjects in the visual cue and matched groups gained awareness, while no subject in the proprioceptive cue group became aware. These differences in the probability of awareness across the three groups are significant (χ² test, χ² = 12.71, d.f. = 2, p<0.01). This hinted that awareness might have been driven by the visual cues that signaled a correlation between movement positions and perturbations. Second, aware subjects tended to outperform their unaware counterparts. Unfortunately, this tendency could not be confirmed statistically with a within-group comparison, because the visual cue and matched groups contained very few unaware subjects, whereas all subjects in the proprioceptive cue group were unaware.

Because in humans, awareness is a fundamental property of the declarative memory system, the results suggested to us that in the aware subjects we were observing the combined influence of the explicit and implicit memory systems. However, we needed to test whether awareness had a significant effect on performance.

Experiments 2 and 3: Awareness significantly improved performance

In Experiment 2, we recruited new subjects (n=16) and tested them in a new condition. In the “flipped” group, the robot positioned the hand at left, center, or right. However, when the hand was at left, the cursor was displayed at right, and when the hand was at right, the cursor was displayed at left. Therefore, both the visual and the proprioceptive sense of limb position correlated with the patterns of perturbation, but with opposite mappings. Figure 2c summarizes the results of the experiment. We found that seven of sixteen subjects became aware of the force pattern and that aware subjects performed significantly better than unaware subjects (one-sided t-test, t=2.52, df=14, p=0.01).

Interestingly, performance of the unaware flipped sub-group was very similar to that of the proprioceptive cue group, whose subjects were also unaware. We performed a two-way ANOVA across all the experimental groups so far (visual cue, proprioceptive cue, matched, and flipped groups), with experimental condition as the first factor and awareness as the second. We found that both experimental condition and awareness were significant factors (F(3,33)=9.54, p<10⁻³ and F(1,33)=8.48, p<0.01). The TLSD post-hoc multicomparisons showed that performance was statistically different between the visual cue and proprioceptive cue groups, visual cue and matched groups, and visual cue and flipped groups. Performance of the unaware flipped group was not significantly different from that of the unaware proprioceptive cue group. Therefore, when proprioceptive feedback was identical, performance of unaware subjects was similar (i.e., proprioceptive cue, matched-unaware, and flipped-unaware subgroups). Notice that the performance difference between the aware and unaware subgroups in the visual cue, matched, and flipped groups is nearly constant. To test this further, we performed a two-way ANOVA for the visual cue, matched, and flipped groups using an interaction model and found no significant interaction effect. Thus, awareness appeared to improve performance by a roughly constant amount regardless of the experimental condition.

To quantify the effect of awareness further, we recruited new subjects (n=12) and tested them in a visual cue condition. On every trial, the robot placed the hand at the center start position, but the cursor was displayed at left, center, or right (distance from center = 14 cm). As before, the pattern of force perturbations depended on where the cursor was placed on the screen. We found that 11 of 12 subjects became aware. The performance of the two sub-groups is plotted in Fig. 2d. In every group in which subjects became aware, the aware sub-group appeared to perform better than its unaware counterpart.

An additive model of the implicit and explicit components of learning

To help explain these results, we made a model based on two assumptions. 1) Suppose that the unaware (implicit) learning and aware (explicit) learning each depend on the brain’s ability to estimate limb position in each trial. The estimate of limb position depends on the values observed by vision and proprioception, and also the confidence that the brain has in those values. 2) Suppose that the performance that we measure is a sum of contributions made by the implicit (unaware) and explicit (aware) memory systems.

In trial i, the signed distance of the visual cue from the midline is represented by v(i), and the signed distance of the proprioceptive cue from the midline by p(i). If the confidence in the visual cue is denoted cv and the confidence in the proprioceptive cue is denoted cp, then the perceived state of the limb q(i) is (Ernst and Banks, 2002):

$$q(i) = c_v\,v(i) + c_p\,p(i), \qquad c_v + c_p = 1 \tag{1}$$

Earlier we had reported that performance (i.e., learning index) s depended linearly on the distance between the left and right movements (Hwang et al., 2003). Thus, we have:

$$s = k\,\bigl(q(r) - q(l)\bigr) \tag{2}$$

For example, Eqs. (1) and (2) explain that performance in the matched group was better than the proprioceptive-only group because in the matched group the visual feedback confirmed the proprioceptive feedback, thereby producing a larger perceived distance between the movements, q(r) − q(l).
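As a worked illustration, assume Eq. (1) is the normalized weighted average q(i) = cv·v(i) + cp·p(i) with cv + cp = 1, and that left and right start offsets are −7 cm and +7 cm as in Experiments 1 and 2. The perceived separation q(r) − q(l) in each condition is then:

$$
\begin{aligned}
\text{matched:} &\quad q(r)-q(l) = c_v(14) + c_p(14) = 14\ \text{cm} \\
\text{proprioceptive cue:} &\quad q(r)-q(l) = c_v(0) + c_p(14) = 14\,c_p \\
\text{visual cue:} &\quad q(r)-q(l) = c_v(14) + c_p(0) = 14\,c_v \\
\text{flipped:} &\quad q(r)-q(l) = c_v(-14) + c_p(14) = 14\,(c_p - c_v)
\end{aligned}
$$

Whenever vision carries any weight (cv > 0), cp < 1, so the matched separation exceeds the proprioceptive-only one; Eq. (2) carries that ordering directly into the learning index.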

Eqs. (1) and (2) include only the effect of separation distance between left and right movements and not the effect of awareness. Suppose that if a subject is aware of the force patterns, then the resulting explicit knowledge would contribute an amount e to the learning index. Performance of unaware and aware groups now becomes:

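$$s_{\text{unaware}} = k\,\bigl(q(r) - q(l)\bigr) = w_v\bigl(v(r) - v(l)\bigr) + w_p\bigl(p(r) - p(l)\bigr) \tag{3}$$

$$s_{\text{aware}} = w_v\bigl(v(r) - v(l)\bigr) + w_p\bigl(p(r) - p(l)\bigr) + e \tag{4}$$

Here, wv = k·cv and wp = k·cp, and e is a constant indicating the amount of performance improvement due to awareness (Fig. 3a).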
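The additive model can be evaluated directly for each condition. This Python sketch uses the individual-subject fit reported below (wv = 0.0027, wp = 0.0148, e = 0.104) and assumes signed start-position separations in centimeters, which the text does not state explicitly; group keys are hypothetical. Unaware predictions follow wv(v(r) − v(l)) + wp(p(r) − p(l)), and aware predictions add the constant e.

```python
# Signed start-position separations between right and left trials, in cm:
# (v(r) - v(l), p(r) - p(l)). Group keys are hypothetical labels; the values
# assume the +/-7 cm and +/-14 cm layouts described in the Methods.
SEPARATION_CM = {
    "visual_cue": (14.0, 0.0),
    "proprioceptive_cue": (0.0, 14.0),
    "matched": (14.0, 14.0),
    "flipped": (-14.0, 14.0),
    "visual_cue_14cm": (28.0, 0.0),
}


def predicted_learning_index(group: str, aware: bool,
                             w_v: float = 0.0027, w_p: float = 0.0148,
                             e: float = 0.104) -> float:
    """Implicit term for unaware subjects; aware subjects add the constant e."""
    dv, dp = SEPARATION_CM[group]
    implicit = w_v * dv + w_p * dp
    return implicit + e if aware else implicit


for g in SEPARATION_CM:
    print(g, round(predicted_learning_index(g, False), 3),
          round(predicted_learning_index(g, True), 3))
```

Because e enters additively, the predicted aware/unaware gap is the same in every condition, which is the property tested in Experiment 4.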

Figure 3.

Model fit of learning performance and probability of awareness. A. The model of unaware and aware learning described in Eqs. (3) and (4). B. The data (squares, representing mean ± SEM) were fit to Eqs. (3) and (4). The term e indicates the improvement of learning due to awareness. The model fit (Eqs. 3 and 4) to the group data was r² = 0.93. The model fit to the individual subjects was r² = 0.27, p<0.001, with k = 0.015, e = 0.10, cv = 0.16, and cp = 0.84. Note that the aware proprioceptive cue group (black dot) was not used to fit the model; rather, it was the model’s prediction that we tested in Experiment 4. The grey circle around the black dot is the actual data for Experiment 4. C. The model fit to the probability of awareness, where q′ is the perceived state as in Eq. (5). Parameter values: cv′ = 0.8 and cp′ = 0.2.

Eq. (4) indicates that in every group, aware subjects should perform better than unaware subjects, and that the performance improvement due to awareness should be similar in all groups. We fitted the three unknown parameters of Eqs. (3) and (4) to the means of the group data (including separate sub-groups for aware and unaware subjects) and found r² = 0.93 (Fig. 3b). Therefore, the model accounted for the group data very well. How well did the model account for individual subjects? Using the same model, we computed r² for data from individual subjects in Experiments 1–3 (Fig. 3b) and found a highly significant fit (p<0.001, r² = 0.27). The fit estimated that wv = 0.0027 ± 0.0042 (mean ± 95% confidence interval), wp = 0.0148 ± 0.0057, and e = 0.104 ± 0.10. This resulted in cv = 0.16 and cp = 0.84. Therefore, performance of unaware subjects was significantly affected by proprioceptive information, while across all groups performance was significantly affected by awareness.
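The fitting step can be sketched as an ordinary least-squares problem. This is a minimal sketch under stated assumptions: the text does not say which fitting procedure was used, so it assumes least squares without an intercept (three unknowns: wv, wp, e), a centered r², and cv = wv/(wv + wp) from the normalization cv + cp = 1. The function name is hypothetical, and the test uses synthetic data generated from the model, not the study's data.

```python
import numpy as np


def fit_additive_model(dv, dp, aware, s):
    """Least-squares fit of s = w_v*dv + w_p*dp + e*aware (no intercept).

    dv, dp: per-subject separations v(r)-v(l) and p(r)-p(l); aware: 0/1 flags;
    s: learning indices. Returns the three parameters, the implied normalized
    confidences, and a centered r^2.
    """
    X = np.column_stack([dv, dp, aware]).astype(float)
    y = np.asarray(s, dtype=float)
    (w_v, w_p, e), *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ np.array([w_v, w_p, e])
    r2 = 1.0 - float(resid @ resid) / float(np.sum((y - y.mean()) ** 2))
    c_v = w_v / (w_v + w_p)  # assumes c_v + c_p = 1, so w_v = k*c_v and w_p = k*c_p
    return {"w_v": float(w_v), "w_p": float(w_p), "e": float(e),
            "c_v": float(c_v), "c_p": float(1.0 - c_v), "r2": r2}
```

With real subject data the residuals are large (the reported individual-subject r² is 0.27); confidence intervals such as those quoted above would require an additional step, for example from the residual variance or by bootstrapping, which this sketch omits.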

Experiment 4: Providing explicit knowledge via instructions improved performance

Although our data suggest that aware subjects outperformed their unaware counterparts by a roughly constant amount, as the model fit shows, the small number of unaware subjects in every experimental condition except the flipped condition makes this point hard to prove. To test how well the model predicted performance on new data, we recruited another group of subjects. The model predicted that if subjects in the proprioceptive cue group became aware of the field pattern, they would outperform the unaware proprioceptive cue subjects by the same amount as in the other conditions. The black dot in Figure 3b indicates this prediction. Testing it required aware proprioceptive cue subjects; however, we had found earlier that subjects in the proprioceptive cue condition were unlikely to become aware. We therefore recruited four new subjects and, before the experiment began, used a diagram to inform them of the relationship between the start position of each reach and the perturbation pattern. The grey circle in Figure 3b shows the performance of these subjects. Their performance was essentially identical to the model’s prediction, supporting a nearly constant improvement due to awareness. Providing explicit knowledge via instructions significantly improved performance (one-sided t-test, t = 2.25, df = 9, p = 0.025).
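The reported tail probability can be checked from t and df alone. The Python sketch below integrates the Student t density numerically with Simpson's rule to obtain the one-sided p-value P(T > t); the numerical integration is our own choice for a self-contained example (a statistics library would normally be used), and the helper names are hypothetical.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1 + x * x / df) ** (-(df + 1) / 2)


def one_sided_p(t, df, n=10_000):
    """Upper-tail p-value P(T > t) for t >= 0, via Simpson's rule on [0, t] (n even)."""
    h = t / n
    s = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * t_pdf(i * h, df)
    # The density is symmetric about 0, so P(T > t) = 0.5 - integral from 0 to t.
    return 0.5 - s * h / 3


p = one_sided_p(2.25, 9)
```

For t = 2.25 and df = 9 this gives approximately 0.0255, in line with the reported p = 0.025.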

To summarize the effects of experimental condition and awareness, we performed a two-way ANOVA across all experimental groups, including the aware proprioceptive cue group, and found that both experimental condition and awareness were significant factors (F(4, 48) = 3.70, p < 0.01 and F(1, 48) = 5.94, p < 0.05, respectively). Post hoc multiple comparisons showed significant performance differences between the visual cue and proprioceptive cue groups, the visual cue and matched groups, the visual cue and flipped groups, and the matched and double visual cue groups.

Probability of becoming aware depended mainly on visual information

Finally, we considered how the probability of becoming aware depended on visual and proprioceptive information. We represented awareness as a binary variable a, with Pr(a) denoting the probability of becoming aware. One possibility is that this probability depends on performance: the better the performance, the higher the probability of becoming aware. In that scenario, awareness would be triggered when a subject’s performance reached a certain threshold. Our data rejected this possibility: in the proprioceptive cue group, where performance was high, Pr(a) = 0, whereas in the vision:7cm group, where performance was poor, Pr(a) = 0.71.

We next considered the idea that the probability of becoming aware depended on the available visual and proprioceptive information rather than on performance, Pr(a) = g(v, p). In that case, the expected learning index of a group of subjects should depend on both unaware learning and the probability of awareness:
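Under Eqs. (3) and (4), in which awareness adds the constant e to the learning index, this expectation can be written out as below. Here L_u(v, p) is our own shorthand for the learning index of unaware subjects given by Eq. (3); the symbol does not appear in the original.

```latex
\begin{aligned}
\mathbb{E}[L \mid v, p] &= \bigl(1 - \Pr(a)\bigr)\, L_u(v, p) + \Pr(a)\,\bigl(L_u(v, p) + e\bigr) \\
                        &= L_u(v, p) + e \, \Pr(a), \qquad \Pr(a) = g(v, p).
\end{aligned}
```

Because e is the same in every group, this form attributes any difference between group means beyond L_u entirely to differences in Pr(a).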

Figure 3c plots Pr(a) for all groups. In the vision:7cm and vision:14cm groups, the proprioceptive cues were identical, but Pr(a) increased from 0.72 to 0.92. This suggested that Pr(a) depended on the visually perceived distance between the movements. However, proprioception was also a factor: although the visual distances of the cues were identical in the flipped and matched groups, Pr(a) increased from 0.44 in the flipped group to 0.875 in the matched group. Therefore, both visual and proprioceptive information affected awareness. To estimate the relative importance of each cue, we assumed that q′ was the perceived state as in Eq. (1), except with new confidence coefficients cv′ and cp′. The probability of awareness depended on q′ via a logistic function:

| (5) |

Fitting this model to the data in Figure 3c yielded cv′ = 0.8 and cp′ = 0.2 (r² = 0.88, p < 0.05). Thus, the probability of becoming aware depended four times more strongly on visual information than on proprioceptive information.
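The structure of Eq. (5) can be illustrated with a short Python sketch. It assumes that q′ is a linear weighted sum of the visual and proprioceptive separations, using the fitted cv′ = 0.8 and cp′ = 0.2; the slope and offset of the logistic function are hypothetical placeholders, since their fitted values are not given in this section.

```python
import math

# cv' and cp' as fitted in the text; ASSUMPTION: q' combines them linearly.
CV_PRIME, CP_PRIME = 0.8, 0.2


def perceived_state(v, p, cv=CV_PRIME, cp=CP_PRIME):
    """Perceived separation q' from visual (v) and proprioceptive (p) separations (cm)."""
    return cv * v + cp * p


def prob_aware(v, p, slope=0.5, offset=-2.0):
    """Logistic link from q' to Pr(a). slope and offset are hypothetical, not fitted values."""
    q = perceived_state(v, p)
    return 1.0 / (1.0 + math.exp(-(slope * q + offset)))


# The same 7 cm separation, sensed only visually vs. only proprioceptively.
visual_only = prob_aware(7, 0)
proprio_only = prob_aware(0, 7)
```

Whatever the logistic parameters, q′ grows four times faster with visual than with proprioceptive separation (0.8/0.2 = 4), which is the sense in which awareness is four times more dependent on vision.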

Discussion

The present study examined whether learning in the force field paradigm has dissociable explicit and implicit components, and quantified the relative influence of proprioception and vision on each component. We considered a task in which reaching movements interacted with force fields that depended on the starting position of the movement. In the visual cue group, starting position varied in visual space but not in proprioceptive space. In the proprioceptive cue group, starting position varied only in proprioceptive space. In the matched group, starting position varied congruently in both spaces, and in the flipped group, it varied in both spaces but incongruently. The majority of subjects became aware of the dependence of the force field on start position when the visual cue position varied, whereas no subject became aware when only the proprioceptive cue position varied. Moreover, in each group, aware subjects outperformed their unaware counterparts by a small but significant amount. Comparing performance among the visual cue, proprioceptive cue, matched, and flipped groups after removing the awareness-dependent (explicit) component revealed that the implicit learning system relied primarily on the proprioceptive cue. Therefore, we report the new observation that in learning the dynamics of reaching, the brain relies on both implicit and explicit learning systems. Performance was dominated by the implicit learning system, which relied primarily on proprioception to form internal models of dynamics. However, performance was also significantly affected by an explicit learning system that became aware of the force patterns, and this awareness depended primarily on visual information in the task.

A role for awareness in control of reaching

In many motor skills that have been studied in the laboratory, e.g., prism adaptation, visual rotation, and serial reaction time (SRT) sequence learning, there are distinct components of performance that may be due to awareness. For example, in the SRT task, awareness of the underlying sequence can develop as a consequence of prolonged training (Stadler, 1994) or simply through a cue that signals the introduction of the sequence (Willingham et al., 2002). Patients with unilateral parietal cortex damage show no ability to learn the SRT task (Boyd and Winstein, 2001). However, their performance improves significantly if they first learn the sequence explicitly. These results not only support an anatomical distinction between regions that support explicit and implicit learning, but also suggest that explicit knowledge can help improve motor performance.

A well-studied motor learning task is the control of reaching in force fields. Subjects with severe impairment of the declarative memory system, e.g., amnesic patients, appear to have normal learning and retention in this task (Shadmehr et al., 1998). However, this does not imply that explicit knowledge plays no role in the task. In a recent study, Osu et al. (2004) demonstrated that subjects could learn to associate force perturbations with visual cues when they were provided with explicit verbal information. However, when such instructions were not provided, extensive training on a similar task did not produce any improvement in performance (Rao and Shadmehr, 2001). Therefore, it is possible that even in the force field task, explicit knowledge plays a role in performance.

Here we found that aware subjects in every group tended to perform better than their unaware counterparts, and the effect of awareness appeared to be a constant increase in performance. The small number of unaware subjects in each experimental condition except the flipped condition makes it hard to prove that the improvement was constant. However, the result of Experiment 4, in which aware subjects in the proprioceptive cue condition outperformed their unaware counterparts by about the same amount as in the other conditions, supports the idea that awareness produces a constant increase in performance. How, then, did aware subjects perform better? One might expect that aware subjects developed strategies to succeed in the task, e.g., aiming differently depending on the expected direction of perturbation. We closely analyzed kinematic features such as hand paths, speed profiles, and perpendicular velocity but could not detect an obvious marker that distinguished aware subjects (supplementary material). Indeed, in our post-experiment survey, all of our aware subjects denied that they aimed differently depending on the expected force field direction. Instead, most aware subjects reported that they were “mentally getting prepared to resist against the expected direction of perturbation”.

Effect of vision-proprioception mismatch

Our experiment imposed a discrepancy between the start position of the reach as seen and as felt proprioceptively. Importantly, once the movement began, the displacements in the two coordinate systems remained consistently aligned. Although discordant hand-cursor motion is known to impair visual tracking, such discordance typically involves a velocity mismatch rather than an offset in absolute start position. In our experiment, there was no velocity mismatch, except that horizontal hand movements were displayed on a vertical plane, a factor shared by all experimental groups.

The visual cue and proprioceptive cue groups might have performed worse than the matched group simply because of the visual-proprioceptive discordance. Under this general-impairment scenario, both the flipped group and the 14 cm visual cue group should perform worse than the 7 cm visual cue group, because the former two conditions induced a larger discrepancy and should impair tracking even more. Contrary to this prediction, subjects in the flipped and 14 cm visual cue groups performed better, suggesting that a mismatch-induced general impairment is unlikely to have had a large effect.

Relative contributions of vision and proprioception

Until very recently, many studies showed that vision dominates when vision and proprioception conflict in perceptual tasks (Ernst and Banks, 2002). However, Sober and Sabes (2003) found that the relative contributions of vision and proprioception are not fixed but vary depending on the computation being performed. Furthermore, even for the same computation, the brain weights the two modalities differently depending on context, such as the content of the visual information or the sensory modality of the target, suggesting that the brain tries to minimize errors arising from transformations between coordinate frames (Sober and Sabes, 2005). From this point of view, one reason that visual information was severely discounted by the implicit learning system in our task may be our experimental setup, which used a vertical monitor rather than a virtual-reality display or a horizontal projection. In our setup, the visual information presented on the vertical monitor had to be transformed into the horizontal plane of the hand, so the visual cue may have been further discounted to reduce errors arising from this additional transformation.
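The transformation argument can be illustrated with the standard minimum-variance rule for combining two cues, in which each cue is weighted by its inverse variance (Ernst and Banks, 2002; van Beers et al., 1999). In this Python sketch, the extra variance attributed to remapping the vertical display into the horizontal plane is a hypothetical parameter introduced here; neither it nor the example variances were measured in this study.

```python
def visual_weight(var_v, var_p, var_transform=0.0):
    """Minimum-variance weight on vision when fusing visual and proprioceptive estimates.

    var_transform is a HYPOTHETICAL extra variance that remapping the cursor from a
    vertical monitor into the horizontal plane of the hand might add to the visual estimate.
    """
    precision_v = 1.0 / (var_v + var_transform)
    precision_p = 1.0 / var_p
    return precision_v / (precision_v + precision_p)


# Illustrative variances in arbitrary units (not measured in this study).
w_direct = visual_weight(1.0, 1.0)         # equally reliable cues -> 0.5
w_remapped = visual_weight(1.0, 1.0, 3.0)  # added transformation noise -> 0.2
```

In this toy case, adding transformation noise alone lowers the weight on vision from 0.5 to 0.2 even though the raw visual and proprioceptive signals are equally precise.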

Model predictions and limitations

The awareness that we observed in our task likely relies on neural structures that are distinct from those that store implicit knowledge. In this scenario, training potentially produces two distinct internal models that contribute independently to performance. This scenario is intriguing because it can potentially explain some puzzling recent results. Earlier work found that training in a force field generalizes strongly to the trained arm in intrinsic coordinates but weakly to the opposite arm in extrinsic coordinates (Criscimagna-Hemminger et al., 2003; Malfait and Ostry, 2004). If performance is the sum of a strong implicit internal model that depends on proprioception and a weak explicit internal model that depends on vision, these patterns of generalization can be explained. Furthermore, our model predicts that if the force field is imposed gradually, so that there are few or no visual cues to predict the direction of perturbation, the probability of becoming aware will be low, resulting in little or no generalization to the other arm. This is consistent with a recently reported result (Malfait and Ostry, 2004). Finally, one does not need to actually move one’s arm in a force field to become aware of the perturbation patterns; viewing a video might suffice. Indeed, a recent report found evidence for this idea (Mattar and Gribble, 2005).

Our model makes a number of novel predictions. We predict that in amnesic patients, in whom acquisition of explicit information is impaired but learning of implicit internal models of reaching is intact (Shadmehr et al., 1998), there should be little or no transfer between arms, because this transfer depends on internal models that form in explicit memory. Willingham (2001) has argued that the system that acquires explicit information can guide motor behavior so that performance improves, but that this guidance requires attention. Thus, our model predicts that increasing the attentional load of a reaching task, e.g., via a distractor, should 1) reduce the probability of becoming aware, 2) reduce performance with the trained arm only minimally, but 3) substantially affect the pattern of generalization to the untrained arm.
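These predictions follow from a simple additive reading of the model. The Python sketch below makes that reading explicit under assumptions stated in the code: the trained arm benefits from both the implicit component and the explicit component e·Pr(a), whereas only the explicit component transfers to the untrained arm. The function name and all numerical values are hypothetical.

```python
def predicted_performance(implicit, e, pr_aware):
    """Expected learning index on the trained and untrained arm (hypothetical model).

    implicit: contribution of the proprioceptive (intrinsic) internal model.
    e:        constant gain from explicit knowledge in an aware subject.
    pr_aware: probability of becoming aware.
    ASSUMPTION: only the explicit, visually based (extrinsic) component transfers
    to the untrained arm; the implicit component does not.
    """
    explicit = e * pr_aware
    return implicit + explicit, explicit


# Hypothetical values: an attentional load lowers Pr(a) from 0.9 to 0.3.
trained_base, untrained_base = predicted_performance(0.4, 0.1, 0.9)
trained_load, untrained_load = predicted_performance(0.4, 0.1, 0.3)
# A gradually imposed field offers few visual cues, so Pr(a) is near zero.
_, untrained_gradual = predicted_performance(0.4, 0.1, 0.0)
```

With these numbers, lowering Pr(a) from 0.9 to 0.3 reduces the trained-arm index by about 12% but the untrained-arm index by about two thirds, and setting Pr(a) to zero, as for a gradually imposed field or, by the same logic, an amnesic patient, removes transfer entirely.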

How might awareness influence the neural representation of implicit internal models? Consider the basis function model of force field learning (Hwang et al., 2003), in which bases encode the limb state at which a perturbation was sensed. We might imagine that visually driven awareness modulates the sensitivity of the bases to changes in arm position. For a given spatial distance between two movements, increasing the sensitivity of the bases to changes in arm position is equivalent to increasing the separation distance between the two movements, thus improving learning. Attention and other cues can indeed modulate the tuning of neurons (Reynolds et al., 2000; Moran and Desimone, 1985; Musallam et al., 2004). For example, visual stimuli with low luminance contrast elicit higher activation of V4 neurons when attention is directed to the stimulus location than when it is directed away, demonstrating that attention increases sensitivity to luminance contrast (Reynolds et al., 2000). Thus, if the effect of awareness is similar to that of attention, the sensitivity of the bases to changes in perceived arm position might be enhanced, resulting in improved learning.
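The claimed equivalence between sensitivity and separation distance can be checked in a deliberately simplified setting. The Python sketch below uses one-dimensional Gaussian position tuning with a gain factor that scales sensitivity to arm position; this is a hypothetical stand-in, not the gain-field model of Hwang et al. (2003), which also encodes velocity. It computes the overlap (cosine similarity) between the population activity evoked at two start positions; in such a model, lower overlap means the opposing fields learned at the two positions interfere less.

```python
import math


def basis_activity(x, centers, sigma, gain):
    """Gaussian position tuning; gain scales sensitivity to changes in arm position."""
    return [math.exp(-((gain * (x - c)) ** 2) / (2 * sigma ** 2)) for c in centers]


def overlap(d, sigma=5.0, gain=1.0):
    """Cosine similarity of basis activity at two positions separated by d (cm)."""
    centers = [-60 + 0.05 * i for i in range(2401)]  # dense grid from -60 to 60 cm
    a = basis_activity(-d / 2, centers, sigma, gain)
    b = basis_activity(d / 2, centers, sigma, gain)
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
    return dot / norm
```

Doubling the gain at a 7 cm separation, overlap(7, gain=2.0), gives the same overlap as doubling the separation to 14 cm, overlap(14, gain=1.0); both approximate exp(-(gain·d)²/(4σ²)) = exp(-1.96) for σ = 5 cm.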

Our study is limited in its ability to answer some of the more important questions about explicit learning. First, we did not control awareness but relied on each subject’s report in the post-experiment survey, so it is unclear at which point during the experiment each subject became aware. However, when we informed subjects about the field-cue pattern at the beginning of the trials (i.e., the aware proprioceptive cue group), performance was remarkably close to the model’s prediction. This suggests that subjects in the other groups probably became aware early in training. Second, we classified each subject as either aware or unaware, but awareness might not be an all-or-none phenomenon; even among aware subjects, the degree of awareness probably varied. When we fit our model to the group data, the model explained 93% of the variance. When we used the same parameters to explain the individual data, we found a highly significant fit (p < 0.001) but could explain only 27% of the variance. Thus, the model is an excellent predictor of group performance but a far worse predictor of the performance of individual subjects. This inability to account for the variance within each subgroup probably reflects our inability to quantify awareness beyond a binary (aware/unaware) classification.
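The gap between group-level and individual-level fits can be illustrated with a small simulation. In the Python sketch below, each hypothetical subject's score equals the model prediction for the group plus between-subject variability that the model does not capture (standing in, for example, for graded awareness); all numbers are invented for illustration and are not the study's data. Averaging within groups removes most of this variability, so r² computed on group means is higher than r² computed on individual scores, even though the model is identical.

```python
import random


def r_squared(observed, predicted):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


rng = random.Random(0)
group_predictions = [0.10, 0.20, 0.30, 0.40, 0.50]  # hypothetical model outputs
n_per_group, noise_sd = 20, 0.2  # hypothetical group size and unexplained variability

individual_obs, individual_pred, group_means = [], [], []
for pred in group_predictions:
    scores = [pred + rng.gauss(0.0, noise_sd) for _ in range(n_per_group)]
    individual_obs += scores
    individual_pred += [pred] * n_per_group
    group_means.append(sum(scores) / n_per_group)

r2_individual = r_squared(individual_obs, individual_pred)
r2_group = r_squared(group_means, group_predictions)
```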

In summary, we find that adaptive control of reaching in force fields can produce both implicit and explicit knowledge, and both affect production of motor commands. The implicit knowledge strongly affects performance and appears to depend primarily on limb states that are sensed via proprioception. Visually sensed correlations between limb state and perturbations can lead to awareness, and the resulting explicit knowledge has a small but significant impact on the motor commands that control reaching.

Acknowledgments

This work was supported by grants from the NIH (NS37422, NS46033).

References

- Boyd LA, Winstein CJ. Implicit motor-sequence learning in humans following unilateral stroke: the impact of practice and explicit knowledge. Neurosci Lett. 2001;298:65–69. doi: 10.1016/s0304-3940(00)01734-1.

- Buneo CA, Jarvis MR, Batista AP, Andersen RA. Direct visuomotor transformations for reaching. Nature. 2002;416:632–636. doi: 10.1038/416632a.

- Cohen YE, Andersen RA. Reaches to sounds encoded in an eye-centered reference frame. Neuron. 2000;27:647–652. doi: 10.1016/s0896-6273(00)00073-8.

- Conditt MA, Gandolfo F, Mussa-Ivaldi FA. The motor system does not learn the dynamics of the arm by rote memorization of past experience. J Neurophysiol. 1997;78:554–560. doi: 10.1152/jn.1997.78.1.554.

- Criscimagna-Hemminger SE, Donchin O, Gazzaniga MS, Shadmehr R. Learned dynamics of reaching movements generalize from dominant to nondominant arm. J Neurophysiol. 2003;89:168–176. doi: 10.1152/jn.00622.2002.

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a.

- Hwang EJ, Donchin O, Smith MA, Shadmehr R. A gain-field encoding of limb position and velocity in the internal model of arm dynamics. PLoS Biol. 2003;1:209–220. doi: 10.1371/journal.pbio.0000025.

- Kakei S, Hoffman DS, Strick PL. Direction of action is represented in the ventral premotor cortex. Nat Neurosci. 2001;4:1020–1025. doi: 10.1038/nn726.

- Malfait N, Ostry DJ. Is interlimb transfer of force-field adaptation a cognitive response to the sudden introduction of load? J Neurosci. 2004;24:8084–8089. doi: 10.1523/JNEUROSCI.1742-04.2004.

- Malfait N, Shiller DM, Ostry DJ. Transfer of motor learning across arm configurations. J Neurosci. 2002;22:9656–9660. doi: 10.1523/JNEUROSCI.22-22-09656.2002.

- Mattar AA, Gribble PL. Motor learning by observing. Neuron. 2005;46:153–160. doi: 10.1016/j.neuron.2005.02.009.

- Moran J, Desimone R. Selective attention gates visual processing in the extrastriate cortex. Science. 1985;229:782–784. doi: 10.1126/science.4023713.

- Musallam S, Corneil BD, Greger B, Scherberger H, Andersen RA. Cognitive control signals for neural prosthetics. Science. 2004;305:258–262. doi: 10.1126/science.1097938.

- Osu R, Hirai S, Yoshioka T, Kawato M. Random presentation enables subjects to adapt to two opposing forces on the hand. Nat Neurosci. 2004;7:111–112. doi: 10.1038/nn1184.

- Rao AK, Shadmehr R. Contextual cues facilitate learning of multiple models of arm dynamics. Soc Neurosci Abstr. 2001;27:302.4.

- Reynolds JH, Pasternak T, Desimone R. Attention increases sensitivity of V4 neurons. Neuron. 2000;26:703–714. doi: 10.1016/s0896-6273(00)81206-4.

- Rossetti Y, Desmurget M, Prablanc C. Vectorial coding of movement: vision, proprioception, or both? J Neurophysiol. 1995;74:457–463. doi: 10.1152/jn.1995.74.1.457.

- Scott SH, Kalaska JF. Reaching movements with similar hand paths but different arm orientation: I. Activity of individual cells in motor cortex. J Neurophysiol. 1997;77:826–852. doi: 10.1152/jn.1997.77.2.826.

- Shadmehr R, Brandt J, Corkin S. Time dependent motor memory processes in H.M. and other amnesic subjects. J Neurophysiol. 1998;80:1590–1597. doi: 10.1152/jn.1998.80.3.1590.

- Shadmehr R, Moussavi ZMK. Spatial generalization from learning dynamics of reaching movements. J Neurosci. 2000;20:7807–7815. doi: 10.1523/JNEUROSCI.20-20-07807.2000.

- Shadmehr R, Mussa-Ivaldi FA. Adaptive representation of dynamics during learning of a motor task. J Neurosci. 1994;14:3208–3224. doi: 10.1523/JNEUROSCI.14-05-03208.1994.

- Smith MA, Shadmehr R. Intact ability to learn internal models of arm dynamics in Huntington’s disease but not cerebellar degeneration. J Neurophysiol. 2005; in press.

- Sober SJ, Sabes PN. Multisensory integration during motor planning. J Neurosci. 2003;23:6982–6992. doi: 10.1523/JNEUROSCI.23-18-06982.2003.

- Sober SJ, Sabes PN. Flexible strategies for sensory integration during motor planning. Nat Neurosci. 2005;8:490–497. doi: 10.1038/nn1427.

- Stadler MA. Explicit and implicit learning and maps of cortical motor output. Science. 1994;265:1600–1601. doi: 10.1126/science.8079176.

- van Beers RJ, Sittig AC, Denier van der Gon JJ. Integration of proprioceptive and visual position-information: an experimentally supported model. J Neurophysiol. 1999;81:1355–1364. doi: 10.1152/jn.1999.81.3.1355.

- Willingham DB. Becoming aware of motor skill. Trends Cogn Sci. 2001;5:181–182. doi: 10.1016/s1364-6613(00)01652-1.

- Willingham DB, Salidis J, Gabrieli JD. Direct comparison of neural systems mediating conscious and unconscious skill learning. J Neurophysiol. 2002;88(3):1451–1460. doi: 10.1152/jn.2002.88.3.1451.