Abstract

Objective: The paper compares the speed, validity, and applicability of two different protocols for searching the primary medical literature.

Design: A randomized trial involving medicine residents was performed.

Setting: An inpatient general medicine rotation was used.

Participants: Thirty-two internal medicine residents were block randomized into four groups of eight.

Main Outcome Measures: Success rate of each search protocol was measured by perceived search time, number of questions answered, and proportion of articles that were applicable and valid.

Results: Residents randomized to the MEDLINE-first (protocol A) group searched 120 questions, and residents randomized to the MEDLINE-last (protocol B) group searched 133 questions. Answers to clinical questions were found for 104 questions (86.7%) in protocol A and 117 questions (88%) in protocol B. In protocol A, residents rated 26 (25.2%) of the answers as obtained quickly, or “fast” (<5 minutes), as opposed to 55 (51.9%) in protocol B (P = 0.0004). A subset of questions and articles (n = 79) was reviewed by faculty, who found that both protocols identified similar numbers of answer articles that addressed the questions and were judged valid by critical appraisal criteria.

Conclusion: For resident-generated clinical questions, both protocols produced a similarly high percentage of applicable and valid articles. The MEDLINE-last search protocol was perceived to be faster. However, in the MEDLINE-last protocol, a significant portion of questions (23%) still required searching MEDLINE to find an answer.

Highlights

This randomized trial evaluated different searching methods for identifying answers to clinical questions, utilizing MEDLINE first versus pre-appraised resources first.

Searching MEDLINE was perceived by residents to take longer than pre-appraised resources.

Residents needed both MEDLINE and pre-appraised resources to answer questions generated during clinical care of patients.

Residents applied critical appraisal skills during literature searches to answer clinical questions.

Implications for practice

Medical libraries need to provide both MEDLINE and pre-appraised resources so that answers are available for the largest proportion of clinical questions.

Though pre-appraised resources were useful in answering clinical questions, access to the primary literature was still required to answer the questions; this observation may serve as an essential component of training in information skills provided to clinicians.

INTRODUCTION

The practice of evidence-based medicine (EBM) requires the judicious use of valid clinical research, combined with clinical expertise and patient values to help inform decisions for managing patient care [1]. The practice of EBM follows the steps of the evidence cycle: assessing the patient, asking a well-focused question, acquiring the evidence, appraising the evidence for internal and external validity, and applying the information to patient care. The practice of EBM thus begins with a patient encounter that generates questions about patient care. That information need is translated into a well-focused question and a search for relevant and valid information that can be applied to the decision-making process for that patient. To be most effective, the practice of EBM must occur in real-time at the point of patient care [2].

The literature reports that clinicians generate numerous questions per patient encounter, ranging from 2 questions per 3 patients to 3 questions per 10 patients. Ely recorded 1,101 questions related to patient care asked by 103 family physicians during 732 hours of observation in clinical settings [3]. Most questions (64%) were not immediately addressed because the clinician, “after voicing uncertainty…felt that a reasonable decision could be based on his or her current knowledge.” For the questions that were pursued, physicians spent less than 2 minutes seeking an answer and only 2 questions led to a formal literature search. Green reported that 64 residents asked 280 questions during 404 patient encounters [4]. Again, most questions (71%) were not pursued. The most common reasons for failing to pursue an answer were lack of time (60%) and forgetting of the question (29%). Thus the ability to formulate questions and quickly access information is crucial to the practice of EBM and managing patient care.

Hunt has suggested that the most efficient approach to answering a focused clinical question is to begin with a “prefiltered” or pre-appraised resource [5]. These resources are updated regularly, cite methodologically sound studies, focus on clinically important topics, and are easy to search. Examples of such resources are ACP Journal Club, the Cochrane Database of Systematic Reviews, and UpToDate. MEDLINE does not fall into this category because it has a broader purpose, which is to collect, organize, and disseminate the biomedical literature. The increased availability of these resources at the point of care necessitates a formal evaluation of their efficiency in providing answers to clinical questions. Because many of the skills that physicians use in everyday practice are gained in postgraduate training, this study seeks to determine the most efficient use of these electronic resources for answering clinical questions asked by residents in an internal medicine training program.

METHODS

Participants

Participants were recruited from second- and third-year residents who had completed a formal EBM curriculum during their intern year and were scheduled for an eight-week rotation on a general medicine ward between August 2001 and April 2002. The general medicine ward was chosen as the site for this study due to the central role of ward rotations in graduate medical education as well as the high volume of clinical questions that arise during the course of care directed by residents [6]. All of these residents had been exposed to at least one library training session, which covered searching MEDLINE via Ovid and the library's pre-appraised resources (ACP Journal Club, Cochrane Database of Systematic Reviews via EBM Reviews from Ovid, and UpToDate).

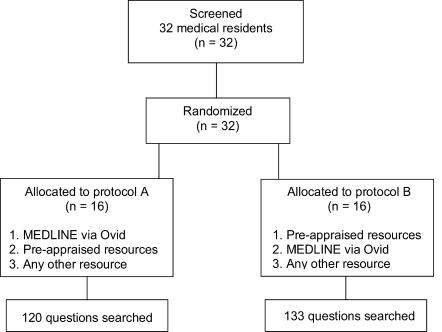

Thirty-two medical residents consented to the study and were randomized by computer-assisted random number determination for block order. This resulted in four blocks of eight residents randomized to one of two different search protocols for finding methodologically sound studies to answer clinical questions. Protocol A searched MEDLINE first, and protocol B searched the pre-appraised resources first (Figure 1). Each block represented an eight-week rotation on the general medicine ward. Residents did not receive compensation and were motivated by the expectation of practicing EBM during the care of their patients. The Duke University Medical Center Institutional Review Board approved the study proposal.
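The block-level allocation described above can be sketched as follows. This is a minimal illustration, not the procedure the investigators actually ran: the text reports only that a computer-assisted random number determined block order, so the function name `assign_blocks`, the use of a shuffle, and the seed are all assumptions. The sketch assigns each of the four rotation blocks to one protocol so that two blocks (sixteen residents) fall under each protocol.

```python
import random


def assign_blocks(n_blocks=4, seed=None):
    """Randomly assign rotation blocks to protocol A or B in equal numbers.

    Hypothetical helper: the paper does not describe the exact algorithm.
    """
    if n_blocks % 2 != 0:
        raise ValueError("n_blocks must be even for a balanced allocation")
    rng = random.Random(seed)
    # Half of the blocks get protocol A (MEDLINE-first), half protocol B.
    labels = ["A", "B"] * (n_blocks // 2)
    rng.shuffle(labels)
    # Blocks are numbered 1..n_blocks in calendar order of the rotations.
    return {block: label for block, label in enumerate(labels, start=1)}


allocation = assign_blocks(seed=2001)  # seed chosen arbitrarily for the example
residents_per_block = 8
for block, protocol in allocation.items():
    print(f"Block {block}: {residents_per_block} residents -> protocol {protocol}")
```

Because allocation happens at the block level, every resident on a given rotation follows the same protocol.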

Figure 1.

Study protocol

Each day during the eight-week rotation, the residents formed at least one clinical question during the real-time care of in-patients, attending rounds, or sign-out rounds with the chief medical resident. The questions, which were considered to be important information required in caring for patients, were transcribed onto pocket-sized cards that the residents carried with them. Residents were instructed to search for valid evidence to answer each question. Valid evidence was defined as studies meeting the critical appraisal criteria outlined in Users' Guides to the Medical Literature: A Manual for Evidence-based Clinical Practice [7]. These criteria were part of the content of the EBM curriculum during the intern year at the medical center.

The sixteen residents randomized to protocol A searched MEDLINE first, and then, if no answers were found, they were instructed to search the pre-appraised resources from the medical center library's Website. The sixteen residents randomized to protocol B searched the pre-appraised resources first, and then, if no answers were found, they were instructed to search MEDLINE. If no answers were found using either of these protocols, residents were allowed to pursue the answer in any other resources available to them. Residents categorized the questions (therapy, diagnosis, prognosis, or etiology) before they searched and were instructed to consider the primary aim of the question, even if it covered more than one category. All questions were searched according to the randomized protocol.
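The two search sequences can be written as a short sketch. The names `resource_order` and `find_answer` and the `search` callback are hypothetical, and the order in which the three pre-appraised resources are tried is an assumption, since the protocols fix only whether MEDLINE comes first or last.

```python
from typing import Callable, List, Optional, Tuple

# Order within this group is an assumption; the study did not prescribe one.
PRE_APPRAISED = [
    "ACP Journal Club",
    "Cochrane Database of Systematic Reviews",
    "UpToDate",
]
MEDLINE = ["MEDLINE"]


def resource_order(protocol: str) -> List[str]:
    """Return the resources in the order a resident would search them."""
    if protocol == "A":  # MEDLINE-first
        return MEDLINE + PRE_APPRAISED
    if protocol == "B":  # MEDLINE-last
        return PRE_APPRAISED + MEDLINE
    raise ValueError(f"unknown protocol: {protocol!r}")


def find_answer(
    question: str,
    protocol: str,
    search: Callable[[str, str], Optional[str]],
) -> Optional[Tuple[str, str]]:
    """Search each resource in protocol order and stop at the first answer.

    `search(resource, question)` is a stand-in for the resident's own search;
    it returns an article identifier or None.
    """
    for resource in resource_order(protocol):
        article = search(resource, question)
        if article is not None:
            return resource, article
    # No answer in either step: the resident may turn to any other resource.
    return None
```

Recording which resource returned the answer, as `find_answer` does, mirrors the data the residents entered for each question.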

When an article that answered the question was found, the resident printed the abstract and recorded the clinical question, type of question, and the unique identifier (UI) for the article into a Web-based database of clinical questions, the Duke CAR database [6]. The UI allowed the citation to be linked to the full text of the article for the resident to read and for the study reviewers to evaluate. The residents also recorded the resource that the article was found in and reported the perceived amount of time to find an answer: fast, less than five minutes; reasonable, less than ten minutes; or slow, more than ten minutes. This study employed Hunt's criteria for efficiency, defining an efficient search process as one providing the desired result (methodologically sound and clinically important studies) with the least amount of waste (not difficult or time consuming to search) [5]. Based on this definition for efficiency and the data from Ely that found residents searched for less than two minutes most of the time [3], it was determined that less than five, five to ten, and more than ten minutes represented an accurate assessment of physician availability for real-time clinical answers. Residents stated that once an article was found, the electronic data entry portion of the study was not cumbersome.

Resources

Pre-appraised resources, such as ACP Journal Club, apply EBM criteria to the evaluation of an article and summarize the study methodology and results in a “value-added” abstract, often adding commentary from a clinical expert. The Cochrane Database of Systematic Reviews takes a focused clinical question, conducts a thorough review of the literature, and then draws a conclusion based on the results of included validated studies. UpToDate was included as a pre-appraised resource, as it met most of the criteria—is updated regularly, covers topics that are clinically relevant, and is easy to search—and because it was used heavily by the residents.

MEDLINE is the US National Library of Medicine's premier bibliographic database that contains more than sixteen million references to journal articles in life sciences with a concentration on biomedicine. It is an indexed database, relying on a hierarchical vocabulary structure to identify and retrieve relevant citations. MEDLINE provides access to the published biomedical literature but makes no attempt to critically appraise the articles cited in its database. All participants in this study searched MEDLINE using the Ovid platform, which was easily accessible from the medical center library's Website.

Data review

Faculty reviewers were recruited from the department of medicine, which included internal medicine and the subspecialties of cardiology, gastroenterology, geriatrics, infectious disease, and oncology. All faculty had previous formal training in teaching EBM either from McMaster University's How to Teach Evidence-based Clinical Practice workshop or from a similar course at Duke. Ten faculty members, blinded to the study protocol, were selected to review a subset of the therapy questions and corresponding articles identified as answering the questions. Therapy questions were chosen because they are consistently reported as the most common type of questions [6, 8]. Each faculty member reviewed between fifteen and twenty articles.

The articles were first assessed by two reviewers, who determined if the article addressed the specific clinical question. To do this, the reviewers took the clinical question; framed it in the format of identifying the patient or problem, intervention, comparison, and outcome (PICO); and then compared this to the article title and/or abstract. Applicability was demonstrated if the article matched three of the four parts of the PICO and was the appropriate study design for the type of question [9]. If the two reviewers did not agree that the article addressed the question, then a third reviewer assessed the question-to-article relationship. The second major criterion for assessment was the internal validity of the article. The reviewers applied the EBM validity criteria for therapy articles as described by Guyatt [10]. The reviewers used a standardized form to indicate if the study was randomized; if participants were all accounted for; if patients, physicians, and assessors were blinded; and if groups were similar at the start of the study. When the reviewers disagreed, a third reviewer applied the same criteria to break the tie.
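The applicability rule and the tie-breaking step can be expressed as a small sketch. It assumes that "three of the four parts" means at least three, and the names `PicoMatch`, `addresses_question`, and `final_judgment` are illustrative only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PicoMatch:
    """Whether the article matches each part of the PICO-framed question."""
    patient: bool
    intervention: bool
    comparison: bool
    outcome: bool


def addresses_question(match: PicoMatch, appropriate_design: bool,
                       threshold: int = 3) -> bool:
    """Apply the rule: enough PICO parts match AND the study design fits.

    The threshold of "at least three" is an assumption about the wording.
    """
    matched = sum([match.patient, match.intervention,
                   match.comparison, match.outcome])
    return matched >= threshold and appropriate_design


def final_judgment(first: bool, second: bool,
                   third: Optional[bool] = None) -> bool:
    """Combine two reviewers' judgments, using a third only on disagreement."""
    if first == second:
        return first
    if third is None:
        raise ValueError("reviewers disagree; a third review is required")
    return third
```

For example, an article that matches patient, intervention, and outcome but not comparison, and that uses an appropriate design, would count as addressing the question under this reading.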

Statistical analysis

Statistical analysis was performed on the number of responses in each protocol in reference to what the perceived time to find an answer was, whether or not the article addressed the question, and whether or not the answer article met the validity criteria. For each analysis, a chi-squared test was used to determine statistical significance between the two protocols. The chi-squared test was used in this study as none of the expected counts was less than five.
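As an illustration of this kind of test, the sketch below computes a Pearson chi-squared statistic for a 2×2 table and checks the expected-count condition. The table collapses perceived time into "fast" versus "not fast" using counts derived from the Results (26 of 103 timed answers in protocol A and 55 of 106 in protocol B, where 103 and 106 are the questions searched minus those with no time entered). The collapsing, the absence of a continuity correction, and the function name are assumptions, so the output is not intended to reproduce the reported P value.

```python
import math


def chi_squared_2x2(table):
    """Pearson chi-squared test (no continuity correction) for a 2x2 table.

    Returns (statistic, p_value). With one degree of freedom the upper-tail
    probability equals erfc(sqrt(statistic / 2)).
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    # Expected count for each cell under independence.
    expected = [[r * col / n for col in col_totals] for r in row_totals]
    if min(min(row) for row in expected) < 5:
        raise ValueError("an expected count is below 5")
    observed = [[a, b], [c, d]]
    statistic = sum(
        (observed[i][j] - expected[i][j]) ** 2 / expected[i][j]
        for i in range(2)
        for j in range(2)
    )
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Rows: protocol A, protocol B. Columns: fast (<5 minutes), not fast.
perceived_time = [[26, 77], [55, 51]]
statistic, p_value = chi_squared_2x2(perceived_time)
print(f"chi-squared = {statistic:.2f}, P = {p_value:.2g}")
```

An analysis that kept all three time categories (fast, reasonable, slow) would use a 2×3 table with two degrees of freedom and would generally give a different P value.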

RESULTS

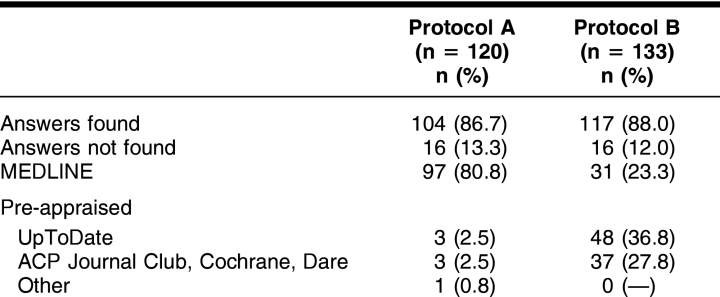

The total number of questions searched by residents in each protocol was similar: 120 questions in protocol A (MEDLINE-first) and 133 questions in protocol B (MEDLINE-last). The number of questions for which an answer was found was also similar: 104 (86.7%) answers in protocol A and 117 (88.0%) answers in protocol B (Table 1). The types and number of questions that the residents asked were also similar in both groups: therapy (A = 85/71%, B = 90/68%), diagnosis (A = 19/16%, B = 24/18%), prognosis (A = 13/11%, B = 11/8%), and other (A = 3/2%, B = 8/6%).

Table 1 Clinical questions

The resource in which the answer article was found for each protocol is presented in Table 1. In protocol A, ninety-seven (80.8%) of the answers were found in MEDLINE and six (5.0%) were found in the pre-appraised resources. In protocol B, eighty-five (64.6%) of the answers were found in the pre-appraised resources and thirty-one (23.3%) were found in MEDLINE. UpToDate was the major resource for answers in protocol B, whereas MEDLINE was the major source for answers in protocol A.

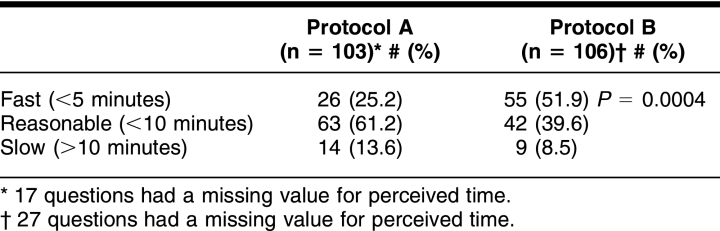

The results of the perceived time to find an answer for each protocol are presented in Table 2. Significantly more questions were answered in less than five minutes in protocol B (MEDLINE-last) than in protocol A (MEDLINE-first) (55 [51.9%] versus 26 [25.2%]; P < 0.00004). The majority of questions were felt to take a reasonable amount of time in protocol A, with sixty-three (61.2%) questions answered in less than ten minutes. In each group, residents did not enter the time to find an answer for some questions: seventeen questions in protocol A and twenty-seven questions in protocol B.

Table 2 Perceived time

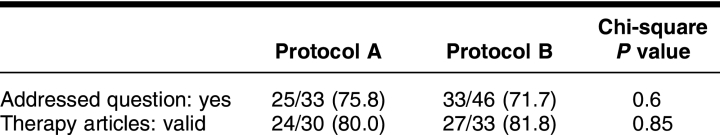

A random subset of 79 therapy answer articles was reviewed to determine if they addressed the question and if the study methodology was valid. A similar portion of answer articles, 75.8% in protocol A and 71.7% in protocol B, met the criteria for addressing the clinical question. Similar numbers of answer articles were valid in each group, including 24 (80%) in protocol A and 27 (81.8%) in protocol B (Table 3).

Table 3 Answer articles

DISCUSSION

This study reports on a randomized trial of using 2 different search protocols to answer 253 clinical questions posed by medical residents on a general medicine in-patient rotation. Protocol B (MEDLINE-last), which searched pre-appraised resources first, was perceived to be faster at finding articles to answer questions than protocol A, which searched MEDLINE first. Analysis of a subset of the answer articles by faculty reviewers did not find a difference in the proportion of articles that were applicable to the clinical question and that met validity criteria. The pre-appraised resources were perceived as faster to search; however, they left 23% of the questions unanswered, corresponding with other evaluations of pre-appraised resources [11, 12]. MEDLINE took more time to search but left only 6% of the questions unanswered.

How should these results be applied to current medical training? EBM is an important component of graduate medical education. In 2000, 37% of surveyed residency program directors reported an independent EBM curriculum, with common objectives including appraising critically (78%), searching for the evidence (53%), posing questions (44%), and applying evidence to decision making (35%) [13]. Although 97% of programs provided access to MEDLINE, only 33% provided access to pre-appraised resources such as ACP Journal Club or the Cochrane Database of Systematic Reviews. This study shows that to facilitate the practice of EBM and the answering of clinical questions, residents and clinicians need access to both pre-appraised resources and MEDLINE. Pre-appraised resources provide more timely access to answers because they are faster to search and the included studies have already been critically appraised. In contrast, MEDLINE provides answers to some questions that are not available in the pre-appraised literature but can be slower to search and requires the resident to apply critical appraisal skills to determine the study's validity.

One of the major factors associated with failing to use the medical literature to answer clinical questions is time [2, 4]. Time is related to the availability of the resource at the point of need and to the complexity of the search process to find an answer in the resource. While the outcome measure of perceived time to find an answer in this study is subjective, the perception of a quick search may be as important as the reality of a fast search for resident physicians' motivation to find answers to clinical questions. The fast time of less than five minutes used in this study was within the acceptable time limits as consistently reported by busy clinicians [2, 4]. For this study, both the pre-appraised resources and MEDLINE were available at the same location; however, the complexity of the search process was very different. The pre-appraised resources were searched by text words in the title or abstract. Searching MEDLINE via Ovid was more difficult, requiring some knowledge of Medical Subject Headings (MeSH) and Boolean logic.

A significant barrier to the widespread utilization of the MEDLINE-last protocol may well be cost. As financial resources at most institutions are limited and medical information expands, the appropriate utilization of electronic resources for teaching and clinical practice is essential. MEDLINE is available for free from the National Library of Medicine through PubMed. Personal and institutional subscriptions to the ACP Journal Club, the Cochrane Database of Systematic Reviews from Ovid, and UpToDate can be costly, ranging from over $200 per individual to over $40,000 for an institution. In addition, individual familiarity and experience with each resource varies. Thus access to these resources will be specific to each institution's financial resources and practice patterns.

Most graduate medical education programs in EBM focus on the critical appraisal process. Residents are taught how to evaluate a study based on rules of evidence that determine the validity of the study methodology. These results show that residents are learning these skills and can successfully apply the EBM criteria to identify valid therapy studies. Residents using protocol A (MEDLINE-first) identified a similar percentage of valid articles compared with residents using protocol B (MEDLINE-last), who searched the pre-appraised resources first (80.0% vs. 81.8%).

This study has some limitations. Any trial examining answers to clinical questions will depend on the generated questions and the expertise of the clinicians performing the search. In the medical residency training program at this medical center, all the residents are exposed to a curriculum including clinical question formation and basic MEDLINE searching starting in their intern year. Thus these trainees may be more fluent at using the electronic resources and searching, accounting for the high rate of success when seeking answers to clinical questions (over 80% in either strategy). While all residents attended the searching sessions as part of the EBM curriculum, this study did not assess the individual search skills of each resident. The emphasis on EBM in the training program may limit generalizability to other settings without such curricula. The potential also exists for clustering of data as single participants might have answered multiple questions, which could affect the independence of each search and answer. Another limitation is the inclusion of UpToDate as a pre-appraised resource. While there may be disagreement over the pre-appraised quality of UpToDate [14], it was important to include it in this study as many residents use this resource exclusively to answer clinical questions. Finally, the study does not dictate a specific sequence for searching the resources beyond the basic protocols of either pre-appraised resources first or MEDLINE first. As a result, recommendations for which resource to search first cannot be drawn from the data. Nonetheless, the results show that answering clinical questions requires a variety of resources and that neither the pre-appraised resources nor MEDLINE is by itself sufficient to provide answers to all the questions that might arise during the management of patients.

This study was a randomized trial to determine the most efficient use of electronic resources for answering clinical questions asked by residents in an internal medicine training program. The MEDLINE-last protocol was perceived to yield answers faster than the MEDLINE-first protocol. Both protocols were similar with respect to applicability and validity of answer articles. In the MEDLINE-last protocol, a significant portion of questions (23%) still required searching MEDLINE to find an answer. This has implications for clinicians and educators: if resident physicians depend solely on summarized and pre-appraised resources, then an important portion of questions may go unanswered.

Further research needs to be done on specific information resources and their utility in different settings. Based on these results, pre-appraised resources should be considered in addition to MEDLINE for teaching EBM practice in residency programs.

Acknowledgments

This study acknowledges the Duke University internal medicine residents for their willingness to search for clinical questions during the general medicine rotations. The UpToDate Company provided free electronic access to their site to the participants of the study for the duration of the study.

Contributor Information

Manesh R. Patel, Email: manesh.patel@duke.edu.

Connie M. Schardt, Email: connie.schardt@duke.edu.

Linda L. Sanders, Email: linda.sanders@duke.edu.

Sheri A. Keitz, Email: sheri.keitz@duke.edu.

REFERENCES

1. Straus SE. Evidence-based medicine: how to practice and teach EBM. 3rd ed. London, UK: Elsevier, 2005:1.

2. Ramos K. Real-time information-seeking behavior of residency physicians. Fam Med. 2003 Apr; 35(4):257–60.

3. Ely JW. Analysis of questions asked by family doctors regarding patient care. BMJ. 1999 Aug 7; 319(7206):358–61.

4. Green ML. Residents' medical information needs in clinic: are they being met? Am J Med. 2000 Aug 15; 109(3):218–23.

5. Hunt DL. Users' guides to the medical literature: XXI. using electronic health information resources in evidence-based practice. Evidence-Based Medicine Working Group. JAMA. 2000 Apr 12; 283(14):1875–9.

6. Crowley SD. A Web-based compendium of clinical questions and medical evidence to educate internal medicine residents. Acad Med. 2003 Mar; 78(3):270–4.

7. Guyatt G. ed. Users' guides to the medical literature: a manual for evidence-based clinical practice. Chicago, IL: AMA Press, 2002.

8. Sackett DL. Finding and applying evidence during clinical rounds: the “evidence cart.” JAMA. 1998 Oct 21; 280(15):1336–8.

9. Keitz SA. EBM curriculum at Duke. [Web document]. Durham, NC: Duke University, 2002. [cited 14 Feb 2006]. <http://www.mclibrary.duke.edu/subject/ebm/overview/ebm_keitz.html>.

10. Guyatt GH. Users' guides to the medical literature. II. how to use an article about therapy or prevention. A. are the results of the study valid? Evidence-Based Medicine Working Group. JAMA. 1993 Dec 1; 270(21):2598–601.

11. Schilling LM, Steiner JF, Lundahl K, and Anderson RJ. Residents' patient-specific clinical questions: opportunities for evidence-based learning. Acad Med. 2005 Jan; 80(1):51–6.

12. Koonce TY, Giuse NB, and Todd P. Evidence-based databases versus primary medical literature: an in-house investigation on their optimal use. J Med Libr Assoc. 2004 Oct; 92(4):407–11.

13. Green ML. Evidence-based medicine training in internal medicine residency programs: a national survey. J Gen Intern Med. 2000 Feb; 15(2):129–33.

14. Hersh W. Information retrieval: a health and biomedical perspective. [Web document]. Portland, OR: Oregon Health Sciences University, 2005. [cited 16 Mar 2006]. <http://medir.ohsu.edu/~hersh/irbook/updates/update4.html>.