Abstract

Although facial expressions of emotion are universal, individual differences create a facial expression “signature” for each person; but is there a unique family facial expression signature? Only a few family studies on the heredity of facial expressions have been performed, none of which compared the gestalt of movements in various emotional states; they compared only a few movements in one or two emotional states. No studies, to our knowledge, have compared movements of congenitally blind subjects with those of their relatives. Using two types of analyses, we show a correlation between movements of congenitally blind subjects and those of their relatives in think-concentrate, sadness, anger, disgust, joy, and surprise and provide evidence for a unique family facial expression signature. In the analysis “in-out family test,” a particular movement was compared each time across subjects. Results show that the frequency of occurrence of a movement of a congenitally blind subject in his family is significantly higher than that outside of his family in think-concentrate, sadness, and anger. In the analysis “the classification test,” in which congenitally blind subjects were classified to their families according to the gestalt of movements, results show 80% correct classification over the entire interview and 75% in anger. Analysis of the movements' frequencies in anger revealed a correlation between the movements' frequencies of congenitally blind individuals and those of their relatives. This study anticipates discovering genes that influence facial expressions, understanding their evolutionary significance, and elucidating repair mechanisms for syndromes lacking facial expression, such as autism.

Keywords: facial movements, genetics, congenitally blind, gestalt, individual differences

According to Darwin (1), many of the facial actions exhibited by humans and lower animals are innate. This idea may explain why human and nonhuman primates (2), congenitally blind people (3), individuals from isolated cultures (4, 5), and the young and old (6–8) express similar emotions with similar facial expressions.

Individual Differences in Facial Expression

Although facial expressions are universal, individual differences exist in facial displays, which may even be apparent in neonates (9). These differences are expressed in the variation of facial muscles and facial nerves (10). The variability in facial muscles can also be expressed with some muscles appearing in some individuals and not in others (11).

The individual differences in facial muscles result in, for example, the ability or inability to produce particular facial movements, including asymmetrical ones (12–14). They also result in different individual intensities, frequencies (15), and diversities (12, 16) of facial expressions. Smith and Ellsworth (17), Ortony and Turner (18), and Scherer (19) proposed that elements of facial expression configurations could be directly linked to individual appraisals.

Such individual differences have been observed in born-blind persons as well (20). Examples of different phenotypes of facial expressions are Duchenne and non-Duchenne smiles. Smiles involving both raised lip corners and contraction of the muscles encircling the eyes are called Duchenne smiles, whereas smiles lacking the muscle activity around the eyes are non-Duchenne smiles (21). The dimples that are caused by the tension created by two heads of the zygomaticus major (a muscle located at the corner of the mouth) instead of one also serve as a component in creating different phenotypes of facial expressions. Duchenne smiles and dimples may be of added value in making an expression intensive and noticeable (22).

The combination of the individual's typical movements creates an individual facial expression signature that conveys unique information about a person's identity (23) and is sufficiently strong so that individuals may be accurately recognized on the basis of their facial behavior.

The individual's facial expression signature is stable over time. Stable patterns arise within the first 6 months of life (24, 25), and they were also demonstrated in facial expressions of mothers with their first and second infants (26).

Family Twin Studies on the Heredity of Facial Expressions

Although the universality of facial expressions, which indicates a heritable basis for facial display, has been studied for ≈40 years, there have been very few family studies concentrating on the heredity of facial expressions (27, 28).

Researchers have considered whether asymmetry is related to genetic factors (29). Freedman, as reviewed by Ekman (6), compared the behavior of monozygotic and dizygotic twins over the first year of life and found that identical twin pairs showed significantly greater similarity in social smiling and in the intensity and timing of fear reactions. Katsanis et al. (30) found that monozygotic twins showed significant similarity in eye tracking performance during smooth pursuit, whereas dizygotic twins showed no significant similarity.

Carlson et al. (31) showed the genetic influence on emotional modulation by using eye blink measurement. They found that monozygotic twin pairs showed similar electromyography response amplitudes (recorded from the orbicularis oculi), when they received startling acoustic stimuli while viewing emotionally positive, negative, and neutral slides. The percent change in response amplitude between the affective and neutral conditions also showed significant similarity within monozygotic twin pairs. Overall, members of dizygotic twin pairs were not found to be significantly similar with any of the measures of the affective and neutral conditions.

However, none of the abovementioned twin studies compared the gestalt (32) of facial movements; instead, they compared only a few movements in one or two emotional states in monozygotic and dizygotic twins.

In our study, we examined whether there is a family facial expression signature, in addition to the known individual facial expression signature, by studying the gestalt of facial movements in six emotional states.

A classic way to unravel the innate patterns of facial expressions is to study them in congenitally blind individuals. About 130 years ago, Darwin (1) mentioned facial expressions in blind-from-birth individuals in the context of heritability: “The inheritance of most of our expressive actions explains the fact that those born blind display them, as I hear from the Rev. R. H. Blair, equally well with those gifted with eyesight.”

Following this account, we examined the heritable basis of facial movements by comparing the repertoires of facial movements of congenitally blind persons with those of their relatives (a list of all facial movements observed over the entire analyzed segments is presented in Table 2, which is published as supporting information on the PNAS web site). We examined this in think-concentrate, sadness, anger, disgust, joy, and surprise induced during individual interviews. We also compared the frequencies of facial movements of congenitally blind persons with those of their relatives in anger.

Results

Facial Movements of Congenitally Blind Subjects Within and Out of Family.

We compared the frequency of occurrence of a facial movement of a congenitally blind person in and outside his family by using the “in-out family test” (see Methods).

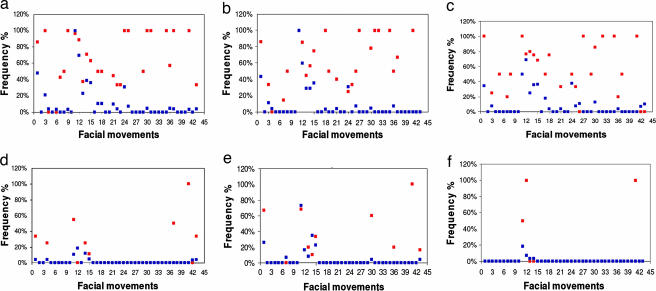

A comparison was performed over the entire interview and for each emotional state separately (Fig. 1).

Fig. 1.

The frequency of occurrence of a facial movement of a congenitally blind person within and outside of his family in each of the emotional states: concentration (a), sadness (b), anger (c), disgust (d), joy (e), and surprise (f). The x axis includes the facial movements that served for documenting facial expressions (see Table 2). The values of frequency in the y axis were obtained by using the in-out family test described in Methods. n = 51 (21 congenitally blind + 30 sighted subjects). Red squares, in family; blue squares, out of family.

Using the results of the two nonparametric statistical tests (Mann–Whitney and Kolmogorov–Smirnov, see Methods), we showed that during the entire interview, and particularly in think-concentrate, sadness, and anger, the frequency of occurrence of a facial movement of a congenitally blind individual in his family is significantly higher than that outside of his family (Table 1).

Table 1.

The frequency of occurrence of a facial movement of a congenitally blind subject in his family relative to that outside of his family in various emotions

| Emotion | MW | MW P value | KS | KS P value |
|---|---|---|---|---|
| Entire interview | 412.500 | 0.000 | 2.696 | 0.000 |
| Think/concentrate | 621.500 | 0.006 | 2.157 | 0.000 |
| Sad | 615.500 | 0.003 | 1.833 | 0.002 |
| Anger | 642.500 | 0.008 | 1.725 | 0.005 |
| Disgust | 894.500 | 0.703 | 0.755 | 0.619 |
| Joy | 885.000 | 0.638 | 0.539 | 0.933 |
| Surprise | 909.000 | 0.778 | 0.323 | 1.000 |

MW, Mann–Whitney; KS, Kolmogorov–Smirnov.

Differences were not significant in disgust, joy, and surprise.

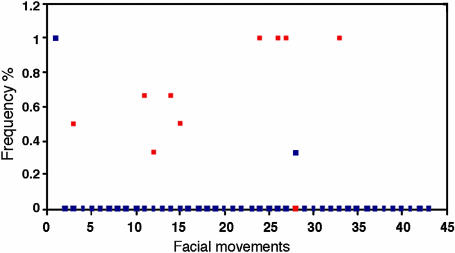

Results of the in-out family test in three families, each including two born-blind brothers, are presented in Fig. 2. These results are based on data collected during the entire interview and show that, even in families with two born-blind brothers, the frequency of occurrence of a facial movement of a congenitally blind individual within his family is significantly higher than that outside of his family.

Fig. 2.

The frequency of occurrence of a facial movement of a congenitally blind person within and outside of his family in the entire interview in three families, each including two brothers born blind (n = 6). The x axis includes the facial movements that served for documenting facial expressions (see Table 2). The values of frequency in the y axis were obtained by using the in-out family test described in Methods. Red squares, in family; blue squares, out of family.

Correlation between Facial Movements of Relatives Demonstrated by Classification Methods.

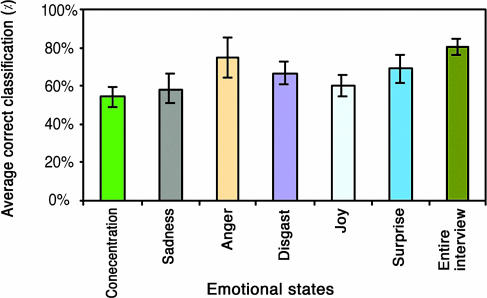

In the “classification test” (see Methods) we used the support vector machine method to classify a congenitally blind subject to his family. Classification was performed based on the gestalt of facial movements. Results show 80% correct classification over the entire interview, 75% in anger, 69% in surprise, 66% in disgust, 60% in joy, 59% in sadness, and 54% in think-concentrate (Fig. 3).

Fig. 3.

The classification of a congenitally blind person to his family according to the facial movements' repertoire in various emotional states. Movements of seven congenitally blind subjects were compared with those of 50 individuals (facial movements of a single born-blind person were compared each time to those of 50 individuals) in six emotional states (x axis). The values of average correct classification in the y axis were obtained by using the classification test described in Methods. The y axis includes the proportion of the correct classifications of the born-blind subject to the in class, including their family, out of the number of all possible partitions into two classes (in class and out of class), which is 184,756. Error bars show standard deviations.

Discussion

In this study we examined the hereditary component of facial expressions by comparing facial movements in born-blind individuals with those of their sighted relatives.

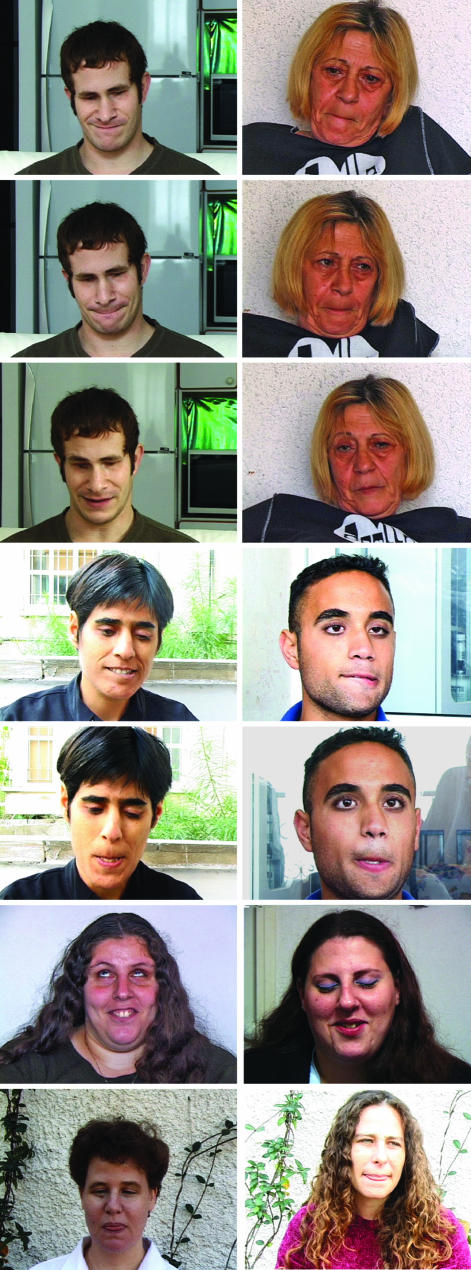

The correlation between the repertoires of born-blind subjects and those of their sighted relatives, demonstrated by both the in-out family test and the classification test, indicates a family facial expression signature. Examples of similar facial movements that form part of a subject's individual facial expression signature, observed in born-blind subjects and their sighted relatives, are shown in Fig. 4.

Fig. 4.

Similar movements in born-blind participants (Left) and their sighted relatives (Right). Movement 30 (see Table 2) in rows 1 and 2 shows typical movements of the lips while the lips touch each other (as if chewing). Movement 14 (see Table 2) in row 3 shows raising the right eyebrow only. Movement 22 (see Table 2) in row 4 shows biting the lower lip while the mouth shows left asymmetry. Movement 25 (see Table 2) in row 5 shows rolling the upper lip inside. For movement 41 (see Table 2) in row 6 a “U” shape is created in the area between the lower lip and the chin. The chin is stretched and goes forward. The edges of the mouth are embedded and the lower lip is stretched. In movement 1 (see Table 2) in row 7 the tongue protrudes and touches both lips.

The hereditary influence that appeared in think-concentrate, sadness, and anger (Table 1) may relate to the induction of the high repertoire proportions¶ of facial movements by these emotions, as found in a previous study (G.P., G.K., M.K., L.B., H.H.-O., D.K., and E.N., unpublished work).

The two methods of analyses we used differed in that the classification test compared the individuals' gestalt of facial movements, whereas the in-out family test compared a particular facial movement of a subject, which is a single component of the gestalt. The results of these tests may suggest that facial movements have differential heritability as expressed in the diverse emotions (Figs. 1 and 3). By using the in-out family test, we clearly see which of the movements expressed in a particular emotion has high heritability and which has a low heritability. The classification test, however, which compares the gestalt of facial movements of individuals, includes both types of movements: those characterized with high heritability and those with low heritability.

The greater the number of high heritability movements included in the gestalt, the higher the average rating of correct classification.

It should be noted that both analyses clearly show a high correlation between the repertoires of facial movements of congenitally blind persons and those of their relatives in anger.

The classification test also revealed a correlation between the frequencies of facial movements of congenitally blind individuals and those of their relatives in anger (correct classification of 67%). Because anger is considered a negative emotion, our results correlate with those of Baker et al. (33), which showed increased levels of correlation between genetic similarity and reactions to negative affects.

However, the data of Baker et al. were collected from sighted subjects, whereas our study demonstrates the same phenomenon in congenitally blind individuals and their relatives. Our results also support the study of Afrakhteh (44) that also included sighted subjects, comparing posed facial expressions of monozygote twins to those of dizygote twins. Afrakhteh found that posed expressions of anger revealed significant differences between monozygote and dizygote twins, whereas all of the other emotions (sadness, joy, disgust, surprise, and fear) revealed nonsignificant differences. Actually, our study showed the same phenomenon of the expression of similar facial movements of relatives in anger, but here we demonstrate it in born-blind individuals and their relatives by comparing spontaneous (not posed) expressions.

It is important to mention that the repertoires and frequencies of facial movements are not the only components of a family's facial expression signature. Additional components, such as timing (34) and intensities (22) of facial movements, may play an important role in creating the overall family signature.

To exclude the possibility that the congenitally blind subjects had learned these expressions by sensing their relatives' faces through touch we designed a questionnaire.

The answers show that congenitally blind persons do not touch their relatives' faces to adopt facial expressions during any stage of their life. In fact, they consider this “Hollywood” myth very impolite behavior. We can also exclude such a possibility here because congenitally blind and deaf phocomelian‖ children, who are incapable of sensing their relatives' faces by touching, nevertheless show the appropriate facial expressions (35).

According to Iverson and Goldin-Meadow (36), although blind speakers can learn something about the use of gesture from their own sensory experiences and from explicit instructions, the information they obtain from these sources is minimal at best. Unlike sighted children, they have no visual models available for learning, and because they cannot see their listeners, whatever feedback they receive on their own gesture production is markedly reduced.

Cole et al. (37), who studied facial control, found that although the lack of visual feedback does not preclude the ability to mask disappointment, the blind children, who appeared to mask disappointment, were less likely than sighted children to refer spontaneously to such facial control. According to Cole et al., blind children, particularly older ones, may be more aware of verbal versus facial communication.

A striking example taken from our study, which contradicts the claim that congenitally blind subjects can learn these expressions, is the family of a blind-from-birth subject whose biological mother abandoned him 2 days after birth (Fig. 4 Left, top three photos).

The birth mother and son first met when the son reached 18 years of age and only rarely for short periods thereafter. Nevertheless, they demonstrated a unique family facial expression signature.

Our results are also supported by data from a small sample, including three pairs of congenitally blind brothers, showing that the frequency of occurrence of a facial movement in one of the congenitally blind brothers in his family was higher than the frequency of occurrence of the facial movement outside of his family (Fig. 2). These special samples imply that the probability of a sighted member imitating the congenitally blind person's facial expressions is negligible.

The hereditary basis of facial expression must stem from a variety of alleles expressed in muscle anatomy, innervations, and cognition. Although we are still far from discovering the genes that influence facial expression, our study is an essential stage in the process of unraveling the genetic basis of facial expressions.

Methods

Study Population.

The study included 21 congenitally blind subjects and 30 sighted relatives (belonging to 21 families; each includes one person blind from birth). All congenitally blind subjects had no known cognitive, emotional, behavioral, or physical impairments besides blindness. All sighted subjects had unimpaired vision and no cognitive, emotional, or behavioral impairments and were matched to the congenitally blind participants on the basis of kinship.

Informed consent was obtained from all subjects.

Induction of Facial Expressions.

Sadness, anger, and joy were induced during an individual interview (38). Each subject was asked to relate an experience that had caused him to feel the specific emotion in a very intense manner. He was requested to give as much detail as possible to relive the emotions he experienced. According to Ekman (39), spontaneous behavior is natural when some part of life itself leads to the behavior studied.

Think-concentrate, described by Darwin (1) as an “intellectual emotion,” virtually unstudied from the point of view of facial expressions (40), was induced by asking the subject to solve a few puzzles of successively increasing difficulty.

Disgust was induced by telling the subject a story including disgusting details. Surprise was induced by asking the subject to solve a difficult puzzle. While concentrating on the details heard, he was suddenly asked a question (in gibberish) that had no connection with the puzzle.

To verify that subjects experienced the intended emotion, self-reported emotion data were collected during the interview.

All subjects were photographed individually at their homes (the preferred environment).

Index of Facial Movements.

We created an index of 43 movements, including all of the movements observed that were documented; nine of them (movements 8, 10, 19, 35, 36, 37, 38, 39, and 40 in Table 2) included two movements that appeared in tandem, one immediately preceding the other.

Documenting Facial Movements.

Facial movements were documented in a written description. The analyzed videos included segments in which each subject (i) solved all of the puzzles (think-concentrate); (ii) related a life experience that caused him to feel a particular single emotion (sadness/anger/joy), with the analyzed segments including the entire description related by the subject; (iii) listened to the entire story including disgusting details (disgust); and (iv) was asked to solve a difficult puzzle and suddenly, while concentrating on the details, was asked a question in gibberish (surprise).

For all analyzed segments (100%), the induced emotion matched the subject's self-reported emotion, which clearly mentioned this particular induced emotion.

Because we analyzed long duration video segments, which included 18 h of video, we decided not to use the Facial Action Coding System (41) because its use would make the coding process very long and unsuitable for our project's framework. The coding was carried out by a single coder.

The results are based on analysis of 409 min of think-concentrate, 257 min of sadness, 231 min of anger, 33 min of disgust, 111 min of joy, and 12 min of surprise.

Computational Methods.

Method 1: The in-out family test.

We examined the frequency of occurrence of a facial movement of a congenitally blind person within his family relative to the frequency of its occurrence outside of his family by using a Perl script specifically written for this study. This script enabled us to compare two values: in family and out family.

The in-family value is based on congenitally blind subjects that show a particular facial movement and is defined as the average proportion of their family members (excluding the congenitally blind members) who show this particular facial movement.

The out-family value is based on congenitally blind subjects who do not show this particular facial movement, while their family members do. The out-family value is defined as the average proportion of family members of these congenitally blind subjects who show the particular facial movement.

The in-family and out-family values were obtained by using the following calculations:

In family for a specific facial movement = A/B, with A being the number of family members (of born-blind individuals who show the particular facial movement) who themselves show this movement; this number does not include the born-blind individuals. B is the total number of family members of the born-blind individuals who show the particular facial movement.

Out family for a particular facial movement = C/D, with C being the number of family members (of born-blind individuals who do not show the particular facial movement) who show this movement, and D being the total number of family members of the born-blind individuals who do not show the particular facial movement.
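The A/B and C/D calculations above can be sketched in a few lines. This is a minimal illustration, not the Perl script used in the study: the data layout (`Family`), the function name `in_out_values`, and the toy data are hypothetical, and the code assumes that B and D count all relatives of the born-blind subjects who do or do not show the movement, pooled across families.

```python
from typing import List, Optional, Set, Tuple

# Hypothetical layout: (movements of the born-blind subject,
#                       list of movement sets, one per sighted relative).
Family = Tuple[Set[int], List[Set[int]]]


def in_out_values(families: List[Family],
                  movement: int) -> Tuple[Optional[float], Optional[float]]:
    """Return (in-family, out-family) values for one facial movement.

    Assumed reading of the definitions: A and C count relatives who show
    the movement; B and D count all relatives of born-blind subjects who
    do (B) or do not (D) show it. None is returned when a ratio is undefined.
    """
    a = b = c = d = 0
    for blind_moves, relatives in families:
        showing = sum(1 for rel in relatives if movement in rel)
        if movement in blind_moves:
            a += showing
            b += len(relatives)
        else:
            c += showing
            d += len(relatives)
    in_family = a / b if b else None
    out_family = c / d if d else None
    return in_family, out_family


# Toy data invented for illustration (not taken from the study).
toy_families: List[Family] = [
    ({14, 30}, [{14, 30}, {30}]),  # born-blind subject shows movements 14 and 30
    ({30}, [{30, 22}]),            # born-blind subject shows movement 30 only
    ({22}, [{14}, {22}]),          # born-blind subject shows movement 22 only
]

for m in (14, 30):
    print(m, in_out_values(toy_families, m))
```

Repeating the calculation for each of the indexed movements yields one in-family and one out-family value per movement, which are the two distributions compared in the next step.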

This analysis includes 21 born-blind and 30 sighted subjects. Because every born-blind subject has only one or two family members (in family) and all other subjects belong to the out of family, the out-of-family value has more potential to be higher than the in-family value. However, the in-family frequency values were significantly higher than the corresponding out-family frequency values in concentration, sadness, and anger. As a result, the discrepancy of in-family and out-family distributions for these emotional states is expected.

A comparison of the two distributions of these values (in family and out family), in each of the six emotional states, was performed. The significance of their difference was evaluated by nonparametric statistical tests: Mann–Whitney and Kolmogorov–Smirnov, both two-tailed (Table 1).
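As a rough illustration of the two test statistics, the sketch below computes the Mann–Whitney U statistic (with midranks for ties) and the two-sample Kolmogorov–Smirnov statistic D, together with the scaled form Z = D·sqrt(nm/(n + m)). It computes statistics only, not P values; the function names and the toy values are hypothetical. The source does not state which KS statistic was reported, although the KS values in Table 1 are numerically consistent with the Z form for two samples of 43 values each (for example, (25/43)·sqrt(21.5) ≈ 2.696).

```python
from bisect import bisect_right
from math import sqrt
from typing import Dict, Sequence


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> float:
    """Smaller of the two Mann-Whitney U statistics; ties receive midranks."""
    pooled = sorted(list(x) + list(y))
    ranks: Dict[float, float] = {}
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j] == pooled[i]:
            j += 1
        ranks[pooled[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j
    rank_sum_x = sum(ranks[v] for v in x)
    u_x = rank_sum_x - len(x) * (len(x) + 1) / 2
    return min(u_x, len(x) * len(y) - u_x)


def ks_statistic(x: Sequence[float], y: Sequence[float]) -> float:
    """Two-sample KS statistic D: largest gap between the empirical CDFs."""
    xs, ys = sorted(x), sorted(y)
    d = 0.0
    for v in sorted(set(xs) | set(ys)):
        fx = bisect_right(xs, v) / len(xs)
        fy = bisect_right(ys, v) / len(ys)
        d = max(d, abs(fx - fy))
    return d


def ks_z(x: Sequence[float], y: Sequence[float]) -> float:
    """Scaled KS statistic, Z = D * sqrt(n * m / (n + m))."""
    n, m = len(x), len(y)
    return ks_statistic(x, y) * sqrt(n * m / (n + m))


# Toy in-family and out-family values (invented for illustration).
in_family = [0.8, 0.6, 0.9, 0.7]
out_family = [0.2, 0.6, 0.1, 0.3]
print(mann_whitney_u(in_family, out_family))
print(ks_statistic(in_family, out_family), ks_z(in_family, out_family))
```

In practice a statistics package would normally be used to obtain the two-tailed P values; the hand-written versions here only make the definitions of the statistics explicit.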

Method 2: The classification test.

Classification of the congenitally blind subject to his family, based on his facial movements' repertoire and frequencies, was performed by using machine learning tools, including the support vector machine classifier (42), which aims at distinguishing between two classes of data. Every subject is considered an element and is represented by a sequence of values (types of movements/their frequencies).

For each congenitally blind subject, classification is made according to two classes: family members and nonfamily members.

To show interdependence between facial expressions of different family members a classification test was implemented. The support vector machine classifier works in two stages:

The training stage in which a large number of in-class and out-of-class examples are given to the system. The classifier builds a rule of distinction between the two classes based on the feature vectors of these examples. The rule might be based on a linear model, a quadratic model, etc.

The testing stage in which a new data example is given and the classifier grades its association with one of the two classes based on the rule of distinction determined in the training stage.
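The two stages can be illustrated with a very small linear classifier. The sketch below is not the implementation used in the study, whose kernel, solver, and parameters are not specified here; it swaps in a plain linear support vector machine trained by full-batch subgradient descent on the regularized hinge loss. The names `train_linear_svm` and `decision_value`, the hyperparameters, and the binary toy feature vectors (1 = movement shown) are all hypothetical.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def train_linear_svm(xs: List[Vector], ys: List[int], lam: float = 0.01,
                     epochs: int = 200, lr: float = 0.1) -> Tuple[List[float], float]:
    """Training stage: fit (w, b) by subgradient descent on
    lam/2 * |w|^2 + mean(max(0, 1 - y * (w.x + b))).

    Labels must be +1 (in class) or -1 (out of class).
    """
    dim = len(xs[0])
    n = len(xs)
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]
        grad_b = 0.0
        for x, y in zip(xs, ys):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:  # hinge term is active for this example
                for k in range(dim):
                    grad_w[k] -= y * x[k] / n
                grad_b -= y / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def decision_value(w: Sequence[float], b: float, x: Vector) -> float:
    """Testing stage: signed score; a positive value means 'in class'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


# Toy feature vectors (invented): presence/absence of four movements.
train_x = [[1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 1], [0, 0, 0, 1]]
train_y = [1, 1, -1, -1]

w, b = train_linear_svm(train_x, train_y)
print(decision_value(w, b, [1, 1, 1, 0]))  # new example resembling the in class
print(decision_value(w, b, [0, 1, 1, 1]))  # new example resembling the out class
```

A feature vector built from movement frequencies rather than presence/absence would be handled in the same way; only the entries of the vectors change.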

In the case discussed in this article, a specific family is considered in class and all other subjects in the data set are considered out of class. Testing is performed by considering a member of the family (which was not included in the in-class training set) as a test example.

However, considering the data collected, the in-class training set is very small (two or three family members), which does not allow a reliable classification result. Given that there is a sufficient number of out-of-class examples, a variant of the standard classification method, V-fold cross validation, was used (43). In this variation, several runs of support vector machine classification were performed for every test sample. In each run the in-class set (composed of the family members of the subject currently being tested) was expanded artificially. Classification was performed by training on the expanded in-class and out-of-class sets, followed by testing, using the current test sample. Seven congenitally blind subjects were tested in our study. The 21 families examined were divided into two sets (the in-class and out-of-class sets; one included 10 families and the other included 11 families). For every such partition the congenitally blind subject was classified as belonging to one of the subclasses. In this experiment every data element was represented by a feature vector that included a sequence of facial movements and their frequencies displayed by the subject in the analyzed video segments. The number of all possible partitions was 184,756, and all such partitions into two sets were considered.

Successful classification occurs when the test sample is classified as belonging to the expanded in class, including his family. A voting scheme is incorporated to count the number of successful classifications over many such runs. The number of successful classifications compared with the number of misclassifications determined the final quality of classification for the congenitally blind subject.
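The partition count and the voting scheme can be checked with a short sketch. It assumes, as one reading of the description above, that the tested subject's own family is always placed in the in class and that the remaining 20 families are split 10/10, which gives sets of 11 and 10 families and C(20, 10) = 184,756 partitions. The function names and the stand-in `classify` callable are hypothetical; in the study that role is played by the support vector machine run.

```python
from itertools import combinations
from math import comb
from typing import Callable, Iterator, List, Sequence, Tuple

N_FAMILIES = 21
# Own family fixed in class; choose 10 of the other 20 families to join it.
N_PARTITIONS = comb(N_FAMILIES - 1, 10)
print(N_PARTITIONS)  # 184756


def partitions(own: str, others: Sequence[str],
               k: int) -> Iterator[Tuple[List[str], List[str]]]:
    """Yield (in_class, out_of_class) family lists; `own` is always in class."""
    for extra in combinations(others, k):
        chosen = set(extra)
        in_class = [own] + list(extra)
        out_class = [f for f in others if f not in chosen]
        yield in_class, out_class


def correct_rate(own: str, others: Sequence[str], k: int,
                 classify: Callable[[List[str], List[str]], bool]) -> float:
    """Voting: fraction of partitions in which `classify` assigns the test
    subject to the expanded in class (True counts as a success)."""
    votes = [classify(in_c, out_c) for in_c, out_c in partitions(own, others, k)]
    return sum(votes) / len(votes)


# Toy run with 5 families and a stand-in rule (invented for illustration):
# the subject counts as correctly classified whenever family "B" is in class.
toy_rate = correct_rate("A", ["B", "C", "D", "E"], 2,
                        lambda in_c, out_c: "B" in in_c)
print(toy_rate)
```

Averaging such rates over the tested subjects, separately for each emotional state, gives values of the kind plotted in Fig. 3.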

Acknowledgments

We thank Dr. Erika Rosenberg (University of California, Davis, CA), Prof. Irenaeus Eibl-Eibesfeldt (Ludwig-Maximillian University of Munich, Munich, Germany), and Prof. Matti Mintz (Tel Aviv University, Tel Aviv, Israel) for discussions and comments; Prof. Giora Heth, Dr. Josephine Todrank, Mr. Eden Orion, Ms. Shlomit Rak-Yahalom, Ms. Lena Elnecave, and Ms. Sara Nitzan for technical support; Ms. Robin Permut and Ms. Na-ava Rubinstein for editing; and The Israel Guide Dog Center for the Blind, the Center for the Blind in Israel, Service for the Blind, Ministry of Labor and Social Affairs (Jerusalem, Israel), the Eliya Children's Nursery, and the Ancell-Teicher Research Foundation for Genetic and Molecular Evolution for helpful support. M.K. was supported by the Caesarea Rothschild Foundation.

Footnotes

The authors declare no conflict of interest.

¶The proportion of the facial movements shown by a subject in a certain emotional state relative to his facial movements' repertoire.

‖Phocomelia is a common birth defect seen with thalidomide use, characterized by defective, shortened limbs, resulting in flipper hands and feet or a complete absence of limbs.

References

- 1. Darwin C. The Expression of the Emotions in Man and Animals. 3rd Ed. Oxford: Oxford Univ Press; 1872/1998.

- 2. Preuschoft S, Van Hoof JA. Folia Primatol (Basel) 1995;65:121–137. doi: 10.1159/000156878.

- 3. Eibl-Eibesfeldt I. In: Social Communication and Movement. Von Cranach M, Vine I, editors. New York: Academic; 1973. pp. 163–194.

- 4. Ekman P, Keltner D. In: Nonverbal Communication: Where Nature Meets Culture. Segerstrale U, Molnar P, editors. Mahwah, NJ: Lawrence Erlbaum Associates; 1997. pp. 27–46.

- 5. Grammer K, Schiefenhovel W, Schleidt M, Lorenz B, Eibl-Eibesfeldt I. Ethology. 1988;77:279–299.

- 6. Ekman P. In: Darwin and Facial Expression: A Century of Research in Review. Ekman P, editor. New York: Academic; 1973. pp. 169–222.

- 7. Trevarthen C. Hum Neurobiol. 1985;4:21–32.

- 8. Izard CE, Malateste CZ. In: Handbook of Infant Development. Osofsky JD, editor. New York: Wiley; 1987. pp. 494–554.

- 9. Manstead ASR. In: Fundamentals of Nonverbal Behavior (Studies in Emotion and Social Interaction). Feldman RS, Rime B, editors. Cambridge: Cambridge Univ Press; 1991. pp. 285–328.

- 10. Goodmurphy C, Ovalle W. Clin Anat. 1999;12:1–11. doi: 10.1002/(SICI)1098-2353(1999)12:1<1::AID-CA1>3.0.CO;2-J.

- 11. Pessa J, Zadoo V, Garza P, Adrian EJ, Dewitt A, Garza J. Clin Anat. 1998;11:310–313. doi: 10.1002/(SICI)1098-2353(1998)11:5<310::AID-CA3>3.0.CO;2-T.

- 12. Liu Y, Cohn JF, Schmidt KL, Mitra S. Comput Vision Image Understanding. 2003;91:138–159.

- 13. Smith WM. J Cognit Neurosci. 1998;10:663–667. doi: 10.1162/089892998563077.

- 14. Borod JC, Koff E, Yecker S, Santschi C, Schmidt JM. Psychophysiology. 1998;36:1209–1215. doi: 10.1016/s0028-3932(97)00166-8.

- 15. Kaiser S, Wehrle T, Schmidt S. In: Fischer AH, editor. Proceedings of the Xth Conference of the International Society for Research on Emotions; Würzburg, Germany: ISRE; 1998. pp. 82–86.

- 16. Cohn JF, Schmidt K, Gross R, Ekman P. Proceedings of the International Conference on Multimodal User Interface; New York: ACM Press; 2002. pp. 491–496.

- 17. Smith CA, Ellsworth PC. J Pers Soc Psychol. 1985;48:813–838.

- 18. Ortony A, Turner TJ. Psychol Rev. 1990;97:315–331. doi: 10.1037/0033-295x.97.3.315.

- 19. Scherer KR. In: Appraisal Processes in Emotion: Theory, Methods, Research. Scherer KR, Schorr A, Johnstone T, editors. Oxford: Oxford Univ Press; 2001. pp. 92–120.

- 20. Galati D, Scherer KR, Ricci-Bitti PE. J Pers Soc Psychol. 1997;73:1363–1379. doi: 10.1037//0022-3514.73.6.1363.

- 21. Frank MG, Ekman P, Friesen WV. J Pers Soc Psychol. 1993;64:83–93. doi: 10.1037//0022-3514.64.1.83.

- 22. Schmidt KL, Cohn JF. Yrk Phys Anthropol. 2001;44:3–24. doi: 10.1002/ajpa.2001.

- 23. Kaiser S, Wehrle T, Edwards P. In: Frijda NH, editor. Proceedings of the VIIIth Conference of the International Society for Research on Emotions; Storrs, CT: ISRE; 1994. pp. 275–279.

- 24. Cohn JF, Campbell SB. In: Rochester Symposium on Developmental Psychopathology: A Developmental Approach to Affective Disorders. Cicchetti D, Toth S, editors. Hillsdale, NJ: Erlbaum; 1992. pp. 105–130.

- 25. Moore GA, Cohn JF, Campbell SB. Dev Psychol. 2001;37:706–714.

- 26. Moore GA, Cohn JF, Campbell S. Dev Psychol. 1997;33:856–860. doi: 10.1037//0012-1649.33.5.856.

- 27. Freedman DG. In: Determinants in Infant Behavior. Foss B, editor. London: Methuen; 1965. pp. 149–156.

- 28. Papadatos C, Alexiou D, Nicolopoulos D, Mikropoulos H, Hadzigeorgiou E. Arch Dis Childhood. 1974;49:927–931. doi: 10.1136/adc.49.12.927.

- 29. Thompson JR. J Am Dent Assoc. 1943;30:1859–1871.

- 30. Katsanis J, Taylor J, Iacono WG, Hammer M. Psychophysiology. 2000;37:724–730.

- 31. Carlson RS, Katsanis J, Iacono WG, McGue M. Biol Psychol. 1997;10:235–246. doi: 10.1016/s0301-0511(97)00014-8.

- 32. Homa D, Haver B, Schwartz T. Memory Cognit. 1976;4:176–185. doi: 10.3758/BF03213162.

- 33. Baker LA, Cesa IL, Gatz M, Mellins C. Psychol Aging. 1992;7:158–163.

- 34. Chon J. In: What the Face Reveals: Basic and Applied Studies of Spontaneous Expression Using the Facial Action Coding System (FACS). 2nd Ed. Ekman P, Rosenberg E, editors. Oxford: Oxford Univ Press; 2005. pp. 388–392.

- 35. Eibl-Eibesfeldt I. Human Ethology. New York: Aldine de Gruyter; 1989.

- 36. Iverson JM, Goldin-Meadow S. The Nature and Functions of Gesture in Children's Communication. San Francisco: Jossey-Bass; 1998.

- 37. Cole PM, Jenkins PA, Shott CT. Child Dev. 1989;60:683–688.

- 38. Rosenberg EL, Ekman P, Blumenthal JA. Health Psychol. 1998;17:376–380. doi: 10.1037//0278-6133.17.4.376.

- 39. Ekman P. Emotions Revealed: Recognizing Faces and Feelings to Improve Communication and Emotional Life. New York: Holt; 2003.

- 40. Rozin P, Cohen AB. Emotion. 2003;3:68–75. doi: 10.1037/1528-3542.3.1.68.

- 41. Ekman P, Friesen WV. The Facial Action Coding System: Investigator's Guide. Palo Alto, CA: Consulting Psychologists Press; 1978.

- 42. Vapnik VN. The Nature of Statistical Learning Theory. New York: Springer; 1995.

- 43. Burman P. Biometrika. 1989;76:503–514.

- 44. Afrakhteh S. Ph.D. dissertation. San Francisco, CA: International University; 2000.