Abstract

Objectives

To categorize the manner in which programmatic curricular outcomes assessment is accomplished, identify the types of assessment methodologies used, and identify the persons or groups responsible for assessment.

Methods

A self-administered questionnaire was mailed to 89 institutions throughout the United States and Puerto Rico.

Results

Sixty-eight of 89 surveys (76%) were returned. Forty-one respondents (60%) had a written and approved plan for programmatic curricular outcomes assessment, 18% assessed the entire curriculum, and 57% had partial activities in place. Various standardized and institution-specific assessment instruments were employed. Institutions differed as to whether an individual or a committee had overall responsibility for assessment.

Conclusion

To move the assessment process forward, each college and school should identify a person or group to lead the effort. Additional validated assessment instruments might aid programmatic assessment. Future studies should identify the reasons for selecting certain assessment instruments and should attempt to identify the most useful ones.

Keywords: assessment, curricular assessment, programmatic assessment

INTRODUCTION

During the past 2 decades, as pharmacy education shifted to the PharmD degree as the sole entry-level degree, a parallel effort to revitalize pharmacy education gained momentum. Consistent with efforts in higher education in general and health care education in particular, documenting students' achievement of ability-based outcomes became a major focus for colleges and schools of pharmacy.

Several publications have shaped the development and revision of PharmD curricula throughout the nation. For example, Background Paper II, published by the American Association of Colleges of Pharmacy's (AACP) Commission to Implement Change in Pharmaceutical Education, provided a list of educational outcomes needed by pharmacy graduates to deliver pharmaceutical care to patients and suggested a framework for the development of institution-specific outcomes.1 The Center for Advancement of Pharmaceutical Education (CAPE) Advisory Panel on Educational Outcomes, established by AACP, published curricular outcomes for pharmacy education, which have been revised periodically.2,3 Continuing AACP's leadership role in assessment, an AACP fellow produced a “Guide for Doctor of Pharmacy Program Assessment”4,5 and, more recently, AACP published a series of “Excellence Papers,” one of which focused on assessment.6 In that paper, Abate and colleagues provided a comprehensive review of the educational literature in general and then focused on pharmacy. The authors described higher education's shift from “input-based” measures of institutional effectiveness (eg, the number of students, faculty members, and resources) to “outcome-based” measures (eg, students' knowledge, skills, and behaviors). The authors made a series of recommendations that included sharing assessment-related activities among pharmacy educators.

Beyond AACP's initiatives, the current accreditation standards of the Accreditation Council for Pharmacy Education (ACPE) (Standards 2000) also emphasize assessment.7 ACPE provides a list of professional competencies (or outcomes) that graduates of PharmD programs should be able to meet and requires colleges and schools of pharmacy to provide data on the effectiveness of the pharmacy program as it relates to students' performance on these outcomes.

As colleges and schools of pharmacy worked to develop assessment plans, several research groups surveyed these institutions to provide a resource for educators. In 1998, Scott and colleagues mailed a survey to colleges and schools of pharmacy to determine their approaches to assessment.8 Sixty-four percent (50 of 78) of colleges and schools of pharmacy responded. The most common approach to assessment was to have students rate their ability to meet curricular outcomes. Other approaches combined several methods, including clerkship (practice experience) outcomes assessment and objective structured clinical examinations (OSCEs). The authors concluded that pharmacy educators had made progress with respect to assessment, but most institutions were only in the early stages of developing outcomes assessment strategies; thus, much work remained.

Also in 1998, Ryan and Nykamp mailed a questionnaire to colleges and schools of pharmacy to determine the number of institutions that used cumulative written examinations that covered basic sciences, pharmaceutical sciences, and clinical sciences to assess student performance.9 Of the 46 persons who responded to the survey, only 20% indicated that their college or school used this type of cumulative examination. The authors concluded that additional studies of the validity of cumulative examinations and the use of OSCEs were required.

Relying primarily on the medical education literature, Winslade provided an extensive review and evaluation of methods used by health professions other than pharmacy to assess students.10 Using these findings, the author went on to provide recommendations for assessing pharmacy students' achievement of curricular outcomes. The report recommended that psychometrically valid instruments be used to assess knowledge and skills and that OSCEs or other types of simulations be developed to assess students' ability to meet curricular outcomes.

Survey results published by Bouldin and Wilkin in 2000 included data from 55 colleges and schools of pharmacy (69% response rate) with the majority being public institutions.11 Approximately 50% of the institutions had an assessment committee, while 11% of the respondents reported having a full-time equivalent person with responsibility for assessment. At the time of the survey, 71% of respondents indicated that their faculty had approved a list of general educational abilities, but only 44% indicated that their college or school had a written outcomes assessment plan. Of those institutions with a written plan, 65% of respondents noted that the plan had been formally approved by the faculty. The researchers found that the most common providers of input into the assessment process were students, faculty members, preceptors, and alumni. The North American Pharmacist Licensure Examination (NAPLEX) was the most common instrument used in programmatic assessment. The authors made a series of suggestions to move programmatic assessment to a higher level within pharmacy education. These recommendations included having personnel at each college and school who were dedicated to assessment, ensuring that assessment plans were formally adopted, and determining the validity and reliability of instruments used for assessment.

Finally, in 2000, Boyce reported that 38% of responding colleges and schools of pharmacy had formalized assessment activities, 28% had informal activities, and 34% noted they had plans to develop formal activities.4

In addition to the survey data noted above, there are many examples of reports from individual institutions12-19 that examined assessment of an individual course/skill set or provided a description of an approach to assessment at a single institution. In its series of “Successful Practices in Pharmaceutical Education,” AACP posted on its web site a series of reports on programmatic assessment from 7 colleges and schools of pharmacy.20 The reports included individual approaches used to revise a specific course, the development of complete curricular assessment plans at individual colleges and schools of pharmacy, and the development of an assessment model that could be transferable between or among educational institutions.

Pharmacy educators are not alone in struggling to institutionalize assessment, that is, to move from viewing assessment as a necessary activity tied to an upcoming accreditation visit to making it part of the institution's culture. Peggy Maki of the American Association for Higher Education advocates that “institutional curiosity” should be the force that drives assessment efforts.21 Dr. Maki provides a guide to assessment that is divided into 3 parts. The first centers on institutions identifying outcomes and determining whether educational activities adequately address the desired outcomes. The second focuses on establishing an “assessment timetable.” The third focuses on data analysis, sharing the findings with constituents, and making informed decisions based on the assessment data. Similarly, in 1999, the Academic Affairs Committee of AACP published a report that included a model that could be used to assist colleges and schools in developing assessment plans.22 The model proposed by Hollenbeck was used by the faculty members and administrators who attended the 1999 AACP Institute.

In a recent review of the literature, Anderson and colleagues identified 48 full-length articles and notes as well as 116 abstracts related to assessment that were published in the American Journal of Pharmaceutical Education from 1990 to 2003.23 The authors noted that there was a need to examine the current status of assessment at colleges and schools of pharmacy to determine similarities, differences, and best practices.

To continue the process of documenting academic pharmacy's progress toward outcomes assessment, a study was conducted to categorize the manner in which programmatic curricular outcomes assessment is being accomplished at colleges and schools of pharmacy in the United States and Puerto Rico. Additional goals of the project were to develop a better understanding of the types of methodologies used to assess programmatic outcomes and to identify the persons, groups, or committees responsible for the various assessment strategies.

METHODS

A self-administered survey instrument was developed and reviewed for completeness, ease of completion, clarity, and overall suitability by persons at 4 different colleges and schools of pharmacy and 1 person at AACP with expertise in survey design and/or extensive knowledge of the subject area. Following modification, the questionnaire and cover letter were submitted to the institutional review boards at Long Island University and Massachusetts College of Pharmacy and Health Sciences. Both institutions granted exempt status to the project.

In fall 2003, an e-mail message was sent to the deans of the 89 colleges and schools of pharmacy in the United States and Puerto Rico requesting the name of the most appropriate person to receive the questionnaire. In late fall, a questionnaire (along with a cover letter and a postage-paid return envelope) was mailed to the person suggested by each dean. When a dean did not suggest a specific person, the materials were mailed to the dean. Duplicate packets were mailed to nonrespondents approximately 5 weeks later, and persons who did not respond to the second mailing were telephoned or sent e-mail messages. Persons who responded to these messages received a survey packet by mail, e-mail, or fax, as they requested.

Information obtained from the questionnaires was entered into a database. Descriptive statistics and bivariate analyses of the data were conducted using SPSS software (Version 13.0). All variables were categorical. Percentages were reported for descriptive analyses and are based on the total number of respondents unless otherwise noted. Fisher's exact tests were used for the bivariate analyses. Significance was set at P < 0.05.
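To make the bivariate analysis concrete, the following sketch implements a two-sided Fisher's exact test for a 2 × 2 table using only the Python standard library. It is an illustration, not the authors' procedure: the study's analyses were run in SPSS. The sketch assumes the common definition of the two-sided P value (used, for example, by SciPy's `fisher_exact`): the total probability of every table with the observed row and column totals that is no more probable than the observed table. The function name `fisher_exact_two_sided` is hypothetical, and the cell counts are the subgroup counts reported in the Results.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows are groups (here: has / lacks an approved assessment plan) and
    columns are outcomes (conducts / does not conduct the assessment).
    The P value is the total probability of every table with the same row
    and column totals that is no more probable than the observed table.
    """
    row1 = a + b            # size of the first group
    col1 = a + c            # number of "yes" outcomes overall
    n = a + b + c + d       # total number of respondents

    def weight(x: int) -> int:
        # Hypergeometric count of tables whose top-left cell equals x;
        # integer weights allow exact comparison of table probabilities.
        return comb(col1, x) * comb(n - col1, row1 - x)

    lowest = max(0, row1 - (n - col1))   # smallest feasible top-left cell
    highest = min(row1, col1)            # largest feasible top-left cell
    observed = weight(a)
    # Sum the weights of all tables at least as extreme (no more probable).
    extreme = sum(w for w in map(weight, range(lowest, highest + 1)) if w <= observed)
    # Vandermonde's identity: all weights together sum to comb(n, row1).
    return extreme / comb(n, row1)


if __name__ == "__main__":
    # Counts from the Results: (plan & yes, plan & no, no plan & yes, no plan & no).
    tables = {
        "alumni surveys": (39, 2, 18, 9),
        "employer surveys": (28, 13, 7, 20),
        "summative course evaluations": (40, 1, 22, 5),
        "low-stakes end-of-year examination": (14, 27, 3, 24),
    }
    for name, cells in tables.items():
        print(f"{name}: P = {fisher_exact_two_sided(*cells):.3f}")
```

With these counts, the sketch prints P values of 0.005, 0.001, 0.033, and 0.045, matching the values reported for these comparisons. Integer weights are used so that ties between equally probable tables are compared exactly rather than through floating-point probabilities.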

RESULTS

A total of 68 surveys were returned, for a 76% response rate. There were no significant differences in findings based on whether institutions were public or private. Forty-one (60%) respondents reported that they had a written plan for programmatic curricular outcomes assessment that was approved college- or school-wide, 24 (35%) indicated that the plan was in development, and 3 (4%) stated that there was no written and approved plan. As reported throughout this section, the only significant differences found were related to whether a college or school of pharmacy had a written and approved assessment plan; that is, certain programmatic curricular assessments were more likely to be used if a formal, approved assessment plan existed.

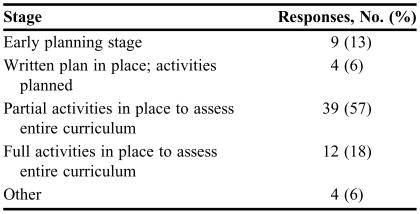

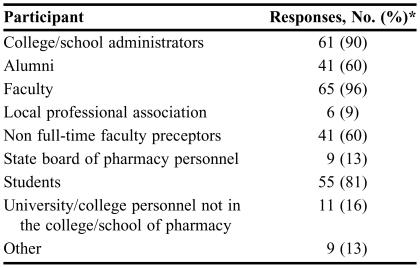

As depicted in Table 1, colleges and schools of pharmacy were at different stages of programmatic curricular assessment. Most respondents (39, 57%) indicated that partial activities were in place to assess the entire curriculum, while 12 (18%) institutions had full activities in place to assess curricular outcomes. The data in Table 2 indicate that a wide array of stakeholders were involved in some aspect of programmatic curricular assessment, the most common being faculty members (65, 96%), college and school administrators (61, 90%), and students (55, 81%). Alumni and preceptors who were not full-time faculty members were also commonly involved, whereas professional groups, such as state boards of pharmacy and professional associations, and non-pharmacy personnel from the college or school or the affiliated university were seldom involved in the programmatic assessment process.

Table 1.

Stage of Curricular Assessment at Colleges and Schools of Pharmacy (N = 68)

Table 2.

Persons Involved in Curricular Outcomes Assessment at Colleges and Schools of Pharmacy (N = 68)

*Some respondents gave multiple responses

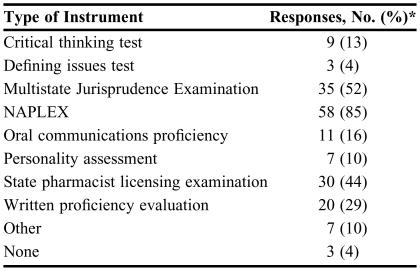

Several standardized instruments were used to assess programmatic curricular outcomes (Table 3). The most frequently reported instruments were related to professional licensure: NAPLEX (58 responses, 85%), the Multi-state Jurisprudence Examination (35, 52%), and individual state pharmacist licensing examinations (30, 44%). The most widely used non-licensure assessment tool was a written proficiency evaluation (20, 29%). Other commercially available instruments were also employed (eg, critical thinking tests and personality assessments), but none appeared to be widely used.

Table 3.

Standardized Instruments Used to Assess Programmatic Curricular Outcomes at Colleges and Schools of Pharmacy (N = 68)

*Multiple responses exist

NAPLEX = North American Pharmacist Licensure Examination

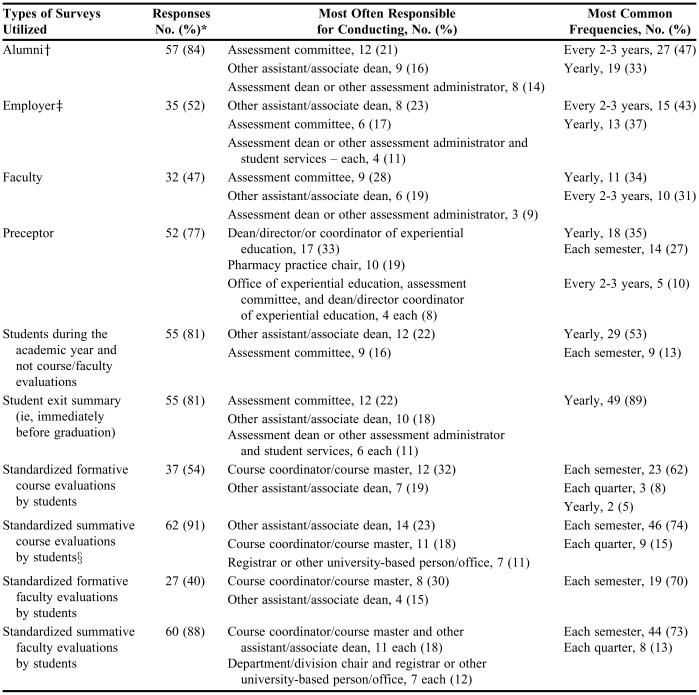

As shown in Table 4, many different types of surveys were used in the assessment process. For example, 57 (84%) respondents sought input from alumni and 35 (52%) regularly contacted employers of recent graduates. Of the 41 (60%) colleges and schools of pharmacy with a written and approved assessment plan, 39 (57% of the total respondents and 95% of those with an approved plan) conducted alumni surveys. In comparison, of the 27 (40%) institutions without a plan, 18 (26% of the total respondents and 67% of those without a plan) conducted alumni surveys. Thus, colleges and schools with assessment plans were significantly more likely to conduct alumni surveys than those without (Fisher's exact test, P = 0.005). Of the 41 colleges and schools with an assessment plan, 28 (41% of the total and 68% of institutions with a plan) conducted employer surveys, compared with 7 of the 27 institutions without a plan (10% of the total and 26% of those without a plan); this difference was also significant (Fisher's exact test, P = 0.001). Fifty-two (77%) colleges and schools of pharmacy routinely surveyed preceptors. Unlike alumni and employer surveys, however, preceptor surveys were most commonly conducted by persons responsible for experiential education rather than by those responsible for programmatic assessment in general, and their use was not significantly related to whether a college or school had a written and approved assessment plan.
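Many counts in the Results are reported against two denominators: all 68 respondents and the relevant subgroup (the 41 institutions with an approved plan or the 27 without one). The minimal Python sketch below shows that arithmetic, assuming conventional half-up rounding to whole percentages; the helper names `percent` and `describe` are hypothetical.

```python
def percent(part: int, whole: int) -> int:
    """Return part/whole as a whole-number percentage, rounded half up."""
    # Integer arithmetic sidesteps floating-point error and Python's
    # round-half-to-even behavior.
    return (200 * part + whole) // (2 * whole)


def describe(count: int, total: int, subgroup: int) -> str:
    """Report a count as a share of all respondents and of its subgroup."""
    return (f"{count} ({percent(count, total)}% of the total respondents and "
            f"{percent(count, subgroup)}% of the subgroup)")


if __name__ == "__main__":
    TOTAL = 68  # all returned surveys
    print(describe(39, TOTAL, 41))  # alumni surveys, institutions with a plan
    print(describe(18, TOTAL, 27))  # alumni surveys, institutions without a plan
```

The two calls print "39 (57% of the total respondents and 95% of the subgroup)" and "18 (26% of the total respondents and 67% of the subgroup)", the same figures given for alumni surveys above. Reporting both denominators makes clear that the subgroup percentages, not the percentages of the total, are what the Fisher's exact tests compare.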

Table 4.

Survey Assessments Conducted at Colleges and Schools of Pharmacy (N = 68)

*Multiple responses exist

†Fisher's exact test, P = 0.005 (have vs do not have an assessment plan)

‡Fisher's exact test, P = 0.001 (have vs do not have an assessment plan)

§Fisher's exact test, P = 0.033 (have vs do not have an assessment plan)

The most commonly reported student assessment survey (62 respondents, 91%) was the standardized course evaluation at the end of a course (ie, summative evaluation), followed by faculty evaluations at the end of a course (60, 88%). Of the 41 institutions with a formal assessment plan in place, 40 (59% of the total respondents and 98% of those with a plan) conducted summative course evaluations, compared with 22 of the 27 institutions without a formal and approved assessment plan (32% of the total and 82% of those without a plan). Thus, institutions with a formal and approved assessment plan were significantly more likely than those without one to conduct summative course evaluations (Fisher's exact test, P = 0.033). Course and faculty evaluations during a course (ie, formative evaluations) were reported somewhat less frequently. These student assessments were commonly the responsibility of course coordinators (ie, faculty members) rather than persons responsible for overall programmatic assessment. Exit interviews or student surveys immediately before graduation were conducted by 55 (81%) responding colleges and schools and were usually the responsibility of persons involved with overall assessment activities.

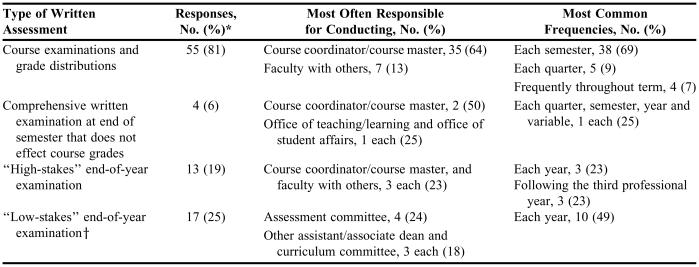

The most common written assessments and/or examinations used for programmatic curricular assessment were course examinations and a review of grade distributions (Table 5). Course coordinators and other faculty members usually conducted these assessments. Of the 41 respondents whose college or school had an assessment plan in place, 14 (21% of the total and 34% of this subgroup) conducted a so-called “low-stakes” end-of-year examination to assess whether students had attained the requisite knowledge, skills, attitudes, and behaviors. In comparison, 3 of the 27 institutions without an assessment plan (4% of the total and 11% of this subgroup) conducted a low-stakes end-of-year examination (Fisher's exact test, P = 0.045). Designed to assess curricular outcomes, these low-stakes examinations had minimal or no effect on an individual student's progression through the curriculum. Thirteen (19%) respondents used a similar end-of-year examination that did have consequences for individual students and was therefore considered a “high-stakes” examination. High-stakes examinations are usually conducted annually or immediately before students begin advanced practice experiences.

Table 5.

Written Assessments and/or Examinations Used for Programmatic Curricular Outcomes Assessment at Colleges and Schools of Pharmacy (N = 68)

*Multiple responses exist

†Fisher's exact test, P = 0.045 (have vs do not have an assessment plan)

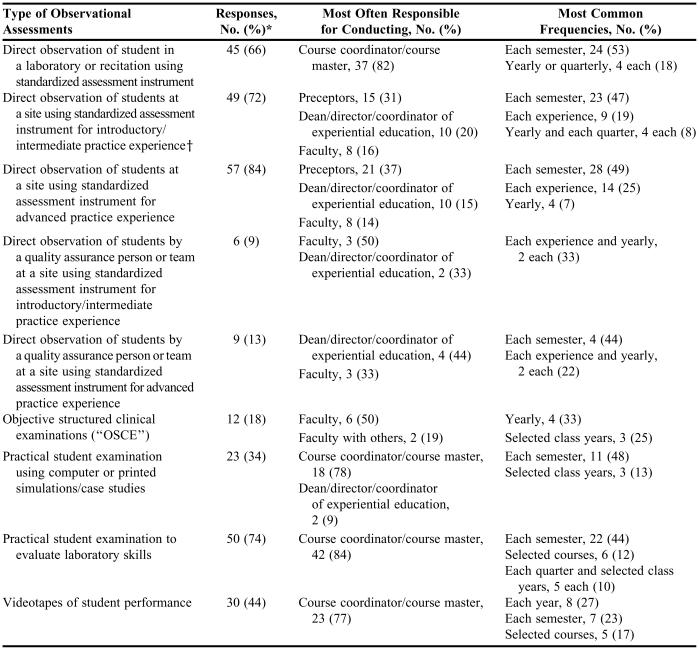

As highlighted in Table 6, colleges and schools of pharmacy used a variety of observational assessments as part of the programmatic assessment process (in addition to utilizing similar observations to assess individual courses or experiences). These assessments were conducted with standardized instruments, and included assessing students in laboratories (such as those that emphasize extemporaneous compounding of medications and the performance of physical assessment) and recitation sections, and at introductory and advanced practice sites. Many institutions (30, 44%) videotaped students to assess various skills such as the ability to counsel real or simulated patients. Twelve (18%) respondents indicated that their institutions utilized OSCEs as part of the programmatic assessment process.

Table 6.

Observational Assessments Utilized for Curricular Outcomes Assessment at Colleges and Schools of Pharmacy (N = 68)

*Multiple responses exist

†Fisher's exact test, P = 0.005 (have vs do not have an assessment plan)

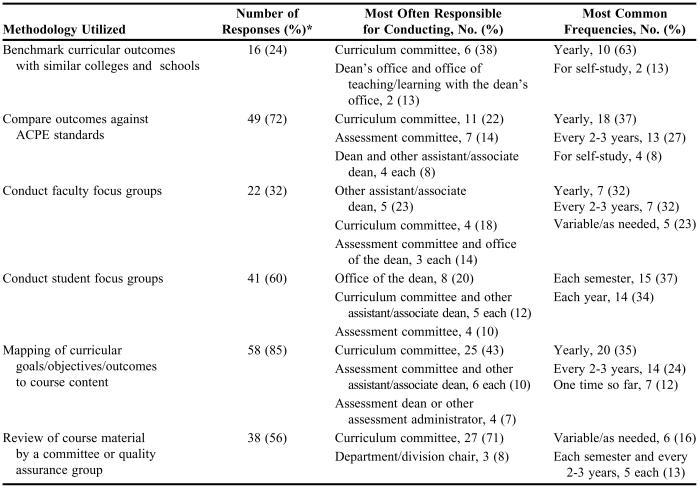

A variety of other assessment methodologies were used as well (Table 7). These included mapping of curricular goals and objectives to curricular outcomes, comparing curricular outcomes to those noted by ACPE, conducting focus groups with students, and reviewing individual course syllabi, handouts, and examinations. Less frequently reported strategies included benchmarking curricular outcomes with other colleges and schools of pharmacy and conducting faculty focus groups.

Table 7.

Miscellaneous Methodologies Used for Curricular Outcomes Assessment at Colleges and Schools of Pharmacy (N = 68)

*Multiple responses exist

Twenty-seven (40%) respondents indicated that they used student portfolios as part of the curricular assessment process. In addition, 12 (18%) noted that faculty/teaching portfolios were used as part of the process and 11 (16%) reported that they reviewed student journals.

Respondents were requested to consider all of the assessment methodologies utilized at their institution and indicate the most useful/valuable ones. The responses were highly varied and there was no consensus. Nevertheless, for the didactic curriculum, 7 (10%) respondents indicated that student surveys were the most useful, while for introductory and advanced practice experiences, preceptor observations were the most commonly cited (18% and 19%, respectively).

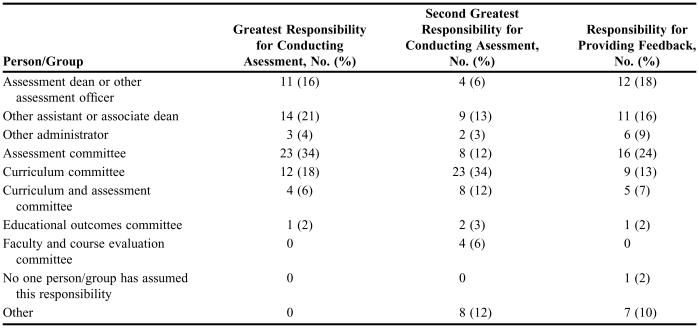

Respondents were asked which person or group had the greatest and the second greatest overall responsibility for programmatic curricular assessment (Table 8). The greatest overall responsibility most often fell to an assessment committee or to an assistant or associate dean, followed by the curriculum committee. The second greatest responsibility most often rested with the curriculum committee, followed by an assistant or associate dean. The task of summarizing the findings from the various assessment methods and providing the information to concerned parties was most commonly the responsibility of an assessment committee, followed by an assessment dean or other assessment officer/administrator (Table 8).

Table 8.

Persons/Groups with Responsibility for Conducting Programmatic Curricular Assessments, and/or Summarizing Findings and Providing Feedback at Colleges and Schools of Pharmacy (N = 68)

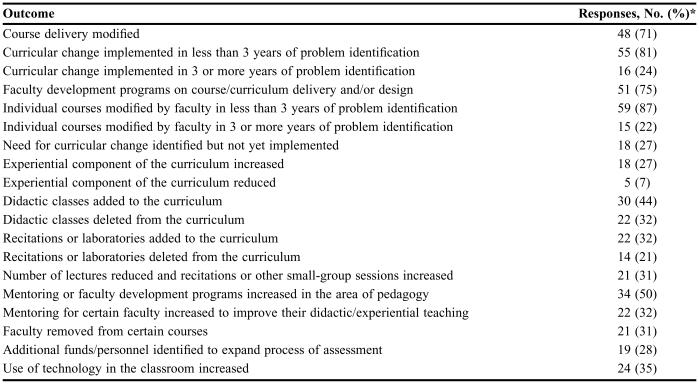

The ultimate goal of programmatic curricular assessment is to identify strengths and weaknesses of the program and implement change when warranted. Table 9 delineates the types of changes reported as a result of the assessment process. Multiple responses were common; 59 (87%) respondents noted that individual faculty members modified specific courses less than 3 years after identifying a need for course modification, and 55 (81%) noted that a more global curricular change occurred less than 3 years after a need for the change was identified. Other commonly reported changes included modification of course delivery, addition of didactic courses to the curriculum, and timely implementation of various mentoring and faculty development programs.

Table 9.

Types of Changes That Have Occurred as a Result of Curricular Outcomes Assessment at Colleges and Schools of Pharmacy (N = 68)

*Multiple responses exist

Respondents' opinions on several issues were requested. First, regarding the financial cost of programmatic curricular assessment, 7 (10%) thought the cost was high, 31 (46%) thought it was intermediate, 27 (40%) thought it was low, and 3 (4%) did not respond. Second, concerning the overall benefit to the college or school of pharmacy, 31 (46%) thought the benefits were high, 25 (37%) noted that the benefits were intermediate, 9 (13%) responded low, and 3 (4%) did not respond. Finally, 1 (2%) respondent indicated that programmatic curricular assessment was overemphasized at the college or school of pharmacy, 26 (38%) noted that it was underemphasized, 35 (52%) noted that the emphasis was correct, and 6 (9%) did not respond.

DISCUSSION

Consistent with the emphasis that AACP, ACPE, and higher education in general have placed on assessment, and on programmatic outcomes assessment in particular, the current study found more formal assessment activity at colleges and schools of pharmacy than previous studies did. For example, in 2000, Bouldin and Wilkin reported that 44% of 55 colleges and schools of pharmacy (24 institutions) surveyed had a written assessment plan and, of those, 15 colleges and schools (65%) had plans that were approved by the faculty.11 At about the same time, Boyce noted that 38% of institutions had formal assessment activities in place.4 In comparison, in the current study 60% of 68 respondents (41 institutions) had a written and approved plan for programmatic curricular assessment. This noteworthy increase in the percentage of institutions with a written and approved assessment plan within a relatively short period suggests a substantial effort by colleges and schools of pharmacy to implement an assessment process. Nevertheless, only 18% of respondents had activities in place to assess all curricular outcomes. This could reflect a substantial lag between developing an assessment plan and implementing it fully, a lack of suitable assessment instruments, and/or a lack of resources (time and money) to devote to assessment. Alternatively, institutions may have put partial activities in place, conducted assessments, and made curricular changes before assessing additional programmatic outcomes, a commendable and effective process, although it could be perceived as somewhat slow. To comply with ACPE standards, a comprehensive plan needs to be in place, and colleges and schools of pharmacy should strive to implement full assessment activities, recognizing that even with a comprehensive plan it might not be possible to assess all outcomes annually because of limited resources.

The current study revealed the involvement of a wide array of stakeholders in the assessment process. It is somewhat unclear why not all colleges and schools involved faculty members and students in this endeavor. Perhaps involving these groups is a step that some institutions have yet to take in an evolving process, because it does not appear possible to institute an effective plan without the involvement of these 2 groups. In addition, it is somewhat surprising that few colleges and schools involve persons from state boards of pharmacy and/or professional organizations in programmatic outcomes assessment. These organizations could provide an additional level of expertise, particularly in areas such as professionalism and professional/political activism.

Bouldin and Wilkin reported that among colleges and schools with formal programmatic assessment plans, 96% had student involvement, 92% had faculty involvement, 75% had preceptor involvement, 63% had alumni involvement, and 25% had participation from state boards of pharmacy.11 Although these levels of involvement are slightly higher than those found in the current study, the data from Bouldin and Wilkin were limited to institutions that had formalized assessment plans at the time. The importance of having a broad array of stakeholders involved in assessment activities was supported by Boyce4 and Anderson et al.23 For example, Anderson and colleagues noted that students, faculty members, staff members, administrators, alumni, employers, community partners, and others should be involved in the process, although their level of involvement will vary.23

Similar to the data reported by Bouldin and Wilkin,11 NAPLEX was the most widely used standardized programmatic curricular assessment instrument. The goal of NAPLEX, however, is to assess minimal competence to practice pharmacy. Colleges and schools also use other standardized assessment tools, but if these tools are to gain wider acceptance, data are needed to demonstrate that tests such as oral and written proficiency examinations are valid and correlate with pharmacy curricular outcomes. If validated standardized instruments were available from a central source (such as AACP), the assessment process might be less daunting, and it might be easier for colleges and schools to benchmark their activities against those of other institutions. Nevertheless, given the vast differences among colleges and schools of pharmacy, including the different emphases that individual institutions place on outcomes identified by CAPE and ACPE, assessment methods developed at individual institutions may lead to the most useful and comprehensive programmatic changes.

Colleges and schools continue to use survey data to assess outcomes. Key off-campus stakeholders such as alumni, employers of recent graduates, and preceptors are commonly asked to assess the ability of graduates to meet college and school goals. Interestingly, most of the surveys, such as those that gather information from alumni and employers, were conducted by college and school personnel involved in the overall assessment process. In comparison, surveys of preceptors tended to be conducted by persons involved with experiential education. Similarly, assessments of students at introductory and advanced practice sites were the responsibility of preceptors and others directly involved in experiential education. Colleges and schools of pharmacy seem to view the assessment process for experiential education somewhat differently than that for the rest of the curriculum. In the long term, this might be problematic, as programmatic assessment should be a college- or school-wide, coordinated, and all-inclusive effort. In addition, since ACPE standards require that practice experiences occur throughout the professional degree program, assessment activities should not occur in isolation, but rather as part of an integrated approach.

Respondents noted that several assessments, including course and faculty evaluations, review of grade distributions, use of high-stakes examinations and OSCEs, and direct observation of students in laboratories and recitations, were conducted by faculty members. Although utilizing individual members of the faculty in this manner is logical, it is important that the data gleaned from these efforts are shared with persons or committees responsible for overall assessment. If the information is not shared and does not become part of the overall assessment plan, colleges and schools will be conducting individual course assessments rather than programmatic curricular assessment.

Although 85% of respondents mapped curricular goals and objectives to overall curricular outcomes, far fewer compared their outcomes with those suggested by ACPE or those used by similar colleges and schools. Revised broad-stroke CAPE outcomes have recently been published3 (more detailed outcomes/competencies are not yet available), and ACPE has drafted a revision of its accreditation standards.24 The proposed ACPE standards use the CAPE outcomes as professional competencies and outcome expectations for PharmD curricula. Persons at colleges and schools of pharmacy will therefore be able to use one set of outcomes for comparative purposes, which should aid the process greatly. Given the different missions and goals of colleges and schools, large-scale benchmarking with other institutions is unlikely to take place unless it can be done through a central source such as AACP. Such centralized comparison already occurs to some extent; for example, colleges and schools can compare faculty salaries with those at selected comparable institutions, and AACP publishes data that allow faculty salary comparisons across institutions.

About 40% of respondents reported that their colleges and schools used student portfolios for outcomes assessment; the survey instrument did not ask respondents to specify whether these were print or electronic portfolios. Little has been published in the pharmacy literature concerning the use of portfolios,25,26 but their use appears to be gaining in popularity. Although review of portfolios by faculty members or administrators is labor-intensive, it appears to be an excellent method for determining whether students are able to meet curricular outcomes in a wide array of areas, such as providing pharmaceutical care, managing and interacting with others, and communicating with patients and health care professionals. Student portfolios are also a useful tool with which students can self-assess their progress toward achieving course and programmatic outcomes. As commercial software becomes more widely available, more colleges and schools are likely to incorporate this strategy into the overall assessment process.

Colleges and schools were divided in their opinions of whether an individual person or a committee should take the lead in assessment activities. Specifically, many colleges and schools have identified a dean or other assessment officer as the person with the greatest overall responsibility for conducting programmatic curricular assessments, while others have given this task to a group of faculty members (and others) under the auspices of an assessment committee or a curriculum committee. To a large extent, the decision probably should be based on the culture of each institution. Regardless of which approach is taken, as long as faculty members and administrators (in addition to the other key stakeholders such as students and alumni) are involved in the process, effective outcomes assessment can be achieved.

Limitations

As with all self-administered surveys, questions could have been misinterpreted or answered out of context. For example, respondents may have indicated their use of formative and summative faculty and course reviews without differentiating between course assessment and programmatic curricular assessment. Similarly, respondents noted their use of student and faculty/teaching portfolios, but the authors cannot be sure that the portfolios were being used for programmatic curricular outcomes assessment. In addition, for some of the survey questions, respondents provided written responses rather than selecting from a provided list. This made the responses more difficult to categorize and could have resulted in some degree of misinterpretation by the authors.

Finally, because the questions dealing with the cost of curricular outcomes assessment were completed by persons heavily involved in the assessment process, the responses may be skewed. Respondents were not queried about nonfinancial costs such as time and energy. Thus, it is not possible to comment on whether respondents found the process time-consuming or labor-intensive.

CONCLUSION

Each college and school of pharmacy needs to have a written plan in place for programmatic outcomes assessment (even if it needs to be frequently reviewed and perhaps revised23) and should implement full assessment activities as soon as possible, recognizing that it might not be feasible to assess all curricular outcomes on an annual basis. To move the process forward, each college and school should identify a person or group of persons with the responsibility and authority to lead the effort. It does not appear to matter whether the responsible party is an administrator, a faculty member, or an assessment committee; the time invested and the participants' willingness to contribute will largely determine whether the process succeeds or fails. Equally important, individual colleges and schools need to ensure that all stakeholders are involved and committed and that the assessment process is transparent.

It is hoped that the new CAPE outcomes and the accreditation guidelines being developed will more clearly indicate the outcomes that all colleges and schools of pharmacy need to address, which in turn may help to guide the assessment plans of individual institutions. The assessment process might also be aided by the availability of additional validated assessment instruments, many of which could be coordinated through a central source, made available to all colleges and schools of pharmacy, and used as a means of sharing data among institutions. Standardized instruments will undoubtedly be more cost- and time-effective than having each institution develop all of its own tools. Nevertheless, college- and school-specific assessment methods will continue to play a vital role in the overall assessment process.

Data obtained from this survey provide colleges and schools of pharmacy with a snapshot of programmatic outcomes assessment throughout the United States and Puerto Rico. Colleges and schools can identify strategies that they wish to adopt based on their use at other institutions, and can note the persons or committees responsible for these activities.

Future studies should delve into the reasons for selecting certain assessment activities over others and should make a better effort to identify the most useful instruments. Perhaps future studies can identify persons willing to share their knowledge, experience, and expertise with others, and make this information available through a central source.

All institutions should have a written and approved assessment plan, involve a wide array of stakeholders in the assessment process, and allow persons responsible for these activities to share instruments in order to make the process easier. If this occurs, colleges and schools of pharmacy will be able to establish a culture of assessment that in turn will lead to better educated and more competent pharmacy graduates.

ACKNOWLEDGMENT

This paper was presented in part at the 2004 Annual Meeting of the American Association of Colleges of Pharmacy, Salt Lake City, Utah, July 10–14, 2004.

REFERENCES

- 1. Commission to Implement Change in Pharmaceutical Education. Background Paper II. Entry-level, curricular outcomes, curricular content and education process. Am J Pharm Educ. 1993;57:377–85.

- 2. CAPE Educational Outcomes. American Association of Colleges of Pharmacy. Available at: http://www.aacp.org/Docs/MainNavigation/ForDeans/5763_CAPEoutcomes.pdf. Accessed March 3, 2005.

- 3. CAPE Educational Outcomes. American Association of Colleges of Pharmacy. Available at: http://www.aacp.org/Docs/MainNavigation/Resources/6075_CAPE2004.pdf. Accessed March 3, 2005.

- 4. Boyce EG. A guide for Doctor of Pharmacy program assessment. American Association of Colleges of Pharmacy. Available at: http://www.aacp.org/Docs/MainNavigation/Resources/5416_pharmacyprogramassessment_forweb.pdf. Accessed March 7, 2005.

- 5. Boyce EG. Appendices to a guide for Doctor of Pharmacy program assessment. American Association of Colleges of Pharmacy. Available at: http://www.aacp.org/Docs/MainNavigation/Resources/5417_pharmprogramassessmentappendices_forweb.pdf. Accessed March 7, 2005.

- 6. Abate MA, Stamatakis MK, Haggett RR. Excellence in curriculum development and assessment. Am J Pharm Educ. 2003;67:478–500.

- 7. Accreditation Council for Pharmacy Education. Accreditation Standards and Guidelines for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree. Adopted June 14, 1997. Available at: http://www.acpe-accredit.org./standards. Accessed March 3, 2005.

- 8. Scott DM, Robinson DH, Augustine SC, Roche EB, Ueda CT. Development of a professional pharmacy outcomes assessment plan based on student abilities and competencies. Am J Pharm Educ. 2002;66:357–64.

- 9. Ryan GJ, Nykamp D. Use of cumulative examinations at US schools of pharmacy. Am J Pharm Educ. 2000;64:409–12.

- 10. Winslade N. A system to assess the achievement of doctor of pharmacy students. Am J Pharm Educ. 2001;65:363–92.

- 11. Bouldin AS, Wilkin NE. Programmatic assessment in US schools and colleges of pharmacy: A snapshot. Am J Pharm Educ. 2000;64:380–7.

- 12. Supernaw RB, Mehvar R. Methodology for the assessment of competence and the definition of deficiencies of students in all levels of the curriculum. Am J Pharm Educ. 2002;66:1–4.

- 13. Garavalia LS, Marken PA, Sommi RW. Selecting appropriate assessment methods: Asking the right questions. Am J Pharm Educ. 2002;66:108–12.

- 14. Howard PA, Henry DW, Fincham JE. Assessment of graduate outcomes: focus on professional and community activities. Am J Pharm Educ. 1998;62:31–6.

- 15. Isetts BJ. Evaluation of pharmacy students' abilities to provide pharmaceutical care. Am J Pharm Educ. 1999;63:11–20.

- 16. Purkerson DL, Mason HL, Chalmers RK, Popovich NG, Scott SA. Expansion of ability-based education using an assessment center approach with pharmacists as assessors. Am J Pharm Educ. 1997;61:241–8.

- 17. Monaghan MS, Vanderbush RE, Gardner SF, Schneider EF, Grady AR, McKay AB. Standardized patients: an ability-based outcomes assessment for the evaluation of clinical skills in traditional and nontraditional education. Am J Pharm Educ. 1997;61:337–44.

- 18. Dennis VC. Longitudinal student self-assessment in an introductory pharmacy practice experience course. Am J Pharm Educ. 2005;69 Article 1.

- 19. Ried LD, Brazeau GA, Kimberlin C, Meldrum M, McKenzie M. Students' perceptions of their preparation to provide pharmaceutical care. Am J Pharm Educ. 2002;66:347–56.

- 20. Successful Practices in Pharmaceutical Education, Program Assessment. American Association of Colleges of Pharmacy. Available at: http://www.aacp.org/Docs/MainNavigation/Resources/6026_ProgramAssessment.pdf. Accessed March 7, 2005.

- 21. Maki PL. Developing an assessment plan to learn about student learning. J Acad Librarianship. 2002;28:8–13.

- 22. Hollenbeck RG. Chair report for the academic affairs committee. Am J Pharm Educ. 1999;63:7S–13S.

- 23. Anderson HM, Anaya G, Bird E, Moore DL. A review of educational assessment. Am J Pharm Educ. 2005;69 Article 12.

- 24. Accreditation Council for Pharmacy Education. Revision of ACPE Standards 2000, Draft 1.2, January 2005. Available at: http://www.acpe-accredit.org./pdf/ACPE_Revised_Standards_Draft_1.2_with_Appendices.pdf. Accessed March 7, 2005.

- 25. Holstad SG, Vrahnos D, Zlatic TD, Maddux MS. Electronic student portfolio. Am J Pharm Educ. 1998;99:56.

- 26. Cerosimo R, Copeland D, Kirwin J, Matthews SJ. Initiation and mid-year review of a three-part P4 student portfolio. Am J Pharm Educ. 2003;63 Article 100.