Abstract

Background

Physicians have difficulty keeping up with new evidence from medical research.

Methods

We developed the McMaster Premium LiteratUre Service (PLUS), an internet-based addition to an existing digital library, which delivered quality- and relevance-rated medical literature to physicians, matched to their clinical disciplines. We evaluated PLUS in a cluster-randomized trial of 203 participating physicians in Northern Ontario, comparing a Full-Serve version (that included alerts to new articles and a cumulative database of alerts) with a Self-Serve version (that included a passive guide to evidence-based literature). Utilization of the service was the primary trial end-point.

Results

Mean logins to the library rose by 0.77 logins/month/user (95% CI 0.43, 1.11) in the Full-Serve group compared with the Self-Serve group. The proportion of Full-Serve participants who used the service each month showed a sustained increase during the intervention period, with a relative increase of 57% (95% CI 12, 123) compared with the Self-Serve group. There were no differences in these proportions during the baseline period, and following the crossover of the Self-Serve group to Full-Serve, the Self-Serve group’s usage became indistinguishable from that of the Full-Serve group (relative difference 4.4%, 95% CI −23.7, 43.0). During the intervention and crossover periods, self-reported usefulness did not differ between the 2 groups.

Conclusion

A quality- and relevance-rated online literature service increased the utilization of evidence-based information from a digital library by practicing physicians.

Introduction

It has long been known that even the most important advances in health care knowledge take decades for widespread implementation. 1 Many questions arise in clinical encounters between physician and patient concerning the treatment, diagnosis and cause of disease that could be answered by the medical literature. 2,3 These questions are often left unanswered, but physicians are increasingly turning to the Internet for new opportunities to satisfy information needs. 4 Recent studies show that several barriers block the acquisition and use of high quality research information in health care. Findings indicate that physicians’ daily routines afford them little time to search and review new medical information. 5–7 Physicians admit that they lack the skills required to navigate literature databases 7–9 and many physicians remain unaware of the existence of evidence-based information resources. 5 Physicians also lack the skills to properly appraise medical literature. 6,8,10

Clinical information retrieval technology (CIRT) encompasses systems such as Internet-based digital libraries containing reference information that clinicians can consult to answer everyday patient questions and to keep abreast of current best evidence for clinical decisions. CIRT is now widely used, but evaluations have been limited. Fewer than a third of observational studies show that searches have a positive influence on clinicians, and a small number of experimental studies have produced equivocal results. 11

We hypothesized that centralizing the evaluation of newly published medical literature to find the highest quality and most relevant clinical studies, and providing both alerting and searching services for this literature in conjunction with a digital library, would increase the utilization and usefulness of the medical literature by practicing clinicians. In collaboration with 3 evidence-based publications, we created the McMaster Premium LiteratUre Service (McMaster PLUS). This service 1) identifies the clinical discipline and interests of individual practitioners; 2) rates the scientific merit, clinical relevance, and newsworthiness (novelty) of new clinical research articles and reviews at the time of publication, for each relevant discipline; 3) alerts practitioners to new high quality, relevant research findings for their discipline; and 4) provides a cumulative database of these items so that practitioners can look up information when needed. The service was linked with a pre-existing, standard, Internet-based library service including full-text online journals and books. In a cluster randomized trial among clinicians who were already registered with the library service, the PLUS system was tested for utilization, utility, use of individual items, and perceived clinical usefulness.

Methods

Some of the methods have been described elsewhere 12,13 and are summarized briefly here; aspects not previously reported are described in full. The study protocol was registered with the International eHealth Study Registry (IESR; IESN2005RH00013) (http://www.jmir.org/IESR), and approved by the McMaster University-Hamilton Health Sciences Research Ethics Board.

Setting

The study was conducted in Northern Ontario in collaboration with the Northern Ontario Medical Program (NOMP) and Northeastern Medical Education Corporation (NOMEC), which had administrative responsibility for the Northern Ontario Virtual Library (NOVL). NOVL is an Ontario government-sponsored free digital library service for all physicians in Northern Ontario, mainly including Ovid-licensed full-text journals and its Evidence-Based Medicine Reviews service, as well as medical text and journal collections (MD Consult, initially, then Stat!Ref). The collaboration offered an opportunity to strengthen clinical information services in this relatively sparsely populated area of approximately 786,440 inhabitants in over 800,000 square kilometers, 14 in preparation for a new medical school, the Northern Ontario School of Medicine, which opened shortly after the trial was completed.

Participants

Physicians were recruited by mail-outs, e-mail, fax, and presentations, based on contact information authorized for release for recruitment purposes by NOVL, and from the Canadian Medical Association electronic directory (MD Select) and the College of Physicians and Surgeons of Ontario (CPSO) registry. NOVL, NOMP and NOMEC also notified their physician registrants about the study and included information about registering for the PLUS trial on their websites.

Physicians were eligible for the study if they: 1) were registered with NOVL; 2) spent at least 20% of their clinical practice time working in general or family practice, general internal medicine, or sub-specialties of internal medicine; 3) expected to maintain a similar practice in the same community for at least one year (not moving or retiring); 4) were fluent in English; and 5) used a personal e-mail account at least once per month. Incentives for physicians to participate in the trial included the offer of automatically recorded continuing medical education (CME) credits, accredited through McMaster University, from the Royal College of Physicians and Surgeons of Canada, the College of Family Physicians of Canada, and the American Medical Association, for time spent with the PLUS system. PLUS trial registration, including determining trial eligibility and obtaining participants’ consent, was conducted online. The total number of participants screened and included in the study is outlined at the beginning of the Results section below.

We also sought to increase physician recruitment and participation by enlisting the help of “educational influentials,” identified by asking PLUS participants to nominate colleagues whom they thought were good communicators, humanistic and had a strong knowledge base.

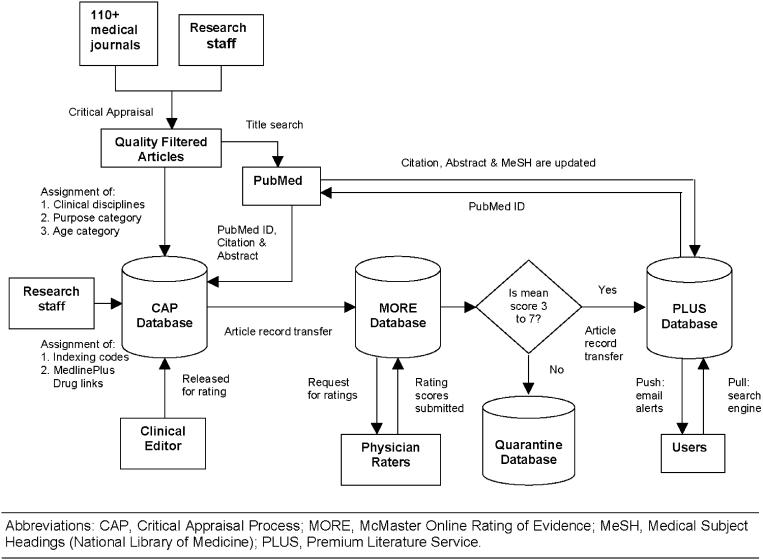

The McMaster Premium LiteratUre Service (PLUS) System (Figure 1)

Figure 1.

Quality appraisal, peer rating, and literature distribution process.

McMaster PLUS was created as an extension of the production of evidence-based journals in the Health Information Research Unit (HIRU) at McMaster University, including ACP Journal Club (http://www.acpjc.org) and Evidence-Based Medicine (http://ebm.bmjjournals.com/), with the approval of their publishers, the American College of Physicians and the BMJ Publishing Group, respectively. (A freely available version without digital library links exists as bmjupdates+, http://bmjupdates.com.) PLUS features (Figure 1) included:

1. Scientific appraisal: Clinical research studies and systematic reviews about treatment, prevention, diagnosis, prognosis, etiology, clinical prediction, quality improvement, and cost-effectiveness were selected by research staff from each issue of over 110 clinical journals since 2002, according to explicit criteria for scientific merit, 15 and qualifying articles were organized by clinical discipline;

2. Clinical Peer Rating: For each qualifying article, 3–4 practicing physicians in each pertinent discipline (33 possible clinical disciplines in total), drawn from an online panel of over 1500 physicians, were asked to rate the article on two 7-point scales, one for clinical relevance and one for newsworthiness (novelty), using the McMaster Online Rating of Evidence (MORE) system (http://hiru.mcmaster.ca/MORE) 13;

3. Links: Citations of articles whose ratings averaged 3 or more on each scale were transferred to the PLUS database and linked to their PUBMED abstracts, to the full-text article if available through NOVL or open access, to MEDLINEPLUS patient information for articles about licensed drugs, and to ACP Journal Club synopses if these later became available;

4. Alerts: As soon as 3 clinical ratings were received for a given discipline, if the ratings averaged at least 4 on each scale, an alert was “pushed” by e-mail to participants in that discipline, notifying them of the article. Each participant could raise the alert cut-off for each scale from 4 up to 7 (thus narrowing the range of relevance and reducing the number of alerts received) and could set how often alerts were sent (from daily to weekly); and

5. Search: A search engine was provided for the online cumulative database of all qualifying articles (back to 2002); the search engine recognized the characteristics of registered PLUS users for information retrieval (“pull”), so that they could retrieve articles rated by physicians in their discipline.
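The threshold rules for the database (item 3) and for alerts (item 4) can be expressed as two small predicates. The following Python sketch is illustrative only: the names `Rating`, `enters_database`, and `should_alert` are hypothetical, ratings are assumed to be grouped per discipline before these checks are applied, and the database check is assumed to use whatever ratings are available, since the text does not say whether it waits for a minimum number of raters.

```python
from dataclasses import dataclass
from statistics import mean

DATABASE_CUTOFF = 3       # mean needed on each scale to enter the PLUS database
DEFAULT_ALERT_CUTOFF = 4  # default mean needed on each scale to trigger an alert
MIN_ALERT_RATINGS = 3     # alerts wait until 3 ratings exist for a discipline


@dataclass
class Rating:
    """One clinician's rating of an article (hypothetical record type)."""
    relevance: int       # 1-7 scale
    newsworthiness: int  # 1-7 scale


def scale_means(ratings):
    """Average each 7-point scale separately."""
    return (mean(r.relevance for r in ratings),
            mean(r.newsworthiness for r in ratings))


def enters_database(ratings):
    """Item 3: both scale averages must be at least 3."""
    if not ratings:
        return False
    relevance, news = scale_means(ratings)
    return relevance >= DATABASE_CUTOFF and news >= DATABASE_CUTOFF


def should_alert(ratings, relevance_cutoff=DEFAULT_ALERT_CUTOFF,
                 news_cutoff=DEFAULT_ALERT_CUTOFF):
    """Item 4: alert once 3 ratings exist and both averages meet the cut-offs."""
    for cutoff in (relevance_cutoff, news_cutoff):
        if not DEFAULT_ALERT_CUTOFF <= cutoff <= 7:
            raise ValueError("alert cut-offs may only be raised from 4 up to 7")
    if len(ratings) < MIN_ALERT_RATINGS:
        return False
    relevance, news = scale_means(ratings)
    return relevance >= relevance_cutoff and news >= news_cutoff
```

For example, three ratings of (5, 4), (6, 5) and (4, 4) average 5.0 for relevance and about 4.3 for newsworthiness, so the article enters the database and triggers an alert at the default cut-offs, but not for a participant who has raised the relevance cut-off to 6.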

We developed 3 web-based interfaces for the delivery of PLUS services: a baseline-period interface for all participants and, during the trial period, a “Self-Serve” interface and a “Full-Serve” interface. The baseline interface provided users with access to the usual digital resources offered by NOVL. In the trial period, one study group was provided with “Self-Serve” access to NOVL, which was the baseline interface plus a passive guide to finding evidence-based clinical information (“pyramid of evidence” 16). The second group received “Full-Serve” access to NOVL, which augmented the Self-Serve interface with discipline-specific PLUS alerts (“push”) and access to the PLUS database of accumulated alerts (“pull”) (Table 1).

Table 1. Features of the Self-Serve and Full-Serve Versions of McMaster PLUS

| Feature | Description | Self-Serve | Full-Serve |
|---|---|---|---|
| Ovid | Suite of online bibliographic databases (e.g., MEDLINE and CINAHL) and resources (Clinical Evidence, Cochrane Database of Systematic Reviews, Books@Ovid) offering full text from many journals and books. Available to PLUS participants Nov 2003–Mar 2005. | Yes | Yes |
| MD Consult | Web-based health information look-up service with full text of Elsevier-published items, Patient Information Sheets, and other features. Available to PLUS participants Nov 2003–Sept 2004. | Yes | Yes |
| Stat!Ref | Online library of full-text medical textbooks. Available to PLUS participants Nov 2003–Mar 2005. | Yes | Yes |
| Pyramid of Evidence | Guide, based on a hierarchy of evidence-based information, to finding the best evidence for a given clinical topic 16 | Yes | Yes |
| PLUS Alerts | E-mail alerts sent to users, matched to their personal clinical disciplines, which provide citation information for new critically appraised, clinically rated research and review articles, their ratings, and links to their full text and to drug prescribing and patient information (if available). E-mail frequency and the volume of alerted titles are customizable. | No | Yes |
| PLUS Search | Search engine that returns critically appraised articles rated by physicians in the user’s own clinical discipline (default) or across all disciplines. Features a synonym table to increase the yield of relevant records and Google’s spell checker (by permission). Search results are presented in the same format as alerted articles, with full-text links to journals and ACP Journal Club, if available. | No | Yes |

Study Design

To minimize contamination between the study groups (i.e., physicians assigned to the different study groups sharing additional information provided by the McMaster PLUS alerting service or search engine), we conducted a stratified, cluster-randomized controlled clinical trial with 2 study groups.

Randomization

During a pre-randomization baseline period with access only to NOVL, we assembled trial participants into 10 community clusters by mapping clinical practice locations of all PLUS participants, and grouping them into non-overlapping clusters which maximized the geographic distance between clusters and minimized the variation in numbers of participants in each cluster. Hospital district divisions were consulted about physician practice patterns to make decisions on some cluster designations.

Stratification of community clusters was originally to be based on 2 criteria: 1) the number of physicians per cluster; and 2) the median frequency of NOVL use (average number of logins per month by each cluster) over the baseline period. Since baseline usage patterns showed little inter-cluster difference, clusters were only stratified by the number of participants in each. Each cluster was then assigned a number to conceal the name of the community (concealed allocation), and the 4 largest clusters were rank ordered from largest to smallest. Each cluster was randomized to either the Full-Serve or Self-Serve interface based on a table of random numbers reported by Fleiss, 17 with balancing for each pair of clusters. This process was repeated for the 6 smaller clusters.
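The pairing step can be sketched as follows. This is an illustration under stated assumptions, not the trial's actual procedure: Python's `random` module stands in for the Fleiss table of random numbers, the function name `paired_cluster_allocation` is hypothetical, and any cluster sizes passed to it in an example are invented rather than taken from the trial.

```python
import random


def paired_cluster_allocation(cluster_sizes, n_large=4, seed=None):
    """Stratify clusters by size, pair neighbours by rank, and randomize within pairs.

    cluster_sizes maps a concealed cluster number to its number of participants.
    Python's random module stands in for a printed table of random numbers.
    """
    rng = random.Random(seed)
    # Rank clusters from largest to smallest.
    ranked = sorted(cluster_sizes, key=cluster_sizes.get, reverse=True)
    strata = [ranked[:n_large], ranked[n_large:]]
    allocation = {}
    for stratum in strata:
        if len(stratum) % 2:
            raise ValueError("each stratum needs an even number of clusters")
        # Consecutive clusters in rank order form a pair; one of each pair goes to each arm.
        for i in range(0, len(stratum), 2):
            pair = stratum[i:i + 2]
            rng.shuffle(pair)
            allocation[pair[0]] = "Full-Serve"
            allocation[pair[1]] = "Self-Serve"
    return allocation
```

Called with hypothetical sizes such as `{1: 40, 2: 35, 3: 30, 4: 25, 5: 18, 6: 15, 7: 14, 8: 12, 9: 8, 10: 6}`, the function always places 2 large and 3 small clusters in each arm; which clusters land in which arm depends on the random draw.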

Blinding

Although physicians could not be blinded to the intervention, the Full-Serve and Self-Serve interfaces were similar in appearance and navigation, and participants were not told to which group they were assigned. Also, each group’s trial-period interface offered something new compared with the baseline interface described above. All PLUS trial staff except the data analyst were blinded to the allocation of practice communities to the Full-Serve or Self-Serve trial interfaces until the time of data analysis.

Data Collection

The baseline period served to recruit participants and to collect data on their typical utilization and use of the digital resources offered by NOVL (Ovid, MD Consult and Stat!Ref), which were later compared with use during the intervention period. The PLUS monitoring software was originally conceived as a client-side plug-in that would monitor all keystrokes by participants while using a PLUS “desktop” and browser on their computers, 18 but project collaborators whose own digital platform would lie upstream of the desktop objected to this for security reasons. We therefore changed to a “server-side” system, hosted in the HIRU at McMaster University, that tracked logins by individual users via a dedicated login page or via e-mail alerts. Use of PLUS system features and of links accessed from the McMaster PLUS server was tracked, for example, opening alerted article records and viewing rater comments, the PUBMED abstract, ACP Journal Club synopsis, or full-text article. Use of the PLUS search engine, the pyramid of evidence, quick-launch links to the table of contents of specific journals (PLUS journal link), and selection of a licensed digital resource such as Ovid were also tracked. For coverage of users’ activities within Ovid, an existing online reporting tool, Ovid Stats, was used. The PLUS system could track users to, but not within, MD Consult, Stat!Ref, PUBMED, or other locations outside the PLUS web environment. The length of a usage session could be accurately assessed only when a user logged out of the system. When a user initiated multiple logins within 5 minutes of each other without a recorded logout, the sessions were combined. Logout was encouraged by offering CME credits only to users who logged out. When users did not log out, the duration from login to their last interaction (e.g., selecting an Ovid link) was recorded. Usage sessions were automatically terminated after 30 minutes of inactivity.
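The session rules described above (combining logins less than 5 minutes apart, taking the logout or the last interaction as the session end, and closing sessions after 30 minutes of inactivity) can be sketched as a small sessionization routine. The event format, with times in minutes and the event kinds `login`, `click` and `logout`, is a hypothetical simplification of the PLUS server logs. The handling of edge cases is also assumed: a login more than 5 minutes after the previous one, while a session is still open, starts a new session, and clicks outside any open session are ignored.

```python
from dataclasses import dataclass

MERGE_WINDOW = 5   # minutes: repeat logins this close together are combined
IDLE_TIMEOUT = 30  # minutes of inactivity after which a session is closed


@dataclass
class Session:
    start: float
    last_event: float
    logged_out: bool = False

    @property
    def duration(self):
        # Logout time if one was recorded, otherwise the last interaction.
        return self.last_event - self.start


def sessionize(events):
    """Group one user's time-ordered (minute, kind) events into sessions.

    kind is 'login', 'click' or 'logout' (a hypothetical log format).
    """
    sessions = []
    current = None
    last_login = None
    for t, kind in events:
        # Auto-terminate a session that has been idle for too long.
        if current is not None and t - current.last_event > IDLE_TIMEOUT:
            sessions.append(current)
            current = None
        if kind == "login":
            if current is not None and t - last_login <= MERGE_WINDOW:
                current.last_event = t  # combine with the open session
            else:
                if current is not None:
                    sessions.append(current)
                current = Session(start=t, last_event=t)
            last_login = t
        elif current is None:
            continue  # activity outside an open session is ignored here
        elif kind == "click":
            current.last_event = t
        elif kind == "logout":
            current.last_event = t
            current.logged_out = True
            sessions.append(current)
            current = None
    if current is not None:
        sessions.append(current)
    return sessions
```

A session ended by a logout gets its true length, while one that simply goes idle is measured only to its last click, which is why durations of sessions without a logout tend to be underestimates.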

Outcome Measures

The primary utilization outcome was measured by 1) comparison of the rate of logins/month/user in each trial group; 2) comparison of the change in the rate of logins/month/user in each trial group between the baseline period and the intervention period; and 3) differences in the proportions of users per month in each group. A login event was defined as a login followed by any system usage (i.e., if any menu items or links were clicked). Secondary utilization outcomes reported in this paper included 1) average minutes per month that practitioners were logged into PLUS; and 2) average number of times practitioners used specific electronic resources (i.e., the average number of times practitioners clicked the links to Ovid or Stat!Ref available from within the PLUS system).
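As a sketch of how the first and third primary utilization measures could be computed from login records, the function below counts only logins followed by at least one click, divides by every member of a group (an intention-to-treat style denominator), and averages the monthly proportion of active users. The record layout `(user_id, month, clicks_after_login)` and the name `monthly_login_metrics` are hypothetical.

```python
from collections import Counter


def monthly_login_metrics(login_records, group_members, months):
    """Return (mean logins/month/user, mean monthly proportion of active users).

    login_records: (user_id, month, clicks_after_login) tuples (hypothetical layout).
    group_members: every user randomized to the group, used as the denominator.
    """
    counts = Counter()
    for user, month, clicks in login_records:
        # A login counts as a login event only if some system usage follows it.
        if user in group_members and month in months and clicks > 0:
            counts[(user, month)] += 1
    n_users, n_months = len(group_members), len(months)
    logins_per_user_month = sum(counts.values()) / (n_users * n_months)
    monthly_share_active = [
        sum(1 for user in group_members if counts[(user, month)] > 0) / n_users
        for month in months
    ]
    return logins_per_user_month, sum(monthly_share_active) / n_months
```

Because inactive members stay in the denominator, a group's rate falls when some participants never log in, which matches the intention-to-treat framing used for the primary analysis.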

The primary utility outcome measured how satisfied participants were with the PLUS system, collected via an online survey that was automatically sent to each user monthly or after every tenth usage session in which they employed a licensed digital resource, the PLUS search engine, or PLUS journal links. The questionnaire included 4 very brief questions that took less than one minute to complete (a full version of the questionnaire can be found online as an Appendix to this article).
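The questionnaire trigger can be read as two conditions, either of which sends a survey. In the sketch below, "monthly" is approximated as 30 days, the qualifying-session counter is assumed to reset whenever a questionnaire is sent, and the feature labels and the names `SurveyState` and `record_session` are hypothetical stand-ins for licensed digital resources, the PLUS search engine, and PLUS journal links.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical labels for the session features that count toward the tenth-session trigger.
QUALIFYING = {"licensed_resource", "plus_search", "plus_journal_link"}


@dataclass
class SurveyState:
    last_sent: date
    qualifying_sessions: int = 0


def record_session(state, session_features, today,
                   interval=timedelta(days=30), every=10):
    """Return True if a questionnaire should be e-mailed after this session.

    Passing an empty feature list acts as a plain time check (e.g. from a daily job).
    """
    if QUALIFYING & set(session_features):
        state.qualifying_sessions += 1
    due = today - state.last_sent >= interval or state.qualifying_sessions >= every
    if due:
        state.last_sent = today
        state.qualifying_sessions = 0
    return due
```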

The primary use outcome reported in this paper measured use of relevant, evidence-based information, defined as accessing, from within Ovid, one of the following resources: full-text journals, and evidence-based publications such as the Cochrane Library and other publications in Ovid’s Evidence-Based Medicine Reviews collection. Access to these resources was tracked by Ovid Stats.

The primary usefulness outcome was assessed as the average physician response to the effect of literature access on self-reported practice performance and patient outcomes.

Sample Size and Power

The original sample size calculations estimated the need for 18 communities per trial group, with an average cluster size of 15 practicing physicians per community (a total of 540 physicians), to detect a difference between the 2 study groups in the average login rate of 8 logins per 2-month trial period, with a standard deviation of 4, an intracluster correlation coefficient of 0.2, and a power of 78%. However, a review of the power calculations during the recruitment period revealed an error: with just 10 communities and an average of 15 practitioners per community, the study had over 99% power to detect an effect of this size.
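The corrected power figure can be checked with the usual normal approximation for a two-arm cluster-randomized comparison of means, in which the sample size is deflated by the design effect 1 + (m − 1) × ICC for cluster size m. This standard approach is assumed here; the authors' exact calculation is not reported, and the function name is hypothetical. With 5 communities of 15 physicians per arm and an ICC of 0.2, the design effect is 3.8, leaving about 20 effective participants per arm.

```python
from statistics import NormalDist


def cluster_trial_power(diff, sd, clusters_per_arm, cluster_size, icc, alpha=0.05):
    """Approximate power of a two-sided z-test comparing two arm means in a cluster trial.

    The per-arm sample is deflated by the design effect 1 + (m - 1) * ICC.
    The tiny chance of rejecting in the wrong direction is ignored.
    """
    design_effect = 1 + (cluster_size - 1) * icc
    effective_n = clusters_per_arm * cluster_size / design_effect
    se_diff = sd * (2 / effective_n) ** 0.5
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return NormalDist().cdf(abs(diff) / se_diff - z_crit)


# Corrected design: 10 communities (5 per arm) of about 15 physicians each.
print(cluster_trial_power(diff=8, sd=4, clusters_per_arm=5, cluster_size=15, icc=0.2))
```

With these inputs the approximation gives a power well above 0.99, consistent with the corrected statement; a difference of 8 logins against a standard deviation of 4 is a very large standardized effect, so even a modest number of clusters suffices.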

Statistical Methods/Analysis

Although the unit of allocation and analysis for the trial was to be physician communities, with a single value for each outcome in each cluster, data collected during the baseline period revealed a non-significant intra-cluster correlation coefficient of −0.02 (95% confidence interval [CI] −0.16, 0.12) for the primary outcome measure, frequency of logins. This indicated that variation between communities was negligible, so it was ignored in subsequent analyses and individual physicians became the unit of analysis. For continuous outcomes such as the average number of physician logins to PLUS per community per month, the average physician time of PLUS utilization per community per month, and the change in these measures over time, mixed-effects linear repeated-measures analyses of variance (ANOVA) were applied, with the interaction of intervention by time as the main feature of interest. A similar approach with analysis of covariance was used to adjust for important baseline variables. For dichotomous outcomes such as the proportion of physicians who were satisfied with PLUS, nonparametric tests were used to assess differences between the 2 intervention groups (with generalized Mantel-Haenszel tests in stratified analyses, such as primary care doctors and specialists). P-values less than 0.05 were considered significant for the primary analyses, without adjustment for multiple comparisons.
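The text does not state how the intracluster correlation was estimated. One common choice, assumed in the sketch below, is the one-way analysis-of-variance estimator for unequal cluster sizes. Because it subtracts the within-cluster mean square from the between-cluster mean square, it can return a small negative value, such as the −0.02 reported here, when communities differ less than chance alone would predict. The function name `anova_icc` is hypothetical.

```python
def anova_icc(groups):
    """One-way ANOVA estimator of the intracluster correlation for unequal cluster sizes.

    groups: one list of per-physician values (e.g. logins/month) per community.
    """
    k = len(groups)
    sizes = [len(g) for g in groups]
    n_total = sum(sizes)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Between- and within-cluster sums of squares.
    ssb = sum(n * (m - grand_mean) ** 2 for n, m in zip(sizes, means))
    ssw = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    msb = ssb / (k - 1)
    msw = ssw / (n_total - k)
    # Adjusted average cluster size for unequal clusters.
    n0 = (n_total - sum(n * n for n in sizes) / n_total) / (k - 1)
    return (msb - msw) / (msb + (n0 - 1) * msw)
```

Two communities with identical distributions, such as `[[1, 2, 3], [1, 2, 3]]`, give a negative estimate (−0.5), whereas communities that differ completely, such as `[[1, 1], [3, 3]]`, give 1.0.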

Results

Participant Flow and Recruitment (Figure 2)

Figure 2.

Flow of participants through recruitment, determination of eligibility, provision of consent and randomization.

Between September 2003 and March 2004, 325 individuals expressed interest in participating in the McMaster PLUS trial, of whom 62 were determined to be ineligible (50 were residents or nurses, 7 did not practice in one of the required disciplines at least 20% of their clinical time, 3 did not use a personal e-mail account in the previous 30 days, and 2 could not confirm that they would remain in their current practice over the next year). A further 56 did not complete the eligibility questionnaire, and 4 declined to participate in the trial. By March 31, 2004, 203 eligible physicians had provided their online consent to participate and were organized into 10 communities based on geographic clustering. On April 1, 2004, 6 smaller communities and 4 larger communities were randomized to 1 of 2 trial interfaces, with 3 small community clusters and 2 large community clusters allocated to the Full-Serve group (98 physicians) and 3 small community clusters and 2 large community clusters allocated to the Self-Serve group (105 physicians). During the trial, 5 participants withdrew from the Full-Serve group: 3 on moving outside of the NOVL-eligible geographic area, 1 for low computer literacy skills, and 1 on retiring from clinical practice; 4 participants withdrew from the Self-Serve group: 3 on moving outside of the NOVL-eligible geographic area and 1 for lack of interest (Figure 2).
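The enrollment counts reported above can be cross-checked arithmetically. The sketch below (function name hypothetical) uses only numbers from the preceding paragraph and confirms that the exclusions account for the difference between the 325 interested individuals and the 203 randomized physicians. Withdrawals are tallied separately because all randomized participants were retained in the intention-to-treat analysis.

```python
def participant_flow():
    """Tally the recruitment and withdrawal counts reported in the text."""
    interested = 325
    ineligible = {
        "residents or nurses": 50,
        "required discipline < 20% of clinical time": 7,
        "no personal e-mail use in previous 30 days": 3,
        "could not confirm staying in practice 1 year": 2,
    }
    did_not_complete = 56
    declined = 4
    consented = interested - sum(ineligible.values()) - did_not_complete - declined
    allocated = {"Full-Serve": 98, "Self-Serve": 105}
    withdrawn = {"Full-Serve": 3 + 1 + 1, "Self-Serve": 3 + 1}
    return {
        "ineligible": sum(ineligible.values()),
        "consented": consented,
        "allocated_total": sum(allocated.values()),
        "withdrawn": withdrawn,
    }


print(participant_flow())
```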

Seventy-one participants nominated 23 physicians as educational influentials. Of these 23, 13 were already participants in the trial, 3 had expressed interest in PLUS, and 7 had not responded to notices about the trial. All 23 were notified via e-mail, letter, and fax of their nomination and asked to join the trial (if not already a participant), provide feedback on the service and inform colleagues in their community about the PLUS trial. None of the 23 nominees responded.

Participants were well matched on baseline characteristics (Table 2) except that a higher proportion of the participants in the Self-Serve group lived in larger communities (64%, compared with 46% for the Full-Serve group). Over 95% in both groups had high-speed Internet access in at least one location.

Table 2. Baseline Characteristics of the Participants by Study Group

| Characteristic | Self-Serve (N = 105) | Full-Serve (N = 98) | Missing Data (N and Group) |
|---|---|---|---|
| Mean age (95% CI) | 43.5 (41.8, 45.2) | 42.9 (40.4, 45.4) | 2 Full-Serve |
| Mean year licensed (95% CI) | 1989 (1987, 1991) | 1987 (1985, 1989) | |
| Gender (% male) | 73.3% | 74.5% | |
| No. (%) in larger communities | 67 (63.8%) | 45 (45.9%) | |
| Discipline: no. (%) indicating primary care alone | 27 (25.7%) | 28 (28.6%) | |
| No. (%) indicating 2 or more disciplines | 37 (35.2%) | 39 (39.8%) | |
| Working hours, mean (95% CI) | 42.9 (39.3, 46.5) | 43.8 (39.6, 48.0) | 8 Self-Serve, 5 Full-Serve |
| High-speed access in at least 1 location, no. (%) | 94 (95.9%) | 97 (97.8%) | 7 Self-Serve, 9 Full-Serve |

PLUS participants constructed a clinical interest profile by selecting one or more clinical disciplines from a set list. The majority (127, 62.5%) selected a single discipline to represent their interests. About half of participants indicated a primary care discipline (45.7% of the Self-Serve group and 54.1% of the Full-Serve group).

Utilization was the primary trial end-point. During the intervention period (April 2004–March 2005), mean logins/month/user rose from 1.66 to 1.84 in the Full-Serve group and fell from 2.05 to 1.46 in the Self-Serve group. The difference in changes in rates, adjusted for baseline differences, was 0.77 logins/month/user (95% CI 0.43, 1.11), favoring the Full-Serve group. This analysis followed the intention-to-treat principle, 19 with all participants included. During the intervention period, significantly more Full-Serve participants (87.8%) than Self-Serve participants (75.2%) used the service (difference 12.6%, 95% CI 1.8, 22.9) (Table 3). There were no significant differences in the frequency of non-utilization during the pre- or post-randomization periods. When the analysis was restricted to participants who used the service at least once, the difference in logins/month/user was somewhat larger, at 0.92 (95% CI 0.075, 1.77), favoring the Full-Serve group. The proportion of Full-Serve participants who used the service in each month showed a sustained increase during the intervention period (Table 4, Figure 3), with a relative increase of 57.2% (95% CI 12.0, 123.5) compared with the Self-Serve group. There were no differences in these proportions during the baseline period, and following the crossover of the Self-Serve group to Full-Serve, the Self-Serve group’s usage became indistinguishable from that of the Full-Serve group (relative difference 4.4%, 95% CI −23.7, 43.0).
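The reported group means allow a quick check of the primary estimate. The unadjusted difference in changes, (1.84 − 1.66) − (1.46 − 2.05) = 0.18 + 0.59, equals 0.77 logins/month/user, the same point estimate as the baseline-adjusted result. The sketch below (function name hypothetical) performs only this raw arithmetic; it cannot reproduce the confidence interval, which came from the mixed-effects model.

```python
def difference_in_changes(baseline_full, intervention_full,
                          baseline_self, intervention_self):
    """Unadjusted change in the Full-Serve group minus change in the Self-Serve group."""
    return (intervention_full - baseline_full) - (intervention_self - baseline_self)


# Reported mean logins/month/user (baseline, intervention) for each group.
estimate = difference_in_changes(1.66, 1.84, 2.05, 1.46)
print(round(estimate, 2))  # 0.77
```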

Table 3. Proportion (and 95% CI) of PLUS Users by Group and by Trial Phase

| Study Group | Baseline | Intervention Period | Control-Crossover Period |
|---|---|---|---|
| Full-Serve (n = 98) | 72.45 (62.9, 80.3) | 87.8 (79.8, 92.9) | 67.4 (57.6, 75.8) |
| Self-Serve (n = 105) | 72.38 (63.2, 80.0) | 75.2 (66.2, 82.5) | 75.2 (66.2, 82.5) |
| Difference | 0.07 (−12.2, 12.2) | 12.5 (1.8, 22.9) | 7.89 (−4.51, 20.1) |

Table 4. Average Percentage (and 95% CI) of Users Per Month

| Study Group | Baseline | Intervention Period | Control-Crossover Period |
|---|---|---|---|
| Full-Serve (n = 98) | 43.7 (16.4, 71.0) | 50.3 (47.4, 53.3) | 44.9 (39.4, 50.3) |
| Self-Serve (n = 105) | 40.8 (10.5, 71.1) | 32.0 (27.5, 36.5) | 43.0 (34.3, 51.8) |
| Absolute difference | 2.9 (−31.0, 36.8) | 18.3 (13.2, 23.4) | 1.9 (−6.7, 10.4) |
| Relative difference | 7.1 (−22.5, 48.4) | 57.2 (12.0, 123.5) | 4.4 (−23.7, 43.0) |
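The absolute and relative differences in Table 4 can be recomputed from the group percentages: the relative difference is the absolute difference divided by the Self-Serve percentage. The sketch below (function name hypothetical) reproduces the point estimates, for example 18.3 / 32.0 ≈ 57.2% during the intervention period. The confidence intervals came from the trial's statistical models and are not recomputed here.

```python
def absolute_and_relative_difference(full_serve_pct, self_serve_pct):
    """Absolute difference (percentage points) and relative difference (% of Self-Serve)."""
    absolute = full_serve_pct - self_serve_pct
    relative = 100 * absolute / self_serve_pct
    return absolute, relative


# Average percentage of users per month (Full-Serve, Self-Serve), from Table 4.
periods = {"Baseline": (43.7, 40.8),
           "Intervention": (50.3, 32.0),
           "Control-crossover": (44.9, 43.0)}
for name, (full, self_serve) in periods.items():
    absolute, relative = absolute_and_relative_difference(full, self_serve)
    print(f"{name}: {absolute:.1f} points, {relative:.1f}%")
```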

Figure 3.

Percentage of participants/month using the PLUS service for baseline period (Nov 2003–Mar 2004, up to first vertical line), intervention period (Apr 2004–Mar 2005, up to second vertical line), and control-crossover period (Apr 2005–October 2005)

Duration of use was a secondary utilization outcome. The duration of a PLUS usage episode could be determined accurately only for the approximately 50% of participants who logged out at the end of PLUS sessions. For these sessions, average duration increased by 4.2 min/participant/month during the intervention period in the Full-Serve group (from a baseline of 10.5 min/participant/month), compared with an increase of 1.1 min/participant/month in the Self-Serve group (from a baseline of 13.0 min/participant/month); the difference in increases was not significant (3.1 min, 95% CI −8.57, 14.79). Durations of sessions without a logout, estimated as the time to the last click, were substantially shorter (average 4.43, 95% CI 3.35, 5.31), suggesting that this measure substantially underestimated durations. Nevertheless, the differences between groups were similar whether these sessions were analyzed alone or combined with logout sessions, with no significant differences between the groups.

Another secondary utilization outcome was accessing specific electronic resources. During the intervention period, the Full-Serve group accessed Ovid 11.2 times/participant (95% CI 6.0, 16.4; range 0–216), compared with 17.3 accesses/participant (95% CI 11.1, 23.5; range 0–209) for the Self-Serve group (difference 6.08, 95% CI −1.94, 14.10, not significant). The Full-Serve group accessed Stat!Ref at a frequency of 5.6 accesses/participant (95% CI 3.2, 7.9; range 0–62), compared with 7.2 accesses/participant (95% CI 4.1, 10.4; range 0–91) for the Self-Serve group (difference 1.67, 95% CI −2.27, 5.60, not significant).

The primary utility outcome measured whether participants found what they were looking for, and how easy it was to find the information. The response rates to this monthly e-mail questionnaire (online Appendix) were below 50% for both groups. When asked if PLUS provided participants access to the information they wanted, the 2 groups did not differ (Full-Serve “Yes” 70.8%, Self-Serve “Yes” 71.5%, p = 0.803). There was also no difference in the average score for the questions on ease of finding information (Full-Serve 7.14, Self-Serve 7.52, difference −0.38, 95% CI −1.07, 0.31, on a scale of 1–10 with 10 highest).

The primary use outcome measured use of specific evidence-based information from within Ovid. The Full-Serve group accessed Ovid full-text journals on 14.4 occasions/participant (95% CI 7.2, 21.7; range 0–317), compared with 13.3 occasions/participant for the Self-Serve group (95% CI 7.5, 19.2; range 0–176; difference 1.1, 95% CI −8.0, 10.3, not significant). The patterns of use of other Ovid resources (i.e., evidence-based publications such as the Cochrane Library and Evidence-Based Medicine Reviews) were similar for the 2 groups (data not shown).

The primary usefulness outcome was assessed as the average physician response to a question concerning how useful the information from PLUS was in caring for patients, rated on a 10-point scale (1 = not at all useful; 10 = very useful). This question appeared on the same monthly e-mail questionnaire (online Appendix) as the primary utility outcome noted above. As noted above, response rates were below 50% for both groups. The 2 groups did not differ significantly on this measure (Full-Serve average 7.38; Self-Serve 7.68, difference −0.31, 95% CI −1.04, 0.43). In secondary analyses, there were no differences between the 2 groups in the average responses of primary care physicians or of specialists, or of physicians in smaller compared with larger communities. During the crossover period, self-reported usefulness also did not differ between the 2 groups (data not shown).

Discussion

As proof of concept, our hypothesis was supported: centralizing the evaluation of newly published medical literature to find the highest quality and most relevant clinical studies, and providing both alerting and searching services for this literature in conjunction with a digital library, increased the utilization of the medical literature by practicing clinicians. Among participants registered for digital library access, those assigned to receive alerts about quality- and relevance-rated original studies and reviews from over 110 clinical journals showed a 57% relative increase in the proportion using PLUS each month, compared with a group that received only digital library access and a passive guide to the evidence-based medical literature. Increased utilization was sustained over 19 months of follow-up, and the control group reached a similar level of use when crossed over to the active intervention. Thus, the PLUS system reproducibly increased utilization of the current medical literature by practicing physicians in non-academic settings. Further, because of the nature of the PLUS selection process, the literature accessed was of high quality and relevance for clinical practice. Overall, rates of use were higher than contemporaneous rates from similar studies of digital library access by physicians. For example, Gosling et al. 20 reported usage figures for a digital medical library ranging from 0.25 to 1.04 accesses/month/clinician, compared with 1.84 logins/month/user for the Full-Serve group in our study.

Our hypothesis that this service would also increase the perceived usefulness of the information retrieved was not supported: the 2 study groups did not differ on this measure, with both reporting mean usefulness scores between 7 and 8 on the 10-point scale. Possible explanations include lack of appreciation for evidence-based information, incomplete matching of content to participants’ interests, and inadequate measures of usefulness. Two observations suggest that the last explanation may apply: the differences in utilization were sustained throughout the intervention period, and when the control group crossed over to the Full-Serve service, its utilization increased to match that of participants initially assigned to Full-Serve. Further, the dropout rate for the trial was very low, with only 1 person dropping out for lack of interest and 1 for lack of computer literacy. Thus, overall utilization of the literature with the PLUS service was higher even though perceived usefulness was not.

Considerable effort was made to optimize the PLUS trial interfaces before launch through in-house testing and staff discussion, usability testing, and beta testing. Consequently, few system changes were needed after the trial began. Automated recording and analysis of users’ logins and their selection of specific links provided convenient tracking of PLUS usage and a more accurate means of reporting the trial’s primary outcomes. Questionnaires requiring participants to respond (e.g., concerning perceptions of the usefulness of PLUS information for patient care) proved more problematic, with response rates below 50% despite e-mail reminders. Eventually we were able to overcome this low response rate by encouraging logouts with continuing medical education credits and a draw for an inexpensive lottery ticket, attaching brief questions about resources to the logout, and later holding a second draw for those submitting questionnaire responses (total cost less than CDN$40). Placing the questionnaire at logout minimized recall bias, but this timing meant that participants had no time to digest and apply the information before commenting on its utility.

As noted in the Results, Full-Serve participants accessed Ovid and Stat!Ref at lower rates than Self-Serve participants. Although these differences were not statistically significant, the lower rates of access may indicate that Full-Serve participants found the information they wanted within the PLUS system (including citation, clinical ratings and comments, and PubMed abstract), leaving less perceived need to consult resources beyond PLUS.

Several limitations of our investigation should be borne in mind. First, the participants lived in a sparsely populated area, and the results might not be generalizable to other settings. However, over half lived in mid-sized urban communities, and no differences in uptake were observed between smaller and larger communities. Second, while rates and patterns of access to the digital library and PLUS were determined objectively and completely, actual use of the information was based on self-report alone, and response rates for such questions were less than optimal. Nevertheless, the increased utilization of evidence-based resources was encouraging. Third, our data collection did not capture information seeking beyond the digital library, including non-library online resources. Fourth, the digital library service and PLUS were provided free of charge to practitioners, and our findings may not apply where users must pay for access themselves. Fifth, we had hoped to gain the support of “educational influentials” among the study practitioners, but none of the 23 who were nominated responded to our invitations. Perhaps this reflects an unwillingness of these individuals to extend their influence beyond medical topics well known to them (in this instance, by endorsing the PLUS service and asking colleagues to participate in the trial).

We are currently evaluating additional features of the PLUS interface to determine the effects on literature use.

Conclusion

Two hundred and three participants were randomized to either the Full-Serve or the Self-Serve interface of McMaster PLUS in a clinical trial of its effectiveness as an evidence-based information delivery service for physicians practicing primary care, internal medicine, or subspecialties of internal medicine in Northern Ontario. Adding discipline-specific, quality- and relevance-rated alerts to newly published articles, together with a cumulative database of alerts for looking up current best evidence on the management of health care problems, produced a sustained increase in use of the digital library service.

Footnotes

The McMaster PLUS system was developed with support from a grant from the Ontario Ministry of Health and Long-term Care and the PLUS trial was supported by funds from the Canadian Institutes of Health Research.

The authors gratefully acknowledge their collaborators in the Northern Ontario Medical Program, Northeastern Ontario Medical Education Corporation, and the Northern Ontario Virtual Library. The PLUS service would not have been feasible without the cooperation of the American College of Physicians, publisher of ACP Journal Club, and the BMJ Publishing Group, publisher of Evidence-Based Medicine, who permitted us to combine the PLUS study with the literature review process we use routinely for their journals. In return, the MORE clinical rating system is now being used to help select and present articles for these journals and to develop new information services, such as bmjupdates+. The scope of the rating system is currently being expanded to include additional medical disciplines and nursing. The authors also acknowledge the in-kind support of Ovid Technologies Inc., which enabled the investigators to create links from PLUS to Ovid’s full-text journal articles and Evidence-Based Medicine Reviews. Funders and supporters had no role in the design, execution, analysis, or interpretation of the study or preparation of the manuscript.

The opinions stated here are solely those of the authors.

Trial registration: International eHealth Study Registry (IESR) IESN2005RH00013.

References

- 1. Antman EM, Lau J, Kupelnick B, Mosteller F, Chalmers TC. A comparison of results of meta-analyses of randomized control trials and recommendations of clinical experts. Treatments for myocardial infarction. JAMA 1992;268:240-248.

- 2. Smith R. What clinical information do doctors need? BMJ 1996;313:1062-1068.

- 3. Ely JW, Osheroff JA, Gorman PN, et al. A taxonomy of generic clinical questions: classification study. BMJ 2000;321:429-432.

- 4. Bennett NL, Casebeer LL, Kristofco RE, Strasser SM. Physicians’ internet information-seeking behaviors. J Contin Educ Health Prof 2004;24:31-38.

- 5. McColl A, Smith H, White P, Field J. General practitioners’ perceptions of the route to evidence based medicine: a questionnaire survey. BMJ 1998;316:361-365.

- 6. Young JM, Ward JE. Evidence-based medicine in general practice: beliefs and barriers among Australian GPs. J Eval Clin Pract 2001;7:201-210.

- 7. Ely JW, Osheroff JA, Ebell MH, et al. Obstacles to answering doctors’ questions about patient care with evidence: qualitative study. BMJ 2002;324:710-713.

- 8. McAlister FA, Graham I, Karr GW, Laupacis A. Evidence-based medicine and the practicing clinician. J Gen Intern Med 1999;14:236-242.

- 9. Wilson P, Glanville J, Watt I. Access to the online evidence base in general practice: a survey of the Northern and Yorkshire Region. Health Info Libr J 2003;20:172-178.

- 10. Putnam W, Twohig PL, Burge FI, Jackson LA, Cox JL. A qualitative study of evidence in primary care: what the practitioners are saying. CMAJ 2002;166:1525-1530.

- 11. Pluye P, Grad RB, Dunikowski LG, Stephenson R. Impact of clinical information-retrieval technology on physicians: a literature review of quantitative, qualitative, and mixed methods studies. Int J Med Inform 2005;74:745-768.

- 12. Holland J, Haynes RB. McMaster Premium Literature Service (PLUS): An evidence-based medicine information service delivered on the web. AMIA Annu Symp Proc 2005:340-344.

- 13. Haynes RB, Cotoi C, Holland J, et al. Second order peer review of the medical literature for clinical practitioners. JAMA 2006;295:1801-1808.

- 14. Northern Ontario Overview. Available at: http://www.mndm.gov.on.ca/mndm/nordev/redb/sector_profiles/northern_ontario_e.pdf. Accessed on:.

- 15. McKibbon KA, Wilczynski NL, Haynes RB. What do evidence-based secondary journals tell us about the publication of clinically important articles in primary healthcare journals? BMC Medicine 2004;2:33. Available at: http://www.biomedcentral.com/1741-7015/2/33. Accessed on:.

- 16. Haynes RB. Of studies, summaries, synopses, and systems: the “4S” evolution of services for finding current best evidence [editorial]. ACP Journal Club 2001;134:A11-A13; Evidence-Based Medicine 2001;6:36-38.

- 17. Fleiss J. Statistical Methods for Rates and Proportions. 2nd edition. New York: Wiley; 1981.

- 18. Langton KB, Horsman J, Hayward RS, Ross SA. A Clinical Informatics Network (CLINT) to support the practice of evidence-based health care. Proc AMIA Annu Fall Symp 1996:428-432.

- 19. Straus SE, Richardson WS, Glasziou P, Haynes RB. Evidence-Based Medicine: How to Practice and Teach EBM. 3rd edition. London: Churchill Livingstone; 2005. pp. 121-122.

- 20. Gosling AS, Westbrook JI, Coiera EW. Variation in the use of online clinical evidence: a qualitative analysis. Int J Med Inform 2003;69:1-16.