Abstract

Objective

The authors developed a picture-graphics display for pulmonary function that presents typical respiratory data used in perioperative and intensive care environments. The display uses color, shape, and emergent alerting to highlight abnormal pulmonary physiology. It serves as an adjunct to traditional operating room displays and monitors.

Design

To evaluate the prototype, nineteen clinician volunteers each managed four adverse respiratory events and one normal event using a high-resolution patient simulator which included the new displays (intervention subjects) and traditional displays (control subjects). Between-group comparisons included (i) time to diagnosis and treatment for each adverse respiratory event; (ii) the number of unnecessary treatments during the normal scenario; and (iii) self-reported workload estimates while managing study events.

Measurements

Two expert anesthesiologists reviewed transcripts of the videotaped sessions to determine time to diagnosis and time to treatment. Time values were then compared between groups using a Mann-Whitney U test. Estimated workload for both groups was assessed using the NASA-TLX and compared between groups using an ANOVA. P-values < 0.05 were considered significant.

Results

Clinician volunteers detected and treated obstructed endotracheal tubes and intrinsic PEEP problems faster with graphical rather than conventional displays (p < 0.05). During the normal scenario simulation, 3 clinicians using the graphical display and 5 clinicians using the conventional display gave unnecessary treatments. Clinician volunteers reported significantly lower subjective workloads using the graphical display for the obstructed endotracheal tube scenario (p < 0.001) and the intrinsic PEEP scenario (p < 0.03).

Conclusion

The authors conclude that the graphical pulmonary display may serve as a useful adjunct to traditional displays in identifying adverse respiratory events.

Introduction

The 1999 report of the Institute of Medicine, To Err is Human (published in 2000), emphasizes that human error is responsible for the majority of accidents and mishaps in the health care industry. 1 In response, researchers have built and evaluated systems to help clinicians improve decision making and to focus clinicians’ attention on important problems. For years, anesthesiologists have recognized the potential for harm due to human error. Cooper et al. concluded that human error causes 82% of preventable anesthesia-related patient injuries. 2 Further anesthesia research found significant opportunities for error reduction through improved attention to, and interpretation of, data available in the operating room. 3,4

Anesthesiologists must assimilate monitored data to assess the patient’s true status and to discover and treat problems before injury occurs. Onset of a critical event requires anesthesiologists to develop a differential diagnosis quickly and accurately. Graphical displays that effectively integrate and present information can increase situational awareness and reduce the time to detection and treatment. 5,6

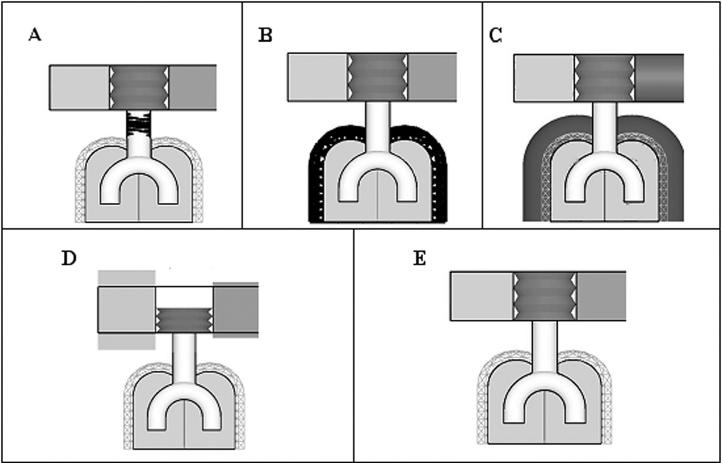

We used an iterative development process to create a graphical pulmonary display that presents critical information about the respiratory system (Figure 1). 7 The display illustrates physiological and anatomical respiratory parameters for intubated, mechanically ventilated patients.

Figure 1.

Examples of the pulmonary display: the pulmonary display anatomically represents the bellows, airway, lungs, inspired gas, and expired gas. The upper left box (green) represents inhaled oxygen (FIO2). The middle accordion box (blue) is similar to the bellows of the ventilator and moves along the vertical axis representing tidal volume. The upper right box (gray) represents end tidal carbon dioxide (ETCO2). (A) The obstructed endotracheal tube event is depicted with upper airway “black restrictive fingers.” (B) The endobronchial intubation event is shown with a thickened compliance cage surrounding the lung icon. (C) Intrinsic PEEP is shown with an overinflated lung icon extending past the normal boundary of the lung icon and the compliance cage. (D) Hypoventilation is shown with a short bellows icon representing low tidal volume. (E) The normal event is shown with all parameters set within normal limits.

In their pioneering work on pulmonary graphical displays, Cole and Stewart used a series of rectangles representing a patient’s tidal volume and respiratory rate for specific snapshots in time. 8,9 Using the intuitive pulmonary displays, their study subjects could “see” the data in the context of ventilator weaning. To further advance the concept of intuitive displays, display shapes and colors in the current study represented bellows, airway, lungs, inspired gas, and expired gas parameters. Emergency alerts emphasized abnormal pulmonary variables by changing the shape and shade of particular elements of the display. A normal reference frame surrounded each element. A prior study by the authors evaluated the intuitiveness of the displays by asking untrained “naïve” anesthesiologists to identify anatomical representations and to interpret respiratory parameter values. Using the graphical pulmonary display, they identified the anatomy, respiratory parameter values, and sources of adverse respiratory events with 98%, 91%, and 79% accuracy, respectively. 7

We also observed clinicians’ use and acceptance of the pulmonary graphical displays in the intensive care unit (ICU). Physicians, respiratory therapists, and nurses could examine pulmonary graphical displays on entering patient rooms. Clinicians’ responses to survey questionnaires indicated that they perceived the pulmonary graphical displays to be useful, desirable additions to current ICU monitors, and that the displays accurately represented respiratory variables. 10

This study used a high-resolution graphical patient simulator to evaluate clinician volunteers’ ability to diagnose and treat adverse respiratory events using the pulmonary graphical displays and standard, conventional monitors. We hypothesized that clinician volunteers using the graphical pulmonary display in addition to conventional monitors would: (i) require less time to make accurate diagnoses and to initiate proper treatments for adverse respiratory events; (ii) administer unnecessary treatments to normal patients less often; (iii) identify each scenario more accurately using the pulmonary display; and (iv) report decreased subjective workloads while managing simulated respiratory events.

Methods

Nineteen clinician volunteers managed four adverse respiratory events and one normal event on a high-resolution human simulator (METI, Sarasota, FL). The simulated operating room consisted of a physiologic monitor (AS/3 Datex, Helsinki, Finland), an anesthesia machine (Narkomed Anesthesia Machine - Drager, Telford, PA), and a cart containing airway management equipment. Suctioning equipment was available next to the airway management cart. Actors played the roles of a surgeon, circulator nurse, and anesthesia resident. The physiologic monitor displayed the electrocardiogram, arterial blood pressure, pulse oximeter, and capnogram waveforms. Digital values were displayed for heart rate, blood pressure, oxygen saturation, end-tidal carbon dioxide, and fraction of inspired oxygen. A pulse oximeter tone was provided. All standard monitor alarms were enabled and initially set at factory default limits for consistency. A 17-inch monitor placed beside the physiological monitor was used to present the graphical pulmonary display.

The graphical pulmonary display changed shape and color according to measures of pulmonary function. The graphical pulmonary display received data from a respiratory monitor (CO2SMO, NOVAMETRIX, Hartford, CT) and a patient simulator (HIDEP protocol, METI) that provided information to drive the pulmonary display. The respiratory monitor measured airway pressure, airflow, respiratory rate, end-tidal carbon dioxide, and tidal volume. Airway resistance and total lung compliance were then calculated from measurements of airway pressure and airflow. The computers managing the METI simulator events and the CO2SMO respiratory monitor were hidden from view of the clinician volunteers.

Clinician volunteers (9 faculty, 4 second year residents, and 6 third year residents) from the University of Utah School of Medicine and the University of Arizona School of Medicine gave their consent and participated in the study (Table 1). The participants received compensation. The study protocol was reviewed and approved by both the University of Utah Hospital IRB and the University of Arizona Hospital IRB committees.

Table 1. Experience Level per Study Group: The Clinician Volunteers Were Randomly Assigned to a Study Group. The Clinician Volunteers Comprised 3 Levels of Experience: Faculty, Resident CA2, and Resident CA3

| Study Group | Control | |
|---|---|---|
| Faculty | 6 | 3 |
| CA2 | 2 | 2 |
| CA3 | 2 | 4 |

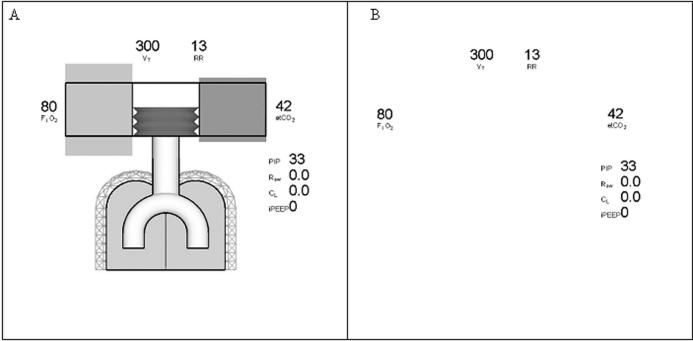

The 19 clinician volunteers were assigned alternately to one of the two study conditions: intervention (pulmonary display present) or control display. Both groups had access to standard displays. The clinician volunteers were asked to step into the simulated operating room (OR) and assume care of a simulated patient midway through a surgical procedure. The volunteers were instructed to play the role of an attending anesthesiologist called into the OR because of an unspecified problem. Clinician volunteers in the intervention group viewed the graphical pulmonary display on the 17-inch monitor (Figure 2A), standard physiologic displays, and the anesthesia machine. Clinician volunteers in the control group viewed the control version of the graphical pulmonary display, which showed only numeric values (Figure 2B), along with standard physiologic displays and the anesthesia machine. Prior to participation, all subjects in both groups received 15 minutes of training on the use of the anesthesia simulator, anesthesia equipment, and the graphical pulmonary display. Pilot studies indicated that only 15 minutes of training, primarily focusing on the pulmonary display, was necessary. All volunteers were previously familiar with the anesthesia simulator and typical anesthesia displays and equipment.

Figure 2.

Illustrations of experimental and control display: (A) The experimental display contains both the digital and graphical representation of: tidal volume (TV), fraction of inspired oxygen (FiO2), end-tidal carbon dioxide (ETCO2), airway resistance, and lung compliance. (B) The control display contains only digital information. The TV, FiO2 and ETCO2 were measured by the respiratory monitor. Airway resistance and lung compliance were calculated from airflow and airway pressure, as measured by the respiratory monitor.

Each subject was asked to manage five simulated respiratory events, each of which developed over 5 minutes: obstructed endotracheal tube, endobronchial intubation, intrinsic positive end-expiratory pressure (PEEP), hypoventilation, and a normal event (Table 2). The adverse respiratory events were chosen so that one or more elements of the graphical pulmonary display would change, allowing the display to be adequately evaluated. The study events, although common in the OR, were not selected to reflect their frequency of occurrence there. The order of presentation of the five respiratory events was randomized for each subject. Prior to starting each of the five scenarios, clinician volunteers reviewed the patient history and anesthetic record. An actor, playing the part of an anesthesia resident, encouraged the volunteers to verbalize their thought processes. The volunteers were videotaped.

Table 2. Description of Pulmonary Scenarios: Each of the 5 Scenarios Used during the Pulmonary Graphical Display Study Is Described Below. The METI Simulator Was Programmed, the Anesthesia Machines Were Adjusted, and/or the Endotracheal Tube Was Adjusted to Create Each of the Scenarios

| Scenario | Description |
|---|---|
| Obstructed endotracheal tube | Increased upper airway resistance from 12 cmH2O/l/s to 1000 cmH2O/l/s over 5 minutes |
| | An airway obstruction element that progressed through low (greater than 3 cmH2O/l/s), medium (greater than 12.5 cmH2O/l/s), and high (greater than 25 cmH2O/l/s) airway resistance over 5 minutes |
| Endobronchial intubation | The distal end of the endotracheal tube was inserted to 28 cm measured at the teeth to create a right mainstem intubation |
| | Verification of endotracheal tube placement in the right mainstem was done prior to starting the scenario by observing decreased left sided chest rise and decreased breath sounds over the left lung field |
| | Breath sounds were diminished over the left lung field, peak inspiratory pressures were elevated, and airway resistance was increased when the clinician volunteers attempted manual ventilation |
| | The graphical pulmonary display showed a decrease in lung compliance by the appearance of a thickened black cage surrounding the lung objects |
| Intrinsic PEEP | The inspiratory to expiratory (I:E) ratio on the ventilator was set to 1:1 |
| | The anesthesia monitors correspondingly showed shorter expiratory times on the pressure and volume waveforms |
| | The graphical pulmonary display showed an expanded lung that represented the increased residual volume from breath stacking |
| Hypoventilation | The ventilator bellows was set to deliver 500 ml tidal volume |
| | The positive inspired pressure limit on the ventilator was lowered until only a 250 ml tidal volume was delivered |
| | The graphical pulmonary display showed a low tidal volume |
| Normal | Selected “normal adult” in the METI simulator |
| | The anesthesia monitors showed normal values |
| | The graphical pulmonary display showed all pulmonary variables within normal range |
| | Upon entering the simulation room, the volunteers were told by the resident that the patient was wheezing |
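The three airway resistance thresholds listed for the obstructed endotracheal tube scenario can be expressed as a simple classification. The following is a minimal sketch only: the threshold values come from Table 2, but the function name, the level labels, and the use of strict "greater than" comparisons are illustrative assumptions and are not taken from the display software.

```python
# Thresholds in cmH2O/l/s, as listed in the Table 2 scenario description.
LOW_THRESHOLD = 3.0
MEDIUM_THRESHOLD = 12.5
HIGH_THRESHOLD = 25.0


def obstruction_level(resistance: float) -> str:
    """Map an airway resistance value to an obstruction level.

    Hypothetical helper: the level names and the strict comparisons
    are assumptions made for illustration.
    """
    if resistance > HIGH_THRESHOLD:
        return "high"
    if resistance > MEDIUM_THRESHOLD:
        return "medium"
    if resistance > LOW_THRESHOLD:
        return "low"
    return "none"


# The scenario raised resistance from 12 to 1000 cmH2O/l/s.
for value in (12.0, 13.0, 1000.0):
    print(value, obstruction_level(value))
```

Under these assumptions the starting value of 12 cmH2O/l/s already falls in the "low" band, and the scenario passes through "medium" and "high" as resistance rises.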

Upon completion of each scenario, volunteers were asked to respond to a National Aeronautics and Space Administration Task Load Index (NASA-TLX) questionnaire to assess perceived workload and performance (see Appendix A). 11

Data Analysis

The videotapes of the subjects were transcribed. Two expert anesthesiologists (board certified in anesthesiology and blinded to the volunteer’s name and test condition) reviewed the transcripts and recorded the time from the start of the simulation until the volunteer stated the correct diagnosis and administered the correct treatment (Table 3). A third expert anesthesiologist was asked to review discrepancies between the reviewing anesthesiologists. An event time of 5 minutes was assigned when a clinician volunteer failed to correctly diagnose or failed to correctly treat a scenario condition. Time to diagnosis and time to treatment were compared between groups using a Mann-Whitney U test for all scenarios. P-values less than 0.05 were considered significant.
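The scoring rule and the rank-based comparison described above can be sketched as follows. This is an illustration under stated assumptions, not the analysis code used in the study: the function names are hypothetical, the example times are invented and are NOT study data, and treating any time beyond 300 seconds as a failure is an assumption. Only the U statistics are computed; a p-value would come from statistical tables or a statistics package.

```python
from typing import Optional, Sequence, Tuple

TIME_LIMIT_S = 300.0  # 5-minute event time assigned on failure


def event_time(observed_s: Optional[float]) -> float:
    """Return the scored time; None means the volunteer never succeeded."""
    if observed_s is None or observed_s > TIME_LIMIT_S:
        return TIME_LIMIT_S
    return float(observed_s)


def mann_whitney_u(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Return (U_a, U_b); tied pairs count one half toward each group."""
    u_a = 0.0
    for x in a:
        for y in b:
            if x > y:
                u_a += 1.0
            elif x == y:
                u_a += 0.5
    u_b = len(a) * len(b) - u_a
    return u_a, u_b


# Hypothetical times in seconds (NOT study data); None marks a failure.
intervention = [event_time(t) for t in (80, 120, None, 95)]
control = [event_time(t) for t in (210, None, 250, None)]
u_intervention, u_control = mann_whitney_u(intervention, control)
print(u_intervention, u_control)  # prints 3.0 13.0
```

Because failures are all assigned the same 5-minute value, they produce ties at the upper end of the ranking, which is one reason a rank-based test suits these data better than a comparison of means.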

Table 3. Correct Diagnosis and Treatment for the Simulated Events: A Correct Diagnostic Time Was Noted When Volunteers Stated the Phrases Below during Each of the Respective Events within 5 Minutes. A Correct Treatment Time Was Noted when Clinician Volunteers Performed the Treatments Below Corresponding to Each of the Events. An Incorrect Treatment Time Was Indicated during the Normal Scenario If a Volunteer Performed a Maneuver as Described

| Event | Correct Diagnosis | Correct Treatment |
|---|---|---|
| Obstructed endotracheal tube | | suctioned endotracheal tube |
| Endobronchial intubation | | pulled back on the endotracheal tube to the correct depth allowing proper ventilation |
| Intrinsic PEEP | | changed I:E ratio |
| Hypoventilation | | changed the ventilator’s pressure limit knob to increase the delivered tidal volume |
| Normal | | no treatment required |
| | “I don’t see anything wrong” | Incorrect treatment: bronchodilators, adjusted the ventilator settings, altered the position of the endotracheal tube, or suctioned the endotracheal tube |

For the normal patient scenario, the number of treatments administered by each group was compared using Fisher’s exact probability test. For the abnormal patient scenarios, the number of correctly diagnosed conditions within 5 minutes was compared between groups using the same test. P-values less than 0.05 were considered significant.
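A two-sided Fisher’s exact test on a 2×2 table can be written in a few lines. The sketch below is illustrative and makes its assumptions explicit: the function name is hypothetical, the counts of 3 and 5 unnecessary treatments are those reported for the normal scenario, and the group sizes (10 and 9, in either order) are an assumption, because the text gives 19 volunteers in total but the per-group totals must be inferred from Table 1.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact p-value for the table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of all tables with the same
    margins that are no more likely than the observed table.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    n = row1 + row2

    def weight(k: int) -> int:
        # Unnormalised probability of k events in the first row.
        return comb(row1, k) * comb(row2, col1 - k)

    k_min = max(0, col1 - row2)
    k_max = min(row1, col1)
    observed = weight(a)
    extreme = sum(
        weight(k) for k in range(k_min, k_max + 1) if weight(k) <= observed
    )
    return extreme / comb(n, col1)


# Rows: intervention, control. Columns: treated, not treated.
# ASSUMED group sizes: 10 and 9, tried in both orders.
p_if_10_vs_9 = fisher_exact_two_sided(3, 7, 5, 4)
p_if_9_vs_10 = fisher_exact_two_sided(3, 6, 5, 5)
print(round(p_if_10_vs_9, 3), round(p_if_9_vs_10, 3))  # prints 0.37 0.65
```

Under either assumed split the p-value is well above 0.05, which is consistent with the non-significant difference in unnecessary treatments reported in the Discussion.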

Estimated workload for both groups was assessed using the NASA-TLX. 11 The NASA-TLX categorizes workload along six dimensions: mental demand, physical demand, temporal demand, perceived performance, effort, and frustration level. The workload estimates were compared between groups for each individual scenario condition using an ANOVA. P-values < 0.05 were considered significant.
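The workload comparison can be illustrated with a short sketch. Several points here are assumptions rather than details from the study: the text does not state how the six ratings were combined or whether the performance item was reverse-scored, so the unweighted mean (often called the raw TLX) is used purely for illustration; the function names are hypothetical; and the example scores are invented, NOT study data. The second function computes the one-way ANOVA F statistic only, without a p-value.

```python
from statistics import mean
from typing import Sequence


def raw_tlx(ratings: Sequence[float]) -> float:
    """Unweighted mean of the six subscale ratings (an assumed aggregation)."""
    if len(ratings) != 6:
        raise ValueError("expected six subscale ratings")
    return mean(ratings)


def one_way_anova_f(groups: Sequence[Sequence[float]]) -> float:
    """F statistic: between-group mean square over within-group mean square."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    k = len(groups)
    n = len(all_values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical per-volunteer workload scores (NOT study data).
intervention_scores = [2.0, 3.0, 4.0]
control_scores = [5.0, 6.0, 7.0]
print(one_way_anova_f([intervention_scores, control_scores]))  # prints 13.5
```

With only two groups, the F statistic from a one-way ANOVA equals the square of the pooled-variance t statistic, so the comparison is equivalent to a two-sample t test.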

Results

The two anesthesiologists who independently reviewed the study transcripts agreed on all but 34 instances (9%) in their interpretation. For each such occurrence, the opinion of a third anesthesiologist settled the discrepancy.

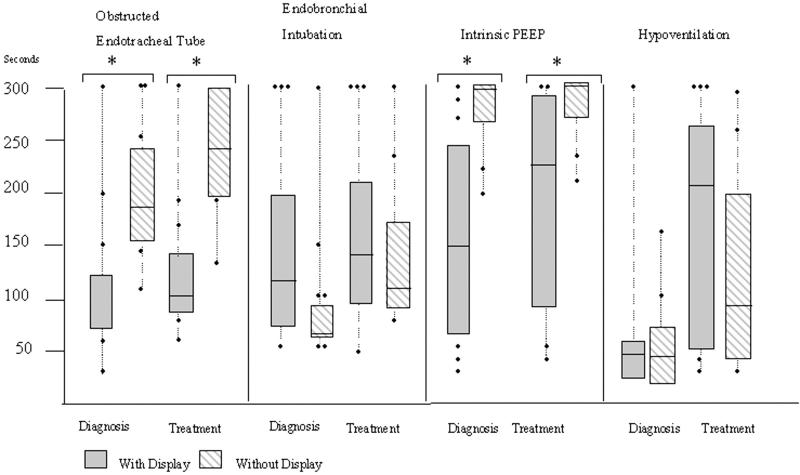

Figure 3 presents the average time to a correct diagnosis and correct treatment for each scenario condition, for each group. Subjects using the study display required less time to diagnose (160 ± 109 seconds vs. 277 ± 38 seconds, p < 0.05) and treat (189 ± 111 seconds vs. 277 ± 38 seconds, p < 0.05) intrinsic PEEP problems. The measured differences between groups were 117 and 88 seconds for diagnosis and treatment, respectively. Subjects using the intervention display required less time to diagnose (87 ± 49 seconds vs. 201 ± 68 seconds) and treat (111 ± 43 seconds vs. 238 ± 60 seconds, p < 0.05) an obstructed endotracheal tube. The measured differences between groups were 114 and 127 seconds for diagnosis and treatment, respectively. No significant differences were detected between groups in the time required to diagnose and treat endobronchial intubation or hypoventilation. A comparison of performances for clinicians at three experience levels found no significant differences (Table 4). The intervention (pulmonary graphical display) and control display groups administered unnecessary treatments to normal scenario patients 30% vs. 50% of the time, respectively.

Figure 3.

Diagnosis and treatment time: the diagnosis and treatment times per scenario for each condition are shown above. The 95% confidence intervals define the box height with a line representing the mean. ∗Significant differences between display conditions (p < 0.05).

Table 4. Statistical Significance (P Values) of the Differences in Times to Diagnosis and Times to Treat Pulmonary Events between the Control Group and the Study Group, by Experience Level: Comparing Times to Diagnosis and Times to Treat by Experience Level Did Not Prove to Be Significant as Shown by the Calculated p-values in the Table. The Mann-Whitney U Test Was Used to Compare Experience Level for All Scenarios.

| | Faculty Diag | Faculty Treat | CA2 Diag | CA2 Treat | CA3 Diag | CA3 Treat | CA2 & CA3 Diag | CA2 & CA3 Treat |
|---|---|---|---|---|---|---|---|---|
| Obstructed endotracheal tube | 0.0476 | 0.0238 | 0.6667 | 0.3333 | 0.8000 | 0.8000 | 0.4762 | 0.3524 |
| Endobronchial intubation | 0.9048 | 0.9048 | 0.3333 | 0.3333 | 0.8000 | 0.5333 | 0.1143 | 0.0667 |
| Intrinsic PEEP | 0.0952 | 0.0952 | 0.6667 | 0.6667 | 0.8000 | 0.8000 | 0.2571 | 0.6095 |
| Hypoventilation | 0.7143 | 0.7143 | 0.3333 | 0.3333 | 0.8000 | 0.5333 | 0.2571 | 0.1143 |

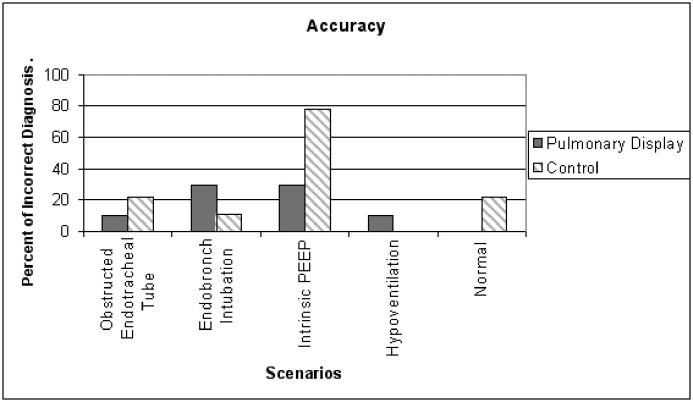

Figure 4 presents the rate at which subjects failed to diagnose events correctly within 5 minutes. Intervention clinicians using the graphical display correctly diagnosed scenario events with 86% accuracy compared to 78% accuracy for the control group. This overall difference was not significant. More subjects in the control group failed to diagnose intrinsic PEEP problems correctly (9 vs. 3, p < 0.05).

Figure 4.

Accuracy of diagnosis: data are presented as the percentage of clinician volunteers who provided an incorrect diagnostic answer, out of the total number of clinician volunteers.

Analysis of variance revealed that clinician volunteers using the graphical pulmonary display reported lower perceived workloads during the obstructed endotracheal tube (p < 0.001) and the intrinsic PEEP (p < 0.03) scenarios.

Discussion

The present study found that clinician volunteers using the intervention graphical pulmonary display diagnosed and treated selected pulmonary events more quickly and more accurately. Intervention subjects also reported a perceived decrease in workload.

We believe that selected elements of the pulmonary graphical display helped clinician volunteers make quicker diagnoses, and that the critical display elements included the airway restriction element and the hyperinflated lung image. The airway restriction element contained within the trachea (Figure 1A) suggested an increased airway resistance, and allowed subjects using the intervention display to diagnose and treat an obstructed endotracheal tube more quickly than controls. Similarly, the hyperinflated lung image indicated a problem with intrinsic PEEP (air trapping) that allowed intervention subjects to diagnose and treat this problem more quickly than controls (Figure 1C). Study results suggest that these features conveyed their intended message and were intuitive to the clinician volunteers.

The other alerting features of the graphical pulmonary display (thickened pleural wall indicating poor lung compliance, and shrinking bellows indicating low tidal volume) did not seem to assist intervention subjects in making correct diagnoses for endobronchial intubation and hypoventilation.

Clinician volunteers may not have associated a fall in lung compliance with endobronchial intubation. During this scenario, the graphical pulmonary display used a thickened black cage surrounding the lung object to suggest a decrease in lung and chest wall compliance. Perhaps a display that showed only the right lung ventilated would have been more useful in making this diagnosis. However, with conventional monitoring, discriminating between decreased lung compliance and endobronchial intubation may require physical examination that includes auscultation of the lung fields and observation of chest wall movement. In addition, several types of underlying pathophysiology can decrease lung compliance. The difficulty of excluding such possibilities may have further confused the clinician volunteers. However, study clinicians did not provide statements regarding alternative diagnoses they considered to explain decreased lung compliance.

The intervention pulmonary display indicated hypoventilation through decreased bellows height. In the hypoventilation scenario, direct observation of the ventilator bellows (as opposed to the simulated monitors) would indicate presence of this condition. In effect, the same information was available to both study groups.

The graphical pulmonary display was designed to appear balanced when all the pulmonary variables were within their normal range. A schematic with boundary lines for each component (i.e., bellows, lung volumes, etc.) within the pulmonary graphical display was used in the background to assist clinician volunteers to recognize abnormal values. Shapes representative of pulmonary variables were allowed to dynamically change shape during each scenario. When the shape exceeded or was much smaller than the schematic background, this indicated that these pulmonary variables were abnormal. We hypothesized that clinician volunteers would be less likely to implement unnecessary treatments when using a display that presented normal lung physiology. Although the intervention group had fewer unnecessary treatments, the difference with the “usual monitoring” group was not significant. This may be reflective of the simulation environment where clinician volunteers are typically in a hyper-vigilant state. They may have been pre-conditioned to make false-positive diagnoses. During the normal lung scenario, study investigators marked every treatment given as erroneous, including benign treatments such as suctioning the airway.

Diagnostic accuracy did not significantly improve using the intervention graphical pulmonary display, except for the scenario involving intrinsic PEEP. In the other scenarios, the percentage of inaccurate diagnoses was 25% or less for both groups. By contrast, the number of incorrect diagnoses in the intrinsic PEEP scenario was much higher in the control group than in the experimental group. These findings indicate the potential benefit of the pulmonary graphical display in assisting clinicians to make difficult diagnoses and execute proper treatments for patients who suffer from specific adverse pulmonary conditions.

Prior work has established that complex and unintuitive displays may add cognitive workload to the user. 5,12,13 To make the display intuitive, and with a goal of reducing cognitive workloads, the study consulted human factors and cognitive psychology specialists to help design the pulmonary graphical display. 13 Using perceived workload scores from the NASA-TLX instrument, the study found that intervention subjects reported decreased estimated workloads for two of the five scenarios. Of interest, in these same two scenarios, clinician volunteers in the display group made quicker diagnoses and treatments. When the graphical pulmonary display impacts the speed required to make a diagnosis and implement a treatment, it also appears to improve the perceived workload.

The current study evaluated the utility of a specifically designed graphical pulmonary display (rather than graphical displays in general) when compared to traditional text-based (and numerical) displays. We recognize that the control display may not have been ideal (i.e., the graphical pulmonary display minus the graphics). Clinicians in the study group may have had an advantage because the graphical pulmonary display changed dynamically and in doing so, highlighted abnormal parameters. The numeric display used by the control group did not highlight changes. This disparity was justified to test the utility of the graphical display compared to a commercially available monitor commonly used in OR settings.

To evaluate the graphical pulmonary display, the study chose a simulated environment rather than observing respiratory events in the OR. Adverse events in the OR are relatively uncommon. As with any simulation-based investigation, clinician volunteers may have reacted to the simulated events differently from actual clinical events. Preconceived notions of the simulator’s inadequacy in depicting these adverse respiratory events may have affected clinician volunteers’ performance in the simulations.

In summary, the present study evaluated through simulation the use of a graphical pulmonary display to detect adverse pulmonary events, when the display was used as an adjunct to conventional monitors. We hypothesized that the graphical pulmonary display would allow clinician volunteers to make more timely diagnoses and treatments, would improve diagnostic accuracy, would decrease the number of unnecessary treatments, and would decrease perceived workloads. Study results in part confirmed these hypotheses. The graphical pulmonary display used several alerting features to emphasize abnormal pulmonary disease states. Two of the features, a restricted airway element (illustrating airway obstruction) and a hyperinflated lung, improved diagnosis and led to more timely recognition and treatment of selected adverse respiratory events. In contrast, the graphical pulmonary display did not improve clinician volunteers’ diagnostic accuracy except in one scenario (the intrinsic PEEP scenario) and did not affect the number of unnecessary treatments under normal pulmonary conditions. The display did decrease the perceived workload in the same two scenarios in which it led to more timely diagnosis and treatment. We conclude that the graphical pulmonary display may be a useful adjunct to conventional monitors when used to manage selected adverse respiratory events in intubated, mechanically ventilated patients. Further work is warranted to explore the potential benefit of the graphical pulmonary display in actual clinical settings.

Appendix A

NASA-TLX Questionnaire

Please mark a vertical line to indicate your workload on each of the six scales that follow.

1) Mental Demand:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| Lowest | | | | | | | | | Highest |

How much mental and perceptual activity was required (e.g., thinking, deciding, calculating, remembering, looking, searching, etc.)? Was the task easy or demanding, simple or complex, forgiving or exacting?

2) Physical Demand:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| Lowest | | | | | | | | | Highest |

How much physical activity was required (e.g., pushing, pulling, turning, controlling, activating, etc.)? Was the task easy or demanding, slow or brisk, slack or strenuous, restful or laborious?

3) Temporal Demand:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| Lowest | | | | | | | | | Highest |

How much time pressure did you feel due to the rate or pace at which the tasks or task elements occurred? Was the pace slow and leisurely or rapid and frantic?

4) Performance:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| Lowest | | | | | | | | | Highest |

How successful do you think you were in accomplishing the goals of the tasks? How satisfied were you with your performance in accomplishing these goals?

5) Effort:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| Lowest | | | | | | | | | Highest |

How hard did you have to work (mentally and physically) to accomplish your level of performance?

6) Frustration Level:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| Lowest | | | | | | | | | Highest |

How insecure, discouraged, irritated, stressed, and annoyed versus secure, gratified, content, relaxed, and complacent did you feel during the task?

Footnotes

This research is funded by the National Institute of Biomedical Imaging and Bioengineering of the National Institutes of Health and the NASA Space Grant program (NIH: 5 RO1 HL 064590-03, NASA: NGT540101).

The authors would like to thank Amy Aleman, Diane Tyler, Jim Agutter, and Rachana Viseria for their help and support and Dr. Robert Loeb and Scott Morgan at the University of Arizona for welcoming our study and allowing us to use their simulator.

References

- 1. Kohn L, Corrigan J, Donaldson M. To err is human: building a safer health system. Washington, D.C: National Academy Press; 2000.

- 2. Cooper JB, Newbower RS, Long CD, McPeek B. Preventable anesthesia mishaps: a study of human factors 1978. Qual Saf Health Care 2002;11(3):277-282.

- 3. Jungk A, Thull B, Hoeft A, Rau G. Evaluation of two new ecological interface approaches for the anesthesia workplace. J Clin Monit Comput 2000;16(4):243-258.

- 4. Blike GT, Surgenor SD, Whalen K. A graphical object display improves anesthesiologists’ performance on a simulated diagnostic task. J Clin Monit Comput 1999;15(1):37-44.

- 5. Allnutt MF. Human factors in accidents. Br J Anaesth 1987;59(7):856-864.

- 6. Kerr JH. Symposium on anaesthetic equipment. Warning devices. Br J Anaesth 1985;57(7):696-708.

- 7. Wachter SB, Agutter J, Syroid N, Drews F, Weinger MB, Westenskow D. The employment of an iterative design process to develop a pulmonary graphical display. J Am Med Inform Assoc 2003;10(4):363-372.

- 8. Cole WG, Stewart JG. Metaphor graphics to support integrated decision making with respiratory data. Int J Clin Monit Comput 1993;10(2):91-100.

- 9. Cole WG, Stewart JG. Human performance evaluation of a metaphor graphic display for respiratory data. Methods Inf Med 1994;33(4):390-396.

- 10. Wachter SB, Markewitz B, Rose R, Westenskow D. Evaluation of a pulmonary graphical display in the medical intensive care unit: an observational study. J Biomed Inform 2005;38(3):239-243.

- 11. Hart SG, Staveland LE. Development of NASA-TLX: results of empirical and theoretical research. In: Hancock P, Meshkati N, editors. Human Mental Workload. Amsterdam, North-Holland: Elsevier; 1988. pp. 139-183.

- 12. Rasmussen J, Vincente KJ. Evaluation of a Rankine cycle display for nuclear power plant monitoring and diagnosis. Human Factors 1996;38:506-521.

- 13. Bennet K, Walters B. Configural display design techniques considered at multiple levels of evaluation. Human Factors 2001;43(3):415-434.