Summary

Episodic memories are characterized by their contextual richness, yet little is known about how the various features comprising an episode are brought together in memory. Here we employed fMRI and a multidimensional source memory procedure to investigate processes supporting the mnemonic binding of item and contextual information. Volunteers were scanned while encoding items for which the contextual features (color and location) varied independently, allowing activity elicited at study to be segregated according to whether both, one, or neither feature was successfully retrieved on a later memory test. Activity uniquely associated with successful encoding of both features was identified in the intra-parietal sulcus, a region strongly implicated in the support of attentionally-mediated perceptual binding. The findings suggest that the encoding of disparate features of an episode into a common memory representation requires that the features are conjoined in a common perceptual representation when the episode is initially experienced.

Keywords: memory binding, episodic memory, encoding, multidimensional source memory, contextual encoding, fMRI

Introduction

Episodic memories consist of a rich array of inter-related elements, but the cognitive and neural operations that bind the different elements of an episode into memory remain unclear. In the laboratory, episodic memory is often operationalized as successful retrieval of information about the context in which a recognized item was presented in a preceding study phase. In source memory tests, for example, study items are presented in one of a small range of different study contexts. Accurate source judgments – the assignment of a test item to the correct study context – are taken as evidence that the study item and the relevant contextual features were bound into a coherent episodic memory (Johnson and Chalfonte, 1994).

Proposals about the neural bases of memory binding have focused mainly on interactions between the hippocampus and cortical regions involved in the online processing of a stimulus event (Alvarez and Squire, 1994; Eichenbaum, 1992; Marr, 1971; Norman and O’Reilly, 2003; Rolls, 2000). According to such proposals, an event is represented in the hippocampus in terms of the pattern of cortical activity engendered as it was experienced. This allows components of the event that were processed and represented in different cortical regions to be bound by the hippocampus into a common memory representation. Evidence supporting a hippocampal role in memory binding comes from non-human and human lesion studies, as well as from functional neuroimaging experiments (see Eichenbaum, 2004, for review). Of particular relevance in this latter regard are findings from fMRI studies demonstrating that encoding of items later given accurate source memory judgments is associated with enhancement of activity in the hippocampus and adjacent medial temporal cortex (Davachi et al., 2003; Ranganath et al., 2003; Kensinger and Schacter, 2006), as well as in cortical regions that support the online demands of the encoding task (Cansino et al., 2002; Davachi et al., 2003; Ranganath et al., 2003; Sommer et al., 2005, 2006; Kensinger and Schacter, 2006; Reynolds et al., 2004).

Whereas the importance of the hippocampal region in episodic memory formation is not in doubt, the manner in which it interacts with the cortex remains to be fully elucidated. Two extreme scenarios can be imagined. On the one hand, the role of the cortex might be limited to supplying the hippocampal region with representations of the disparate features of an episode that must be bound into a coherent representation. On the other hand, binding might occur at the cortical level, such that the input to the hippocampus comes in the form of what might be termed an ‘episodic packet’. The first scenario implies that the cortical correlates of multi-featural memories consist of an additive contribution from the regions associated with the encoding of each individual feature. By contrast, the second scenario raises the possibility that multi-featural encoding requires engagement of regions additional to those supporting the encoding of each feature individually.

In considering the circumstances under which these different scenarios might apply, the manner in which study context is manipulated seems likely to be important. Contextual variables can be classified in a number of ways (Baddeley, 1982). One distinction is between ‘independent’ and ‘interactive’ context. Independent context refers to elements of an episode, such as the identity of the testing room or the background color of the display monitor, that might differ between study episodes, but which do not affect how a study item is processed. Interactive context, by contrast, refers to variables that influence the processing of the study item. Examples of such variables include explicit task cues (e.g. instructions to perform semantic vs. non-semantic judgments), or co-presented items that implicitly bias how the critical item is processed (e.g. traffic-JAM vs. strawberry-JAM). A related distinction is between ‘extrinsic’ and ‘intrinsic’ context. These terms distinguish contextual features for which processing is optional (e.g. identity of the test room) from features that are inherent to the study item and must be processed in service of the study task. Examples of intrinsic variables include the color, font or spatial location of a study word. To date, the foregoing distinctions have been somewhat neglected in fMRI studies of source encoding; different investigators have employed interactive (Davachi et al., 2003; Kensinger and Schacter, 2006; Reynolds et al., 2004) or intrinsic (Cansino et al., 2002; Sommer et al., 2005, 2006; Prince et al., 2004) variables, as well as combinations of the two types of variable (Ranganath et al., 2003). In keeping with several behavioral studies investigating memory for different contextual features (e.g. Chalfonte and Johnson, 1996; Meiser and Broder, 2002; Starns and Hicks, 2005; Marsh et al., 2004; Kohler et al., 2001), in the present experiment we varied study context across trials by manipulating two non-interactive, intrinsic variables, namely the color and location of a set of study words.

It has been argued that hippocampally-mediated encoding of an episode is restricted to the components of the episode that are incorporated into its conscious online representation (Moscovitch, 1992). From this perspective, the fact that a particular feature of a study item (its color, say) is represented by a specific pattern of cortical activity does not guarantee that the feature will be incorporated into the memory of the study episode; for the feature to be bound into an episodic memory representation, it must first have been bound into a consciously apprehended, online perceptual representation of the episode (see also Hanna and Remington, 1996). Neuropsychological and neuroimaging evidence suggests that perceptual binding of intrinsic features of an item, such as its color and location, depends upon specific cortical regions, notably parietal cortex within the intra-parietal sulcus (e.g. Cusack, 2005; Friedman-Hill et al., 1995; Humphreys, 1998; Shafritz et al., 2002; for reviews, see Robertson, 2003, and Treisman, 1998). If episodic encoding is indeed restricted to features that are incorporated into the online representation of the episode (see above), these regions should be important not only for perceptual binding but for memory binding as well. This proposal implies that at least some of the binding operations necessary to form a multi-featural memory occur outside the hippocampus, and depend on how attention is allocated during study. This can be contrasted with the alternative proposal that different intrinsic contextual features are encoded and represented separately in memory (Light and Berger, 1976; Marsh et al., 2004; Starns and Hicks, 2005).

The present study attempts to differentiate between the foregoing proposals. Using the ‘subsequent memory procedure’, numerous event-related fMRI studies have demonstrated that, relative to forgotten items, study items that are later remembered elicit greater activity in a variety of cortical regions (e.g. Brewer et al., 1998; Wagner et al., 1998; for review see Paller and Wagner, 2002). The loci of these cortical ‘subsequent memory effects’ vary systematically according to the cognitive operations engaged by the study task (Davachi et al., 2001; Mitchell et al., 2004; Otten and Rugg, 2001; Otten et al., 2002), suggesting that the information about a study item that is encoded into memory depends on the attributes that receive emphasis in the course of online processing (for review, see Rugg et al., 2002). Thus, if the mnemonic binding of the different contextual features of an episode depends upon their first being bound into a common perceptual representation, successful multi-featural encoding should be associated with subsequent memory effects in regions that support perceptual binding. To test this prediction it is necessary to employ a procedure that allows memory for different contextual features of a study episode to be independently assessed. To our knowledge, all prior fMRI studies that have applied the subsequent memory procedure to judgments of source memory have used either a single source attribute (e.g. Cansino et al., 2002; Davachi et al., 2003), or multiple attributes that did not vary independently (Ranganath et al., 2003).

In the present study, we contrasted encoding-related activity elicited by study items according to whether later memory for source features was complete or partial, rather than ‘all or none’ as in prior experiments. We employed fMRI and a procedure similar to that used in previous studies of multidimensional source memory (Meiser and Broder, 2002; Starns and Hicks, 2005; see Figure 1). We investigated the neural activity elicited during the encoding of words whose source features varied independently on two intrinsic dimensions (color and location). We were therefore able to contrast the neural activity elicited by the study words according to whether their later recognition was accompanied by successful retrieval of both, one, or neither feature. This allowed us to test the prediction that multi-featural encoding is associated with a qualitatively different pattern of cortical activity (reflecting perceptual binding) than that associated with the encoding of only a single feature. We predicted that successful encoding of a single feature would be associated with subsequent memory effects in cortical regions that support the online processing of the feature, whereas successful encoding of both features would be associated with additional effects in regions that support feature integration at the perceptual level.

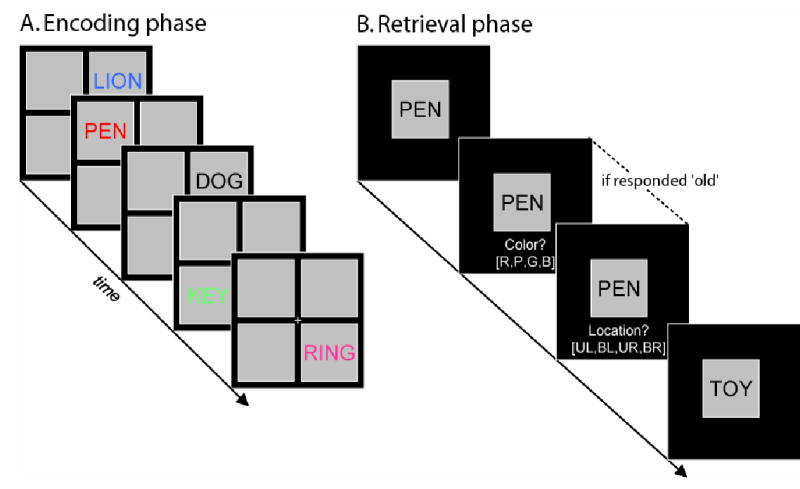

Figure 1.

Schematic representation of experimental design. (A) Encoding phase. During each scanned encoding phase, volunteers made animacy judgments (living or non-living?) to a series of words presented one at a time, each in one of four colors (red, green, blue and pink) and in one of the four quadrants of the screen. Color and location of the stimuli were varied orthogonally. To direct processing emphasis towards the color of the stimuli (see Experimental Procedures), an additional set of words (accounting for one fifth of the total) was pseudorandomly interspersed among the critical study items. These words were presented in black, and volunteers were instructed to perform size judgments on them (bigger or smaller than a shoebox?). Subsequent memory for these words was not tested. (B) Retrieval phase. Immediately following each encoding phase, volunteers made recognition memory judgments (old or new?) to studied and unstudied words. If a word was judged old, source judgments for color and location were required.
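The orthogonal design in Figure 1A can be made concrete with a short Python sketch. Several details are assumptions and do not come from the study: the hypothetical `build_study_list` helper, the number of repetitions per color × quadrant cell, the quadrant labels, and the random placement of the black fillers. The sketch also uses a plain shuffle in place of the constrained pseudorandom ordering. The intent is only to show what "orthogonal" means here: every color appears equally often in every quadrant. The sketch also shows that fillers numbering one quarter of the critical items make up one fifth of all trials.

```python
import itertools
import random
from collections import Counter

COLORS = ["red", "green", "blue", "pink"]
QUADRANTS = ["upper-left", "upper-right", "lower-left", "lower-right"]  # hypothetical labels


def build_study_list(reps_per_cell, seed=0):
    """Return a shuffled list of study trials (hypothetical sketch).

    Every color x quadrant cell receives the same number of animacy trials,
    so the two features vary orthogonally. Black size-judgment fillers are
    added so that they make up one fifth of all trials.
    """
    rng = random.Random(seed)
    critical = [
        {"task": "animacy", "color": color, "quadrant": quadrant}
        for color, quadrant in itertools.product(COLORS, QUADRANTS)
        for _ in range(reps_per_cell)
    ]
    # Fillers = 1/5 of the total  <=>  fillers = critical / 4.
    n_fillers = len(critical) // 4
    fillers = [
        # Assumption: filler screen positions are drawn at random.
        {"task": "size", "color": "black", "quadrant": rng.choice(QUADRANTS)}
        for _ in range(n_fillers)
    ]
    trials = critical + fillers
    # Plain shuffle; a real pseudorandom order would add run-length constraints.
    rng.shuffle(trials)
    return trials


trials = build_study_list(reps_per_cell=5)
cells = Counter((t["color"], t["quadrant"]) for t in trials if t["task"] == "animacy")
print(len(trials), len(cells), set(cells.values()))  # 100 16 {5}
```

Because each of the 16 color-by-quadrant cells is equally populated, the probability of guessing both features correctly at test is 1/4 × 1/4 = 1/16. This is the 6.25% chance level used below.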

Results

Behavioral performance

Study Task

Accuracy was high for both classes of study judgment (animacy: 95% correct, SD = 4%; size: 88% correct, SD = 9%). Study reaction times (ms) for the animacy judgments did not vary according to later memory performance (Both Correct: 1426 (SD = 282), Location Only: 1455 (SD = 267), Color Only: 1411 (SD = 237), Item Only: 1454 (SD = 264), Miss: 1438 (SD = 282); F(1,19) < 1).

Retrieval Task

The proportion of study items endorsed old regardless of source accuracy was 71%, with a new item false alarm rate of 7%. Source memory performance was expressed as a percentage of all correctly recognized study items [Both Correct: 24.9 (SD = 12.8), Location Only: 27.6 (SD = 7.7), Color Only: 18.1 (SD = 4.9), Item Only: 29.4 (SD = 10.3)]. A pairwise contrast revealed that location judgments were more accurate than color judgments (t(19) = 3.30, p < .005). In both cases, however, accuracy was significantly above the chance level of 25% (location: t(19) = 5.19, p < .0001; color: t(19) = 2.13, p < .05). The proportion of trials on which both sources attracted an accurate judgment was also significantly above chance (chance = 6.25%; t(19) = 5.03, p < .0001).
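The four outcome categories above are mutually exclusive and exhaustive. A correct location judgment is therefore the sum of Both Correct and Location Only, and a correct color judgment is the sum of Both Correct and Color Only. The Python sketch below illustrates only this bookkeeping, with the chance levels implied by four response options per feature. It applies the arithmetic to the group means, whereas the reported t-tests were computed on per-subject values. The helper name `feature_accuracies` is hypothetical.

```python
# Group-mean percentages of correctly recognized study items, from the text.
GROUP_MEANS = {"both": 24.9, "location_only": 27.6, "color_only": 18.1, "item_only": 29.4}

N_OPTIONS = 4                      # four colors and four quadrants
CHANCE_SINGLE = 1 / N_OPTIONS      # 0.25
CHANCE_BOTH = CHANCE_SINGLE ** 2   # 0.0625, assuming independent guesses


def feature_accuracies(p):
    """Proportion correct for each feature, given the four exclusive outcome bins."""
    total = sum(p.values())  # bins are exhaustive, so this is ~100
    location = (p["both"] + p["location_only"]) / total
    color = (p["both"] + p["color_only"]) / total
    both = p["both"] / total
    return location, color, both


location, color, both = feature_accuracies(GROUP_MEANS)
print(round(location, 3), round(color, 3), round(both, 3))  # 0.525 0.43 0.249
```

On these group means, location accuracy (about 52.5%) exceeds color accuracy (about 43%). Both values exceed the 25% chance level, and the conjoint rate (about 24.9%) is well above 6.25%. These figures are consistent with the reported per-subject statistics.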

To determine whether the probability of retrieving one source feature was affected by whether retrieval of the other feature was or was not successful, we conditionalized source accuracy for each feature according to the accuracy of the judgment for the other feature. Thus, for each subject, we calculated: i) the probability of a correct location judgment, given that color was also correct [pBoth Correct/(pColor Only + pBoth Correct)], and ii) the probability of a correct location judgment, given that color was incorrect [pLocation Only/(pItem Only + pLocation Only)]. The analogous probabilities were also calculated for correct color judgments. The across-subject means of the four resulting probabilities are listed in Table 1. A 2×2 ANOVA, with factors of feature (color vs. location) and accuracy of the judgment for the other feature (correct vs. incorrect), revealed that the probability of a correct feature judgment was greater when the other judgment was also correct than when it was incorrect (F(1,19) = 7.21, p < .015). Pairwise comparisons revealed that this effect was significant both for color (t(19) = 2.78, p < .015) and for location (t(19) = 2.59, p < .02). Thus, accuracy of the source judgments for the two features was not independent.
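The conditionalization above can be written out directly. The Python sketch below computes the four probabilities for each subject using the formulas given in the text, then averages across subjects. The function names are hypothetical. The example applies the formulas to the group means as if they came from a single subject. The ratio of means is not the same as the mean of per-subject ratios, so these numbers are illustrative and need not match the tabulated values.

```python
from statistics import mean


def conditional_accuracies(p):
    """Conditional source accuracy for one subject (formulas as in the text).

    p holds that subject's proportions for the four recognized-item bins.
    """
    return {
        "location | color correct": p["both"] / (p["color_only"] + p["both"]),
        "location | color incorrect": p["location_only"] / (p["item_only"] + p["location_only"]),
        "color | location correct": p["both"] / (p["location_only"] + p["both"]),
        "color | location incorrect": p["color_only"] / (p["item_only"] + p["color_only"]),
    }


def across_subject_means(subjects):
    """Compute the four probabilities per subject, then average across subjects."""
    per_subject = [conditional_accuracies(s) for s in subjects]
    return {key: mean(d[key] for d in per_subject) for key in per_subject[0]}


group = {"both": 24.9, "location_only": 27.6, "color_only": 18.1, "item_only": 29.4}
for key, value in across_subject_means([group]).items():
    print(f"{key}: {value:.2f}")
# location | color correct: 0.58
# location | color incorrect: 0.48
# color | location correct: 0.47
# color | location incorrect: 0.38
```

If the two judgments were stochastically independent, each "correct" and "incorrect" pair would be equal. The gap within each pair is the dependence that the 2×2 ANOVA tests.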

Table 2.

Regions showing subsequent memory effects uniquely for items for which one source feature (location only or color only) was later remembered

| Location (x,y,z) | Peak Z (# of voxels) | Region | Approximate Brodmann Area |
|---|---|---|---|
| Location-specific effects | | | |
| -45 21 -3 | 3.42 (6) | Inferior frontal gyrus | 47 |
| 3 -60 15 | 4.32 (141) | Posterior cingulate/Retrosplenial cortex | 23/31/30 |
| -33 -54 18 | 3.80 (21) | Superior temporal gyrus | 22 |
| -60 -45 15 | 3.72 (8) | Superior temporal gyrus | 22/13 |
| Color-specific effects | | | |
| -57 -36 -9 | 3.23 (6) | Posterior inferior temporal cortex | 21 |

fMRI Findings

The fMRI data were subjected to three principal analyses. First, we searched for regions where activity was uniquely associated with subsequent memory for one or the other of the source features (location or color; henceforth, ‘Location Only’ and ‘Color Only’ judgments). Next, we identified regions that were activated during encoding of items that attracted accurate judgments on the later memory test for both source features (henceforth ‘Both Correct’ judgments). Finally, to identify regions associated with encoding of item information, we searched for voxels where correctly recognized study items elicited greater activity than words that were later forgotten, regardless of the accuracy of the associated source memory judgment.

Feature-Specific Effects

We first searched for regions that exhibited subsequent memory effects associated specifically with correct location judgments. These regions were identified with a two-stage procedure. First, a contrast was performed to identify voxels where activity elicited by items attracting Location Only judgments was greater than activity elicited by recognized items for which both source judgments were inaccurate (‘Item Only’). To remove voxels that also exhibited a subsequent memory effect for color memory, this contrast was then exclusively masked with the corresponding contrast for Color Only judgments (Color Only > Item Only, thresholded at p < .05). As shown in Figure 2A and listed in Table 2, regions displaying subsequent memory effects specific to correct location judgments included the ventral aspect of the left inferior frontal gyrus (IFG), posterior cingulate/retrosplenial cortex, and superior temporal gyrus (STG). To assess the extent to which these location-specific effects were shared with items for which both source judgments were correct, the outcome of the procedure described above was inclusively masked with the Both Correct > Item Only contrast (thresholded at p < .001). No overlapping clusters were identified.
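The two-stage procedure, followed by the overlap check, amounts to Boolean operations on voxelwise statistical maps. The NumPy sketch below assumes three equally shaped arrays of one-tailed, uncorrected p values, one per contrast. It uses the p < .001 primary threshold shown in Figure 2 and ignores cluster-extent criteria. The function name and the toy four-voxel maps are hypothetical. Real analyses perform this masking inside a neuroimaging package rather than by hand.

```python
import numpy as np


def feature_specific_effects(p_target, p_other, p_both,
                             alpha_main=0.001, alpha_exclude=0.05, alpha_overlap=0.001):
    """Two-stage masking on voxelwise one-tailed p-value maps (hypothetical sketch).

    p_target: e.g. Location Only > Item Only
    p_other:  e.g. Color Only > Item Only (used as an exclusive mask)
    p_both:   Both Correct > Item Only (used to check for overlap)
    """
    target = p_target < alpha_main
    # Exclusive mask: drop any voxel where the other feature's effect reaches
    # even the liberal p < .05 threshold.
    specific = target & ~(p_other < alpha_exclude)
    # Inclusive mask: feature-specific voxels that also show a Both Correct effect.
    overlap = specific & (p_both < alpha_overlap)
    return specific, overlap


# Toy four-voxel maps (hypothetical values).
p_target = np.array([0.0005, 0.0005, 0.01, 0.0001])
p_other = np.array([0.2, 0.01, 0.5, 0.3])
p_both = np.array([0.5, 0.0001, 0.5, 0.0005])
specific, overlap = feature_specific_effects(p_target, p_other, p_both)
print(specific.tolist(), overlap.tolist())  # [True, False, False, True] [False, False, False, True]
```

The lenient exclusive threshold (.05) makes the exclusion step conservative. A voxel counts as location-specific only if the color contrast fails to reach even a weak level of significance there.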

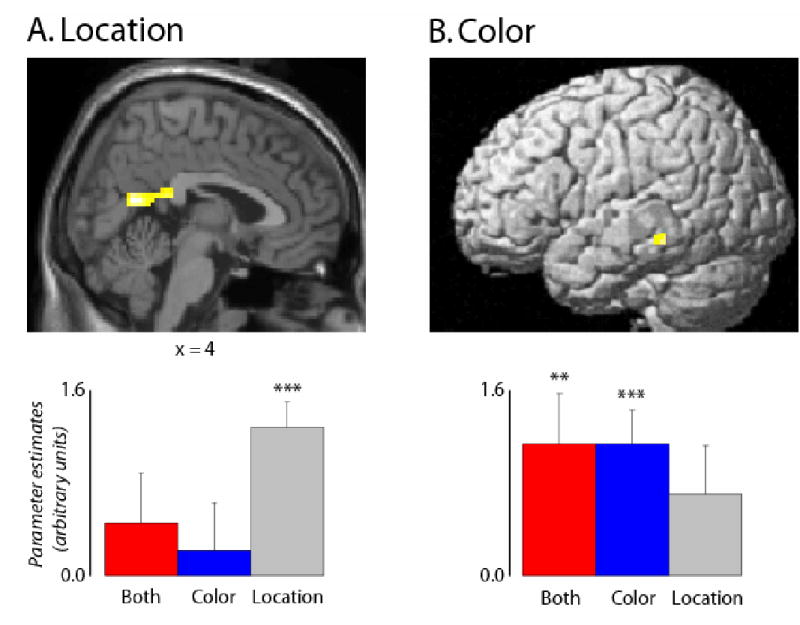

Figure 2.

Feature-specific subsequent memory effects. (A) Location-specific subsequent memory effects. Anatomical overlay (top) and mean parameter estimates (and standard errors; bottom) of the cluster in retrosplenial/posterior cingulate cortex exhibiting significant (p < .001) subsequent memory effects associated with items for which location (but not color, p > .05) was later retrieved. (B) Color-specific subsequent memory effects. Surface rendering (top) and mean parameter estimates (and standard errors; bottom) of the cluster in posterior inferior temporal cortex exhibiting significant (p < .001) subsequent memory effects associated with items for which color (but not location) was later retrieved. Effects are displayed at p < .001 on a standardized brain. Both = subsequent memory effects for Both Correct; Color = subsequent memory effects for Color Only; Location = subsequent memory effects for Location Only. ** p < .01; *** p < .001

An analogous procedure to that described above was employed to identify subsequent memory effects uniquely associated with retrieval of color information. Figure 2B (see also Table 2) illustrates the one region, in posterior inferior temporal cortex, that exhibited a color-specific effect. As with location-specific effects, there was no overlap between the color effect and the effects associated with Both Correct items.

Multifeatural Effects

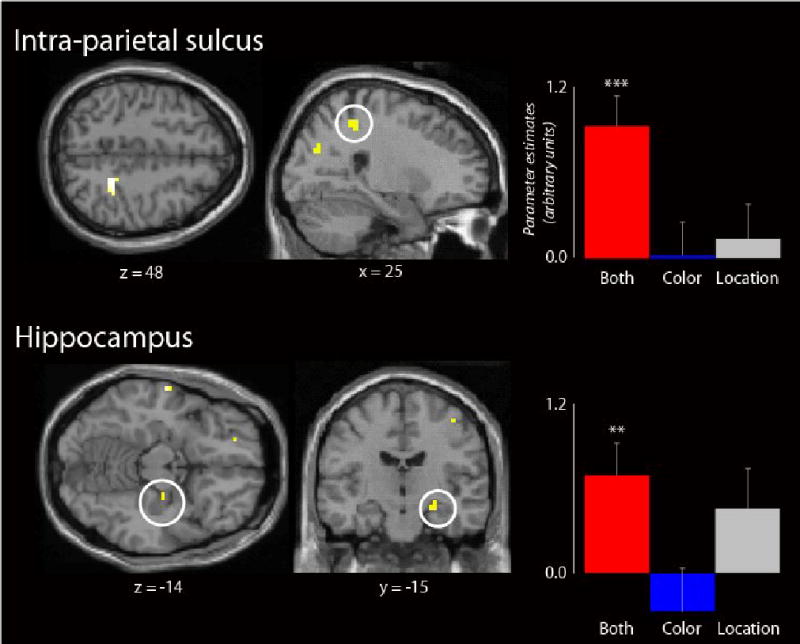

Table 3 details the outcome of the contrast between Both Correct and Item Only trials. The contrast revealed subsequent memory effects in left dorsal and ventral inferior frontal gyrus, along with superior parietal cortex (intra-parietal sulcus). Contrary to the pre-experimental prediction, no effects were evident in the hippocampal formation at a p < .001 threshold. However, a small cluster localized to the right hippocampus (peak at 27, -15, -15; Z = 2.73, 3 voxels) was revealed at a lowered threshold of p < .005. To address the question of whether qualitatively different effects emerge when both features are encoded successfully, relative to when one or the other is encoded in isolation, the foregoing contrast was exclusively masked with the two contrasts that together identified regions showing a subsequent memory effect for color or location [(Location Only > Item Only) OR (Color Only > Item Only); each thresholded at p < .05]. As illustrated in Figure 3 and documented by the asterisked entries in Table 3, among the regions that survived the masking procedure were left dorsal IFG and right intra-parietal sulcus. The right hippocampal effect also survived the masking procedure when the primary contrast was thresholded at p < .005.
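The masking here differs from the feature-specific analysis. The exclusive mask is the union (logical OR) of the two single-feature contrasts, so a voxel survives only if neither single-feature contrast reaches p < .05. The NumPy sketch below makes the same assumptions as a voxelwise analysis would: one-tailed, uncorrected p-value maps and no cluster-extent criterion. The function name and toy values are hypothetical. Lowering `alpha_main` to .005 mirrors the reduced threshold at which the hippocampal cluster appeared.

```python
import numpy as np


def multifeatural_unique(p_both, p_location, p_color, alpha_main=0.001, alpha_exclude=0.05):
    """Voxels with a Both Correct > Item Only effect but no single-feature effect."""
    # Union of the two single-feature contrasts at the liberal threshold.
    either_single = (p_location < alpha_exclude) | (p_color < alpha_exclude)
    return (p_both < alpha_main) & ~either_single


# Toy four-voxel maps (hypothetical values).
p_both = np.array([0.0002, 0.0002, 0.003, 0.0002])
p_location = np.array([0.4, 0.01, 0.6, 0.3])
p_color = np.array([0.5, 0.5, 0.7, 0.02])
print(multifeatural_unique(p_both, p_location, p_color).tolist())
# [True, False, False, False]
print(multifeatural_unique(p_both, p_location, p_color, alpha_main=0.005).tolist())
# [True, False, True, False]
```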

Figure 3.

Multifeatural subsequent memory effects. Anatomical overlays and mean parameter estimates (and standard errors) illustrate clusters in right intra-parietal sulcus (top) and hippocampus (bottom) that exhibit subsequent memory effects associated with items for which both source features (but neither feature alone, p > .05) were later remembered. Intra-parietal and hippocampal effects are displayed at p < .001 and p < .005, respectively, on a standardized brain. ** p < .005; *** p < .001

Table 3.

Regions showing subsequent memory effects associated with items for which both source features were later remembered (asterisks denote regions associated uniquely with contrast of Both Correct > Item Only; see text)

| Location (x,y,z) | Peak Z (# of voxels) | Region | Approximate Brodmann Area |
|---|---|---|---|
| -45 24 -9 | 3.92 (16) | Ventral inferior frontal gyrus | 47/45 |
| -48 27 9 | 3.74 (7) | Middle inferior frontal gyrus | 45 |
| -48 18 27 | 3.36 (6) | Dorsal inferior frontal gyrus* | 44 |
| -48 -9 24 | 3.32 (5) | Precentral gyrus | 6 |
| 21 -42 48 | 3.70 (20) | Intraparietal sulcus* | 7 |
| 24 -72 27 | 3.45 (9) | Precuneus* | 31 |

Feature-Insensitive Effects

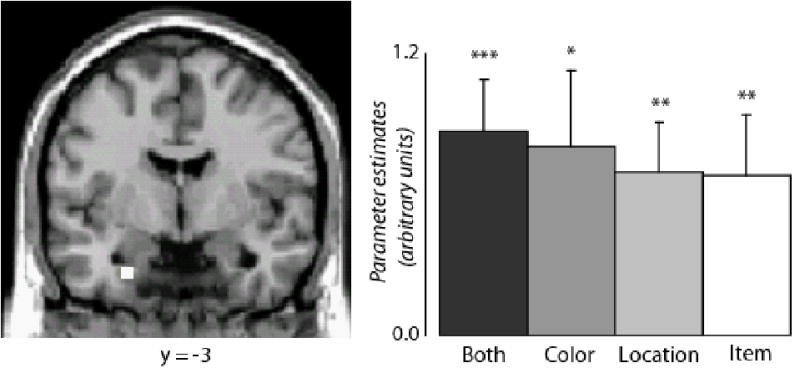

Subsequent memory effects associated with item recognition alone were identified by contrasting the activity elicited by all words that were later recognized, irrespective of source accuracy, with the activity elicited by words that were later forgotten (All Hits > Misses). This contrast identified effects in several regions, including a region in the vicinity of left perirhinal cortex (see Figure 4 and Table 4). For every region, ANOVA of the parameter estimates associated with the peak voxel failed to reveal significant differences between the different classes of recognized word (p > .05). Pairwise contrasts of the peak parameter estimates within the perirhinal region (see Figure 4) revealed significant subsequent memory effects for each class of recognized item relative to misses (p < .001, p < .005, p < .02, and p < .01, for Both Correct, Location Only, Color Only and Item Only, respectively).
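In a general linear model, contrasts such as All Hits > Misses and Both Correct > Item Only are expressed as weight vectors over the condition regressors. The NumPy sketch below assumes a hypothetical regressor order for the five study-trial classes. It also assumes that the four hit classes are weighted equally, although the study may instead have pooled hit trials into a single regressor. The helper name and the beta values are illustrative only. Each vector sums to zero, so the contrast tests a difference between conditions rather than overall activation.

```python
import numpy as np

# Hypothetical regressor order for the five study-trial classes.
CONDITIONS = ["both", "location_only", "color_only", "item_only", "miss"]


def balanced_contrast(positive, negative):
    """Contrast vector with +1 shared across `positive` and -1 across `negative`."""
    weights = np.zeros(len(CONDITIONS))
    for name in positive:
        weights[CONDITIONS.index(name)] = 1 / len(positive)
    for name in negative:
        weights[CONDITIONS.index(name)] = -1 / len(negative)
    return weights


all_hits_vs_misses = balanced_contrast(["both", "location_only", "color_only", "item_only"], ["miss"])
both_vs_item_only = balanced_contrast(["both"], ["item_only"])

# Applying a contrast to one voxel's parameter estimates (illustrative values).
betas = np.array([1.0, 0.8, 0.9, 0.7, 0.2])
effect = all_hits_vs_misses @ betas
```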

Figure 4.

Feature-insensitive subsequent memory effects. Left anterior medial temporal subsequent memory effects invariant with respect to number of source features later retrieved (illustrated at p < .001). Mean parameter estimates (and standard errors) are also shown. Both = subsequent memory effects for Both Correct; Color = subsequent memory effects for Color Only; Location = subsequent memory effects for Location Only; Item = subsequent memory effects for Item Only. * p < .05; ** p < .01; *** p < .001

Table 4.

Regions showing greater activity for items later remembered regardless of source accuracy relative to items later forgotten

| Location (x,y,z) | Peak Z (# of voxels) | Region | Approximate Brodmann Area |
|---|---|---|---|
| -48 6 33 | 3.64 (11) | Dorsal inferior frontal gyrus | 9 |
| -24 -3 -30 | 3.72 (12) | Perirhinal cortex | 35 |
| 24 -93 30 | 4.27 (16) | Posterior occipital lobe | 19 |
| -27 -96 15 | 3.70 (10) | Posterior occipital lobe | 19 |

Discussion

The aim of the present study was to shed light on the neural processing that supports the incorporation of disparate source features of a stimulus event into a common episodic memory representation. There were two principal findings. First, as is discussed in more detail below, cortical regions engaged during the online processing of location and color appear also to be involved in the formation of memories for these features. Second, consistent with the proposal outlined in the Introduction, study items for which location and color were successfully encoded elicited enhanced activity in a cortical region, the intraparietal sulcus, that has been strongly implicated in cross-featural integration (perceptual binding).

Previous studies of multidimensional source memory (Meiser and Broder, 2002; Starns and Hicks, 2005) investigated the relationship between accuracy of source retrieval and subjective reports of whether item recognition was associated with episodic recollection or merely a sense of familiarity (as indexed by the ‘Remember/Know’ procedure; Tulving, 1985). Both studies reported that when study items were endorsed as Remembered, rather than Known, subsequent source judgments for the two contextual features were stochastically dependent. This prompted Meiser and Broder to propose that recollection is uniquely associated with retrieval of ‘configural’ information about a study episode. The present finding of dependence in the accuracy of color and location judgments parallels these prior results. However, these behavioral findings do not speak to the question whether integration of disparate source features of a recollected episode depends on how the episode is encoded rather than, say, how it is retrieved. The present fMRI findings strongly suggest that the binding of different aspects of source information in memory depends, at least partly, on processes engaged during encoding.

As is discussed below, the present findings suggest that the encoding operations supporting memory for single versus multiple source features of a study item differ qualitatively. A potential objection to this conclusion arises from the possibility that accurate source judgments were more likely to reflect a ‘lucky guess’ when one rather than both features was successfully retrieved. To the extent that this was the case, subsequent memory effects associated with items for which only one feature was later retrieved would necessarily be weaker than effects elicited by items for which both features were retrieved. Thus, the differential effects associated with recovery of one versus both features might merely reflect differences in the proportion of study items that were encoded sufficiently well to support later veridical retrieval of at least one source feature. This explanation seems unable to account for the present findings, however. First, items for which only color or location information could be later retrieved were associated with robust, qualitatively distinct subsequent memory effects. The presence of these effects is inconsistent with the possibility that source memory was supported by a generic encoding process that merely varied in its efficacy across study trials. Rather, distinct processes appear to be associated with successful encoding of location and color respectively. Second, subsequent memory effects in regions associated with memory for color or location were not enhanced when the two features were retrieved conjointly, as would be expected if recovery of both features were associated with a stronger memory for each feature alone. This makes it highly unlikely that the additional subsequent memory effects associated with retrieval of both source features emerged as a result of a general strengthening of memory in this condition relative to retrieval of a single feature. Instead, the difference in the pattern of effects associated with the two retrieval conditions is strongly suggestive of a shift between two different classes of encoding process.
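The ‘lucky guess’ objection can be made concrete with a deliberately simplified model, which is an assumption for illustration and not the authors' analysis. Suppose that, for a recognized item with no stored source information, color and location are each guessed independently and uniformly from four options. The Python sketch below (hypothetical function name) gives the resulting distribution over the four outcome bins.

```python
def pure_guess_outcomes(n_options=4):
    """Source outcome probabilities for a recognized item with no stored source
    information, assuming independent, uniform guesses on each feature
    (a simplified, hypothetical model)."""
    g = 1 / n_options  # chance of guessing one feature correctly
    return {
        "both": g * g,
        "location_only": g * (1 - g),
        "color_only": (1 - g) * g,
        "item_only": (1 - g) * (1 - g),
    }


print(pure_guess_outcomes())
# {'both': 0.0625, 'location_only': 0.1875, 'color_only': 0.1875, 'item_only': 0.5625}
```

Under this model, a guess-only trial is three times as likely to land in each single-feature bin as in the Both Correct bin. This is why the single-feature bins could, in principle, be more diluted by guesses. The model ignores partial-memory trials, in which one feature is stored and the other is guessed; these can also add lucky guesses to the Both Correct bin.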

As already noted, the present data address two questions concerning the neural determinants of successful memory encoding. First, the data are relevant to the question whether, as proposed previously (see Introduction), successful encoding of a specific feature of a study event is associated with engagement of cortical regions that also support online processing of the feature. The subsequent memory effects selectively associated with retrieval of location or color information speak to this issue. Several regions demonstrated effects that were selective for location. These included retrosplenial and posterior cingulate cortex, as well as left ventral inferior frontal gyrus. A retrosplenial region overlapping the one identified here has been implicated in processing of spatial information and object location in several prior studies (e.g. Committeri et al., 2004; Frings et al., in press; Mayes et al., 2004; Wolbers and Buchel, 2005). This parallel suggests that, in the present case, location memory was facilitated when location processing was emphasized at study. The locus of the sole color subsequent memory effect suggests that a similar conclusion holds for this feature as well. The effect was localized to a small area of posterior inferior temporal cortex (-57, -36, -12) that corresponds remarkably well to a region that has been previously associated with the processing of color knowledge [(-52, -36, -12) in Chao and Martin, 1999, and (-62, -42, -16) in Kellenbach et al., 2001]. Thus, as with location, it would appear that memory for color was facilitated when processing involving this feature received emphasis. It is notable that the color subsequent memory effect was localized not to a region implicated in low-level color processing, but to an area engaged when color information is processed at a ‘semantic’, object-based level. This may reflect the demands of the study task, in which word color dictated the nature of the semantic judgment that was required. Thus, color was both explicitly task-relevant and associated with recovery of a specific class of semantic information.

The more interesting question addressed by the present experiment concerns encoding processes that support the later retrieval of multiple source features. According to the proposal outlined in the Introduction, successful retrieval of both location and color, rather than one or the other alone, is facilitated when the two features are encoded conjointly in a common memory representation. It was further argued that for such encoding to occur, the features must have been bound together at the perceptual level, a process dependent on cortical regions additional to those that support feature-specific processing. Consistent with these proposals, items for which color and location information were both later retrieved elicited subsequent memory effects in regions additional to those engaged when only one feature was retrieved. As noted already, one of these regions, right intra-parietal sulcus, has been implicated previously in attentionally-mediated perceptual binding (see Introduction).

Thus, the present findings indicate that the mechanisms underlying encoding of single and multiple source features qualitatively differ. The findings suggest that encoding of a single feature is facilitated when it attracts, or is allocated, a relatively high level of attentional resources. So long as attention is directed primarily to the featural level, however, facilitation of the encoding of any one feature will fail to benefit concomitant encoding of other features (indeed, to the extent that the different features compete for attention, the impact may be negative). For multiple features to be encoded successfully, attentional resources must be allocated to the ‘object level’, allowing the different features to be conjoined into a common perceptual representation (Treisman and Gelade, 1980). It is allocation of attention to this level, and the consequent emphasis on configural processing, that leads to the encoding of an integrated representation of the study item.

In addition to the intraparietal sulcus, items for which color and location were both later retrieved were also uniquely associated with subsequent memory effects in left dorsal inferior frontal gyrus (~BA 44). This region has been consistently linked to phonological and, to a lesser extent, semantic processing of visual words (e.g. Burton et al., 2005; Demonet et al., 1992; Gold and Buckner, 2002; Poldrack et al., 1999), and has demonstrated subsequent memory effects in numerous prior studies of visual word encoding (e.g. Baker et al., 2001; Brewer et al., 1998; Ranganath et al., 2003; for review see Blumenfeld and Ranganath, 2006). The present findings suggest that the encoding operations that supported the conjoint encoding of color and location also led to the incorporation of additional, more abstract, information into the encoded memory representation. This can be understood in light of evidence that the incidental encoding of surface features of a stimulus event, such as its sensory modality or location, is facilitated by study conditions that promote relatively ‘deep’ processing of the event, at least in young adults (Hayman and Rickards, 1995; Kohler et al., 2001; Naveh-Benjamin, 1987). According to Kohler et al. (2001), such processing necessitates allocation of attention at the object level, and hence promotes the binding of the separate features of the study item into a common perceptual (and, ultimately, mnemonic) representation. This account reinforces the conclusion arrived at above that successful conjoint encoding of color and location occurred not on study trials where the individual features were the focus of attention, but on trials where the study episode as a whole was strongly attended.

In addition to the cortical subsequent memory effects discussed above, study trials for which both location and color information were later retrieved also elicited effects in the hippocampus, albeit only at a reduced statistical threshold. This finding is consistent with prior fMRI evidence for a hippocampal role in the encoding of item-context associations between the different elements of a study episode (Davachi and Wagner, 2002; Ranganath et al., 2003; Kensinger and Schacter, 2006; but see Sommer et al., 2005, 2006; Cansino et al., 2002; Reynolds et al., 2004, for failures to observe hippocampal effects and Gold et al., 2006, for a failure to find differential hippocampal subsequent memory effects according to accuracy of later source judgments) and, more generally, with the notion that the hippocampus plays an important role in memory binding (for reviews see Eichenbaum, 2004; O’Reilly and Rudy, 2001). In contrast to study items for which both features were later remembered, hippocampal subsequent memory effects were not evident when only one feature was later remembered. This null finding should be treated with caution, however, as the hippocampal effect associated with later memory for both features was itself relatively weak. To the extent that the magnitude of hippocampal subsequent memory effects scales with the amount of information encoded, effects elicited by items for which only one feature could be later retrieved may simply have been too small to be detectable.

As was noted in the Introduction, prior fMRI studies of contextual (source) encoding have manipulated a diverse range of variables in order to vary study context on a trial-by-trial basis. As in some of these prior studies, and a number of relevant behavioral studies, the contextual features manipulated here were intrinsic properties of the study items. It seems unlikely that the proposed mechanism for the binding into memory of intrinsic contextual features (attentional binding of the features at the object level) would also serve to bind an item to extrinsic features. Likewise, it seems unlikely that successful associative encoding of multiple items, such as face-name pairs, requires that the items be attentionally conjoined in a common perceptual representation. In both of these cases, memory would seem more likely to depend upon the encoding of associations between components of a study episode that, at the object level, are attended and processed independently. It remains to be determined, however, whether, as appears to be the case for the contextual features manipulated here, the encoding of associations more generally depends upon cortical activity additional to that subserving the encoding of their constituent elements.

In addition to the hippocampal subsequent memory effects discussed above, a further effect was also localized to the medial temporal lobe. Regardless of the number of source features that could be retrieved, later recognized items elicited greater activity in the vicinity of left perirhinal cortex. This finding suggests that the encoding operations supported by this region play a role in the encoding of item information per se, rather than associations between an item and context information. The finding is consistent with previous evidence that subsequent memory effects in anterior medial temporal cortex reflect encoding of information that supports later recognition regardless of whether this is accompanied by recollection of episodic details (Davachi et al., 2003; Ranganath et al., 2003; Uncapher and Rugg, 2005; Gold et al., 2006).

In conclusion, the present data add weight to prior proposals that cortical regions supporting the online processing of a specific feature of a study item contribute to the instantiation of an enduring memory representation for the feature. The data further suggest that memory for multiple features does not depend on the concurrent engagement of independent, feature-specific, encoding operations, but rather on the encoding of a representation of the study event in which the disparate features have been attentionally conjoined.

Experimental Procedures

Subjects

Twenty-three volunteers [nine female; age range: 18 – 26 years, mean = 21.3 (SD = 2.4)] consented to participate in the study. All volunteers reported themselves to be in good general health, right-handed, with no history of neurological disease or other contra-indications for MR imaging, and to have learned English as their first language. Volunteers self-reported no history of color-blindness, and were tested for color discrimination prior to participating in the experiment proper. Volunteers were recruited from the University of California at Irvine (UCI) community and remunerated for their participation, in accordance with the human subjects procedures approved by the Institutional Review Board of UCI. Two volunteers’ data were excluded because of inadequate memory performance (>2 SDs below the sample mean for recognition accuracy), and one volunteer’s data were excluded for contributing fewer than 10 trials per condition in all conditions (see Data analysis below).

Stimulus Materials

Critical stimuli were drawn from a pool of 286 concrete words (four to nine letters long; mean written frequency between 1 and 30 counts per million according to Kucera and Francis, 1967). This pool was used to create seven lists of 40 items each, with half the words in each list denoting animate objects and the other half inanimate objects. Within each of the animate and inanimate subsets, half of the words denoted objects smaller than a shoebox and the other half denoted objects larger than a shoebox. Two study lists of 100 critical items each were created from five of the lists, with two extra items serving as buffers. The two remaining lists were used to create a pool of 40 new items for each of the two source memory tests (see Procedure). Word lists were rotated between study and test conditions across subjects. A separate pool of 75 words was used to create three sets of practice study and test lists (with 50 items used as practice study items and the remaining 25 as practice new items).
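The text does not specify how the word lists were rotated across subjects. One simple possibility, assumed here purely for illustration, is a cyclic rotation in which each successive subject shifts the seven lists by one position, so that the first five serve as study lists and the last two supply the new items. The list labels and the function name below are hypothetical.

```python
from collections import deque

# Hypothetical labels for the seven 40-word lists.
LIST_IDS = ["A", "B", "C", "D", "E", "F", "G"]

def rotate_lists(list_ids, subject_index, n_study=5):
    """Assign lists to study and new-item roles by cyclic rotation.

    This rotation scheme is an illustrative assumption, not the authors'
    documented procedure. Returns (study_lists, new_lists).
    """
    order = deque(list_ids)
    order.rotate(-(subject_index % len(list_ids)))  # shift left by subject_index
    order = list(order)
    return order[:n_study], order[n_study:]

study, new = rotate_lists(LIST_IDS, subject_index=2)
print(study, new)  # ['C', 'D', 'E', 'F', 'G'] ['A', 'B']
```

Over any block of seven consecutive subjects, this scheme uses every list as a source of new items exactly twice, which is the property a rotation is meant to deliver.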

Procedure

Volunteers performed two encoding-retrieval cycles in the scanner, with MR images acquired during the encoding phases only. Instructions were given, and three practice encoding-retrieval cycles performed, outside the scanner. During each encoding phase, volunteers viewed a black screen with a white central fixation cross and four grey boxes (each subtending 9° vertical and horizontal visual angles), one centered in each quadrant of the screen and positioned 1° of visual angle vertically and horizontally from the central fixation cross. Every 3 s (excluding null events, see below), a study word appeared in the center of one of the four boxes for 1 s. Words were presented with equal probability in one of four colors (red, green, blue, or pink) or in black; that is, in each encoding phase, 20 words were presented in each of red, green, blue, pink, and black letters. Additionally, each color (including black) appeared in each quadrant with equal probability. In the case of words presented in one of the four colors, volunteers were instructed to decide whether each word denoted an object that was living or non-living, and to indicate their decision with the index (animate) or middle (inanimate) finger of one hand. These words comprised the critical study items for which memory would subsequently be tested. When a black word appeared, volunteers were required to decide whether the object it denoted was smaller or larger than a shoebox, indicating their decision with the index (smaller than a shoebox) or middle (larger than a shoebox) finger of the opposite hand. This size judgment task was included because pilot studies revealed that memory for color was very poor when volunteers simply performed the animacy task on study items. Including a task that explicitly directed attention towards the color of the study items raised memory performance for this feature to the point where memory for the two features was approximately equivalent.
The hand used to indicate animacy decisions was counterbalanced across volunteers. Speed and accuracy of responding were given equal emphasis, as was performance on the animacy and size tasks. All words were presented in upper case letters. In both the encoding and retrieval phases, words were presented via VisuaStim (Resonance Technology, Inc., Northridge, CA, USA) XGA MRI-compatible head-mounted display goggles with a field of view of 30° visual angle and a resolution of 640×480 pixels. The words subtended maximum horizontal and vertical visual angles of 8° and 1.5°, respectively.
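The requirement that each color (including black) appear in each quadrant equally often can be met by fully crossing the five colors with the four quadrants and shuffling. With 100 study words this yields 5 words per color-quadrant cell, 20 per color, and 25 per quadrant. The sketch below assumes that construction; the function name and quadrant labels are illustrative, and it does not model which words were drawn for the black (size task) trials.

```python
import itertools
import random

COLORS = ["red", "green", "blue", "pink", "black"]
QUADRANTS = ["upper left", "upper right", "bottom left", "bottom right"]

def balanced_assignment(words, seed=None):
    """Pair each word with a (color, quadrant) cell so every cell is used equally often."""
    n_cells = len(COLORS) * len(QUADRANTS)  # 5 x 4 = 20 cells
    if len(words) % n_cells:
        raise ValueError(f"need a multiple of {n_cells} words, got {len(words)}")
    # Repeat the full crossing enough times to cover every word, then shuffle.
    cells = list(itertools.product(COLORS, QUADRANTS)) * (len(words) // n_cells)
    random.Random(seed).shuffle(cells)  # random pairing, balanced counts
    return [(word, color, quad) for word, (color, quad) in zip(words, cells)]

trials = balanced_assignment([f"word{i}" for i in range(100)], seed=1)
print(trials[:3])
```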

In each encoding phase, 80 colored words and 20 black words were presented during a single scanning session (lasting approximately 7 min). The stimulus onset asynchrony (SOA) between study items was stochastically distributed: the minimum SOA was 3 s, and 50 null trials (one-third of all trials) were randomly intermixed with the study trials (Josephs and Henson, 1999). The central fixation cross and the grey boxes in each quadrant remained on screen throughout the inter-item interval. Study items were presented in pseudo-random order, with no more than three trials of one item-type occurring consecutively.
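The trial structure described above (100 study items plus 50 null trials on a 3 s grid, with no more than three consecutive trials of one type) can be generated as sketched below. Two assumptions are made for illustration: null trials are treated as an item-type subject to the same run-length limit, and sequences are built by weighted sampling with restart on a dead end. Neither is stated in the text, and the function names are hypothetical.

```python
import random

def build_sequence(counts, max_run=3, seed=None, max_attempts=10_000):
    """Randomly order trial types so no type occurs more than max_run times in a row.

    Each step samples a type in proportion to how many trials of it remain;
    if the run-length limit leaves no legal choice, the attempt restarts.
    """
    rng = random.Random(seed)
    total = sum(counts.values())
    for _ in range(max_attempts):
        remaining = dict(counts)
        seq = []
        while len(seq) < total:
            tail = seq[-max_run:]
            # The type that just completed a run of max_run may not be used next.
            blocked = tail[0] if len(tail) == max_run and len(set(tail)) == 1 else None
            allowed = [t for t, n in remaining.items() if n > 0 and t != blocked]
            if not allowed:
                break  # dead end; start a new attempt
            pick = rng.choices(allowed, weights=[remaining[t] for t in allowed])[0]
            seq.append(pick)
            remaining[pick] -= 1
        if len(seq) == total:
            return seq
    raise RuntimeError("no sequence satisfied the run-length constraint")

def max_run_length(seq):
    """Length of the longest run of identical consecutive entries."""
    longest = current = 0
    prev = object()
    for item in seq:
        current = current + 1 if item == prev else 1
        longest = max(longest, current)
        prev = item
    return longest

def item_onsets(seq, slot=3.0, null="null"):
    """Onset (s) of every non-null trial; null slots lengthen the gap between items."""
    return [i * slot for i, t in enumerate(seq) if t != null]

seq = build_sequence({"colored": 80, "black": 20, "null": 50}, seed=0)
onsets = item_onsets(seq)
gaps = [b - a for a, b in zip(onsets, onsets[1:])]
print(len(seq), len(onsets), min(gaps))  # 150 100 3.0
```

Because null trials simply occupy an empty 3 s slot, every SOA between successive study items is an integer multiple of 3 s, which is what makes the distribution "stochastic" while keeping a 3 s minimum.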

Prior to the first practice encoding phase, volunteers were informed that a memory test would follow, and that memory would be tested only for words appearing in color (and not for those appearing in black). They were additionally informed that memory would be tested for the location and the color in which each word was studied, and were encouraged to use an encoding strategy that would help them remember both source features together with the study word.

A non-scanned source memory test was administered immediately upon completion of each encoding phase. In each case, all 80 colored study words were presented one at a time, interspersed among 40 unstudied (new) words. Volunteers were instructed to judge whether each word was old or new, and to indicate their decision with the index (old) or middle (new) finger of their right hand. If volunteers were uncertain of whether an item was old or new, they were instructed to indicate ‘new’ in an effort to ensure that subsequent source memory judgments (see below) would be confined to confidently recognized items. Volunteers were required to indicate their old/new decision within 3 s of the onset of the test word. Each test word was presented for 300ms; however, if a word was indicated to be old, the word reappeared on the screen and volunteers were visually prompted in two stages to make a decision about the color and the location in which the word was studied. This was accomplished by presenting below the test word the question ‘Color?’ followed by the question ‘Location?’, below which was presented the relevant response mapping. Volunteers indicated their source judgment with the index (red/upper left), middle (pink/bottom left), ring (green/upper right), or little (blue/bottom right) fingers of their right hand. Both source memory judgments were self-paced and were always in the same order (color and then location judgments). Volunteers were instructed to make their best guess if they were uncertain of the color or the location in which the word was studied.

The source memory tests were each presented in two consecutive blocks, separated by a short self-paced rest period. Old and new items were presented pseudo-randomly, with no more than three trials of one item-type occurring consecutively. One additional new buffer item was added to the beginning of each test block. A grey box (subtending 9° vertical and horizontal visual angles) was presented continuously in the center of the screen throughout the test phase. Each word was presented centrally in the grey box in white uppercase letters, with all other display parameters the same as at study. A white fixation cross was presented in the center of the grey box during the intertrial interval.

fMRI Scanning

A Philips Eclipse 1.5T MR scanner (Philips Medical Systems, Andover, MA, USA) was used to acquire both T1-weighted anatomical volume images (256 × 256 matrix, 1 mm³ voxels, SPGR sequence) and T2*-weighted echoplanar images (EPIs) [64 × 92 matrix, 2.6 × 3.9 mm pixels, echo time (TE) of 40 ms] with blood-oxygenation level dependent (BOLD) contrast. Each EPI volume comprised 27 axial slices, each 3 mm thick and separated by 1.5 mm, positioned to give full coverage of the cerebrum and most of the cerebellum. Data were acquired in two sessions of 191 volumes each, with a repetition time (TR) of 2.5 s/volume. Volumes within sessions were acquired continuously in a descending sequential order. The first four volumes were discarded to allow tissue magnetization to achieve a steady state. Because the 3.0 s SOA was not a multiple of the 2.5 s TR, successive trial onsets fell at five different points in the acquisition cycle, spaced 0.5 s apart, giving an effective sampling rate of the hemodynamic response of 2 Hz.
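The 2 Hz figure follows from where trial onsets fall relative to volume acquisition. The sketch below enumerates the distinct onset phases within one TR for a given SOA and TR, using exact fractions to avoid floating-point drift; the function names are illustrative. Null trials lengthen individual SOAs to multiples of 3 s but do not add new phases, so they do not change the result.

```python
from fractions import Fraction

def onset_phases(soa, tr, n_trials=100):
    """Distinct times (s) within a TR at which trial onsets fall."""
    soa, tr = Fraction(soa), Fraction(tr)  # exact arithmetic avoids float drift
    return sorted({(k * soa) % tr for k in range(n_trials)})

def effective_sampling_hz(soa, tr, n_trials=100):
    """Rate at which the hemodynamic response is sampled across trials."""
    phases = onset_phases(soa, tr, n_trials)
    if len(phases) < 2:
        return float(1 / Fraction(tr))  # onsets locked to the TR: no gain
    step = phases[1] - phases[0]  # phases form an evenly spaced grid
    return float(1 / step)

print([float(p) for p in onset_phases("3.0", "2.5")])  # [0.0, 0.5, 1.0, 1.5, 2.0]
print(effective_sampling_hz("3.0", "2.5"))             # 2.0
```

For comparison, an SOA equal to the TR would give a single phase and an effective rate of only 1/TR (0.4 Hz here).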

fMRI Data Analysis

Data preprocessing and statistical analyses were performed with Statistical Parametric Mapping (SPM2, Wellcome Department of Cognitive Neurology, London, UK: http://www.fil.ion.ucl.ac.uk/spm/; Friston et al., 1995) implemented in MATLAB 6 (The Mathworks, Inc., USA). All volumes were corrected for differences in acquisition time between slices (temporally realigned to the acquisition of the middle slice) and were realigned spatially to the first volume of the first timeseries. Inspection of the movement parameters generated during spatial realignment indicated that no volunteer moved more than 3 mm in any direction during either session. The resulting images were spatially normalized to a standard EPI template based on the Montreal Neurological Institute (MNI) reference brain (Cocosco et al., 1997) using non-linear basis functions (Ashburner and Friston, 1999), and resampled into 3 mm³ voxels. Image volumes were concatenated across sessions (comparisons of concatenated and non-concatenated designs revealed qualitatively similar patterns of activation, with more stable parameter estimates for the concatenated data, likely because concatenation increased the number of trials per condition). Normalized images were smoothed with an isotropic 8 mm full width at half maximum (FWHM) Gaussian kernel. Each volunteer’s T1 anatomical volume was coregistered to their mean EPI volume and normalized to a standard T1 template of the MNI brain.

Statistical analyses were performed in two stages of a mixed effects model. In the first stage, neural activity was modeled by a delta function (impulse event) at stimulus onset. These functions were then convolved with a canonical hemodynamic response function (HRF) and its temporal and dispersion derivatives (Friston et al., 1998) to yield regressors in a General Linear Model that modeled the BOLD response to each event-type. The two derivatives model variance in latency and duration, respectively. Analyses of the parameter estimates pertaining to these derivatives added no theoretically meaningful information to that contributed by the HRF, and are not reported (results are available from the corresponding author upon request).
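The first-level regressors described above are built by placing a delta function at each stimulus onset and convolving with a canonical HRF. The sketch below shows that step for the HRF alone, using a double-gamma function with parameter values commonly cited as SPM defaults (peak gamma shape 6, undershoot gamma shape 16, peak-to-undershoot ratio 6), which gives a response peaking about 5 s after onset. These values, the 0.1 s time grid, and the function names are assumptions for illustration; SPM's own `spm_hrf`, its microtime resolution, and its slice-timing reference differ in detail, and the temporal and dispersion derivatives are omitted.

```python
import math
import numpy as np

def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density evaluated at times t (zero for t <= 0)."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = (t[pos] ** (shape - 1) * np.exp(-t[pos] / scale)
                / (math.gamma(shape) * scale ** shape))
    return out

def double_gamma_hrf(dt=0.1, length=32.0, peak=6.0, undershoot=16.0, ratio=6.0):
    """Double-gamma HRF sampled every dt seconds, scaled to unit sum (assumed defaults)."""
    t = np.arange(0.0, length, dt)
    h = gamma_pdf(t, peak) - gamma_pdf(t, undershoot) / ratio
    return h / h.sum()

def event_regressor(onsets, n_scans, tr, dt=0.1):
    """Convolve delta functions at the onsets (s) with the HRF; sample once per scan."""
    n_micro = int(round(n_scans * tr / dt))
    deltas = np.zeros(n_micro)
    for onset in onsets:
        idx = int(round(onset / dt))
        if 0 <= idx < n_micro:
            deltas[idx] += 1.0
    conv = np.convolve(deltas, double_gamma_hrf(dt))[:n_micro]
    scan_idx = np.round(np.arange(n_scans) * tr / dt).astype(int)
    return conv[scan_idx]

reg = event_regressor([0.0, 30.0], n_scans=40, tr=2.5)
print(np.round(reg[:6], 4))
```

In the full model one such regressor would be built per event-type (for example, all Both Correct onsets), alongside the nuisance regressors described in the next paragraph.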

Five event-types of interest were defined: studied words later attracting correct source judgments for both features (Both Correct), for color only (Color Only), or for location only (Location Only); studied words attracting a correct recognition judgment but for which neither source feature was correctly judged (Item Only); and studied words that were later incorrectly judged to be new (Miss). Only words that were correctly classified on the animacy task were included. Words that were incorrectly classified at study, or for which a response was omitted, were modeled as events of no interest, as were buffer items and words presented in the size task. Six regressors modeling movement-related variance (three rigid-body translations and three rotations determined from the realignment stage) and two session-specific constant terms modeling the mean over scans in each session were also included in the design matrix.
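The sorting of study trials into the five event-types follows a fixed decision order: study accuracy first, then recognition, then the two source judgments. A minimal sketch of that logic is given below. The `StudyTrial` record and its field names are hypothetical; buffer items and size-task words, which were also modeled as events of no interest, are assumed to have been filtered out beforehand.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

@dataclass
class StudyTrial:
    """One critical study trial and its later test outcome (hypothetical record)."""
    animacy_correct: bool                    # False also covers omitted study responses
    recognized: bool                         # judged "old" at test
    color_correct: Optional[bool] = None     # None when no source judgment was made
    location_correct: Optional[bool] = None

def classify(trial: StudyTrial) -> str:
    """Map a study trial onto one of the event-types used in the GLM."""
    if not trial.animacy_correct:
        return "NoInterest"
    if not trial.recognized:
        return "Miss"
    if trial.color_correct and trial.location_correct:
        return "BothCorrect"
    if trial.color_correct:
        return "ColorOnly"
    if trial.location_correct:
        return "LocationOnly"
    return "ItemOnly"

def condition_counts(trials):
    """Number of trials per event-type, e.g. to check minimum trial counts."""
    return Counter(classify(t) for t in trials)

print(classify(StudyTrial(True, True, color_correct=True, location_correct=False)))  # ColorOnly
```

Counting trials per event-type in this way is also what a minimum-trials criterion, such as the 10-trial threshold used to exclude one volunteer, would be applied to.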

The timeseries in each voxel were highpass-filtered to 1/128 Hz to remove low-frequency noise and scaled within-session to a grand mean of 100 across both voxels and scans. Parameter estimates for events of interest were estimated using a General Linear Model. Nonsphericity of the error covariance was accommodated by an AR(1) model, in which the temporal autocorrelation was estimated by pooling over suprathreshold voxels (Friston et al., 2002). The parameters for each covariate and the hyperparameters governing the error covariance were estimated using Restricted Maximum Likelihood (ReML). Effects of interest were tested using linear contrasts of the parameter estimates. These contrasts were carried forward to a second stage in which subjects were treated as a random effect. Unless otherwise specified, only effects surviving an uncorrected threshold of p < .001 and including five or more contiguous voxels were interpreted. The peak voxels of clusters exhibiting reliable effects are reported in MNI coordinates.
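SPM implements the 1/128 Hz high-pass filter by regressing out a set of low-frequency discrete cosine transform (DCT) basis functions. The sketch below follows that approach. The rule for the number of basis functions (floor(2·N·TR/128 + 1), with the constant column dropped so the mean is left intact) is our reading of SPM's convention and should be checked against `spm_filter` before being relied on; the function names are illustrative. For a session of 187 scans (191 acquired minus the four discarded) at TR = 2.5 s, the rule gives 8 basis functions, 7 of which are removed.

```python
import numpy as np

def dct_basis(n_scans, n_basis):
    """Orthonormal DCT-II basis; column 0 is the constant term."""
    n = np.arange(n_scans)
    X = np.zeros((n_scans, n_basis))
    X[:, 0] = 1.0 / np.sqrt(n_scans)
    for k in range(1, n_basis):
        X[:, k] = np.sqrt(2.0 / n_scans) * np.cos(np.pi * (2 * n + 1) * k / (2 * n_scans))
    return X

def highpass(y, tr, cutoff=128.0):
    """Remove drifts slower than 1/cutoff Hz from y (scans, or scans x voxels)."""
    y = np.asarray(y, dtype=float)
    n_scans = y.shape[0]
    n_basis = int(np.floor(2 * n_scans * tr / cutoff + 1))  # assumed SPM-style rule
    X0 = dct_basis(n_scans, n_basis)[:, 1:]  # drop the constant so the mean survives
    return y - X0 @ (X0.T @ y)  # X0 is orthonormal, so X0.T @ y are the fitted weights

rng = np.random.default_rng(0)
drift = np.linspace(0.0, 10.0, 187)                  # slow linear scanner drift
y = 100.0 + drift + rng.normal(0.0, 1.0, 187)
print(round(float(np.ptp(highpass(y, tr=2.5) - 100.0)), 2))
```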

Regions of overlap between the outcomes of two contrasts were identified by inclusively masking the relevant SPMs. Exclusive masking was employed to identify voxels where effects were not shared between two contrasts. In each case, the SPM constituting the exclusive mask was thresholded at p < .05, whereas the contrast to be masked was thresholded at p < .001. Note that the more liberal the threshold of an exclusive mask, the more conservative the masking procedure: a liberal threshold allows more voxels in the mask to reach significance, and each of these voxels is then removed from the masked contrast.
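Voxel-wise, inclusive masking is an intersection of two thresholded maps, and exclusive masking removes from the target map any voxel that reaches the mask threshold. The sketch below operates on arrays of voxel p-values and reproduces the point that a more liberal exclusive-mask threshold leaves fewer voxels. The thresholds for the inclusive case are assumptions (the text states thresholds only for exclusive masking), the five-voxel extent criterion is not applied, and the function names are illustrative.

```python
import numpy as np

def inclusive_mask(p_a, p_b, alpha_a=0.001, alpha_b=0.001):
    """Voxels significant in both contrasts (overlap). Thresholds are assumed."""
    return (np.asarray(p_a) < alpha_a) & (np.asarray(p_b) < alpha_b)

def exclusive_mask(p_target, p_mask, alpha_target=0.001, alpha_mask=0.05):
    """Voxels significant in the target contrast but not reaching the mask threshold."""
    return (np.asarray(p_target) < alpha_target) & ~(np.asarray(p_mask) < alpha_mask)

p_target = np.array([1e-4, 1e-4, 1e-4, 1e-2])  # toy p-values, not study data
p_mask = np.array([0.50, 0.03, 0.001, 0.50])
print(exclusive_mask(p_target, p_mask).tolist())                   # [True, False, False, False]
print(exclusive_mask(p_target, p_mask, alpha_mask=0.01).tolist())  # [True, True, False, False]
```

Loosening the mask threshold from p < .01 to p < .05 removes the second voxel, whose mask p-value of .03 only reaches the more liberal criterion.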

Table 1.

Probabilities of making a correct source judgment, conditional on whether or not the other source judgment was also accurate (mean, with SD in parentheses)

| Class of response | Probability |
|---|---|
| Color correct, given Location correct | .47 (.17) |
| Color correct, given Location incorrect | .39 (.12) |
| Location correct, given Color correct | .57 (.14) |
| Location correct, given Color incorrect | .49 (.11) |
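Each cell of Table 1 is a conditional proportion computed over recognized items, for which both source judgments were made. If color and location were retrieved independently, each probability conditional on the other judgment being correct would equal the corresponding probability conditional on it being incorrect; a gap between the two rows indicates dependence (cf. Meiser and Broder, 2002; Starns and Hicks, 2005). The sketch below computes the four proportions for one participant; the data are a toy example, not values from this study, and the function name is illustrative. Table entries would be means of these per-participant values.

```python
import math

COLOR, LOCATION = 0, 1  # positions within each (color_correct, location_correct) pair

def conditional_accuracy(trials):
    """Conditional source accuracy from (color_correct, location_correct) pairs.

    trials: recognized study items only. Returns NaN for an empty conditioning cell.
    """
    trials = list(trials)

    def p(target, given, value):
        subset = [t for t in trials if t[given] == value]
        if not subset:
            return math.nan  # undefined when no trials meet the condition
        return sum(t[target] for t in subset) / len(subset)

    return {
        "Color correct | Location correct": p(COLOR, LOCATION, True),
        "Color correct | Location incorrect": p(COLOR, LOCATION, False),
        "Location correct | Color correct": p(LOCATION, COLOR, True),
        "Location correct | Color incorrect": p(LOCATION, COLOR, False),
    }

toy = [(True, True), (True, False), (False, True), (False, False), (True, True)]
for label, value in conditional_accuracy(toy).items():
    print(f"{label}: {value:.2f}")
```

In this toy data set, each feature is correct on two-thirds of trials where the other feature is correct, but on only half of trials where the other feature is wrong.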

Acknowledgments

This research was supported by the National Institute of Mental Health (1R01MH074528); L. Otten was supported by the Wellcome Trust. The authors thank the members of the UCI Research Imaging Center for their assistance with fMRI data acquisition.

References

- Alvarez P, Squire LR. Memory consolidation and the medial temporal lobe: a simple network model. Proc Natl Acad Sci USA. 1994;91:7041–7045. doi: 10.1073/pnas.91.15.7041.

- Ashburner J, Friston KJ. Nonlinear spatial normalization using basis functions. Hum Brain Mapp. 1999;7:254–266. doi: 10.1002/(SICI)1097-0193(1999)7:4<254::AID-HBM4>3.0.CO;2-G.

- Baddeley AD, Lewis V, Eldridge M, Thomson N. Attention and retrieval from long-term memory. J Exp Psychol Gen. 1984;113:518–540.

- Baker JT, Sanders AL, Maccotta L, Buckner RL. Neural correlates of verbal memory encoding during semantic and structural processing tasks. Neuroreport. 2001;12:1251–1256. doi: 10.1097/00001756-200105080-00039.

- Blumenfeld RS, Ranganath C. Dorsolateral prefrontal cortex promotes long-term memory formation through its role in working memory organization. J Neurosci. 2006;26:916–925. doi: 10.1523/JNEUROSCI.2353-05.2006.

- Brewer JB, Zhao Z, Desmond JE, Glover GH, Gabrieli JD. Making memories: brain activity that predicts how well visual experience will be remembered. Science. 1998;281:1185–1187. doi: 10.1126/science.281.5380.1185.

- Burton MW, LoCasto PC, Krebs-Noble D, Gullapalli RP. A systematic investigation of the functional neuroanatomy of auditory and visual phonological processing. Neuroimage. 2005;26:647–661. doi: 10.1016/j.neuroimage.2005.02.024.

- Cansino S, Maquet P, Dolan RJ, Rugg MD. Brain activity underlying encoding and retrieval of source memory. Cereb Cortex. 2002;12:1048–1056. doi: 10.1093/cercor/12.10.1048.

- Chalfonte BL, Johnson MK. Feature memory and binding in young and older adults. Mem Cognit. 1996;24:403–416. doi: 10.3758/bf03200930.

- Chao LL, Martin A. Cortical regions associated with perceiving, naming, and knowing about colors. J Cogn Neurosci. 1999;11:25–35. doi: 10.1162/089892999563229.

- Cocosco C, Kollokian V, Kwan RS, Evans A. Brainweb: online interface to a 3D MRI simulated brain database. Neuroimage. 1997;5:S425.

- Committeri G, Galati G, Paradis AL, Pizzamiglio L, Berthoz A, LeBihan D. Reference frames for spatial cognition: different brain areas are involved in viewer-, object-, and landmark-centered judgments about object location. J Cogn Neurosci. 2004;16:1517–1535. doi: 10.1162/0898929042568550.

- Craik FIM, Govoni R, Naveh-Benjamin M, Anderson ND. The effects of divided attention on encoding and retrieval processes in human memory. J Exp Psychol Gen. 1996;125:159–180. doi: 10.1037//0096-3445.125.2.159.

- Cusack R. The intraparietal sulcus and perceptual organization. J Cogn Neurosci. 2005;17:641–651. doi: 10.1162/0898929053467541.

- Davachi L, Maril A, Wagner AD. When keeping in mind supports later bringing to mind: neural markers of phonological rehearsal predict subsequent remembering. J Cogn Neurosci. 2001;13:1059–1070. doi: 10.1162/089892901753294356.

- Davachi L, Mitchell JP, Wagner AD. Multiple routes to memory: Distinct medial temporal lobe processes build item and source memories. Proc Natl Acad Sci USA. 2003;100:2157–2162. doi: 10.1073/pnas.0337195100.

- Davachi L, Wagner AD. Hippocampal contributions to episodic encoding: insights from relational and item-based learning. J Neurophysiol. 2002;88:982–990. doi: 10.1152/jn.2002.88.2.982.

- Demonet JF, Chollet F, Ramsay S, Cardebat D, Nespoulous JL, Wise R, Rascol A, Frackowiak R. The anatomy of phonological and semantic processing in normal subjects. Brain. 1992;115:1753–1768. doi: 10.1093/brain/115.6.1753.

- Eichenbaum H. The hippocampal system and declarative memory in animals. J Cogn Neurosci. 1992;4:217–231. doi: 10.1162/jocn.1992.4.3.217.

- Eichenbaum H. Hippocampus: cognitive processes and neural representations that underlie declarative memory. Neuron. 2004;44:109–120. doi: 10.1016/j.neuron.2004.08.028.

- Friedman-Hill SR, Robertson LC, Treisman A. Parietal contributions to visual feature binding: Evidence from a patient with bilateral lesions. Science. 1995;269:853–855. doi: 10.1126/science.7638604.

- Frings L, Wagner K, Quiske A, Schwarzwald R, Spreer J, Halsband U, Schulze-Bonhage A. Precuneus is involved in allocentric spatial location encoding and recognition. Exp Brain Res. In press. doi: 10.1007/s00221-006-0408-8.

- Friston KJ, Holmes AP, Worsley KJ, Poline JB, Frith CD, Frackowiak RSJ. Statistical parametric maps in functional imaging: a general linear approach. Hum Brain Mapp. 1995;2:189–210.

- Friston KJ, Fletcher P, Josephs O, Holmes A, Rugg MD, Turner R. Event-related fMRI: characterizing differential responses. Neuroimage. 1998;7:30–40. doi: 10.1006/nimg.1997.0306.

- Friston KJ, Penny W, Phillips C, Kiebel S, Hinton G, Ashburner J. Classical and Bayesian inference in neuroimaging: theory. Neuroimage. 2002;16:465–483. doi: 10.1006/nimg.2002.1090.

- Gold BT, Buckner RL. Common prefrontal regions coactivate with dissociable posterior regions during controlled semantic and phonological tasks. Neuron. 2002;35:803–812. doi: 10.1016/s0896-6273(02)00800-0.

- Gold JJ, Smith CN, Bayley PJ, Shrager Y, Brewer JB, Stark CE, Hopkins RO, Squire LR. Item memory, source memory, and the medial temporal lobe: Concordant findings from fMRI and memory-impaired patients. Proc Natl Acad Sci USA. 2006;103:9351–9356. doi: 10.1073/pnas.0602716103.

- Hanna A, Remington R. The representation of color and form in long-term memory. Mem Cognit. 1996;24:322–330. doi: 10.3758/bf03213296.

- Hayman CAG, Rickards C. A dissociation in the effects of study modality on tests of implicit and explicit memory. Mem Cognit. 1995;23:95–112. doi: 10.3758/bf03210560.

- Humphreys GW. Neural representation of objects in space: A dual coding account. Philos Trans R Soc Lond B Biol Sci. 1998;353:1341–1351. doi: 10.1098/rstb.1998.0288.

- Jackson O, Schacter DL. Encoding activity in anterior medial temporal lobe supports subsequent associative recognition. Neuroimage. 2004;21:456–462. doi: 10.1016/j.neuroimage.2003.09.050.

- Johnson MK, Chalfonte BL. Binding complex memories: The role of reactivation and the hippocampus. In: Schacter DL, Tulving E, editors. Memory Systems 1994. Cambridge, MA: MIT Press; 1994. pp. 311–350.

- Josephs O, Henson RN. Event-related functional magnetic resonance imaging: modelling, inference and optimization. Philos Trans R Soc Lond B Biol Sci. 1999;354:1215–1228. doi: 10.1098/rstb.1999.0475.

- Kellenbach ML, Brett M, Patterson K. Large, colorful, or noisy? Attribute- and modality-specific activations during retrieval of perceptual attribute knowledge. Cogn Affect Behav Neurosci. 2001;1:207–221. doi: 10.3758/cabn.1.3.207.

- Kellogg RT. Conscious attentional demands of encoding and retrieval from long term memory. Am J Psychol. 1982;95:183–198.

- Kensinger EA, Schacter DL. Amygdala activity is associated with the successful encoding of item, but not source, information for positive and negative stimuli. J Neurosci. 2006;26:2564–2570. doi: 10.1523/JNEUROSCI.5241-05.2006.

- Kirwan CB, Stark CEL. Medial temporal lobe activation during encoding and retrieval of novel face–name pairs. Hippocampus. 2004;14:919–930. doi: 10.1002/hipo.20014.

- Kohler S, Moscovitch M, Melo B. Episodic memory for object location versus episodic memory for object identity: Do they rely on distinct encoding processes? Mem Cognit. 2001;29:948–959. doi: 10.3758/bf03195757.

- Light LL, Berger DE. Are there long-term ‘literal copies’ of visually presented words? J Exp Psychol Hum Learn Mem. 1976;2:654–662.

- Marr D. Simple memory: A theory for archicortex. Philos Trans R Soc Lond B Biol Sci. 1971;262:23–81. doi: 10.1098/rstb.1971.0078.

- Marsh RL, Hicks JL, Cook GI. Focused attention on one contextual attribute does not reduce source memory for a different attribute. Memory. 2004;12:183–192. doi: 10.1080/09658210344000008.

- Mayes AR, Montaldi D, Spencer TJ, Roberts N. Recalling spatial information as a component of recently and remotely acquired episodic or semantic memories: an fMRI study. Neuropsychology. 2004;18:426–441. doi: 10.1037/0894-4105.18.3.426.

- Meiser T, Broder A. Memory for multidimensional source information. J Exp Psychol Learn Mem Cogn. 2002;28:116–137. doi: 10.1037/0278-7393.28.1.116.

- Mitchell JP, Macrae CN, Banaji MR. Encoding-specific effects of social cognition on the neural correlates of subsequent memory. J Neurosci. 2004;24:4912–4917. doi: 10.1523/JNEUROSCI.0481-04.2004.

- Moscovitch M. Memory and working with memory: A component process model based on modules and central systems. J Cogn Neurosci. 1992;4:257–267. doi: 10.1162/jocn.1992.4.3.257.

- Naveh-Benjamin M. Coding of spatial location information: An automatic process? J Exp Psychol Learn Mem Cogn. 1987;13:595–605. doi: 10.1037//0278-7393.13.4.595.

- Norman DA. Memory while shadowing. Q J Exp Psychol. 1969;21:85–93. doi: 10.1080/14640746908400200.

- Norman KA, O’Reilly RC. Modeling hippocampal and neocortical contributions to recognition memory: a complementary-learning-systems approach. Psychol Rev. 2003;110:611–646. doi: 10.1037/0033-295X.110.4.611.

- O’Reilly RC, Rudy JW. Conjunctive representations in learning and memory: principles of cortical and hippocampal function. Psychol Rev. 2001;108:311–345. doi: 10.1037/0033-295x.108.2.311.

- Otten LJ, Rugg MD. Task-dependency of the neural correlates of episodic encoding as measured by fMRI. Cereb Cortex. 2001;11:1150–1160. doi: 10.1093/cercor/11.12.1150.

- Otten LJ, Henson RN, Rugg MD. State-related and item-related neural correlates of successful memory encoding. Nat Neurosci. 2002;5:1339–1344. doi: 10.1038/nn967.

- Paller KA, Wagner AD. Observing the transformation of experience into memory. Trends Cogn Sci. 2002;6:93–102. doi: 10.1016/s1364-6613(00)01845-3.

- Poldrack RA, Wagner AD, Prull MW, Desmond JE, Glover GH, Gabrieli JDE. Functional specialization for semantic and phonological processing in the left inferior prefrontal cortex. Neuroimage. 1999;10:15–35. doi: 10.1006/nimg.1999.0441.

- Ranganath C, Yonelinas AP, Cohen MX, Dy CJ, Tom SM, D’Esposito M. Dissociable correlates of recollection and familiarity within the medial temporal lobes. Neuropsychologia. 2003;42:2–13. doi: 10.1016/j.neuropsychologia.2003.07.006.

- Robertson L. Binding, spatial attention and perceptual awareness. Nat Rev Neurosci. 2003;4:93–102. doi: 10.1038/nrn1030.

- Rolls ET. Memory systems in the brain. Annu Rev Psychol. 2000;51:599–630. doi: 10.1146/annurev.psych.51.1.599.

- Rugg MD, Otten LJ, Henson RN. The neural basis of episodic memory: evidence from functional neuroimaging. Philos Trans R Soc Lond B Biol Sci. 2002;357:1097–1110. doi: 10.1098/rstb.2002.1102.

- Shafritz KM, Gore JC, Marois R. The role of the parietal cortex in visual feature binding. Proc Natl Acad Sci USA. 2002;99:10917–10922. doi: 10.1073/pnas.152694799.

- Sommer T, Rose M, Weiller C, Buchel C. Contributions of occipital, parietal and parahippocampal cortex to encoding of object-location associations. Neuropsychologia. 2005;43:732–743. doi: 10.1016/j.neuropsychologia.2004.08.002.

- Sommer T, Rose M, Buchel C. Dissociable parietal systems for primacy and subsequent memory effects. Neurobiol Learn Mem. 2006;85:243–251. doi: 10.1016/j.nlm.2005.11.002.

- Sperling R, Chua E, Cocchiarella A, Rand-Giovannetti E, Poldrack R, Schacter DL, Albert M. Putting names to faces: successful encoding of associative memories activates the anterior hippocampal formation. Neuroimage. 2004;20:1400–1410. doi: 10.1016/S1053-8119(03)00391-4.

- Starns JJ, Hicks JL. Source dimensions are retrieved independently in multidimensional monitoring tasks. J Exp Psychol Learn Mem Cogn. 2005;31:1213–1220. doi: 10.1037/0278-7393.31.6.1213.

- Treisman A. Feature binding, attention and object perception. Philos Trans R Soc Lond B Biol Sci. 1998;353:1295–1306. doi: 10.1098/rstb.1998.0284.

- Treisman A, Gelade G. Feature-integration theory of attention. Cogn Psychol. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5.

- Tulving E. Memory and consciousness. Can Psychol. 1985;26:1–12.

- Uncapher MR, Rugg MD. Encoding and the durability of episodic memory: a functional magnetic resonance imaging study. J Neurosci. 2005;25:7260–7267. doi: 10.1523/JNEUROSCI.1641-05.2005.

- Wagner AD, Schacter DL, Rotte M, Koutstaal W, Maril A, Dale AM, Rosen BR, Buckner RL. Building memories: remembering and forgetting of verbal experiences as predicted by brain activity. Science. 1998;281:1188–1191. doi: 10.1126/science.281.5380.1188.

- Wolbers T, Buchel C. Dissociable retrosplenial and hippocampal contributions to successful formation of survey representations. J Neurosci. 2005;25:3333–3340. doi: 10.1523/JNEUROSCI.4705-04.2005.

- Yonelinas AP. Components of episodic memory: the contribution of recollection and familiarity. Philos Trans R Soc Lond B Biol Sci. 2001;356:1363–1374. doi: 10.1098/rstb.2001.0939.

- Zeineh MM, Engel SA, Thompson PM, Bookheimer SY. Dynamics of the hippocampus during encoding and retrieval of face-name pairs. Science. 2003;299:577–580. doi: 10.1126/science.1077775.