Abstract

Visual cues from faces provide important social information relating to individual identity, sexual attraction and emotional state. Behavioural and neurophysiological studies on both monkeys and sheep have shown that specialized skills and neural systems for processing these complex cues to guide behaviour have evolved in a number of mammals and are not present exclusively in humans. Indeed, there are remarkable similarities in the ways that faces are processed by the brain in humans and other mammalian species. While human studies with brain imaging and gross neurophysiological recording approaches have revealed global aspects of the face-processing network, they cannot investigate how information is encoded by specific neural networks. Single neuron electrophysiological recording approaches in both monkeys and sheep have, however, provided some insights into the neural encoding principles involved and, particularly, the presence of a remarkable degree of high-level encoding even at the level of a specific face. Recent developments that allow simultaneous recordings to be made from many hundreds of individual neurons are also beginning to reveal evidence for global aspects of a population-based code. This review will summarize what we have learned so far from these animal-based studies about the way the mammalian brain processes faces and the emotions they can communicate, as well as associated capacities such as how identity and emotion cues are dissociated and how face imagery might be generated. It will also try to highlight which questions remain open and what advances in knowledge are still needed to provide a complete understanding of just how brain networks perform this complex and important social recognition task.

Keywords: face recognition, face emotion, face imagery, neural encoding, temporal cortex

1. Introduction

The use of visual cues for recognition of individuals and their emotional state is of key importance for humans and has clear advantages over olfactory cues which may need to be highly proximal and auditory ones that are totally dependent upon whether an individual vocalizes or not. Therefore, it is not surprising that social recognition using visual cues is widely used by diurnal social mammals. However, there has been some debate as to the extent that the use of specialized visual cues from the face, and consequent development of associated specializations within the brain, is a unique feature of primate social evolution. This is also particularly suggested for face emotion with the extensive evolution of facial expressions in primates, notably in the great apes and humans.

However, studies performed on ungulate species such as sheep and goats, particularly those in our own group, yield compelling evidence for the use of facial cues in both identification and recognition of emotional state and associated brain specializations in sub-primate mammals. This has offered further opportunities to investigate general principles of how the brain may be organized to carry out the highly complex task of distinguishing between large subsets of highly homogeneous faces. It also provides further opportunities to develop a better understanding of how identity and emotion cues are integrated by these specialized systems as well as offering potential insights into the extent to which other species may be able to form mental images of the faces of individuals who are missing from the social environment.

In this review, we focus on what we have learned about the abilities of different mammalian species to use visual cues from the face to identify individuals. We also consider the extent to which faces provide an important source of potential information for social attraction and interpretation of emotional state. We then consider what has been learned from neurophysiological experiments on non-human species about the way the brain encodes faces during perception and imagery. Finally, we suggest where future research may further advance our understanding of how brain networks process these important social signals.

2. Behavioural evidence

Compared with the large amount of behavioural work carried out on human face recognition, there is considerably less concerning other animal species. Of course, faces are just exemplars of complex visual patterns, so it should be possible to demonstrate face-recognition abilities in many different species. However, it is important in this context to show firstly whether individuals of a particular species normally use facial cues to identify others. Secondly, if we are to compare other species with humans, do the same advantages and limitations exist in a particular species for recognizing faces that have been demonstrated in humans? Such evidence would indicate the presence in the brain of similar mechanisms specific to processing faces and distinct from those for processing other visual objects.

3. Face identity recognition and memory for faces

(a) Face recognition in non-human primates

The human face processing system has been found to be configurally sensitive and exhibits a profound inversion effect (Yin 1969) and shows evidence for hemispheric asymmetry (see review by Kanwisher & Yovel 2006). Given that most non-human primates have excellent binocular vision and are social animals living in large cohorts, it may be expected that there will exist a system for the visual recognition of conspecifics. Indeed, many studies (Rosenfeld & Van Hoesen 1979; Bruce 1982; Phelps & Roberts 1994; Pascalis et al. 1998; Weiss et al. 2001) have found evidence for face-recognition abilities in different non-human primate species.

Researchers have also examined whether non-human primates make use of features corresponding to those used by humans for individual recognition. Dittrich (1990) found that the configuration of facial features was important to macaques and concluded that the facial outline was important for recognition, as well as both the eyes and the mouth, suggesting an emotional component to their recognition system. Study of schematic face preferences in infant macaques ascertained that before one month of age, the configuration of the features of the face was more important in recognition than the features themselves (Kuwahata et al. 2004). Investigations into an inversion effect in non-human primates have shown mixed and contrasting results. Perrett et al. (1988) showed that monkeys were significantly slower to respond to faces presented upside-down compared with upright faces, although others (Rosenfeld & Van Hoesen 1979; Bruce 1982) found that macaques were able to distinguish faces regardless of their orientation. It is likely that non-human primates show an inversion effect to some degree, but this may simply degrade rather than abolish recognition ability (Perrett et al. 1995). In humans, some adjustments to face stimuli, such as changes in colour and lighting, have no effect on recognition ability. A similar effect is found in non-human primates, with alterations in colour, lighting or size having no effect on the animal's ability to recognize a face (Dittrich 1990; Hietanen et al. 1992).

(b) Face recognition in sheep

Sheep, like other ungulates such as goats, cattle and horses, are a social species that live in large groups and possess a highly developed visual sense. The importance of visual cues from the face for individual recognition in natural social contexts was first suggested by Shillito-Walser (1978) who noted that mother ewes had difficulty in recognizing their lambs at a distance when the appearance of the head region was altered using coloured dyes.

Behavioural studies in our laboratory using choice mazes and operant discrimination tasks have revealed quite remarkable face-recognition abilities in sheep, similar to those found in humans. The first of these simply showed preferences for particular types of faces independent of learning, which therefore indicated that the animals were using face-recognition cues as part of their normal lives. Here, the reward for choosing a particular face was gaining access to the individual to whom the face belonged. These experiments showed that sheep could discriminate between sheep and human faces, between different breeds of sheep and between sexes in the same breed (Kendrick et al. 1995). The eyes appeared to be the most important single feature in recognition, as in humans. Interestingly, while sheep had greater difficulty in discriminating between the same individuals using vocal cues, mismatching face and vocal cues for the same individuals impaired performance, suggesting some degree of integration between face and vocal processing.

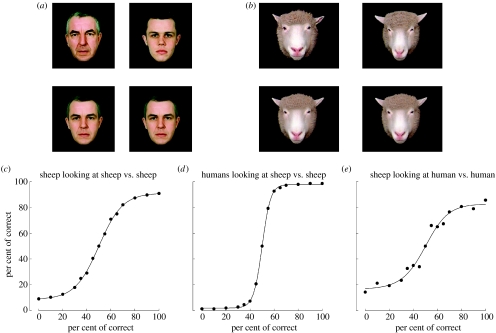

By employing test paradigms which use face pairs of either socially familiar or unfamiliar faces and by providing food rewards for correct choices, we have found that sheep have very good acuity for discriminating between faces. They can still recognize face pictures reduced to one-third of normal size, and using face-morphing programmes, we have shown that they can discriminate between pairs of faces which differ only 10% from one another (figure 1).

Figure 1.

(a) Example of human face pictures that sheep (n=8) learned to discriminate between and below the same pictures morphed so that the difference between them is reduced to 10%; (b) same as (a) but for sheep face pictures and these were also tested on 10 human subjects to provide a comparison; (c) discrimination accuracy curve plotted for sheep discriminating between different degrees of morphing between the two images; (d) same but for humans looking at sheep and (e) for the same sheep looking at humans. In all the cases, 70% choice is considered significant (p<0.05).
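
The 70% criterion in the caption can be related to a chance level of 50% with a one-sided binomial test. The sketch below is only an illustration: the caption does not state how many trials each point is based on or which statistical test was used, so the trial counts and the function name `binomial_tail` are assumptions.

```python
from math import comb


def binomial_tail(n_trials: int, n_correct: int, p_chance: float = 0.5) -> float:
    """One-sided probability of at least n_correct successes by chance alone."""
    return sum(
        comb(n_trials, k) * p_chance**k * (1 - p_chance) ** (n_trials - k)
        for k in range(n_correct, n_trials + 1)
    )


# Hypothetical trial counts; the source does not report them.
for n_trials in (20, 40, 80):
    n_correct = 7 * n_trials // 10  # 70% choice of the rewarded face
    p_value = binomial_tail(n_trials, n_correct)
    print(f"{n_trials} trials, {n_correct} correct: p = {p_value:.4f}")
```

Under these assumptions, 70% correct falls short of p<0.05 with 20 trials but reaches it with 40, so a criterion of this kind implies a reasonably large number of trials per test.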

To date, we have not investigated the developmental time courses for face recognition in detail. However, initial studies attempted to determine how long it took for lambs to learn to recognize their mother's face; it was clear that this took at least one to two months (Kendrick 1998). Therefore, it seems likely that this reflects a slow time course, as in humans, and a lengthy phase of developing the necessary expertise through learning.

But do sheep recognize faces in the same expert way as humans? Sheep also show classical inversion effects with faces but not objects and can use configural cues from the internal features of faces in the same way as we do (Kendrick et al. 1996; Peirce et al. 2000). They learn to discriminate between the faces of socially familiar individuals to obtain a reward more quickly than with unfamiliar ones (Kendrick et al. 1996) and can remember faces of conspecifics for a period of up to 2 years (Kendrick et al. 2001c). They can also recognize different human faces and show inversion effects, although they take longer to learn to discriminate between them (Kendrick et al. 1996). Under free-viewing conditions, Peirce et al. (2000) found evidence for a left visual-field bias (right-hemisphere advantage) for familiar, but not unfamiliar, sheep faces in a series of experiments using chimeric face composites, an effect also found in human face processing. Interestingly, sheep did not show this visual-field bias for human faces (Peirce et al. 2001), suggesting that there is an expertise and familiarity requirement for developing a right brain hemisphere advantage.

Therefore, sheep seem to have a specialized ability for identifying faces comparable with non-human primates and, as such, provide an important additional animal model for studying the neural basis of face processing.

4. Face attraction

Both monkeys and sheep exhibit preferences for faces of familiar conspecifics, but this does not necessarily address the question as to whether they find faces attractive per se or if some faces are more attractive than others. However, we have shown that female sheep exhibit specific preferences for the faces of individual males independent of social familiarity with them (Kendrick et al. 1995). In addition, simple exposure to face pictures of sheep of the same breed (but unfamiliar individuals) reduces behavioural, autonomic and endocrine indices of stress caused by social isolation (da Costa et al. 2004).

Cross-fostering studies between sheep and goats have also shown that general preferences for sheep and goat faces are determined by the species providing maternal care even when individuals are exposed to extensive social interactions with both the species during development (Kendrick et al. 1998, 2001b). There is a small amount of evidence suggesting that this may also be the case in monkeys (Fujita 1993).

5. Face-based emotion recognition (FaBER)

The expression of emotions in action and physiology is mainly determined by brain structures and neuronal networks, such as the limbic system (overview, Morgane et al. 2005). These networks have been conserved and are, at least to a certain extent, shared between the higher vertebrate phyla.

In any social animal species, emotion displays are sources of information, have evocative functions and provide incentives for desired social behaviour. The expression of emotional signals therefore represents both an emotional response and a social communication. Initially, the ability to extract emotional information from facial expression was attributed exclusively to species with sophisticated orofacial motor systems (primates and humans, Chevalier-Skolnikoff 1973; Sherwood et al. 2005). However, our own research on sheep which, as we have described above, have excellent acuity in using visual cues for recognizing the identity of individuals from facial cues, also reveals a capacity for responding to emotion cues in faces. Therefore, it seems likely that face-based emotion recognition (FaBER) might be quite widespread in mammals with good visual acuity.

Obviously, carrying out formal behavioural assessments of face-emotion recognition in animals can be quite difficult in terms of determining the optimal stimulus face pictures for conveying a particular emotion expression. This is important not only for the face appearance, but also in terms of the role of dynamic aspects of the making of expression. Another very important consideration is to control face stimuli such that neither identity cues nor subtle differences in the images used can be used to make a discrimination independent of the emotion cues.

(a) Face emotion recognition in non-human primates

Species with highly developed orofacial motor systems, such as apes and monkeys (e.g. Huber 1931) possess a large repertoire of emotional facial displays. Like humans, facial expressions in non-human primates are not limited to ‘discrete’ displays of a given emotion, allowing the expressed emotion to be ‘graded’ (Marler 1976). Thus, facial expression of emotion is partly a dynamic process involving eye, eyelid and mouth movements as well as changes in the shape of a facial feature or the appearance of additional features (e.g. displaying teeth; Van Hooff 1962). This means that FaBER requires the perception and processing of both static and transient facial features, including different face views (e.g. full versus profile) and head positions. This explains why, in general, the performance of animals during any kind of FaBER tests depends strongly on the design of the behavioural tests, e.g. whether static face images or dynamic video presentations are presented (e.g. Bauer & Phillip 1983; Parr 2003).

Apes and monkeys looking at face pictures show a predominant interest in the eyes and the region surrounding eyes and mouth, i.e. the primary components of facial expressions (Keating & Keating 1982; Nahm 1997). Behavioural studies in apes suggest that, for certain emotions, FaBER depends on the number of specific facial features that differs between two expression types, such as eye shape, mouth position or the presence of teeth (Parr et al. 1998). However, other expressions are reliably recognized despite the absence of such distinctive visual features (Parr 2003). Like humans, but unlike other monkey species (Kanazawa 1996), apes seem to process conspecific face expressions categorically (Parr 2003). Experiments using face chimeras have also shown that they exhibit a left visual-field advantage for face emotion recognition (Fernandez-Carriba et al. 2002a,b).

(b) Face emotion recognition in sheep

In combination with general body language, sheep, like other ungulates, use facial features to display emotional information. These displays are limited to negatively valenced emotions, such as stress or anxiety. However, it seems reasonable to assume that the absence of a facial display of negative emotion plays an equally important role during social communication. Stress-related facial cues include enlarged protruding eyes, pupils showing the whites, flared nostrils and flattened ears. Are sheep able to recognize (and use) face emotion cues to interact socially?

An initial approach to address this question used face pictures of the same sheep when it was calm or stressed/anxious (following a period of social isolation and where heart rate, as an autonomic indicator of stress, was significantly increased; da Costa et al. 2004). We also used human face pictures with the same individual either smiling or showing an expression of anger. When the sheep (n=8) were given a free choice of the two pictures (they received a food reward whichever one they chose), they showed more than 80% preference for the calm sheep face or the smiling human one over the first 40 trials. Similar results were obtained for face sets using familiar or unfamiliar individual sheep or humans.

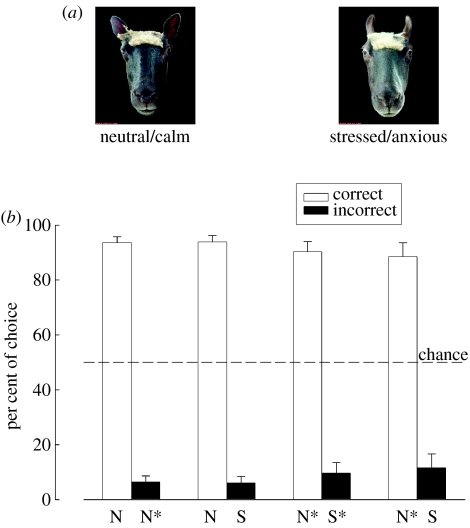

We have also trained sheep to discriminate between pairs of frontal view face pictures of familiar conspecifics, with each pair consisting of a calm versus a stressed facial display (figure 2a) and where choice of the calm face was rewarded but not the stressed one. When presented subsequently with pairs of unfamiliar conspecific faces, sheep significantly preferred the picture displaying a neutral facial expression to the picture displaying signs of stress or anxiety (figure 2b). When the calm face in pairs of familiar conspecifics was replaced by one of the unfamiliar conspecific, the sheep showed a preference for this even though the stressed face was from a familiar animal (figure 2b). As sheep normally prefer the faces of familiar individuals to those of unfamiliar ones, this shows that they find the sight of a stressed face of a familiar individual more aversive than the calm face of an unfamiliar one. From a solely behavioural point of view, this suggests a greater bias towards face emotion cues than for those of identity.

Figure 2.

(a) Pair of sheep faces presented during operant discrimination tasks. An example of (i) neutral/calm sheep face and the same individual displaying (ii) stress/anxiety is shown. During face identity recognition tasks, pairs of neutral/calm faces of two different conspecifics were presented. (b) Performance during face identity and face emotion recognition tasks. Sheep are able to recognize individual conspecifics by their faces ((i) N, neutral/calm face of a familiar conspecific; N*, neutral/calm face of an unfamiliar individual). Furthermore, sheep are able to discriminate between calm and stressed/anxious facial displays of familiar conspecific (N versus S). When presented with the same choice, however, using pictures of unfamiliar conspecifics (N* versus S*), sheep prefer the neutral display. When eventually presented with a choice between unfamiliar neutral faces and familiar stressed/anxious faces ((ii) N* versus S), sheep prefer the unfamiliar neutral faces.

Other experiments have started to reveal the key facial features used for face emotion recognition in sheep. These have shown that ear position and appearance of the eyes are of particular importance. With the latter, the most prominent change in a stressed and anxious state is an increase in the amount of sclera (whites of eyes) visible and pupil dilation. The importance of the amount of white visible in the eyes for fear/stress detection has also been reported in cattle (Sandem et al. 2004). Recent experiments using a two choice maze and chimeric face images have also revealed that sheep, like humans and chimpanzees, use left visual-field cues more than right visual-field ones for detecting negative emotion cues on faces, suggesting a right brain hemisphere bias in detecting negative emotions (Elliker 2006; Kendrick 2006). However, at this stage, we do not know whether the cues from the right visual field are more important for discriminating accurately between face emotions as has been shown in recent human experiments (Indersmitten & Gurr 2003).

6. Face imagery

Although the memory for faces is robust in both monkeys and sheep, this does not mean in itself that they can voluntarily ‘think’ about absent individuals. A key and difficult question to address in animal species other than humans is the capacity to form and use mental imagery. This can allow individuals potentially to ‘think’ of individuals or other objects in their absence and is a key element of consciousness.

However, while it is easy with humans to ask individuals to imagine faces, this is not something which can be done with other species. Thus, it is necessary to develop tasks where the solution would appear to depend upon the ability of an individual to form and hold some kind of mental image. In general, such tasks involve objects which disappear for varying lengths of time and whose identity is required to be remembered in order to complete subsequent choice decisions. The classic one of these is delayed matching or non-matching to sample, where a learning stimulus is first presented and then disappears, followed by a variable delay and then the presentation of two test stimuli, one of which is the same as the original learning stimulus. The correct decision is then to identify which stimulus is the same as (matching) or different from (non-matching) the original. There are a number of variations on the paradigm which can make it simpler, since many animal species, including monkeys, find it quite difficult to learn. However, monkeys, like humans, are generally capable of performing such tasks (Sereno & Amador 2006). Sheep and goats can also perform matching to sample tasks with simple visual symbols (Soltysik & Baldwin 1972) and although they may have some ability to do this with faces, we have had difficulty in getting them to perform consistently (Kendrick et al. 2001c). They are nevertheless capable of remembering the identity and location of disappearing faces at delays of up to 10 s (Man et al. 2003).
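
The structure of a delayed matching or non-matching to sample trial described above can be sketched as a small data structure plus a scoring rule. This is a minimal illustration and not the procedure used in any of the cited studies; the names `Trial`, `make_trial` and `correct_side`, the stimulus labels and the delay values are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Trial:
    sample: str      # learning stimulus shown first, then removed
    delay_s: float   # retention interval before the test stimuli appear
    left: str        # test stimulus presented on the left
    right: str       # test stimulus presented on the right
    rule: str        # "matching" or "non-matching"


def make_trial(stimuli, delays_s, rule, rng):
    """Build one trial: the sample plus one different stimulus, in random positions."""
    sample, foil = rng.sample(stimuli, 2)
    left, right = rng.sample([sample, foil], 2)
    return Trial(sample, rng.choice(delays_s), left, right, rule)


def correct_side(trial):
    """Return the side ("left" or "right") that the rule rewards."""
    sample_side = "left" if trial.left == trial.sample else "right"
    if trial.rule == "matching":
        return sample_side
    return "right" if sample_side == "left" else "left"


rng = random.Random(0)
faces = ["face_A", "face_B", "face_C", "face_D"]  # hypothetical stimulus labels
trial = make_trial(faces, [1.0, 5.0, 10.0], "matching", rng)  # hypothetical delays (s)
print(trial, "->", correct_side(trial))
```

Because the sample is absent during the delay, a subject can only choose the rewarded side reliably if it retains some representation of the sample across the interval, which is why such tasks are used as indirect probes of imagery.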

Another potential example where mental imagery might be employed is in the context of mental rotation. Many spatial memory tasks require a subject to imagine how an object would appear from another viewpoint, and to solve such problems, it is necessary to rotate the test object in some way mentally (e.g. Gaylord & Marsh 1975). The same can be true for faces where a subject is presented only with a frontal or profile view of a face and then required to match another view to it. In sheep, we have found that they can do this with either human or sheep faces without relearning the task (Kendrick et al. 2001c). However, it is always difficult to overcome the potential criticism that there is some element of stimulus generalization being used, even though frontal and profile views of faces appear very different.

7. Neural encoding of face identity, face emotion and face imagery

Research on humans, based primarily on functional imaging and evoked potential experiments and on neuropsychological observations of patients with brain damage, has provided a reasonably detailed picture of the neural substrates involved in face identity and face emotion recognition as well as in face imagery (see Kanwisher & Yovel 2006; Skuse 2006). However, electrophysiological work with both monkeys and sheep has been able to investigate in more detail how faces are processed by the neural networks within the different substrates. Most of this work has relied on single-unit recordings, which are somewhat limited in their ability to sample large-scale neural networks reliably and which make it difficult to assess the extent to which population/global encoding principles may be operating. However, recent developments have allowed simultaneous recordings to be made from more than 200 neurons, and some of the work of this kind that we have carried out in sheep does indeed suggest the presence of such population encoding within face-processing networks.

8. Face identity recognition

(a) Non-human primates

Studies in non-human face recognition have concentrated primarily on single-unit electrophysiological recordings of the macaque visual system, in particular, the temporal cortex. The temporal cortex receives afferents from the striate cortex via the prestriate area, and these visual inputs, plus the fact that removal of the area led to specific visual defects, led early researchers to surmise that it must have some additional part to play in visual processing beyond that of the simple processing in the visual cortex (Gross et al. 1972).

Initial work on anaesthetized macaques established that the majority of cells in the temporal cortex were sensitive to many separate parameters (size, shape, orientation and direction of movement). However, some cells had highly specific trigger features, such as hands and faces (Gross et al. 1972). Further studies involving conscious behaving macaques found that specific cells in one particular area of the temporal lobe, the inferior temporal cortex (IT), had complex trigger features and seemed to be modulated by the situation and by how much attention the animal was paying (Gross et al. 1979). This led researchers to suggest that the area had a function in the recognition of complex visual stimuli, such as objects, and was likely influenced by top-down feedback from higher cognitive areas. Additional work on the IT studied the ability of neurons in the area to distinguish and retain behaviourally relevant visual features, suggesting that it was susceptible to the significance of the stimuli (Fuster & Jervey 1981). Many cells in the IT were found to respond non-specifically to all visual stimuli, but a small population was found that responded preferentially to faces. The IT cells were also established to be sensitive not only to the face, but also to the particular configuration of features of the face, with neuronal responses dependent on configuration of facial features but independent of size and position (Desimone et al. 1984; Yamane et al. 1988). Elaboration on configural coding found that cells in the IT were more sensitive to intact faces, with the total number of cells activated by an intact face greater than that activated by the separate elements of the face (Rolls et al. 1994). More recently, IT neurons have been found to be fine-tuned for specific facial features (Sigala 2004).

In another specific area of the temporal cortex, the superior temporal sulcus (STS), 30% of the cells recorded exhibited specific trigger features, with some exhibiting selectivity purely for face stimuli (Bruce et al. 1981). Recordings from cells in the STS found that they were sensitive to different views of the head and that most cells that responded were view-specific, i.e. only responded to one particular view of the head. More cells responded to frontal full-face and side-profile views than to intermediate views (Perrett et al. 1991). It is evident from the early studies that the IT and the STS are involved in different components of the face-recognition process. Hasselmo and co-workers were the first to study the dissociation of identity from emotion in face processing, finding that although neurons in both the IT and the STS responded to faces, cells in the IT responded more strongly to the identity of the face, while the STS cells responded more to expression (Hasselmo et al. 1989). It has been suggested that the IT and STS have different roles to play in face processing, with the IT related to face identity and the STS related to other perceptual information (facial views, eye gaze and expression), and that the two areas work together to process the face (Eifuku et al. 2004).

In hierarchical societies, such as those seen in monkeys, a key component of facial expressions is the eye-gaze direction, which is involved in the expression of dominant or submissive social signalling (e.g. maintained stare versus eyes averted; Van Hooff 1962). In this connection, eye-gaze direction and head position are often compatible (e.g. profile view of the head also means that the eyes are averted from the viewer). The upper bank of the STS contains a population of face-specific neurons that are sensitive to head position, with different views of the head activating different subpopulations of face-specific neurons (Perrett et al. 1984, 1985). In addition, and depending on the view of the head, these neurons respond specifically to different eye-gaze directions: populations functionally tuned to full view of the face show high sensitivity to eye contact, whereas populations tuned to profiles respond to averted eyes (Perrett et al. 1985). However, a certain proportion of neurons responding to gaze direction lacks any apparent sensitivity to head view, suggesting that gaze direction and head position are, at least partly, processed independently of each other. Furthermore, most of the neurons are sensitive to the dynamics of facial expressions, i.e. the movement of facial features, such as mouth opening, eyebrow raising, etc. Sensitivity to the expression of static faces is frequently related to mouth configuration, such as open-mouth threats and yawning (Perrett et al. 1984, 1985).

The most intriguing question relating to the processing of faces is how a population of neurons encodes a face. Two separate hypotheses have been put forward: firstly, that the face could be encoded by distributed patterns of activity in a population of cells, or secondly, that a single cell is activated by a specific face (the ‘grandmother cell’ hypothesis). Young and co-workers were the first to look at how the population of face-sensitive neurons that had been found worked together to encode a face. They reported a high level of redundancy in the cells, suggesting that only a few cells would be sufficient to encode a face. These sparse population responses were statistically significant in relation to the dimensions of the face (Young & Yamane 1992). This sparse-coding hypothesis falls between the two extremes and suggests that a few individual cells which are highly selective to behaviourally relevant stimuli encode object properties. Sugase & Yamane (1999) found evidence that single neurons could convey information about specific faces in terms of response latency, without the need of a population code. They found that global (category) information was communicated in the earliest part of the response (approx. 117 ms after presentation) followed by the fine information (identity and expression) in the later stage of the response profile (approx. 165 ms after presentation; Sugase et al. 1999). The prevailing view is that of Young's sparse population code, with a small network of cells communicating via a temporal code to produce recognition of a face. Examinations of inhibitory neurons in the macaque IT suggest that they may exert a stimulus-specific inhibition on adjacent neurons, which contributes to the shaping of this stimulus selectivity and code in the IT (Eifuku et al. 2004).
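
The difference between the coding schemes discussed above can be made concrete with a sparseness index. The sketch below uses the Treves-Rolls population sparseness, a = (mean r)^2 / mean(r^2), which is a measure swapped in here for illustration and not one that the studies cited above are stated to have used. The firing rates are invented and the function name `population_sparseness` is hypothetical.

```python
def population_sparseness(rates):
    """Treves-Rolls sparseness: 1/N when one of N cells fires, 1.0 for uniform activity."""
    n = len(rates)
    mean_rate = sum(rates) / n
    mean_square = sum(r * r for r in rates) / n
    if mean_square == 0:
        raise ValueError("all firing rates are zero")
    return mean_rate**2 / mean_square


n_cells = 100
# Invented firing rates (spikes/s) of a population responding to one face.
grandmother = [50.0] + [0.0] * (n_cells - 1)   # a single cell responds
sparse = [50.0] * 5 + [0.0] * (n_cells - 5)    # a few cells respond
distributed = [10.0] * n_cells                 # every cell responds equally

for name, rates in [
    ("grandmother", grandmother),
    ("sparse", sparse),
    ("distributed", distributed),
]:
    print(name, round(population_sparseness(rates), 3))
```

Lower values indicate that fewer cells carry the response; on this index a sparse population code sits between the single-cell and fully distributed extremes.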

Work on human face processing has suggested a hemispheric asymmetry in the processing, with the right hemisphere more responsive to faces than the left. However, work on macaques has found no such lateralization, or in fact the opposite, with cells more responsive in the left STS (Perrett et al. 1988).

How higher cognitive areas, such as those involved in memory and behaviour, influence face processing is also an area of present study. Early work found that the IT is modulated by attentional variables (Fuster 1990), that neurons in the prefrontal cortex selectively process information related to identity in faces, and that the neurons responding to faces were localized to a very restricted area, suggesting a specialized system for aiding face processing in higher cognitive areas (O'Scalaidhe et al. 1997). It has been suggested that the IT is involved in the analysis of currently viewed shapes, with the prefrontal cortex (PFC) involved in decisions involving category, memory and behavioural meaning (Freedman et al. 2003). The hippocampus may also play a role, extracting behaviourally relevant stimuli and unique combinations of features for encoding into memory (Hampson et al. 2004). Taken together, mnemonic activity in the IT may be supported by top-down influences of the PFC, middle temporal cortex structures and the hippocampus (Ranganath & D'Esposito 2005). The visual field has also been found to be biased towards certain stimuli. Objects in the visual field compete for representation and the system is biased in favour of behaviourally relevant stimuli, decided by top-down influences (Chelazzi et al. 1998).

How the face-processing system develops is another interesting question in the field and may illuminate how it is organized. Both human and monkey infants are capable of fixating a face from birth; therefore, it has been suggested that there must be at least a partially innate ability to recognize faces, and studies of infant monkeys have been carried out to look at how the face system of non-human animals might develop. A study of schematic face preferences in infant macaques ascertained that before one month of age, the configuration of the features of the face was more important in recognition than the features themselves (Kuwahata et al. 2004), and although neurons in infant monkeys have lower responses to faces than those of adults, these responses are similar to those of adult monkey neurons (Rodman et al. 1993). Similarly, training has an effect on the activity of neurons responding to faces, with visual expertise being acquired through development and the proportion of cells responding to faces becoming greater in trained monkeys (Kobatake et al. 1998; Crair 1999). This suggests that although neuronal activity is not needed for the development of the gross morphology of the cortex, it is essential for the final connections of neurons. Thus, early exposure to faces is necessary for a completely developed face-processing system (Crair 1999).

A small amount of work has been performed on the ability of other primates apart from macaques to recognize faces. Cotton-top tamarins (a species of New World monkey) seem to have the ability to recognize faces although their face-processing system seems to be simpler than that of macaques. They seem to use only the external features to recognize faces and do not show an inversion effect, suggesting a lack of configural encoding (Weiss et al. 2001).

Research on the ability of animals to recognize their own faces in a self-recognition test has been inconclusive. Chimpanzees marked with a red spot were able to use a mirror to assess and touch the spot on themselves (Gallup 1977). This capability has also been shown in other animals that are seen to have superior cognitive abilities, such as other higher primates (Keenan et al. 2001), dolphins (Marten & Psarakos 1994, 1995; Reiss & Marino 2001), whales and sea lions (Delfour & Marten 2001). In chimpanzees, one study showed approximately 50% of animals tested displayed some ability to recognize themselves (de Veer et al. 2003), but also suggested that this ability may decline with age. In macaques, electrophysiological studies have demonstrated a possible right hemisphere advantage for self-recognition (Keenan et al. 2001). This ability is lacking in lower primates and other animals. The methodology of some of these studies has been criticized and this research leads into a more vague field of whether an animal has a sense of itself and can discriminate between ‘own’ and ‘other’, and the ethical implications surrounding such findings (Griffin 2001; Bekoff 2002).

(b) Sheep

The existence of similar neuronal populations in the temporal cortex of a non-primate species, the sheep, responding preferentially to faces was first reported by Kendrick & Baldwin (1987). These initial single-unit studies deliberately focused on trying to ascertain whether faces of specific behavioural and emotional significance were encoded differentially. They did indeed reveal separate populations of cells tuned to faces with horns and sensitive to horn size (an important visual feature for determining relative social dominance and also gender), to faces of individuals of the same breed and, in particular, socially familiar individuals (sheep prefer to stay with members of their own breed and form consortships with specific individuals), and to faces of potentially threatening species (humans and dogs). As with cells in primate temporal cortex, response latencies were relatively short (ca 100–150 ms) and indicated that the networks were organized for rapid identification of different classes of individual, or facial attributes such as horn size, which evoked discrete behavioural or emotional responses. The response latencies are proportional to the level of identity specificity. Cells responding to simple facial features, such as horns, or generically to faces, have shorter response latencies than those responding only to categories of face or even to one or two specific individuals (Kendrick 1991; Peirce & Kendrick 2002). This suggests a degree of hierarchical encoding within the network (see Kendrick 1994).

Studies revealed that face-sensitive cells often responded poorly to inverted views of faces (Kendrick & Baldwin 1987; Kendrick 1991). Detailed analysis of response profiles to different face views has also confirmed the presence of separate face-sensitive populations which are either view-dependent or view-independent. The majority of view-dependent cells are tuned to a frontal view of the face, although some are also tuned to a profile view. The view-independent cells are in the greatest proportion and particularly in the frontal cortex where this reaches 69% (see figure 4a).

Figure 4.

(a) Histograms show proportions of view-dependent and view-independent face responsive neurons recorded in the temporal (n=69 cells from four sheep) and medial frontal (n=57 cells from five sheep) cortices. (b) Mean±s.e.m. latencies and rise times of 20 neurons in the right frontal cortex responding to the different views or faces in stimulus set shown in figure 3a.

The view-independent cells have significantly longer response latencies than view-dependent ones (figure 4b) and in terms of their response magnitude are relatively insensitive to manipulations of different facial features or inversion or presentation of hemi-faces (figure 3). By contrast, the view-dependent cells tend to show a reduced magnitude of response not only to inversion and view, but also to whether the eyes are visible, or the external or internal face features are removed, or which half of the face is viewed (figure 3). It is these latter types of manipulations which impair behavioural discrimination of faces (Kendrick et al. 1995, 1996; Peirce et al. 2000) and so a reasonable hypothesis is that it is the cells in the network which show view-dependent tuning that are used primarily for accurate and rapid identification of faces, at least in the first instance. The view-independent ones may be of more importance for maintaining recognition as the individual being viewed moves and, as we will discuss in a moment, possibly for the formation of face imagery.

Figure 3.

(a) Picture set used to investigate response specificities of cells responding to a sheep face. (b) Histograms show overall mean±s.e.m. firing rate changes (per cent change from period with fixation spot displayed immediately before face stimulus is displayed) for 14 view-dependent (frontal view only) and 21 view-independent (equivalent responses to front and profile views) neurons recorded from the right temporal cortex of four sheep. (c) Same as (b) but for eight view-dependent and 11 view-independent neurons from the left temporal cortex.

(c) Learning and memory for face identity

Relatively little work has been carried out on how learning influences face-processing networks. Studies in sheep using molecular markers of neural plasticity changes (brain-derived neurotrophic factor and its receptor trk-B) have found increased mRNA levels in face-processing regions of the temporal and frontal cortices and basal amygdala after successful social recognition memory formation (Broad et al. 2002). At the single neuron level, electrophysiological recordings provide clear evidence of learning, with cells showing varying degrees of specific tuning to the faces of particular familiar sheep or human faces in both the temporal and the frontal cortices (Kendrick et al. 2001a). We have also found evidence that categorization of humans as distinct from sheep can be modified; cells responding to socially familiar sheep respond equivalently to a highly familiar human but not to other humans (Kendrick et al. 2001a).

In terms of neural correlates of the behavioural evidence for long-term memory for faces, we found clear evidence for maintained responses to the faces of familiar individual sheep and humans that had not been seen for a year or more (Kendrick et al. 2001a). Interestingly though, while the overall proportion of cells responding to these faces was not significantly influenced, there was a reduction in the number responding to each selectively (Kendrick et al. 2001a). This suggests that becoming familiar with the faces of members in a social group results in a progressive increase in the proportion of cells which selectively encode them. When such individuals leave, the process of forgetting their faces is associated with their faces gradually becoming more generically encoded.

(d) Population encoding

As described earlier, for many years researchers have been recording electrical activity of individual neurons in the IT while animals perform behavioural tasks related to face perception and memory. This technique allows for extensive study of the response properties of single cells while an animal is presented with face stimuli. However, it does not allow one to study the coordinated activity of many neurons simultaneously. Recently, a number of research groups (e.g. Dave et al. 1999; Wiest et al. 2005), including ours (Tate et al. 2005), have started using multielectrode array (MEA) techniques to study large numbers of neurons together to elucidate how a population of neurons might cooperate and compete to encode stimuli. The development of MEA techniques to record neural activity not only allows researchers to continue the study of individual neuronal responses to stimuli, but also allows for investigation into simultaneous activation/deactivation of subpopulations within a neural ensemble. Therefore, an investigator can study both the local response of neurons as well as more global activity changes across a population. This approach combines the high temporal resolution of single-cell recordings with the study of large spatial arrangements of cells. In our laboratory, large-scale MEA recordings have enabled us to acquire extensive datasets in response to face stimuli which, in turn, has also led to the development of novel techniques for their analysis and interpretation (Horton et al. 2005, submitted). Using these data, it is possible to plot basic activity maps showing differential activation and deactivation across the recording area (figure 5) in response to familiar faces. 
Preliminary results suggested that the learning of identity is associated with a reduction in the number of cells in an ensemble responding to the face pairs (figure 5b) and this reduction may also be primarily in excitatory neurons, suggesting a large role for inhibition during learning. As described later in this article, reduction due to learning—sparsening—may be computationally beneficial for the brain.

Figure 5.

Activity maps: pseudo-colour grids showing activity changes across the recording array during face discrimination tasks. (a) Example of different activation profiles in response to either a novel, non-familiar face or a learnt, familiar face. Overall, the number of units responding to the face decreases as the face becomes more familiar to the subject. Individually, some units no longer react to the stimulus while others show increases/decreases in their firing rates. (b) Activity map across grid in response to familiar face showing higher levels of inhibition as opposed to excitation across the population.

(e) Face attraction

In humans, faces are an important source of sexual attraction (see Cornwell et al. 2006; Fisher et al. 2006) with differential potential for altering activity in brain regions controlling emotional and sexual responses and reward. This also seems to be the case in sheep. Initial studies using a molecular marker of altered brain activation (c-fos mRNA expression) showed that exposure to visual cues from males only activated brain regions beyond the temporal cortex mediating sexual, emotional and reward responses in females when the male was sexually attractive (Ohkura et al. 1997). A further study found that when females were presented with the faces of two males to which they were differentially attracted, only the preferred male elicited release of dopamine, noradrenaline and serotonin in the hypothalamus during the period of their cycle when they found a male sexually attractive (Fabre-Nys et al. 1997).

(f) Lateralization of face-identity processing

The behavioural bias towards using visual cues from the half of the face appearing in the left visual field does indeed seem to have some basis in a right brain hemisphere bias. Several studies using molecular markers of altered brain activation (c-fos and zif/268 mRNA expression) have shown significant changes only in the right inferior and superior temporal cortices, frontal cortex and basolateral amygdala (Broad et al. 2000; da Costa et al. 2004).

However, as with observations from work on rhesus monkey, temporal cortex single-unit recordings do not reveal differences in the numbers or tuning specificities of face-sensitive cells in the right and the left hemispheres (Peirce & Kendrick 2002). The only exception to this is that view-dependent cells in the right hemisphere show a more pronounced reduction in response to faces where the half of the face appearing in the left visual field is obscured (figure 3). However, there are pronounced response latency differences in cells tuned to categories of faces or specific individuals as distinct from those with generic responses to all visual objects or faces. Cell responses can be up to 400 ms faster in the right than in the left temporal cortex (Peirce & Kendrick 2002). Indeed, the response latencies of many of the cells in the left hemisphere are longer than the time the animals need to make an accurate identification of faces. This has led us to speculate that the right hemisphere may indeed be involved primarily in face identification with the left dealing more with the behavioural, emotional and mnemonic consequences of recognition. Present work in this laboratory using MEA electrophysiological recordings has confirmed these latency differences between the two hemispheres and aims to try to elucidate potential encoding differences that may exist.

(g) Comparisons with human face identity recognition

As has already been discussed, human studies have primarily relied on non-invasive neuroimaging studies which cannot, unlike the above electrophysiological studies on monkeys and sheep, reveal detailed neural network encoding principles. However, in general, similar brain substrates seem to be involved in the different species, although in humans a highly delineated area in the temporal lobe, the fusiform face area, has been identified (Kanwisher et al. 1997).

Two recent studies have crossed this threshold, using functional magnetic resonance imaging (fMRI) scanning on macaque monkeys. ‘Face patches’ have been established in the areas from V4 to IT using fMRI, whereas previous studies had always suggested that face-responsive neurons were scattered throughout the temporal cortex, with limited concentration in one area (Tsao et al. 2003). Specific face selective areas have also been found in the posterior and the anterior STS of the macaque using fMRI (Pinsk et al. 2005); this study also found a hemispheric asymmetry, with the posterior STS more active in the right hemisphere.

Kanwisher & Yovel (2006) argue for the merits of a face-specific system over domain-general alternatives in humans, so is the system found in animals also face-specific? The evidence from studies involving animal face processing supports this view. Animals are able to recognize conspecific faces with a degree of accuracy comparable with that of humans and also exhibit similar patterns of recognition, such as configural coding, inversion effects and view invariance. It seems logical that conserved mechanisms for face processing will exist alongside increasing complexities of the visual system. Although the lack of orofacial musculature of lower primates and mammals reduces the complexity of their faces, it is still evident that animals using vision as their primary sense could, and in fact do, use the face for both identity recognition and expression recognition.

9. Face-based emotion recognition

The functional model of face processing by Bruce & Young (1986) still offers the best fit to present findings and is applied to humans and animals alike. It proposes separate parallel routes for processing facial identity and facial expression cues. However, by extensively reviewing the existing experimental evidence in humans and animals, the actual degree of separation between both pathways has recently been questioned (Calder & Young 2005).

One major reason for this challenge is the experimental design of the studies involved: relatively few functional imaging (George et al. 1993; Sergent et al. 1994; Winston et al. 2004) and electrophysiological (Hasselmo et al. 1989; Sugase et al. 1999) approaches have investigated the processing of facial identity and expression in a single experiment, and their results are inconsistent. Furthermore, in animals, face-emotion processing has been almost exclusively addressed post-perceptually, despite the presentation of faces with different expressions often forming ‘routine’ parts of experimental paradigms, e.g. to assess changes in general cognitive abilities as part of aetiological studies (e.g. Lacreuse & Herndon 2003) or in the context of more general brain-functional investigations (e.g. O'Scalaidhe et al. 1999). This means that, despite a considerable amount of behavioural evidence showing that a variety of animal species are capable of recognizing faces, still very little is known about the cellular mechanisms underlying face identity and face expression recognition and their mutual interaction.

In animal studies in particular, and from an experimental point of view, one of the greatest challenges is still the design of test paradigms that allow for an experimental distinction between the neural mechanisms encoding face identity, as opposed to face expression/emotion.

(a) Non-human primates

In general, the primate brain contains over 30 regions dedicated to visual processing, including areas with neurons responding to visual social signals such as facial expressions. In apes and monkeys, neurons responsive to facial expression are predominantly (but not exclusively) located in the upper and lower bank of the STS, whereas neurons responding to identity are primarily (but not exclusively) found in the IT region. Furthermore, within the population of face-specific neurons responding to expression, responses of individual neurons are related to particular expressions, such as threat or fear (Perrett et al. 1984; Hasselmo et al. 1989). Neurons particularly responsive to ‘facial feature arrangement’ and ‘overall configuration of many features’ had been previously identified in the macaque IT region (Desimone et al. 1984; Baylis et al. 1985). Based on the stimulus paradigms used (i.e. monkey face with neutral expression versus same picture with scrambled features, Desimone et al. 1984; faces with different expression in different individuals, Rolls 1984; Baylis et al. 1985), no direct conclusion as to whether these neurons encode identity and/or expression can be drawn. However, taken together with investigations correlating quantified facial features, such as intereye and eye-to-mouth distances, with response characteristics of face-specific neurons in the macaque IT region (Yamane et al. 1988) and together with recordings from infant monkeys (Rodman et al. 1991), this supports the hypothesis that at least some of the IT neurons might also be involved in FaBER. 
A recent detailed analysis of the response of individual face-specific neurons in the macaque IT cortex, including the STS region, revealed that the response encodes two different scales of information sequentially: (i) global information, thereby initially categorizing a visual stimulus as either face or object, followed by (ii) fine information which, depending on the type/location of the cell, encodes either identity or expression (Sugase et al. 1999).

(b) Sheep

Face emotion research in monkeys has almost exclusively focused upon the response characteristics of single neurons to face emotion cues. By using bilateral MEAs, and thereby recording from a large number of neurons simultaneously, our work now extends this scope and focuses on principles of how face emotion information is represented across subpopulations of neurons in the temporal cortex during FaBER tasks and on how this representation interacts with the representation of other facial non-emotional cues, such as identity.

Our results show that the total number of recorded cells responding either with an increase (i.e. ‘excitatory’-type, E-type) or a decrease (‘inhibitory’-type, I-type response) in spike frequency to face emotion stimuli did not change significantly over the four month time period during which the recordings were made. In addition, the level of population sparseness, i.e. the proportion of cells responsive to face emotion stimuli, was observed to be constant.

Overall, approximately 90% of the responsive cells exhibited exclusively either E- or I-type responses to a face emotion stimulus. For the remaining cells, both E- and I-type responses were found. None of the units examined exhibited high selectivity for a particular identity (familiar, unfamiliar) or emotional (stressed/anxious, calm) cue.
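As an illustration only, the labelling of units as E-type or I-type responders, and the resulting proportion of responsive cells (population sparseness), could be computed along the following lines. This is a minimal Python sketch: the function names, the 20% fractional-change criterion and the example firing rates are assumptions made for illustration and are not the statistical procedure applied to the recordings described here.

```python
from statistics import mean


def classify_unit(baseline_rates, stimulus_rates, threshold=0.2):
    """Label one unit 'E', 'I' or 'none' from firing rates (spikes/s).

    `threshold` is a hypothetical fractional-change criterion; a real
    analysis would use a statistical test across trials instead.
    """
    base = mean(baseline_rates)
    stim = mean(stimulus_rates)
    if base == 0:
        # No baseline activity: any firing counts as an increase.
        return "E" if stim > 0 else "none"
    change = (stim - base) / base
    if change >= threshold:
        return "E"   # increased spike frequency
    if change <= -threshold:
        return "I"   # decreased spike frequency
    return "none"


def population_summary(units, threshold=0.2):
    """Count E- and I-type units and the fraction of responsive units."""
    labels = [classify_unit(b, s, threshold) for b, s in units]
    n_e = labels.count("E")
    n_i = labels.count("I")
    return {"E": n_e, "I": n_i,
            "responsive_fraction": (n_e + n_i) / len(labels)}


# Hypothetical data: (baseline trials, stimulus trials) for four units.
example_units = [
    ([10, 10], [15, 15]),  # clear increase
    ([10, 10], [5, 5]),    # clear decrease
    ([10, 10], [10, 11]),  # change below criterion
    ([8, 12], [10, 10]),   # no change in mean rate
]
summary = population_summary(example_units)
print(summary)
```

With a measure of this kind, stability of sparseness over a recording period corresponds to the responsive fraction remaining roughly constant from session to session.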

Differential activity maps (figure 6a) comparing the population responses to face emotion stimuli displayed by familiar versus unfamiliar conspecifics (figure 2) were used for an analysis of the spatio-temporal activity patterns. In each hemisphere, the total number of responsive neurons did not change significantly depending on whether the individuals presented were familiar or unfamiliar (figure 6b). However, during trials using familiar conspecifics, the number of I-type responses was significantly higher and the number of E-type responses significantly lower than in trials using novel faces (figure 6b).

Figure 6.

(a) Differential activity map of temporal cortical neurons during a face-based emotion recognition (FaBER) task. The map highlights the activity differences across the population observed in response to an emotional (stressed/anxious) face stimulus of a familiar sheep face as opposed to an unfamiliar sheep face. (b) In both hemispheres, the total number of neurons (T) responding to the face does not change irrespective of whether a familiar (N) or an unfamiliar (N*) neutral/calm sheep face is presented during the discrimination task. However, the proportion of E-type neurons (i.e. neurons with an increased firing rate, E) is higher whereas the number of I-type responses (decreased firing rate, I) is lower across the population if the neutral/calm face is unfamiliar (N*). (c) Bihemispherical comparison of the response latencies (ΔtL−R) of the neurons reveals right hemisphere dominance during sole face identity recognition tasks where animals discriminated between a familiar neutral/calm (N) and an unfamiliar neutral/calm (N*) face. Right hemisphere dominance is less pronounced during the face emotion recognition tasks (N versus S, N* versus S).

The difference between given activity maps (so-called array difference, i.e. the numeric difference as an estimate of the reliability of the representation of a particular face stimulus across a defined population of neurons) was approximately 16% when a given face emotion stimulus was presented repeatedly. Furthermore, the array difference between average activity maps representing faces of different familiar conspecifics was significantly higher (29%), whereas the average array difference reached its highest values for familiar versus unfamiliar faces (approx. 33%).
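The exact formula for the array difference is not given here. One plausible reading, offered purely as a sketch, is the percentage of recording sites whose response label differs between two activity maps. In the Python example below, the function name, the coding of each site as +1 (excited), -1 (inhibited) or 0 (unresponsive) and the example maps are all assumptions made for illustration.

```python
def array_difference(map_a, map_b):
    """Percentage of array sites whose response label differs.

    Each map is a flat sequence with one label per recording site:
    +1 (excited), -1 (inhibited) or 0 (no response). This definition
    is a hypothetical stand-in for the measure used in the text.
    """
    if len(map_a) != len(map_b) or len(map_a) == 0:
        raise ValueError("maps must be non-empty and of equal length")
    differing = sum(1 for a, b in zip(map_a, map_b) if a != b)
    return 100.0 * differing / len(map_a)


# Hypothetical eight-site maps from two presentations of a stimulus.
first_presentation = [1, 0, -1, 0, 1, 0, 0, -1]
second_presentation = [1, 0, -1, -1, 0, 0, 0, -1]
print(array_difference(first_presentation, second_presentation))
```

Under such a definition, a low value for repeated presentations of the same stimulus indicates a reliable representation, and larger values between different stimuli indicate that the population distinguishes them.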

For both E- and I-type neurons, our present results do not show a significant difference between the average response latencies to a face emotion stimulus displayed by a familiar or by an unfamiliar conspecific.

Our data suggest that in sheep, the representation of face identity and face emotion relies to a certain extent on population encoding by cortical visual networks.

What are the advantages of a distributed representation of face information using population encoding in cortical networks, as opposed to more ‘local’ encoding schemes?

Algorithms applied to samples of single-cell data obtained from the primate temporal visual cortex revealed that the representational capacity (i.e. the number of stimuli that can be encoded) of a population of neurons using a distributed representation scheme increases exponentially as the number of cells in the population increases (Rolls et al. 1997). Since visual information is highly complex, the representational capacity using a population encoding scheme is therefore much higher than using local encoding schemes, such as ‘grandmother’ cells, in which the number of stimuli encoded increases only linearly with population size. Furthermore, extremely sparse codes, such as ‘grandmother’ schemes in which relatively large amounts of information (e.g. a whole face plus different views) are coded by single cells, confer high sensitivity to damage and low capacity.
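The contrast between exponential and linear scaling can be made concrete with an idealized calculation. The Python sketch below assumes noiseless cells that each take one of a fixed number of distinguishable response levels (two by default); this simplification and the function names are assumptions for illustration, and real neuronal populations would fall well short of the idealized upper bound.

```python
def local_capacity(n_cells):
    """'Grandmother cell' scheme: one stimulus per cell."""
    return n_cells


def distributed_capacity(n_cells, levels=2):
    """Idealized distributed scheme: every distinct pattern of
    response levels across the population encodes one stimulus."""
    return levels ** n_cells


# Compare the two schemes for a few hypothetical population sizes.
for n in (1, 5, 10, 20):
    print(n, local_capacity(n), distributed_capacity(n))
```

Even with only two response levels per cell, the number of distinguishable patterns doubles with each added cell, whereas a local scheme gains a single stimulus.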

Nevertheless, using sparse codes in combination with distributed representations offers certain theoretical advantages (Perez-Orive et al. 2002). These include a reduction in the amount of overlap between individual representations, thereby limiting interference between memories, much simpler (hence involving fewer elements) comparisons between stimulus-evoked activity patterns and stored memories, e.g. in terms of any amygdala–cortical emotional assessment (Aggleton & Young 2000; Sato et al. 2004) and, in general, more synthetic representations. Finally, given a large total population size of the temporal cortex visual neurons and levels of sparseness that are not extreme, the memory capacity of the system can still be very high.
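The final point, that capacity remains high when sparseness is not extreme, can be illustrated by counting the patterns available when only a fixed number of cells is active at once. The Python sketch below assumes binary, noiseless cells and exactly `n_active` active cells per stimulus; these simplifications, the function name and the population size of 100 are illustrative assumptions and are not values taken from the recordings.

```python
from math import comb


def sparse_code_capacity(n_cells, n_active):
    """Number of distinct patterns with exactly `n_active` of
    `n_cells` binary cells active (an idealized sparse code)."""
    return comb(n_cells, n_active)


# Hypothetical population of 100 cells at increasing activity levels.
for k in (1, 2, 5, 10):
    print(k, sparse_code_capacity(100, k))
```

With a single active cell the scheme reduces to the local, ‘grandmother’ case, and capacity equals the number of cells; allowing even a handful of co-active cells raises the count by several orders of magnitude while keeping the overlap between patterns small.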

Our present findings also suggest that the process of recognition, i.e. ‘getting familiar’ with a conspecific, might be represented by an increased number of inhibited neurons across the recorded population. Interestingly, research on olfactory networks in vertebrates and, particularly in invertebrates, suggests a similar principle, showing increased numbers of inhibited neurons during olfactory memory formation (Sachse & Galizia 2002). Increased levels of inhibition might therefore be a common principle whereby neural networks encode complex multi-component stimuli such as odours or faces. This can enable a globally modulated, contrast-enhanced and predictable representation of information across subpopulations of neurons.

(c) Lateralization of face-emotion processing

Like humans, apes (Parr & Hopkins 2000; Fernandez-Carriba et al. 2002a,b) and monkeys (Hauser 1993; Hook-Costigan & Rogers 1998) express emotions more intensively in the left hemi-face. In this context, cerebral asymmetries (lateralization and laterality) in emotional processing have received a great deal of attention. Presently, there are two major theories: (i) the right-hemisphere theory (e.g. Suberi & McKeever 1977; Ley & Bryden 1979; Borod et al. 1997) suggests that the right hemisphere is predominantly processing all emotional information regardless of its valence; on the contrary, (ii) the valence theory (e.g. Davidson 1992) suggests that the two cerebral hemispheres are differentially involved in emotion processing, with the left hemisphere dominating positive emotions, whereas negative emotions are associated with higher right-hemisphere activity.

In animals, evidence on lateralization during face emotion recognition comes predominantly from behavioural studies. Furthermore, the evidence regarding the nature of lateralization is still conflicting. For example, when using human chimeric faces as stimuli, findings in chimpanzees suggest a right hemisphere advantage in perceiving positive emotional (smile) facial displays (Morris & Hopkins 1993). For the same species, a more recent study has shown a left-hemisphere bias during neutral and positive (play) visual emotional stimuli, whereas a right-hemisphere bias was found during negative (aggression) stimuli (Parr & Hopkins 2000). However, studies in adult split-brain monkeys using a paradigm separating facial identity from (positive and negative) expression cues suggest right-hemisphere superiority (Vermeire et al. 1998), more pronounced in females than in males (Vermeire & Hamilton 1998).

Similar to humans, apes and monkeys, our data in sheep indicate right hemisphere dominance (figure 6c). However, our present data suggest that these hemispherical differences might be less pronounced during face emotion recognition, implicating a greater involvement of the left hemisphere in face emotion tasks. Interestingly, this is in agreement with the valence hypothesis in humans, suggesting a left-biased processing of positive (in our case, neutral) facial information, as opposed to negative emotions, which are biased towards the right (e.g. Demaree et al. 2005).

10. Neural encoding of face imagery

In humans, brain-imaging studies have revealed a remarkable concordance in patterns of activation changes in face-processing regions during actual perception of faces and imagining them (Kanwisher & Yovel 2006), suggesting that common networks are involved in face perception and face imagery. Is this also the case in other species? If so, can electrophysiological studies reveal potential differences in the neural representation of perceived and imagined faces?

(a) Neural activity during face imagery in non-human primates

Only one study has investigated electrophysiological responses of IT neurons in conditions where object permanence is being tested in the context of individual recognition and therefore face/body imagery might be anticipated to occur. This used a simple approach of individuals/objects disappearing and reappearing from behind a screen, and found cells in STS which showed activity changes for periods of up to 11 s when objects were completely obscured behind the screen (Baker et al. 2001). Many studies have shown cell activity changes being maintained during the delay period in a matching or non-matching to sample experiment in a variety of brain regions (O'Scalaidhe et al. 1997, 1999), but we are not aware of any using faces during recordings in the temporal cortex.

(b) Neural activity during face imagery in sheep

We have used various approaches to attempt to cue face imagery in sheep. The first of these was to use the high level of motivation that maternal ewes have to find their lambs when they are temporarily absent. Under these circumstances, showing a picture of the lamb's face or playing its bleat (but not a scrambled sequence of the bleat) activated the region of the temporal cortex that responds to faces, as indicated by c-fos mRNA expression. Since cells that respond specifically to vocalizations, as opposed to simple auditory stimuli, have not normally been found in this region, one possibility is that the vocal cue evoked a mental image of the missing lamb's face (Kendrick et al. 2001c).

Single unit recording approaches have also been used in varying contexts where face imagery might be evoked. In the first of these, video sequences revealing a highly familiar sheep in its home pen were used, while recordings were made from cells in the temporal cortex that responded preferentially to the individual's face. It was found that the cells showed activity changes both in anticipation of the appearance of the sheep in the film as well as when it actually appeared. They also responded to the point in a film where the sheep should have appeared, but did not because it had been edited out (Kendrick et al. 2001c). This certainly shows that these face-sensitive cells can respond in the absence of a face and may reflect the generation of face imagery, although there is obviously no way of proving this.

Finally, in a recent preliminary experiment, we have made recordings from cells in the frontal cortex of maternal sheep that respond significantly to the sight of their lamb's face. The view-independent population of these cells also showed highly selective responses to the odour of the lamb. However, when face and odour were combined, there was no alteration in the magnitude of the response (figure 7), and very few other cells were responsive to odours. Again, this might suggest that the odour stimuli were evoking face imagery. If so, it is interesting that only the view-independent cells are involved and not the view-dependent ones. This again suggests that the networks of view-dependent cells are particularly involved in the identification of faces which are actually perceived.

Figure 7.

Mean±s.e.m. per cent change in firing rate in frontal cortex neurons in five maternal sheep responding to the sight of their lamb's face and to its odour. View-dependent neurons (n=12) respond only to a frontal view and not to the lamb's odour. However, view-independent neurons (n=25) respond equivalently to frontal and profile views and also to the lamb's odour alone. For eight of these cells, when the lamb's face and odour were presented in combination, there was no evidence for any additive response.
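The measure plotted in figure 7 can be illustrated with a minimal sketch. It assumes that per cent change is computed relative to a pre-stimulus baseline firing rate and that the s.e.m. is taken across cells; the source does not specify either detail, and the firing rates below are hypothetical values chosen only to show the arithmetic, not data from the experiment.

```python
import math


def percent_change(baseline_rate, stimulus_rate):
    """Per cent change in firing rate relative to a baseline (assumed definition)."""
    if baseline_rate <= 0:
        raise ValueError("baseline rate must be positive")
    return 100.0 * (stimulus_rate - baseline_rate) / baseline_rate


def mean_sem(values):
    """Return the mean and the standard error of the mean (sample s.d. / sqrt(n))."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values to estimate the s.e.m.")
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / (n - 1)
    return mean, math.sqrt(variance / n)


# Hypothetical (baseline, stimulus) firing rates in spikes/s for three cells.
cells = [(10.0, 15.0), (8.0, 10.0), (20.0, 26.0)]
changes = [percent_change(b, s) for b, s in cells]
mean, sem = mean_sem(changes)
print(f"{mean:.1f} +/- {sem:.1f} per cent")
```

Under these assumptions, an additive response to combined face and odour cues would appear as a per cent change for the combined stimulus that is clearly larger than that for either cue alone.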

More experimental work is clearly needed to investigate the capacity of these face-recognition networks in non-human species to generate imagery and to establish how this differs from actual perception of faces. However, at this stage, there is at least some supportive evidence for the idea that, as in the human brain, there may be considerable overlap between imagery and perceptual mechanisms in these species.

11. Conclusions and future directions

In this paper, we have reviewed experimental evidence for specialized face processing systems in animals, available from behavioural, electrophysiological and neuroimaging studies.

Behavioural studies of the capacities of different animal species to use visual cues for face identity and face emotion are still relatively sparse compared with those in humans. Yet the obvious prediction from studies showing that mammals other than primates do appear to have sophisticated recognition abilities in this respect is that the use of face cues may be quite widespread in species with reasonable visual acuity. However, we still know relatively little about how extensively emotion cues are transmitted via changes in facial appearance in these species, or indeed, how good they are at distinguishing the many different expressions they may see on our faces when they interact closely with us. Similarly, faces appear to play an important role in individual social and sexual attraction in other species besides humans, although we still know relatively little about what makes one face more attractive than another and whether some of the same general rules of attraction, such as symmetry and configuration, are important.

As in humans, many animal studies have revealed face-responsive areas in the temporal cortex, with individual neurons responding preferentially to faces as opposed to other visual objects. Where the tuning of these cells has been tested, they show high specificity for different categories of face information, including different faces and various face views, features and expressions.

Recording from single cells allows a controlled and detailed analysis of individual cell response properties, but it necessarily focuses on the local mechanisms of face representation in the brain. Evidence from a variety of studies using different approaches, including recent developments in animal neuroimaging, suggests, however, that face recognition relies on a distributed network of subpopulations of neurons located in various brain areas.

In sheep, our approach to bridging the gap between single-cell recording and neuroimaging is to use MEA electrophysiology. This enables us not only to study individual cell responses to a face, but also to investigate an entire (though limited by array size) subpopulation of neurons involved in the processing of face information. Our data suggest that the representation of face identity and face emotion relies to a certain extent on sparse population encoding by cortical visual networks. However, major questions about the neural mechanisms that underlie this encoding process remain unanswered. What core principles might neuronal populations employ to represent information? How does the neural activity of a given population of neurons correspond to a particular visual stimulus? How might previous experience affect this activity? How does a population differentially encode face emotion as opposed to face identity? How does encoding in the temporal cortex influence patterns of activity in regions important for emotional control and expression, such as the frontal cortex and the amygdala? How does the representation of mental images of faces differ from actual perception of them?

Present investigations in our laboratory are focused on spatio-temporal distributions of neural activity and their changes in relation to identity, emotion and imagery cues. This also includes analysing the formation and repetition of certain activity patterns across the population using specific pattern-detecting software and identifying correlational strength between pairs of neurons of a given population. These are already beginning to reveal the presence of altered patterning and correlation shifts across distributed networks of neurons independent of firing frequency changes (Tate et al. 2005).
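As an illustration of what identifying correlational strength between pairs of neurons can involve, the following minimal sketch computes Pearson correlations between binned spike counts for every pair of neurons in a small population. It is not the pattern-detecting software referred to above; the function names, the use of Pearson correlation and the spike counts are all assumptions made for the example.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation between two equal-length sequences of spike counts."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return float("nan")  # correlation is undefined for a constant train
    return cov / math.sqrt(var_x * var_y)


def pairwise_correlations(counts):
    """Map each pair of neuron labels to the correlation of their binned counts."""
    return {
        (a, b): pearson(counts[a], counts[b])
        for a, b in combinations(sorted(counts), 2)
    }


# Hypothetical spike counts per time bin for three simultaneously recorded neurons.
counts = {
    "n1": [2, 4, 6, 8],
    "n2": [1, 2, 3, 4],
    "n3": [8, 6, 4, 2],
}
corr = pairwise_correlations(counts)
for pair, r in corr.items():
    print(pair, round(r, 3))
```

In this toy example, n1 fires at twice the rate of n2 yet the two are perfectly correlated. Because the measure is insensitive to overall rate, a shift in correlation between two recording conditions can in principle occur without any change in firing frequency.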

The ability to recognize faces and their emotional content is a key feature underlying successful social interactions and bonding. However, it is clear that social cognition is a highly complex task which relies strongly on additional abilities, such as directing attention towards conspecifics, interpreting the emotional context of the visual cues presented and relating present experience to memory of previously encountered situations. Only by combining behavioural, neuroimaging, single-cell and MEA studies on all these systems working together, and by employing computational approaches, will we be able to move closer to understanding the organizational and functional principles that operate within the social brain.

Acknowledgments

This work was partly supported by a BBSRC Grant (BBS/B/07961). Dr Jon Peirce contributed to some of the single cell recording studies described and we are grateful to Mr Michael Hinton for his help with preparing the figures.

Footnotes

One contribution of 14 to a theme issue ‘The neurobiology of social recognition, attraction and bonding’.

References

- Aggleton J.P, Young A.W. The enigma of the amygdala: on its contribution to human emotion. In: Lane R.D, Nadel L, editors. Cognitive neuroscience of emotion. Oxford University Press; New York, NY: 2000. pp. 106–128.

- Baker C.I, Keysers C, Jellema T, Wicker B, Perrett D.I. Neuronal representation of disappearing and hidden objects in temporal cortex of the macaque. Exp. Brain. Res. 2001;140:375–381. doi:10.1007/s002210100828

- Bauer H.R, Philip M. Facial and vocal individual recognition in the common chimpanzee. Psychol. Rec. 1983;33:161–170.

- Baylis G.C, Rolls E.T, Leonard C.M. Selectivity between faces in the responses of a population of neurons in the cortex in the superior temporal sulcus of the monkey. Brain. Res. 1985;342:91–102. doi:10.1016/0006-8993(85)91356-3

- Bekoff M. Animal reflections. Nature. 2002;419:255. doi:10.1038/419255a

- Borod J.C, Haywood C.S, Koff E. Neuropsychological aspects of facial asymmetry during emotional expression: a review of the normal adult literature. Neuropsychol. Rev. 1997;7:41–60. doi:10.1007/BF02876972

- Broad K.D, Mimmack M.L, Kendrick K.M. Is right hemisphere specialization for face discrimination specific to humans? Eur. J. Neurosci. 2000;12:731–741. doi:10.1046/j.1460-9568.2000.00934.x

- Broad K.D, Mimmack M.L, Keverne E.B, Kendrick K.M. Increased BDNF and trk-B mRNA expression in cortical and limbic regions following formation of a social recognition memory. Eur. J. Neurosci. 2002;16:2166–2174. doi:10.1046/j.1460-9568.2002.02311.x

- Bruce C. Face recognition by monkeys: absence of an inversion effect. Neuropsychologia. 1982;20:515–521. doi:10.1016/0028-3932(82)90025-2

- Bruce V, Young A. Understanding face recognition. Br. J. Psychol. 1986;77:305–327. doi:10.1111/j.2044-8295.1986.tb02199.x

- Bruce C, Desimone R, Gross C.G. Visual properties of neurons in a polysensory area in the superior temporal sulcus of the macaque. J. Neurophysiol. 1981;46(2):369–384. doi:10.1152/jn.1981.46.2.369

- Calder A.J, Young A.W. Understanding the recognition of facial identity and facial expression. Nat. Rev. Neurosci. 2005;6:641–651. doi:10.1038/nrn1724

- Chelazzi L, Duncan J, Miller E.K, Desimone R. Responses of neurons in inferior temporal cortex during memory-guided visual search. J. Neurophysiol. 1998;80:2918–2940. doi:10.1152/jn.1998.80.6.2918

- Chevalier-Skolnikoff S. Visual and tactile communication in Macaca arctoides and its ontogenetic development. Am. J. Phys. Anthropol. 1973;38:515–518. doi:10.1002/ajpa.1330380258

- Cornwell R.E, Law Smith M.J, Boothroyd L.G, Moore F.R, Davis H.P, Stirrat M, Tiddeman B, Perrett D.I. Reproductive strategy, sexual development and attraction to facial characteristics. Phil. Trans. R. Soc. B. 2006;361:2143–2154. doi:10.1098/rstb.2006.1936

- Crair M.C. Neuronal activity during development: permissive or instructive? Curr. Opin. Neurobiol. 1999;9:88–93. doi:10.1016/S0959-4388(99)80011-7

- Da Costa A.P.C, Leigh A.E, Man M.-S, Kendrick K.M. Face pictures reduce behavioural, autonomic, endocrine and neural indices of stress and fear in sheep. Proc. R. Soc. B. 2004;271:2077–2084. doi:10.1098/rspb.2004.2831

- Dave A.S, Yu A.C, Gilpin J.J, Margoliash D. Methods for chronic neuronal ensemble recordings in singing birds. In: Nicolelis M.A.L, editor. Methods for neural ensemble recordings. CRC Press; Boca Raton, FL: 1999. pp. 101–120.

- Davidson R.J. Anterior cerebral asymmetry and the nature of emotion. Brain Cogn. 1992;20:125–151. doi:10.1016/0278-2626(92)90065-T

- Delfour F, Marten K. Mirror image processing in three marine mammal species: killer whales (Orcinus orca), false killer whales (Pseudorca crassidens) and California sea lions (Zalophus californianus). Behav. Process. 2001;53:181–190. doi:10.1016/S0376-6357(01)00134-6

- Demaree H.A, Everhart D.E, Youngstrom E.A, Harrison D.W. Brain lateralization of emotional processing: historical roots and a future incorporating “dominance”. Behav. Cogn. Neurosci. Rev. 2005;4:3–20. doi:10.1177/1534582305276837

- Desimone R, Albright T.D, Gross C.G, Bruce C. Stimulus-selective properties of inferior temporal neurons in the macaque. J. Neurosci. 1984;4:2051–2062. doi:10.1523/JNEUROSCI.04-08-02051.1984

- de Veer M.W, Gallup G.G, Theall L.A, van den Bos R, Povinelli D.J. An 8-year longitudinal study of mirror self-recognition in chimpanzees (Pan troglodytes). Neuropsychologia. 2003;41:229–234. doi:10.1016/S0028-3932(02)00153-7

- Dittrich W.H. Representation of faces in longtailed macaques (Macaca fascicularis). Ethology. 1990;85:265–278.

- Eifuku S, De Souza W.C, Tamura R, Nishijo H, Ono T. Neuronal correlates of face identification in the monkey anterior temporal cortical areas. J. Neurophysiol. 2004;91:358–371. doi:10.1152/jn.00198.2003

- Elliker K. Recognition of emotion in sheep. Ph.D. thesis, University of Cambridge; 2006.

- Fabre-Nys C, Ohkura S, Kendrick K.M. Male faces and odours evoke differential patterns of neurochemical release in the mediobasal hypothalamus of the ewe during oestrus: an insight into sexual motivation? Eur. J. Neurosci. 1997;9:1666–1677. doi:10.1111/j.1460-9568.1997.tb01524.x