Abstract

For more than a century we have understood that our brain's left hemisphere is the primary site for processing language, yet why this is so has remained elusive. Using positron emission tomography, we report cerebral blood flow activity in profoundly deaf signers processing specific aspects of sign language in key brain sites widely assumed to be unimodal speech or sound processing areas: the left inferior frontal cortex when signers produced meaningful signs, and the planum temporale bilaterally when they viewed signs or meaningless parts of signs (sign-phonetic and syllabic units). Contrary to prevailing wisdom, the planum temporale may not be exclusively dedicated to processing speech sounds but may instead be specialized for processing more abstract properties essential to language that can engage multiple modalities. We hypothesize that the neural tissue involved in language processing may not be prespecified exclusively by sensory modality (such as sound) but may entail polymodal neural tissue that has evolved unique sensitivity to aspects of the patterning of natural language. Such neural specialization for aspects of language patterning appears to be neurally unmodifiable insofar as languages with radically different sensory modalities, such as speech and sign, are processed at similar brain sites, while, at the same time, the neural pathways for expressing and perceiving natural language appear to be highly modifiable.

The left hemisphere of the human brain has been understood to be the primary site of language processing for more than 100 years; the key question that remains is why this is so, that is, what drives such organization. Recent functional imaging studies of the brain have provided powerful support for the view that specific language functions and specific brain sites are uniquely linked, including studies demonstrating increased regional cerebral blood flow (rCBF) in portions of the left superior and middle temporal gyri as well as the left premotor cortex when processing speech sounds (1–7), and in the left inferior frontal cortex (LIFC) when searching for, retrieving, and generating information about spoken words (8–10). The view that the processing of language functions at specific left-hemisphere sites reflects the dedication of those sites to the motor articulation of speech or the sensory processing of speech and sound is particularly evident regarding the left planum temporale (PT), which participates in the processing of the meaningless phonetic-syllabic units that form the basis of all words and sentences in human language. This PT region of the superior temporal gyrus (STG) forms part of the classically defined Wernicke's receptive language area (11, 12), receives projections from the auditory afferent system (13, 14), and is considered to constitute unimodal secondary auditory cortex in both structure and function on the basis of cytoarchitectonic, chemoarchitectonic, and connectivity criteria. The fundamental question, however, is whether the brain sites involved in language processing are determined exclusively by the mechanisms for speaking and hearing, or whether they also involve tissue dedicated to aspects of the patterning of natural language.

The existence of naturally evolved signed languages of deaf people provides a powerful research opportunity to explore the underlying neural basis for language organization in the human brain. Signed and spoken languages possess identical levels of linguistic organization (for example, phonology, morphology, syntax, semantics) (15), but signed languages use the hands and vision, whose neural substrates are distinct from those associated with talking and hearing. If the functional neuroanatomy of human spoken language is determined exclusively by the production and perception of speech and sound, then signed and spoken languages should exhibit distinct patterns of cerebral organization. Studies of profoundly deaf babies acquiring signed languages have found that they babble on their hands with the same phonetic and syllabic linguistic organization with which hearing babies babble vocally (16) and acquire signed languages on the same maturational timetable as spoken languages (17, 18), suggesting that common brain mechanisms may govern the acquisition of signed and spoken languages despite radical modality differences (19, 20).

To date, however, no study has directly demonstrated that the left-hemisphere sites engaged by specific linguistic functions in speech are also engaged by the same functions in sign. Instead, the few lesion and imaging studies have yielded important but contradictory results. For example, the landmark lesion studies of brain-damaged deaf adults by Bellugi and colleagues (21, 22) have shown that deaf signers suffer aphasic symptoms in sign language following left-hemisphere lesions that are similar to those seen in Broca's and Wernicke's aphasia in hearing patients. Because lasting deficits in signed language processing are not usually observed after right-hemisphere lesions, the evidence suggests that the right hemisphere may not be central to the processing of natural signed languages. Findings from the handful of recent brain-imaging studies neither fully concur with this view nor agree with one another. For example, one functional MRI study of deaf adults viewing sentences in sign language (23, 24) reported activation within left-hemisphere language areas, especially the dorsolateral left prefrontal cortex, the inferior precentral sulcus, and the left anterior superior temporal sulcus. However, robust and extensive activation of homologous areas within the right hemisphere was also observed, including the entire extent of the right superior temporal lobe, the angular gyrus, and the inferior prefrontal cortex, an activation pattern not typical of spoken language processing. Likewise, one positron emission tomography (PET) study of deaf adults viewing signed sentences (25) found both left- and right-hemisphere activation, although the right-hemisphere sites differed: here, activation was observed in the right occipital lobe and the parieto-occipital parts of the right hemisphere.
By contrast, another PET study, in which deaf adults covertly signed simple sentences, yielded maximal activation in the left inferior frontal region with no significant right-hemisphere activation (26).

In addition to general contradictions regarding the presence or absence of right-hemisphere activation in deaf signers, opposite claims exist regarding the participation of specific brain regions, especially the STG. One study noted above (23, 24) reports significant superior temporal sulcus, but not STG, activity, although its control condition involved nonsign gestures that may have obscured such activity. Another study (27), using both functional MRI and magnetoencephalography, specifically reports no evidence of STG activity in response to visual stimuli in one deaf person. A further PET study (28) of a single deaf subject viewing signing reports little of the typical and expected language-related cerebral activity described above but does report bilateral STG activity, although it does not specify which aspects of the stimuli or task produced this pattern.

Inconsistency among the above findings may result from two factors. First, previous imaging studies of deaf signers have used unconstrained tasks involving passive viewing of signing, which provide no way to ensure that all subjects are attending to and processing the stimuli in the same way. Second, previous studies have used language stimuli of a general but complex sort, largely at the sentence level, which in turn have yielded activation results of a general sort. The linguistic structure of even a simple sentence in natural language is complex: it involves multiple grammatical components (a noun and a verb, plus information about tense, gender, and number on which nouns and verbs must agree) and carries semantic information. In spoken language, sentence processing simultaneously activates multiple brain regions that can vary across speakers, including both the classical left-hemisphere speech-language areas and other regions not necessarily crucial to linguistic processing, such as visual processing areas. Deaf signers processing signed sentences may likewise show variable and distributed cerebral activation. Both factors make it difficult to link specific brain activation sites to the processing of specific language functions and, crucially, prevent identification of the neuroanatomical function of specific brain tissue.

Moving beyond the observation that the processing of signed language engages largely the left hemisphere and to some extent the right, we wanted to understand the neural basis by which specific language functions are associated with specific neuroanatomical sites in all human brains. We reasoned that natural signed languages can provide key insights into whether language is processed at specific brain sites because of the tissue's sensitivity to sound, per se, or to the patterns encoded within it. In the present study, we measured rCBF while deaf signers underwent PET brain scans. Vital to this study's design was our examination of two highly specific levels of language organization in signed languages: the generation of “sign” (equivalent to the “word” or “lexical” processing level) and the “phonetic” or “sublexical” level of language organization, which in all the world's languages (be they signed or spoken) comprises the restricted set of meaningless units from which a particular natural language is constructed. We intentionally chose these two levels because their cerebral activation sites are relatively well understood in spoken languages and, crucially, are thought to be uniquely linked to the sensory-motor mechanisms for hearing and speaking. Also vital to the design was our adaptation, for these specific levels of language organization, of standardized tasks that have already been widely tested in previous functional imaging studies across multiple spoken languages (including, for example, English, French, and Chinese) (1–10). Taken together, these features permitted us to make precise predictions about the neuroanatomical correlates of sign-language processing and to test speech-based hypotheses about the neural basis of human language processing directly, in a manner not previously possible.
If the brain sites underlying the processing of words and parts of words are specialized exclusively for sound, then deaf people's processing of signs and parts of signs should engage cerebral tissue different from that classically linked to speech. Conversely, if the human brain possesses sensitivity to aspects of the patterning of natural language, then deaf signers processing these specific levels of language organization may engage tissue similar to that observed in hearing speakers, including, specifically, the LIFC during verb generation.

Here we also studied two entirely distinct cultural groups of deaf people who used two distinct natural signed languages. Five were native signers of American Sign Language (ASL; used in the United States and parts of Canada) and six were native signers of Langue des Signes Québécoise (LSQ; used in Québec and other parts of French Canada). ASL and LSQ are grammatically autonomous, naturally evolved signed languages; our use of two distinct signed languages is another design feature unique to the present research and was intended to provide independent, cross-linguistic replication of the findings within a single study. We further compared these 11 deaf people with 10 English-speaking hearing adult controls who had no knowledge of signed languages. Finally, we designed the five experimental conditions so that participants would not only view stimuli but, crucially, actively perform specific tasks.

Methods

Subjects (Ss).

The treatment of Ss and all experiments were carried out in full compliance with the guidelines of the Ethical Review Board of the Montreal Neurological Hospital and Institute of McGill University. All Ss underwent extensive screening before being selected for participation in the study (see supplemental data, Methods, published on the PNAS web site, www.pnas.org, and at LAP www.psych.mcgill.ca/faculty/petitto/petitto.html). All deaf adults were healthy and congenitally and profoundly deaf; all learned signed language as their first, native language from profoundly deaf parents or deaf relatives, and all had used signed language as their primary language of communication from birth to the present. All deaf adults had completed at least a high school education and had no other neurological or cognitive deficits; similar criteria were used to select the hearing speakers. Finally, the deaf and the hearing adults had equally high linguistic proficiency in their respective native languages, as established in our intensive prescanning screening tasks. Deaf Ss were six males (mean age 31 years) and five females (mean age 25 years). Hearing Ss were five males (mean age 31 years) and five females (mean age 33 years). All 21 Ss were right-handed.

Conditions.

In the five conditions, Ss viewed (i) a visual fixation point; (ii) meaningless but linguistically organized nonsigns (similar to tasks requiring Ss to listen to meaningless phonetic units produced in short syllable strings); and (iii) meaningful lexical signs (similar to tasks requiring Ss to listen to meaningful real words). In condition (iv) they saw a different set of meaningful signs and were asked to imitate what they had seen (similar to word-repetition/imitation tasks), and in condition (v) they saw signed nouns and were instructed to generate appropriate signed verbs (similar to verb generation tasks). Hearing controls received the same five conditions, except that in condition v they saw a printed English noun and generated a spoken verb; this intentional design feature permitted us to evaluate cerebral activity while each subject group performed the identical linguistic task in its own language. Had all tasks been administered in English to the hearing Ss and all in signed language to the deaf Ss, we would not have been able to identify any differences in cerebral activity between speakers and signers relative to the processing of specific linguistic units. Conversely, had we used only signed stimuli across all deaf and hearing Ss, we would not have been able to evaluate any similarities in cerebral activity when deaf and hearing Ss processed the identical parts of natural language. Five of the 10 hearing controls viewed the ASL stimuli and five viewed the LSQ stimuli, yielding four experimental groups: deaf ASL, deaf LSQ, hearing controls viewing ASL stimuli (hearing 1), and hearing controls viewing LSQ stimuli (hearing 2).

Stimuli.

To ensure that the visual fixation condition (i) involved a constrained behavioral state rather than an unconstrained resting scan, it had the same temporal and sequential structure as trials presenting experimental stimuli, thereby providing both a visual control and the most equitable condition against which to compare our deaf and hearing groups. The finger movements used in condition ii were meaningless sign-phonetic units that were syllabically organized into possible but nonexistent short syllable strings. As in spoken languages, all signs (homologous to words) and sentences in signed languages are formed from a finite set of meaningless units called phonetic units (29) (e.g., the unmarked, frequent phonetic unit in LSQ involving a clenched fist with an extended thumb), which are further organized into syllables (30) (e.g., specific hand shapes organized into temporally constrained movement-nonmovement alternations). That our sign-phonetic stimuli indeed contained true meaningless phonetic and syllabic units was established by rigorous psycholinguistic experimental procedures (see supplemental data, Methods). All other stimuli (including the English stimuli in condition v) were also extensively piloted, with volunteers other than those finally tested, before their use in the scanner, to ensure that all meaningful sign stimuli (conditions iii–iv) were single-handed, high-frequency nouns and that condition v contained meaningful, high-frequency, single-handed nouns that were extremely likely to yield equally high-frequency, single-handed, appropriate verbs (for each sign in ASL, and separately in LSQ). Signed stimuli were produced by a native signer of ASL and LSQ. All Ss were scanned twice in each of the five conditions, with different stimuli in each scan. Each stimulus was presented twice per trial for 1.5 s and followed by an interstimulus interval of 4 s within which Ss responded (conditions iv and v); each scan contained 13 trials.
Ss' on-line behavioral productions were videotaped during scanning for subsequent analysis of accuracy; a response in condition v was scored as correct only if a grammatical verb was produced and it was semantically appropriate to the presented target. To further ensure equal output/response rates (i.e., that all Ss in the scanner would produce precisely one form per trial within the time allotted to respond), all Ss were instructed to say or sign “pass” if they could not think of a verb (condition v) or if they missed seeing a sign and hence did not know what form to imitate (condition iv); off-line behavioral analyses of the videotapes confirmed that all Ss produced one form per trial within the 4 s allotted.
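The scoring rule for condition v, together with the “pass” convention, amounts to a simple decision procedure. The sketch below illustrates it under stated assumptions: the per-trial coding of the videotapes (the hypothetical `Response` fields) is our invention, and we assume that a “pass” fills the trial but is never scored correct, which the text does not state explicitly.

```python
from dataclasses import dataclass
from typing import Iterable, Sequence


@dataclass(frozen=True)
class Response:
    """One coded trial from the videotape (hypothetical coding scheme)."""
    is_pass: bool                      # S signed or said "pass"
    is_grammatical_verb: bool          # a grammatical verb was produced
    is_semantically_appropriate: bool  # the verb fits the target noun


def is_correct(r: Response) -> bool:
    # Condition v rule: correct only if a grammatical verb was produced AND it
    # is semantically appropriate to the target. A "pass" is never correct
    # (our assumption about how passes were scored).
    return (not r.is_pass) and r.is_grammatical_verb and r.is_semantically_appropriate


def accuracy(responses: Sequence[Response]) -> float:
    """Proportion of trials scored correct."""
    if not responses:
        raise ValueError("no responses to score")
    return sum(is_correct(r) for r in responses) / len(responses)


def one_form_per_trial(forms_per_trial: Iterable[int]) -> bool:
    # Off-line check that every trial contains exactly one form
    # (a verb, an imitation, or "pass").
    return all(n == 1 for n in forms_per_trial)


if __name__ == "__main__":
    good = Response(False, True, True)
    wrong_meaning = Response(False, True, False)
    passed = Response(True, False, False)
    scan = [good] * 11 + [wrong_meaning, passed]  # 13 invented trials in one scan
    print(round(accuracy(scan), 3))               # prints: 0.846
    print(one_form_per_trial([1] * 13))           # prints: True
```

The example scan is invented for illustration; it is not drawn from the study's behavioral data.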

Scanning.

PET scans were acquired with a Siemens Exact HR+ scanner operating in three-dimensional mode to measure rCBF by using the H₂¹⁵O water bolus technique during a 60-s scan period. MRI scans (160 slices, 1 mm thick) were also obtained for every S with a 1.5-T Philips Gyroscan ACS and coregistered with each S's PET data, providing precise neuroanatomical localization of cerebral activation. rCBF images were reconstructed by using a 14-mm Hanning filter, normalized for differences in global CBF, coregistered with the individual MRI data (31), and transformed into the standardized Talairach stereotaxic space (32) via an automated feature-matching algorithm. The significance of focal CBF changes was assessed by a method based on three-dimensional Gaussian random-field theory (33). For an exploratory search, the threshold for reporting a peak as statistically significant was set at t = 3.53 (P < 0.0004, uncorrected), corresponding to a false-alarm rate of 0.58 in a search volume of 182 resolution elements (each measuring 14 × 14 × 14 mm), assuming a brain gray matter volume of 500 cm³. For a directed search (testing a specific hypothesis), the t threshold was set at 2.5.
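The numbers in the threshold statement hang together arithmetically, which a short calculation can show. This is an illustrative sketch only, under stated assumptions: it treats the statistic as approximately Gaussian (reasonable when degrees of freedom are large), and it keeps only the leading volume term of the random-field expression for the expected Euler characteristic, so it is not a reimplementation of the cited method (33). The function names are hypothetical.

```python
import math


def resel_count(volume_cm3: float, fwhm_mm: float) -> float:
    """Search volume divided by the volume of one resolution element (FWHM^3)."""
    return volume_cm3 * 1000.0 / fwhm_mm ** 3  # 1 cm^3 = 1000 mm^3


def upper_tail_p(z: float) -> float:
    """One-tailed P(Z > z) for a standard normal variable."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def expected_false_peaks(threshold: float, resels: float) -> float:
    """Leading (3-D volume) term of the expected Euler characteristic of a
    smooth Gaussian random field thresholded at `threshold`."""
    return (resels
            * (4.0 * math.log(2.0)) ** 1.5 / (2.0 * math.pi) ** 2
            * (threshold ** 2 - 1.0)
            * math.exp(-threshold ** 2 / 2.0))


if __name__ == "__main__":
    r = resel_count(500.0, 14.0)
    print(f"resels: {r:.1f}")                                  # about 182
    print(f"uncorrected P at t = 3.53: {upper_tail_p(3.53):.5f}")  # below 0.0004
    print(f"uncorrected P at t = 2.5:  {upper_tail_p(2.5):.4f}")
    print(f"expected false peaks: {expected_false_peaks(3.53, r):.2f}")
```

Under these assumptions, 500 cm³ divided by (1.4 cm)³ gives about 182 resolution elements, and the volume term alone predicts roughly 0.5 false peaks at t = 3.53, the same order as the reported 0.58. The remaining gap may reflect terms this sketch omits (boundary corrections, finite degrees of freedom); that explanation is our assumption, not something the text states.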

Results

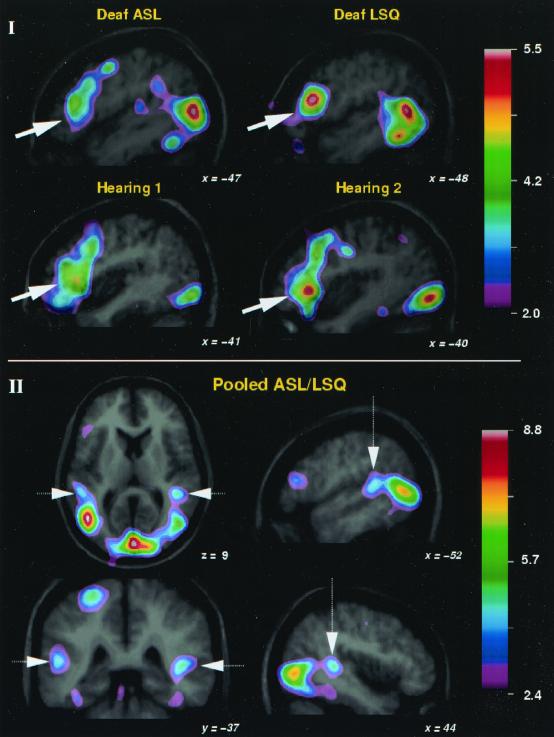

The most remarkable results pertain to two specific questions. First, to test the hypothesis that parts of natural language structure, such as meaningful words, are processed at brain sites dedicated to the processing of sound, we compared conditions in which deaf and hearing Ss generated verbs when presented with nouns in their respective languages. These analyses revealed that the deaf Ss exhibited clear rCBF increases within the LIFC (Brodmann areas 45/47), as well as in the left middle frontal region (Brodmann areas 45/9), when using signed languages. Highly similar activation was also seen among the hearing control Ss performing the same task in spoken English (Fig. 1I and Table 1). Second, as the strongest possible test of the hypothesis that parts of natural language structure are processed in tissue specialized for sound, we scrutinized conditions in the deaf Ss involving aspects of language organization universally associated with sound: phonetic-syllabic units. Here our analyses of conditions in which deaf Ss viewed meaningless but linguistically organized phonetic-syllabic units in sign (“nonsigns”), as well as real signs, revealed surprising bilateral activity in the STG, tissue classically regarded as auditory (Fig. 1II; Table 2); no such activity was found in the hearing control groups when they viewed the identical nonsigns or signs (a full report of the coordinates from the subtractions in Tables 1 and 2 and Fig. 1I can be found in Tables 3–8, which are published as supplemental data).
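The subtraction logic behind these comparisons (a task condition minus a baseline, averaged over Ss and expressed as a t-statistic image) can be sketched in a few lines. This is a simplified illustration, not the authors' pipeline. It assumes images that are already coregistered and flattened to arrays of shape (subjects, voxels); it uses proportional scaling as one common way to normalize global blood flow; and it pools the standard deviation over all voxels, a homogeneous-variance simplification. All function names and the scaling target of 50 are hypothetical.

```python
import numpy as np


def normalize_global(images: np.ndarray, target: float = 50.0) -> np.ndarray:
    """Proportional scaling: rescale each image (row) so its mean equals `target`.

    images: array of shape (n_images, n_voxels).
    """
    return images / images.mean(axis=1, keepdims=True) * target


def subtraction_t_map(task: np.ndarray, baseline: np.ndarray) -> np.ndarray:
    """Voxelwise t for (task - baseline) across subjects.

    task, baseline: shape (n_subjects, n_voxels), one image per subject.
    The SD of the differences is pooled over all voxels (a simplification).
    """
    diff = task - baseline
    n = diff.shape[0]
    pooled_sd = np.sqrt(diff.var(axis=0, ddof=1).mean())
    return diff.mean(axis=0) / (pooled_sd / np.sqrt(n))


def peaks_above(t_map: np.ndarray, threshold: float = 3.53) -> np.ndarray:
    """Indices of voxels whose t exceeds the reporting threshold."""
    return np.flatnonzero(t_map > threshold)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fixation = 50.0 + rng.normal(0.0, 1.0, size=(11, 20))  # 11 simulated Ss, 20 voxels
    verb = fixation + rng.normal(0.0, 1.0, size=(11, 20))
    verb[:, 7] += 5.0                                       # simulated focal increase
    t = subtraction_t_map(normalize_global(verb), normalize_global(fixation))
    print(peaks_above(t))
```

The simulated data only show that a focal increase survives the subtraction while shared structure cancels; they carry no information about the actual rCBF values.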

Figure 1.

Common patterns of cerebral activation in two groups of deaf people using two different signed languages, ASL and LSQ, and in two groups of hearing controls: hearing persons viewing printed English nouns semantically identical to the nouns presented in ASL (hearing 1) and hearing persons viewing printed English nouns semantically identical to the nouns presented in LSQ (hearing 2). (I) Averaged PET subtraction images superimposed on the averaged MRI scans for the verb generation (condition v) minus visual fixation (condition i) comparison in each of the four groups of deaf and hearing Ss. Focal changes in rCBF are shown as a t-statistic image, with values coded by the color scale. Each of the sagittal slices taken through the left hemisphere illustrates the areas of increased rCBF in the LIFC (white arrow), which were common to all four groups. Also visible are regions of posterior activity corresponding to extrastriate visual areas that differ between deaf and hearing Ss because of the different visual stimuli used (moving hand signs vs. static printed text). (II) PET/MRI data for the pooled comparison of all conditions in which signs or linguistically organized nonsigns were presented against baseline, averaged across all 11 deaf Ss (see Table 2). The sagittal, coronal, and horizontal images show the area of significant rCBF increase within the STG in each hemisphere. Also visible are areas of increased activity in striate and extrastriate visual cortex. The left side of each image corresponds to the left side of the brain.

Table 1.

Stereotaxic coordinates for foci in inferior and middle frontal cortex in ASL and LSQ deaf Ss, and hearing controls

| Condition and area | Group | x (mm) | y (mm) | z (mm) | t |
|---|---|---|---|---|---|
| Verb-fixation | | | | | |
| Left inferior frontal/opercular (45/47) | ASL | −32 | 20 | 0 | 4.83 |
| | LSQ | −36 | 34 | 0 | 5.69 |
| | Hearing 1 | −47 | 22 | 3 | 6.13 |
| | Hearing 2 | −40 | 27 | 2 | 6.12 |
| Left middle frontal (45/9) | ASL | −47 | 29 | 17 | 4.47 |
| | LSQ | −48 | 24 | 18 | 6.36 |
| | Hearing 1 | −47 | 15 | 36 | 4.87 |
| | Hearing 2 | −50 | 24 | 26 | 5.45 |
| Verb-view signs | | | | | |
| Left inferior frontal/opercular (45/47) | ASL | −35 | 18 | 8 | 4.53 |
| | LSQ | −46 | 20 | 14 | 5.93 |
| Verb-imitate | | | | | |
| Left inferior frontal/opercular (45/47) | ASL | −46 | 32 | 2 | 5.15 |
| | | −31 | 22 | 3 | 4.38 |
| | LSQ | −34 | 24 | −9 | 6.55 |
| Left middle frontal (45/9) | ASL | −46 | 20 | 30 | 4.55 |
| | LSQ | −47 | 25 | 21 | 5.17 |
| Right inferior frontal/opercular (45/47) | ASL | 35 | 27 | −2 | 4.98 |
| | LSQ | 32 | 22 | −11 | 4.25 |

Hearing 1 and hearing 2 saw printed English nouns semantically identical to the ASL and LSQ stimuli, respectively. Brodmann areas appear in parentheses.

Table 2.

Stereotaxic coordinates for left and right foci in superior temporal gyrus (Brodmann areas 42/22) in ASL and LSQ Ss

| Condition | Group | x (mm) | y (mm) | z (mm) | t |
|---|---|---|---|---|---|
| View signs-fixation | ASL | −55 | −28 | 8 | 2.52 |
| | | 46 | −37 | 8 | 4.43 |
| | LSQ | −58 | −33 | 6 | 2.63 |
| | | 41 | −38 | 3 | 4.31 |
| Nonsigns-fixation | ASL | −48 | −42 | 13 | 3.81 |
| | | 46 | −38 | 11 | 3.47 |
| | LSQ | −42 | −44 | 14 | 2.79 |
| | | 54 | −45 | 12 | 4.51 |
| Imitation-fixation | ASL | −54 | −35 | 12 | 3.91 |
| | | 46 | −38 | 8 | 3.62 |
| | LSQ | −52 | −40 | 14 | 3.27 |
| | | 40 | −31 | 0 | 3.05 |
| Verb-fixation | ASL | −52 | −28 | 11 | 3.23 |
| | | 43 | −38 | 9 | 2.84 |
| | LSQ | −56 | −33 | 3 | 3.83 |
| Pooled conditions | ASL + LSQ | −52 | −38 | 12 | 4.62 |
| | | 44 | −37 | 8 | 4.92 |

Other patterns of cerebral activation in each condition included the expected visual cortical regions, particularly motion-sensitive regions of extrastriate cortex. Indeed, the sites of visual cortical activity were very similar for all deaf and hearing groups when viewing signs and correspond well to areas identified as V1 and V5 in prior studies of the processing of complex visual stimuli; for example, the mean stereotaxic coordinates of the left and right V5 foci in the nonsigns (condition ii) minus visual fixation (condition i) comparison (−41, −72, 7 and 44, −70, 4) are similar to those previously reported (34). In addition, as expected, the motor demands of the verb generation and imitation tasks recruited the primary motor cortex, supplementary motor area, and cerebellum.

LIFC Activation.

To examine cerebral activity during lexical processing, we focused on the comparison of verb generation with visual fixation because it placed the most comparable task demands on the deaf and hearing groups: in the verb generation task all Ss perceived and generated lexical items in their native languages (signs or words, respectively), and the cognitive and neural demands of the visual fixation task were relatively constant. Having observed common LIFC activation during lexical processing across the deaf and hearing groups, we asked whether the left frontal activations in these two groups were indeed equivalent in location and extent. To test this, we performed a direct between-group comparison by pooling the rCBF pattern corresponding to verb generation (condition v) minus visual fixation (condition i) within each group and contrasting these image volumes with one another. No significant difference anywhere within the inferior or lateral frontal cortex emerged from this analysis, indicating that the recruitment of left frontal cortex is comparable in deaf and hearing Ss.
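The direct between-group comparison can be illustrated in the same simplified framework: each S contributes one difference image (condition v minus condition i), and the two groups' difference images are contrasted voxel by voxel. The sketch assumes equal variance in the two groups, pooled over voxels, and the function name is hypothetical; it illustrates the logic of the contrast rather than reproducing the authors' analysis.

```python
import numpy as np


def between_group_t(diff_a: np.ndarray, diff_b: np.ndarray) -> np.ndarray:
    """Voxelwise two-sample t contrasting per-subject difference images.

    diff_a: shape (n_a, n_voxels), e.g. verb generation minus fixation, group A.
    diff_b: shape (n_b, n_voxels), the same subtraction for group B.
    Within-group variance is pooled across both groups and all voxels.
    """
    n_a, n_b = diff_a.shape[0], diff_b.shape[0]
    ss = (((diff_a - diff_a.mean(axis=0)) ** 2).sum(axis=0)
          + ((diff_b - diff_b.mean(axis=0)) ** 2).sum(axis=0))
    pooled_var = ss.mean() / (n_a + n_b - 2)
    se = np.sqrt(pooled_var * (1.0 / n_a + 1.0 / n_b))
    return (diff_a.mean(axis=0) - diff_b.mean(axis=0)) / se


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    deaf = rng.normal(3.0, 1.0, size=(11, 30))     # simulated: same mean activation
    hearing = rng.normal(3.0, 1.0, size=(10, 30))  # in both groups
    t = between_group_t(deaf, hearing)
    print(np.flatnonzero(np.abs(t) > 3.53))        # voxels that differ at threshold
```

Because both simulated groups share the same mean activation, the contrast is expected to return few or no suprathreshold voxels; shifting one group at a single voxel would make that voxel stand out.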

The LIFC activation observed in the deaf Ss was similar in location and extent across the ASL and LSQ groups and was observed both in the comparison of verb generation (condition v) with visual fixation (condition i; Fig. 1I) and in the comparison of verb generation (condition v) with viewing signs (condition iii) (Table 1). The lack of ASL-LSQ group differences was further confirmed by direct between-group comparisons of verb generation (condition v) minus visual fixation (condition i) and of verb generation (condition v) minus imitation (condition iv), neither of which showed differences between groups in the inferior or lateral frontal cortex.
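As a rough descriptive complement to the formal between-group contrasts, the distances between the LIFC peaks listed in Table 1 can be computed directly. The sketch uses the Table 1 coordinates; comparing distances against the 14-mm reconstruction filter is only a heuristic yardstick adopted here for illustration, not a criterion used in the study.

```python
import math
from itertools import combinations

# LIFC (Brodmann 45/47) peaks from Table 1, Talairach coordinates in mm.
VERB_FIXATION = {
    "ASL": (-32, 20, 0),
    "LSQ": (-36, 34, 0),
    "Hearing 1": (-47, 22, 3),
    "Hearing 2": (-40, 27, 2),
}
VERB_VIEW_SIGNS = {"ASL": (-35, 18, 8), "LSQ": (-46, 20, 14)}
FILTER_FWHM_MM = 14.0  # reconstruction filter width, used here only as a yardstick


def peak_distance(p, q) -> float:
    """Euclidean distance in mm between two stereotaxic peaks."""
    return math.dist(p, q)  # requires Python 3.8+


def pairwise_distances(peaks: dict) -> dict:
    """Distances for every unordered pair of groups."""
    return {(a, b): peak_distance(peaks[a], peaks[b])
            for a, b in combinations(peaks, 2)}


if __name__ == "__main__":
    for (a, b), d in pairwise_distances(VERB_FIXATION).items():
        note = "within" if d <= FILTER_FWHM_MM else "beyond"
        print(f"{a} vs {b}: {d:.1f} mm ({note} one FWHM)")
    asl, lsq = VERB_VIEW_SIGNS["ASL"], VERB_VIEW_SIGNS["LSQ"]
    print(f"ASL vs LSQ (verb-view signs): {peak_distance(asl, lsq):.1f} mm")
```

The ASL and LSQ peaks lie about 14.6 mm apart in the verb-fixation comparison and about 12.7 mm apart in the verb-view signs comparison, roughly one filter width.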

The pattern of LIFC activation in the deaf Ss was very similar to that previously associated with the search and retrieval of information about words in spoken language across a variety of similar tasks (8–10). Therefore, to further evaluate whether we were observing a form of semantic processing, we controlled for the motor output and input components of the task by comparing verb generation (condition v) with imitation (condition iv) in the deaf Ss; signs are produced in both conditions, so the subtraction removes the shared motor activation and leaves more of the search and retrieval components. Again, we observed strong LIFC activation, but this time we also observed weaker right inferior frontal activation (Table 1), a phenomenon also observed in hearing-speaking Ss across similar tasks and comparisons (35–37). That all Ss were indeed engaged in this specific linguistic-semantic function during verb generation is further corroborated by analysis of the videotaped on-line behavioral data, which indicated that both deaf and hearing Ss performed the task with adequate accuracy (deaf 86%, hearing 97%).

STG Activation.

Additional activity in the comparison of verb generation with visual fixation involved, as expected, STG activation in the hearing Ss, who could hear themselves produce their spoken verbs. Remarkably, however, this comparison also revealed STG activation in the deaf Ss (see Table 2). To determine whether the STG activity was restricted to this comparison or occurred more generally, and to control for different aspects of the stimuli, we subtracted visual fixation (condition i) from each of three further conditions in each of the two deaf groups: viewing meaningful signs (condition iii), viewing meaningless but phonetic-syllabic nonsigns (condition ii), and imitating meaningful signs (condition iv). These subtractions revealed systematic STG activation, albeit weak in some comparisons (Table 2). By contrast, there was a striking absence of similar activation in the two hearing groups in any of these conditions involving visually presented signs (homologous to words) and phonetic parts of real signs (like pseudowords).

To verify that the STG activation was specific to the deaf Ss in the three conditions in which it was observed (viewing meaningful signs, viewing meaningless nonsigns, and imitation), we contrasted all hearing Ss with all deaf Ss on these three conditions pooled together versus visual fixation. The result confirmed the previous analyses: a significant difference between deaf and hearing Ss was found close to the two STG sites identified in Table 2 (left: −55, −33, 12, t = 4.09; right: 50, −37, 11, t = 3.48). To examine whether semantic content was the important determinant of STG activity in processing signed languages, we performed a further subtraction in the deaf Ss, viewing meaningful signs (condition iii) minus viewing meaningless nonsigns (condition ii), and observed no significant difference in STG activity.

To determine with greater precision the location, extent, and neuroanatomical specificity of the deaf STG activation, and to maximize the power of the analysis, we conducted two additional corroborative analyses. Regarding location and extent, we first pooled all conditions in which signs or nonsigns were presented (conditions ii–v) and compared them with visual fixation (condition i), averaging across both deaf groups. This analysis yielded highly significant STG activation in both the left and right hemispheres, confirming a clear rCBF increase in this presumed auditory processing region across multiple conditions in profoundly deaf people (Fig. 1II). As Fig. 1II shows, the activation sites within the STG are symmetrically located in the two hemispheres, as is best seen in the horizontal and coronal sections, and their location corresponds to the region of the PT. A second analysis evaluated the neuroanatomical specificity of the deaf STG activation. Here we compared the precise position of our deaf STG activations with anatomical probability maps of Heschl's gyrus (the primary auditory cortex) and the PT (tissue in the STG, or secondary auditory cortex), calculated from the MRIs of hearing brains. We found that the rCBF peaks reported here for deaf signers fall posterior to Heschl's gyrus (as is also evident in the horizontal and sagittal sections of Fig. 1II) but, crucially, that the extended areas of STG activation overlap with the PT probability map (38). Thus, across multiple subtractions, we observed persistent STG activation in the two deaf groups but not in the two hearing groups, suggesting robust differential processing of the identical visual stimuli by deaf and hearing Ss.
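The symmetry of the pooled STG peaks can be given a simple numerical form: reflect the right-hemisphere peak across the midline (x to −x) and measure its distance from the left-hemisphere peak. The sketch uses the pooled ASL + LSQ coordinates from Table 2; the function name is hypothetical.

```python
import math

# Pooled ASL + LSQ STG peaks from Table 2 (Talairach, mm); x < 0 is the left hemisphere.
POOLED_LEFT = (-52, -38, 12)
POOLED_RIGHT = (44, -37, 8)


def mirror_distance(left, right) -> float:
    """Distance between a left peak and a right peak reflected across x = 0."""
    rx, ry, rz = right
    return math.dist(left, (-rx, ry, rz))  # requires Python 3.8+


if __name__ == "__main__":
    print(mirror_distance(POOLED_LEFT, POOLED_RIGHT))  # prints: 9.0
```

The reflected peaks differ by 8, 1, and 4 mm along x, y, and z, giving 9 mm, which is smaller than the 14-mm reconstruction filter; using the filter width as a yardstick is our choice, not the study's.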

Discussion

Our data do not support the view that the human brain's processing of language at discrete neuroanatomical sites is determined exclusively by the sensory-motor mechanisms for hearing sound and producing speech. The input/output modes of signed and spoken languages are plainly different. Yet despite such radical differences, we observed common patterns of cerebral activation at specific brain sites when deaf and hearing people processed specific linguistic functions. Moreover, our results were highly consistent across two entirely distinct signed languages, ASL and LSQ, providing independent replication of the findings within a single study and indicating their generalizability.

In both deaf and hearing people, we observed common activation at the LIFC (Brodmann areas 45/47), a brain site previously shown to be associated with the search and retrieval of information about spoken words (8–10). It is particularly interesting that deaf and hearing people showed the most similar cerebral activity on tasks that are maximally different in modality of input (vision vs. hearing) and in the effector muscles used for output (hands and arms vs. vocal musculature). This finding thus provides powerful evidence in support of the hypothesis that specific regions of the left frontal cortex are key sites for higher-order linguistic processes related to lexical operations, with some involvement of corresponding tissue in the right hemisphere. Indeed, the present findings make clear that the network of associative patterning essential to searching for and retrieving the meanings of words in all human languages involves tissue at these specific brain sites, and that this involvement does not depend on the presence of sound or speech. Such similarities notwithstanding, we do not claim that no differences exist between deaf and hearing people; rather, in this paper we focused on brain areas that were predicted to participate in specific aspects of language processing. Differences in brain activity between deaf and hearing people may arise at various levels, most obviously those related to the different input/output requirements of sign vs. speech (e.g., the differences in visual cortical response in Fig. 1 are likely related to the different types of visual input used). Exploring such differences is an important topic for future research.

The discovery of STG activation during sign language processing in deaf people is also remarkable because this area is considered to be a unimodal auditory processing area. Many prior studies have shown STG activity in hearing Ss in the absence of auditory input, including during tasks of phonological working memory (39), lip reading (40), and musical imagery (41). These results have been interpreted as reflecting access to an internal auditory representation, which recruits neural mechanisms in the STG. Yet in the present study we observed STG activation in deaf people who have never processed sounds of any type; this activation therefore cannot be based on auditory representation as it is traditionally understood: the transduction of sound waves, and their pressure on the inner ear, into neural signals. Although there was no evidence for left-hemisphere lateralization of PT activation in the deaf, we have demonstrated the recruitment of what has hitherto been thought to be unimodal auditory cortex by purely visual stimuli. This observation is consistent with studies indicating the existence of cross-modal plasticity in other domains, both linguistic and nonlinguistic (42–45). The region of the PT therefore may be a polymodal site initially responsive to both auditory and visual inputs, which, as a result of sensory experience in early life and depending on the modality of the input, permits the stabilization of one modality over the other. It is also clear that processing of sign activates quite similar regions of striate and extrastriate visual cortex in both deaf and hearing people, suggesting that perceptual analysis of the visual components of sign is similar in both groups, whether or not the signs are meaningful.

A question remains as to whether the activation pattern within STG regions specifically reflects linguistic processing, because complex visual stimuli per se could activate the temporal cortices in deaf people (42–45). Because the activation pattern in the STG was similar for real signs and nonsigns, semantic content does not appear to be the important determinant of this activity. Although entirely visual, the stimuli did contain sublexical sign-phonetic units organized into syllables, a structure relevant only to natural signed languages. This may explain why we observed robust differential PT activation in the deaf versus the hearing. Comparing the sublexical level of language organization in signed and spoken languages reveals striking commonalities: both use a highly restricted set of units, organized into regular patterns, that are produced in rapid temporal alternation. Such structural commonalities suggest the hypothesis that the PT can be activated by either sight or sound because this tissue may be dedicated to processing specific distributions of complex, low-level units in rapid temporal alternation, rather than to sound per se. Alternatively, the cortical tissue in the STG may be specialized for auditory processing but may undergo functional reorganization in the presence of visual input when neural input from the auditory periphery is absent. What is certain, however, is that the human brain can accommodate multiple pathways for language expression and reception, and that the cerebral specialization for language functions is not exclusive to the mechanisms for producing and perceiving speech and sound.

Supplementary Material

Acknowledgments

We thank K. Dunbar, B. Milner, P. Ahad, J. Kegl, D. Perani, D. Perlmutter, M. Piattelli-Palmerini, M. Bouffard, and A. Geva. L.A.P. thanks the Ospedale-Università San Raffaele, in Milan, Italy, and The Guggenheim Foundation. Funding was provided by the Natural Sciences and Engineering Research Council of Canada, Medical Research Council of Canada, and McDonnell-Pew Cognitive Neuroscience Program.

Abbreviations

- PET: positron emission tomography
- rCBF: regional cerebral blood flow
- STG: superior temporal gyrus
- PT: planum temporale
- LIFC: left inferior frontal cortex
- ASL: American Sign Language
- LSQ: Langue des Signes Québécoise
- Ss: subjects

Footnotes

See commentary on page 13476.

References

- 1. Démonet J-F, Chollet F, Ramsay S, Cardebat D, Nespoulous J L, Wise R, Rascol A, Frackowiak R. Brain. 1992;115:1753–1768. doi: 10.1093/brain/115.6.1753.

- 2. Zatorre R J, Evans A C, Meyer E, Gjedde A. Science. 1992;256:846–849. doi: 10.1126/science.1589767.

- 3. Price C J, Wise R J S, Warburton E A, Moore C J, Howard D, Patterson K, Frackowiak R S J, Friston K J. Brain. 1996;119:919–931. doi: 10.1093/brain/119.3.919.

- 4. Zatorre R J, Meyer E, Gjedde A, Evans A C. Cereb Cortex. 1996;6:21–30. doi: 10.1093/cercor/6.1.21.

- 5. Binder J R, Frost J A, Hammeke T A, Cox R W, Rao S M, Prieto T. J Neurosci. 1997;17:353–362. doi: 10.1523/JNEUROSCI.17-01-00353.1997.

- 6. Klein D, Milner B, Zatorre R J, Zhao V, Nikelski E J. NeuroReport. 1999;10:2841–2846. doi: 10.1097/00001756-199909090-00026.

- 7. Zatorre R J, Binder J R. In: Brain Mapping: The Systems. Toga A, Mazziotta J, editors. San Diego: Academic; 2000. pp. 124–150.

- 8. Petersen S E, Fox P T, Posner M I, Mintun M, Raichle M. Nature (London) 1988;331:585–589. doi: 10.1038/331585a0.

- 9. Buckner R, Raichle M, Petersen S. J Neurophysiol. 1995;74:2163–2173. doi: 10.1152/jn.1995.74.5.2163.

- 10. Klein D, Milner B, Zatorre R J, Evans A C, Meyer E. Proc Natl Acad Sci USA. 1995;92:2899–2903. doi: 10.1073/pnas.92.7.2899.

- 11. Geschwind N, Levitsky W. Science. 1968;161:186–187. doi: 10.1126/science.161.3837.186.

- 12. Galaburda A M, Sanides F. J Comp Neurol. 1980;190:597–610. doi: 10.1002/cne.901900312.

- 13. Morel A, Garraghty P E, Kaas J H. J Comp Neurol. 1993;335:437–459. doi: 10.1002/cne.903350312.

- 14. Rauschecker J P, Tian B, Pons T, Mishkin M. J Comp Neurol. 1997;382:89–103.

- 15. Petitto L A. Signpost Int Q Sign Linguistics Assoc. 1994;7:1–10.

- 16. Petitto L A, Marentette P F. Science. 1991;251:1493–1496. doi: 10.1126/science.2006424.

- 17. Petitto L A. Cognition. 1987;27:1–52. doi: 10.1016/0010-0277(87)90034-5.

- 18. Petitto L A, Katerelos M, Levy B G, Gauna K, Tétrault K, Ferraro V. J Child Lang. 2000, in press.

- 19. Petitto L A. In: The Inheritance and Innateness of Grammars. Gopnik M, editor. Oxford: Oxford Univ. Press; 1997. pp. 45–69.

- 20. Petitto L A. In: The Signs of Language Revisited. Emmorey K, Lane H, editors. Mahwah: Erlbaum; 2000. pp. 447–471.

- 21. Bellugi U, Poizner H, Klima E S. Trends Neurosci. 1989;12:380–388. doi: 10.1016/0166-2236(89)90076-3.

- 22. Hickok G, Bellugi U, Klima E S. Trends Cognit Sci. 1998;2:129–136. doi: 10.1016/s1364-6613(98)01154-1.

- 23. Neville H J, Bavelier D, Corina D, Rauschecker J, Karni A, Lalwani A, Braun A, Clark V, Jezzard P, Turner R. Proc Natl Acad Sci USA. 1998;95:922–929. doi: 10.1073/pnas.95.3.922.

- 24. Bavelier D, Corina D, Jezzard P, Clark V, Karni A, Lalwani A, Rauschecker J P, Braun A, Turner R, Neville H J. NeuroReport. 1998;9:1537–1542. doi: 10.1097/00001756-199805110-00054.

- 25. Söderfeldt B, Ingvar M, Ronnberg J, Eriksson L, Serrander M, Stone-Elander S. Neurology. 1997;49:82–87. doi: 10.1212/wnl.49.1.82.

- 26. McGuire P K, Robertson D, Thacker A, David A S, Kitson N, Frackowiak R S J, Frith C D. NeuroReport. 1997;8:695–698. doi: 10.1097/00001756-199702100-00023.

- 27. Hickok G, Poeppel D, Clark K, Buxton R B, Rowley H A, Roberts T P L. Hum Brain Mapp. 1997;5:437–444. doi: 10.1002/(SICI)1097-0193(1997)5:6<437::AID-HBM4>3.0.CO;2-4.

- 28. Nishimura H, Hashikawa K, Doi K, Iwaki T, Watanabe Y, Kusuoka H, Nishimura T, Kubo T. Nature (London) 1999;397:116. doi: 10.1038/16376.

- 29. Brentari D. A Prosodic Model of Sign Language Phonology. Cambridge: MIT Press; 1999.

- 30. Perlmutter D. Linguistic Inquiry. 1992;23:407–442.

- 31. Evans A C, Marrett S, Neelin P, Collins L, Worsley K, Dai W, Milot S, Meyer E, Bub D. NeuroImage. 1992;1:43–53. doi: 10.1016/1053-8119(92)90006-9.

- 32. Talairach J, Tournoux P. Co-Planar Stereotaxic Atlas of the Human Brain. New York: Thieme; 1988.

- 33. Worsley K J, Evans A C, Marrett S, Neelin P. J Cereb Blood Flow Metab. 1992;12:900–918. doi: 10.1038/jcbfm.1992.127.

- 34. Watson J D G, Myers R, Frackowiak R S J, Hajnal J V, Woods R P, Mazziotta J C, Shipp S, Zeki S. Cereb Cortex. 1993;3:79–94. doi: 10.1093/cercor/3.2.79.

- 35. Binder J R, Rao S M, Hammeke T A, Frost J A, Bandettini P A, Jesmanowicz A, Hyde J S. Arch Neurol. 1995;52:593–601. doi: 10.1001/archneur.1995.00540300067015.

- 36. Desmond J E, Sum J M, Wagner A D, Demb J B, Shear P K, Glover G H, Gabrieli J D, Morrell M J. Brain. 1995;118:1411–1419. doi: 10.1093/brain/118.6.1411.

- 37. Ojemann J G, Buckner R L, Akbudak E, Snyder A Z, Ollinger J M, McKinstry R C, Rosen B R, Petersen S E, Raichle M E, Conturo T E. Hum Brain Mapp. 1998;6:203–215. doi: 10.1002/(SICI)1097-0193(1998)6:4<203::AID-HBM2>3.0.CO;2-7.

- 38. Westbury C F, Zatorre R J, Evans A C. Cereb Cortex. 1999;9:392–405. doi: 10.1093/cercor/9.4.392.

- 39. Paulesu E, Frith C D, Frackowiak R S J. Nature (London) 1993;362:342–344. doi: 10.1038/362342a0.

- 40. Calvert G A, Bullmore E T, Brammer M J, Campbell R, Williams S C R, McGuire P K, Woodruff P W R, Iversen S D, David A S. Science. 1997;276:593–596. doi: 10.1126/science.276.5312.593.

- 41. Zatorre R J, Halpern A R, Perry D W, Meyer E, Evans A C. J Cognit Neurosci. 1996;8:29–46. doi: 10.1162/jocn.1996.8.1.29.

- 42. Sharma J, Angelucci A, Sur M. Nature (London) 2000;404:841–847. doi: 10.1038/35009043.

- 43. Rauschecker J P. Trends Neurosci. 1995;18:36–43. doi: 10.1016/0166-2236(95)93948-w.

- 44. Cohen L G, Celnik P, Pascual-Leone A, Corwell B, Falz L, Dambrosia J, Honda M, Sadato N, Gerloff C, Catala M D, Hallett M. Nature (London) 1997;389:180–183. doi: 10.1038/38278.

- 45. Levänen S, Jousmäki V, Hari R. Curr Biol. 1998;8:869–872. doi: 10.1016/s0960-9822(07)00348-x.
