Abstract

Aims: To assess inter/intraobserver variability in the interpretation of a series of digitised images of columnar cell lesions (CCLs) of the breast.

Methods: After a tutorial on breast CCL, 39 images were presented to seven staff pathologists, who were instructed to categorise the lesions as follows: 0, no columnar cell change (CCC) or ductal carcinoma in situ (DCIS); 1, CCC; 2, columnar cell hyperplasia; 3, CCC with architectural atypia; 4, CCC with cytological atypia; 5, DCIS. Concordance with the tutor’s diagnosis and the degree of agreement among pathologists for each image were determined. The same set of images was re-presented to the pathologists one week later, their diagnoses were collated, and inter/intraobserver reproducibility and the level of agreement for individual images were analysed.

Results: Diagnostic reproducibility with the tutor ranged from moderate to substantial (κ values, 0.439–0.708) in the first exercise. At repeat evaluation, intraobserver agreement was fair to perfect (κ values, 0.271–0.832), whereas concordance with the tutor varied from fair to substantial (κ values, 0.334–0.699). There was unanimous agreement on more images during the second exercise, mainly because of agreement on the diagnosis of DCIS. The lowest agreement was seen for CCC with cytological atypia.

Conclusions: Interobserver and intraobserver agreement is good for DCIS, but more effort is needed to improve diagnostic consistency in the category of CCC with cytological atypia. Continued awareness and study of these lesions are necessary to enhance recognition and understanding.

Keywords: columnar cell lesions, cytological atypia, reproducibility, κ statistic, ductal carcinoma in situ

Columnar cell lesions (CCLs) of the breast comprise a spectrum of benign to atypical entities that have in common variably dilated terminal duct lobular units lined by columnar epithelial cells with prominent apical cytoplasmic snouts.1,2 They are increasingly being encountered in breast biopsies because their associated microcalcifications are detected on mammographic screening.2

CCLs present a new challenge in breast pathology. Many are benign histologically and biologically, others display cytological atypia, and yet others show both cytological and architectural alterations that place them in the category of ductal carcinoma in situ (DCIS).3,4 For CCL with cytological atypia, a major issue is whether the degree of atypia that would warrant a further excision biopsy can be recognised reproducibly on core biopsies, because excision has implications for cost and for anxiety in affected women.


A uniform approach to the diagnosis of this relatively new entity is necessary for rational treatment strategies. Here, we assess interobserver and intraobserver reproducibility in the evaluation of breast CCL, which to the best of our knowledge has not previously been reported.

METHODS

After a didactic tutorial on CCL of the breast given by pathologist AH (including an explanation of a classification scheme, illustrated with images), 39 representative digitised images were presented sequentially to a panel of seven staff pathologists from two institutions who regularly interpret breast surgical specimens. They were instructed to categorise the lesions as follows: 0, no columnar cell change (CCC) or DCIS; 1, CCC; 2, columnar cell hyperplasia (CCH); 3, CCC with architectural atypia; 4, CCC with cytological atypia; 5, DCIS. Each image was displayed for approximately one minute, with no option to review earlier images and no discussion among the participating pathologists. Several repeated images were interspersed among the others: some were identical (same magnification), whereas others showed the same histological lesion at different magnifications. A discussion of the images and their diagnoses, led by AH, followed the exercise.

Diagnostic agreement between the tutor and each staff pathologist was determined using the κ statistic. The degree of agreement among pathologists for each image was ascertained from the largest number of identical answers given for that image, expressed as a percentage of the total number of participating pathologists (including AH). One week later, the same set of images was circulated to the same staff pathologists for individual evaluation; their diagnoses were collated, and interobserver concordance (with the tutor’s diagnoses), intraobserver reproducibility, and the level of agreement for individual images were determined.
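The per-image agreement measure described above (the largest number of identical answers for an image, as a percentage of all eight raters) can be sketched in Python as follows. This is a minimal illustration, not the procedure the authors ran; the function name `image_agreement` and the example ratings are hypothetical.

```python
from collections import Counter


def image_agreement(ratings):
    """Most common category for one image, how many raters chose it, and that
    number as a percentage of all raters (tutor included)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    # most_common(1) returns the single most frequent (category, count) pair;
    # on a tie the count, which is all this measure uses, is the same either way.
    category, count = Counter(ratings).most_common(1)[0]
    return category, count, 100 * count / len(ratings)


# Hypothetical ratings of one image by eight raters (seven pathologists plus the tutor),
# using the study's codes: 1 = CCC, 2 = CCH, 4 = CCC with cytological atypia.
ratings = [4, 4, 4, 4, 4, 1, 1, 2]
category, count, pct = image_agreement(ratings)
print(f"category {category}: {count} of {len(ratings)} agree ({pct:.1f}%)")
# category 4: 5 of 8 agree (62.5%)
```

An image on which all eight raters give the same answer scores 100%, which corresponds to the "complete agreement" row of Table 2.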

SPSS 11.5 statistical software (SPSS Inc, Chicago, Illinois, USA) was used for statistical analysis. Interobserver and intraobserver agreement was analysed using the χ2 test and the pairwise κ statistic, and κ values were interpreted according to the guidelines of Landis and Koch.5 Unlike simple percentage agreement, κ corrects for the agreement expected by chance; the greater the κ value, the stronger the agreement between the two sets of ratings being compared. κ values of 0.81–1.0, 0.61–0.8, 0.41–0.6, 0.21–0.4, and 0–0.2 were taken to indicate perfect, substantial, moderate, fair, and slight agreement, respectively.
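As a sketch of what the pairwise κ measures, the function below computes unweighted Cohen's κ for two raters, κ = (p_o − p_e)/(1 − p_e), where p_o is the observed proportion of images given the same category and p_e is the agreement expected by chance from each rater's category frequencies; a second function maps κ onto the bands quoted above. It assumes an unweighted κ on the six categories (the text does not state whether a weighted κ was used) and does not reproduce the SPSS procedure or the χ2 test; the function names and example ratings are hypothetical. Landis and Koch themselves label the top band "almost perfect" and values below 0 "poor".

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters who scored the same images.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of
    agreement and p_e the agreement expected by chance from the raters'
    marginal category frequencies.
    """
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Counter returns 0 for a category that one rater never used.
    p_e = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


def landis_koch_label(kappa):
    """Agreement band as worded in this study; values falling between the
    published bands (e.g. 0.805) are assigned to the lower band."""
    if kappa < 0:
        return "poor"
    for lower, label in ((0.81, "perfect"), (0.61, "substantial"),
                         (0.41, "moderate"), (0.21, "fair")):
        if kappa >= lower:
            return label
    return "slight"


# Hypothetical category codes (0-5) from a tutor and one pathologist for six images.
tutor = [0, 1, 1, 2, 5, 5]
pathologist = [0, 1, 2, 2, 5, 4]
k = cohen_kappa(tutor, pathologist)
print(f"kappa = {k:.3f} ({landis_koch_label(k)})")  # kappa = 0.586 (moderate)
```

Here the two raters agree on four of six images (p_o = 24/36), chance agreement is p_e = 7/36, and κ = 17/29, well below the raw 67% agreement.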

RESULTS

For the seven staff pathologists, agreement with the tutor across all 39 images in the initial exercise ranged from moderate to substantial (κ values, 0.439–0.708; mean, 0.597; median, 0.608). At the second evaluation, concordance with the tutor varied from fair to substantial (κ values, 0.334–0.699; mean, 0.519; median, 0.479), whereas intraobserver agreement ranged from fair to perfect (κ values, 0.271–0.832; mean, 0.520; median, 0.482). Table 1 shows the individual κ values of the participating pathologists.

Table 1.

Individual κ values for inter/intraobserver reproducibility of 7 participating staff pathologists in both exercises

| Pathologist | Interobserver reproducibility vs tutor, 1st exercise (κ) | Interobserver reproducibility vs tutor, 2nd exercise (κ) | Intraobserver reproducibility (κ) |
|---|---|---|---|
| 1 | 0.697 | 0.699 | 0.832 |
| 2 | 0.493 | 0.633 | 0.482 |
| 3 | 0.708 | 0.479 | 0.405 |
| 4 | 0.687 | 0.443 | 0.438 |
| 5 | 0.608 | 0.664 | 0.682 |
| 6 | 0.439 | 0.379 | 0.527 |
| 7 | 0.548 | 0.334 | 0.271 |
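The ranges, means, and medians quoted in the text can be recomputed from Table 1 with Python's standard `statistics` module; the sketch below also counts how many pathologists' agreement with the tutor fell between the two exercises. The κ values are transcribed from Table 1; the variable names are illustrative only.

```python
from statistics import mean, median

# kappa values transcribed from Table 1 (pathologists 1-7, in order)
table1 = {
    "agreement with tutor, 1st exercise": [0.697, 0.493, 0.708, 0.687, 0.608, 0.439, 0.548],
    "agreement with tutor, 2nd exercise": [0.699, 0.633, 0.479, 0.443, 0.664, 0.379, 0.334],
    "intraobserver": [0.832, 0.482, 0.405, 0.438, 0.682, 0.527, 0.271],
}

for name, kappas in table1.items():
    print(f"{name}: range {min(kappas):.3f} to {max(kappas):.3f}, "
          f"mean {mean(kappas):.3f}, median {median(kappas):.3f}")

# Pathologists whose agreement with the tutor was lower at the second exercise
first = table1["agreement with tutor, 1st exercise"]
second = table1["agreement with tutor, 2nd exercise"]
declined = sum(b < a for a, b in zip(first, second))
print(f"pathologists whose agreement with the tutor fell: {declined}")
```

This reproduces the figures in the text (means 0.597, 0.519, and 0.520; medians 0.608, 0.479, and 0.482) and confirms that four of the seven pathologists had lower κ values in the second exercise.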

When individual images were considered, the largest number of pathologists giving an identical answer for an image ranged from three to all eight in the initial exercise, and from two to all eight in the repeat exercise. Table 2 shows the distribution of pathologists giving identical diagnoses for the 39 images in the initial and repeat evaluations. Of note, more images had unanimous agreement during the second exercise than during the first (10 v six images), mainly because of agreement on the diagnosis of DCIS (fig 1).

Table 2.

The degree of agreement among participating pathologists for individual images used in the initial and repeat classification exercises

| Agreement by pathologists | No. of images in initial exercise (%) | No. of images in repeat exercise (%) |
|---|---|---|
| Complete agreement | 6 (15.4) | 10 (25.6) |
| 7 of 8 pathologists agree | 11 (28.2) | 2 (5.1) |
| 6 of 8 pathologists agree | 8 (20.5) | 10 (25.6) |
| 5 of 8 pathologists agree | 7 (17.9) | 9 (23.1) |
| 4 of 8 pathologists agree | 5 (12.8) | 3 (7.7) |
| 3 of 8 pathologists agree | 2 (5.1) | 3 (7.7) |
| 2 of 8 pathologists agree | 0 (0) | 2 (5.1) |
| Total number of images | 39 (100) | 39 (100) |
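The percentages in Table 2, and the "at least seven of eight" totals cited in the Discussion, follow directly from the image counts. A minimal sketch, with counts transcribed from Table 2 and a hypothetical helper named `summarise`:

```python
# Number of images at each level of agreement (raters giving the most common answer,
# out of 8 including the tutor), transcribed from Table 2
initial = {8: 6, 7: 11, 6: 8, 5: 7, 4: 5, 3: 2, 2: 0}
repeat = {8: 10, 7: 2, 6: 10, 5: 9, 4: 3, 3: 3, 2: 2}


def summarise(counts, n_images=39, threshold=7):
    """Return per-level percentages of images and the number of images on
    which at least `threshold` raters agreed."""
    if sum(counts.values()) != n_images:
        raise ValueError("counts must account for every image")
    percentages = {level: round(100 * n / n_images, 1) for level, n in counts.items()}
    at_least = sum(n for level, n in counts.items() if level >= threshold)
    return percentages, at_least


for name, counts in (("initial", initial), ("repeat", repeat)):
    percentages, at_least = summarise(counts)
    print(name, percentages, "at least 7 of 8 agree:", at_least)
```

The "at least 7 of 8" total comes out as 17 images for the initial exercise and 12 for the repeat exercise.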

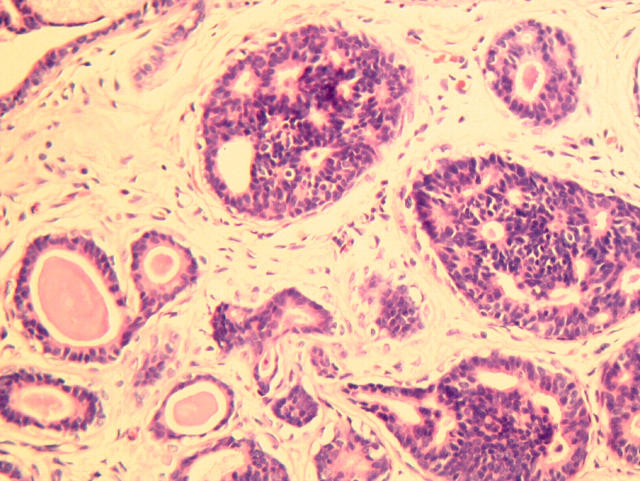

Figure 1.

Ductal carcinoma in situ with columnar cells, featuring cribriform epithelial proliferation with rigid spaces.

Table 3 shows the agreement among pathologists stratified according to the diagnosis of CCL given by AH. The most common answer for each image did not always match the tutor’s diagnosis, although the two agreed in most cases. In the first exercise, the most common diagnosis differed from the tutor’s for four images: one image was diagnosed by the tutor as CCC, whereas three pathologists considered it to show CCC with cytological atypia (fig 2); two images were diagnosed by the tutor as CCC with architectural atypia, but four pathologists thought that they showed CCC (fig 3); and one image was diagnosed by the tutor as CCC with cytological atypia, but the participating pathologists felt that it showed CCH (fig 4). In the second exercise, the number of images for which the most common answer differed from the tutor’s diagnosis increased to six. Three of these were the same images as in the first exercise, whereas the other three were: an image of CCH thought by three pathologists to show CCC with architectural atypia; an image of CCC with architectural atypia regarded as CCH by four pathologists; and an image of CCC with cytological atypia viewed by four pathologists as CCH.

Table 3.

The degree of agreement among participating pathologists for images stratified according to the proffered pathological diagnosis by AH, used in the initial and repeat classification exercises

| Pathological diagnosis of the image (no. of images) | Agreement by pathologists in 1st exercise: no. of images (%) | Agreement by pathologists in 2nd exercise: no. of images (%) |
|---|---|---|
| DCIS (5) | Complete agreement: 1 (20); 7 of 8 agree: 4 (80) | Complete agreement: 5 (100) |
| CCC with cytological atypia (14) | Complete agreement: 2 (14.3); 7 of 8 agree: 2 (14.3); 6 of 8 agree: 4 (28.6); 5 of 8 agree: 4 (28.6); 4 of 8 agree: 2 (14.3) | Complete agreement: 1 (7.1); 6 of 8 agree: 7 (50); 5 of 8 agree: 3 (21.4); 4 of 8 agree: 2 (14.3); 3 of 8 agree: 1 (7.1) |
| CCC with architectural atypia (4) | Complete agreement: 1 (25); 4 of 8 agree: 2 (50); 3 of 8 agree: 1 (25) | 5 of 8 agree: 2 (50); 4 of 8 agree: 1 (25); 3 of 8 agree: 1 (25) |
| Columnar cell hyperplasia (5) | Complete agreement: 1 (20); 7 of 8 agree: 3 (60); 4 of 8 agree: 1 (20) | Complete agreement: 3 (60); 6 of 8 agree: 1 (20); 3 of 8 agree: 1 (20) |
| Columnar cell change (11) | Complete agreement: 1 (9.1); 7 of 8 agree: 2 (18.2); 6 of 8 agree: 4 (36.4); 5 of 8 agree: 3 (27.3); 3 of 8 agree: 1 (9.1) | Complete agreement: 1 (9.1); 7 of 8 agree: 2 (18.2); 6 of 8 agree: 2 (18.2); 5 of 8 agree: 4 (36.4); 2 of 8 agree: 2 (18.2) |

CCC, columnar cell change; DCIS, ductal carcinoma in situ.

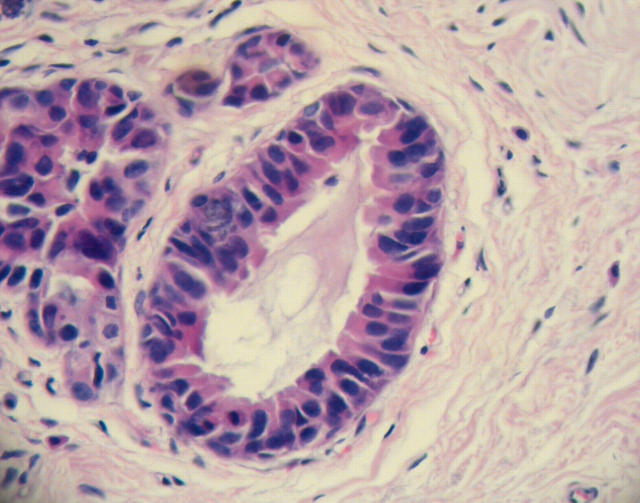

Figure 2.

Columnar cell lesion interpreted by three participating pathologists to be columnar cell lesion with cytological atypia.

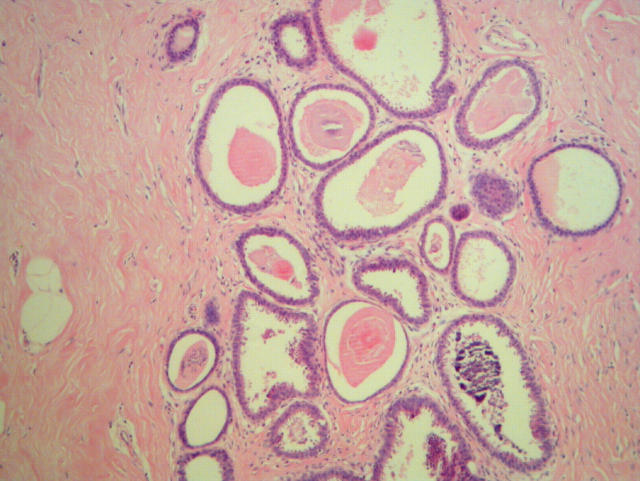

Figure 3.

Columnar cell lesion with architectural atypia (lower field) diagnosed as columnar cell change by four participating pathologists.

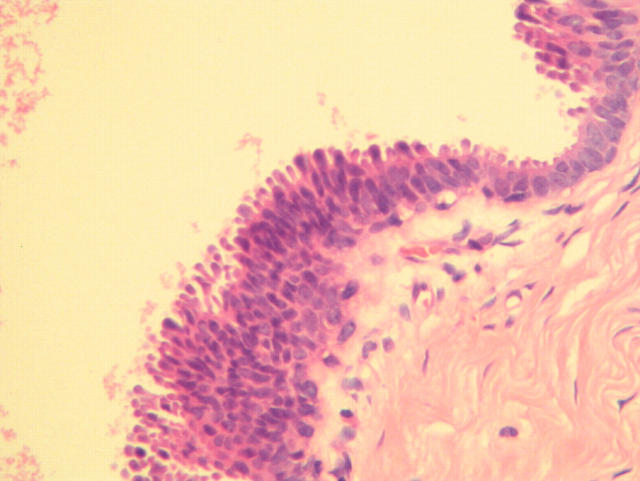

Figure 4.

Image considered by the tutor to be columnar cell change with cytological atypia, although the participating pathologists voted for columnar cell change.

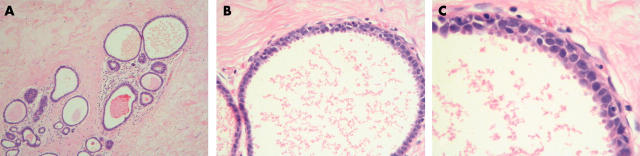

Table 4 shows the sets of repeated images/lesions and, for each exercise, the number of pathologists who did not give the same diagnosis to every image in a set. When the repeated images were identical (same magnification), fewer pathologists gave discordant diagnoses than when the images showed the same lesion at different magnifications. Of note, the greatest individual variation in both exercises was seen for images 9, 10, and 12, which showed the same lesion at different magnifications (fig 5). In the first exercise, three pathologists changed their diagnosis from CCC (image 9) to CCC with cytological atypia (images 10 and 12); one pathologist changed from not CCC/DCIS to CCC with cytological atypia; one pathologist called image 9 not CCC/DCIS but images 10 and 12 CCC; another pathologist moved from not CCC/DCIS to CCC with cytological atypia to CCC with architectural atypia; and the last pathologist moved from CCC to CCH to CCC with cytological atypia. Similarly, in the second exercise, most of the pathologists concluded that image 12 (at high magnification) represented CCC with cytological atypia, whereas cytological atypia was not recognised in the images shown at lower magnification.
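Counting pathologists "with different answers" for a set of repeated images amounts to checking whether each pathologist gave one identical diagnosis to every image in the set. A minimal sketch under that reading; the data structure, the function name `count_discordant`, and the ratings below are hypothetical, not the study data.

```python
def count_discordant(ratings_by_pathologist, image_ids):
    """Number of pathologists who did not give one identical diagnosis to
    every image in a set of repeated images/lesions.

    ratings_by_pathologist maps a pathologist label to {image number: category code}.
    """
    return sum(
        len({ratings[i] for i in image_ids}) > 1
        for ratings in ratings_by_pathologist.values()
    )


# Hypothetical diagnoses for the repeated lesion shown as images 9, 10 and 12,
# coded as in the study (0 not CCC/DCIS, 1 CCC, 4 CCC with cytological atypia).
repeat_ratings = {
    "P1": {9: 1, 10: 4, 12: 4},
    "P2": {9: 4, 10: 4, 12: 4},
    "P3": {9: 0, 10: 1, 12: 1},
}
print(count_discordant(repeat_ratings, [9, 10, 12]))  # 2
```

Restricting the set to the higher-magnification images only ([10, 12]) gives 0 for these hypothetical ratings, mirroring the observation that disagreement arose mainly between low and higher magnification views.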

Table 4.

Repeated images/lesions, their proffered diagnoses by AH, and the intraobserver agreement of pathologists in both exercises

| Repeated images (according to sequence of appearance in the set) | No. of pathologists with different answers in the 1st exercise | No. of pathologists with different answers in the 2nd exercise | Comments |
|---|---|---|---|
| Images 1 and 13 (CCC) | 2 (CCC to CCC with cytol atypia; CCC to CCH) | 2 (CCH to CCC with cytol atypia; CCC to CCH) | Low and high power magnification of the same lesion |
| Images 4, 15, and 26 (DCIS) | 1 (DCIS to CCC with cytol atypia) | 0 | Identical images |
| Images 5 and 11 (CCC) | 2 (not CCC/DCIS to CCC; not CCC/DCIS to CCC with arch atypia) | 0 | Low and high power magnification of the same lesion |
| Images 9, 10, and 12 (CCC with arch atypia for image 9; CCC with cytol atypia for images 10 and 12) | 7 (see text) | 5 (see text) | Low, medium, and high power magnification of the same lesion |
| Images 19 and 36 (CCH) | 1 (CCH to CCC with cytol atypia) | 0 | Identical images |
| Images 25 and 32 (CCC) | 0 | 3 (CCC to CCC with cytol atypia; CCH to CCC with cytol atypia; not CCC/DCIS to CCC) | Identical images |
| Images 34 and 35 (DCIS) | 0 | 0 | Low and high power magnification |

arch, architectural; CCC, columnar cell change; CCH, columnar cell hyperplasia; cytol, cytological; DCIS, ductal carcinoma in situ.

Figure 5.

(A) Low power image of a columnar cell lesion, with dilated ducts lined by columnar cells (image 9). (B) Medium power image of the same lesion (image 10). (C) High power image in which the cytological atypia was discerned by most of the participating pathologists (image 12).

DISCUSSION

Reproducibility studies are useful for determining the degree of agreement among pathologists in morphological diagnosis and for assessing whether the histological criteria of a classification scheme can be applied universally. The grading of invasive breast cancer,6–11 the evaluation of different DCIS classifications,12–16 and diagnostic agreement between general and expert breast pathologists in core biopsy interpretation17,18 and among community based surgical pathologists19 have been the subject of several published reports. These studies have provided insight into problem areas in diagnostic breast pathology and identified aspects of classification schemes that require further refinement, particularly the need for objective histological criteria that improve reproducibility and correlation with clinical outcome.

Rosai in 1991 defined borderline epithelial lesions of the breast as “a type of proliferative process placed somewhere between the usual type of hyperplasia and carcinoma in situ, both in terms of morphological features and propensity for the development of invasive carcinoma”, and found an “unacceptably high” degree of interobserver variability among a group of experienced pathologists in the interpretation of such lesions.20 Although most CCLs do not fall into the “borderline” category, those with cytological atypia, also referred to as flat epithelial atypia,21 present similar diagnostic problems, and it has been suggested that they may be either precursors of DCIS or its earliest morphological manifestation. It has also been recommended that the identification of CCL with cytological atypia on core biopsy should lead to open excision, because a more advanced lesion is found in about a third of cases.21

Therefore, it is of paramount importance that practising surgical pathologists who interpret breast specimens recognise the spectrum of CCL, and specifically CCL with cytological atypia, so that proper management can be instituted. This is particularly relevant for Singapore, because we have a National Breast Screening Programme (BreastScreen Singapore) and a proportion of core biopsies carried out for mammographic calcifications contain CCL.

In our study, interobserver agreement with the tutor’s diagnoses immediately after a didactic session on CCL varied from moderate to substantial, indicating that an acceptable degree of agreement can be achieved through an educational session incorporating digitised images. We believe that the results of this first assessment provide an unbiased reflection of interobserver variability, because all answers were reached independently by the participating pathologists, and each image was presented for the same length of time. In the second exercise, interobserver agreement ranged from fair to substantial, and the κ values of four of the seven pathologists were lower than in the first exercise, suggesting that histological criteria are recalled, and diagnoses reproduced, better when the criteria have just been expounded. These results also imply that recurrent educational sessions may be needed to maintain reproducible diagnoses, particularly for the relatively new and emerging entity of CCL. Intraobserver agreement varied from fair to perfect, which may also reflect less consistent application of histological criteria as the time since the educational session increases.

When we assessed the degree of agreement among all pathologists for individual images, there was complete diagnostic agreement for only six of the 39 images in the first exercise, rising to 10 in the second exercise. This improvement was mainly because all pathologists agreed on the DCIS images in the second exercise, suggesting that at the extreme end of the spectrum, where the impact on management is greatest, pathologists learn and retain diagnostic criteria more effectively, especially after a post-exercise discussion. Alternatively, the severe cytological atypia and characteristic architectural abnormalities of these images may have been more readily appreciated once they had been specifically pointed out during the discussion after the first exercise. Overall, however, agreement among pathologists decreased in the second exercise: at least seven of the eight pathologists agreed on 17 images in the first exercise but on only 12 in the second. In fact, in the second exercise, there were two images for which the most common answer was given by only two pathologists.


When images were stratified according to the type of CCL, complete agreement on individual images was least frequent in the categories of CCC with cytological atypia and CCC in both exercises (although in the second exercise none of the four images of CCC with architectural atypia achieved complete agreement). This underscores that the assessment of cytological atypia is subjective, and that the threshold between pure CCC and CCC with cytological atypia is difficult to delineate. A concerted effort should be made to define what constitutes cytological atypia in CCL in a semiquantitative manner, similar to the way that nuclear grading of DCIS is taught, applied,22,23 and validated,24 so that CCL with cytological atypia can be diagnosed more reproducibly and consistently, especially because this lesion has management implications when found on breast core biopsies. Interestingly, among the images/lesions that were repeated during the sequential presentation of all 39 images, the greatest intraobserver variation occurred when the same lesion was presented at different magnifications, with cytological atypia being noticed only at medium to high magnification. This corroborates the opinion expressed in a review by Schnitt that cytological atypia in these lesions becomes evident only at high magnification,21 so that no CCL should be given only a cursory view: all should be examined at high magnification.

Take home messages.

- We assessed inter/intraobserver variability in the interpretation by seven staff pathologists of a series of digitised images of columnar cell lesions (CCL) of the breast after a tutorial on breast CCL.
- Interobserver and intraobserver agreement was good for ductal carcinoma in situ, but more effort is needed to improve diagnostic consistency in the category of columnar cell changes with cytological atypia.
- Continued awareness and study of these lesions are necessary to enhance recognition and understanding.

The limitations of our study include the use of digitised images instead of histological sections on glass slides, which reflect routine practice. However, it can be argued that, for teaching, focusing on a specific image may be advantageous to the learning process; even in past studies using circulated glass slides, an area of interest was circled or marked on the slide for participating pathologists.12,13,20 Other potentially contentious issues that may have affected our findings are: the use of the same series of images for the second evaluation (so that the inter/intraobserver reproducibility results of the second exercise may reflect memory rather than true assimilation of the criteria learnt); the absence of time limits in the second evaluation (pathologists could review the images, and so may have compared them and realised that repeated images/lesions were interspersed within the series); an unequal number of images in each category; and differing levels of interest in breast pathology and of motivation among participating pathologists.

Nevertheless, our study shows that moderate to substantial interobserver reproducibility for breast CCL can be achieved after a tutorial, although follow up educational sessions are recommended to maintain satisfactory consistency in the evaluation of these lesions. At the DCIS end of the spectrum, complete agreement can be achieved, as it was for all five DCIS images in the second exercise. The category that requires more attention in terms of refining diagnostic criteria is CCL with cytological atypia, for which more objective guidelines on what constitutes cytological atypia are needed. Closer scrutiny of all CCLs at medium to high magnification is necessary to recognise any accompanying cytological atypia that may have implications for management. This difficulty is similar to that seen in studies of reproducibility in the diagnosis of atypical ductal hyperplasia, which, unfortunately, has remained refractory to efforts to improve diagnostic consistency.25 Indeed, the most recent (as yet unpublished) version of the UK breast screening pathology guidelines recommends that CCL with atypia should be categorised as equivalent to atypical ductal hyperplasia, that is, B3.

In conclusion, further reproducibility and follow up studies will assist in arriving at a clinically relevant consensus classification for CCL of the breast. Nuclear morphometry can help define thresholds of nuclear atypia, and molecular studies on microdissected lesions may provide important biological clues beyond morphology. From a practical standpoint, multiple step sections and consultation with a breast pathologist may be prudent when there is uncertainty about the presence or otherwise of cytological atypia in CCL, particularly in core biopsies.

Acknowledgments

We thank the staff pathologists who participated in both exercises.

Abbreviations

CCC, columnar cell changes

CCH, columnar cell hyperplasia

CCL, columnar cell lesion

DCIS, ductal carcinoma in situ

REFERENCES

- 1. Schnitt SJ, Vincent-Salomon A. Columnar cell lesions of the breast. Adv Anat Pathol 2003;10:113–24.

- 2. Fraser JL, Raza S, Chorny K, et al. Columnar alteration with prominent apical snouts and secretions: a spectrum of changes frequently present in breast biopsies performed for microcalcifications. Am J Surg Pathol 1998;22:1521–7.

- 3. Koerner FC, Oyama T, Maluf H. Morphological observations regarding the origins of atypical cystic lobules (low-grade clinging carcinoma of flat type). Virchows Arch 2001;439:523–30.

- 4. Oyama T, Maluf H, Koerner F. Atypical cystic lobules: an early stage in the formation of low-grade ductal carcinoma in situ. Virchows Arch 1999;435:413–21.

- 5. Landis JR, Koch GG. The measurement of observed agreement for categorical data. Biometrics 1977;33:159–74.

- 6. Frierson HF, Wolber RA, Berean KW, et al. Interobserver reproducibility of the Nottingham modification of the Bloom and Richardson histologic grading scheme for infiltrating ductal carcinoma. Am J Clin Pathol 1995;103:195–8.

- 7. Theissig F, Kunze KD, Haroske G, et al. Histological grading of breast cancer: interobserver reproducibility and prognostic significance. Pathol Res Pract 1990;186:732–6.

- 8. Harvey JM, de Klerk NH, Sterrett GF. Histological grading in breast cancer: interobserver agreement and relation to other prognostic factors including ploidy. Pathology 1992;24:63–8.

- 9. Hopton DS, Thorogood J, Clayden AD, et al. Observer variation in histological grading of breast cancer. Eur J Surg Oncol 1989;15:21–3.

- 10. Robbins P, Pinder S, de Klerk N, et al. Histological grading of breast carcinomas: a study of interobserver agreement. Hum Pathol 1995;26:873–9.

- 11. Delides GS, Garas G, Georgouli G, et al. Interlaboratory variations in the grading of breast carcinoma. Arch Pathol Lab Med 1982;106:126–8.

- 12. Bethwaite P, Smith N, Delahunt B, et al. Reproducibility of new classification schemes for the pathology of ductal carcinoma in situ of the breast. J Clin Pathol 1998;51:450–4.

- 13. Sneige N, Lagios MD, Schwarting R, et al. Interobserver reproducibility of the Lagios nuclear grading system for ductal carcinoma in situ. Hum Pathol 1999;30:257–62.

- 14. Scott MA, Lagios MD, Axelsson K, et al. Ductal carcinoma in situ of the breast: reproducibility of histologic subtype analysis. Hum Pathol 1997;28:967–73.

- 15. Wells WA, Carney PA, Eliassen MS, et al. Pathologists’ agreement with experts and reproducibility of breast ductal carcinoma-in-situ classification schemes. Am J Surg Pathol 2000;24:651–9.

- 16. Sloane JP, Amendoeira I, Apostolikas N, et al. Consistency achieved by 23 European pathologists in categorizing ductal carcinoma in situ of the breast using five classifications. European Commission working group of breast screening pathology. Hum Pathol 1998;29:1056–62.

- 17. Collins LC, Connolly JL, Page DL, et al. Diagnostic agreement in the evaluation of image-guided breast core needle biopsies. Results from a randomized clinical trial. Am J Surg Pathol 2004;28:126–31.

- 18. Verkooijen HM, Peterse JL, Schipper MEI, et al. Interobserver variability between general and expert pathologists during the histopathological assessment of large-core needle and open biopsies on non-palpable breast lesions. Eur J Cancer 2003;39:2187–91.

- 19. Wells WA, Carney PA, Eliassen MS, et al. Statewide study of diagnostic agreement in breast pathology. J Natl Cancer Inst 1998;90:142–5.

- 20. Rosai J. Borderline epithelial lesions of the breast. Am J Surg Pathol 1991;15:209–21.

- 21. Schnitt SJ. The diagnosis and management of pre-invasive breast disease: flat epithelial atypia—classification, pathologic features and clinical significance. Breast Cancer Res 2003;5:263–8.

- 22. Sloane JP, Anderson TJ, Blamey RW, et al. Classifying epithelial proliferation. In: Pathology reporting in breast cancer screening. 2nd ed. National coordinating group for breast screening pathology. Sheffield, UK: NHSBSP publication no. 3, 1995:18–22.

- 23. Schwartz GF, Lagios MD, Carter D, et al. Consensus conference on the classification of ductal carcinoma in situ. The consensus conference committee. Cancer 1997;80:1798–802.

- 24. Tan PH, Goh BB, Chiang G, et al. Correlation of nuclear morphometry with pathologic parameters of ductal carcinoma in situ of the breast. Mod Pathol 2001;14:937–41.

- 25. Elston CW, Sloane JP, Amendoeira I, et al. Causes of inconsistency in diagnosing and classifying intraductal proliferations of the breast. European Commission working group on breast screening pathology. Eur J Cancer 2000;36:1769–72.