Abstract

Whole-head magnetoencephalography was employed in 40 normal subjects to investigate whether the basic functional organization of the auditory cortex varies with linguistic environment. Robust activations of the bilateral supratemporal auditory cortices to 1-kHz pure tones, maximum at about 100 ms after stimulus onset, were studied in Finnish and German female and male subject groups with a monolingual background. Activations elicited by the tones did not differ between German and Finnish women. In contrast, German men showed significantly stronger auditory responses to pure tones in the left, language-dominant hemisphere than Finnish men. We discuss the possibility that the prominent left-hemisphere activation in German males reflects the higher frequency resolution required to distinguish German vowels than Finnish vowels, and that the clear effect of native language in the male but not in the female auditory cortex derives from more pronounced functional lateralization in men. The present data suggest that the influence of native language can extend to auditory cortical processing of pure-tone stimuli with no linguistic content and that this effect is conspicuous in the male brain.

Cortical networks undergo extensive changes in early life (1), affected strongly by sensory experience (2, 3). Considering the unparalleled role speech has in human communication, and the large variance in acoustic properties of the different languages, early linguistic experience may affect the functional organization of the human auditory cortex. Humans certainly become selectively sensitive to the phonemes of their native language (4). We tested whether differences in native language are reflected in cortical processing of simple, nonverbal auditory input in the adult human brain.

We used whole-head magnetoencephalography (MEG) to assess noninvasively activation strengths and timing in the left and right auditory cortices. Two identical MEG systems, one in Finland and the other in Germany, were calibrated to allow direct quantitative comparison of the subjects’ activation patterns. Because of the existing evidence for sex differences in brain responses to auditory (5, 6) and linguistic (7) stimuli, both female and male subjects were included in the study.

Generally, the primary auditory cortex in Heschl’s gyrus (HG) is known to respond within 20–30 ms after tone onset (8–10), but the strongest, most robust, and most reproducible auditory activation observed with electromagnetic imaging techniques peaks about 100 ms after stimulus onset (N100) (11, 12). The source location of the magnetic N100m, about 1 cm posterior to the sources of the earliest responses, suggests contribution of both primary and associative auditory cortices (13). Both MEG (14) and intracranial recordings (15) have implied participation of the adjacent planum temporale (PT) in generation of the N100 response.

Our study revealed that the left-hemisphere N100m response to pure tones was significantly stronger in German than Finnish males. The auditory activations in Finnish and German females did not differ from each other. In the right hemisphere, males showed stronger N100m responses than females, regardless of native language. Functional organization of the left auditory cortex thus appears to vary with native language in men.

MATERIALS AND METHODS

Ten Finnish females (21–37 years), 10 Finnish males (23–37 years), 10 German females (18–38 years), and 10 German males (25–40 years) participated in the study. The subjects were right-handed university students or graduates, with no neurological or hearing problems. All subjects had a fully monolingual background in at least two generations.

The stimuli were monaural 50-ms 1-kHz tones (15-ms rise/fall time), presented 70 dB above the individually determined hearing threshold. The ipsi- and contralateral pathways to each hemisphere were probed by delivering the tones alternately to the left and right ear, at a randomized interstimulus interval of 0.8–1.2 s. To maintain stable measurement conditions across subjects, the responses to tones were recorded while the subjects were (i) silently reading Stephen Hawking’s A Brief History of Time in their native language and (ii) studying perceptually challenging drawings by M. C. Escher. The order of the tasks was randomized across subjects. The magnetic fields, which signal cortical current flow, were averaged over about 100 tones in each task, separately for left- and right-ear stimulation. The measurement (reading condition) was repeated at least once in three subjects, and the effect of small intensity variations (70 ± 6 dB) was also tested in three subjects.
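The stimulation protocol above can be sketched as follows. This is a minimal illustration, not the authors’ stimulus software: the 44.1-kHz audio sampling rate, the linear shape of the 15-ms ramps, the uniform distribution of the 0.8–1.2-s intervals, and treating the interstimulus interval as onset-to-onset are all assumptions, and the helper names `make_tone` and `make_schedule` are hypothetical.

```python
import math
import random

FS = 44_100        # audio sampling rate in Hz (assumed; not given in the text)
TONE_HZ = 1_000    # 1-kHz pure tone
DUR_S = 0.050      # 50-ms tone duration
RAMP_S = 0.015     # 15-ms rise and fall times


def make_tone(fs=FS):
    """Return one 50-ms, 1-kHz tone with (assumed) linear 15-ms ramps.

    Peak amplitude is normalized to 1; the presentation level (70 dB above
    the individual hearing threshold) has to be set by calibration.
    """
    n = round(DUR_S * fs)
    n_ramp = round(RAMP_S * fs)
    samples = []
    for i in range(n):
        # Envelope rises linearly over n_ramp samples, plateaus, then falls.
        env = min(1.0, i / n_ramp, (n - 1 - i) / n_ramp)
        samples.append(env * math.sin(2 * math.pi * TONE_HZ * i / fs))
    return samples


def make_schedule(n_per_ear=100, isi_range=(0.8, 1.2), seed=None):
    """Alternate left/right ear with a randomized onset-to-onset interval.

    Returns a list of (onset_time_s, ear) tuples; intervals are drawn
    uniformly from isi_range (uniform distribution is an assumption).
    """
    rng = random.Random(seed)
    t = 0.0
    schedule = []
    for k in range(2 * n_per_ear):
        ear = "left" if k % 2 == 0 else "right"
        schedule.append((t, ear))
        t += rng.uniform(*isi_range)
    return schedule


if __name__ == "__main__":
    tone = make_tone()
    schedule = make_schedule(seed=1)
    print(len(tone), "samples per tone;", len(schedule), "tones per task")
```

With about 100 tones per ear per task, the two tasks together give roughly 200 presentations per ear, which is the pooled average mentioned below.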

In auditory experiments, subjects are commonly asked to read a text to stabilize their attentional level and to prevent them from, e.g., counting the stimuli. We also included a nonverbal task (pictures) because silent reading of the text possibly could affect auditory processing; cortical structures in the superior temporal gyrus, bordering the left auditory cortex, recently have been reported to be involved in sentence comprehension (16). If silent reading interferes with auditory processing, picture viewing could serve as a nonverbal baseline for comparison. On the other hand, if the nature of the stabilizing task does not affect the auditory responses, the two tasks would provide confirming replications of the cortical activation patterns. The responses in the two conditions then could be pooled in each individual, resulting in an enhanced signal-to-noise ratio (averages over about 200 tone presentations).

The auditory cortical responses were recorded with Neuromag-122 whole-head magnetometers (17) in Helsinki (Finnish subjects) and in Düsseldorf (German subjects). The same person tested and calibrated both neuromagnetometers, using artificial current dipoles placed particularly at locations and orientations typical of N100m sources, and calibrated the auditory stimuli at both sites with the same sound-level meter. To further verify the compatibility of the data from the two sites, three subjects (two males, one female) were studied both in Helsinki and in Düsseldorf. The MEG signals were low-pass filtered at 130 Hz and digitized at 0.4 kHz (i.e., with a sampling interval of 2.5 ms). Horizontal and vertical electrooculograms were recorded simultaneously for on-line rejection of epochs contaminated by eye and eyelid movements. MEG signals reflect synchronous postsynaptic activation in tens of thousands of parallel apical dendrites of pyramidal cells within a few square centimeters of cortex (18). MEG is most sensitive to electric currents flowing parallel to the skull, i.e., to fissural activation.

The auditory N100m response was modeled with an equivalent current dipole (ECD) (18). With this model, the center of the active cortical area and the mean direction and total strength of current flow therein can be estimated from the magnetic field pattern. From the dipolar field patterns elicited by auditory stimulation at about 100 ms after stimulus onset, the sources were typically localized with an accuracy better than 5 mm (95% confidence limit). The sources of the auditory N100m responses, low-pass filtered at 40 Hz, were determined in each individual subject from subsets of 22 sensors over the lateral parts of both hemispheres. Thereafter, the locations and orientations of the two ECDs, one in each hemisphere, were kept fixed while their amplitudes (i.e., source strengths) were allowed to vary to best explain the field pattern recorded by all 122 sensors. The two sources explained, on average, 95% (range 70–99% across subjects) of the MEG signals within the 70- to 130-ms time window after tone onset. The onset and peak latencies and the peak amplitude were measured from each individual source waveform and subjected to statistical analysis. To be accepted as significant, differences in source strength were required to exceed 5 nanoampere-meters (nAm) (prestimulus baseline variance was less than 2 nAm), and differences in latency were required to exceed 5 ms (two sampling intervals).
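With the dipole locations and orientations held fixed, estimating the two source strengths is a linear least-squares problem: the field at each sensor is modeled as a weighted sum of the fields produced by the two unit dipoles. The sketch below assumes those forward-model values (the field at each sensor per 1 nAm of each dipole, here called `lead_field`) are already available; the six-sensor numbers in the usage example are made up, and `fit_fixed_dipoles` is a hypothetical helper, not the analysis software actually used. Goodness of fit is taken here as the fraction of the field’s sum of squares explained by the two sources, one common way to express the explained-signal percentage quoted above. The constants restate the acceptance criteria: at 0.4-kHz sampling, 5 ms equals two 2.5-ms sampling intervals.

```python
SAMPLING_RATE_HZ = 400.0                        # 0.4-kHz digitization
SAMPLE_INTERVAL_MS = 1000.0 / SAMPLING_RATE_HZ  # 2.5 ms
MIN_STRENGTH_DIFF_NAM = 5.0                     # strength differences must exceed this
MIN_LATENCY_DIFF_MS = 2 * SAMPLE_INTERVAL_MS    # 5 ms = two sampling intervals


def fit_fixed_dipoles(lead_field, field):
    """Least-squares strengths (nAm) of two fixed dipoles at one time point.

    lead_field: list of (g_left, g_right) per sensor, the field produced at
        that sensor by a 1-nAm dipole at each fixed location/orientation.
    field: measured field at each sensor (same units as lead_field * nAm).
    Returns (q_left, q_right, goodness_of_fit). Repeating this for every
    time sample yields the two source waveforms.
    """
    # Normal equations (L^T L) q = L^T b for a two-column matrix L.
    a11 = sum(g1 * g1 for g1, _ in lead_field)
    a12 = sum(g1 * g2 for g1, g2 in lead_field)
    a22 = sum(g2 * g2 for _, g2 in lead_field)
    r1 = sum(g1 * b for (g1, _), b in zip(lead_field, field))
    r2 = sum(g2 * b for (_, g2), b in zip(lead_field, field))
    det = a11 * a22 - a12 * a12
    q1 = (a22 * r1 - a12 * r2) / det
    q2 = (a11 * r2 - a12 * r1) / det
    # Fraction of the measured field's sum of squares explained by the model.
    residual = sum((b - g1 * q1 - g2 * q2) ** 2
                   for (g1, g2), b in zip(lead_field, field))
    total = sum(b * b for b in field)
    return q1, q2, 1.0 - residual / total


def strength_difference_accepted(a_nam, b_nam):
    return abs(a_nam - b_nam) > MIN_STRENGTH_DIFF_NAM


def latency_difference_accepted(a_ms, b_ms):
    return abs(a_ms - b_ms) > MIN_LATENCY_DIFF_MS


if __name__ == "__main__":
    # Hypothetical lead field for six sensors (left dipole, right dipole).
    lead_field = [(1.0, 0.1), (0.8, 0.2), (0.5, 0.5),
                  (0.2, 0.9), (0.1, 1.0), (0.3, 0.4)]
    field = [g1 * 30.0 + g2 * 45.0 for g1, g2 in lead_field]
    q_left, q_right, gof = fit_fixed_dipoles(lead_field, field)
    print(f"left {q_left:.1f} nAm, right {q_right:.1f} nAm, fit {gof:.0%}")
```

Solving the 2 × 2 normal equations directly is adequate for two well-separated sources; with many or nearly collinear sources a numerically stabler solver would be preferable.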

The location of the sources is defined in head coordinates, set by clearly identifiable fiducial points in front of the ear canals (x axis, from left to right) and by the nasion (positive y axis); the z axis is oriented toward the vertex. The position of the head within the magnetometer was found by attaching three small coils on the subject’s head, measuring their location in the head coordinate system with the help of a three-dimensional digitizer, and energizing them briefly to obtain their locations in the magnetometer coordinate system. Finally, the MEG and MRI coordinate frames were aligned by marking the fiducial points in the MR images.

RESULTS

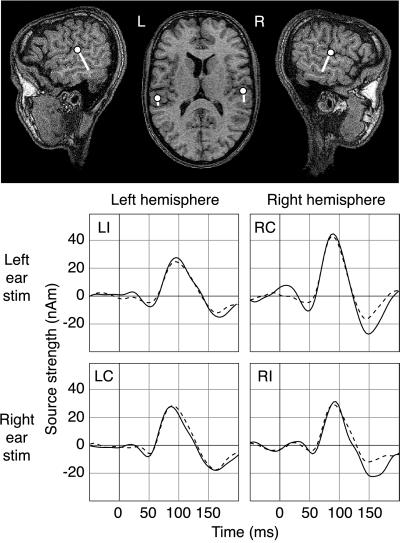

Fig. 1 shows the bilateral supratemporal regions activated by the monaural 50-ms 1-kHz tones in one male Finn. The time courses of activation during reading and viewing pictures are superimposed. The ipsilateral responses in each hemisphere (left-hemisphere response to left-ear stimulation, LI; right-hemisphere response to right-ear stimulation, RI) are depicted on the diagonal, and the contralateral responses (left-hemisphere response to right-ear stimulation, LC; right-hemisphere response to left-ear stimulation, RC) off the diagonal.

Figure 1.

Cortical areas activated by tones and their activation strengths as a function of time in one male Finnish subject. (Upper) The center of the active area (dot) and the direction of current flow (tail) superimposed on the subject’s MR image. (Lower) Time course of activation of the left (Left) and right auditory cortex (Right) to stimulation of the left (Upper) and right ear (Lower), when the subject was reading (solid line) or studying pictures (dashed line). The source strengths are expressed in nanoamperemeters. LI and LC indicate left-hemisphere responses to stimulation of the ipsi- (left) and contralateral (right) ear, respectively. RI and RC denote the right-hemisphere responses.

An ANOVA was performed with sex (female vs. male) and native language (Finnish vs. German) as between-subjects factors and task (text vs. pictures), hemisphere (left vs. right), and stimulated ear (left vs. right) as within-subjects factors. The task (verbal vs. nonverbal) had no significant effect, and the subsequent analysis was therefore performed on source waveforms averaged over the two tasks in each individual (cf. Fig. 1). Table 1 summarizes the mean (±SEM) source strengths and latencies in the four subject groups.

Table 1.

N100m source strengths and latencies (mean ± SEM) in Finnish (Fin) and German (Ger) females (F) and males (M)

| Group | LC* | LI* | RC† | RI† | IHB‡ | ΔLE§ | ΔRE¶ |
|---|---|---|---|---|---|---|---|
| Strength, nAm | | | | | | | |
| Fin F | 29 ± 5 | 19 ± 4 | 38 ± 5 | 24 ± 4 | 1.0 ± 0.2 | | |
| Fin M | 28 ± 6 | 19 ± 4 | 51 ± 6 | 35 ± 4 | 1.1 ± 0.2 | | |
| Ger F | 29 ± 5 | 20 ± 3 | 40 ± 4 | 29 ± 3 | 1.1 ± 0.1 | | |
| Ger M | 53 ± 7 | 40 ± 6 | 49 ± 6 | 38 ± 6 | 1.1 ± 0.1 | | |
| Onset latency, ms | | | | | | | |
| Fin F | 54 ± 3 | 68 ± 3 | 48 ± 2 | 62 ± 3 | | 19 ± 3 | 8 ± 3 |
| Fin M | 57 ± 2 | 62 ± 2 | 48 ± 2 | 56 ± 3 | | 15 ± 3 | −1 ± 2 |
| Ger F | 57 ± 3 | 66 ± 2 | 54 ± 2 | 66 ± 2 | | 13 ± 1 | 9 ± 4 |
| Ger M | 51 ± 3 | 63 ± 2 | 41 ± 2 | 55 ± 3 | | 22 ± 2 | 4 ± 4 |
| Peak latency, ms | | | | | | | |
| Fin F | 86 ± 2 | 95 ± 2 | 84 ± 1 | 93 ± 2 | | 11 ± 2 | 7 ± 2 |
| Fin M | 84 ± 2 | 89 ± 3 | 82 ± 2 | 92 ± 2 | | 9 ± 4 | 9 ± 3 |
| Ger F | 85 ± 4 | 98 ± 3 | 84 ± 2 | 95 ± 2 | | 14 ± 3 | 10 ± 4 |
| Ger M | 87 ± 2 | 95 ± 2 | 86 ± 3 | 96 ± 2 | | 10 ± 2 | 9 ± 2 |

*LC/LI, left-hemisphere response to contra-/ipsilateral stimulation. When the N100m response was preceded by a deflection of opposite polarity, the onset latency was measured at the zero crossing of the ascending side. The LI onset and peak latencies (in two Finnish males) and the LC onset and peak latencies (in one Finnish female) could not be obtained because the response did not exceed baseline variation (source strength set to 0).

†RC/RI, right-hemisphere response to contra-/ipsilateral stimulation.

‡Interhemispheric balance of activation, IHB = (LC/LI)/(RC/RI).

§Ipsi- vs. contralateral latency difference for left-ear stimulation, ΔLE = LI − RC.

¶Ipsi- vs. contralateral latency difference for right-ear stimulation, ΔRE = RI − LC.
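The derived columns of Table 1 follow from the footnote definitions: IHB = (LC/LI)/(RC/RI), ΔLE = LI − RC, and ΔRE = RI − LC. The sketch below applies them to the tabulated group means. Because the published values were computed per subject and then averaged, the results here match them only approximately (within about 0.1 for IHB and 2 ms for the latency differences); averaging the onset ΔRE over the four groups reproduces the 5-ms left-to-right delay quoted in the Results. The dictionary layout is an arbitrary choice for illustration.

```python
# Table 1 group means, each tuple ordered (LC, LI, RC, RI).
TABLE1 = {
    "Fin F": {"strength": (29, 19, 38, 24), "onset": (54, 68, 48, 62), "peak": (86, 95, 84, 93)},
    "Fin M": {"strength": (28, 19, 51, 35), "onset": (57, 62, 48, 56), "peak": (84, 89, 82, 92)},
    "Ger F": {"strength": (29, 20, 40, 29), "onset": (57, 66, 54, 66), "peak": (85, 98, 84, 95)},
    "Ger M": {"strength": (53, 40, 49, 38), "onset": (51, 63, 41, 55), "peak": (87, 95, 86, 96)},
}


def ihb(lc, li, rc, ri):
    """Interhemispheric balance, IHB = (LC/LI)/(RC/RI)."""
    return (lc / li) / (rc / ri)


def delta_le(lc, li, rc, ri):
    """Left-ear stimulation: left (ipsi) minus right (contra) hemisphere latency."""
    return li - rc


def delta_re(lc, li, rc, ri):
    """Right-ear stimulation: right (ipsi) minus left (contra) hemisphere latency."""
    return ri - lc


if __name__ == "__main__":
    for group, row in TABLE1.items():
        print(group,
              f"IHB={ihb(*row['strength']):.2f}",
              f"onset dLE={delta_le(*row['onset'])} ms, dRE={delta_re(*row['onset'])} ms",
              f"peak dLE={delta_le(*row['peak'])} ms, dRE={delta_re(*row['peak'])} ms")
    mean_re = sum(delta_re(*r["onset"]) for r in TABLE1.values()) / len(TABLE1)
    print(f"mean onset dRE across groups: {mean_re:.1f} ms")
```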

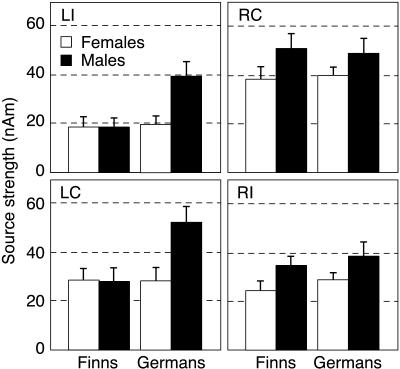

Fig. 2 shows the N100m source strengths in German and Finnish female and male subjects. The responses in males exceeded those in females in both hemispheres, as reflected in a significant sex effect [F(1, 36) = 6.2, P < 0.02] and a nonsignificant sex-by-hemisphere interaction [F(1, 36) = 0.007, P = 0.93]. However, as indicated by a highly significant language-by-sex-by-hemisphere interaction [F(1, 36) = 9.3, P < 0.005], the responses of Finnish and German females did not differ from each other, whereas German males showed stronger left-hemisphere activation than Finnish males. The N100m was equally strong or stronger in the left than in the right hemisphere in 8 of 10 German males, and stronger in the right than in the left hemisphere in 9 of 10 Finnish males. Thus, a pure sex effect was evident in the right hemisphere, whereas an additional native-language effect was observed in the left hemisphere.
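Each F statistic above has one numerator degree of freedom, so its P value can be checked through the identity F(1, ν) = t(ν)², i.e., P(F > f) = P(|T| > √f) for Student’s t with ν = 36 error degrees of freedom (40 subjects minus the four sex-by-language groups). The pure-Python sketch below integrates the t density numerically with Simpson’s rule; if SciPy is available, `scipy.stats.f.sf(f, 1, 36)` returns the same quantity. It only re-derives P values from the reported statistics and is not a reanalysis of the data; `p_from_f1` is a hypothetical helper name.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def p_from_f1(f_value, df_error, n_steps=4000):
    """P value for an F(1, df_error) statistic via F = t**2.

    P(0 < T < sqrt(F)) is integrated with composite Simpson's rule
    (n_steps must be even); the two-tailed p is 1 - 2 * that probability.
    """
    t = math.sqrt(f_value)
    h = t / n_steps
    total = t_pdf(0.0, df_error) + t_pdf(t, df_error)
    for i in range(1, n_steps):
        weight = 4 if i % 2 else 2
        total += weight * t_pdf(i * h, df_error)
    central = total * h / 3
    return 1.0 - 2.0 * central


if __name__ == "__main__":
    for label, f in [("sex", 6.2), ("sex x hemisphere", 0.007),
                     ("language x sex x hemisphere", 9.3)]:
        print(f"{label}: F(1, 36) = {f}, p = {p_from_f1(f, 36):.4f}")
```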

Figure 2.

N100m source strengths in the four subject groups. Mean ± SEM values are displayed in the same layout as in Fig. 1. The female (open bars) and male subjects (solid bars) are grouped by native language.

Hearing thresholds varied by ±5 dB (SD) across subjects, with no systematic sex differences, and by ±3 dB in the same subject across repetitions (measured in three subjects). The N100m source strengths, typically 20–50 nAm (cf. Table 1 and Figs. 1 and 2), showed a within-subject variation of about 3 nAm. Varying the intensity by ±6 dB around 70 dB above hearing threshold changed the N100m strengths by about 5 nAm, in agreement with earlier reports (19). Thus, the observed sex and language differences cannot be attributed to a systematic error in determining the hearing thresholds or setting the stimulus intensity.

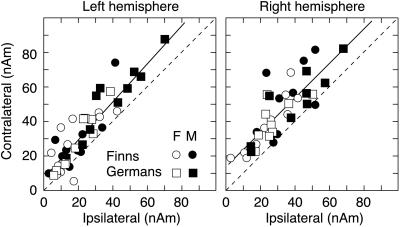

On the other hand, the activation strengths and latencies were also markedly consistent across subject groups (cf. Table 1). Although German males had equally strong responses in both hemispheres, the main effect of hemisphere showed stronger N100m responses in the right than in the left hemisphere [F(1, 36) = 18.7, P < 0.0001]. As illustrated in Fig. 3, the contralateral ear had a stronger representation than the ipsilateral ear in each hemisphere, resulting in a significant ear-by-hemisphere interaction [F(1, 36) = 102.9, P < 0.00001].

Figure 3.

Source strength of the N100m contralateral to stimulation as a function of the ipsilateral N100m response in all subjects, plotted separately for the left and right hemispheres. The coding of the four groups is given on the figure (F, female; M, male). Linear regression to the data points is depicted with solid lines (see text for details). Dashed lines indicate where contra- and ipsilateral responses would be equally strong. Clustering of symbols above this diagonal indicates that contralateral N100m exceeds the ipsilateral N100m.

Although source strengths varied with both native language and gender, the contra- and ipsilateral responses showed a remarkably constant linear dependence in both the left (contra = 1.1 × ipsi + 7 nAm, r = 0.90, P < 0.0001) and the right (contra = 1.0 × ipsi + 13 nAm, r = 0.80, P < 0.0001) hemisphere. Furthermore, this linear dependence was essentially identical in the two hemispheres (slope ≈ 1). The left and right hemispheres were thus equally sensitive to stimulation of the ipsi- vs. contralateral ear in all subject groups, i.e., the interhemispheric balance (20) of auditory cortical activation was close to 1 (cf. Table 1).
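To see how the two regression lines summarize Table 1, one can plug the tabulated ipsilateral group means into them and compare the predictions with the contralateral group means. Because the lines were fitted to individual subjects, this is only a rough consistency check, not a refit; in the sketch below every residual is within about 3 nAm, smaller than the corresponding SEMs in Table 1. The dictionary names are illustrative.

```python
# Table 1 group means (nAm) as (ipsilateral, contralateral) per hemisphere.
TABLE1_STRENGTHS = {
    "Fin F": {"left": (19, 29), "right": (24, 38)},
    "Fin M": {"left": (19, 28), "right": (35, 51)},
    "Ger F": {"left": (20, 29), "right": (29, 40)},
    "Ger M": {"left": (40, 53), "right": (38, 49)},
}

# Regression lines reported in the text: (slope, intercept in nAm).
REGRESSION = {"left": (1.1, 7.0), "right": (1.0, 13.0)}


def predicted_contra(hemisphere, ipsi_nam):
    """Contralateral strength predicted from the ipsilateral one."""
    slope, intercept = REGRESSION[hemisphere]
    return slope * ipsi_nam + intercept


def residuals():
    """Observed minus predicted contralateral strength for each group mean."""
    out = {}
    for group, hemis in TABLE1_STRENGTHS.items():
        for hemi, (ipsi, contra) in hemis.items():
            out[(group, hemi)] = contra - predicted_contra(hemi, ipsi)
    return out


if __name__ == "__main__":
    for (group, hemi), r in residuals().items():
        print(f"{group} {hemi}: residual {r:+.1f} nAm")
```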

The onset and peak latencies were qualitatively similar in all groups (cf. Table 1). The onset latencies showed a significant hemisphere effect [F(1, 33) = 31.5, P < 0.00001] and an ear-by-hemisphere interaction [F(1, 33) = 151.8, P < 0.00001]: both the contra- and ipsilateral responses started earlier in the right than left hemisphere. The delay from contra- to ipsilateral N100m onset was shorter for right-ear than left-ear stimulation (5 ms from left to right hemisphere vs. 18 ms from right to left hemisphere; P < 0.00001; paired two-tailed t test). These asymmetries were accentuated in males: the right-hemisphere onset was about 7 ms earlier in males than females [hemisphere-by-sex interaction F(1, 33) = 6.2, P < 0.02] and the directional asymmetry in interhemispheric delays was more pronounced (18 ms vs. 7 ms; P < 0.02, independent two-tailed t test). The difference was no longer evident in the peak latencies, where the delay from contra- to ipsilateral response was about 10 ms in both sexes for stimulation of either ear, in agreement with earlier studies (21, 22).

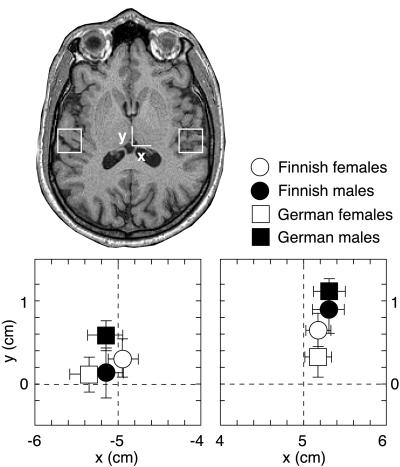

Fig. 4 shows the mean location of the neuronal population generating the N100m response in both hemispheres for all subject groups. In agreement with previous observations, the sources were located, on average, 5 mm more anteriorly in the right than in the left hemisphere (P < 0.006) (5, 6, 23–25), and the right-hemisphere sources in males were about 6 mm anterior to those in females (P < 0.03) (5, 6). The N100m sources centered on the borderline between HG and PT in the left hemisphere (cf. ref. 26) and, on average, 5 mm anterior to the borderline in the right hemisphere (P < 0.0002), suggesting a contribution of both HG and PT in the left hemisphere and predominantly of HG in the right hemisphere.

Figure 4.

Mean ± SEM N100m source locations in the horizontal plane (xy, see Materials and Methods) in Finnish and German female and male subjects. The positions of the 2 × 2-cm plots in the left and right hemisphere are indicated on the MR image.

The orientation of current flow, a sensitive indicator of fissural structure, varied by less than 10° in the sagittal (cf. Fig. 1) and coronal planes across subject groups and hemispheres. The N100m sources were located, on average, 1.5 cm below the outer surface of the brain. The distance of the source areas from the neuromagnetometer’s sensor array did not vary systematically with hemisphere, gender, or native language.

DISCUSSION

Certain general rules emerged in the functional organization of the human auditory cortices, which are likely to reflect an inherent stabilization process in the normal auditory system. In each hemisphere, agreeing with previous reports (22, 25), the contralateral ear was represented by a larger or more synchronized neuronal population than the ipsilateral ear, thus resembling the strong contralaterality characteristic of the other sensory modalities. Moreover, the ipsi- and contralateral activation strengths showed essentially the same linear dependence in the two hemispheres, resulting in a stable interhemispheric balance across all groups. Whether the subjects were performing a verbal (reading) or nonverbal (viewing pictures) task had no systematic effect on the N100m responses.

The delay from contra- to ipsilateral N100m onset was shorter for right- than left-ear stimulation. This difference could arise from the overall faster responses in the right than in the left hemisphere. Penhune et al. (27) reported a larger volume of white matter in the human left than right primary auditory cortex and suggested that the increase in white matter in the left HG reflects stronger intrinsic connections to the adjacent PT, which is usually larger in the left than right hemisphere in right-handed individuals (26, 28, 29). In line with the anatomical evidence, the N100m locations in the present data set suggest a stronger contribution of PT in the left than in the right hemisphere. Such differences in HG/PT connections could underlie the differences in N100m onset latencies between the left and right hemispheres. On the other hand, because the contralateral auditory cortex appears to exert a modifying effect on the ipsilateral N100m through callosal connections (30), the directional differences in N100m onsets may also reflect more efficient callosal transfer from the left to the right hemisphere than vice versa in our right-handed subjects.

Effect of Native Language and Gender. Strikingly, the N100m responses to simple, nonverbal stimuli were different in Finns and Germans, but only in the left hemisphere of the male subjects. The German males had an extraordinarily strong left auditory representation, quite unlike the right-hemisphere dominance evident in the other subject groups. This is the first report on an effect of native language on basic functional organization of the auditory cortex. Whole-head MEG studies of bilateral auditory function in Finns (22, 31, 32) or Germans (20, 25, 33) by using paradigms comparable to ours typically have included less than 10 subjects in total, with females and males in varying proportions, and no control for the subjects’ linguistic background. Still, previous reports also have demonstrated right-hemisphere dominance in Finns (22) and equally strong responses in the two hemispheres in Germans when most subjects were males (20, 25, 33).

The right-hemisphere N100m responses were stronger and more anteriorly located in males than females. Such gender differences would be readily accounted for by genetic variation. The increased left-hemisphere auditory representation in German males could, in principle, be genetically determined as well. This concept, however, suggests a larger genetic distance between Finnish and German males than between Finnish and German females, which would be rather surprising, even in light of recent reports of higher mutation rates in male than female chromosomes, or “male-driven evolution” (34, 35). At this stage it would seem more parsimonious to consider whether differences in linguistic environment could account for the observed variance in the left hemisphere. Speech is an exceptionally meaningful and behaviorally motivating auditory stimulus and may well affect the basic functional organization of the auditory system, possibly more profoundly in the left, language-dominant hemisphere.

The interaction of native language and gender in cortical responses to pure tones is an intriguing but also surprising finding. Below, we will discuss one distinction between the Finnish and German languages and a timing difference in male and female brains that could account for the observed variance in auditory cortical processing of pure tones.

Characteristics of Finnish and German Languages. The Finnish and German languages differ substantially in their phonetic properties (36, 37). Segmental differences are evident, e.g., in the number of possible vowels (Finnish 8, German 12–17), consonants (Finnish 13, German 20–24), and diphthongs (Finnish 18, German 3). At the suprasegmental level, Finnish prosody is characterized by syllabic and word stress, whereas German has a more centralized organization in which the speech rhythm is determined by the sentence structure.

Acoustically, there is an interesting difference between the vowel sounds of the two languages, which potentially could affect auditory cortical processing. Vibrations of the vocal cords determine the fundamental frequency, i.e., pitch of speech, and resonances of the vocal tract determine the spectrum of the sound. The spectral maxima are called formants. The first and second formants (F1 and F2) span the vowel triangle, with /i/, /a/, and /u/ at the corners in both Finnish and German. In Finnish, F1 ranges from 250 to 750 Hz and F2 ranges from 600 to 2,500 Hz (in male speech). However, the German short vowels are more densely packed, spanning an F1 range of 400–700 Hz and F2 range of 1,100–1,900 Hz (38, 39). In sum, the spectral distances between adjacent vowels are about 50% smaller in German than in Finnish (39), and the frequency resolution of the analysis network thus should be higher for processing German than Finnish language.
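A crude back-of-the-envelope check of the 50% figure can use only the ranges quoted above: the German short-vowel F1 and F2 ranges are 60% and about 42% as wide as the Finnish ones. Assuming, purely for illustration, that the same number of vowels is spread evenly along each formant axis, the spacing shrinks by about 40% (F1) and 58% (F2), roughly 49% on average. This is not the method of ref. 39: it ignores the larger German vowel inventory, the two-dimensional layout of the vowel triangle, and perceptual (e.g., Bark) frequency scaling.

```python
# Formant ranges (Hz, male speech) as quoted in the text.
FINNISH = {"F1": (250, 750), "F2": (600, 2500)}
GERMAN_SHORT = {"F1": (400, 700), "F2": (1100, 1900)}


def span(freq_range):
    """Width of a (low, high) frequency range in Hz."""
    low, high = freq_range
    return high - low


def span_ratios():
    """German-to-Finnish ratio of the range covered by each formant."""
    return {f: span(GERMAN_SHORT[f]) / span(FINNISH[f]) for f in ("F1", "F2")}


if __name__ == "__main__":
    ratios = span_ratios()
    for formant, ratio in ratios.items():
        print(f"{formant}: German range is {ratio:.0%} of the Finnish range")
    # Assumption: equal vowel counts, evenly spaced along each axis, so the
    # spacing shrinks by (1 - ratio); average over F1 and F2.
    mean_shrink = sum(1 - r for r in ratios.values()) / len(ratios)
    print(f"mean reduction in spacing: {mean_shrink:.0%}")
```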

Stimulus Features and N100m. N100 is very similar for various kinds of acoustic stimuli, including clicks, tones, noise bursts, and speech sounds, as long as they contain an abrupt change (11). N100 is affected by physical and temporal aspects of the stimulus, but its amplitude does not seem to correlate directly with perceived loudness or pitch (cf. refs. 11 and 12). At the short interstimulus interval used in the present study, the magnetic N100m is believed to be closely related to stimulus properties rather than to temporal integration or sensory memory (40, 41).

An increase in the N100m amplitude reflects a larger number of synchronously active neurons or increased synchrony within the neuronal population. Because the N100m source strength was exceptionally high in German males, whereas their onset and peak latencies did not distinguish them from the other three subject groups, the increased left auditory cortical activation in German males is most probably due to the involvement of a significantly larger neuronal population in this group. In principle, the N100m amplitude could also be affected by a change in the balance of fissural vs. gyral activation, i.e., in the amount of current readily detected by MEG. However, this explanation is unlikely because no group differences were evident in the source locations, in the orientations of current flow, or in the course of the HG in the left hemisphere.

An expansion of the synchronously active neuronal population contributing to the N100m amplitude could result from a need for higher frequency resolution in German than Finnish auditory cortex. Recanzone et al. (42) taught monkeys to discriminate between frequencies of two tones and found an increase in the cortical area representing the trained frequency range. No enlargement was detected in monkeys who did not attend to the tone pairs. The authors concluded that behavioral relevance of the stimulus is necessary for cortical reorganization to occur. The recent finding of a stronger N100m response to piano tones in trained musicians than in musically untrained control subjects (43) suggests a similar mechanism in the human auditory cortex. Here, the relevant stimulus feature was probably the timbre of the tone rather than frequency discrimination per se, because there was no simultaneous increase of N100m for pure tones. Functional reorganization may occur differently for distinct aspects of sensory input, e.g., temporal and spectral features (44).

It is hard to imagine an auditory stimulus that could surpass speech in behavioral relevance or persistence of training. The frequency of 1 kHz and intensity of 70 dB above hearing threshold used in the present study fall within the range typical for speech (45) and, therefore, would probe the auditory representations needed for processing speech signals, especially the F2 component. Recent brain-imaging studies have suggested that the right auditory cortex is particularly sensitive to perception of melodic structures (46) and prosody (47), whereas the left auditory cortex is strongly involved in phonetic discrimination (47, 48), compatible with the usual left-hemisphere dominance of language function. If the brain can make efficient use of the frequency discrimination capabilities of both hemispheres, a higher frequency resolution needed for phonetic discrimination in German than in Finnish would not necessarily lead to an increase in the neuronal population involved. On the other hand, if the auditory cortex of the language-dominant left hemisphere alone must provide the neural substrate for phonetic analysis, one would expect a larger neuronal population and thus a stronger N100m in the left hemisphere of German than Finnish subjects.

Left Auditory Cortex and Native Language in Men. Intriguingly, we observed a particularly strong left-hemisphere N100m response only in the male German subjects. The asymmetry of onset latencies from left-to-right and right-to-left hemispheres also was significantly more pronounced and the right-hemisphere onset was significantly earlier in male than female subjects, suggesting increased functional segregation between the auditory cortices in the male subjects. The gender differences in timing are particularly interesting in light of the proposal by Ringo et al. (49), suggesting that longer time delays in interhemispheric transfer promote hemispheric specialization, with performance of time-critical tasks gathered in one hemisphere. Early auditory cortical processing thus could be more strongly lateralized in male than female subjects. Shaywitz et al. (7) have demonstrated a stronger lateralization of cortical activation patterns in language tasks in males (left hemisphere) than females (bilateral), and behavioral studies have suggested stronger lateralization of auditory function in men (50).

Within this framework, we would interpret the similarity of the N100m patterns in Finnish and German females as a manifestation of efficient interactive use of the analysis capacity of the bilateral auditory cortices. In contrast, the combination of lateralized auditory processing in the male brain and the narrower vowel space in German than Finnish language would set higher demands for frequency resolution in the left auditory cortex of German men, resulting in the pronounced left N100m response. The enlarged neuronal population providing the higher spectral sensitivity in the left hemisphere would subserve the time-critical process of speech perception but would also respond when the stimulus is a simple 1-kHz tone. Behaviorally, we would not expect differences in nonlinguistic frequency-discrimination tasks, where the processing capacity of both hemispheres can be used.

For testing the validity of the mechanism discussed above, it seems crucial to probe the auditory cortex with different tone frequencies. The largest difference between German and Finnish males should appear for speech frequencies, especially for those within the typical F2 range, and less variance should appear for frequencies outside the speech range. If the frequency extent of the vowel space is an important factor in the functional organization of the auditory cortex, one would expect similarities in auditory activation patterns between, e.g., Finnish and Japanese subjects (51). Right-hemisphere dominance of N100m responses to pure-tone stimuli, indeed, has been reported in a group of right-handed Japanese subjects, consisting of 31 men and 6 women (52).

In conclusion, the present data illustrate that responses to pure tones in the human left auditory cortex vary with native language. This effect appears to be stronger in male than female subjects, probably because of more pronounced functional lateralization in the male brain. It is important to acknowledge the possibility of such dependencies not only in research into higher cognitive functions but also in studies of basic auditory processing.

Acknowledgments

We thank M. Leiniö for help in testing the measurement systems and M. Hämäläinen and J. Mäkelä for valuable comments on the manuscript. This work was supported by the Human Frontier Science Program, the Academy of Finland, Wihuri Foundation, and Volkswagen-Stiftung.

ABBREVIATIONS

- F1 and F2: first and second formants, respectively

- HG: Heschl’s gyrus

- MEG: magnetoencephalography

- N100m: magnetoencephalographic response peaking about 100 ms after auditory stimulation

- PT: planum temporale

References

- 1. Rakic P, Bourgeois J-P, Eckenhoff N, Zecevic N, Goldman-Rakic P S. Science. 1986;232:232–235. doi: 10.1126/science.3952506.

- 2. Hubel D H, Wiesel T N, LeVay S. Philos Trans R Soc London B. 1977;278:377–409. doi: 10.1098/rstb.1977.0050.

- 3. Knudsen E I. Trends Neurosci. 1984;7:326–330.

- 4. Kuhl P K, Williams K A, Lacerda F, Stevens K N, Lindblom B. Science. 1992;255:606–608. doi: 10.1126/science.1736364.

- 5. Baumann S B, Rogers R L, Guinto F C, Saydjari C L, Papanicolaou A C, Eisenberg H M. Electroenceph Clin Neurophysiol. 1991;80:53–59. doi: 10.1016/0168-5597(91)90043-w.

- 6. Reite M, Cullum C M, Stocker J, Teale P, Kozora E. Brain Res Bull. 1993;32:325–328. doi: 10.1016/0361-9230(93)90195-h.

- 7. Shaywitz B A, Shaywitz S E, Pugh K R, Constable R T, Skudlarski P, Fulbright R K, Bronen R A, Fletcher J M, Shankweiler D P, Katz L, et al. Nature (London). 1995;373:607–609. doi: 10.1038/373607a0.

- 8. Celesia G G. Brain. 1976;99:403–414. doi: 10.1093/brain/99.3.403.

- 9. Lee Y S, Lueders H, Dinner D S, Lesser R P, Hahn J, Klem G. Brain. 1984;107:115–131. doi: 10.1093/brain/107.1.115.

- 10. Scherg M, Hari R, Hämäläinen M. In: Advances in Biomagnetism. Williamson S J, Hoke M, Stroink G, Kotani M, editors. New York: Plenum; 1989. pp. 97–100.

- 11. Näätänen R, Picton T. Psychophysiology. 1987;24:375–425. doi: 10.1111/j.1469-8986.1987.tb00311.x.

- 12. Hari R. In: Auditory Evoked Magnetic Fields and Electric Potentials. Grandori F, Hoke M, Romani G L, editors. Vol. 6. Basel: Karger; 1990. pp. 222–282.

- 13. Pelizzone M, Hari R, Mäkelä J P, Huttunen J, Ahlfors S, Hämäläinen M. Neurosci Lett. 1987;82:303–307. doi: 10.1016/0304-3940(87)90273-4.

- 14. Lütkenhöner B, Steinsträter O. Audiol Neurootol. 1998;3:191–213. doi: 10.1159/000013790.

- 15. Liégeois-Chauvel C, Musolino A, Badier J M, Marquis P, Chauvel P. Electroenceph Clin Neurophysiol. 1994;92:204–214. doi: 10.1016/0168-5597(94)90064-7.

- 16. Helenius P, Salmelin R, Service E, Connolly J F. Brain. 1998;121:1133–1142. doi: 10.1093/brain/121.6.1133.

- 17. Ahonen A I, Hämäläinen M S, Kajola M J, Knuutila J E T, Laine P P, Lounasmaa O V, Parkkonen L T, Simola J T, Tesche C D. Physica Scripta. 1993;T49:198–205.

- 18. Hämäläinen M, Hari R, Ilmoniemi R J, Knuutila J, Lounasmaa O V. Rev Mod Phys. 1993;65:413–497.

- 19. Pantev C, Hoke M, Lehnertz K, Lütkenhöner B. Electroenceph Clin Neurophysiol. 1989;72:225–231. doi: 10.1016/0013-4694(89)90247-2.

- 20. Salmelin R, Schnitzler A, Schmitz F, Jäncke L, Witte O W, Freund H-J. NeuroReport. 1998;9:2225–2229. doi: 10.1097/00001756-199807130-00014.

- 21. Elberling C, Bak C, Kofoed B, Lebech J, Saermark K. Acta Neurol Scand. 1982;65:553–569. doi: 10.1111/j.1600-0404.1982.tb03110.x.

- 22. Mäkelä J, Ahonen A, Hämäläinen M, Hari R, Ilmoniemi R, Kajola M, Knuutila J, Lounasmaa O, McEvoy L, Salmelin R, et al. Hum Brain Mapp. 1993;1:48–56.

- 23. Nakasato N, Fujita S, Seki K, Kawamura T, Matani A, Tamura I, Fujiwara S, Yoshimoto T. Electroenceph Clin Neurophysiol. 1995;94:183–190. doi: 10.1016/0013-4694(94)00280-x.

- 24. Kanno A, Nakasato N, Ohtomo S, Hatanaka K, Suzuki K, Fujiwara S, Yoshimoto T. Electroenceph Clin Neurophysiol. 1997;103:70–71.

- 25. Pantev C, Ross B, Berg P, Elbert T, Rockstroh B. Audiol Neurootol. 1998;3:183–190. doi: 10.1159/000013789.

- 26. Steinmetz H, Volkmann J, Jäncke L, Freund H-J. Ann Neurol. 1991;29:315–319. doi: 10.1002/ana.410290314.

- 27. Penhune V B, Zatorre R J, MacDonald J D, Evans A C. Cereb Cortex. 1996;6:661–672. doi: 10.1093/cercor/6.5.661.

- 28. Geschwind N, Levitsky W. Science. 1968;161:186–187. doi: 10.1126/science.161.3837.186.

- 29. Witelson S F, Kigar D L. J Comp Neurol. 1992;323:326–340. doi: 10.1002/cne.903230303.

- 30. Mäkelä J P, Hari R. NeuroReport. 1992;3:94–96. doi: 10.1097/00001756-199201000-00025.

- 31. Vasama J-P, Mäkelä J P, Tissari S O, Hämäläinen M S. Acta Otolaryngol (Stockholm). 1995;115:616–621. doi: 10.3109/00016489509139376.

- 32. Levänen S, Ahonen A, Hari R, McEvoy L, Sams M. Cereb Cortex. 1996;6:288–296. doi: 10.1093/cercor/6.2.288.

- 33. Eulitz C, Diesch E, Pantev C, Hampson S, Elbert T. J Neurosci. 1995;15:2748–2755. doi: 10.1523/JNEUROSCI.15-04-02748.1995.

- 34. Shimmin L C, Chang B H, Li W H. Nature (London). 1993;362:745–747. doi: 10.1038/362745a0.

- 35. Ellegren H, Fridolfsson A K. Nat Genet. 1997;17:182–184. doi: 10.1038/ng1097-182.

- 36. Karlsson F. Finnish Grammar. Helsinki: Werner Söderström; 1982.

- 37. Rausch I, Rausch R. Deutsche Phonetik für Ausländer. Munich: VEB Verlag Enzyklopädie Leipzig; 1988.

- 38. Iivonen A. Phonetica. 1995;52:221–227. doi: 10.1159/000262174.

- 39. Iivonen A. In: Der Ginkgo-Baum Germanistisches Jahrbuch für Nordeuropa. Korhonen J, Gimpl G, editors. Vol. 15. Helsinki: Finn Lectura; 1997. pp. 66–83.

- 40. Loveless N, Levänen S, Jousmäki V, Sams M, Hari R. Electroenceph Clin Neurophysiol. 1996;100:220–228. doi: 10.1016/0168-5597(95)00271-5.

- 41. McEvoy L, Levänen S, Loveless N. Psychophysiology. 1997;34:308–316. doi: 10.1111/j.1469-8986.1997.tb02401.x.

- 42. Recanzone G H, Schreiner C E, Merzenich M M. J Neurosci. 1993;13:87–103. doi: 10.1523/JNEUROSCI.13-01-00087.1993.

- 43. Pantev C, Oostenveld R, Engelien A, Ross B, Roberts L E, Hoke M. Nature (London). 1998;392:811–814. doi: 10.1038/33918.

- 44. Kilgard M P, Merzenich M M. Nat Neurosci. 1998;1:727–731. doi: 10.1038/3729.

- 45. Zwicker E, Fastl H. Psychoacoustics: Facts and Models. Berlin: Springer; 1990.

- 46. Zatorre R J, Evans A C, Meyer E. J Neurosci. 1994;14:1908–1919. doi: 10.1523/JNEUROSCI.14-04-01908.1994.

- 47. Imaizumi S, Mori K, Kiritani S, Hosoi H, Tonoike M. NeuroReport. 1998;9:899–903. doi: 10.1097/00001756-199803300-00025.

- 48. Näätänen R, Lehtokoski A, Lennes M, Cheour M, Huotilainen M, Iivonen A, Vainio M, Alku P, Ilmoniemi R J, Luuk A, et al. Nature (London). 1997;385:432–434. doi: 10.1038/385432a0.

- 49. Ringo J L, Doty R W, Demeter S, Simard P Y. Cereb Cortex. 1994;4:331–343. doi: 10.1093/cercor/4.4.331.

- 50. Hiscock M, Inch R, Jacek C, Hiscock-Kalil C, Kalil K M. J Clin Exp Neuropsychol. 1994;16:423–435. doi: 10.1080/01688639408402653.

- 51. Sato S, Yokota M, Kasuya H. Phonetica. 1982;39:36–46. doi: 10.1159/000261649.

- 52. Kanno A, Nakasato N, Fujita S, Seki K, Kawamura T, Ohtomo S, Fujiwara S, Yoshimoto T. In: Visualization of Information Processing in the Human Brain. Advances in MEG and Functional MRI. Hashimoto I, Okada Y, Ogawa S, editors. EEG Suppl. 47. Amsterdam: Elsevier; 1996. pp. 129–132.