Abstract

Objectives

To compare student and faculty perceptions of the delivery and achievement of professional competencies in a doctor of pharmacy program in order to provide data for both accountability and curricular improvement purposes.

Design

A survey instrument was designed based on current learning theory and on 76 specific competency statements generated from the mission and goal statements of The Ohio State University College of Pharmacy and the Center for the Advancement of Pharmaceutical Education. This instrument was administered to PharmD program students and faculty.

Assessment

The number of competencies by program year that are delivered in the curriculum, the percent of students and faculty reporting individual competency delivery and achievement, and differences between student and faculty perceptions of competency delivery and achievement are reported.

Conclusion

The faculty and student opinions provided an in-depth view of curricular outcomes. Perception data gathered from faculty and students about the delivery and achievement of competencies in a PharmD program can be used both to meet accreditation requirements (accountability) and to improve the curriculum (improvement).

Keywords: outcomes assessment, assessment, survey research, curriculum reform, competency

INTRODUCTION

Over 20 years ago, reports on undergraduate education prompted the use of assessments to improve education and accountability.1-6 Questions about student learning led to recommendations for institutions to set goals and standards, encourage active learning, and assess student outcomes.7 While the purpose of assessment was to improve student learning, and the purpose of accreditation was to show accountability to external stakeholders, the distinction separating these 2 activities as functions has become less clear. The shift toward student learning-centered accreditation provides the common thread that ties the processes of student outcomes assessment and accreditation together.8 Most assessment scholars now advocate models for assessment that can fulfill both accountability and improvement agendas.9 Assessment can be undertaken at institutional, departmental/program, or course levels. This research addresses the process at the program level.

The first challenge faced by those engaged in outcomes assessment is developing methodologies that can lead to programmatic improvements, ie, “to complete the assessment loop.”10 The assessment literature contains many more examples of assessment methodologies and tools than it does documented evidence of how assessment results have been used to guide curricular change and improve student learning.11 If the overall goal of program level assessment is to improve student learning, then assessment data should inform continuous curricular reform. In the accredited disciplines (eg, pharmacy, medicine, business, engineering) an additional challenge centers on how outcomes assessment efforts can be designed to meet both accountability and improvement agendas. A powerful motivator for the initiation of an assessment program in an accredited discipline is an impending self-study and subsequent accreditation visit and evaluation. Ensuring that assessment programs serve both formative and summative goals, ie, assessment that leads to continuous improvement of curricula while also generating results that can be used to demonstrate learning outcomes to accrediting bodies, is challenging.7

Assessment as a means to continuous educational improvement implies that assessment data are collected not only to satisfy the accountability aspect of assessment, but also to prompt positive changes to the educational process.12,13 Assessment becomes a dynamic process initiated by the questions of decision makers, involving them in the gathering and interpreting of data that informs and helps guide continuous curricular improvement.14 When assessment focuses on outcomes consistent with missions, (ie, institutional values), closing the assessment loop becomes a natural next step and increases the likelihood that the institution and its stakeholders benefit directly from the process.12,15 Assessment shares many of the basic principles of total quality management, which also emphasizes institutional mission and the reporting and use of results as a means for continuous improvement.16 The dynamic of closing the assessment loop links the accountability and improvement aspects of program assessment. The most recent (2006) revision of the accreditation standards calls for colleges of pharmacy to document this entire process.17

Studies presenting data from professional pharmacy program-level assessment can be categorized according to both the focus of the assessment and the groups of respondents involved in the research. The first categorization, the focus of the assessment, can be divided into 3 subgroups: achievement-based studies (eg, empirical tests of knowledge or competence); studies focused on perceptions of competence or skills; and studies of perceptions about the curriculum (ie, satisfaction with the curriculum). The second categorization deals with the stakeholder groups that were assessed, eg, students, faculty members, or alumni.

Several studies have documented the achievement of outcomes using empirical tests of knowledge or skills in the student18-25 and alumni26-29 groups. They report on evaluations of program-level outcomes using portfolios,18 multiple-choice testing,18-20 the achievement of critical thinking skills (program-level skill assessments),21,22 the assessment of student performance on 4 key abilities (group interaction, problem-solving ability, written communication, and interpersonal communication),23,24 and evaluation of alumni outcomes, including career outcomes such as employment patterns.26-29

Another category of focus of this literature is studies that capture stakeholder perceptions about the curriculum including: satisfaction surveys (in faculty and/or alumni populations),20,25,26 course-level assessments (in student populations),30,31 and other specific faculty evaluations of curricular issues such as curricular mapping or content delivery.18,32-34 Students have been asked about their achievement of competencies, which are defined as the necessary skills or abilities of an entry-level practitioner,18,20,31,34,35 and alumni have been asked about academic preparation to practice pharmacy.27,29,36 Faculty members and students have been assessed independently regarding their perceptions of the delivery of instruction to achieve educational outcomes and competencies in the curriculum,18,33,37 and faculty self-reports of delivery of instruction to achieve competencies have been compared to student self-reports of competency achievement.34

Previous studies most similar to this research are those that assess perceptions of achievement of competency or practice skills. Self-assessment is a central methodology for this research. However, none of these studies attempted to compare the perceptions of both faculty members and students with regard to the delivery of instruction and achievement of programmatic competencies. The project discussed in this paper offers a more comprehensive understanding of the relationships between curriculum and competencies because it reports on perceptions of students and faculty members, and about both delivered and realized competencies.

Defining an appropriate yardstick for program-level assessment involves educational goals, the structure of the curriculum, and some comparison of educational goals and achieved learning outcomes. The theoretical framework utilized in this research is based on Peter Ewell's conceptions of curriculum and assumes that faculty member and student perspectives about teaching, learning, and the curriculum are likely to differ.38 This theoretical model focuses closely on the teaching/learning process, and offers 4 distinct conceptions of curriculum. These conceptions highlight, in an elegant and careful way, the potential differences between purposes, perceptions, and experiences of students and faculty members. (1) The designed curriculum consists of the content and course sequences as defined in institutional documents such as syllabi and course catalogs. (2) The expectational curriculum is made up of the requirements that must be met and the performance expected from the students to meet these requirements. (3) The delivered curriculum consists of the material (content) that faculty members teach and the consistency with which they do so. And finally, (4) the experienced curriculum consists of the educational environment as experienced by the students. “What faculty say they do and value and what students say is delivered or experienced, constitute the most straightforward methods available to get at the behavioral aspects of curriculum.”38 Further, to gain a more thorough view of these perceptions about curriculum, both groups were also asked to assess the degree to which competencies are achieved. This research, conducted at The Ohio State University College of Pharmacy, examines both faculty and student perspectives in an effort to fulfill both improvement and accountability agendas.

The Ohio State University (OSU) is a public extensive doctoral/research university.39 The first professional PharmD program at the College of Pharmacy is a 4-year, graduate-professional curriculum. Two particular design elements address the new philosophy of pharmaceutical care: (1) Substantial clinical experiences and training are incorporated through the first year and the third year, while students participate in full-time practice experiences in their fourth year. (2) Integration across the curriculum is intended to help students make key connections between basic pharmaceutical science principles and delivery of care or practical applications.40 The first cohort of students entered the program in autumn of 1998.41 About 40% of colleges or schools of pharmacy in the United States are located in similar institutions.42

The project objectives addressed 3 questions central to the assessment of educational outcomes:

(1) Which competencies are delivered at the completion of each year of a 4-year doctor of pharmacy program?

(2) How do faculty and student perceptions about the delivery and achievement of professional competencies compare?

(3) Could the changes made to the curriculum be discerned over time through successive surveys?

DESIGN

This research examined the delivery and achievement of competencies in a PharmD program at The Ohio State University College of Pharmacy. A descriptive survey instrument that posed the same questions to faculty members and students served as the primary data collection tool and was distributed to faculty members and students in 3 successive academic years (2001-2004). The survey instrument was originally developed as part of a doctoral dissertation and also served as the starting point for the College of Pharmacy's self-study for accreditation.

The design was cross-sectional and data were collected from PharmD students and faculty members involved in each of the 4 years of the curriculum.43 The analysis used the survey responses in 2 ways: (1) an item-by-item analysis of the competencies as “delivered” and as “achieved,” combining the responses of faculty members and students from each program year, and (2) an analysis which compared responses about “delivered” and “achieved” competencies between faculty members and students after grouping the competencies according to 4 curricular categories. To obtain a score by category (see below for the definitions of the survey categories), the items in a given category were summed by respondent and then a mean score was generated for each group of respondents (eg, first-year students).
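The category scoring described above (sum the items in a category for each respondent, then average those sums within a respondent group) can be sketched as follows. This is a minimal illustration only: the ratings, the group labels, and the function name `category_score` are hypothetical and are not taken from the study data.

```python
from statistics import mean

# Hypothetical ratings: one inner list per respondent, one value per item
# in a single category, each on the 0-4 scale used by the instrument.
responses = {
    "first-year students": [
        [3, 4, 2, 3],
        [4, 4, 3, 3],
    ],
    "first-year faculty": [
        [2, 0, 3, 1],
        [3, 2, 2, 0],
    ],
}


def category_score(group_ratings):
    """Sum the items for each respondent, then average the sums across the group."""
    summed = [sum(items) for items in group_ratings]
    return mean(summed)


scores = {group: category_score(ratings) for group, ratings in responses.items()}
```

Because each respondent contributes a sum over all items, a respondent who rates several items at 0 pulls the group mean down sharply, which matters for the faculty scores discussed later in the paper.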

The survey instrument was developed in several stages. First, professional competencies were defined for each year of the program, ie, the knowledge, skills, and values that an entry-level practitioner would be expected to possess and utilize. Using the mission and goals statement from the College and the AACP CAPE Educational Outcomes Document, 61 competencies, grouped into 4 major categories, were defined and served as the basis for the survey instrument.44,45 The 4 major categories specified in the Program Mission and Goals document represent 4 curricular areas: (1) general educational abilities, (2) pharmaceutical sciences abilities, (3) pharmacy specific abilities, and (4) general pharmacy-related educational goals. Faculty members and students completing the instrument were asked to report on the extent to which each competency was delivered and achieved using a 5-point anchored scale with only the ends labeled (where 0 = none and 4 = highest). Prior to establishing the reliability and validity of the instrument in the study population, Human Subjects Approval of this research was obtained on October 10, 2001.

A panel consisting of 9 College faculty members, an external consultant (from the Office of Faculty and Teaching Assistant Development), and the members of the dissertation committee reviewed 5 versions of the survey instrument. This group plus 2 faculty members from other colleges formed a panel of experts who reviewed the survey instrument for face and content validity, and also commented on the instrument's clarity and ease of use (without completing it).

After the expert panel reviews and revisions, the survey instrument was pilot tested in a convenience sample of bachelor of science in pharmacy degree students (n = 24), to identify confusing items and to check the reliability of the instrument. The students were offered extra credit points in one of their pharmacy courses as an incentive to complete the survey. A reliability coefficient alpha of 0.70 was established prior to the pilot test as a threshold for each of the 4 major categories of the instrument. Reliability measures for each of the 4 categories on delivery and achievement were greater than 0.85. No students indicated that the length of the survey instrument was problematic.
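The reliability check against the 0.70 threshold can be illustrated with a short sketch. It assumes the "reliability coefficient alpha" is Cronbach's alpha, which the text does not state explicitly, and the pilot ratings below are invented for illustration.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item ratings.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(rows[0])
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical pilot data: 5 respondents rating 3 items in one category (0-4 scale).
pilot = [
    [4, 4, 3],
    [3, 3, 3],
    [2, 2, 1],
    [1, 1, 1],
    [3, 4, 3],
]

alpha = cronbach_alpha(pilot)
meets_threshold = alpha >= 0.70  # threshold set before the pilot test
```

In practice the coefficient would be computed separately for each of the 4 categories, once for the delivery ratings and once for the achievement ratings.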

Based on information gathered during the first administration of the instrument in 2001, the College committee responsible for assessment helped revise the instrument in early 2003. During this revision, 15 new competency statements were added, bringing the total to 76 competency statements, and the management competencies were revised with input from the management faculty members to more accurately reflect course content. The revised instrument was used for the second and third administrations (2003 and 2004).

The administration of all 3 surveys (fall 2001, spring 2003, and spring 2004) followed a 5-contact scheme called the tailored design method, which specifies a series of verbal and written contacts with participants to help ensure a high response rate.46

ASSESSMENT

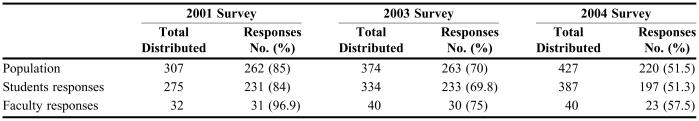

The total number of survey instruments distributed and the total number completed (response rates) for each of the 3 survey administrations are listed in Table 1. Selected data relating to each of the 3 research questions are presented to illustrate findings related to assessment and accountability and to support subsequent discussion of relevant findings and observations.

Table 1.

Survey Response Rates for Faculty Members and Students of the Ohio State University College of Pharmacy Regarding Competency-based Assessment

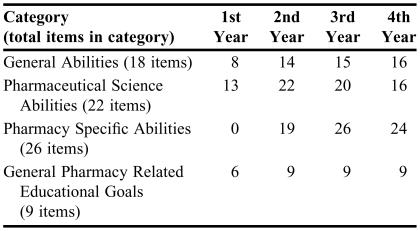

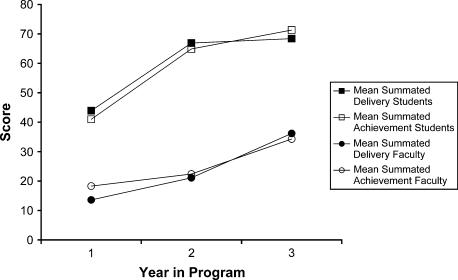

In order to determine which competencies are being achieved, it is helpful to determine which competencies are reported to be delivered in the curriculum. Therefore, a competency was considered to be delivered in the curriculum if at least 50% of faculty members and students rated that competency as a level 3 or 4 on the 0-4 scale (where 0 equals none and 4 equals highest). Table 2 summarizes the number of competencies delivered for each year of the program and by each of the 4 categories (research question 1). Achievement data were tabulated in a similar fashion. Figure 1 shows the comparison of faculty member and student responses for 1 survey category (pharmacy specific abilities). The data depicted support observations associated with research question 2.
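The "delivered" rule can be expressed as a simple threshold check. The sketch below assumes that faculty and student ratings are pooled before the 50% rule is applied, which is one reading of the definition; the competency names and ratings are hypothetical.

```python
def is_delivered(ratings, threshold=0.5):
    """Return True if at least `threshold` of the ratings are level 3 or 4."""
    high = sum(1 for r in ratings if r >= 3)
    return high / len(ratings) >= threshold


# Hypothetical pooled faculty and student ratings (0-4 scale) for two competencies.
ratings_by_competency = {
    "competency A": [4, 3, 3, 2, 0, 4],  # 4 of 6 ratings are level 3 or 4
    "competency B": [2, 1, 3, 0, 2, 4],  # 2 of 6 ratings are level 3 or 4
}

delivered = {name: is_delivered(r) for name, r in ratings_by_competency.items()}
delivered_count = sum(delivered.values())
```

Counting the competencies flagged this way, by program year and by category, would give a tabulation of the kind summarized in Table 2; the same function applied to achievement ratings gives the parallel achievement counts.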

Table 2.

Number of Competencies Delivered in a PharmD Curriculum as Assessed by Faculty Members and Students*

*Delivered is defined as ≥50% of faculty and students rating the competency as level 3 or level 4. Items were rated on a 5-point anchored scale where 0 = none and 4 = highest

Figure 1.

Faculty and student mean summated construct scores for the pharmacy specific ability competencies.

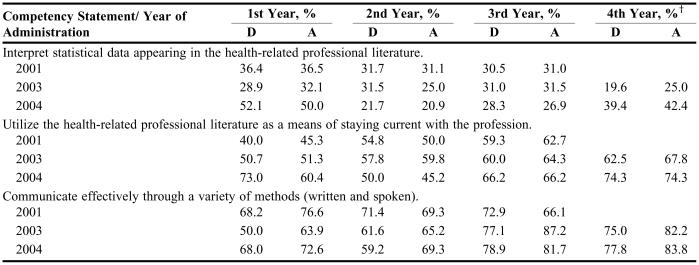

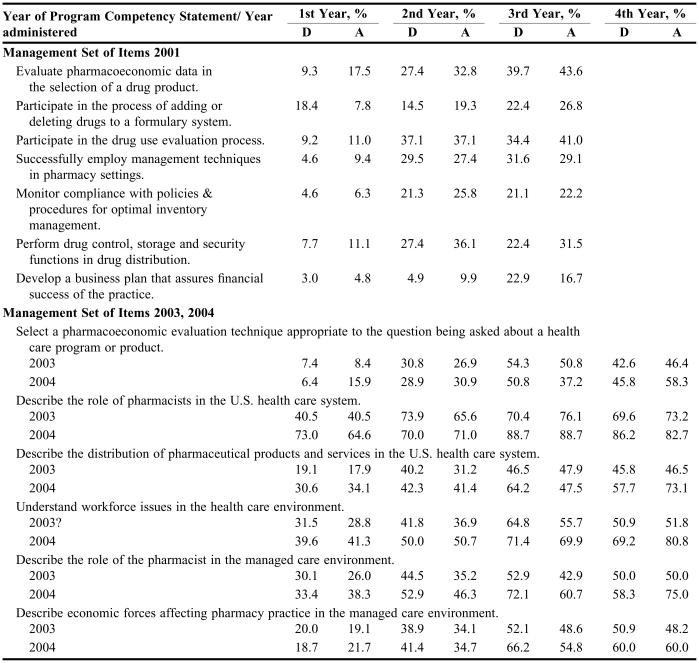

Item-level survey scores for students and faculty members combined by year of the program (Tables 3 and 4) address research question 3. The items presented in these tables illustrate the impact of changes to the curriculum over the 3 years of survey administration. These items were used by the College to monitor curricular improvements. Table 3 shows competency statements related to “literature evaluation” and “communication skills.” The table combines student and faculty member responses for both “delivery” and “achievement” of 3 competencies, showing the percentage who gave the statements relatively high scores, eg, at level 3 or 4, in all surveys. The impact of a single course revision (in 2003) is shown by the increase in the percentage of first-year students and faculty members reporting delivery and achievement of the literature evaluation skills. And the impact of efforts to bolster student communication skills across the curriculum is shown by the increasing percentages of students and faculty members reporting delivery and achievement of the communication competency. Table 4 shows the change in faculty member and student response to competencies in the “management skills” area of the curriculum across 3 surveys. After the 2001 administration of the survey, the range and definition of these management competencies were changed. The table shows response to the statements in the 2001 survey, and then to the new list of competencies used in the 2003 and 2004 surveys. Improved scores for delivery and achievement can be seen for the revised set of management outcomes.

Table 3.

Combined Faculty and Student Response* by Year of Program to Competencies in Literature Evaluation and Communication Skills

*Percent scoring the item with a level 3 or level 4 rating. Items were rated on a 5-point anchored scale where 0 = none and 4 = highest

†No 4th year students had completed the program as of the 2001 administration. The first PharmD class graduated spring 2002

D = delivery; A = achievement

Table 4.

Combined Pharmacy Faculty and Student Response* to Questions Regarding Delivery and Achievement of Competencies in Management Skills

*Percentage of faculty members and students scoring the item with a level 3 or level 4 rating. Items were rated on a 5-point anchored scale where 0 = none and 4 = highest

D = delivery; A = achievement

DISCUSSION

A general trend can be seen in Table 2: as students progressed through the curriculum, more competencies were rated as being delivered in the curriculum. Items that did not garner the required 50% rating (ie, 50% or more of faculty and students rated the competency as delivered at a level 3 or 4) were forwarded to the appropriate college-level committee where action was taken to investigate the situation. This information also served in part as a basis for the curriculum section of the College's accreditation self-study report.

Figure 1 shows the faculty member and student scores for delivery and achievement of 1 survey category (“pharmacy specific abilities”) for the 2001 survey. Responses are plotted for delivery and achievement of competencies by year of the program. The figure summarizes a great deal of data in a parsimonious fashion and shows an overall trend toward increasing delivery and achievement of the pharmacy-specific abilities across years of the program. Although only 1 figure is presented here, it is representative of the other data: plotted this way, the trends are similar for faculty member and student data across the 3 administrations of the survey and the 4 survey categories.

Also visible in this figure are the consistently higher delivery and achievement scores reported by students compared with faculty members. In completing their survey instruments, faculty members appear to have utilized the “0” score (not at all delivered or achieved) much more frequently than students, resulting in much lower summated mean scores than those of students. Why might this happen? Three types of explanation can be posed: (1) the structure of the survey instrument; (2) differences in student and faculty perception of the curriculum broadly; and/or (3) the dynamics of curricular development and faculty culture.

Survey structure.

The rating scales on the initial survey instrument did not adequately accommodate a respondent's need to reply “not applicable.” On the 2001 survey, faculty members who wished to indicate a lack of familiarity with curriculum content were offered only one choice on a scale which was structured from “0 = not at all” to “4 = highest,” namely to rate items with a zero. Frequent use of a “zero” score for items generates consistently lower category scores when compared with student scores because of the way category scores were generated: items in a category were summed by respondent and then a mean of the summed score was generated for each of the 4 categories.

The survey instruments in 2003 and 2004 included an “Item Not Applicable” response for the faculty members and partially addressed the problem from the previous survey, when faculty members’ use of the “0” could have meant either “I don't know” or “not at all.” However, use of “item not applicable” created a different issue, namely, missing data. When an item was checked “not applicable” it was treated as missing in the data analysis. Missing data affect the generation of the category scores and produce a new set of data analysis challenges.
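The trade-off between the two survey designs can be illustrated with a small sketch. The text does not specify how respondents with missing items were handled when category scores were computed, so the complete-case approach shown here is an assumption made for illustration only, as are the ratings and function names.

```python
from statistics import mean

NA = None  # hypothetical marker for an "Item Not Applicable" response

# Hypothetical faculty ratings for one category (0-4 scale).
faculty = [
    [3, NA, 4, NA],
    [4, 3, NA, 3],
    [3, 4, 4, 3],
]


def zero_coded_sum(items):
    """2001-style scoring: an unfamiliar item can only be rated 0."""
    return sum(0 if x is NA else x for x in items)


def complete_cases(rows):
    """Assumed handling of missing data: keep only respondents who rated every item."""
    return [row for row in rows if NA not in row]


zero_coded_mean = mean(zero_coded_sum(r) for r in faculty)
complete_case_mean = mean(sum(r) for r in complete_cases(faculty))
n_dropped = len(faculty) - len(complete_cases(faculty))
```

Coding unfamiliar items as 0 keeps every respondent but depresses the group score, while dropping incomplete responses avoids that bias at the cost of a smaller and possibly unrepresentative sample.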

Student and faculty perceptions of curriculum.

It may be that student perspectives of the curriculum differ significantly from faculty perspectives. The student perspective could be described as cumulative in nature and, therefore, more comprehensive. Or, in other terms, students might be described as responding at the “program level” while faculty members tend to respond at the “course level.” For example, a third-year student could rate a competency statement based on multiple exposures through a series of classes, and he or she would have the benefit of having experienced the entire didactic curriculum when answering the survey instrument. By contrast, faculty perspectives tend to be limited to their course or discipline. For example, a professor teaching a pharmaceutical science course in the first year of the curriculum may not be familiar with the details of course syllabi taught later in the curriculum. Faculty lacking a comprehensive understanding across the curriculum might have preferred an option of responding “not enough information” to a survey item, an option that was unavailable on the 2001 survey.

Curricular development and faculty culture.

At least 3 rationales related to curriculum development and faculty culture could explain the pattern in the faculty scoring. One possibility is that faculty member scores reflect the lack of practice in looking broadly across the curriculum. Program-level assessment for the College is a relatively new concept. Although the PharmD curriculum was designed to integrate efforts to deliver program competencies (ie, coordination between instructors), these data may suggest that such integration was not yet occurring at a program-wide level.

A second alternative is that the lower faculty scores may reflect a subtle, yet critical, aspect of faculty culture, namely, an inherent respect for individuality and independence in pursuing intellectual endeavors, which often includes course development and teaching. In their own classrooms, faculty members can observe cumulative learning. However, on a college-wide survey instrument, faculty members may be reluctant to score items reflecting competencies that would be within the purview of a colleague's course. To the degree that this dynamic is operative, the survey results may underestimate the knowledge of faculty members about the broader curriculum and the degree to which competencies are addressed in other courses.

Yet a third rationale for the lower faculty ratings could result from faculty perspectives on the relationship between the content of an individual course and the total knowledge base in their pharmacy discipline. The time limitations of a 10-week course force professors to choose a subset from the broader subject matter to include in their syllabi, ie, those topics which appear most salient for the students at that stage of the curriculum. Knowing that students have been introduced to only a segment of the knowledge base, a professor might score the delivery of that subject matter relatively low on a survey instrument, and then rate student achievement in a similar fashion.

These alternative explanations for the lower faculty scores, ie, whether differences between faculty member and student scores reflect the structure of the survey instrument, the dynamics of faculty culture, or the “silo mentality” often found between disciplines in academia, reveal the importance of involving faculty members in an evaluation of assessment results. The scores also draw attention to the importance of dialogue about overall program goals and appropriate strategies for addressing competencies in an integrated manner across the curriculum. While these issues appear to be complicated and may make the use of the charts questionable, the usefulness of presenting student and faculty data about a category on the same chart should not be minimized. A large amount of data was captured in this visual display. Moreover, the data helped frame discussions about curricular design and delivery for faculty members and highlighted the need to begin the more detailed process of curricular mapping in order to continue curricular improvement activities.

Tables 3 and 4 depict the impact of changes to 2 specific elements of the curriculum and illustrate how the competencies were used to monitor these interventions and thus document curricular improvements based on program assessment. Table 3 shows the impact of several efforts to bolster the coverage of literature evaluation and communication skills in the curriculum. The first 2 items in Table 3 addressed the skills of literature evaluation and use. The first column of the 2001 and 2003 data for these 2 competencies shows scores below 52%. An entire course in the first year of the curriculum is devoted to teaching literature evaluation skills. Prompted by information from this survey and using corroborating data from other assessment methods, the course was completely revamped for the 2003-2004 academic year. The survey data of first-year students from the 2004 survey showed an increase in the percentages for the delivery and achievement of both these competencies and suggest that the new course resulted in improved outcomes on these competencies.

Based on this instrument and other assessments, communication skills also appeared to be an area of curriculum that needed strengthening after the first survey administration in 2001 which showed flat results for students in all 3 years of the curriculum (refer to Table 3, the 2001 row of item 3). Several efforts were made to increase student exposure to and practice with communication skills. First, a standardized grading rubric was introduced in spring 2003 for use throughout the program. Instructors were encouraged to have students give formal presentations and receive feedback on their performance. Beginning in 2002, third-year students interacted more with patients by providing immunization information and educating patients and caregivers about immunizations. This helped students to build confidence in interacting with the public and practice their interpersonal skills. Reading horizontally across the rows in Table 3 for item 3, the table shows an increasing trend in delivery and achievement scores by year in the program for this category of skills and appears to illustrate the impact of curricular change. The highest scores are reported by fourth-year students in 2004. By reading the table vertically, a slight dip in the scores from 2001 to 2003 can be seen, which may reflect both faculty members’ and students’ adjustment to the new requirements.

Table 4 shows the changes made to the set of competencies representing management skills in the curriculum. The first 7 items, labeled “management set of items 2001,” were used for the 2001 survey, which produced low scores for both delivery and achievement. Follow-up revealed that the faculty members who taught these courses in the curriculum had not been consulted about the wording of these competencies during the instrument design and the wording in the 2001 survey did not represent the material being taught in the classroom. The competencies were revised for 2003 and 2004 and, as shown in Table 4, the respondents scored the revised competencies higher for both delivery and achievement. During the College's self-study, in preparation for accreditation review in 2004, competency statements from the survey instrument were compared with the standards for accreditation. Some of the original management competencies from 2001 that had been removed or revised to more accurately reflect classroom content actually represent content that is required by the accreditation standards. Therefore, the management courses are undergoing another revision that should bring the content more in line with accreditation mandates.

These 2 examples illustrate the dynamic nature of curricular reform, by showing how the results of this work, combined with other data collection instruments, were used to monitor and then change the curriculum, ie, completing the assessment loop.

Limitations.

The overall response rate declined over the 3 administrations (Table 1). Several factors may explain this finding. First, there may have been greater rigor in following the tailored design method for implementing survey instruments46 during the 2001 administration of the survey instrument. Second, no incentive was offered for the 2003 and 2004 administrations, and a shortened follow-up schedule was utilized for the third survey. Some decline in the response rate may reflect response fatigue, ie, the respondents grew tired of filling out the same instrument, as the same groups of faculty members and students were approached for each survey. Fatigue could have also resulted from the length of the survey instrument. In addition to the declining response rate over time, 2 specific demographic characteristics of the study population may limit the generalizability of the results to all colleges or schools of pharmacy. First, OSU has a higher percentage of PhD level faculty (83%) than the national average (46.7%), and second, OSU has a lower percentage of minority students enrolled (5.3%) than the national average (13.9%).47,48

CONCLUSIONS

A detailed review of the use of competency-based program-level assessment based on faculty and student perspectives has yielded several valuable outcomes. First, the development of competency statements that align with accreditation standards and local mission and goals has allowed for comprehensive data collection. Second, these data have been used dynamically as part of an “assessment loop” to modify and improve the professional curriculum for this program. The data appear to have satisfactorily served both curricular improvement and accountability agendas. Third, gathering both faculty members’ and students’ opinions proved valuable. The comprehensive data set reveals the curricular structure in a new way. Item-level analysis of competencies (from the combined student and faculty perspective) offers the possibility for monitoring both delivery and achievement. In addition, it serves as a method for measuring the impact of changes to the curriculum. The entire survey and assessment process discussed here has served as a catalyst for conversations among faculty members about program-level outcomes. It has improved their awareness and understanding of the relationships between program assessment, accreditation, and curricular improvement.

References

- 1. Bennett W. To reclaim a legacy. Washington, DC: National Endowment for the Humanities; 1984.

- 2. Association of American Colleges. Integrity in the college curriculum: A report to the academic community. Washington, DC: Association of American Colleges; 1985.

- 3. National Institute of Education. Involvement in learning: realizing the potential of American higher education. Report of the Study Group on the Conditions of Excellence in American Higher Education. Washington, DC: U.S. Government Printing Office; 1984.

- 4. Boyer C. Transforming the State Role in Undergraduate Education: Time for a Different View. Denver, Co: Education Commission of the States; 1986.

- 5. Boyer E. College: The undergraduate experience in America. Princeton, NJ: Carnegie Foundation for the Advancement of Teaching; 1987.

- 6. Wright BD. Evaluating learning in individual courses. In: Gaff JG, Ratcliff JL, editors. Handbook of the Undergraduate Curriculum: A Comprehensive Guide to Purposes, Structures, Practices, and Change. 1st ed. San Francisco: Jossey-Bass Publishers; 1997.

- 7. Palomba CA, Banta TW. Assessing Student Competence in Accredited Disciplines. Sterling, Va: Stylus Publishing; 2001.

- 8. Lubinescu ES, Ratcliff JL, Gaffney MA. Two continuums collide: accreditation and assessment. In: Lubinescu ES, Ratcliff JL, Gaffney MA, editors. How Accreditation Influences Assessment. San Francisco: Jossey-Bass Publishers; 2001.

- 9. Angelo TA. Doing assessment as if learning matters most. AAHE Bulletin. 1999;51:3–6.

- 10. Maki P. Developing an assessment plan to learn about student learning. J Acad Librarianship. 2002;28:8–13.

- 11. Peterson MW, Einarson MK. What are colleges doing about student assessment? Does it make a difference? J Higher Educ. 2001;72:629–69.

- 12. Banta TW, Lund JP, Black KE, Oblander FW. Assessment in Practice: Putting Principles to Work on College Campuses. San Francisco: Jossey-Bass; 1996.

- 13. Farmer DW, Napieralski EA. Assessing learning in programs. In: Gaff JG, Ratcliff JL, editors. Handbook of the Undergraduate Curriculum: A Comprehensive Guide to Purposes, Structures, Practices, and Change. 1st ed. San Francisco: Jossey-Bass Publishers; 1997.

- 14. Astin AW, Banta TW, Cross P, et al. Nine principles of good practice for assessing student learning. American Association for Higher Education; 1992. Available at: http://www.aahe.org/principl.htm.

- 15. Loacker G, Mentkowski M. Creating a culture where assessment improves learning. In: Making a Difference: Outcomes of a Decade of Assessment in Higher Education. 1st ed. San Francisco: Jossey-Bass; 1993.

- 16. Liston C. Managing quality and standards. Philadelphia: Open University Press; 1999.

- 17. Accreditation Council for Pharmacy Education. Accreditation Standards and Guidelines for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree. Accreditation Council for Pharmacy Education; January 15, 2006. Available at: http://www.acpe-accredit.org/standards/default.asp.

- 18. Draugalis JR, Slack MK, Sauer KA, Haber SL, Vaillancourt RR. Creation and implementation of a learning outcomes document for a doctor of pharmacy curriculum. Am J Pharm Educ. 2002;66:253–60.

- 19. Draugalis JR, Jackson TR. Objective curricular evaluation: applying the Rasch model to a cumulative examination. Am J Pharm Educ. 2004;68 Article 35.

- 20. Mehvar R, Supernaw RB. Outcome assessment in a PharmD program: the Texas Tech experience. Am J Pharm Educ. 2002;66:243–53.

- 21. Jackson TR, Draugalis JR, Slack MK, Zachry WM. Validation of authentic performance assessment: a process suited for Rasch modeling. Am J Pharm Educ. 2002;66:233–43.

- 22. Phillips CR, Chesnut RJ, Rospond RM. The California Critical Thinking Instruments for benchmarking, program assessment, and directing curricular change. Am J Pharm Educ. 2004;68 Article 101.

- 23. Purkerson DL, Mason HL, Chalmers RK, Popovich NG, Scott DS. Evaluating pharmacy student's ability-based educational outcomes using an assessment center approach. Am J Pharm Educ. 1996;60:239–48.

- 24. Purkerson DL, Mason HL, Chalmers RK, Popovich NG, Scott SA. Expansion of ability-based education using an assessment center approach with pharmacists as assessors. Am J Pharm Educ. 1997;61:241–8.

- 25. Mort JR, Messerschmidt KA. Creating an efficient outcome assessment plan for an entry-level PharmD program. Am J Pharm Educ. 2001;65:358–62.

- 26. Carroll NV, Erwin WG, Beaman MA. Practice Patterns of Doctor of Pharmacy Graduates - Educational-Implications. Am J Pharm Educ. 1984;48:125–30.

- 27. Quinones AC, Mason HL. Assessment of pharmacy graduates educational outcomes. Am J Pharm Educ. 1994;58:131–6.

- 28. Sauer BL, Koda-Kimble MA, Herfindal ET, Inciardi JF. Evaluating curricular outcomes by use of a longitudinal alumni survey - influence of gender and residency training. Am J Pharm Educ. 1994;58:16–24.

- 29. Howard PA, Henry DW, Fincham JE. Assessment of graduate outcomes: focus on professional and community activities. Am J Pharm Educ. 1998;62:31–6.

- 30. McCollum M, Cyr T, Criner TM, et al. Implementation of a web-based system for obtaining curricular assessment data. Am J Pharm Educ. 2003;67 Article 80.

- 31. Scott DM, Robinson DH, Augustine SC, Roche EB, Ueda CT. Development of a professional pharmacy outcomes assessment plan based on student abilities and competencies. Am J Pharm Educ. 2002;66:357–64.

- 32. Curtiss FR, Swonger AK. Performance-measures analysis (PMA) as a means of assessing consistency between course objectives and evaluation process. Am J Pharm Educ. 1981;45:47–55.

- 33. Mort JR, Houglum JE. Comparison of faculty's perceived coverage of outcomes: pre-versus post-implementation. Am J Pharm Educ. 1998;62:50–3.

- 34. Kirkpatrick AF, Pugh CB. Assessment of curricular competency outcomes. Am J Pharm Educ. 2001;65:217–24.

- 35. Plaza CM, Draugalis JR, Retterer J, Herrier RN. Curricular evaluation using self-efficacy measurements. Am J Pharm Educ. 2002;66:51–4.

- 36. Koda-Kimble MA, Herfindal ET, Shimomura SK, Adler DS, Bernstein LR. Practice patterns, attitudes, and activities of University of California Pharm.D. graduates. Am J Hosp Pharm. 1985;42:2463–71.

- 37. Mort JR, Houglum JE, Kaatz B. Use of outcomes in the development of an entry level PharmD curriculum. Am J Pharm Educ. 1995;59:327–33.

- 38. Ewell P. Identifying indicators of curricular quality. In: Gaff JG, Ratcliff JL, editors. Handbook of the Undergraduate Curriculum: A Comprehensive Guide to Purposes, Structures, Practices, and Change. 1st ed. San Francisco: Jossey-Bass Publishers; 1997.

- 39. Carnegie Foundation. Carnegie classification of institutions of higher education. Available at: http://www.carnegiefoundation.org/classification/classification.htm.

- 40. College of Pharmacy Faculty. Program development plan for a new graduate professional degree program leading to the Doctor of Pharmacy. Columbus, OH: Ohio State University College of Pharmacy; 1998.

- 41. College of Pharmacy Faculty. Self-study for accreditation. Columbus, OH: Ohio State University College of Pharmacy; 1997.

- 42. American Association of Colleges of Pharmacy. Pharmacy colleges in the United States. Available at: http://www.aacp.org/links/pharmacy_schools.html.

- 43. Fraenkel JR, Wallen NE. How to Design and Evaluate Research in Education. 4th ed. Boston: McGraw Hill; 2000.

- 44. Center for the Advancement of Pharmaceutical Education Advisory Panel on Educational Outcomes. AACP; 1998. Available at: http://www.aacp.org/Resources/resources.html.

- 45. Curriculum Committee. Missions, outcomes, competencies, practice functions, and goals associated with an entry-level Pharm.D. curriculum. Columbus, OH: Ohio State University College of Pharmacy Faculty; 1993.

- 46. Dillman DA. Mail and Internet Surveys: The Tailored Design Method. New York: John Wiley & Sons, Inc; 2000.

- 47. American Association of Colleges of Pharmacy. Profile of pharmacy students, fall 2003. Alexandria, VA; 2003. Available at: http://www.aacp.org/site/page.asp?TRACKID=&VID=1&CID=106&DID=3113.

- 48. American Association of Colleges of Pharmacy. Profile of pharmacy faculty. Alexandria, VA; 2003. Available at: http://www.aacp.org/site/page.asp?TRACKID=&VID=1&CID=105&DID=3112.