Abstract

Objective (1) To analyse trends in the journal impact factor (IF) of seven general medical journals (Ann Intern Med, BMJ, CMAJ, JAMA, Lancet, Med J Aust and N Engl J Med) over 12 years; and (2) to ascertain the views of these journals' past and present Editors on factors that had affected their journals' IFs during their tenure, including direct editorial policies.

Design Retrospective analysis of IF data from ISI Web of Knowledge Journal Citation Reports—Science Edition, 1994 to 2005, and interviews with Editors-in-Chief.

Setting Medical journal publishing.

Participants Ten Editors-in-Chief of the journals, except Med J Aust, who served between 1999 and 2004.

Main outcome measures IFs and component numerator and denominator data for the seven general medical journals (1994 to 2005) were collected. IFs are calculated using the formula: (Citations in year z to articles published in years x and y)/(Number of citable articles published in years x and y), where z is the current year and x and y are the previous two years. Editors' views on factors that had affected their journals' IFs were also obtained.

Results IFs generally rose over the 12-year period, with the N Engl J Med having the highest IF throughout. However, percentage rises in IF relative to the baseline year of 1994 were greatest for CMAJ (about 500%) and JAMA (260%). Numerators for most journals tended to rise over this period, while denominators tended to be stable or to fall, although not always in a linear fashion. Nine of ten eligible editors were interviewed. Possible reasons given for rises in citation counts included: active recruitment of high-impact articles by courting researchers; offering authors better services; boosting the journal's media profile; more careful article selection; and increases in article citations. Most felt that going online had not affected citations. Most had no deliberate policy to publish fewer articles (lowering the IF denominator), which was sometimes the unintended result of other editorial policies. The two Editors who deliberately published fewer articles did so as they realized IFs were important to authors. Concerns about the accuracy of ISI counting for the IF denominator prompted some to routinely check their IF data with ISI. All Editors had mixed feelings about using IFs to evaluate journals and academics, and mentioned the tension between aiming to improve IFs and ‘keeping their constituents [clinicians] happy.’

Conclusions IFs of the journals studied rose in the 12-year period due to rising numerators and/or falling denominators, to varying extents. Journal Editors perceived that this occurred for various reasons, including deliberate editorial practices. The vulnerability of the IF to editorial manipulation and Editors' dissatisfaction with it as the sole measure of journal quality lend weight to the need for complementary measures.

INTRODUCTION

In 1955, Eugene Garfield created the impact factor (IF). It was intended as a means ‘to evaluate the significance of a particular work and its impact on the literature and thinking of the period.’1 Little did he dream that it would become a means to rank journals2 and to evaluate institutions and academics.3,4 The UK Government has suggested that ‘after the 2008 RAE [Research Assessment Exercise], the system for assessing research quality and allocating “quality-related” research funding to universities... will be mainly metrics-based.’5 Moreover, journals often commend their own IFs in advertisements3 targeting readers, subscribers, authors and advertisers, among others. Yet many, including Garfield himself, have warned against misuse of the IF as a surrogate measure of research quality.2,3 Despite this, we found no studies directly exploring Editors' perspectives and policies regarding the IF. We believe such study is vital, as these may dictate what is published, and consequently what is read by health care workers trying to put research into practice.

Thus, we decided to explore the IF phenomenon with two aims:

(1) To review trends in the IFs of selected general medical journals from 1994 to 2005, including several high-impact, prestigious journals held in high general regard; and

(2) To explore what factors these journals' past and present Editors considered had affected their IFs during their tenure, including any direct editorial policies.

METHODS

We purposively selected seven journals from the ‘Medicine, General & Internal’ category of the ISI Web of Knowledge Journal Citation Reports—Science Edition database.6 The journals we selected were as follows: five high-IF journals, namely Annals of Internal Medicine (Ann Intern Med), British Medical Journal (BMJ), Journal of the American Medical Association (JAMA), Lancet, and New England Journal of Medicine (N Engl J Med); our own journal, Medical Journal of Australia (Med J Aust); and that of a country with a socioeconomic and healthcare system comparable to ours, Canadian Medical Association Journal (CMAJ). We focused on high-impact journals as the general paradigm is that these are the journals that many authors aspire to publish in, and that we look to for guidance on best practice, be it medical or editorial. It was also likely that Editors of high-IF journals had already invested some thought in this phenomenon, and we wished to explore whether they believed their editorial policies had contributed to their journals' high IFs. A journal's IF is calculated yearly using citation and publication data from the previous two years. For instance, the IF for 2005 is:

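$$\text{IF}_{2005} = \frac{\text{Citations in 2005 to articles published in 2003 and 2004}}{\text{Number of citable articles published in 2003 and 2004}}$$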

While the numerator count comprises citations to any article published by that journal in the previous two years, the denominator of citable articles comprises research articles and reviews only, and excludes ‘editorials, letters, news items, and meeting abstracts.’6
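The asymmetry between numerator and denominator can be made concrete with a minimal sketch. The function name and all counts below are hypothetical and chosen only for illustration; they are not data from any of the journals studied.

```python
def impact_factor(citations_to_all_items: int, citable_items: int) -> float:
    """Impact factor for year z.

    citations_to_all_items: citations in year z to anything the journal
        published in the previous two years (numerator).
    citable_items: research articles and reviews published in those two
        years (denominator); editorials, letters, news items and meeting
        abstracts are excluded.
    """
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations_to_all_items / citable_items


# Hypothetical journal: 10 000 citations to items from the two prior years.
baseline = impact_factor(10_000, 400)        # 400 citable items
fewer_citable = impact_factor(10_000, 320)   # same citations, 20% fewer citable items

print(baseline, fewer_citable)
```

With the citation count held constant, a 20% cut in citable items raises the hypothetical IF from 25.0 to 31.25, which is why changes in the denominator matter as much as changes in citations.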

Our study comprised a quantitative and a qualitative arm. The quantitative analysis was exploratory in nature, consisted primarily of descriptive assessments of the data, and was intended to generate issues for the qualitative phase of the study.

Quantitative study of IF statistics

From Journal Citation Reports,6 we collected yearly data on IFs, citations and citeable article counts from 1994 to 2005 for the seven selected journals. Absolute and relative annual changes were calculated using 1994 as the base year. We drew inferences (necessarily broad) from these simple observational data to identify issues for exploration in the qualitative phase.
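As an illustration of this calculation, the following sketch computes absolute and percentage changes relative to a base year. The function names and the example series are assumptions made for illustration; the values are not the study data.

```python
def absolute_change(series: dict[int, float], base_year: int = 1994) -> dict[int, float]:
    """Difference between each year's value and the base-year value."""
    base = series[base_year]
    return {year: value - base for year, value in series.items()}


def relative_change(series: dict[int, float], base_year: int = 1994) -> dict[int, float]:
    """Percentage change of each year's value relative to the base year."""
    base = series[base_year]
    return {year: 100.0 * (value - base) / base for year, value in series.items()}


# Hypothetical IF series for one journal (illustrative values only).
example_if = {1994: 2.0, 2000: 3.0, 2005: 5.0}

print(absolute_change(example_if))
print(relative_change(example_if))
```

The same two functions can be applied unchanged to citation counts and citable article counts, so that all three series are expressed on a common percentage scale relative to 1994.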

Qualitative study of interviews with Editors

We e-mailed the ten Editors-in-Chief of these journals (except Med J Aust) who had served between 1999 and 2004 to seek a telephone interview regarding influences on their journal's IF. If no response to the first e-mail was received within two weeks, a second was sent. Once each Editor agreed to be interviewed, he or she was sent the relevant journal's yearly IFs, citation and article counts from 1994 to 2003 (2004/5 data being unavailable at that time), and our prime interview question: ‘What factor/s do you believe contributed to the rise in your impact factor, and how?’ A telephone interview was then scheduled.

Interviews were conducted from November 2004 to February 2005 by two of the authors (MC and MVDW), around the above question, including any deliberate editorial strategies. Detailed notes of the interviews were taken and analysed manually by MC. Data analysis was conducted concurrently with data collection, to enable later interviews to build on and explore further our understanding of Editors' views. Using template analysis, a coding template was constructed, comprising codes to label emergent themes (including contrasting views) that were identified by careful reading and re-reading of interview data, and constant comparison.7 The template was modified as new themes emerged or previous themes were discarded. We subsequently selected direct quotes to illustrate each theme.

RESULTS

Quantitative analysis

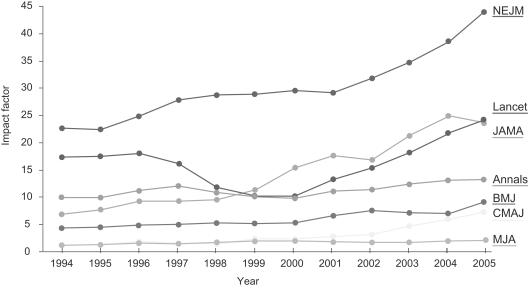

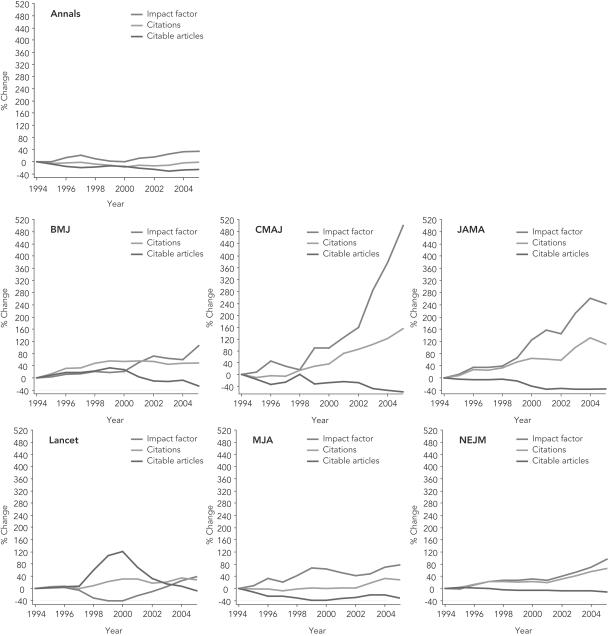

All seven IFs rose from 1994 to 2005 (Figure 1), the N Engl J Med having the highest IF throughout. However, percentage increases in IF relative to the baseline year of 1994 were greatest for CMAJ (about 500%) and JAMA (260%) (Figure 2). Citation counts tended to rise, while citeable article counts tended to be stable or to fall (Figure 2). In some cases, these changes were non-linear, as with the Lancet, which clearly displayed troughs and peaks between 1998 and 2001. This prompted us to explore possible influences on the IF by distinguishing those on citation counts from those on citeable article counts, which were more likely to be under editorial control.

Figure 1.

Trends in impact factors for seven general medical journals, 1994-2005

Figure 2.

Percentage change in impact factor, citation count and citable article count, relative to 1994, for seven general medical journals from 1994 to 2005.

Interviews

Nine of the ten Editors-in-Chief were contactable, and all of these agreed to be interviewed. These comprised all five current Editors (Ann Intern Med, CMAJ, JAMA, Lancet and N Engl J Med), one who had resigned just before the interview period (BMJ), and three of four other former Editors (Ann Intern Med, JAMA, N Engl J Med). Guided by our quantitative analysis, our initial coding template assigned first-level codes to the proffered reasons for IF rises as (1) factors influencing citation counts and (2) those influencing citeable article counts. As specific factors were identified through the interview process, these were assigned second-level, and in some cases further third-level codes. A separate first-level theme emerged as the interviews progressed: Editors' concerns about the IF as a phenomenon in itself. Thematic saturation was achieved by the final interview, when no new reported influences on the IF or new issues surrounding it were identified.

Editors gave the following possible specific reasons for their IF rises (see Table 1 for representative quotes):

Table 1.

Possible reasons given by journal Editors for rising impact factors: illustrative quotes from interviews

| FACTORS INFLUENCING CITATION COUNTS |

| Active recruitment of high-impact papers |

| Courting researchers |

| `Our IF increased because I hustled for key papers—I talked to people I'd known for years and who hadn't previously submitted to [our journal]... I had a cadre of people I knew personally who told me what was hot; I would call researchers to ask why they never sent anything good to [us]—they were amazed that I would call. One author sent us a `test' paper, a secondary [data] analysis, and found working with us such a good experience that they've just sent us their two hottest new articles.' |

| `I deliberately cultivated relationships with [national research institutions], personally met them once a year, told them why they should publish in [our journal] rather than our competitors'. We had greater publicity etc... [we made ourselves] approachable... authors found us easy to talk to, they were amazed that our Editors answered the phone, they could ring and find out if we'd be interested in an article. We made ourselves warm and fuzzy.' |

| `We vigorously recruit high-impact papers with an aggressive approach to getting new research; e.g. during the XXX outbreak, I rang my ex-trainees [involved in the outbreak] to ask for a case series.' |

| Hiring editorial staff |

| `We hired relatively young, fresh Professors or Assistant Professors about to be Professors, with fire in their belly... to sniff out the best research and bring it to [us].' |

| Improving services to authors |

| `We introduced fast-track publication... for high impact papers of clinical, public health or news significance authors... believe it's the most important thing [our journal] has done in my time as Editor. It's transformed our relationship with authors.' |

| Finding niches |

| `We don't get the big trials but have niche products... mainly due to our exclusive partnership with X [institution] since I became Editor.' |

| Media promotion |

| `I consider which articles will get media coverage in making publication decisions.' |

| `We gave lots of press releases and conferences. I cultivated reporters and didn't betray them—I only gave them good stuff which they could trust; we had weekly... news releases... authors loved this! They loved being on X [television station], Y [newspaper] etc.' |

| `We're all over TV or the media... at least one article is mentioned in Z [weekly newspaper science feature] so there might be a higher likelihood that authors want to submit [to us] for publicity.' |

| Article selection |

| `We try to find papers that will change medicine in 100 years and these may be RCTs on the benefits of ACE inhibitors or molecular genetics changing cancer treatment.' |

| `We actively decided to make our acceptance criterion those articles that we felt would make a contribution to the international literature. Now our basis for rejection is often `I don't think this paper is going to be cited.' |

| FACTORS INFLUENCING CITEABLE ARTICLE COUNTS |

| Publication of fewer citeable articles |

| `Our advisory board and regular contributors... thought [a falling impact factor would be] seriously bad, affect tenure commitments etc... so we decided to cut down material published.' |

| Article classification by ISI |

| `Every year, we have a formal conversation with ISI before their data are published... [When] the journal was re-designed... we had a chat with ISI to ensure they understood what's eligible for counts; we double-check ISI figures by estimating citable items ourselves then checking with ISI—there's not much variance now... We take on trust that the numerator is correct. We now know that [other] publishers do this with ISI—we'd been slightly naïve before.' |

Factors influencing citation counts

Active recruitment of ‘high-impact’ articles

- Courting researchers. Some Editors deliberately cultivated major research institutions and personally approached lead investigators of major research projects (Table 1). For another Editor, such recruitment was occasional rather than systematic: he relied instead on the journal name and long history of quality to get the best trials.

- Hiring editorial staff. Several Editors found having good editorial staff necessary for journal promotion, and had both employed and carefully trained more. Experts in particular fields were sometimes contracted as Editors to advise on what was ‘hot’, to attend research presentations, and to commission ensuing papers at these meetings (Table 1). Similarly, such Editors were also encouraged to become members of various research advisory panels.

Improving ‘services’ to authors. Other means to attract authors included speeding up turn-around times, introducing fast track publication for potential high impact papers (e.g. papers with great public health significance), and timing publication of papers with their presentation at research meetings (Table 1).

Finding niches. Some Editors looked for a ‘niche’ in the market, a particular area of interest to attract academics in that field to publish with the journal (Table 1). For one Editor, however, such an attempt didn't get off the ground, as it did not seem to generate much general interest, while the submitted papers were methodologically problematic and unlikely to be cited.

Media promotion. Boosting the journal's media profile was thought to attract first-class authors, and therefore citable articles. Many Editors actively promoted their journals to the popular media, for instance by putting great effort into media releases and media conferences, and by cultivating reporters (Table 1). On the other hand, two Editors stated that they didn't seek to court media attention (e.g. with media releases or deliberate acceptance of articles on this basis). This was to avoid being misquoted or being seen to be ‘shopping’ for publicity.

Article selection. Careful article selection based on the quality of papers was also considered crucial to citation. Thus, articles were scrutinised for originality, interest and substantive contribution to the international literature (Table 1). To one Editor, other selection criteria included topics of interest to the general public, sometimes collated in theme issues (to attract special articles on important subjects, create impact, provide data that could change government policy, and attract media coverage). However, the experience was less positive for another Editor, who found that a call for special papers for theme issues did not yield quality articles, prompting him to stop publishing such issues. An Editor whose journal was part of a larger group of journals found that fostering collegiality within the group was helpful in ensuring that the best submissions were published in the most suitable journals within the group.

Going online. Only one Editor stated that going online with free full access had increased his journal's IF, which had risen faster than those of specialty journals in the same publishing group that were not fully online. Other Editors doubted that going online (whether with free full or limited access) had made a difference to their citations, although all felt that it was an important step to take.

Non-editorial policy. Two Editors pointed out that journal citations are generally rising anyway, and that this could be due to more journals being included in the ISI database, and/or more citations being made in articles.

Factors influencing citeable article counts

Publication of fewer citeable articles. While IF denominators generally fell, thereby increasing IFs, most Editors denied having deliberate policies to publish fewer citeable articles. They attributed the fall to Editors generally being ‘choosier’ about what they published, and to the trend for publishing longer research articles so that fewer articles ‘fitted’ in each issue. In one instance, it was also the unintended result of redesigning journal layout. However, two Editors had deliberately reduced the number of citeable articles (such as case reports), as they realised IFs were an important consideration for authors in deciding where to submit their articles (Table 1).

Article classification by ISI. While there was general acceptance that citation counts by ISI were probably correct, there were concerns about the accuracy of ISI counting for the IF denominator, as articles were sometimes misclassified as citeable. Two Editors had noted such inaccuracies for their journals, prompting them to routinely check the coding of their IF denominator data each year; one of these also changed the journal's article categories to make misclassification less likely (Table 1). On the other hand, three Editors stated that they deliberately stayed at arm's length from ISI, not wanting to be seen to be ‘too close’.

Editors' attitudes toward IF

Mixed feelings and concerns. Although all Editors were pleased about their journals' rising IFs, they expressed mixed feelings toward the IF phenomenon (Table 2). Most stated that the IF meant more to researchers than to clinicians. It was also pointed out that the IF favoured English-language and US journals, and could be an ‘uneven playing field’ that was ‘open to abuse’, as the denominator could be manipulated internally. There were misgivings about the emphasis placed on IFs in academic culture, with publication in high-IF journals often used as a surrogate index of academic performance (Table 2).

Editorial effort. The extent of interest Editors expressed in their own journals' IF ranged from ‘not taking it that seriously’, through ‘aiming for a robust but not overwhelming IF’, to seeking high IFs as a means to an end (attracting attention to the journal's broader message). Almost all felt, however, that too much focus on this might alienate their clinical readership (Table 2). Two Editors remarked that keeping their clinical ‘constituents’ interested was important, not just as the core readership of general medical journals, but because this affected advertising revenue (predicated on clinicians reading the journal). Efforts to appeal to clinicians included publishing articles devoted to clinical interests, clinical reviews (that served a dual purpose, being both considered authoritative by clinicians and citable) and articles on the humanities, and seeking suggestions for review topics at clinical meetings. In contrast, one Editor felt that it was more important to ‘give readers what they need, not necessarily what they want... I didn't want to dumb down the journal.’

Alternatives to the IF. Most Editors would not be unhappy if the IF no longer existed but felt that it served a purpose, was easily measurable, was objectively calculated and would be difficult to replace. One discussed the necessity to educate others about better use of the IF, and several suggested devising other criteria for judging journals that complemented, rather than replaced, the IF. These included citations in Cochrane reviews, guidelines or textbooks; measures of readership ‘such as Letters to the Editor, rapid responses [that tell us] how much readers engage with the journal’; and measures of clinical impact or how much papers ‘advance the health of community’, such as that devised by the Royal Netherlands Academy of Arts and Sciences.

Table 2.

Journal Editors' attitudes to the impact factor: illustrative quotes from interviews

| EDITORS' ATTITUDES |

| Mixed feelings |

| `Having our IF go up is a measure of success—having articles more people want to read.' |

| `It gives some indication of [quality] even if it is imperfect, like democracy.' |

| `I have a mild attraction-hate relationship with the IF... I wouldn't mourn it if it died!' |

| Concerns about emphasis on IFs in academia |

| `The IF is attractive to authors as they are judged by the IF of journals in which they publish.' |

| `Researchers do use it because they want their research cited and the IF tallied into grant applications, although not in a codified fashion, and for academic promotion.' |

| `Using IF as a surrogate for the impact of journals is illogical. Its inventor [Eugene Garfield] would agree. If the whole research enterprise were driven by the IF, which tends to favour basic science papers, there would be more molecular biology, less health sciences research... the IF should be a general guide to judgement although its three decimal points make people think it has a precision that it doesn't have.' |

| Concerns that IFs don't mean much to clinicians |

| `The IF is only one of many ways to judge a publication. The IF measures how well the journal is used to help researchers, not doctors, communicate... I've found little correlation between articles that changed the world and number of citations to them...' |

| `What may be important to practising physicians may have no impact on IF.' |

| `Most clinicians are not concerned by IF, so if we got too concerned about it, our relationship with them would dwindle.' |

| `The good of medicine and the good of public health are badly damaged by the IF culture because it stops journals accurately reflecting the burden of disease priorities—an issue of economics and perverse incentives: to be successful in getting a message across, you have to be read by the rich—hence the IF.' |

| `I tried to grow IF and personally went after it, but then became worried that this would change the nature of the journal and focus from doctors to researchers... I feel very strongly that we can give readers what they want to know and want to read; i.e. aim for the middle ground with a mix from the top down and the bottom up.' |

DISCUSSION

This is the first study we are aware of that explores Editors' views on what affected IFs during their tenure. From 1994 to 2005, IFs of these seven general medical journals rose, mostly due to rising numerators and falling denominators. We postulated that these component data might be malleable, and our qualitative exploration showed that Editors believed this to be so, with some Editors going to great lengths to improve their IFs.

Editorial influences on the IF

These influences were believed to include active recruitment of researchers, accelerating publication, careful article selection and media promotion. Our study cannot show that these policies directly affected citations, but they did include factors known to favour higher citation counts, such as publishing more review articles.3 Media promotion may not only attract authors keen for wider publicity of their research, but may also influence citations: a 1991 study showed that articles from the N Engl J Med publicized in the New York Times received more scientific citations than articles not so publicized; this effect was not apparent for articles published during a strike of the Times when an ‘edition of record’ was prepared but not distributed.8 A more recent study has also demonstrated that an article's perceived newsworthiness is one of the strongest predictors of its citation, along with sample size and use of a control group, although the publishing journal's IF was a stronger predictor still.9

All but one interviewed Editor believed that going online was unlikely to have influenced their citation count markedly. In the field of computer science, where researchers rely heavily on articles freely available online and not published by journals, citation counts appear to correlate with online availability.10 However, it is less clear whether this holds for medical scientists, who appear more reliant on print than electronic journals.11 The timing and extent of online availability of journals in our study varied over our period of study, and there were insufficient temporal data to show any real differences in IF following web access (analysis not shown). Such analysis in future would be of interest and could include other variables that may be more strongly affected, such as immediacy of citations and validated hit counts, for which most of our interviewed Editors did not have formal data.

Whether intentional or not, changes in citeable article counts (the IF denominator) can change IFs markedly and are subject to editorial policy. Unintended factors included greater editorial selectivity and publication of lengthier research papers (so that fewer fitted into each issue). However, to render their journal IFs more attractive to potential authors, two Editors deliberately published fewer citable articles. It has also previously been noted that when the Lancet began publishing research letters in 1997, their inclusion in its citable article count led to a fall in its IF in 1998 and 1999.12

Misclassification of articles as citable by ISI, and hence inaccurate calculations of the IF, have also been noted for JAMA,12 CMAJ,13 Nature,14 and The Lancet.15 Notably, recategorization of articles and negotiation with ISI about categories for consideration as citable articles (or not), were conducted by some Editors with an eye on their IFs.

Non-editorial influences

Interviewed Editors expressed the belief that more articles are being cited, even as more journals are included in the ISI database. The data appear to confirm this: from 2000 to 2005, the number of journals in the JCR Science Edition rose by approximately 6% overall, from 5686 to 6008.6 In that period, the total number of citations to internal and general medicine journals rose yearly, with an overall increase of approximately 22% (from 570 475 to 695 155), while the total number of articles published dropped by approximately 11% (from 14 103 to 12 600).
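The approximate percentages quoted above follow directly from the reported counts. The short check below is a sketch only; the helper name `pct_change` is ours, and the inputs are the figures given in the preceding paragraph.

```python
def pct_change(start: float, end: float) -> float:
    """Percentage change from start to end (negative values indicate a fall)."""
    return 100.0 * (end - start) / start


# Figures as reported in the text for 2000 and 2005.
journals = pct_change(5686, 6008)          # journals in JCR Science Edition
citations = pct_change(570_475, 695_155)   # citations to general and internal medicine journals
articles = pct_change(14_103, 12_600)      # articles published in those journals

print(round(journals), round(citations), round(articles))
```

Rounded to whole numbers, these come to about 6%, 22% and -11%, in line with the values stated in the text.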

IFs as measures of quality

While our interviewed Editors were generally pleased at their journal's IF improvement over time, they were uneasy about its use as a measure of journal quality or as a means of keeping their clinical readers engaged. They are not alone in their concerns. The two-year time span of the IF is known to favour dynamic research fields such as the basic sciences, rather than clinical medicine or public health.2,3 The journal IF (which includes total citations to the journal) is not necessarily representative of citations to individual articles, as these vary widely.3,16 Garfield himself states that ‘of 38 million items cited from 1900-2005, only 0.5% were cited more than 200 times. Half [of the published articles] were not cited at all...’17 The most-cited 50% of papers published in Australian and New Zealand Journal of Psychiatry (Aust NZ J Psychiatry) and in the Canadian Journal of Psychiatry (Can J Psychiatry) between 1990 and 1995 account for 94% of all citations to these publications.18

Citation counts do bear some correlation with quality14 and proposed hierarchies of evidence.19 Journal citation counts in the US Preventive Services Task Force guidelines were found to correlate with their IFs.20 Yet this study showed that low IF journals were also cited frequently as providing important evidence. There are other disadvantages to relying solely on citation counts as quality indicators: they do not reflect the context of the citation, as a paper may be much cited for being misleading or erroneous;14 they favour journals that publish many review articles; and they are subject to author biases (e.g. the tendency to cite others in the same discipline).21

Moreover, citation counts as used in the IF calculation are subject to other biases. Citations are counted for all items in a journal, but denominators only include specific items; thus the IF favours journals that publish many articles contributing to the numerator but not the denominator (e.g. letters to the Editor).3 One study has shown that when IF numerators were corrected using citations to source items alone, 32% of 152 general medical journals dropped by at least three places in ranking.22 Bias may also arise from author or journal self-citation (e.g. a fifth of citations in the diabetes literature have been shown to be author self-citations unrelated to the quality of the original article);23 Editors are also known to have asked authors to add citations to their journals.24

Some authors have voiced concerns about the dominance of English language and US publications in the ISI database as possible sources of bias,3 but author biases may be more influential: like native English speakers, authors in countries where English is not the first language prefer to publish in English (possibly as such articles have a higher impact than those in their native tongue);25,26 they also prefer to cite English-language articles, even in non-English language publications.25

Alternatives to the IF

Concerns raised in our study and in the literature should be an impetus to seek alternative or complementary measures for journal impact or quality. Several initiatives to evaluate individual research papers have arisen, essentially based on peer review. These include BMJ Updates (a joint initiative with McMaster University, whereby articles from over 100 clinical journals are selected on clinical relevance and scientific criteria, then rated on relevance and newsworthiness);27 Biomed Central Faculty of 1000 (whereby evaluators identify the most interesting papers they have read and rate these as ‘recommended’, ‘must read’ or ‘exceptional’);28 and a similar yearly initiative by the Aust NZ J Psychiatry (whereby articles considered to have contributed most to knowledge and future research in psychiatry that year are identified).18 The Royal Netherlands Academy of Arts & Sciences is exploring indicators for the societal impact of applied health research that not only include citations in journals, Cochrane reviews and policy documents, but also output as health care technologies, services and publicity.29

However, finding objective, reproducible and comprehensive indicators of journal quality that can be regularly updated is more difficult. Such indicators are most likely to complement, rather than substitute for, the journal IF. They may comprise composite, weighted scores that could include citations in clinically important publications such as evidence-based guidelines (as well as journals), and the ‘performance’ of journal articles in initiatives such as BMJ Updates. Other suggested bibliometric measures ‘focus more on the particular choice of publication period and citation window, the calculation of separate indicators for different document types, the development of “relative”, field-normalized measures... supplementary measures and clarification of the technical correctness of the processed indicators.’31 As an example of the last, IFs using citation counts corrected for substantive items as in the denominator are now available in another Thomson Scientific database, Journal Performance Indicators (http://scientific.thomson.com/products/jpi/).17

It is clear that we have a long way to go in the quest for better measures of journal impact. However, we join the call for all with a stake in the appropriate evaluation of research publications to increase their efforts in this quest.

Study limitations

Our sample was purposive and not intended to be representative of all general medical journals. We focused on several high-impact journals in order to determine whether they had specific strategies that might explain their ‘success’ in the IF stakes. Our quantitative analysis of simple observational data was exploratory in nature, generating issues for the qualitative phase of the study, and we chose not to employ formal tests of hypotheses.

The interview question and prompts were validated through triangulation, using data from our quantitative study and preliminary literature survey. Respondent validation was also employed: additional issues raised by earlier interviewees were ‘fed back’ to subsequent interviewees for their responses on whether these were reasonable; any contradictory responses were further explored in the interviews and used to refine our analysis.31 Interviews were not audio-taped, as we felt this might inhibit responses, particularly to sensitive issues such as editorial manipulation of the IF. Due to resource constraints, independent analysis of the qualitative data by a second investigator was not possible, but we attempted to enhance reliability with meticulous note-taking of interviews and documentation of the analysis.

Competing interests MC was Deputy Editor of the Medical Journal of Australia when this research was conducted and subsequently presented at the 5th International Congress on Peer Review and Biomedical Publication in September 2005. She is currently an Associate Editor with the BMJ. MVDW is Editor of the Medical Journal of Australia. EV has no competing interests to declare.

Funding None.

Ethical approval Not required.

Guarantor Mabel Chew.

Acknowledgments Our thanks to Joanne Elliot for assistance with data collection and to all Editors who participated.

References

- 1. Garfield E. Citation indexes to science: a new dimension in documentation through association of ideas. Science 1955;122: 108-11

- 2. Garfield E. How can impact factors be improved? BMJ 1996;313: 411-3

- 3. Seglen PO. Why the impact factor of journals should not be used for evaluating research. BMJ 1997;314: 498-502

- 4. Abbasi K. Let's dump impact factors. BMJ 2004;329

- 5. Science and innovation investment framework 2004-2014: next steps. Available at http://www.hm-treasury.gov.uk/media/1E1/5E/bud06_science_332.pdf (accessed 27/01/2007)

- 6. ISI Web of Knowledge. Journal Citation Reports. Available at http://isiknowledge.com (accessed 27/01/2007)

- 7. King N. Template Analysis. Available at http://www.hud.ac.uk/hhs/research/template_analysis/intro.htm (accessed 27/01/2007)

- 8. Phillips DP, Kanter EJ, Bednarczyk B, Tastad PL. Importance of the lay press in the transmission of medical knowledge to the scientific community. N Engl J Med 1991;325: 1180-3

- 9. Callaham M, Wears RL, Weber E. Journal prestige, publication bias, and other characteristics associated with citation of published studies in peer-reviewed journals. JAMA 2002;287: 2847-50

- 10. Lawrence S. Free online availability substantially increases a paper's impact. Nature 2001;411: 521

- 11. Tenopir C, King DW, Bush A. Medical faculty's use of print and electronic journals: changes over time and in comparison with scientists. J Med Libr Assoc 2004;92: 233-41

- 12. Joseph KS. Quality of impact factors of general medical journals. BMJ 2003;326: 283

- 13. Joseph KS, Hoey J. CMAJ's impact factor: room for recalculation. CMAJ 1999;161: 977-8

- 14. [No authors listed.] Errors in citation statistics. Nature 2002;415: 101

- 15. Hopkins KD, Gollogly L, Ogden S, Horton R. Strange results mean it's worth checking ISI data. Nature 2002;415: 732

- 16. Opthof T. Sense and nonsense about the impact factor. Cardiovasc Res 1997;33: 1-7

- 17. Garfield E. The history and meaning of the journal impact factor. JAMA 2006;295: 90-3

- 18. Walter G, Bloch S, Hunt G, Fisher K. Counting on citations: a flawed way to measure quality. Med J Aust 2003;178: 280-1

- 19. Patsopoulos NA, Analatos AA, Ioannidis JP. Relative citation impact of various study designs in the health sciences. JAMA 2005;293: 2362-6

- 20. Nakayama T, Fukui T, Fukuhara S, et al. Comparison between impact factors and citations in evidence-based practice guidelines. JAMA 2003;290: 755-6

- 21. Joyce J, Rabe-Hesketh S, Wessely S. Reviewing the reviews: the example of chronic fatigue syndrome. JAMA 1998;280: 264-6

- 22. Moed HF, van Leeuwen TN. Impact factors can mislead. Nature 1996;381: 186

- 23. Gami AS, Montori VM, Wilczynski NL, Haynes RB. Author self-citation in the diabetes literature. CMAJ 2004;170: 1925-7

- 24. Smith R. Journal accused of manipulating impact factor. BMJ 1997;314: 461

- 25. Garfield E, Welljams-Dorof A. Language use in international research: a citation analysis. Ann Am Acad Pol Soc Sci 1990;511: 10-24

- 26. Van Leeuwen TN, Moed HF, Tijssen RW, et al. Language biases in the coverage of the Science Citation Index and its consequences for international comparisons of national research performance. Scientometrics 2001;51: 335-46

- 27. BMJ Updates. Available at http://bmjupdates.mcmaster.ca (accessed 27/01/2007)

- 28. Biology Reports Ltd: Faculty of 1000. What is Faculty of 1000? London: BioMed Central. Available at http://www.f1000biology.com/about/system (accessed 27/01/2007)

- 29. Council for Medical Sciences, Royal Netherlands Academy of Arts and Sciences. The Societal Impact of Applied Health Research: Towards A Quality Assessment System. Available at http://www.knaw.nl/publicaties/pdf/20021098.pdf (accessed 27/01/2007)

- 30. Glänzel W, Moed HF. Journal impact measures in bibliometric research. Scientometrics 2002;53: 171-93

- 31. Mays N, Pope C. Assessing quality in qualitative research. BMJ 2000;320: 50-2