Abstract

Does our ability to visually identify everyday objects rely solely on access to information about their appearance or on a more distributed representation incorporating other object properties? Using functional magnetic resonance imaging, we addressed this question by having subjects visually match pictures of novel objects before and after extensive training to use these objects to perform specific tool-like tasks. After training, neural activity emerged in regions associated with the motion (left middle temporal gyrus) and manipulation (left intraparietal sulcus and premotor cortex) of common tools, whereas activity became more focal and selective in regions representing their visual appearance (fusiform gyrus). These findings indicate that this distributed network is automatically engaged in support of object identification. Moreover, the regions included in this network mirror those active when subjects retrieve information about tools and their properties, suggesting that, as a result of training, these previously novel objects have attained the conceptual status of “tools.”

Keywords: object recognition, neural plasticity, fMRI, tools, concepts, temporal lobes, parietal lobes, premotor cortex

Introduction

Every day we are confronted with objects that we have never seen before, yet we are able to identify them as members of a particular category nearly instantaneously and without effort (e.g., as a dog or a hammer). This phenomenon underscores 2 fundamental characteristics of visual object perception: first, that perception requires access to stored information, and second, that this information is retrieved automatically whenever we attend to an object. However, the nature of these memory representations has not been adequately characterized. Neurophysiological recording studies in awake, behaving monkeys have revealed experience-dependent changes in the response properties of neurons in inferior temporal cortex as long-term object memories are formed (Erickson and others 2000; Baker and others 2002). For example, as novel visual objects become familiar through perceptual or discrimination training, inferior temporal neurons become more selective for learned, relative to novel, stimuli (Baker and others 2002), and neurons in close proximity to one another develop similar response preferences (Erickson and others 2000). Although neuropsychological and functional brain-imaging studies in humans have confirmed the importance of the temporal lobes in object memory, they also indicate that these memories are not stored in a single location. Rather, they are distributed throughout different regions of the cortex, and for certain broadly defined object categories, such as animate objects and tools, are represented in at least partially distinct neural circuitry (for review, see Martin and Chao 2001; Capitani and others 2003). Moreover, these category-related neural circuits appear to be organized according to sensory- and motor-related properties, consistent with the notion that these memory representations are grounded in perception and action (Warrington and McCarthy 1987; Damasio 1990; Martin 1998; Barsalou 1999).

In support of this view are findings that, unlike tasks concerning animate objects, perceptual and conceptual tasks concerning tools activate a network of regions presumed to store information about what tools look like (the medial aspect of the fusiform gyrus), how they move (the left middle temporal gyrus), and how they are grasped and manipulated (left posterior parietal and premotor cortices) (Chao and others 1999, 2002; Chao and Martin 2000; Jeannerod 2001; Martin and Chao 2001; Beauchamp and others 2002, 2003; Handy and others 2003; Kellenbach and others 2003; Emmorey and others 2004). Thus, object concepts are thought to be embodied in the sense that information about the properties associated with an object’s appearance and use is represented within the sensory and motor systems that were active when that information was acquired (Martin 1998; Barsalou 1999). If this is so, it should be possible to elicit activity within this system de novo when subjects acquire functional information about objects that have no preexisting memory representation. More specifically, experience manipulating novel objects to perform specific functions should lead to enhanced activity in regions associated with their appearance and use when the once-novel objects are later encountered.

To test this hypothesis, we designed 2 sets of novel objects (16 per set) bearing no resemblance to real objects (Fig. 1). Each object was designed to perform a specific functional task, and subjects were trained on these tasks using one set of the novel objects. Each subject participated in 2 identical functional magnetic resonance imaging (fMRI) sessions, one prior to and one after training. During scanning, subjects performed a visual object-matching task that required them to decide whether 2 pictures were of the same novel object photographed from different views, or of different objects (Fig. 2A,B). This design allowed us to directly evaluate learning-related changes in neural activity by contrasting object matching before and after subjects acquired information about object functions.

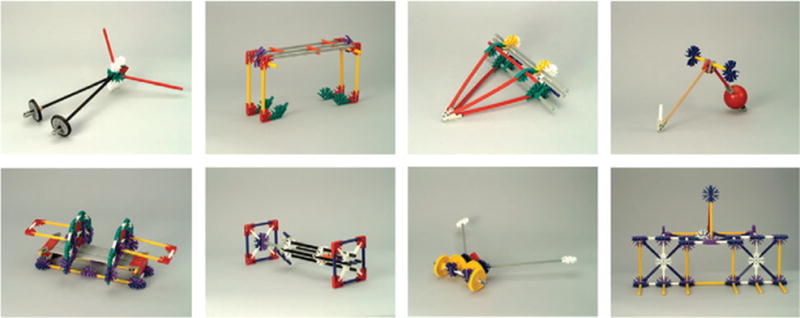

Figure 1.

Examples of novel objects. Thirty-two different objects were created for study. Across three 1.5-h training sessions, subjects performed timed trials of functional tasks using 16 of the objects, with sets counterbalanced across subjects.

Figure 2.

Sample trials for the perceptual matching task. (A, B) During object-matching blocks, subjects indicated for each stimulus pair whether the 2 photographs were of the same object photographed from different views (A) or of two different objects (B). (C, D) During blocks of scrambled image matching, subjects indicated whether each pair depicted identical scrambled images (C) or 2 different scrambled images (D). (E) Increased efficiency in using the novel objects to perform functional tasks during the training sessions. The average time to complete each training trial for all objects in the training set is plotted along the y axis. (F) Reaction times during functional brain scanning for matching to-be-trained (or trained) (T) and not-to-be-trained (or not-trained) (NT) novel objects (red bars) and matching scrambled images of the T and NT novel objects (white bars) prior to and after the training sessions. Error bars represent standard error of the mean.

Materials and Methods

Subjects

Twelve right-handed individuals participated in the fMRI study (6 female, mean age = 29 years, range 21–39; mean verbal intelligence quotient as estimated by the National Adult Reading Test = 117, range 109–128). Informed consent was obtained in writing under an approved National Institute of Mental Health protocol.

Stimuli and Experimental Design

Thirty-two novel objects were created using K’NEX™, a children’s construction toy (see Fig. 1 for examples of the novel objects). Each object was designed to perform 1 of 8 functions (lifting, pushing, swinging, crushing, separating, scattering, tugging, and poking), with 4 exemplars for each functional type. The 32 objects were divided into 2 sets of 16 with the stipulation that there be no functional overlap between sets. Each object was photographed from 5 different camera angles, and the resulting 160 photographs were each seen 4 times over the course of each scanning session. Phase-scrambled versions of these photographs served as control stimuli during scanning (Fig. 2C,D).

Prior to the imaging study, we collected ratings for the novel objects from a separate group of subjects (N = 32) who rated color photographs of each object for the degree to which it resembled real objects on a 7-point scale (1 = very much, 7 = not at all) and subsequently provided the name of any real object each novel object resembled. The most common response was “nothing” (46.1% of all responses). Among actual labels, the most common were types of buildings or vehicles, or their parts. Names of specific tools or other manipulable objects (e.g., “a toy”) or of biological objects (e.g., an animal) were rarely given (7.5% and 2.7% of the responses, respectively).

The functional training tasks required subjects to use each object to manipulate a set of small items (e.g., paper cups, ping-pong balls, wooden blocks, buttons) in a specified manner (e.g., lifting wooden blocks and placing them in their specific locations in a form board, crushing paper cups, lifting items and transferring them between containers, pulling or pushing objects along a specific path). Most of the objects had movable parts that subjects manipulated in order to perform the tasks. Subjects were trained on one set of 16 novel objects, with sets counterbalanced between subjects. During each of 3 training sessions (lasting approximately 1.5 h each), the experimenter demonstrated the function of each object in the training set and timed subjects as they performed the functional tasks. Once this procedure had been completed for every object in the training set, a second timed trial was conducted with each object, yielding 6 timed trials with each object across the 3 training sessions. Each subject participated in one magnetic resonance imaging (MRI) scanning session prior to training and an identical scanning session after training. Training sessions were separated by 24–48 h, and scanning sessions were separated by approximately 10 days.

During scanning, subjects performed an object-matching task while viewing pairs of object pictures. Subjects pressed 1 of 2 buttons (held in the left hand) if the pictures were of the same object photographed from different views and the other button if the pictures were of 2 different objects (see Fig. 2A,B). Object-matching trials were grouped into blocks, each containing either objects that would be (or had been) used for training or objects that would not be (or had not been) used for training. Object-matching blocks alternated with blocks of trials using phase-scrambled versions of the object pictures, during which subjects decided whether the 2 scrambled images were identical (baseline task) (Fig. 2C,D). Each of the 4 imaging runs lasted 4 min and contained 8 blocks of 10 trials each, evenly divided between object and scrambled image matching. Within blocks, half of the trials were matches and half were nonmatches, arranged in pseudorandom order. In each trial, a pair of novel objects or scrambled pictures was presented side by side for 2500 ms, followed by a 500-ms interstimulus interval during which a fixation cross appeared on the screen. At the time of the first scanning session, subjects had been given no information about the objects and had not been told about the subsequent training sessions. An Apple Macintosh G3 computer running Superlab (Cedrus Corporation, San Pedro, CA) presented the stimuli and recorded subjects’ reaction times. Stimuli were rear-projected onto a translucent screen and viewed via a mirror mounted on the head coil.
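The block structure of one imaging run can be sketched as follows. This is a minimal illustration, not the authors' Superlab script: the function name `make_run`, starting each run with an object block, and alternating trained (T) and not-trained (NT) object blocks within a run are assumptions. The source specifies only 8 alternating blocks of 10 trials, half matches per block, and a 3-s trial (2500 ms + 500 ms).

```python
import random

TRIAL_MS = 2500 + 500  # 2500-ms pair presentation + 500-ms fixation ISI
TRIALS_PER_BLOCK = 10
BLOCKS_PER_RUN = 8


def make_run(seed=0, object_first=True):
    """Return a list of (block_type, is_match) tuples for one imaging run.

    Assumptions (not specified in the source): the run starts with an object
    block, and object blocks alternate between the trained ("T") and
    not-trained ("NT") sets.
    """
    rng = random.Random(seed)
    object_sets = ["T", "NT"]
    trials = []
    for b in range(BLOCKS_PER_RUN):
        is_object = (b % 2 == 0) == object_first
        if is_object:
            block_type = "object-" + object_sets[(b // 2) % 2]
        else:
            block_type = "scrambled"
        # Half matches and half nonmatches, shuffled within the block.
        half = TRIALS_PER_BLOCK // 2
        matches = [True] * half + [False] * half
        rng.shuffle(matches)
        trials.extend((block_type, m) for m in matches)
    return trials


run = make_run(seed=1)
run_seconds = len(run) * TRIAL_MS / 1000
print(len(run), run_seconds)  # 80 240.0
```

The printed values confirm that 8 blocks × 10 trials × 3 s give the stated 4-min run.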

After the second scanning session, incidental recognition memory for the trained objects was tested. The 160 object photographs shown during scanning were presented (5 views of each of the 16 trained and 16 not-trained objects), and subjects indicated via a button press whether or not the object had been part of the training set. Stimulus presentation times for this task were identical to those of the matching task used during scanning.

In summary, the experiment consisted of 2 identical MRI scanning sessions with 3 interspersed behavioral training sessions and a recognition memory test immediately following the second scanning session. The first scanning session occurred at least 24 h prior to the first training session, and the second scanning session occurred 24–48 h after the last training session.

Imaging Parameters

Anatomical (spoiled GRASS imaging sequence, 124 slices, 1 × 1 × 1.2 mm voxels) and functional data (gradient-echo echo-planar imaging sequence, repetition time = 3 s, echo time = 40 ms, field of view = 240 mm, flip angle = 90°, 40 contiguous 3.5-mm sagittal slices covering the entire brain, 86 volumes per scan, 3.75 × 3.75 × 3.5 mm voxels) were acquired on a 3-T GE scanner.

Behavioral Data Analysis

Training session data (task completion times for each timed trial) were submitted to a 3 × 2 (training session × trial) repeated measures analysis of variance (ANOVA). For the matching task, individuals’ median reaction times collected during scanning were submitted to a 2 × 2 (scanning session × object set [training, nontraining]) repeated measures ANOVA. Owing to technical difficulties, data for one subject in Session 1 and one subject in Session 2 were not recorded. Planned comparisons were carried out between conditions.
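Because both factors in the reaction time ANOVA have only 2 levels, each effect has 1 numerator degree of freedom, and its repeated measures F equals the square of a one-sample t statistic on per-subject contrast scores. A minimal NumPy sketch based on that identity follows; the function names and array layout are hypothetical, and subjects with a missing session are assumed to have been dropped beforehand (consistent with the F1,9 reported in the Results).

```python
import numpy as np


def contrast_F(scores):
    """F(1, n-1) for a single-df within-subject contrast.

    For a 2-level within-subject effect, the repeated measures ANOVA F equals
    the square of a one-sample t test on the per-subject contrast scores.
    """
    scores = np.asarray(scores, dtype=float)
    n = scores.size
    t = scores.mean() / (scores.std(ddof=1) / np.sqrt(n))
    return t ** 2, (1, n - 1)


def rm_anova_2x2(rt):
    """rt: array (n_subjects, 2 sessions, 2 object sets) of median RTs.

    Axis 1 is [Session 1, Session 2]; axis 2 is [trained set, not-trained set].
    Subjects with missing data must be removed before calling this.
    """
    rt = np.asarray(rt, dtype=float)
    # Per-subject contrast scores for each 1-df effect.
    session = rt[:, 1, :].mean(axis=1) - rt[:, 0, :].mean(axis=1)
    obj_set = rt[:, :, 0].mean(axis=1) - rt[:, :, 1].mean(axis=1)
    interaction = (rt[:, 1, 0] - rt[:, 1, 1]) - (rt[:, 0, 0] - rt[:, 0, 1])
    return {
        "session": contrast_F(session),
        "object set": contrast_F(obj_set),
        "session x object set": contrast_F(interaction),
    }
```

Scaling a contrast (for example, using means rather than sums) leaves t, and therefore F, unchanged.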

Imaging Analysis

For each subject, each fMRI session was first analyzed separately to evaluate within-session effects. Each session’s functional images were motion corrected, and a 4.5-mm 3-dimensional smoothing filter was applied to each scan. Two regressors of interest (training objects and nontraining objects) were convolved with a gamma-variate estimate of the hemodynamic response, and multiple regression was performed on each voxel’s time series using AFNI v.2.40e (Cox 1996). The scrambled image-matching epochs comprised the baseline for all analyses.
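The regression step can be sketched in NumPy as follows. This is not AFNI's implementation: the gamma-variate parameters (b = 8.6, c = 0.547, a widely used default) are an assumption because the study does not report them, the 30-s block timing in the usage lines is hypothetical, and any low-order drift terms in the baseline model are omitted. The constant column absorbs the unmodeled scrambled-image epochs, which therefore serve as the baseline.

```python
import numpy as np


def gamma_variate(t, b=8.6, c=0.547):
    """Gamma-variate HRF h(t) = t**b * exp(-t/c), scaled to a peak of 1.

    b and c are assumed values (peak at t = b*c, about 4.7 s); the study
    does not report the parameters it used.
    """
    t = np.clip(np.asarray(t, dtype=float), 0.0, None)  # h(t) = 0 for t <= 0
    h = t ** b * np.exp(-t / c)
    return h / h.max()


def block_regressor(onsets_s, duration_s, n_vols, tr=3.0, dt=0.1):
    """Boxcar for one condition convolved with the HRF, sampled once per TR."""
    n_fine = int(round(n_vols * tr / dt))
    t = np.arange(n_fine) * dt
    box = np.zeros(n_fine)
    for onset in onsets_s:
        box[(t >= onset) & (t < onset + duration_s)] = 1.0
    hrf = gamma_variate(np.arange(0.0, 20.0, dt))
    fine = np.convolve(box, hrf)[:n_fine] * dt
    sample_idx = np.round(np.arange(n_vols) * tr / dt).astype(int)
    return fine[sample_idx]


def fit_voxels(Y, regressors):
    """Ordinary least squares on every voxel at once.

    Y: (n_vols, n_voxels). Column 0 of the design is a constant, so the
    scrambled-image epochs (not modeled explicitly) act as the baseline.
    Returns betas with shape (1 + n_regressors, n_voxels).
    """
    X = np.column_stack([np.ones(Y.shape[0])] + list(regressors))
    betas, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return betas


# Usage with hypothetical 30-s blocks (10 trials x 3 s): trained-object blocks
# starting at 0 s and 120 s, not-trained blocks at 60 s and 180 s.
r_T = block_regressor([0, 120], 30, n_vols=86)
r_NT = block_regressor([60, 180], 30, n_vols=86)
```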

To directly compare the functional data from the pre- and posttraining scanning sessions, an across-session analysis was performed for each subject. The anatomical data from both scanning sessions were coregistered, and within-session motion correction was performed on the functional data from Session 1. Functional data from Session 2 then underwent intra- and intersession registration simultaneously. Data for one subject were eliminated from these analyses because of difficulty aligning the imaging data across sessions. Four regressors of interest (to-be-trained objects, not-to-be-trained objects, trained objects, and not-trained objects) were convolved as described above prior to performing multiple regression on each voxel’s time series. To examine the time series, the average response to each stimulus type was generated (without assumptions about the shape of the hemodynamic response) and normalized by dividing each voxel’s value by its mean across time points.
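The shape-free averaging and mean normalization can be illustrated as below. The source does not state the order of operations, so dividing the whole time series by its mean before averaging epochs is an assumption, as are the function names and the use of block onsets expressed in volumes.

```python
import numpy as np


def mean_normalize(ts):
    """Divide each voxel's time series by its mean across time points.

    ts: (n_vols, n_voxels). A value of 1.0 equals the voxel's mean signal.
    """
    ts = np.asarray(ts, dtype=float)
    return ts / ts.mean(axis=0, keepdims=True)


def average_epochs(ts, onset_vols, epoch_len):
    """Average the response across all epochs of one stimulus type.

    No hemodynamic response shape is assumed: each time point after onset is
    averaged separately. Epochs running past the end of the run are skipped.
    Returns an array of shape (epoch_len, n_voxels).
    """
    ts = np.asarray(ts, dtype=float)
    epochs = [ts[on:on + epoch_len] for on in onset_vols
              if on + epoch_len <= ts.shape[0]]
    return np.mean(epochs, axis=0)
```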

Individuals’ Z-score maps from the regression analyses were normalized to standardized space (Talairach and Tournoux 1988), and fixed-effects group maps were created. Regions of interest (ROIs) were defined on the across-session group Z-score map as clusters of voxels that exceeded a statistical threshold of P < 10⁻⁶ for the overall experimental effect and P < 0.01 for the main effect of session (i.e., contrast of Session 2 object matching [trained and not-trained objects combined] vs. Session 1 object matching [trained and not-trained objects combined]). A stringent threshold was used at this stage to ensure a conservative criterion for including voxels in each ROI. A mask of these voxels was applied to each individual’s across-session data. Voxels chosen for the random-effects time series analysis comprised those in the mask that also exceeded a threshold of P < 0.001 for the overall experimental effect and P < 0.05 for the main effect of session (i.e., Session 2 object matching vs. Session 1 object matching) in each subject’s cross-session analysis. The more lenient threshold used at this stage served to maximize sensitivity when selecting voxels at the individual subject level and to reduce noise in the data by eliminating nonsignificant voxels. Combined, these thresholding criteria restricted the random-effects analysis to active voxels from each subject that were also within the boundaries of the ROIs defined by the group statistical map. Time series data were extracted from each subject’s single-session functional data, averaged, and submitted to a 2 × 2 (scanning session × object set [training, nontraining]) repeated measures ANOVA, treating subjects as a random factor, with planned comparisons between conditions.
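The two-stage voxel selection reduces to intersecting boolean masks. The sketch below assumes voxelwise P-value maps already in Talairach space and uses hypothetical function names; it also ignores cluster connectivity, so the step that splits the group mask into separate ROIs (e.g., connected-component labeling) is left out.

```python
import numpy as np


def group_roi_mask(p_overall, p_session, p_overall_max=1e-6, p_session_max=0.01):
    """Stage 1: group fixed-effects maps -> conservative ROI mask (boolean)."""
    return (np.asarray(p_overall) < p_overall_max) & (np.asarray(p_session) < p_session_max)


def subject_voxels(group_mask, p_overall, p_session,
                   p_overall_max=1e-3, p_session_max=0.05):
    """Stage 2: keep group-ROI voxels that are also active in this subject."""
    return (group_mask
            & (np.asarray(p_overall) < p_overall_max)
            & (np.asarray(p_session) < p_session_max))


def roi_timeseries(ts, voxel_mask):
    """Average a subject's (n_vols, *volume_shape) data over selected voxels.

    Returns NaN at every time point if no voxel survives (mean of an empty
    selection).
    """
    ts = np.asarray(ts, dtype=float)
    return ts[:, voxel_mask].mean(axis=1)
```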

A separate set of ROIs was identified from the group statistical map of the second scanning session to evaluate training-related differences between object sets. These ROIs included voxels exceeding a statistical threshold of P < 10⁻⁶ for the overall experimental effect and P < 0.01 for the contrast of trained versus not-trained objects during Session 2. A mask of these regions was applied directly to each subject’s single-session data. Time series were extracted for each ROI and submitted to a 2 × 2 (scanning session × object set [training, nontraining]) ANOVA with planned comparisons between conditions. All reported P values were Greenhouse–Geisser corrected (Keppel 1991).
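The Greenhouse–Geisser correction multiplies both degrees of freedom of a within-subject F test by an estimate ε of departure from sphericity. A minimal NumPy sketch of the standard estimate follows; the function names are hypothetical. For any factor with only 2 levels ε = 1 by construction, so the correction matters only for factors with 3 or more levels, such as the 3-level training-session factor.

```python
import numpy as np


def greenhouse_geisser_epsilon(data):
    """Greenhouse-Geisser epsilon for one within-subject factor.

    data: (n_subjects, k_levels), one value per subject and level (e.g., mean
    completion time per training session). Epsilon ranges from 1/(k-1)
    (maximal violation of sphericity) to 1 (sphericity holds).
    """
    data = np.asarray(data, dtype=float)
    k = data.shape[1]
    S = np.cov(data, rowvar=False)       # k x k covariance across levels
    H = np.eye(k) - np.ones((k, k)) / k  # centering matrix
    D = H @ S @ H                        # double-centered covariance
    return np.trace(D) ** 2 / ((k - 1) * np.trace(D @ D))


def corrected_df(k, n, eps):
    """Epsilon-adjusted (numerator, denominator) df for a one-way within-subject effect."""
    return (k - 1) * eps, (k - 1) * (n - 1) * eps
```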

Results

Behavioral Data

Analysis of the training data (trial completion times) revealed a main effect for training session, F2,11 = 68.40, P < 0.0001, as well as a trial effect within each session (P < 0.0001, P < 0.002, and P < 0.02 for Training Sessions 1, 2, and 3, respectively). Thus, subjects showed steady learning both within and across training sessions. In addition, performance was faster on the first trial of Training Session 2 than on the last trial of Training Session 1 (P < 0.0001), a pattern linked to memory consolidation of motor skills (Karni and others 1995) (Fig. 2E).

Analysis of the behavioral data collected during the 2 fMRI scanning sessions indicated that subjects were highly accurate at performing the object-matching task (mean percent correct > 95% for all conditions). Analysis of reaction time data revealed an overall practice effect, with faster visual matching times after than prior to training (main effect of session: F1,9 = 18.28, P < 0.003). In fact, subjects demonstrated faster reaction times for both object sets (trained and not-trained) in Session 2, compared with their counterparts in Session 1 (P < 0.0001 for the trained set in Session 2 vs. Session 1 and P < 0.002 for the not-trained set in Session 2 vs. Session 1). Analysis of the matching data from the first scanning session revealed that prior to training, response times were equally fast for all stimulus types (object sets and their scrambled images) (main effect for stimulus type: F < 1.0) (Fig. 2F). In contrast, after training, subjects performed faster with the trained objects than with the not-trained objects (P < 0.01) (Fig. 2F). Thus, subjects showed increased efficiency matching pictures of objects with which they had functional experience, relative to objects that they had only seen before in picture form.

Analysis of the incidental recognition memory data showed that subjects were highly accurate at identifying pictures of the objects used during training versus those that were not (mean ± standard deviation: 94.75% ± 4.75% correct recognition of trained objects; 97.90% ± 2.47% correct rejection of not-trained objects).
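The hit and correct-rejection rates above can be combined into the signal detection measure d′ = z(hit rate) − z(false-alarm rate), which separates sensitivity from response bias. The sketch below is illustrative only: d′ was not reported in the study, and computing it from group-mean rates only approximates the mean of per-subject values.

```python
from statistics import NormalDist


def d_prime(hit_rate, false_alarm_rate):
    """Signal detection sensitivity: d' = z(hit rate) - z(false-alarm rate)."""
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(false_alarm_rate)


# Group-mean rates from the text: hits = 94.75% for trained objects;
# false alarms = 100% - 97.90% correct rejections = 2.10% for not-trained objects.
group_d = d_prime(0.9475, 1 - 0.9790)
print(f"d' from group means: {group_d:.2f}")
```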

Imaging Data

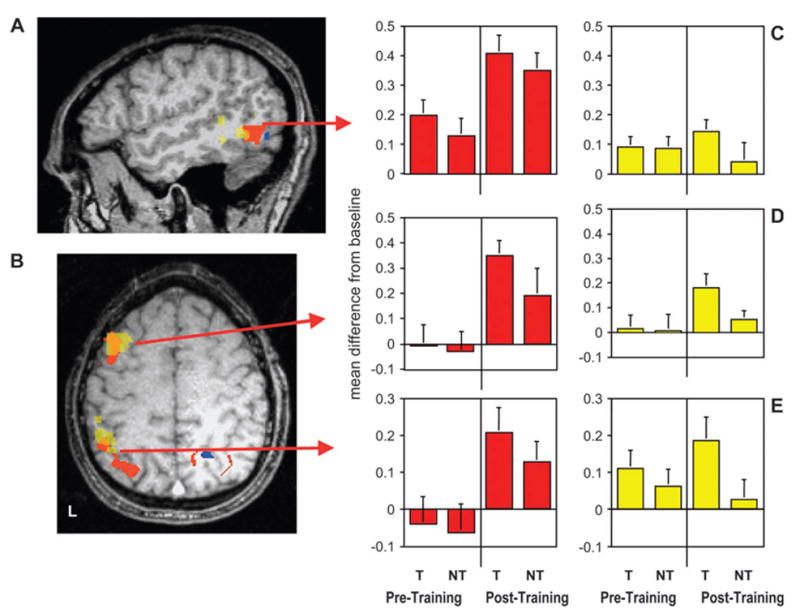

The fixed-effects analysis of the functional imaging data across sessions revealed a significant main effect of session in a number of regions (Session 2 [novel object matching − scrambled image matching] − Session 1 [novel object matching − scrambled image matching]). These changes included increased activation in specific regions of lateral temporal, parietal, and frontal cortices in the left hemisphere, and both increased and decreased responses after training in ventral occipitotemporal cortex. As illustrated in Figure 3, increased activation was observed in the posterior region of the left middle temporal gyrus, left premotor cortex, and left intraparietal sulcus (Fig. 3A,B, red regions). As shown by the accompanying histograms (Fig. 3C,D,E, red bars), the random-effects analysis of the time series data revealed more activity for object matching relative to baseline after training than before training (main effect of session: F1,10 = 18.6, P < 0.002; F1,8 = 17.4, P < 0.003; and F1,10 = 22.3, P < 0.001 for left middle temporal gyrus, left intraparietal sulcus, and left premotor cortex, respectively). These left-lateralized changes were readily observable in individual subjects (Fig. 4). Although some activity was observed in the left middle temporal gyrus before training (P < 0.001 for objects vs. phase-scrambled images during Session 1), neither the left intraparietal nor the premotor region was active during object matching prior to training (F < 1 for objects vs. phase-scrambled images during Session 1) (Fig. 3C,D,E, red bars). In addition, there was no difference in activity between object sets in any region during Session 1 (F < 2 for trained vs. not-trained objects in the middle temporal gyrus, F < 1 in intraparietal and premotor regions). As illustrated, after training the response to the trained objects tended to be numerically larger than that to the not-trained objects in each region. However, neither this difference nor the interaction between scanning session and object set was significant.
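In terms of the 4 regressors of the across-session model, the session effect above and the session × object set interaction tested in this section can be written as follows, where β(s, o) (our notation, not the source's) is the fitted response to object set o ∈ {T, NT} in session s ∈ {1, 2}, each estimated relative to the scrambled image-matching baseline:

```latex
\begin{aligned}
C_{\text{session}} &= \left(\beta_{2,\mathrm{T}} + \beta_{2,\mathrm{NT}}\right) - \left(\beta_{1,\mathrm{T}} + \beta_{1,\mathrm{NT}}\right),\\
C_{\text{session}\times\text{set}} &= \left(\beta_{2,\mathrm{T}} - \beta_{2,\mathrm{NT}}\right) - \left(\beta_{1,\mathrm{T}} - \beta_{1,\mathrm{NT}}\right).
\end{aligned}
```

Because every β is already a difference from the scrambled baseline, the session contrast is the double difference written out in the text.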

Figure 3.

Group results showing learning-related changes in hemodynamic response for novel object matching, relative to scrambled image matching, in lateral cortical regions. Group activation maps are displayed on the structural magnetic resonance image from a single subject. Regions in red were more active, and regions in blue were less active, after training than before training. Regions in yellow, which overlap with regions in red, were more active for trained (T) objects than for not-trained (NT) objects during Session 2 but not Session 1. (A) Sagittal section (Talairach and Tournoux coordinate, x = −50) showing the location of learning-related activity in the left middle temporal gyrus/inferior temporal sulcus (red region, local maximum in Brodmann area [BA] 37, −50, −60, +5; yellow region, BA 37/21, −53, −51, −3). (B) Axial section (z = +47) showing the location of emergent learning-related activity in the left premotor/prefrontal (red region, BA 6, −36, +2, +40; yellow region, BA 6/8, −43, +10, +40) and intraparietal cortices (red region, BA 7/40, −32, −59, +36; yellow region, BA 7/40, −42, −43, +38). (C, D, E) Group-averaged activity expressed as the difference between novel object matching and scrambled image matching for all voxels in the middle temporal gyrus, left premotor, and intraparietal ROIs, respectively. Red bars represent brain regions that showed increased activity for object matching after but not prior to training; yellow bars represent regions that demonstrated greater activity for trained objects than not-trained objects after but not prior to training. Error bars represent standard error of the mean.

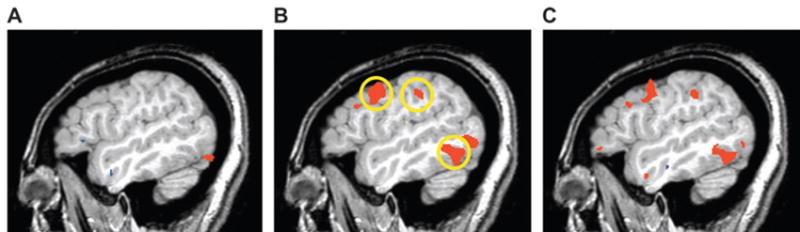

Figure 4.

Hemodynamic response in a single subject prior to and after training. Shown is a single, 1-mm-thick magnetic resonance image of the left hemisphere (x = −47). Regions in red were significantly more active when performing the matching task with the novel objects relative to scrambled object matching. (A) Prior to training, the object-matching task was associated with activity in ventral occipital cortex, which continued anteriorly along the fusiform gyrus (not visible in this lateral slice). (B) After training, activity emerged in the left middle temporal gyrus and left premotor and intraparietal cortices (circled regions). (C) Direct comparison of the pre- and posttraining scans revealed significantly more activity in these regions after training (all P < 0.001).

Although the interaction between session and object set failed to reach significance, we evaluated differences between object sets (trained vs. not-trained) within each scanning session based on our a priori hypothesis of training-specific effects in Session 2. These analyses revealed distinct clusters of voxels showing increased activity specifically for the trained objects during the second scanning session (see Fig. 3A,B, yellow regions). These clusters overlapped with the anterior extent of each of the lateral left-hemisphere regions identified by the main effect of session in the across-session analysis. Time series extracted from these regions for both scanning sessions were submitted to a random-effects repeated measures ANOVA of session × object set. Although the interaction again failed to reach significance, planned comparisons revealed significantly enhanced responses to the trained versus the not-trained objects in the middle temporal gyrus and posterior parietal cortex, with a trend toward significance in premotor/prefrontal cortex, after training (P < 0.05, P < 0.05, and P < 0.08, respectively) but not prior to training (F < 1 in each region) (Fig. 3C,D,E, yellow histograms). Moreover, in each of these regions, the not-trained object set failed to elicit activity above the scrambled image-matching baseline during the second scanning session (F < 1). Thus, after training, these regions showed significantly heightened activity only in response to the trained object set.

As illustrated in Figure 5A, the across-session analysis also revealed a training-related increase in neural activity in the medial aspect of the fusiform gyrus bilaterally, especially in the left hemisphere, where activity was greater after than prior to training (random-effects ANOVA, main effect of session: F1,8 = 9.12, P < 0.02) (Fig. 5B). In contrast, a decreased response was observed in the lateral portion of the fusiform gyrus, indicating that this region was more active prior to than after training (main effect of session: F1,10 = 7.50, P < 0.02) (Fig. 5C). No differential activity was observed for the trained versus the not-trained objects in the fusiform gyrus after training (F < 2 and F < 1 for the interaction of session × object set in the lateral and medial fusiform gyrus, respectively) (Fig. 5B,C). In addition, a separate analysis of Session 2 failed to reveal a differential response to the trained versus not-trained object sets in ventral cortex.

Figure 5.

Group results showing learning-related changes in ventral temporal cortex. As in Figure 3, regions in red were more active, and regions in blue were less active, after training than before training. (A) Coronal section (y = −62) showing changes in the fusiform gyrus. The medial portion of the left fusiform gyrus showed increased activity (red) (local maximum, Brodmann area [BA] 37, −27, −60, −9), whereas the more lateral region showed decreased activity (blue) (2 local maxima, BA 37, extending anteriorly from −38, −63, −11 to −38, −44, −21) after training. Increased activity in the left inferior temporal sulcus that extended into the middle temporal gyrus (see Fig. 3) can also be seen. (B, C) Group-averaged activity expressed as the difference between novel object matching and scrambled image matching for all voxels in the medial (red bars) and lateral (blue bars) fusiform ROIs. Error bars represent standard error of the mean.

Discussion

To investigate the effects of functional experience on the neural circuitry for object recognition, we created novel objects and scanned subjects before and after they were trained to perform functional tasks with a subset of the objects. We found that training induced highly specific changes in the neural circuitry recruited during visual object perception.

Behaviorally, training increased the efficiency with which subjects performed a simple visual matching task and produced a strong memory representation of the trained objects. Notably, although subjects could easily differentiate between the trained and not-trained objects after the second scanning session, debriefing revealed that they were unaware that matching trials had been grouped according to whether or not an object was part of the training set.

During scanning, subjects were significantly faster at matching both novel object sets in the posttraining session (the objects on which they were trained and those on which they were not), indicating a general practice effect. Moreover, after training, subjects were faster at matching the trained objects than the not-trained objects, indicating object-set–specific learning.

These behavioral enhancements were reflected by changes in the cortical regions recruited during visual object matching. Specifically, after training, activity increased in discrete regions on the lateral surface of the left hemisphere, including the left middle temporal gyrus, intraparietal sulcus, and premotor cortex. Moreover, in the anterior aspect of each of these regions, these increases were specific to the trained objects. In ventral regions, we observed a more complex pattern of change: learning-related decreases were found in the lateral portion of the fusiform gyrus, whereas activity increased in the more medial portion. Finally, in contrast to the more anterior aspects of the lateral cortical regions, neural changes were equivalent for the trained and not-trained object sets in ventral temporal cortex (fusiform gyrus).

Each of the lateral regions demonstrating learning-related increases in activity has been implicated in perceiving and knowing about tools and their actions. Activation of the posterior region of the left middle temporal gyrus is one of the most consistent neuroimaging findings for semantic tasks involving tools and manipulable objects (Devlin and others 2002). This region shows an increase in response to pictures of tools or their written names during naming (Martin and others 1996; Chao and others 1999, 2002; Moore and Price 1999), property generation (Martin and others 1995; Fiez and others 1996), and property verification tasks (Chao and others 1999). Damage to this region can result in impaired naming of tools and action knowledge deficits (Tranel and others 1997, 2003; Damasio and others 2004). In addition, functional imaging studies have demonstrated that the posterior region of the left middle temporal gyrus is strongly and selectively responsive to the rigid, unarticulated motion patterns associated with tools and other non-biological objects (Beauchamp and others 2002, 2003). Within this context, the robust response observed in the left middle temporal gyrus after training in the current study may reflect the automatic retrieval of experientially acquired information about the tool-like motion of the objects to aid recognition.

Activity emerged in 2 additional lateral regions after training: the left intraparietal sulcus and left premotor cortex, extending into prefrontal cortex. Studies in monkeys have shown that neurons in ventral premotor cortex (area F5) and the anterior region of the intraparietal sulcus respond when monkeys grasp or view objects that they have had experience manipulating (Jeannerod and others 1995; Sakata and others 1999). In addition to “mirror neurons,” which fire when the animal performs or observes another performing an action, F5 contains “canonical neurons,” which respond when the monkey simply views graspable objects. Canonical neurons are thought to represent specific object–hand transformations necessary for grasping objects (for review, see Rizzolatti and others 2002), such as those learned by our subjects during training. Similarly, neuroimaging studies in humans report activity in premotor, prefrontal, and posterior parietal cortices when subjects view, name, and answer questions about tools and utensils associated with specific grasp-related object–transformations (Grafton and others 1997; Grabowski and others 1998; Chao and Martin 2000; Handy and others 2003; Kellenbach and others 2003; Creem-Regehr and Lee 2005) and during mental imagery of such actions (Decety 1996). Whereas many of these studies have reported enhanced ventral premotor activity for tasks involving manipulable tools compared with other objects, we found a more dorsal premotor region selectively active for viewing the trained objects. This is consistent with neurophysiological studies in monkeys reporting that neurons in dorsal premotor cortex respond when arbitrary links are formed between a movement and a stimulus (Mitz and others 1991). The same region in humans may be involved in forming similar associations needed to use graspable objects such as tools (Grafton and others 1997). 
In fact, a number of human functional neuroimaging studies have implicated dorsal premotor cortex in visuomotor associations (Grafton and others 1997; Binkofski and others 1998; Picard and Strick 2001). Finally, an extensive neuropsychological literature implicates left posterior parietal and left premotor/prefrontal cortices in the representation of skilled movements and knowledge about tools and their related actions (Buxbaum and others 2000; Haaland and others 2000; Buxbaum and Saffran 2002; Tranel and others 2003; Damasio and others 2004). Taken together, these findings converge to support the idea that the left middle temporal gyrus, intraparietal sulcus, and premotor and adjacent prefrontal cortices store information about the perceptual and motor properties underlying our conceptual knowledge of tools and their use. Our findings extend these reports by demonstrating that activity can be elicited in these regions de novo when functional experience with novel objects alters their representations.

Importantly, in the anterior portion of each of these activated regions, the response to the trained objects was markedly stronger than the response to the not-trained objects (consistent with faster reaction times for trained than for not-trained objects after training). In fact, the not-trained objects failed to elicit activity above the scrambled image-matching baseline task in these sites. This finding of heightened activity specific to the trained objects in the anterior aspect of lateral cortical regions is compatible with the idea that more anterior regions of perceptual processing (Grill-Spector and Malach 2004) and motor systems (Sakata and others 1997; Picard and Strick 2001) house more complex representations than posterior regions. Moreover, it implies that training induced object-specific memory representations in regions that store information tightly coupled to functional properties (i.e., properties of motion and manipulation directly associated with an object’s use).

However, in the posterior aspect of each region, the increased activity was not limited to the objects on which subjects were trained. Rather, as shown in Figure 3 (red regions and histograms), enhanced neural responses were observed for both object sets. This finding was unexpected. One possibility is that the information acquired about the trained objects generalized to the not-trained objects, which were highly similar in visual appearance.

In line with this possibility, the same pattern of results—increased activity after training, coupled with a lack of a difference between the trained and not-trained object sets—was also found in ventral temporal cortex. There is considerable evidence that information about visual attributes needed for object identification, such as form and color, is stored in ventral temporal cortex (for recent review, see Grill-Spector and Malach 2004). Our finding of similar changes to the trained and not-trained objects in this region suggests that the neural response associated with a specific set of objects shows strong generalization to objects of similar appearance. In the current study, for example, all the objects were created from the same materials and thus had comparable component parts, textures, and colors. In contrast, information about motion and manipulation was unique for each trained object, perhaps leading to the creation of more object-specific representations (and thus, object-training-set–specific activation) in dorsal than in ventral regions. Although our data do not address the reason for generalized learning in ventral regions, one possibility is that the visual similarity between the not-trained and trained objects may have led subjects to automatically infer that they were members of the same category and thus had similar functional properties. Such inferences may have resulted in comparable changes in activity coupled with increased selectivity in the fusiform gyrus for both object sets.

It is noteworthy that neurophysiological studies recording from inferior temporal neurons in nonhuman primates have sometimes failed to observe differences between trained and not-trained objects after extensive training when learning is measured by changes in firing rates. That is, despite increased neuronal specificity and sharper tuning with learning, no differences in the overall magnitude of response (i.e., firing rates) have been observed (Erickson and others 2000; Baker and others 2002). Because the blood oxygenation level–dependent (BOLD) response measured with fMRI reflects aggregate local neuronal processing (Logothetis and others 2001; for review, see Logothetis and Wandell 2004), sharper tuning without a change in overall response magnitude would not necessarily be expected to alter it. From this perspective, it is perhaps not surprising that we observed no differences between trained and not-trained object sets in ventral temporal cortex.

In contrast to enhanced activity within the dorsal stream, activity in ventral temporal cortex was reduced in some regions but enhanced in others following training. Specifically, activity was reduced in the lateral region of the fusiform gyrus known to prefer faces and animate objects (Martin and others 1996; Kanwisher and others 1997; McCarthy and others 1997; Chao and others 1999; Beauchamp and others 2002; Yovel and Kanwisher 2004) and increased in the more medial part known to prefer manipulable objects such as tools (Chao and others 1999, 2002; Beauchamp and others 2002, 2003). This pattern of change can be viewed within the context of a large number of functional brain imaging studies of object repetition. These studies have shown that repeating the same task with the same visual stimuli results in a reduced response, most commonly referred to as repetition suppression (for recent reviews, see Henson 2003; Grill-Spector and others 2006). It has also been shown that this repetition suppression effect endures over long repetition lags spanning a day or more (Wagner and others 2000; Chao and others 2002; van Turennout and others 2003), even for meaningless objects (van Turennout and others 2000). Thus, the reduced neural response to the objects in the lateral fusiform gyrus during the second scanning session is consistent with this previous work and with the behavioral data showing faster posttraining reaction times for matching the novel objects. However, under certain circumstances, repeated presentation may lead to increased rather than decreased activity, commonly referred to as repetition enhancement (Dolan and others 1997; Henson and others 2000). This repetition enhancement seems to occur when there is a qualitative change in the way an object is processed from one occurrence to the next (Henson and others 2000), especially when the experimental conditions encourage formation of a new object representation (Henson 2003). 
For example, repetition of ambiguous degraded objects led to increased ventral temporal activity when subjects were exposed to intact, unambiguous versions of the objects interspersed between repetitions (Dolan and others 1997). In a similar fashion, hands-on experience with the objects in our study may have augmented their representations with detailed information about their appearance (medial portion of the fusiform gyrus) and, as discussed above, with information about the motion (middle temporal gyrus) and motor-related properties (parietal and premotor cortices) associated with their use. As a result, objects perceived as meaningless during the first scanning session were now perceived as objects with distinct functional properties. Thus, the training interspersed between scanning sessions transformed the representation of these objects, leading to heightened activity from one scanning session to the next. Moreover, this heightened activity occurred in circumscribed regions associated with tools and their use, each of which is presumed to store information about perceptual or functional object properties. In ventral cortex, this increased activity was confined to the medial fusiform gyrus, a region associated with the identification of manipulable objects, such as tools.

It remains possible that factors other than functional experience contributed to our findings, and not all alternative explanations can be ruled out. Additional experiments examining the effects of different learning paradigms are warranted. For example, we cannot rule out the possibility that subjects implicitly associated names with the trained objects. A control condition in which subjects are taught a name for each object would reveal what role naming might play (e.g., James and Gauthier 2004). Likewise, one might ask whether increased familiarity with the objects handled during training contributed to the effects we observed. Equating subjects’ experience with trained and not-trained stimuli would mitigate familiarity as a possible confound. However, we think familiarity is an unlikely explanation for our results, for 3 reasons. First, the same photographs were shown in the pre- and posttraining scanning sessions, and this was the only exposure subjects received to those stimuli. That is, for the stimuli used during scanning, familiarity was equated between object sets. Second, despite additional exposure to the actual objects used during training, no repetition-related decreases in neural activity were observed for the trained compared with the not-trained objects. In fact, in all but one region, we found increased activity for the trained objects after training. The one exception was the lateral fusiform gyrus, where equivalent reductions were found for both object sets. If familiarity were playing a significant role, item-specific effects should have been especially evident in ventral temporal cortex, based on typical findings in imaging studies of object repetition (e.g., van Turennout and others 2000).
Furthermore, because there is no reason to expect familiarity to produce opposite effects in neighboring regions of the fusiform gyrus (decreases in the lateral aspect associated with biological objects and increases medially in the region associated with tools), our findings are not consistent with typical familiarity effects. Third, the experience subjects had with the actual objects during training was qualitatively different from that which occurred during scanning. It is precisely under such circumstances (i.e., when there is a qualitative change in the representation of objects) that one finds enhanced activity for subsequent object repetitions, as we observed in lateral cortical regions and in the medial fusiform gyrus. Thus, we believe that learning about object function produced a change in the representation of the trained objects, leading to enhanced activity in specific regions where information about manipulable objects, such as tools, is stored.

In summary, functional experience with novel objects changed how these objects were represented in the brain. The cortical loci of these changes could be predicted from studies of perceiving and retrieving information about the properties of familiar tools. Regions identified by these studies have commonly included the medial portion of the fusiform gyrus, the posterior region of the left middle temporal gyrus, left intraparietal sulcus, and left premotor cortex. These regions have in turn been linked to the representation of the properties of form, motion, and manipulation associated with tools (for review, see Martin and Chao 2001; Thompson-Schill 2003). All these regions showed enhanced responses following training, whereas activity was reduced in a region linked to the representation of faces and other animate objects (lateral fusiform gyrus). Our findings are consistent with the idea that we possess specialized neural circuitry for learning about specific sensory- and motor-related properties associated with an object’s appearance and use (Santos and others 2001, 2003). Furthermore, the fact that this network was automatically engaged when perceiving the objects after training suggests that one role of these specialized systems may be to allow the organism to acquire information about the properties critical for identifying a category of objects, and to use this information to discriminate among them quickly and efficiently (Mahon and Caramazza 2003). Whether similar learning-dependent changes can be elicited by observational or detailed verbal training regimens remains to be determined, although there is some evidence that associating verbal biographical information with novel objects can affect their neural representations (James and Gauthier 2003, 2004). Nevertheless, our findings suggest that the locus of learning-related cortical plasticity is highly constrained by both the nature of the information to be learned and how it is acquired.

Footnotes

We thank Gretchen Fry for helping with task design and constructing the novel objects, Greg Moor for behavioral data collection, and Robert Cox and Ziad Saad for developing the across-session fMRI data analysis method. This work was supported by the National Institute of Mental Health Intramural Research Program. Conflict of Interest: None declared.

References

- Baker CI, Behrmann M, Olson CR. Impact of learning on representation of parts and wholes in monkey inferotemporal cortex. Nat Neurosci. 2002;5:1210–1216. doi: 10.1038/nn960.

- Barsalou LW. Perceptual symbol systems. Behav Brain Sci. 1999;22:577–660. doi: 10.1017/s0140525x99002149.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. Parallel visual motion processing streams for manipulable objects and human movements. Neuron. 2002;34:149–159. doi: 10.1016/s0896-6273(02)00642-6.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. fMRI responses to video and point-light displays of moving humans and manipulable objects. J Cogn Neurosci. 2003;15:991–1001. doi: 10.1162/089892903770007380.

- Binkofski F, Dohle C, Posse S, Stephan KM, Hefter H, Seitz RJ, Freund HJ. Human anterior intraparietal area subserves prehension—a combined lesion and functional MRI activation study. Neurology. 1998;50:1253–1259. doi: 10.1212/wnl.50.5.1253.

- Buxbaum LJ, Saffran EM. Knowledge of object manipulation and object function: dissociations in apraxic and nonapraxic subjects. Brain Lang. 2002;82:179–199. doi: 10.1016/s0093-934x(02)00014-7.

- Buxbaum LJ, Veramonti T, Schwartz MF. Function and manipulation tool knowledge in apraxia: knowing ‘what for’ but not ‘how’. Neurocase. 2000;6:83–97.

- Capitani E, Laiacona M, Mahon B, Caramazza A. What are the facts of semantic category-specific deficits? A critical review of the clinical evidence. Cogn Neuropsychol. 2003;20:213–261. doi: 10.1080/02643290244000266.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 1999;2:913–919. doi: 10.1038/13217.

- Chao LL, Martin A. Representation of manipulable man-made objects in the dorsal stream. Neuroimage. 2000;12:478–484. doi: 10.1006/nimg.2000.0635.

- Chao LL, Weisberg J, Martin A. Experience-dependent modulation of category-related cortical activity. Cereb Cortex. 2002;12:545–551. doi: 10.1093/cercor/12.5.545.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Creem-Regehr SH, Lee JN. Neural representations of graspable objects: are tools special? Cogn Brain Res. 2005;22:457–469. doi: 10.1016/j.cogbrainres.2004.10.006.

- Damasio AR. Category-related recognition defects as a clue to the neural substrates of knowledge. Trends Neurosci. 1990;13:95–98. doi: 10.1016/0166-2236(90)90184-c.

- Damasio H, Tranel D, Grabowski T, Adolphs R, Damasio A. Neural systems behind word and concept retrieval. Cognition. 2004;92:179–229. doi: 10.1016/j.cognition.2002.07.001.

- Decety J. The neurophysiological basis of motor imagery. Behav Brain Res. 1996;77:45–52. doi: 10.1016/0166-4328(95)00225-1.

- Devlin JT, Moore CJ, Mummery CJ, Gorno-Tempini ML, Phillips JA, Noppeney U, Frackowiak RSJ, Friston KJ, Price CJ. Anatomic constraints on cognitive theories of category specificity. Neuroimage. 2002;15:675–685. doi: 10.1006/nimg.2001.1002.

- Dolan RJ, Fink GR, Rolls E, Booth M, Holmes A, Frackowiak RSJ, Friston KJ. How the brain learns to see objects and faces in an impoverished context. Nature. 1997;389:596–599. doi: 10.1038/39309.

- Emmorey K, Grabowski T, McCullough S, Damasio H, Ponto L, Hichwa R, Bellugi U. Motor-iconicity of sign language does not alter the neural systems underlying tool and action naming. Brain Lang. 2004;89:27–37. doi: 10.1016/S0093-934X(03)00309-2.

- Erickson CA, Jagadeesh B, Desimone R. Clustering of perirhinal neurons with similar properties following visual experience in adult monkeys. Nat Neurosci. 2000;3:1143–1148. doi: 10.1038/80664.

- Fiez JA, Raichle ME, Balota DA, Tallal P, Petersen SE. PET activation of posterior temporal regions during auditory word presentation and verb generation. Cereb Cortex. 1996;6:1–10. doi: 10.1093/cercor/6.1.1.

- Grabowski TJ, Damasio H, Damasio AR. Premotor and prefrontal correlates of category-related lexical retrieval. Neuroimage. 1998;7:232–243. doi: 10.1006/nimg.1998.0324.

- Grafton ST, Fadiga L, Arbib MA, Rizzolatti G. Premotor cortex activation during observation and naming of familiar tools. Neuroimage. 1997;6:231–236. doi: 10.1006/nimg.1997.0293.

- Grill-Spector K, Henson R, Martin A. Repetition and the brain: neural models of stimulus-specific effects. Trends Cogn Sci. 2006;10:14–23. doi: 10.1016/j.tics.2005.11.006.

- Grill-Spector K, Malach R. The human visual cortex. Annu Rev Neurosci. 2004;27:649–677. doi: 10.1146/annurev.neuro.27.070203.144220.

- Haaland KY, Harrington DL, Knight RT. Neural representations of skilled movement. Brain. 2000;123:2306–2313. doi: 10.1093/brain/123.11.2306.

- Handy TC, Grafton ST, Shroff NM, Ketay S, Gazzaniga MS. Graspable objects grab attention when the potential for action is recognized. Nat Neurosci. 2003;6:421–427. doi: 10.1038/nn1031.

- Henson R, Shallice T, Dolan R. Neuroimaging evidence for dissociable forms of repetition priming. Science. 2000;287:1269–1272. doi: 10.1126/science.287.5456.1269.

- Henson RNA. Neuroimaging studies of priming. Prog Neurobiol. 2003;70:53–81. doi: 10.1016/s0301-0082(03)00086-8.

- James TW, Gauthier I. Auditory and action semantic features activate sensory-specific perceptual brain regions. Curr Biol. 2003;13:1792–1796. doi: 10.1016/j.cub.2003.09.039.

- James TW, Gauthier I. Brain areas engaged during visual judgments by involuntary access to novel semantic information. Vision Res. 2004;44:429–439. doi: 10.1016/j.visres.2003.10.004.

- Jeannerod M. Neural simulation of action: a unifying mechanism for motor cognition. Neuroimage. 2001;14:S103–S109. doi: 10.1006/nimg.2001.0832.

- Jeannerod M, Arbib MA, Rizzolatti G, Sakata H. Grasping objects—the cortical mechanisms of visuomotor transformation. Trends Neurosci. 1995;18:314–320.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Karni A, Meyer G, Jezzard P, Adams MM, Turner R, Ungerleider LG. Functional MRI evidence for adult motor cortex plasticity during motor skill learning. Nature. 1995;377:155–158. doi: 10.1038/377155a0.

- Kellenbach ML, Brett M, Patterson K. Actions speak louder than functions: the importance of manipulability and action in tool representation. J Cogn Neurosci. 2003;15:30–46. doi: 10.1162/089892903321107800.

- Keppel G. Design and analysis: a researcher’s handbook. Upper Saddle River, NJ: Prentice Hall; 1991.

- Logothetis NK, Pauls J, Augath M, Trinath T, Oeltermann A. Neurophysiological investigation of the basis of the fMRI signal. Nature. 2001;412:150–157. doi: 10.1038/35084005.

- Logothetis NK, Wandell BA. Interpreting the BOLD signal. Annu Rev Physiol. 2004;66:735–769. doi: 10.1146/annurev.physiol.66.082602.092845.

- Mahon BZ, Caramazza A. Constraining questions about the organization and representation of conceptual knowledge. Cogn Neuropsychol. 2003;20:433–450. doi: 10.1080/02643290342000014.

- Martin A. The organization of semantic knowledge and the origin of words in the brain. In: Jablonski N, Aiello L, editors. The origins and diversification of language. San Francisco, CA: California Academy of Sciences; 1998. pp. 69–98.

- Martin A, Chao LL. Semantic memory and the brain: structure and processes. Curr Opin Neurobiol. 2001;11:194–201. doi: 10.1016/s0959-4388(00)00196-3.

- Martin A, Haxby JV, Lalonde FM, Wiggs CL, Ungerleider LG. Discrete cortical regions associated with knowledge of color and knowledge of action. Science. 1995;270:102–105. doi: 10.1126/science.270.5233.102.

- Martin A, Wiggs CL, Ungerleider LG, Haxby JV. Neural correlates of category-specific knowledge. Nature. 1996;379:649–652. doi: 10.1038/379649a0.

- McCarthy G, Puce A, Gore JC, Allison T. Face-specific processing in the human fusiform gyrus. J Cogn Neurosci. 1997;9:605–610. doi: 10.1162/jocn.1997.9.5.605.

- Mitz AR, Godschalk M, Wise SP. Learning-dependent neuronal activity in the premotor cortex—activity during the acquisition of conditional motor associations. J Neurosci. 1991;11:1855–1872. doi: 10.1523/JNEUROSCI.11-06-01855.1991.

- Moore CJ, Price CJ. A functional neuroimaging study of the variables that generate category-specific object processing differences. Brain. 1999;122:943–962. doi: 10.1093/brain/122.5.943.

- Picard N, Strick PL. Imaging the premotor areas. Curr Opin Neurobiol. 2001;11:663–672. doi: 10.1016/s0959-4388(01)00266-5.

- Rizzolatti G, Fogassi L, Gallese V. Motor and cognitive functions of the ventral premotor cortex. Curr Opin Neurobiol. 2002;12:149–154. doi: 10.1016/s0959-4388(02)00308-2.

- Sakata H, Taira M, Kusunoki M, Murata A, Tanaka Y. The TINS lecture—the parietal association cortex in depth perception and visual control of hand action. Trends Neurosci. 1997;20:350–357. doi: 10.1016/s0166-2236(97)01067-9.

- Sakata H, Taira M, Kusunoki M, Murata A, Tsutsui K, Tanaka Y, Shein WN, Miyashita Y. Neural representation of three-dimensional features of manipulation objects with stereopsis. Exp Brain Res. 1999;128:160–169. doi: 10.1007/s002210050831.

- Santos LR, Hauser MD, Spelke ES. Recognition and categorization of biologically significant objects by rhesus monkeys (Macaca mulatta): the domain of food. Cognition. 2001;82:127–155. doi: 10.1016/s0010-0277(01)00149-4.

- Santos LR, Miller CT, Hauser MD. Representing tools: how two non-human primate species distinguish between the functionally relevant and irrelevant features of a tool. Anim Cogn. 2003;6:269–281. doi: 10.1007/s10071-003-0171-1.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme; 1988.

- Thompson-Schill SL. Neuroimaging studies of semantic memory: inferring “how” from “where”. Neuropsychologia. 2003;41:280–292. doi: 10.1016/s0028-3932(02)00161-6.

- Tranel D, Damasio H, Damasio AR. A neural basis for the retrieval of conceptual knowledge. Neuropsychologia. 1997;35:1319–1327. doi: 10.1016/s0028-3932(97)00085-7.

- Tranel D, Kemmerer D, Adolphs R, Damasio H, Damasio AR. Neural correlates of conceptual knowledge for actions. Cogn Neuropsychol. 2003;20:409–432. doi: 10.1080/02643290244000248.

- van Turennout M, Bielamowicz L, Martin A. Modulation of neural activity during object naming: effects of time and practice. Cereb Cortex. 2003;13:381–391. doi: 10.1093/cercor/13.4.381.

- van Turennout M, Ellmore T, Martin A. Long-lasting cortical plasticity in the object naming system. Nat Neurosci. 2000;3:1329–1334. doi: 10.1038/81873.

- Wagner AD, Maril A, Schacter DL. Interactions between forms of memory: when priming hinders new episodic learning. J Cogn Neurosci. 2000;12:52–60. doi: 10.1162/089892900564064.

- Warrington EK, McCarthy RA. Categories of knowledge—further fractionations and an attempted integration. Brain. 1987;110:1273–1296. doi: 10.1093/brain/110.5.1273.

- Yovel G, Kanwisher N. Face perception: domain specific, not process specific. Neuron. 2004;44:889–898. doi: 10.1016/j.neuron.2004.11.018.